Viewing Execution Plans in Hive

1. Basic syntax

EXPLAIN [EXTENDED | DEPENDENCY | AUTHORIZATION] query-sql
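As a minimal sketch of the optional keywords, the statements below apply each one to the temp_shop_info table used later in this post. The comments describe the general purpose of each keyword; the exact output depends on the Hive version and the execution engine, so none is shown here.

```sql
-- Plain EXPLAIN: stage dependencies plus the operator tree of each stage.
EXPLAIN
SELECT shop_id, sum(sale) AS sale_sum FROM temp_shop_info GROUP BY shop_id;

-- EXTENDED: the same plan with additional detail about the operators,
-- typically including physical information such as file names.
EXPLAIN EXTENDED
SELECT shop_id, sum(sale) AS sale_sum FROM temp_shop_info GROUP BY shop_id;

-- DEPENDENCY: the tables and partitions the query reads from.
EXPLAIN DEPENDENCY
SELECT shop_id, sum(sale) AS sale_sum FROM temp_shop_info GROUP BY shop_id;

-- AUTHORIZATION: the input and output entities that must be authorized
-- to run the query, plus any authorization failures.
EXPLAIN AUTHORIZATION
SELECT shop_id, sum(sale) AS sale_sum FROM temp_shop_info GROUP BY shop_id;
```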

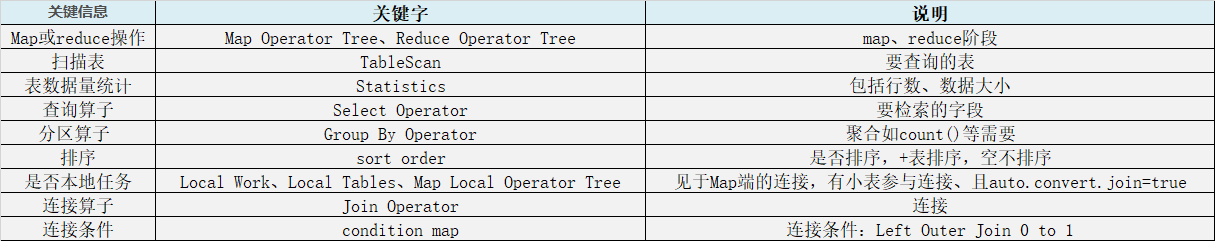

Key information in an execution plan

2. Simple examples

2.1. Let's start with a simple example

explain select * from temp_shop_info;

Execution plan

STAGE DEPENDENCIES:
  Stage-0 is a root stage    // there is only one stage here

STAGE PLANS:    // the execution plan
  Stage: Stage-0    // Stage-0 is the stage ID
    Fetch Operator
      limit: -1
      Processor Tree:
        TableScan    // scan the table
          alias: temp_shop_info    // alias; the table was given no alias, so it defaults to the table name
          Select Operator    // the selected columns and their types
            expressions: shop_id (type: string), commodity_id (type: string), sale (type: int)
            outputColumnNames: _col0, _col1, _col2    // output columns
            ListSink
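The plan above contains only a Fetch Operator, so no separate compute job is launched for this query. This is usually the effect of Hive's fetch task conversion, which is controlled by the `hive.fetch.task.conversion` property. The sketch below assumes that your session is allowed to change this property; it turns the conversion off so that the two plans can be compared. The resulting plan is not reproduced here because it depends on the engine and Hive version.

```sql
-- Assumption: the session is permitted to change this property.
-- With conversion disabled, even a simple SELECT is planned as a regular job.
SET hive.fetch.task.conversion=none;

EXPLAIN SELECT * FROM temp_shop_info;

-- Restore the usual default afterwards (check your cluster's own setting).
SET hive.fetch.task.conversion=more;
```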

2.2. An execution plan for a query with an aggregate function

explain select shop_id, sum(sale) sale_sum from temp_shop_info group by shop_id;

Execution plan

// There are two stages: Stage-1 is the root stage, and Stage-0 depends on Stage-1.
STAGE DEPENDENCIES:
  Stage-1 is a root stage
  Stage-0 depends on stages: Stage-1

STAGE PLANS:
  Stage: Stage-1
    Spark
      Edges:
        Reducer 2 <- Map 1 (GROUP, 2)
      DagName: hui_20221020203721_fefd07c4-9120-4d3f-ad13-abee4b852834:2
      Vertices:
        // map side
        Map 1
            Map Operator Tree:
                // scan the table and read the data
                TableScan
                  alias: temp_shop_info
                  // row count and size of the table data: 13 rows and 104 bytes
                  Statistics: Num rows: 13 Data size: 104 Basic stats: COMPLETE Column stats: NONE
                  Select Operator    // column selection
                    expressions: shop_id (type: string), sale (type: int)
                    outputColumnNames: shop_id, sale
                    Statistics: Num rows: 13 Data size: 104 Basic stats: COMPLETE Column stats: NONE
                    Group By Operator    // grouping
                      aggregations: sum(sale)
                      keys: shop_id (type: string)
                      mode: hash
                      outputColumnNames: _col0, _col1
                      Statistics: Num rows: 13 Data size: 104 Basic stats: COMPLETE Column stats: NONE
                      Reduce Output Operator    // output sent to the reduce side
                        key expressions: _col0 (type: string)
                        sort order: +
                        Map-reduce partition columns: _col0 (type: string)
                        Statistics: Num rows: 13 Data size: 104 Basic stats: COMPLETE Column stats: NONE
                        value expressions: _col1 (type: bigint)
            Execution mode: vectorized
        Reducer 2    // reduce side
            Execution mode: vectorized
            Reduce Operator Tree:
              Group By Operator
                aggregations: sum(VALUE._col0)
                keys: KEY._col0 (type: string)
                mode: mergepartial
                outputColumnNames: _col0, _col1
                Statistics: Num rows: 6 Data size: 48 Basic stats: COMPLETE Column stats: NONE
                File Output Operator
                  compressed: false
                  Statistics: Num rows: 6 Data size: 48 Basic stats: COMPLETE Column stats: NONE
                  table:
                      input format: org.apache.hadoop.mapred.SequenceFileInputFormat
                      output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat
                      serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

  Stage: Stage-0
    Fetch Operator
      limit: -1
      Processor Tree:
        ListSink
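In the plan above, the map-side Group By Operator runs with `mode: hash` (a partial aggregation before the shuffle), and the reducer combines the partial sums with `mode: mergepartial`. Map-side aggregation is governed by the `hive.map.aggr` property. The sketch below, assuming the session may change that property, disables it so that the plan can be compared with the one above. The resulting plan is deliberately not shown, since it varies by engine and Hive version.

```sql
-- Assumption: the session is permitted to change this property.
-- With map-side aggregation off, all aggregation work moves to the reduce side.
SET hive.map.aggr=false;

EXPLAIN
SELECT shop_id, sum(sale) AS sale_sum
FROM temp_shop_info
GROUP BY shop_id;

-- Turn map-side aggregation back on afterwards.
SET hive.map.aggr=true;
```

When comparing the two plans, the lines worth checking are the `mode:` value of each Group By Operator and whether a Group By Operator still appears under the Map Operator Tree.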
