Flink Sink

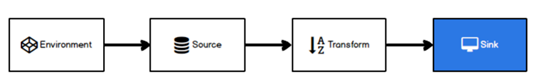

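"Sink" literally means to sink down. In Flink, a sink is where data ends up being stored; more broadly, it is the output operation that sends processed data to a designated storage system. The print method we have been using all along is itself a sink.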

KafkaSink

Add the dependencies

    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-kafka_2.11</artifactId>
        <version>1.12.0</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.75</version>
    </dependency>

Start Kafka

    kk.sh start

Sample code

    import com.alibaba.fastjson.JSON;
    import org.apache.flink.api.common.functions.MapFunction;
    import org.apache.flink.api.common.serialization.SimpleStringSchema;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer;
    import org.apache.kafka.clients.producer.ProducerConfig;
    import org.wdh01.bean.WaterSensor;

    import java.util.Properties;

    /**
     * Kafka sink
     */
    public class Flink07_Sink_Kafka {
        public static void main(String[] args) throws Exception {
            // 1. Get the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);

            // 2. Read lines from the socket and convert them to a JavaBean
            SingleOutputStreamOperator<WaterSensor> waterSensorDS = env.socketTextStream("hadoop201", 9998)
                    .map(new MapFunction<String, WaterSensor>() {
                        @Override
                        public WaterSensor map(String value) throws Exception {
                            // Input format: id,ts,vc
                            String[] split = value.split(",");
                            return new WaterSensor(split[0],
                                    Long.parseLong(split[1]),
                                    Integer.parseInt(split[2]));
                        }
                    });

            // 3. Serialize each record to JSON and write it to Kafka
            Properties properties = new Properties();
            properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "hadoop201:9092");
            waterSensorDS.map(new MapFunction<WaterSensor, String>() {
                @Override
                public String map(WaterSensor value) throws Exception {
                    String json = JSON.toJSONString(value);
                    System.out.println("JSON " + json);
                    return json;
                }
                // The topic must match the one the console consumer subscribes to
            }).addSink(new FlinkKafkaProducer<String>("topic_sensor", new SimpleStringSchema(), properties));

            // 4. Execute the job
            env.execute();
        }
    }
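The jobs in this post all import org.wdh01.bean.WaterSensor, but the bean itself is never shown. Below is a minimal sketch of what it might look like, together with the "id,ts,vc" parsing step pulled out into a helper. The field types (String, Long, Integer) are an assumption inferred from how the jobs call the constructor and getters; WaterSensorParser and its field-count check are hypothetical additions for illustration and are not part of the original project. A bean used with fastjson and Flink would normally also need public getters and a no-argument constructor, which this sketch leaves out.

```java
// Hypothetical helper: the original jobs do this parsing inline inside a MapFunction.
class WaterSensorParser {
    /** Parses one input line of the form "id,ts,vc" into a WaterSensor. */
    static WaterSensor parse(String line) {
        String[] fields = line.split(",");
        if (fields.length != 3) {
            // The original code would fail with an ArrayIndexOutOfBoundsException
            // on short input; this sketch reports the bad line instead.
            throw new IllegalArgumentException("expected id,ts,vc but got: " + line);
        }
        return new WaterSensor(
                fields[0].trim(),
                Long.parseLong(fields[1].trim()),
                Integer.parseInt(fields[2].trim()));
    }

    public static void main(String[] args) {
        System.out.println(parse("sensor_1,1607527992000,20"));
    }
}

// Assumed shape of org.wdh01.bean.WaterSensor (not shown in the original post).
class WaterSensor {
    private final String id;   // sensor id
    private final Long ts;     // timestamp of the reading
    private final Integer vc;  // the measured value

    WaterSensor(String id, Long ts, Integer vc) {
        this.id = id;
        this.ts = ts;
        this.vc = vc;
    }

    String getId() { return id; }
    Long getTs() { return ts; }
    Integer getVc() { return vc; }

    @Override
    public String toString() {
        return "WaterSensor(id=" + id + ", ts=" + ts + ", vc=" + vc + ")";
    }
}
```

Validating the field count up front is a design choice for the sketch: a single malformed line typed into the socket would otherwise fail the whole job with a less helpful exception.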

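Start a consumer

    bin/kafka-console-consumer.sh --bootstrap-server hadoop201:9092 --topic topic_sensor

Send test data to port 9998 on hadoop201 (the job connects to that socket, so something must be listening there first, for example `nc -lk 9998`), then watch the output in the IDEA console and in the consumer.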

RedisSink

Add the dependency

    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-redis_2.11</artifactId>
        <version>1.1.5</version>
    </dependency>

Start the Redis server and a Redis client beforehand.

Sample code

    import org.apache.flink.api.common.functions.MapFunction;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.connectors.redis.RedisSink;
    import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisPoolConfig;
    import org.apache.flink.streaming.connectors.redis.common.mapper.RedisCommand;
    import org.apache.flink.streaming.connectors.redis.common.mapper.RedisCommandDescription;
    import org.apache.flink.streaming.connectors.redis.common.mapper.RedisMapper;
    import org.wdh01.bean.WaterSensor;

    /**
     * Redis sink
     */
    public class Flink08_Sink_Redis {
        public static void main(String[] args) throws Exception {
            // 1. Get the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);

            // 2. Read lines from the socket and convert them to a JavaBean
            SingleOutputStreamOperator<WaterSensor> waterSensorDS = env.socketTextStream("hadoop103", 9998)
                    .map(new MapFunction<String, WaterSensor>() {
                        @Override
                        public WaterSensor map(String value) throws Exception {
                            String[] split = value.split(",");
                            return new WaterSensor(split[0],
                                    Long.parseLong(split[1]),
                                    Integer.parseInt(split[2]));
                        }
                    });

            // 3. Write the data to Redis
            FlinkJedisPoolConfig jedisPoolConfig = new FlinkJedisPoolConfig.Builder()
                    .setHost("hadoop103")
                    .setPort(6379)
                    .build();
            waterSensorDS.addSink(new RedisSink<>(jedisPoolConfig, new MyRedisMapper()));

            // 4. Execute the job
            env.execute();
        }

        public static class MyRedisMapper implements RedisMapper<WaterSensor> {
            @Override
            public RedisCommandDescription getCommandDescription() {
                // Use HSET; "Sensor" is the name of the Redis hash
                return new RedisCommandDescription(RedisCommand.HSET, "Sensor");
            }

            @Override
            public String getKeyFromData(WaterSensor data) {
                // Hash field: the sensor id
                return data.getId();
            }

            @Override
            public String getValueFromData(WaterSensor data) {
                // Hash value: the vc reading
                return data.getVc().toString();
            }
        }
    }

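Check whether Redis received the data. The records land in the hash named Sensor, so after opening the client, `HGETALL Sensor` lists them.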

redis-cli --raw
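Because the mapper uses HSET with the sensor id as the hash field, a later reading for the same id overwrites the earlier one, so the Sensor hash only ever holds the latest vc per sensor. The sketch below imitates that behaviour with an in-memory map. It does not talk to Redis; RedisHashSketch and the sample readings are hypothetical and exist only to show the overwrite semantics.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical in-memory imitation of the "Sensor" hash; no Redis involved.
class RedisHashSketch {
    /**
     * Applies the equivalent of "HSET Sensor <id> <vc>" for each record,
     * where record[0] is the sensor id and record[1] is the vc value.
     */
    static Map<String, String> applyHset(String[][] records) {
        Map<String, String> sensorHash = new LinkedHashMap<>();
        for (String[] record : records) {
            // Same field written twice: the newer value replaces the older one
            sensorHash.put(record[0], record[1]);
        }
        return sensorHash;
    }

    public static void main(String[] args) {
        String[][] records = {
                {"sensor_1", "10"},
                {"sensor_2", "30"},
                {"sensor_1", "20"},
        };
        System.out.println(applyHset(records)); // prints {sensor_1=20, sensor_2=30}
    }
}
```

If the full history of readings is needed rather than the latest value, a different key design (or a different Redis command) would be required; that is outside what the example job does.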

ElasticsearchSink

Add the dependency

    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-elasticsearch6_2.11</artifactId>
        <version>1.12.0</version>
    </dependency>

Sample code

    import org.apache.flink.api.common.functions.MapFunction;
    import org.apache.flink.api.common.functions.RuntimeContext;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.connectors.elasticsearch.ElasticsearchSinkFunction;
    import org.apache.flink.streaming.connectors.elasticsearch.RequestIndexer;
    import org.apache.flink.streaming.connectors.elasticsearch6.ElasticsearchSink;
    import org.apache.http.HttpHost;
    import org.elasticsearch.action.index.IndexRequest;
    import org.elasticsearch.client.Requests;
    import org.wdh01.bean.WaterSensor;

    import java.util.ArrayList;
    import java.util.HashMap;

    /**
     * Elasticsearch sink
     */
    public class Flink09_Sink_ES {
        public static void main(String[] args) throws Exception {
            // 1. Get the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);

            // 2. Read lines from the socket and convert them to a JavaBean
            SingleOutputStreamOperator<WaterSensor> waterSensorDS = env.socketTextStream("hadoop103", 9998)
                    .map(new MapFunction<String, WaterSensor>() {
                        @Override
                        public WaterSensor map(String value) throws Exception {
                            String[] split = value.split(",");
                            return new WaterSensor(split[0],
                                    Long.parseLong(split[1]),
                                    Integer.parseInt(split[2]));
                        }
                    });

            // 3. Write the data to Elasticsearch
            ArrayList<HttpHost> httpHosts = new ArrayList<>();
            httpHosts.add(new HttpHost("hadoop103", 9200));
            ElasticsearchSink.Builder<WaterSensor> waterSensorBuilder =
                    new ElasticsearchSink.Builder<WaterSensor>(httpHosts, new MyESSinkFunction());
            // Flush after every single record. Without this the data is buffered and
            // not visible right away; set it only while testing, to see results quickly.
            waterSensorBuilder.setBulkFlushMaxActions(1);
            ElasticsearchSink<WaterSensor> esSink = waterSensorBuilder.build();
            waterSensorDS.addSink(esSink);

            // 4. Execute the job
            env.execute();
        }

        public static class MyESSinkFunction implements ElasticsearchSinkFunction<WaterSensor> {
            @Override
            public void process(WaterSensor waterSensor, RuntimeContext runtimeContext, RequestIndexer requestIndexer) {
                // Build the document body; "ts" must come from getTs(), not getId()
                HashMap<String, String> source = new HashMap<>();
                source.put("ts", String.valueOf(waterSensor.getTs()));
                source.put("vc", String.valueOf(waterSensor.getVc()));

                // Create the index request; the sensor id is used as the document id
                IndexRequest indexRequest = Requests.indexRequest()
                        .index("sensor")
                        .type("_doc")
                        .id(waterSensor.getId())
                        .source(source);

                // Hand the request to the indexer
                requestIndexer.add(indexRequest);
            }
        }
    }

Custom Sink

If Flink does not ship a ready-made connector for the system we want to write to, we have to implement the sink ourselves. As an example, we write a custom sink for MySQL.

Create the MySQL table

    use test;
    CREATE TABLE `sensor` (
      `id` varchar(20) NOT NULL,
      `ts` bigint(20) NOT NULL,
      `vc` int(11) NOT NULL,
      PRIMARY KEY (`id`,`ts`)
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8;

Add the MySQL dependency

    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.18</version>
    </dependency>

Sample code

    import org.apache.flink.api.common.functions.MapFunction;
    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;
    import org.wdh01.bean.WaterSensor;

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.PreparedStatement;

    /**
     * MySQL sink
     */
    public class Flink10_Sink_Mysql {
        public static void main(String[] args) throws Exception {
            // 1. Get the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);

            // 2. Read lines from the socket and convert them to a JavaBean
            SingleOutputStreamOperator<WaterSensor> waterSensorDS = env.socketTextStream("hadoop103", 9998)
                    .map(new MapFunction<String, WaterSensor>() {
                        @Override
                        public WaterSensor map(String value) throws Exception {
                            String[] split = value.split(",");
                            return new WaterSensor(split[0],
                                    Long.parseLong(split[1]),
                                    Integer.parseInt(split[2]));
                        }
                    });

            // 3. Write the data to MySQL
            waterSensorDS.addSink(new MySink());

            // 4. Execute the job
            env.execute();
        }

        public static class MySink extends RichSinkFunction<WaterSensor> {
            // Connection and statement are created once in open() and reused per record
            private Connection connection;
            private PreparedStatement preparedStatement;

            @Override
            public void open(Configuration parameters) throws Exception {
                super.open(parameters);
                connection = DriverManager.getConnection(
                        "jdbc:mysql://hadoop103:3306/test?useSSL=false", "root", "521hui");
                // The SQL must be a single string literal; Java strings cannot span lines
                preparedStatement = connection.prepareStatement(
                        "insert into sensor values (?,?,?) ON DUPLICATE KEY UPDATE ts=?,vc=?");
            }

            @Override
            public void close() throws Exception {
                super.close();
                // open() may have failed part-way, so guard against null
                if (preparedStatement != null) {
                    preparedStatement.close();
                }
                if (connection != null) {
                    connection.close();
                }
            }

            @Override
            public void invoke(WaterSensor value, Context context) throws Exception {
                // Bind the placeholders
                preparedStatement.setString(1, value.getId());
                preparedStatement.setLong(2, value.getTs());
                preparedStatement.setInt(3, value.getVc());
                preparedStatement.setLong(4, value.getTs());
                preparedStatement.setInt(5, value.getVc());
                // Execute the SQL
                preparedStatement.execute();
            }
        }
    }

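Another version: the same write done with Flink's built-in JdbcSink instead of a hand-written RichSinkFunction. This version additionally needs the flink-connector-jdbc_2.11 dependency in the version matching Flink (1.12.0 here).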

    import org.apache.flink.api.common.functions.MapFunction;
    import org.apache.flink.connector.jdbc.JdbcConnectionOptions;
    import org.apache.flink.connector.jdbc.JdbcExecutionOptions;
    import org.apache.flink.connector.jdbc.JdbcSink;
    import org.apache.flink.connector.jdbc.JdbcStatementBuilder;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.wdh01.bean.WaterSensor;

    import java.sql.PreparedStatement;
    import java.sql.SQLException;

    /**
     * JDBC sink
     */
    public class Flink11_Sink_JDBC {
        public static void main(String[] args) throws Exception {
            // 1. Get the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);

            // 2. Read lines from the socket and convert them to a JavaBean
            SingleOutputStreamOperator<WaterSensor> waterSensorDS = env.socketTextStream("hadoop103", 9998)
                    .map(new MapFunction<String, WaterSensor>() {
                        @Override
                        public WaterSensor map(String value) throws Exception {
                            String[] split = value.split(",");
                            return new WaterSensor(split[0],
                                    Long.parseLong(split[1]),
                                    Integer.parseInt(split[2]));
                        }
                    });

            // 3. Write the data to MySQL
            waterSensorDS.addSink(JdbcSink.sink(
                    // The SQL must be a single string literal; Java strings cannot span lines
                    "insert into sensor values (?,?,?) ON DUPLICATE KEY UPDATE ts=?,vc=?",
                    new JdbcStatementBuilder<WaterSensor>() {
                        @Override
                        public void accept(PreparedStatement preparedStatement, WaterSensor value) throws SQLException {
                            // Bind the placeholders
                            preparedStatement.setString(1, value.getId());
                            preparedStatement.setLong(2, value.getTs());
                            preparedStatement.setInt(3, value.getVc());
                            preparedStatement.setLong(4, value.getTs());
                            preparedStatement.setInt(5, value.getVc());
                        }
                    },
                    // Batch size 1: every record is written immediately
                    JdbcExecutionOptions.builder().withBatchSize(1).build(),
                    new JdbcConnectionOptions.JdbcConnectionOptionsBuilder()
                            .withUrl("jdbc:mysql://hadoop103:3306/test?useSSL=false")
                            .withDriverName("com.mysql.jdbc.Driver")
                            .withUsername("root")
                            .withPassword("521hui")
                            .build()
            ));

            // 4. Execute the job
            env.execute();
        }
    }
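It is worth spelling out what the `ON DUPLICATE KEY UPDATE` statement does with this table. The primary key is (id, ts), so a record only counts as a duplicate when both id and ts match an existing row; in that case vc is updated, and the `ts=?` assignment just rewrites the same value. A record with a known id but a new ts is inserted as a new row. The sketch below imitates this with an in-memory map keyed by id and ts. UpsertSketch is hypothetical and does not connect to MySQL; it only illustrates the expected row count and values.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical in-memory imitation of the sensor table; no database involved.
class UpsertSketch {
    // Composite primary key (id, ts), encoded as "id|ts", mapped to vc
    private final Map<String, Integer> rows = new LinkedHashMap<>();

    /** Mimics: insert into sensor values (?,?,?) ON DUPLICATE KEY UPDATE ts=?,vc=? */
    void upsert(String id, long ts, int vc) {
        // Same (id, ts): the existing row's vc is replaced. Otherwise a row is added.
        rows.put(id + "|" + ts, vc);
    }

    int rowCount() {
        return rows.size();
    }

    Integer vcOf(String id, long ts) {
        return rows.get(id + "|" + ts);
    }

    public static void main(String[] args) {
        UpsertSketch table = new UpsertSketch();
        table.upsert("sensor_1", 1000L, 10); // new row
        table.upsert("sensor_1", 1000L, 15); // same key: vc updated to 15
        table.upsert("sensor_1", 2000L, 20); // new ts: second row
        System.out.println(table.rowCount());              // prints 2
        System.out.println(table.vcOf("sensor_1", 1000L)); // prints 15
    }
}
```

This upsert behaviour is also what makes re-sending the same test line harmless: the job does not fail with a duplicate-key error, it simply overwrites the row.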
