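Flink Transform (Part 1)

Introduction to Transform

A transformation operator turns one or more DataStreams into a new DataStream. A program can combine several transformations into a complex dataflow topology.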
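Running Flink code needs the Flink dependencies on the classpath, but the way transformations compose can be tried in plain Java. The sketch below is not Flink code: it uses `java.util.stream`, whose `map`, `filter` and `flatMap` follow the same per-element rules, to chain three transformations over a bounded in-memory list. The `Event` class is an assumed stand-in for the article's POJO, and the sample data is made up for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Plain-Java illustration using java.util.stream, not the Flink DataStream API.
final class TopologySketch {

    // Assumed stand-in for the article's Event POJO (user, url, timestamp).
    static final class Event {
        final String user;
        final String url;
        final Long timestamp;

        Event(String user, String url, Long timestamp) {
            this.user = user;
            this.url = url;
            this.timestamp = timestamp;
        }
    }

    // filter -> map -> flatMap chained into a single pipeline.
    static List<String> pipeline(List<Event> events) {
        return events.stream()
                .filter(e -> e.timestamp >= 5000L)          // keep events at or after t = 5000
                .map(e -> e.user + "@" + e.url)             // one Event in, one String out
                .flatMap(s -> Arrays.stream(s.split("@")))  // one String in, two Strings out
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Event> events = Arrays.asList(
                new Event("alice", "/home", 1000L),
                new Event("bob", "/cat", 9000L),
                new Event("carol", "/pay", 8000L));
        System.out.println(pipeline(events)); // [bob, /cat, carol, /pay]
    }
}
```

Unlike a DataStream, a Java stream is bounded and runs in one thread here, so this only shows how the operators compose, not how Flink distributes them.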

Common operators

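1. map

Purpose: transforms the elements of a stream to form a new stream; it consumes one element and produces exactly one element.

Parameter: a lambda expression or an implementation of MapFunction

Returns: DataStream → DataStream

Example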

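    import org.apache.flink.api.common.functions.MapFunction;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

    // Members of the example class; assumes the article's Event POJO
    // (fields user, url, timestamp) is defined elsewhere.
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // Read data from a fixed set of elements
        DataStreamSource<Event> eventDStream = env.fromElements(
                new Event("令狐冲", "/home", 1000L),
                new Event("依琳", "/cat", 9000L),
                new Event("任盈盈", "/pay", 8000L)
        );

        // Transform: extract the user field
        // Option 1: a custom class implementing MapFunction
        SingleOutputStreamOperator<String> users = eventDStream.map(new MyMapFun());

        // Option 2: an anonymous class
        SingleOutputStreamOperator<String> user1 = eventDStream.map(new MapFunction<Event, String>() {
            @Override
            public String map(Event value) throws Exception {
                return value.user;
            }
        });

        // Option 3: MapFunction has a single abstract method, so a lambda works too
        SingleOutputStreamOperator<String> user2 = eventDStream.map(data -> data.user);

        users.print();
        user1.print();
        user2.print();

        env.execute();
    }

    // Custom MapFunction
    public static class MyMapFun implements MapFunction<Event, String> {
        @Override
        public String map(Event value) throws Exception {
            return value.user;
        }
    }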
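The lambda form in the map example works because MapFunction declares a single abstract method. The plain-Java sketch below illustrates that idea and the one-in, one-out contract with a hypothetical `MapFn` interface shaped like MapFunction; `MapFn`, `MapSketch` and `applyMap` are illustrative names, not Flink API.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch of the map contract; not Flink code.
final class MapSketch {

    // Hypothetical stand-in shaped like Flink's MapFunction: a single abstract
    // method, so Java accepts a lambda, method reference or anonymous class.
    @FunctionalInterface
    interface MapFn<IN, OUT> {
        OUT map(IN value) throws Exception;
    }

    // Exactly one output per input, in input order.
    static <IN, OUT> List<OUT> applyMap(List<IN> input, MapFn<IN, OUT> fn) {
        List<OUT> out = new ArrayList<>(input.size());
        for (IN value : input) {
            try {
                out.add(fn.map(value));
            } catch (Exception e) {
                // Surface a failing user function as an unchecked exception.
                throw new RuntimeException(e);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> urls = List.of("/home", "/cat", "/pay");
        // Lambda form, like eventDStream.map(data -> data.user).
        System.out.println(applyMap(urls, url -> url.length())); // [5, 4, 4]
        // Anonymous-class form, like new MapFunction<Event, String>() { ... }.
        System.out.println(applyMap(urls, new MapFn<String, String>() {
            @Override
            public String map(String value) {
                return value.toUpperCase();
            }
        })); // [/HOME, /CAT, /PAY]
    }
}
```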

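2. flatMap

Purpose: consumes one element and produces zero, one, or more elements

Parameter: an implementation of FlatMapFunction (a lambda also works, but its output type must then be declared with returns(...))

Returns: DataStream → DataStream

Example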

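    import org.apache.flink.api.common.functions.FlatMapFunction;
    import org.apache.flink.api.common.typeinfo.TypeHint;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.util.Collector;

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // Read data from a fixed set of elements
        DataStreamSource<Event> eventDStream = env.fromElements(
                new Event("令狐冲", "/home", 1000L),
                new Event("依琳", "/cat", 9000L),
                new Event("任盈盈", "/pay", 8000L)
        );

        // Option 1: a class implementing FlatMapFunction
        SingleOutputStreamOperator<String> flatmapRes = eventDStream.flatMap(new MyFlatMapFun());
        flatmapRes.print();

        // Option 2: an anonymous class
        eventDStream.flatMap(new FlatMapFunction<Event, String>() {
            @Override
            public void flatMap(Event value, Collector<String> out) throws Exception {
                out.collect(value.user);
                out.collect(value.url);
                out.collect(value.timestamp.toString());
            }
        }).print();

        // Option 3: a lambda; the generic type of Collector<String> is erased,
        // so Flink needs an explicit type hint via returns(...)
        eventDStream.flatMap((Event value, Collector<String> out) -> {
            out.collect(value.user);
            out.collect(value.url);
            out.collect(value.timestamp.toString());
        }).returns(new TypeHint<String>() {}).print();

        env.execute();
    }

    // Emits three elements (user, url, timestamp) for every input Event
    public static class MyFlatMapFun implements FlatMapFunction<Event, String> {
        @Override
        public void flatMap(Event value, Collector<String> out) throws Exception {
            out.collect(value.user);
            out.collect(value.url);
            out.collect(value.timestamp.toString());
        }
    }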

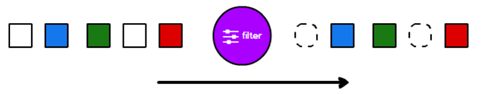
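The Collector is what lets flatMap emit any number of results per input. The plain-Java sketch below mimics that with a hypothetical `FlatMapFn` interface in which a `java.util.function.Consumer` stands in for Flink's Collector; the names are illustrative, not Flink API. Here javac infers the lambda's output type at compile time, whereas Flink has to extract it when building the job, and for a lambda taking `Collector<String>` that generic parameter is erased, which is why the flatMap lambda example needs `.returns(...)`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Plain-Java sketch of the flatMap contract; not Flink code.
final class FlatMapSketch {

    // Hypothetical stand-in for Flink's FlatMapFunction; the Consumer plays
    // the role of Flink's Collector.
    @FunctionalInterface
    interface FlatMapFn<IN, OUT> {
        void flatMap(IN value, Consumer<OUT> out);
    }

    // Each input may emit zero, one or many outputs through the consumer.
    static <IN, OUT> List<OUT> applyFlatMap(List<IN> input, FlatMapFn<IN, OUT> fn) {
        List<OUT> out = new ArrayList<>();
        for (IN value : input) {
            fn.flatMap(value, out::add);
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> lines = List.of("hello flink", "", "map");
        // Split each line into words; the empty line emits nothing.
        List<String> words = applyFlatMap(lines, (String line, Consumer<String> collector) -> {
            for (String word : line.split(" ")) {
                if (!word.isEmpty()) {
                    collector.accept(word);
                }
            }
        });
        System.out.println(words); // [hello, flink, map]
    }
}
```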

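3. filter

Purpose: keeps the elements for which the given predicate returns true and discards those for which it returns false

Parameter: a lambda expression or an implementation of FilterFunction

Returns: DataStream → DataStream

Example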

    import org.apache.flink.api.common.functions.FilterFunction;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // Read data from a fixed set of elements
        DataStreamSource<Event> eventDStream = env.fromElements(
                new Event("令狐冲", "/home", 1000L),
                new Event("依琳", "/cat", 9000L),
                new Event("任盈盈", "/pay", 8000L)
        );

        // Option 1: a class implementing FilterFunction
        SingleOutputStreamOperator<Event> filter = eventDStream.filter(new MyFilterFun());

        // Option 2: an anonymous class
        SingleOutputStreamOperator<Event> filter1 = eventDStream.filter(new FilterFunction<Event>() {
            @Override
            public boolean filter(Event value) throws Exception {
                return value.user.equals("依琳");
            }
        });

        // Option 3: a lambda
        eventDStream.filter(data -> data.user.equals("依琳")).print();

        filter.print();
        filter1.print();

        env.execute();
    }

    // Keeps only the events whose user is "依琳"
    public static class MyFilterFun implements FilterFunction<Event> {
        @Override
        public boolean filter(Event value) throws Exception {
            return value.user.equals("依琳");
        }
    }
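filter never changes the element type; it only decides whether each element passes. The plain-Java sketch below models that with a hypothetical `FilterFn` interface (an illustrative name, not Flink API). It writes the comparison constant-first, so a null field would simply fail the predicate instead of throwing a NullPointerException.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch of the filter contract; not Flink code.
final class FilterSketch {

    // Hypothetical stand-in for Flink's FilterFunction: true keeps, false drops.
    @FunctionalInterface
    interface FilterFn<T> {
        boolean filter(T value);
    }

    // The element type is unchanged: List<T> in, List<T> out.
    static <T> List<T> applyFilter(List<T> input, FilterFn<T> fn) {
        List<T> out = new ArrayList<>();
        for (T value : input) {
            if (fn.filter(value)) {
                out.add(value);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> users = List.of("alice", "bob", "alice");
        // Constant-first equals is null-safe.
        System.out.println(applyFilter(users, u -> "alice".equals(u))); // [alice, alice]
    }
}
```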

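4. connect

Purpose: sometimes two data streams from different sources must be connected so that their records can be matched. For example, order-payment events and third-party transaction records come from different sources; only after the two are connected and each payment is reconciled against its third-party record can the payment be considered complete. Flink's connect operator connects two data streams while each keeps its own type. After connect, the two streams are merely placed inside one stream; internally each keeps its own data and form unchanged, and the two streams remain independent of each other.

Parameter: the other DataStream

Returns: DataStream[A], DataStream[B] → ConnectedStreams[A, B]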

    import org.apache.flink.streaming.api.datastream.ConnectedStreams;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.api.functions.co.CoMapFunction;

    public class Flink10_Transform_Connect {
        public static void main(String[] args) throws Exception {
            // 1. Get the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            // With parallelism 1 the output follows arrival order; with more, order is not guaranteed
            env.setParallelism(1);

            // 2. Read data from two socket ports
            DataStreamSource<String> stringDS = env.socketTextStream("hadoop103", 9998);
            DataStreamSource<String> socketTextStream2 = env.socketTextStream("hadoop103", 9988);

            // 3. Convert socketTextStream2 to Integer (the length of each line)
            SingleOutputStreamOperator<Integer> intDs = socketTextStream2.map(String::length); // method reference
            // SingleOutputStreamOperator<Integer> map = socketTextStream2.map(data -> data.length()); // lambda expression

            // 4. Connect the two streams
            ConnectedStreams<String, Integer> connect = stringDS.connect(intDs);

            // 5. Process the connected stream: map1 handles the String side, map2 the Integer side
            SingleOutputStreamOperator<Object> map = connect.map(new CoMapFunction<String, Integer, Object>() {
                @Override
                public Object map1(String value) throws Exception {
                    return value;
                }

                @Override
                public Object map2(Integer value) throws Exception {
                    return value;
                }
            });

            // 6. Print
            map.print();

            // 7. Execute
            env.execute();
        }
    }

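Notes:

- The two streams may hold different data types

- They are only put together mechanically; internally they are still two separate streams

- connect works on exactly two streams; a third stream cannot take part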
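These notes can be illustrated with a plain-Java model of ConnectedStreams and CoMapFunction. `Connected` and `CoMapFn` below are hypothetical stand-ins, not Flink classes: each input keeps its own type and goes through its own method (`map1` or `map2`), and both methods must return a common output type, as map1/map2 do in the socket example. In Flink there is no ordering guarantee between the two inputs; this model simply handles the first list and then the second.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model of connect + CoMap; not Flink code.
final class ConnectSketch {

    // Hypothetical stand-in for Flink's CoMapFunction<IN1, IN2, OUT>.
    interface CoMapFn<IN1, IN2, OUT> {
        OUT map1(IN1 value); // applied to elements of the first input
        OUT map2(IN2 value); // applied to elements of the second input
    }

    // Hypothetical stand-in for ConnectedStreams<A, B>: the inputs keep their own types.
    static final class Connected<A, B> {
        final List<A> first;
        final List<B> second;

        Connected(List<A> first, List<B> second) {
            this.first = first;
            this.second = second;
        }

        // Both sides must map to the same OUT type; this model handles
        // the first input, then the second.
        <OUT> List<OUT> map(CoMapFn<A, B, OUT> fn) {
            List<OUT> out = new ArrayList<>();
            for (A a : first) {
                out.add(fn.map1(a));
            }
            for (B b : second) {
                out.add(fn.map2(b));
            }
            return out;
        }
    }

    public static void main(String[] args) {
        Connected<String, Integer> connected =
                new Connected<>(List.of("o1", "o2"), List.of(100, 200));
        List<String> merged = connected.map(new CoMapFn<String, Integer, String>() {
            @Override
            public String map1(String value) {
                return "order:" + value;
            }

            @Override
            public String map2(Integer value) {
                return "amount:" + value;
            }
        });
        System.out.println(merged); // [order:o1, order:o2, amount:100, amount:200]
    }
}
```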

5. union

Purpose: unions two or more DataStreams, producing a new DataStream that contains all elements of every input stream

    import org.apache.flink.streaming.api.datastream.DataStream;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

    public class Flink01_Transform_Union {
        public static void main(String[] args) throws Exception {
            // 1. Get the execution environment
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

            // 2. Read data from two socket ports
            DataStreamSource<String> socketTextStream1 = env.socketTextStream("hadoop103", 9998);
            DataStreamSource<String> socketTextStream2 = env.socketTextStream("hadoop103", 9988);

            // 3. Union the two streams (both are DataStream<String>)
            DataStream<String> union = socketTextStream1.union(socketTextStream2);

            // 4. Print
            union.print();

            // 5. Execute
            env.execute();
        }
    }
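Flink declares `DataStream.union` with varargs (`union(DataStream<T>... streams)`), which is why it accepts any number of inputs but only of the same element type `T`. The plain-Java sketch below mirrors that signature on lists; `UnionSketch` is an illustrative name, and its concatenation order stands in for what is, in Flink, a merge with no ordering guarantee across inputs.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch of union semantics; not Flink code.
final class UnionSketch {

    // Mirrors the shape of DataStream<T>.union(DataStream<T>... streams):
    // every input shares the element type T, and any number can be merged.
    @SafeVarargs
    static <T> List<T> union(List<T> first, List<T>... others) {
        List<T> out = new ArrayList<>(first);
        for (List<T> other : others) {
            out.addAll(other);
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> a = List.of("a1", "a2");
        List<String> b = List.of("b1");
        List<String> c = List.of("c1", "c2");
        System.out.println(union(a, b, c)); // [a1, a2, b1, c1, c2]
        // union(a, List.of(1, 2)) would not compile: the element types differ.
    }
}
```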

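Differences between connect and union:

- The streams passed to union must all have the same type; with connect the two types may differ

- connect operates on exactly two streams; union can combine more than two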
