TensorFlow: From Getting Started to Getting Stuck

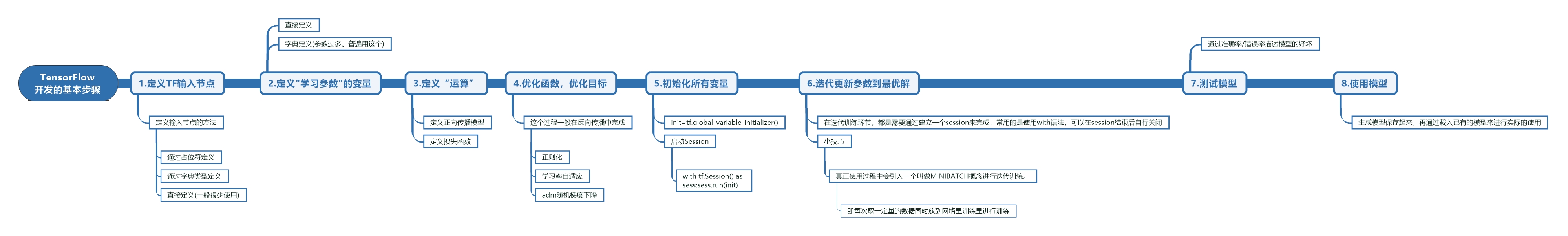

Basic steps of TensorFlow development

Getting started

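# Note: this code uses the TensorFlow 1.x graph API (tf.Session, tf.train).
import tensorflow as tf
import numpy as np

# Create the data
x_data = np.random.rand(100).astype(np.float32)
# Target values: the learned weight should approach 0.1 and the bias 0.3
y_data = x_data * .1 + .3

# --- Start building the graph ---
# One-element tensor, initialized uniformly in the range -1 to 1
Weights = tf.Variable(tf.random_uniform([1], -1.0, 1.0))
biases = tf.Variable(tf.zeros([1]))

# Prediction
y = Weights * x_data + biases

# Loss: mean squared error between prediction and target
loss = tf.reduce_mean(tf.square(y - y_data))

# Optimizer: gradient descent with a learning rate of 0.5
optimizer = tf.train.GradientDescentOptimizer(.5)

# Reduce the error in order to improve accuracy
train = optimizer.minimize(loss)

# Op that initializes all variables
init = tf.global_variables_initializer()
# --- Finish building the graph ---

sess = tf.Session()
sess.run(init)  # run the initializer

for step in range(201):
    sess.run(train)
    if step % 20 == 0:  # print the result every 20 steps
        print(step, sess.run(Weights), sess.run(biases))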
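
To make it clearer what `optimizer.minimize(loss)` does on each `sess.run(train)`, here is a minimal sketch of the same fit in plain Python, without TensorFlow. It is an illustration under assumptions, not TensorFlow's implementation: the names `xs`, `ys`, `w`, `b`, and `lr` are chosen here, and the gradients of the mean squared error are written out by hand.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

# Same synthetic data as the script above: y = 0.1 * x + 0.3
xs = [random.random() for _ in range(100)]
ys = [0.1 * x + 0.3 for x in xs]

w = random.uniform(-1.0, 1.0)  # plays the role of Weights
b = 0.0                        # plays the role of biases
lr = 0.5                       # learning rate, as passed to the optimizer above

for step in range(201):
    # Prediction error for every sample
    errs = [w * x + b - y for x, y in zip(xs, ys)]
    # Gradients of mean((w*x + b - y)^2) with respect to w and b
    grad_w = 2 * sum(e * x for e, x in zip(errs, xs)) / len(xs)
    grad_b = 2 * sum(errs) / len(xs)
    # Gradient descent update
    w -= lr * grad_w
    b -= lr * grad_b
    if step % 20 == 0:
        print(step, w, b)
```

As in the TensorFlow version, the printed values should drift toward a weight of 0.1 and a bias of 0.3.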

Session

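import tensorflow as tf
import numpy as np

matrix01 = tf.constant([[3, 3]])  # shape (1, 2)
print(matrix01)

matrix02 = tf.constant([
    [2],
    [2]
])  # shape (2, 1)
print(matrix02)

product = tf.matmul(matrix01, matrix02)  # matrix multiplication
print(product)  # prints the Tensor object; the value is only computed inside a session

# Method 1: create and close the session explicitly
# sess = tf.Session()
# print(sess)
# result = sess.run(product)
# print(result)
# sess.close()

# Method 2: the with block closes the session automatically
with tf.Session() as sess:
    result1 = sess.run(product)
    print(result1)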
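
For reference, the arithmetic behind `tf.matmul` on these two constants can be checked by hand. The sketch below is plain Python and is not how TensorFlow computes it; the helper `matmul` is a hypothetical name introduced only for this illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    inner = len(b)
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    cols = len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]

matrix01 = [[3, 3]]    # shape (1, 2)
matrix02 = [[2], [2]]  # shape (2, 1)

product = matmul(matrix01, matrix02)
print(product)  # [[12]], because 3*2 + 3*2 = 12
```

A (1, 2) matrix times a (2, 1) matrix gives a (1, 1) result, which is why the session run returns a single nested value.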

Variable

import tensorflow as tf
import numpy as np

state = tf.Variable(0, name="counter")
print(state.name)

one = tf.constant(1)
new_value = tf.add(state, one)
print(new_value)

# Op that writes new_value back into state when it is run
update = tf.assign(state, new_value)
print(update)

init = tf.global_variables_initializer()  # initialize all variables

with tf.Session() as sess:
    sess.run(init)
    for _ in range(3):
        sess.run(update)
        print(sess.run(state))
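
The point of this example is that `tf.add` and `tf.assign` only describe work; nothing changes until the session runs `update`. A rough plain-Python analogy, with the dictionary `state` and the function `update` standing in for the variable and the assign op (both are assumptions made for illustration, not TensorFlow behavior in detail):

```python
state = {"counter": 0}  # stands in for tf.Variable(0, name="counter")

def update():
    # Stands in for the assign op: defining it changes nothing,
    # the counter only moves when the function is called.
    state["counter"] = state["counter"] + 1
    return state["counter"]

history = []
for _ in range(3):
    history.append(update())  # analogous to sess.run(update)

print(history)  # [1, 2, 3]
```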

Feeding values with placeholder

import tensorflow as tf
import numpy as np

# Placeholders have no value until one is supplied through feed_dict
input01 = tf.placeholder(tf.float32)
input02 = tf.placeholder(tf.float32)

output = tf.multiply(input01, input02)

with tf.Session() as sess:
    print(sess.run(output, feed_dict={input01: [7.], input02: [8.]}))

Activation functions

http://www.tensorfly.cn/tfdoc/api_docs/python/nn.html
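
As a quick illustration of what an activation function does to a value, here is a minimal plain-Python sketch of three common ones. The function names `relu`, `sigmoid`, and `tanh` are defined here for illustration; they mirror the math of `tf.nn.relu`, `tf.nn.sigmoid`, and `tf.nn.tanh` for a single number, not their tensor implementations.

```python
import math

def relu(x):
    # Passes positive values through and clips negatives to zero
    return max(0.0, x)

def sigmoid(x):
    # Squashes any real number into the interval (0, 1)
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    # Squashes any real number into the interval (-1, 1)
    return math.tanh(x)

for v in (-2.0, 0.0, 2.0):
    print(v, relu(v), round(sigmoid(v), 4), round(tanh(v), 4))
```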

Adding a layer

import tensorflow as tf
import numpy as np

# Add a layer
def add_layer_func(inputs, input_size, output_size, activation_function=None):
    # Weights start from a normal distribution, biases start at 0.1
    Weights = tf.Variable(tf.random_normal([input_size, output_size]))
    biases = tf.Variable(tf.zeros([1, output_size]) + 0.1)
    Wx_plus_biases = tf.matmul(inputs, Weights) + biases
    if activation_function is None:
        # No activation: the layer is linear
        outputs = Wx_plus_biases
    else:
        outputs = activation_function(Wx_plus_biases)
    return outputs
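
To see the shapes involved, the forward pass of such a layer can be sketched in plain Python with fixed numbers. This is an illustration under assumptions: `dense_forward` is a hypothetical helper, and the weights and biases are passed in explicitly rather than created as variables.

```python
def dense_forward(inputs, weights, biases, activation=None):
    """Compute activation(inputs @ weights + biases) for lists of rows."""
    outputs = []
    for row in inputs:
        # One output per column of the weight matrix
        z = [
            sum(x * weights[i][j] for i, x in enumerate(row)) + biases[j]
            for j in range(len(biases))
        ]
        outputs.append([activation(v) for v in z] if activation else z)
    return outputs

def relu(x):
    return max(0.0, x)

inputs = [[1.0], [-1.0]]  # 2 samples, 1 feature each
weights = [[1.0, -2.0]]   # input_size = 1, output_size = 2
biases = [0.1, 0.1]

linear = dense_forward(inputs, weights, biases)
hidden = dense_forward(inputs, weights, biases, activation=relu)
print(linear)
print(hidden)
```

With two samples and an output size of 2, both results have two rows and two columns; the ReLU version replaces every negative entry with zero.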

Building a neural network

import tensorflow as tf
import numpy as np

# Add a neural network layer
def add_layer_func(inputs, input_size, output_size, activation_function=None):
    Weights = tf.Variable(tf.random_normal([input_size, output_size]))
    biases = tf.Variable(tf.zeros([1, output_size]) + 0.1)
    Wx_plus_biases = tf.matmul(inputs, Weights) + biases
    if activation_function is None:
        outputs = Wx_plus_biases
    else:
        outputs = activation_function(Wx_plus_biases)
    return outputs

# Input data: 300 points on [-1, 1], with y = x^2 - 0.5 plus Gaussian noise
x_data = np.linspace(-1, 1, 300)[:, np.newaxis]
noise = np.random.normal(0, 0.05, x_data.shape)
y_data = np.square(x_data) - 0.5 + noise

# Placeholders for the inputs and targets
xs = tf.placeholder(tf.float32, [None, 1])
ys = tf.placeholder(tf.float32, [None, 1])

# Hidden layer: 1 input, 10 units, ReLU activation
hide = add_layer_func(xs, 1, 10, activation_function=tf.nn.relu)

# Output layer: 10 inputs, 1 output, no activation
prediction = add_layer_func(hide, 10, 1, activation_function=None)

# Loss: squared error summed per sample, then averaged over the batch
loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), reduction_indices=[1]))

train_step = tf.train.GradientDescentOptimizer(.1).minimize(loss)  # learning rate, usually less than 1

init = tf.global_variables_initializer()
sess = tf.Session()
sess.run(init)

for i in range(1000):
    sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
    if i % 50 == 0:
        # Print the loss every 50 steps; it should decrease over time
        print(sess.run(loss, feed_dict={xs: x_data, ys: y_data}))

Visualization

Optimizers

https://www.tensorflow.org/api_docs/python/tf/train#Optimizers

posted on 2019-04-07 00:00 Indian_Mysore