Docker error br-xxxx: the bridge auto-created by Docker Compose conflicts with the LAN

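Problem description:

I deployed an internal Harbor registry with docker-compose. Compose automatically created a bridge network (172.18.0.1/16) that conflicted with one of our internal subnets. Docker's default network mode is bridge, and the default bridge, docker0, uses 172.17.0.1/16.

Each time docker-compose up -d creates a new project network (for example, when several Compose projects are deployed), the auto-created bridge takes the next free range in turn: 172.18.x.x, then 172.19.x.x, and so on.

One of our internal subnets happened to be 172.18.x.x as well. The Docker bridge gave the host a directly connected route for 172.18.0.0/16. As a result, traffic to any 172.18.x.x address was handed to that local bridge instead of leaving through eth0, and this machine could not reach the 172.18.x.x hosts at all.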
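The conflict can be checked directly: does the LAN host 172.18.5.145 (the target from step 10) fall inside the bridge subnet 172.18.0.1/16? The minimal bash sketch below answers that question. The helper names `ip_to_int` and `cidr_contains` are hypothetical and are not Docker tools. If the check succeeds, the kernel's connected route for the bridge captures the traffic.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for illustration; not part of Docker or iproute2.

# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_contains NET/PREFIX IP -> succeeds (exit 0) if IP lies inside NET/PREFIX.
cidr_contains() {
  local net=${1%/*} prefix=${1#*/} mask n ip
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(ip_to_int "$net")
  ip=$(ip_to_int "$2")
  (( (n & mask) == (ip & mask) ))
}

# The Compose bridge 172.18.0.1/16 covers the LAN host 172.18.5.145,
# so the kernel delivers that traffic to the local bridge instead of eth0.
if cidr_contains 172.18.0.1/16 172.18.5.145; then
  echo "conflict: 172.18.5.145 is inside the Docker bridge subnet"
fi
```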

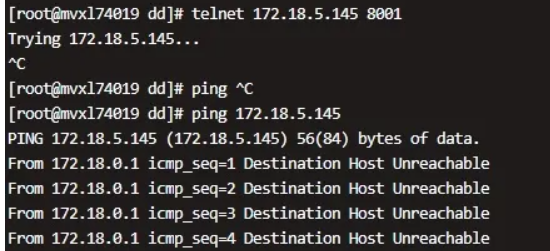

Symptoms:

Neither telnet nor ping can reach the target host.

Solution:

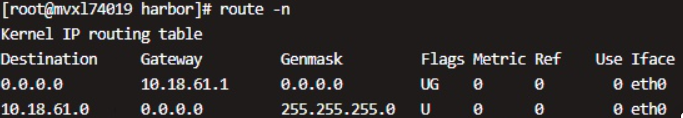

Step 1: Check the routing table:

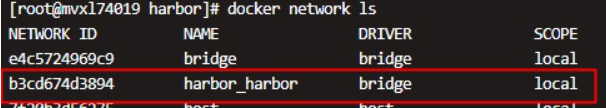
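On a live host, `ip route get 172.18.5.145` (iproute2) prints the route the kernel would actually use. To check a saved `route -n` dump instead, the sketch below applies longest-prefix matching to that output. The helper `route_iface` is hypothetical. It returns eth0 for the routing table shown in step 7. A table that still has a 172.18.0.0/16 entry on a `br-` interface returns that bridge instead; the "before" sample in the usage note is reconstructed for illustration, not copied from the screenshot.

```shell
#!/usr/bin/env bash
# Hypothetical helper: route_iface reads `route -n` output on stdin and prints
# the interface that longest-prefix matching selects for the IPv4 address in $1.

# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

route_iface() {
  local target best_mask=-1 best_iface="" d m dest mask iface
  local ipre='^[0-9]+(\.[0-9]+){3}$'
  target=$(ip_to_int "$1")
  # Columns: Destination Gateway Genmask Flags Metric Ref Use Iface
  while read -r dest _ mask _ _ _ _ iface; do
    # Skip the "Kernel IP routing table" and column-header lines.
    [[ $dest =~ $ipre ]] || continue
    d=$(ip_to_int "$dest")
    m=$(ip_to_int "$mask")
    # A route matches if the target's network bits equal the destination;
    # among matches, the longest mask (largest integer) wins.
    if (( (target & m) == d && m > best_mask )); then
      best_mask=$m
      best_iface=$iface
    fi
  done
  echo "$best_iface"
}
```

Usage on the affected host: `route -n | route_iface 172.18.5.145`. Before the fix, this would be expected to print a `br-...` interface rather than eth0.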

Step 2: Check the Docker networks:
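`docker network ls` does not show subnets, but `docker network inspect` with a Go template does. The small wrapper below prints the name and subnet(s) of every network, so a conflicting 172.18.0.0/16 stands out. The function name `network_subnets` is hypothetical; the `docker` subcommands and the `.Name` and `.IPAM.Config` template fields are standard.

```shell
#!/usr/bin/env bash
# network_subnets: print "NAME SUBNET..." for every Docker network on this host.
network_subnets() {
  local id
  # `docker network ls -q` prints one network ID per line (word splitting intended).
  for id in $(docker network ls -q); do
    docker network inspect -f '{{.Name}} {{range .IPAM.Config}}{{.Subnet}} {{end}}' "$id"
  done
}
```

Usage: `network_subnets | grep ' 172\.18\.'` lists any network that sits in the LAN's 172.18 range.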

Step 3: Stop the docker-compose application:

    docker-compose down

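Step 4: Edit /etc/docker/daemon.json

With the configuration below, the next time Docker starts, docker0 becomes 172.31.0.1/24. Every bridge that docker-compose creates is also allocated a 172.31.x.x/24 subnet. (`"debug": true` only turns on debug logging and is not needed for the fix.)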

    # cat /etc/docker/daemon.json
    {
      "debug" : true,
      "default-address-pools" : [
        { "base" : "172.31.0.0/16", "size" : 24 }
      ]
    }
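With `"size": 24`, Docker carves consecutive /24 subnets out of the `172.31.0.0/16` base. That is why docker0 lands on 172.31.0.0/24 and harbor_harbor on 172.31.1.0/24 in step 7. The arithmetic is sketched below. The function names `pool_subnet` and `pool_capacity` are hypothetical, and Docker's real allocator also skips subnets that are already taken, which this sketch ignores.

```shell
#!/usr/bin/env bash
# pool_subnet BASE/PREFIX SIZE N -> the N-th (0-based) /SIZE subnet in the pool.
pool_subnet() {
  local base=${1%/*} size=$2 n=$3 a b c d start addr
  IFS=. read -r a b c d <<< "$base"
  start=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Each /SIZE subnet spans 2^(32 - SIZE) addresses.
  addr=$(( start + n * (1 << (32 - size)) ))
  printf '%d.%d.%d.%d/%d\n' \
    $(( (addr >> 24) & 255 )) $(( (addr >> 16) & 255 )) \
    $(( (addr >> 8) & 255 )) $(( addr & 255 )) "$size"
}

# pool_capacity BASE/PREFIX SIZE -> how many /SIZE subnets the pool holds.
pool_capacity() {
  echo $(( 1 << ($2 - ${1#*/}) ))
}

pool_subnet 172.31.0.0/16 24 0   # 172.31.0.0/24 (docker0 in step 7)
pool_subnet 172.31.0.0/16 24 1   # 172.31.1.0/24 (harbor_harbor in step 7)
pool_capacity 172.31.0.0/16 24   # 256
```

A /16 base with size 24 therefore leaves room for 256 bridge networks before the pool runs out.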

Step 5: Delete the old, conflicting bridge

    # docker network ls
    NETWORK ID     NAME            DRIVER    SCOPE
    e4c5724969c9   bridge          bridge    local
    b3cd674d3894   harbor_harbor   bridge    local
    7f20b3d56275   host            host      local
    7b5f3000115b   none            null      local
    # docker network rm b3cd674d3894
    b3cd674d3894
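The `br-xxxx` in the title is the Linux bridge behind a user-defined network. Unless the `com.docker.network.bridge.name` option is set, Docker names it `br-` plus the first 12 characters of the network ID. The sketch below derives that name. After `docker network rm`, you can use it to confirm that the old interface, and with it the 172.18.0.0/16 route, is gone. The helper names `bridge_iface` and `bridge_gone` are hypothetical.

```shell
#!/usr/bin/env bash
# bridge_iface NETWORK_ID -> name of the Linux bridge Docker creates for it
# (assumes no custom com.docker.network.bridge.name option).
bridge_iface() {
  local id=$1
  echo "br-${id:0:12}"
}

# bridge_gone NETWORK_ID -> succeeds if that bridge interface no longer exists.
bridge_gone() {
  ! ip link show "$(bridge_iface "$1")" >/dev/null 2>&1
}
```

Usage after step 5: `bridge_gone b3cd674d3894 && echo "old bridge removed"`.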

Step 6: Restart the Docker service

    # systemctl restart docker

Step 7: Check `ip a` and the routing table

    # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:50:56:a3:f4:69 brd ff:ff:ff:ff:ff:ff
        inet 10.18.61.80/24 brd 10.18.61.255 scope global eth0
           valid_lft forever preferred_lft forever
    64: br-6a82e7536981: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:4f:5a:cc:53 brd ff:ff:ff:ff:ff:ff
        inet 172.31.1.1/24 brd 172.31.1.255 scope global br-6a82e7536981
           valid_lft forever preferred_lft forever
    65: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:91:20:87:bd brd ff:ff:ff:ff:ff:ff
        inet 172.31.0.1/24 brd 172.31.0.255 scope global docker0
           valid_lft forever preferred_lft forever
    # route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         10.18.61.1      0.0.0.0         UG    0      0        0 eth0
    10.18.61.0      0.0.0.0         255.255.255.0   U     0      0        0 eth0
    169.254.0.0     0.0.0.0         255.255.0.0     U     1002   0        0 eth0
    172.31.0.0      0.0.0.0         255.255.255.0   U     0      0        0 docker0
    172.31.1.0      0.0.0.0         255.255.255.0   U     0      0        0 br-6a82e7536981
    # docker network ls
    NETWORK ID     NAME            DRIVER    SCOPE
    27b40217b79c   bridge          bridge    local
    6a82e7536981   harbor_harbor   bridge    local
    7f20b3d56275   host            host      local
    7b5f3000115b   none            null      local

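Both 64: br-6a82e7536981 and 65: docker0 are now in 172.31.x.x, and the routing table no longer has a 172.18.0.0 entry. This shows the configuration has taken effect.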
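Before relying on the new pool, it is worth checking that it overlaps none of the LAN ranges the host must reach. Two CIDR blocks overlap exactly when they agree on the first min(p1, p2) bits. In the sketch below, `cidr_overlap` is a hypothetical helper. `LAN_RANGES` is an assumption built only from the two networks visible in this article (10.18.61.0/24 on eth0 and the internal 172.18.0.0/16); replace it with your own list.

```shell
#!/usr/bin/env bash
# cidr_overlap A/PA B/PB -> succeeds if the two IPv4 CIDR blocks share any address.
cidr_overlap() {
  local a b c d x y p mask
  IFS=. read -r a b c d <<< "${1%/*}"
  x=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  IFS=. read -r a b c d <<< "${2%/*}"
  y=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Compare only the bits covered by the shorter (less specific) prefix.
  p=$(( ${1#*/} < ${2#*/} ? ${1#*/} : ${2#*/} ))
  mask=$(( p == 0 ? 0 : (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  (( (x & mask) == (y & mask) ))
}

POOL=172.31.0.0/16                        # "base" from daemon.json
LAN_RANGES=(10.18.61.0/24 172.18.0.0/16)  # assumption: adjust to your LAN

for lan in "${LAN_RANGES[@]}"; do
  if cidr_overlap "$POOL" "$lan"; then
    echo "WARNING: $POOL overlaps $lan"
  else
    echo "ok: $POOL does not overlap $lan"
  fi
done
```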

Step 8: Start docker-compose

    # docker-compose up -d

If the following error appears:

    Starting log         ... error
    Starting registry    ... error
    Starting registryctl ... error
    Starting postgresql  ... error
    Starting portal      ... error
    Starting redis       ... error
    Starting core        ... error
    Starting jobservice  ... error
    Starting proxy       ... error
    ERROR: for log Cannot start service log: network b3cd674d38943c91c439ea8eafc0ecc4cea6d4e0df875d930e8342f6d678d135 not found
    ERROR: No containers to start

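The containers were not switched over automatically. They still reference the original NETWORK ID b3cd674d3...., which was deleted in step 5.

Attach the containers to the new bridge: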

    # docker network ls
    NETWORK ID     NAME            DRIVER    SCOPE
    27b40217b79c   bridge          bridge    local
    6a82e7536981   harbor_harbor   bridge    local
    7f20b3d56275   host            host      local
    7b5f3000115b   none            null      local
    # docker network connect network_name container_name
    [root@mvxl74019 harbor]# docker network connect harbor_harbor nginx
    [root@mvxl74019 harbor]# docker network connect harbor_harbor harbor-jobservice
    [root@mvxl74019 harbor]# docker network connect harbor_harbor harbor-core
    [root@mvxl74019 harbor]# docker network connect harbor_harbor registryctl
    [root@mvxl74019 harbor]# docker network connect harbor_harbor registry
    [root@mvxl74019 harbor]# docker network connect harbor_harbor redis
    [root@mvxl74019 harbor]# docker network connect harbor_harbor harbor-db
    [root@mvxl74019 harbor]# docker network connect harbor_harbor harbor-portal
    [root@mvxl74019 harbor]# docker network connect harbor_harbor harbor-log
    [root@mvxl74019 harbor]# docker-compose up -d
    Starting log         ... done
    Starting registry    ... done
    Starting registryctl ... done
    Starting postgresql  ... done
    Starting portal      ... done
    Starting redis       ... done
    Starting core        ... done
    Starting jobservice  ... done
    Starting proxy       ... done
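The nine `docker network connect` calls above can be collapsed into a loop. The function name `reconnect_all` is hypothetical; the container names are the ones from this Harbor deployment. A different route, not the one used in these steps, is `docker-compose up -d --force-recreate`. It replaces the old containers, and with them the stale network reference, with new ones created from the Compose file. That may be simpler, but it has not been verified against this exact error here.

```shell
#!/usr/bin/env bash
# reconnect_all NETWORK CONTAINER... -> attach each container to NETWORK,
# reporting (but not stopping at) any container that fails.
reconnect_all() {
  local network=$1 c rc=0
  shift
  for c in "$@"; do
    if ! docker network connect "$network" "$c"; then
      echo "failed to connect $c to $network" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Container names taken from the Harbor deployment above.
HARBOR_CONTAINERS=(nginx harbor-jobservice harbor-core registryctl registry
                   redis harbor-db harbor-portal harbor-log)

# Usage on the Harbor host, from the harbor directory:
#   reconnect_all harbor_harbor "${HARBOR_CONTAINERS[@]}" && docker-compose up -d
```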

Step 9: Check that the services are healthy

    # docker-compose ps
          Name                     Command                  State                 Ports
    ---------------------------------------------------------------------------------------------
    harbor-core         /harbor/entrypoint.sh            Up (healthy)
    harbor-db           /docker-entrypoint.sh            Up (healthy)
    harbor-jobservice   /harbor/entrypoint.sh            Up (healthy)
    harbor-log          /bin/sh -c /usr/local/bin/ ...   Up (healthy)   127.0.0.1:1514->10514/tcp
    harbor-portal       nginx -g daemon off;             Up (healthy)
    nginx               nginx -g daemon off;             Up (healthy)   0.0.0.0:80->8080/tcp
    redis               redis-server /etc/redis.conf     Up (healthy)
    registry            /home/harbor/entrypoint.sh       Up (healthy)
    registryctl         /home/harbor/start.sh            Up (healthy)
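Reading the State column by eye works for nine services. The small filter below does the same check on piped `docker-compose ps` output and names any service that is not `Up (healthy)`. The function name `all_healthy` is hypothetical. It assumes the classic docker-compose v1 table layout shown above, with a dashed separator under the header.

```shell
#!/usr/bin/env bash
# all_healthy: read `docker-compose ps` (v1 table) on stdin; print every service
# whose row lacks "Up (healthy)" and return non-zero if there was any.
all_healthy() {
  local line seen_sep=0 rc=0
  while IFS= read -r line; do
    # Service rows start after the dashed separator under the header.
    if [[ $line == ---* ]]; then
      seen_sep=1
      continue
    fi
    (( seen_sep )) || continue
    [[ -n $line ]] || continue
    if [[ $line != *"Up (healthy)"* ]]; then
      echo "not healthy: ${line%% *}"   # first column is the service name
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `docker-compose ps | all_healthy && echo "all Harbor services healthy"`.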

Step 10: Try telnet to the target IP 172.18.5.145 again

Both telnet and ping now reach the target.
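If telnet is not installed, the TCP half of step 10 can be reproduced with bash's built-in `/dev/tcp` redirection plus coreutils `timeout`. The helper name `can_reach` is hypothetical. The port is an assumption, because the article does not say which port was tested. The ping (ICMP) half still needs the `ping` command.

```shell
#!/usr/bin/env bash
# can_reach HOST PORT [SECONDS] -> succeeds if a TCP connection to HOST:PORT
# opens within SECONDS (default 3), like a successful telnet.
can_reach() {
  local host=$1 port=$2 secs=${3:-3}
  # The child bash opens fd 3 on /dev/tcp/HOST/PORT and fails if the connection
  # is refused or unreachable; timeout kills it if the attempt hangs.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Usage (port 22 is only an example):
#   can_reach 172.18.5.145 22 && echo "172.18.5.145:22 reachable"
```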

Note: this article is adapted from https://zhuanlan.zhihu.com/p/379305319 and is shared for learning purposes only.
