Chapter 12: The Kubernetes Service Exposure Plugin, traefik

1. Introduction

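- The CoreDNS deployed earlier lets Kubernetes services be discovered automatically inside the cluster. How, then, can those services be used and accessed from outside the cluster? There are two options:

Use a NodePort-type Service: in this series' setup, this approach only works with the iptables proxy mode, not with kube-proxy's ipvs mode.

Use an Ingress resource (the approach used in this tutorial): Ingress can only schedule and expose layer-7 applications, specifically the HTTP and HTTPS protocols.

- Ingress is one of the standard resource types of the Kubernetes API and a core resource. It is essentially a set of rules, based on domain name and URL path, that forward user requests to a specified Service resource;

- It forwards request traffic from outside the cluster to services inside the cluster, thereby "exposing" the service;

- An Ingress controller is the component that listens on a socket on behalf of Ingress resources and routes traffic according to the Ingress rule-matching mechanism;

- Put simply, an Ingress controller is roughly nginx plus a small Go program;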
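The host-and-path rule matching described above can be sketched in a few lines of Python. This is a simplified model, not how any real controller is implemented: `IngressRule` and `route` are hypothetical names, the path test is a plain string-prefix match, and the longest matching prefix wins. The first rule mirrors the `traefik.od.com` rule created later in this chapter; the second is a made-up example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class IngressRule:
    host: str     # exact host name to match, e.g. "traefik.od.com"
    path: str     # path prefix, e.g. "/"
    service: str  # backend Service name
    port: int     # backend Service port


def route(rules: List[IngressRule], host: str, path: str) -> Optional[Tuple[str, int]]:
    """Return (service, port) of the rule whose host equals the request host and
    whose path is the longest prefix of the request path; None means no route."""
    host = host.split(":", 1)[0].lower()  # drop a ":port" suffix from the Host header
    matches = [r for r in rules if r.host == host and path.startswith(r.path)]
    if not matches:
        return None
    best = max(matches, key=lambda r: len(r.path))
    return best.service, best.port


rules = [
    IngressRule("traefik.od.com", "/", "traefik-ingress-service", 8080),
    IngressRule("app.od.com", "/api", "hypothetical-api-svc", 80),  # made-up example
]

print(route(rules, "traefik.od.com", "/dashboard/"))  # ('traefik-ingress-service', 8080)
print(route(rules, "10.4.7.21:81", "/"))              # None: no rule for this host
```

A request whose Host header matches no rule falls through to `None`, which traefik answers with "404 page not found", as seen later in the troubleshooting section.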

Common Ingress controller implementations include:

- Ingress-nginx
- HAProxy
- Traefik

2. Deploy Traefik (the Ingress controller)

2.1 Prepare the traefik image

On 10.4.7.200:

```bash
[root@hdss7-200 ~]# docker pull traefik:v1.7.2-alpine
[root@hdss7-200 ~]# docker images|grep traefik
traefik    v1.7.2-alpine    add5fac61ae5    2 years ago    72.4MB
[root@hdss7-200 ~]# docker tag add5fac61ae5 harbor.od.com/public/traefik:v1.7.2
[root@hdss7-200 ~]# docker push harbor.od.com/public/traefik:v1.7.2
```

2.2 Prepare the traefik resource manifests

On 10.4.7.200:

Create the manifest directory:

```bash
[root@hdss7-200 ~]# mkdir /data/k8s-yaml/traefik
[root@hdss7-200 ~]# cd /data/k8s-yaml/traefik/
```

Create rbac.yaml:

```bash
[root@hdss7-200 traefik]# vim rbac.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount
  name: traefik-ingress-controller
  namespace: kube-system
```
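traefik only needs read access (`get`, `list`, `watch`) to a few resource types. The sketch below shows how the two ClusterRole rules above are evaluated, assuming a simplified model of RBAC: rules are additive, there are no deny rules, and wildcards (`*`) and `resourceNames` are not handled. `allows` is a hypothetical helper, not part of any Kubernetes client library.

```python
from typing import Dict, List

# The two rules of the ClusterRole in rbac.yaml, written as plain dicts.
CLUSTER_ROLE_RULES: List[Dict[str, List[str]]] = [
    {"apiGroups": [""], "resources": ["services", "endpoints", "secrets"],
     "verbs": ["get", "list", "watch"]},
    {"apiGroups": ["extensions"], "resources": ["ingresses"],
     "verbs": ["get", "list", "watch"]},
]


def allows(rules: List[Dict[str, List[str]]], api_group: str, resource: str, verb: str) -> bool:
    """RBAC is additive: the request is allowed if any single rule lists the
    API group, the resource and the verb together (no wildcards handled here)."""
    return any(
        api_group in r["apiGroups"] and resource in r["resources"] and verb in r["verbs"]
        for r in rules
    )


print(allows(CLUSTER_ROLE_RULES, "", "endpoints", "watch"))             # True
print(allows(CLUSTER_ROLE_RULES, "extensions", "ingresses", "update"))  # False
```

On a live cluster, `kubectl auth can-i list ingresses.extensions --as=system:serviceaccount:kube-system:traefik-ingress-controller` asks the API server the same question.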

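Create ds.yaml:

Note: the argument `--kubernetes.endpoint=https://10.4.7.10:7443` must point at the address through which this cluster's API server is reached.

Note: it is best to declare a hostPort separately for each of the two ports; otherwise the 8080 web port may not be exposed, and the traefik page may end up unreachable. For reference, see the configuration on GitHub: https://github.com/traefik/traefik/blob/v1.7/examples/k8s/traefik-ds.yaml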

```bash
[root@hdss7-200 traefik]# vim ds.yaml
```

```yaml
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: traefik-ingress
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
      #-------- added for Prometheus auto-discovery --------
      annotations:
        prometheus_io_scheme: "traefik"
        prometheus_io_path: "/metrics"
        prometheus_io_port: "8080"
      #-------- end of addition ----------------------------
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      containers:
      - image: harbor.od.com/public/traefik:v1.7.2
        name: traefik-ingress
        ports:
        - name: controller
          containerPort: 80
          hostPort: 81
        - name: admin-web
          containerPort: 8080
        securityContext:
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
        args:
        - --api
        - --kubernetes
        - --logLevel=INFO
        - --insecureskipverify=true
        - --kubernetes.endpoint=https://10.4.7.10:7443
        - --accesslog
        - --accesslog.filepath=/var/log/traefik_access.log
        - --traefiklog
        - --traefiklog.filepath=/var/log/traefik.log
        - --metrics.prometheus
```
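The hostPort note above comes down to which container ports are published on the node's own IP. A minimal sketch, assuming the `ports` list of ds.yaml is represented as Python dicts (`node_exposed` and `pod_only` are hypothetical helpers): only `controller` (container port 80) is published, as host port 81, while `admin-web` (8080) is reachable only through the pod IP or the Service. That is why, in this chapter, the dashboard is reached through port 81 and the Ingress rule rather than directly on port 8080 of the node.

```python
from typing import Dict, List

# The "ports" list of the traefik container in ds.yaml, as plain dicts.
PORTS: List[dict] = [
    {"name": "controller", "containerPort": 80, "hostPort": 81},
    {"name": "admin-web", "containerPort": 8080},
]


def node_exposed(ports: List[dict]) -> Dict[int, int]:
    """Map host port -> container port for entries that set hostPort; only these
    are reachable on the node's own IP address."""
    return {p["hostPort"]: p["containerPort"] for p in ports if "hostPort" in p}


def pod_only(ports: List[dict]) -> List[int]:
    """Container ports without a hostPort: reachable via the pod IP or a Service only."""
    return [p["containerPort"] for p in ports if "hostPort" not in p]


print(node_exposed(PORTS))  # {81: 80}
print(pod_only(PORTS))      # [8080]
```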

Create svc.yaml:

```bash
[root@hdss7-200 traefik]# vim svc.yaml
```

```yaml
kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress
  ports:
  - protocol: TCP
    port: 80
    name: controller
  - protocol: TCP
    port: 8080
    name: admin-web
```
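The Service above sets no `targetPort`, so each Service port is forwarded to the same port number on the selected pods. A simplified sketch of endpoint selection, assuming the pod names and IPs shown later in the troubleshooting section (labels taken from the DaemonSet template and the CoreDNS selector); it ignores pod readiness, which the real Endpoints controller also checks, and `endpoints` is a hypothetical helper name.

```python
from typing import Dict, List

# Pods as reported by `kubectl get pod -o wide -n kube-system` in section 6.
PODS: List[dict] = [
    {"name": "coredns-64f49f5655-smzzz", "ip": "172.7.21.3",
     "labels": {"k8s-app": "coredns"}},
    {"name": "traefik-ingress-7pl4d", "ip": "172.7.21.5",
     "labels": {"k8s-app": "traefik-ingress", "name": "traefik-ingress"}},
    {"name": "traefik-ingress-ms7st", "ip": "172.7.22.4",
     "labels": {"k8s-app": "traefik-ingress", "name": "traefik-ingress"}},
]


def endpoints(selector: Dict[str, str], pods: List[dict], port: int) -> List[str]:
    """A pod backs the Service when every selector key/value appears in its labels;
    with no targetPort, the Service port doubles as the container port."""
    return [
        f'{p["ip"]}:{port}'
        for p in pods
        if all(p["labels"].get(k) == v for k, v in selector.items())
    ]


print(endpoints({"k8s-app": "traefik-ingress"}, PODS, 8080))
# ['172.7.21.5:8080', '172.7.22.4:8080']
```

The result matches the real servers that `ipvsadm -Ln` lists for 192.168.163.186:8080 in the troubleshooting section.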

Create ingress.yaml:

```bash
[root@hdss7-200 traefik]# vim ingress.yaml
```

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-web-ui
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: traefik.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: traefik-ingress-service
          servicePort: 8080
```

2.3 Create the resources in order

Run on any one of the compute nodes:

```bash
[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/rbac.yaml
serviceaccount/traefik-ingress-controller created
clusterrole.rbac.authorization.k8s.io/traefik-ingress-controller created
clusterrolebinding.rbac.authorization.k8s.io/traefik-ingress-controller created
[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/ds.yaml
daemonset.extensions/traefik-ingress created
[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/svc.yaml
service/traefik-ingress-service created
[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/ingress.yaml
ingress.extensions/traefik-web-ui created
```

If a container fails to start with an error like the following:

```text
(iptables failed: iptables --wait -t filter -A DOCKER ! -i docker0 -o docker0 -p tcp -d 172.7.22.4 --dport 80 -j ACCEPT: iptables: No chain/target/match by that name.
```

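then restart the docker service on all compute nodes (docker recreates its own DOCKER chain on startup) and add back the iptables rules configured earlier: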

```bash
[root@hdss7-22 ~]# systemctl restart docker
[root@hdss7-22 ~]# iptables-save |grep -i postrouting|grep docker0
-A POSTROUTING -s 172.7.22.0/24 ! -o docker0 -j MASQUERADE
-A POSTROUTING -s 172.7.22.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
```

Check the pods:

```bash
[root@hdss7-21 ~]# kubectl get pod -n kube-system
NAME                       READY   STATUS    RESTARTS   AGE
coredns-64f49f5655-smzzz   1/1     Running   1          22h
traefik-ingress-6xbxz      1/1     Running   0          22s
traefik-ingress-xdt5s      1/1     Running   0          12m
```

Check the listening port:

```bash
[root@hdss7-21 ~]# netstat -anplt|grep 81
tcp        0      0 0.0.0.0:81        0.0.0.0:*        LISTEN      31285/docker-proxy
tcp6       0      0 :::81             :::*             LISTEN      31291/docker-proxy
```
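`grep 81` matches any line containing the characters "81" anywhere, for example a port 8181 or an address such as 10.4.7.181. A small sketch that checks the exact local port instead, assuming the column layout of `netstat -anplt` shown above (Local Address in the 4th column, State in the 6th); `listeners_on` is a hypothetical helper, and the third sample line is invented to show the difference.

```python
from typing import List

# The two lines from the output above plus an invented line that `grep 81`
# would also match even though it is not port 81.
SAMPLE = """\
tcp 0 0 0.0.0.0:81 0.0.0.0:* LISTEN 31285/docker-proxy
tcp6 0 0 :::81 :::* LISTEN 31291/docker-proxy
tcp 0 0 10.4.7.21:8181 0.0.0.0:* LISTEN 1234/hypothetical
"""


def listeners_on(netstat_output: str, port: int) -> List[str]:
    """Return the local addresses in LISTEN state on exactly `port`."""
    hits = []
    for line in netstat_output.splitlines():
        fields = line.split()
        if len(fields) < 6 or not fields[0].startswith("tcp") or fields[5] != "LISTEN":
            continue
        local = fields[3]
        # The port follows the last ':' (this also works for IPv6 such as ':::81').
        if local.rsplit(":", 1)[-1] == str(port):
            hits.append(local)
    return hits


print(listeners_on(SAMPLE, 81))  # ['0.0.0.0:81', ':::81']
```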

Check the Service:

```bash
[root@hdss7-21 ~]# kubectl get svc -o wide -n kube-system
NAME                      TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)                  AGE   SELECTOR
coredns                   ClusterIP   192.168.0.2       <none>        53/UDP,53/TCP,9153/TCP   22h   k8s-app=coredns
traefik-ingress-service   ClusterIP   192.168.116.188   <none>        80/TCP,8080/TCP          17m   k8s-app=traefik-ingress
```

Check the Ingress:

```bash
[root@hdss7-21 ~]# kubectl get ingress -n kube-system
NAME             HOSTS            ADDRESS   PORTS   AGE
traefik-web-ui   traefik.od.com             80      4s
```

3. Configure the reverse proxy

Deploy this on both proxy nodes, 10.4.7.11 and 10.4.7.12; 10.4.7.11 is shown as the example:

```bash
[root@hdss7-11 ~]# vim /etc/nginx/conf.d/od.com.conf
```

```nginx
upstream default_backend_traefik {
    server 10.4.7.21:81    max_fails=3 fail_timeout=10s;
    server 10.4.7.22:81    max_fails=3 fail_timeout=10s;
}

server {
    server_name *.od.com;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host       $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}
```

```bash
[root@hdss7-11 ~]# nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful
[root@hdss7-11 ~]# nginx -s reload
```
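`max_fails=3 fail_timeout=10s` enables nginx's passive health checks: if three attempts to reach a server fail within 10 seconds, nginx considers that server unavailable for the next 10 seconds and sends requests to the other one. The class below is a simplified, hypothetical model of that behavior combined with round-robin selection; it is not nginx's actual implementation, and `Upstream`, `pick` and `report_failure` are illustrative names.

```python
from typing import Dict, List, Optional


class Upstream:
    """Round-robin over servers with passive health checks: after `max_fails`
    failures inside a `fail_timeout`-second window, skip the server for
    `fail_timeout` seconds."""

    def __init__(self, servers: List[str], max_fails: int = 3, fail_timeout: float = 10.0):
        self.servers = servers
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.failures: Dict[str, List[float]] = {s: [] for s in servers}
        self.down_until: Dict[str, float] = {s: 0.0 for s in servers}
        self._next = 0

    def pick(self, now: float) -> Optional[str]:
        """Return the next available server in round-robin order, or None if all are down."""
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if now >= self.down_until[server]:
                return server
        return None

    def report_failure(self, server: str, now: float) -> None:
        # Keep only the failures that fall inside the current fail_timeout window.
        recent = [t for t in self.failures[server] if now - t < self.fail_timeout]
        recent.append(now)
        self.failures[server] = recent
        if len(recent) >= self.max_fails:
            self.down_until[server] = now + self.fail_timeout
            self.failures[server] = []


up = Upstream(["10.4.7.21:81", "10.4.7.22:81"])
for t in (0.0, 1.0, 2.0):
    up.report_failure("10.4.7.21:81", t)  # three failures within 10 s
print([up.pick(3.0) for _ in range(3)])   # ['10.4.7.22:81', '10.4.7.22:81', '10.4.7.22:81']
print(up.pick(12.5))                      # 10.4.7.21:81 (back after 2.0 + 10 s)
```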

4. Configure DNS resolution

On the DNS server 10.4.7.11:

```bash
[root@hdss7-11 ~]# vim /var/named/od.com.zone
```

```text
$ORIGIN od.com.
$TTL 600    ; 10 minutes
@           IN SOA  dns.od.com. dnsadmin.od.com. (
                    2020010504 ; serial
                    10800      ; refresh (3 hours)
                    900        ; retry (15 minutes)
                    604800     ; expire (1 week)
                    86400      ; minimum (1 day)
                    )
                    NS   dns.od.com.
$TTL 60 ; 1 minute
dns                A    10.4.7.11
harbor             A    10.4.7.200
k8s-yaml           A    10.4.7.200
traefik            A    10.4.7.10
```

Save and exit, then restart the service:

```bash
[root@hdss7-11 ~]# systemctl restart named
[root@hdss7-11 ~]# dig -t A traefik.od.com @10.4.7.11 +short
10.4.7.10
```
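Relative owner names in the zone file are qualified with `$ORIGIN`, so the `traefik` line defines `traefik.od.com.`, the name that `dig` resolves above. A minimal parser sketch that handles only the simple `name A address` lines used here (it ignores SOA/NS records, owner inheritance from indented lines, and the rest of the zone-file format); `a_records` is a hypothetical helper.

```python
from typing import Dict

ZONE = """\
$ORIGIN od.com.
$TTL 60 ; 1 minute
dns                A    10.4.7.11
harbor             A    10.4.7.200
k8s-yaml           A    10.4.7.200
traefik            A    10.4.7.10
"""


def a_records(zone_text: str) -> Dict[str, str]:
    """Collect A records, qualifying relative owner names with the current $ORIGIN."""
    origin = ""
    records: Dict[str, str] = {}
    for raw in zone_text.splitlines():
        line = raw.split(";", 1)[0].strip()  # drop comments
        if not line:
            continue
        fields = line.split()
        if fields[0] == "$ORIGIN":
            origin = fields[1]
        elif len(fields) == 3 and fields[1] == "A":
            # A trailing dot marks an absolute name; otherwise append the origin.
            name = fields[0] if fields[0].endswith(".") else f"{fields[0]}.{origin}"
            records[name] = fields[2]
    return records


print(a_records(ZONE)["traefik.od.com."])  # 10.4.7.10
```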

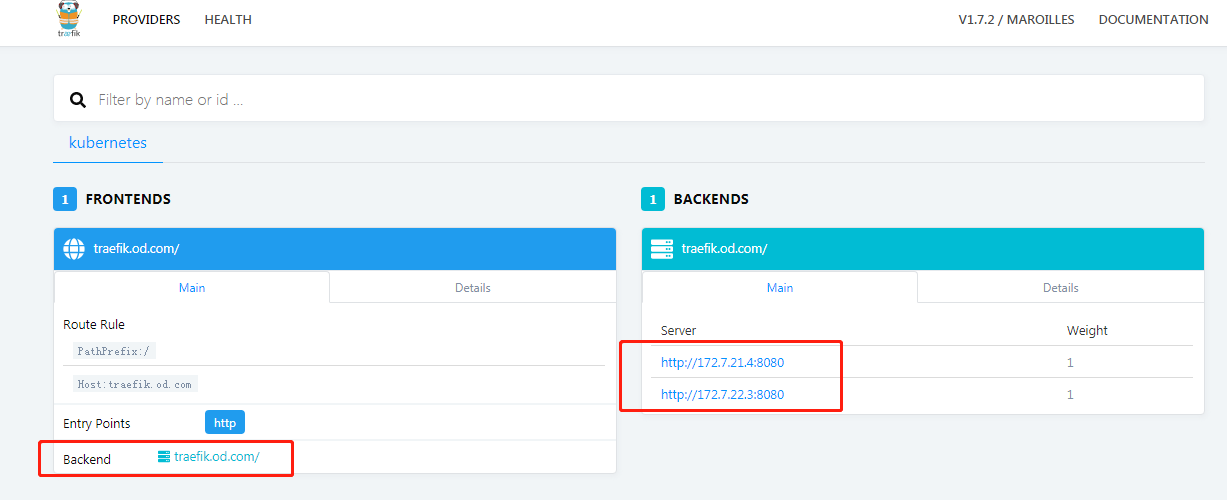

5. Access from a browser

http://traefik.od.com/dashboard/

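6. Troubleshooting

If something goes wrong when accessing http://traefik.od.com/dashboard/, trace the problem from its source, working outwards one layer at a time.

First, the traefik web console we are accessing runs inside a container, and ds.yaml shows that the container (pod) port is 8080. Check the pods: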

```bash
[root@hdss7-21 ~]# kubectl get pod -o wide -n kube-system
NAME                       READY   STATUS    RESTARTS   AGE   IP           NODE                NOMINATED NODE   READINESS GATES
coredns-64f49f5655-smzzz   1/1     Running   1          23h   172.7.21.3   hdss7-21.host.com   <none>           <none>
traefik-ingress-7pl4d      1/1     Running   0          18m   172.7.21.5   hdss7-21.host.com   <none>           <none>
traefik-ingress-ms7st      1/1     Running   0          18m   172.7.22.4   hdss7-22.host.com   <none>           <none>
```

Check the Service:

```bash
[root@hdss7-21 ~]# kubectl get svc -o wide -n kube-system
NAME                      TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)                  AGE   SELECTOR
coredns                   ClusterIP   192.168.0.2       <none>        53/UDP,53/TCP,9153/TCP   23h   k8s-app=coredns
traefik-ingress-service   ClusterIP   192.168.163.186   <none>        80/TCP,8080/TCP          19m   k8s-app=traefik-ingress
```

Check the ipvs rules:

```bash
[root@hdss7-21 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.0.1:443 nq
  -> 10.4.7.21:6443               Masq    1      1          0
  -> 10.4.7.22:6443               Masq    1      0          0
TCP  192.168.0.2:53 nq
  -> 172.7.21.3:53                Masq    1      0          0
TCP  192.168.0.2:9153 nq
  -> 172.7.21.3:9153              Masq    1      0          0
TCP  192.168.163.186:80 nq
  -> 172.7.21.5:80                Masq    1      0          0
  -> 172.7.22.4:80                Masq    1      0          0
TCP  192.168.163.186:8080 nq
  -> 172.7.21.5:8080              Masq    1      0          0
  -> 172.7.22.4:8080              Masq    1      0          0
TCP  192.168.191.8:80 nq
  -> 172.7.21.4:80                Masq    1      0          0
TCP  192.168.196.123:80 nq
  -> 172.7.22.2:80                Masq    1      0          0
UDP  192.168.0.2:53 nq
  -> 172.7.21.3:53                Masq    1      0          0
```

From the output above, we can infer that Service 192.168.163.186:8080 proxies pod 172.7.21.5:8080 and pod 172.7.22.4:8080.
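Reading the virtual-to-real-server mapping out of `ipvsadm -Ln` by eye gets tedious as more Services are added. A small parser sketch, assuming the output layout shown above (a `TCP`/`UDP` line per virtual service followed by `->` lines for its real servers); `ipvs_table` is a hypothetical helper and the sample is an excerpt of the output above.

```python
from typing import Dict, List, Optional

IPVS_SAMPLE = """\
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.163.186:80 nq
  -> 172.7.21.5:80                Masq    1      0          0
  -> 172.7.22.4:80                Masq    1      0          0
TCP  192.168.163.186:8080 nq
  -> 172.7.21.5:8080              Masq    1      0          0
  -> 172.7.22.4:8080              Masq    1      0          0
UDP  192.168.0.2:53 nq
  -> 172.7.21.3:53                Masq    1      0          0
"""


def ipvs_table(text: str) -> Dict[str, List[str]]:
    """Map 'PROTO VIP:port' -> real servers; the '->' header line is skipped
    because no virtual service has been seen yet when it appears."""
    table: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for line in text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] in ("TCP", "UDP"):
            current = f"{fields[0]} {fields[1]}"
            table[current] = []
        elif len(fields) >= 2 and fields[0] == "->" and current is not None:
            table[current].append(fields[1])
    return table


print(ipvs_table(IPVS_SAMPLE)["TCP 192.168.163.186:8080"])
# ['172.7.21.5:8080', '172.7.22.4:8080']
```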

First, check whether the pod itself is reachable. The following output shows that it is:

```bash
[root@hdss7-21 ~]# curl 172.7.22.4:8080
<a href="/dashboard/">Found</a>.
```

Next, check whether the Service works. It is fine as well:

```bash
[root@hdss7-21 ~]# curl 192.168.163.186:8080
<a href="/dashboard/">Found</a>.
```

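From ds.yaml we know that port 81 on the hosts 10.4.7.21 and 10.4.7.22 is mapped, through hostPort, to port 80 of the traefik container (traefik's HTTP entry point), as shown below: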

```bash
[root@hdss7-21 ~]# netstat -anplt|grep 81
tcp        0      0 0.0.0.0:81        0.0.0.0:*        LISTEN      49252/docker-proxy
tcp6       0      0 :::81             :::*             LISTEN      49258/docker-proxy
```

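Check whether port 81 on the host answers. A 404 here is expected: the request goes straight to port 80 of the container, and because its Host header (10.4.7.21:81) matches no Ingress rule, traefik has no route for it. Sending the matching header instead, for example `curl -H "Host: traefik.od.com" 10.4.7.21:81`, should return the same dashboard redirect as the pod.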

```bash
[root@hdss7-12 ~]# curl 10.4.7.21:81
404 page not found
```

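Finally, check the nginx proxy.

The nginx configuration on 10.4.7.11 and 10.4.7.12 proxies every `*.od.com` server_name to port 81 on 10.4.7.21 and 10.4.7.22, so requesting the domain name should also work, and it does: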

```bash
[root@hdss7-21 ~]# curl traefik.od.com
<a href="/dashboard/">Found</a>.
```

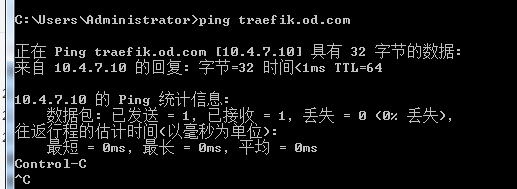

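Last, can the page be reached from outside, that is, from our physical host? Check on the physical host that the DNS resolution has taken effect; this works as well.

Work through the checks above one step at a time; if none of them reveals a problem, the traefik page will be accessible.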
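The inside-out procedure above can be captured as an ordered list of checks that stops at the first failing layer. A sketch, assuming the addresses seen in this chapter (they will differ in other environments, and the Service ClusterIP changes whenever the Service is recreated); `first_failure` and `http_fetch` are hypothetical helpers, and the fetch function is passed in so the logic can be tried without a cluster.

```python
import urllib.error
import urllib.request
from typing import Callable, List, Optional, Tuple

# (layer, URL, Host header to send) from the innermost layer outwards.
CHECKS: List[Tuple[str, str, Optional[str]]] = [
    ("pod", "http://172.7.22.4:8080/", None),
    ("service", "http://192.168.163.186:8080/", None),
    ("hostPort + Ingress rule", "http://10.4.7.21:81/", "traefik.od.com"),
    ("nginx + DNS", "http://traefik.od.com/", None),
]


def http_fetch(url: str, host: Optional[str]) -> int:
    """Return the final HTTP status (urllib follows the redirect to /dashboard/)."""
    req = urllib.request.Request(url, headers={"Host": host} if host else {})
    try:
        with urllib.request.urlopen(req, timeout=3) as resp:
            return resp.status
    except urllib.error.HTTPError as e:  # 4xx/5xx arrive as exceptions
        return e.code


def first_failure(checks, fetch: Callable[[str, Optional[str]], int]) -> Optional[str]:
    """Return the first layer whose status is not 2xx/3xx or that cannot be
    reached at all (connection errors are OSError subclasses); None if all pass."""
    for name, url, host in checks:
        try:
            status = fetch(url, host)
        except OSError:
            return name
        if not 200 <= status < 400:
            return name
    return None


# Offline usage with a fake fetcher that simulates a missing Ingress rule.
fake = {"http://10.4.7.21:81/": 404}
print(first_failure(CHECKS, lambda url, host: fake.get(url, 200)))  # hostPort + Ingress rule
```

On a node that can reach all four addresses, `first_failure(CHECKS, http_fetch)` runs the real checks; `None` means every layer answered with a 2xx or 3xx status.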

浙公网安备 33010602011771号
