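Monitoring a k8s cluster with Prometheus

This document is best read alongside the video: video link

Prometheus for k8s cluster monitoring

Prometheus official website: official site link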

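1.1 Features of Prometheus

- A multi-dimensional data model, stored in a time series database (TSDB) rather than in MySQL.
- A flexible query language, PromQL.
- No dependency on distributed storage; each server node is autonomous.
- Time series are collected mainly by pulling over HTTP.
- Data that has been pushed to a gateway can also be collected through pushgateway.
- Targets are found through service discovery or static configuration.
- Many kinds of charts and dashboards are supported, for example Grafana.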

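1.2 Basic principles

1.2.1 How it works

Prometheus works by periodically scraping the state of monitored components through the HTTP interfaces that the various exporters expose. Any component can be monitored as long as it provides such an HTTP interface.

No SDK or other integration work is needed, which makes Prometheus a good fit for monitoring virtualized environments such as VMs, Docker and Kubernetes.

Most components commonly used by internet companies already have a ready-made exporter, for example Nginx, MySQL and Linux system information.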
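To make the pull model concrete, here is a minimal sketch in Python of what an exporter does: it answers HTTP GET requests on /metrics with plain-text samples that Prometheus can scrape. The metric name demo_requests_total, the port 8000 and the helper names are hypothetical and only for illustration; real exporters are normally built on an official client library rather than written by hand like this.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_metrics(samples):
    """Render {name: (help, type, value)} in the Prometheus text exposition format."""
    lines = []
    for name, (help_text, metric_type, value) in sorted(samples.items()):
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} {metric_type}")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical sample data; a real exporter would read this from the monitored service.
SAMPLES = {"demo_requests_total": ("Requests handled so far.", "counter", 42)}


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Only /metrics is served; everything else is a 404.
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(SAMPLES).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Block forever, serving /metrics on the given (assumed) port."""
    HTTPServer(("", port), MetricsHandler).serve_forever()
```

Calling serve() and then pointing a scrape job at port 8000 of that host would be enough for Prometheus to start collecting the sample.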

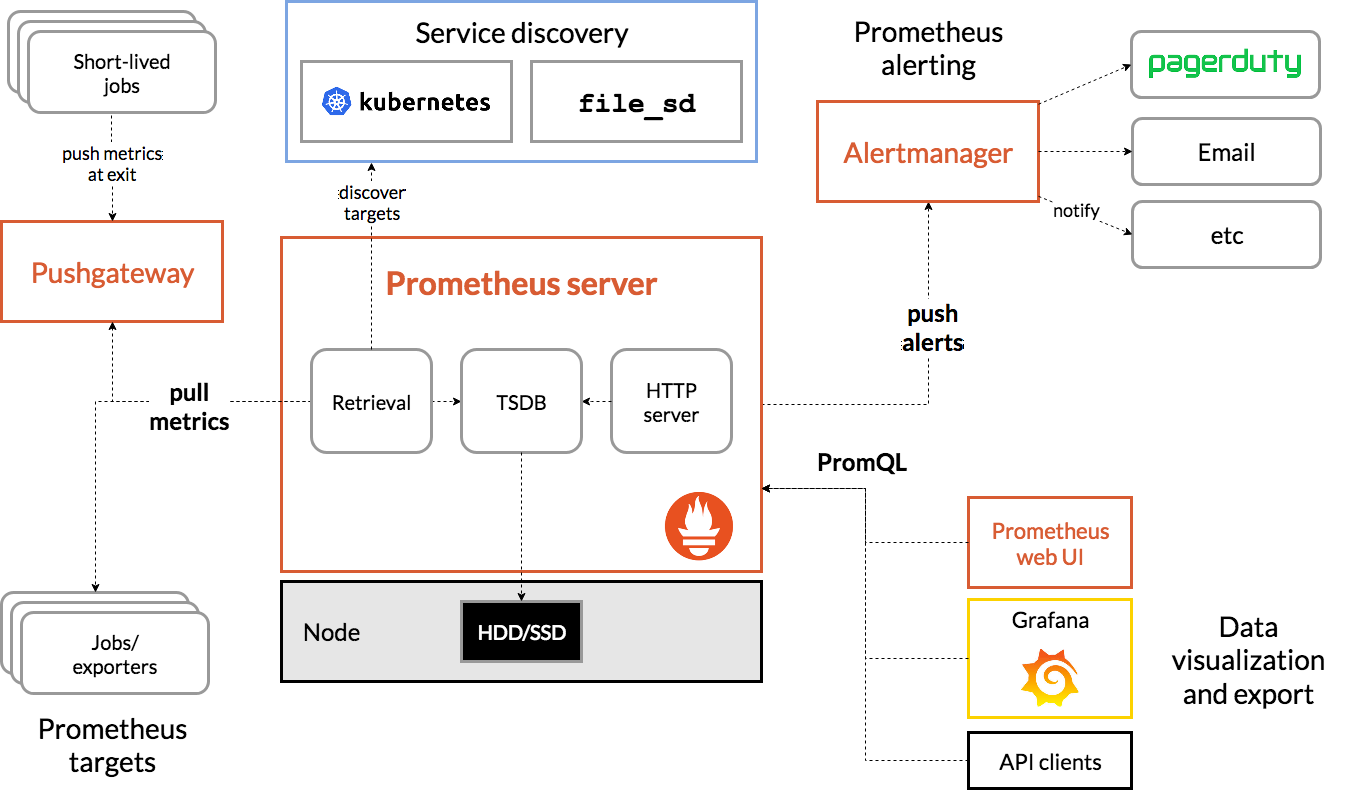

1.2.2 架构图:

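1.2.3 The three main components

- Server: collects and stores the data, and provides the PromQL query language.
- Alertmanager: the alert manager, responsible for sending alerts.
- Push Gateway: an intermediate gateway to which short-lived jobs push their metrics.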
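As a rough illustration of the Push Gateway path, the Python sketch below builds the HTTP request a short-lived job could send to push its metrics, using the gateway's /metrics/job/<job> endpoint. The gateway address pushgateway.example:9091, the job name and the metric are assumptions made up for this example.

```python
from urllib.parse import quote
from urllib.request import Request, urlopen


def build_push_request(gateway_url, job, metrics_text):
    """Build (but do not send) a request that pushes metrics for one job."""
    if "/" in job:
        # Job names containing "/" need a special encoding that this sketch skips.
        raise ValueError("job name must not contain '/'")
    url = f"{gateway_url.rstrip('/')}/metrics/job/{quote(job, safe='')}"
    return Request(
        url,
        data=metrics_text.encode("utf-8"),
        method="POST",
        headers={"Content-Type": "text/plain; version=0.0.4"},
    )


def push(gateway_url, job, metrics_text, timeout=5):
    """Send the push and report whether the gateway answered with a 2xx status."""
    request = build_push_request(gateway_url, job, metrics_text)
    with urlopen(request, timeout=timeout) as response:
        return 200 <= response.status < 300
```

A batch job would call push() once just before it exits; Prometheus then picks the value up the next time it scrapes the gateway.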

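1.2.4 How the architecture fits together

- The Prometheus daemon scrapes metrics from its targets on a schedule. Each target has to expose an HTTP endpoint for it to scrape. Targets can be specified through the configuration file, text files, Zookeeper, DNS SRV lookup and similar mechanisms.
- PushGateway lets clients push metrics to it, and Prometheus simply scrapes the gateway on its usual schedule. This suits one-off and short-lived services.
- Prometheus stores everything it scrapes in its TSDB. It cleans up and aggregates the data according to configured rules and stores the results as new time series.
- Prometheus exposes the collected data through PromQL and other APIs for visualization. Charting front ends such as Grafana and Promdash are supported. Prometheus also offers an HTTP API for queries, so the output can be customized as needed.
- Alertmanager is an alerting component that runs independently of Prometheus. Alert rules are written as PromQL expressions and evaluated by the Prometheus server; Alertmanager receives the resulting alerts and handles grouping, deduplication and routing to receivers, which makes alerting very flexible.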
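The HTTP API mentioned above can be exercised with a few lines of Python. This is a minimal sketch, assuming the server is reachable at a base URL such as http://192.168.1.114:10090 (the NodePort used later in this document); it uses the /api/v1/query endpoint for instant queries, and the helper names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_query_url(base_url, promql):
    """Return the instant-query URL for a PromQL expression."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_instant_vector(payload):
    """Turn a decoded API response into a list of (labels, value) pairs."""
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error', 'unknown error')}")
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector, got {data['resultType']}")
    # Each sample value is [timestamp, "value-as-string"].
    return [(item["metric"], float(item["value"][1])) for item in data["result"]]


def instant_query(base_url, promql, timeout=5):
    """Run the query against a live server and return the parsed samples."""
    with urlopen(build_query_url(base_url, promql), timeout=timeout) as response:
        return parse_instant_vector(json.load(response))
```

For example, instant_query(base_url, "up") returns one entry per scrape target, with the value 1.0 for targets that are up and 0.0 for those that are down.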

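1.2.5 Commonly used exporters

Unlike Zabbix, Prometheus has no agent. It relies on exporters written for individual services.

For monitoring a k8s cluster together with its nodes and pods, four exporters are commonly used: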

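1. Deploying kube-state-metrics

kube-state-metrics answers questions such as:

- How many replicas did I schedule, and how many are available now?
- How many Pods are in the running/stopped/terminated state?
- How many times has a Pod restarted?
- How many jobs are currently running?

It is built on client-go, polls the Kubernetes API, and converts Kubernetes' structured information into metrics.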
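The first question in the list above can be answered directly from the text that kube-state-metrics exposes. The Python sketch below parses a scrape and reports deployments with fewer available replicas than requested, using the metrics kube_deployment_spec_replicas and kube_deployment_status_replicas_available. The parser is deliberately simplified (it ignores HELP/TYPE lines and anything it cannot match), and the function names are hypothetical.

```python
import re

# name{labels} value [timestamp]; a simplified view of the text exposition format.
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)(?:\s+-?\d+)?$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_samples(text):
    """Return (name, labels, value) tuples for every sample line in the scrape."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = SAMPLE_RE.match(line)
        if not match:
            continue
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples


def unavailable_deployments(samples):
    """Map (namespace, deployment) to (wanted, available) where available < wanted."""
    wanted, available = {}, {}
    for name, labels, value in samples:
        key = (labels.get("namespace"), labels.get("deployment"))
        if name == "kube_deployment_spec_replicas":
            wanted[key] = value
        elif name == "kube_deployment_status_replicas_available":
            available[key] = value
    return {
        key: (want, available.get(key, 0.0))
        for key, want in wanted.items()
        if available.get(key, 0.0) < want
    }
```

In practice the same comparison is usually written as a PromQL expression and evaluated by the Prometheus server; the sketch only shows what information the metrics carry.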

    # 1. Official repository: our k8s cluster runs 1.22.x, so we choose v2.2.4. See the repository for the exact version compatibility.
    https://github.com/kubernetes/kube-state-metrics
    https://github.com/kubernetes/kube-state-metrics/tree/v2.2.4/examples/standard
    # Note: this directory holds the yaml manifests; download them from there.

    # 2. Download the manifests and push the image to our private Harbor registry
    [root@k8s-master01 ~]# mkdir /k8s-yaml/kube-state-metrics -p
    [root@k8s-master01 ~]# cd /k8s-yaml/kube-state-metrics
    [root@k8s-master01 kube-state-metrics]# ls
    cluster-role-binding.yaml cluster-role.yaml deployment.yaml service-account.yaml service.yaml
    # The required image has to be uploaded or pulled onto every node

    # 3. Create the resources
    [root@k8s-master01 kube-state-metrics]# kubectl create -f .

    # 4. Verify
    [root@k8s-master01 ~]# kubectl get pod -n kube-system -o wide |grep kube-state-metrics
    [root@k8s-master01 kube-state-metrics]# curl 172.161.125.55:8080/healthz
    OK

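2. Deploying node-exporter

node-exporter monitors the nodes themselves, so one instance has to run on every node. That is why it is deployed with a DaemonSet (ds) controller.

Official repository: https://github.com/prometheus/node_exporter

How it works: the host's /proc and /sys directories are mounted into the container so that the container can read information about the node it runs on.

    [root@k8s-master01 ~]# docker pull prom/node-exporter:latest
    [root@k8s-master01 ~]# mkdir -p /k8s-yaml/node-exporter/
    [root@k8s-master01 ~]# cd /k8s-yaml/node-exporter/
    [root@k8s-master01 node-exporter]# vim node-exporter-ds.yaml
    [root@k8s-master01 node-exporter]# cat node-exporter-ds.yaml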

    kind: DaemonSet
    apiVersion: apps/v1
    metadata:
      name: node-exporter
      namespace: kube-system
      labels:
        daemon: "node-exporter"
        grafanak8sapp: "true"
    spec:
      selector:
        matchLabels:
          daemon: "node-exporter"
          grafanak8sapp: "true"
      template:
        metadata:
          name: node-exporter
          labels:
            daemon: "node-exporter"
            grafanak8sapp: "true"
        spec:
          containers:
          - name: node-exporter
            image: prom/node-exporter:latest
            imagePullPolicy: IfNotPresent
            args:
            - --path.procfs=/host_proc
            - --path.sysfs=/host_sys
            ports:
            - name: node-exporter
              hostPort: 9100
              containerPort: 9100
              protocol: TCP
            volumeMounts:
            - name: sys
              readOnly: true
              mountPath: /host_sys
            - name: proc
              readOnly: true
              mountPath: /host_proc
          imagePullSecrets:
          - name: harbor
          restartPolicy: Always
          hostNetwork: true
          volumes:
          - name: proc
            hostPath:
              path: /proc
              type: ""
          - name: sys
            hostPath:
              path: /sys
              type: ""

    [root@k8s-master01 node-exporter]# kubectl apply -f node-exporter-ds.yaml

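3. Deploying cadvisor

cadvisor collects resource usage from inside the Docker containers of the k8s cluster. (blackbox-exporter, covered in the next section, checks whether the container services are alive.)

This exporter talks to the kubelet to obtain the resource consumption of running Pods and exposes it to Prometheus.

Because cadvisor has to gather information about the pods on every node, it also runs as a DaemonSet. The toleration in the manifest matches the master taint, so the pods are scheduled onto the master nodes as well as the worker nodes.

Some host directories, such as the Docker data directory, are mounted into the container.

Official repository: https://github.com/google/cadvisor

https://github.com/google/cadvisor/tree/v0.42.0/deploy/kubernetes/base

    [root@k8s-master01 ~]# mkdir /k8s-yaml/cadvisor && cd /k8s-yaml/cadvisor
    [root@k8s-master01 cadvisor]# cat ds.yaml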

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: cadvisor
      namespace: kube-system
      labels:
        app: cadvisor
    spec:
      selector:
        matchLabels:
          name: cadvisor
      template:
        metadata:
          labels:
            name: cadvisor
        spec:
          hostNetwork: true
          # The pod's tolerations work together with the node's taints to control where the pod is scheduled
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
          containers:
          - name: cadvisor
            image: gcr.io/cadvisor/cadvisor:v0.39.0
            imagePullPolicy: IfNotPresent
            volumeMounts:
            - name: rootfs
              mountPath: /rootfs
              readOnly: true
            - name: var-run
              mountPath: /var/run
            - name: sys
              mountPath: /sys
              readOnly: true
            - name: docker
              mountPath: /var/lib/docker
              readOnly: true
            ports:
            - name: http
              containerPort: 4194
              protocol: TCP
            readinessProbe:
              tcpSocket:
                port: 4194
              initialDelaySeconds: 5
              periodSeconds: 10
            args:
            - --housekeeping_interval=10s
            - --port=4194
          terminationGracePeriodSeconds: 30
          volumes:
          - name: rootfs
            hostPath:
              path: /
          - name: var-run
            hostPath:
              path: /var/run
          - name: sys
            hostPath:
              path: /sys
          - name: docker
            hostPath:
              path: /data/docker

    # Add the cgroup symlink on the worker nodes (all node machines)

    # 1. On node01
    [root@k8s-node01 ~]# mount -o remount,rw /sys/fs/cgroup/
    [root@k8s-node01 ~]# ln -s /sys/fs/cgroup/cpu,cpuacct/ /sys/fs/cgroup/cpuacct,cpu
    [root@k8s-node01 ~]# ll /sys/fs/cgroup/ | grep cpu

    # 2. On node02
    [root@k8s-node02 ~]# mount -o remount,rw /sys/fs/cgroup/
    [root@k8s-node02 ~]# ln -s /sys/fs/cgroup/cpu,cpuacct/ /sys/fs/cgroup/cpuacct,cpu
    [root@k8s-node02 ~]# ll /sys/fs/cgroup/ | grep cpu
    lrwxrwxrwx 1 root root 11 Nov 17 13:41 cpu -> cpu,cpuacct
    lrwxrwxrwx 1 root root 11 Nov 17 13:41 cpuacct -> cpu,cpuacct
    lrwxrwxrwx 1 root root 27 Dec 19 01:52 cpuacct,cpu -> /sys/fs/cgroup/cpu,cpuacct/
    dr-xr-xr-x 5 root root 0 Nov 17 13:41 cpu,cpuacct
    dr-xr-xr-x 3 root root 0 Nov 17 13:41 cpuset
    # Note: the remount only switches /sys/fs/cgroup from read-only to read-write

    [root@k8s-master01 cadvisor]# kubectl apply -f ds.yaml
    daemonset.apps/cadvisor created
    # The image has to be available; the course already provides an image address, so it can be pulled from there directly
    [root@k8s-master01 cadvisor]# kubectl -n kube-system get pod -o wide|grep cadvisor
    cadvisor-29nv5 1/1 Running 0 2m17s 192.168.1.112 k8s-master02
    cadvisor-lnpwj 1/1 Running 0 2m17s 192.168.1.114 k8s-node01
    cadvisor-wmr57 1/1 Running 0 2m17s 192.168.1.111 k8s-master01
    cadvisor-zcz78 1/1 Running 0 2m17s 192.168.1.115 k8s-node02
    [root@k8s-master01 ~]# netstat -luntp|grep 4194
    tcp6 0 0 :::4194

4. Deploying blackbox-exporter (black-box monitoring)

blackbox-exporter is one of the official Prometheus exporters. It collects monitoring data by probing over http, dns, tcp and icmp.

Official GitHub repository: https://github.com/prometheus/blackbox_exporter

https://github.com/prometheus/blackbox_exporter/tree/v0.18.0/config/testdata

- Prepare the working directory

    [root@k8s-master01 ~]# docker pull prom/blackbox-exporter:v0.18.0
    [root@k8s-master01 ~]# mkdir -p /k8s-yaml/blackbox-exporter && cd /k8s-yaml/blackbox-exporter

- Create the manifests

    [root@k8s-master01 blackbox-exporter]# vim blackbox-exporter-configmap.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      labels:
        app: blackbox-exporter
      name: blackbox-exporter
      namespace: kube-system
    data:
      blackbox.yml: |-
        modules:
          http_2xx:
            prober: http
            timeout: 2s
            http:
              valid_http_versions: ["HTTP/1.1", "HTTP/2"]
              valid_status_codes: [200,301,302,404]
              method: GET
              preferred_ip_protocol: "ip4"
          tcp_connect:
            prober: tcp
            timeout: 2s

    [root@k8s-master01 blackbox-exporter]# vim blackbox-exporter-deployment.yaml

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: blackbox-exporter
      namespace: kube-system
      labels:
        app: blackbox-exporter
      annotations:
        kubernetes.io/replicationcontroller: Deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: blackbox-exporter
      template:
        metadata:
          labels:
            app: blackbox-exporter
        spec:
          volumes:
          - name: config
            configMap:
              name: blackbox-exporter
              defaultMode: 420
          containers:
          - name: blackbox-exporter
            image: prom/blackbox-exporter:v0.18.0
            imagePullPolicy: IfNotPresent
            args:
            - --config.file=/etc/blackbox_exporter/blackbox.yml
            - --log.level=info
            - --web.listen-address=:9115
            ports:
            - name: blackbox-port
              containerPort: 9115
              protocol: TCP
            resources:
              limits:
                cpu: 200m
                memory: 256Mi
              requests:
                cpu: 100m
                memory: 50Mi
            volumeMounts:
            - name: config
              mountPath: /etc/blackbox_exporter
            readinessProbe:
              tcpSocket:
                port: 9115
              initialDelaySeconds: 5
              timeoutSeconds: 5
              periodSeconds: 10
              successThreshold: 1
              failureThreshold: 3

    [root@k8s-master01 blackbox-exporter]# vim blackbox-exporter-service.yaml

    kind: Service
    apiVersion: v1
    metadata:
      name: blackbox-exporter
      namespace: kube-system
    spec:
      type: NodePort
      selector:
        app: blackbox-exporter
      ports:
      - name: blackbox-port
        port: 9115
        targetPort: 9115
        nodePort: 10015
        protocol: TCP

    # Note: ingress-nginx could also be used here to forward the traffic
    [root@k8s-master01 blackbox-exporter]# kubectl create -f .
    configmap/blackbox-exporter created
    deployment.apps/blackbox-exporter created
    service/blackbox-exporter created
    [root@k8s-master01 blackbox-exporter]# kubectl get pod -n kube-system
    NAME READY STATUS RESTARTS AGE
    blackbox-exporter-59fd868bfc-j4nfv 1/1 Running 0 2m37s
    [root@k8s-master01 blackbox-exporter]# kubectl get svc -n kube-system
    NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S)
    blackbox-exporter NodePort 10.100.192.71 <none> 9115:10015/TCP
    # If creation fails with a message about the valid port range, either change the cluster's NodePort range or use a port above 30000, for example 30015
    # Then open IP:10015 in a browser
    [root@k8s-master01 blackbox-exporter]# curl 192.168.1.114:10015
    ..........
    <h1>Blackbox Exporter</h1>
    ..........
    [root@k8s-master01 blackbox-exporter]#
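Beyond loading the landing page, the exporter can be asked to run a probe through its /probe endpoint, which takes target and module query parameters and reports the outcome in the probe_success metric. The Python sketch below builds such a URL and reads the result; the address 192.168.1.114:10015 is the NodePort from the Service in this section, while the probed target and the helper names are made up for illustration.

```python
from urllib.parse import urlencode
from urllib.request import urlopen


def build_probe_url(exporter, target, module="http_2xx"):
    """Return the /probe URL for a target, using a module defined in blackbox.yml."""
    return f"http://{exporter}/probe?{urlencode({'target': target, 'module': module})}"


def probe_succeeded(metrics_text):
    """Read probe_success (1 means success, 0 means failure) from a probe response."""
    for line in metrics_text.splitlines():
        if line.startswith("probe_success "):
            return float(line.split()[1]) == 1.0
    raise ValueError("probe_success not found in response")


def run_probe(exporter, target, module="http_2xx", timeout=10):
    """Ask a live blackbox-exporter to probe the target and return the outcome."""
    with urlopen(build_probe_url(exporter, target, module), timeout=timeout) as response:
        return probe_succeeded(response.read().decode("utf-8"))
```

Prometheus does the same thing when a scrape job sets metrics_path to /probe and passes the target as a parameter.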

2. Deploying the Prometheus server

2.1 Preparing the Prometheus server environment

Official Docker Hub page: https://hub.docker.com/r/prom/prometheus

Official GitHub repository: https://github.com/prometheus/prometheus

https://github.com/prometheus/prometheus/tree/v2.32.0/config/testdata

Latest version at the time of writing: 2.32.0

    # 1. Prepare the directory
    [root@k8s-master01 ~]# mkdir /k8s-yaml/prometheus-server && cd /k8s-yaml/prometheus-server

- 1. RBAC manifest

    [root@k8s-master01 prometheus-server]# vim rbac.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"
      name: prometheus
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"
      name: prometheus
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes
      - nodes/metrics
      - services
      - endpoints
      - pods
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - configmaps
      verbs:
      - get
    - nonResourceURLs:
      - /metrics
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: kube-system

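2.2 Preparing the Deployment manifest

--web.enable-lifecycle enables reloading the configuration file remotely over HTTP, so Prometheus does not need a restart after the configuration changes:

    curl -X POST http://localhost:9090/-/reload

--storage.tsdb.min-block-duration=10m sets the minimum time span of a data block before it is persisted, so roughly the last 10 minutes of data are held in memory.

--storage.tsdb.retention=72h keeps 72 hours of data. This flag is deprecated; newer releases use --storage.tsdb.retention.time instead.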
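The reload call can also be scripted, for instance from a small tool that rewrites the configuration file and then asks Prometheus to pick it up. This is a minimal Python sketch of the same POST to /-/reload; it only works when --web.enable-lifecycle is set, and the function names are hypothetical.

```python
from urllib.request import Request, urlopen


def build_reload_request(base_url):
    """Build the POST request that asks Prometheus to re-read its configuration."""
    return Request(f"{base_url.rstrip('/')}/-/reload", data=b"", method="POST")


def reload_config(base_url, timeout=10):
    """Send the reload request; urlopen raises HTTPError if the server rejects it."""
    with urlopen(build_reload_request(base_url), timeout=timeout) as response:
        return response.status == 200
```

From outside the cluster, base_url would be the node address and NodePort of the prometheus Service rather than localhost:9090.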

    [root@k8s-master01 prometheus-server]# vim depoyment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      annotations:
        deployment.kubernetes.io/revision: "5"
      labels:
        name: prometheus
      name: prometheus
      namespace: kube-system
    spec:
      progressDeadlineSeconds: 600
      replicas: 1
      revisionHistoryLimit: 7
      selector:
        matchLabels:
          app: prometheus
      strategy:
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 1
        type: RollingUpdate
      template:
        metadata:
          labels:
            app: prometheus
        spec:
          hostAliases:
          - ip: "192.168.1.111"
            hostnames:
            - "k8s-master01"
          - ip: "192.168.1.112"
            hostnames:
            - "k8s-master02"
          - ip: "192.168.1.114"
            hostnames:
            - "k8s-node01"
          - ip: "192.168.1.115"
            hostnames:
            - "k8s-node02"
          containers:
          - name: prometheus
            image: prom/prometheus:v2.32.0
            imagePullPolicy: IfNotPresent
            command:
            - /bin/prometheus
            args:
            - --config.file=/data/etc/prometheus.yaml
            #- --storage.tsdb.path=/data/prom-db
            - --storage.tsdb.min-block-duration=10m
            - --storage.tsdb.retention=72h
            - --web.enable-lifecycle
            ports:
            - containerPort: 9090
              protocol: TCP
            volumeMounts:
            - mountPath: /data/
              name: data
            resources:
              requests:
                cpu: "1000m"
                memory: "1.5Gi"
              limits:
                cpu: "2000m"
                memory: "3Gi"
          imagePullSecrets:
          - name: harbor
          securityContext:
            runAsUser: 0
          serviceAccountName: prometheus
          volumes:
          - name: data
            nfs:
              path: /data/nfs-volume/prometheus/
              server: 192.168.1.115

Note: an NFS export has to be created on one of the machines. Here it is created on 192.168.1.115 (k8s-node02); in production other storage such as glusterfs can be used instead.

    [root@k8s-node02 ~]# yum install nfs-utils -y
    [root@k8s-node02 ~]# mkdir -p /data/nfs-volume/prometheus/
    [root@k8s-node02 ~]# mkdir -p /data/nfs-volume/prometheus/etc
    [root@k8s-node02 ~]# mkdir -p /data/nfs-volume/prometheus/prom-db
    [root@k8s-node02 ~]# cat /etc/exports
    /data/nfs-volume/prometheus/ *(rw,fsid=0,sync)
    [root@k8s-node02 ~]# systemctl start nfs-server
    [root@k8s-node02 ~]# systemctl enable nfs-server

- Prepare the Service manifest (once the Service exists, domain-based access through ingress-nginx could also be configured; it is not configured here)

    [root@k8s-master01 prometheus-server]# vim service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: prometheus
      namespace: kube-system
    spec:
      type: NodePort
      ports:
      - port: 9090
        protocol: TCP
        targetPort: 9090
        nodePort: 10090
      selector:
        app: prometheus

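    # Create the resources on the master
    [root@k8s-master01 prometheus-server]# kubectl create -f .

- Create the Prometheus configuration file. Note: because the directory is shared over NFS, the file is created on the NFS host 192.168.1.115.

Configuration file:

    [root@k8s-node02 ~]# cd /data/nfs-volume/prometheus/etc/
    [root@k8s-node02 etc]# cat prometheus.yaml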

    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:
    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
    - job_name: 'kubernetes-kubelet'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __address__
        replacement: ${1}:10015
    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __address__
        replacement: ${1}:4194
    - job_name: 'kubernetes-kube-state'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
      - source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]
        regex: .*true.*
        action: keep
      - source_labels: ['__meta_kubernetes_pod_label_daemon', '__meta_kubernetes_pod_node_name']
        regex: 'node-exporter;(.*)'
        action: replace
        target_label: nodename
    - job_name: 'blackbox_http_pod_probe'
      metrics_path: /probe
      kubernetes_sd_configs:
      - role: pod
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
        action: keep
        regex: http
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port, __meta_kubernetes_pod_annotation_blackbox_path]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+);(.+)
        replacement: $1:$2$3
        target_label: __param_target
      - action: replace
        target_label: __address__
        replacement: blackbox-exporter.kube-system:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
    - job_name: 'blackbox_tcp_pod_probe'
      metrics_path: /probe
      kubernetes_sd_configs:
      - role: pod
      params:
        module: [tcp_connect]
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
        action: keep
        regex: tcp
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __param_target
      - action: replace
        target_label: __address__
        replacement: blackbox-exporter.kube-system:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
    - job_name: 'traefik'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scheme]
        action: keep
        regex: traefik
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name

- 启动Prometheus

[root@k8s-master01 prometheus-server]# ls

depoyment.yaml rbac.yaml service.yaml

[root@k8s-master01 prometheus-server]# kubectl apply -f .

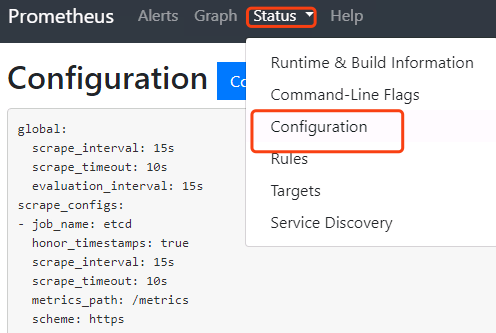

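2.3 Verifying in the browser

Open 192.168.1.114:10090. If the page loads, Prometheus started successfully.

Status -> Configuration shows the configuration file we wrote.

2.4 Letting Prometheus monitor services automatically

Status -> Targets lists the job names configured in prometheus.yaml. These targets cover most of what we need to collect.

The four numbered job names have already been discovered and are returning data.

Exercise: how would you bring application pods under monitoring as well?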
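One way to approach this exercise, going by the kubernetes-pods job in prometheus.yaml: a pod opts in by carrying the annotations prometheus.io/scrape, prometheus.io/port and prometheus.io/path, which Prometheus exposes as the __meta_kubernetes_pod_annotation_prometheus_io_* labels used in that job. The Python sketch below mimics those three relabel rules to show which address and path would end up being scraped. It is an illustration rather than Prometheus' own code, and the function name and sample pod IP are hypothetical.

```python
import re

# Same pattern as in the kubernetes-pods job. Prometheus anchors relabel regexes
# at both ends, which fullmatch() reproduces here.
ADDRESS_RE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def relabel_pod_target(address, annotations):
    """Return the scrape address and path for a pod, or None if the pod is dropped."""
    # Rule 1 (keep): only pods annotated with prometheus.io/scrape: "true" survive.
    if annotations.get("prometheus.io/scrape") != "true":
        return None
    target = {"__address__": address, "__metrics_path__": "/metrics"}
    # Rule 2 (replace): a non-empty prometheus.io/path overrides the metrics path.
    path = annotations.get("prometheus.io/path", "")
    if path:
        target["__metrics_path__"] = path
    # Rule 3 (replace): source labels are joined with ";" and matched as a whole;
    # if prometheus.io/port is missing, nothing matches and the address is kept.
    joined = f"{address};{annotations.get('prometheus.io/port', '')}"
    match = ADDRESS_RE.fullmatch(joined)
    if match:
        target["__address__"] = f"{match.group(1)}:{match.group(2)}"
    return target
```

Under these rules, adding the three annotations to an application's pod template is enough for the pod to show up under the kubernetes-pods job, provided the application actually serves metrics on that port and path.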

3. Deploying Grafana

Official Docker Hub page: https://hub.docker.com/r/grafana/grafana

Official GitHub repository: https://github.com/grafana/grafana

Grafana website: https://grafana.com/

    # First create the shared storage directory on 192.168.1.115
    [root@k8s-node02 grafana]# cat /etc/exports
    /data/nfs-volume/prometheus/ *(rw,fsid=0,sync)
    /data/nfs/grafana/ *(rw,sync)
    [root@k8s-node02 grafana]# mkdir /data/nfs/grafana/ -p
    [root@k8s-node02 grafana]# systemctl restart nfs

    # Create the yaml file Grafana needs
    [root@k8s-master01 ~]# mkdir /k8s-yaml/grafana && cd /k8s-yaml/grafana
    [root@k8s-master01 grafana]# vim grafana.yaml
    [root@k8s-master01 grafana]# cat grafana.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: grafana
      namespace: kube-system
      labels:
        app: grafana
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: grafana
      template:
        metadata:
          labels:
            app: grafana
        spec:
          containers:
          - name: grafana
            image: grafana/grafana:latest
            ports:
            - containerPort: 3000
            volumeMounts:
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: grafana-svc
      namespace: kube-system
    spec:
      ports:
      - port: 3000
        targetPort: 3000
        nodePort: 3303
      type: NodePort
      selector:
        app: grafana

    [root@k8s-master01 grafana]# kubectl create -f grafana.yaml
    deployment.apps/grafana created
    service/grafana-svc created
    [root@k8s-master01 grafana]# kubectl get pod -n kube-system |grep grafana
    grafana-6dc6566c6b-44wsw 1/1 Running 0 22s

11074 11670 8588
