Kubernetes (3): Installing k8s 1.15 with kubeadm

https://blog.csdn.net/qq_24058757/article/details/86600736

https://blog.csdn.net/jacksonary/article/details/88975460

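kubeadm is the official Kubernetes tool for automating cluster installation; installing every component by hand is very tedious.

The version in the default yum repositories is too old, so this guide installs with kubeadm instead.

Clean up the environment first. On a production machine, be careful: these commands remove every installed kube* and docker* package.

yum remove 'kube*' -y
yum remove 'docker*' -y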

1 Environment preparation

The master needs at least 2 CPU cores and 2 GB of memory; with less, the installation reports errors.

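| Host | IP address | CPU cores | Memory | Swap | Hosts resolution |
| --- | --- | --- | --- | --- | --- |
| k8s-master | 10.6.76.25 | 2+ (errors otherwise) | 2 GB+ (errors otherwise) | off | required |
| k8s-node-1 | 10.6.76.23 | 1+ | 1 GB+ | off | required |
| k8s-node-2 | 10.6.76.24 | 1+ | 1 GB+ | off | required |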
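The table marks hosts resolution as required on every machine, but the post does not show how to set it up. Here is a minimal sketch of one way to do it, assuming the three hostnames and addresses from the table. The function name `add_cluster_hosts` and its optional file argument are made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the cluster's address/name pairs to a hosts
# file, skipping any hostname that is already present (safe to run twice).
add_cluster_hosts() {
    local hosts_file="${1:-/etc/hosts}"
    local entry name
    while read -r entry; do
        name="${entry##* }"                 # the hostname is the last field
        if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
            printf '%s\n' "$entry" >> "$hosts_file"
        fi
    done <<'EOF'
10.6.76.25 k8s-master
10.6.76.23 k8s-node-1
10.6.76.24 k8s-node-2
EOF
}
```

Run `add_cluster_hosts` as root on the master and on both nodes; passing a different path (for example a scratch file) lets you inspect the result before touching /etc/hosts.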

1.1 Kernel version 3.10 or later

# If yum or rpm fails with "Cannot communicate securely with peer: no common encryption algorithm(s)", update nss first

sudo yum update nss nss-util nss-sysinit nss-tools

Steps to upgrade the kernel

# ELRepo repository (first check whether /etc/yum.repos.d/ already contains this yum source)

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

# List the available kernels

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

# Install the latest mainline kernel

yum --enablerepo=elrepo-kernel install kernel-ml -y

# List the boot entries; the kernel installed above usually has index 0

awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

# Make the new kernel the default

grub2-set-default 0

# Regenerate the grub configuration file

grub2-mkconfig -o /boot/grub2/grub.cfg

# Reboot

reboot

# Verify

uname -a
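Before going through the upgrade it is worth checking whether the running kernel already meets the 3.10 requirement. The following is a small sketch, not part of the original steps. The function name `kernel_at_least` is made up, and it relies on `sort -V` from GNU coreutils.

```shell
#!/usr/bin/env bash
# Returns 0 when the kernel release ($2, default: the running kernel)
# is at least the wanted version ($1). Relies on GNU sort -V.
kernel_at_least() {
    local want="$1"
    local have="${2:-$(uname -r)}"
    have="${have%%-*}"          # 3.10.0-957.el7.x86_64 -> 3.10.0
    # The smaller of the two versions sorts first; it must be the wanted one.
    [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n 1)" = "$want" ]
}
```

For example, `kernel_at_least 3.10 || echo "kernel too old, upgrade it"` prints the message only on machines that need the upgrade.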

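1.2 Kernel parameters

Create the kernel parameter file /etc/sysctl.d/k8s.conf with the following content:

net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
# Keep the kernel from swapping where possible (swap itself is turned off in 1.3)
vm.swappiness = 0

Load the br_netfilter module, then apply the settings:

modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf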

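1.3 Turn off swap and comment out its automatic mount

Temporary, until the next reboot:

swapoff -a

Permanent: edit /etc/fstab and comment out the swap entry, i.e. the /dev/mapper/centos-swap line.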
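The fstab edit can also be scripted instead of done by hand. The sketch below is one possible way. The function name `disable_swap_in_fstab` is made up, and it assumes the swap entry has the word swap as a whitespace-separated field, as in a stock CentOS fstab.

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-style file.
# The untouched original is kept next to it with a .bak suffix.
disable_swap_in_fstab() {
    local fstab="${1:-/etc/fstab}"
    # Match lines whose first non-blank character is not '#' and that
    # contain "swap" as a whitespace-delimited field, then prefix them with '#'.
    sed -i.bak -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

Lines that are already commented are left alone, so running it a second time changes nothing.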

1.4 Disable SELinux and the firewall

setenforce 0
sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
# Alternatively, edit the file by hand and set SELINUX=disabled
# vim /etc/selinux/config
systemctl stop firewalld
systemctl disable firewalld
# Verify
systemctl status firewalld

1.5 Prerequisites for running kube-proxy in ipvs mode

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
# On kernels 4.19 and later (for example after the kernel-ml upgrade in 1.1), nf_conntrack_ipv4 was merged into nf_conntrack; use that name there
# Load the modules and check that they are present
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
# Install the ipset package
yum install ipset -y
# Install the ipvsadm management tool, which makes the ipvs proxy rules easy to inspect
yum install ipvsadm -y
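The `lsmod | grep` check above prints whatever matched, which makes a missing module easy to overlook. As a rough sketch, the helper below lists only the modules that are not loaded. The name `missing_modules` is made up, and it assumes the input file uses the /proc/modules layout, with the module name in the first column.

```shell
#!/usr/bin/env bash
# Print the names of required kernel modules that are not loaded.
# $1 is a file in /proc/modules format (module name in the first column);
# the remaining arguments are the module names to look for.
missing_modules() {
    local modules_file="$1"
    shift
    local mod
    for mod in "$@"; do
        # awk exits 0 only when some line's first field equals the module name
        if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
            printf '%s\n' "$mod"
        fi
    done
}
```

On a prepared host, `missing_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4` should print nothing.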

2 Installing the k8s-master node

2.1 Install Docker

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
yum install -y yum-utils device-mapper-persistent-data lvm2
wget -O /etc/yum.repos.d/docker-ce.repo https://download.docker.com/linux/centos/docker-ce.repo
sed -i 's+download.docker.com+mirrors.tuna.tsinghua.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo
# My machine also needed xfsprogs
yum install xfsprogs -y
# Do not install too new a Docker version; newer ones cause trouble
yum install -y --setopt=obsoletes=0 docker-ce-18.09.8-3.el7
systemctl enable docker.service
systemctl start docker.service

2.2 Configure a Docker registry mirror

mkdir -p /etc/docker
# Write /etc/docker/daemon.json (the cgroup driver may be left at the default cgroupfs; mirror addresses are available from Alibaba Cloud and 163)
cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["http://hub-mirror.c.163.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
systemctl daemon-reload && systemctl enable docker && systemctl restart docker

2.3 Configure the forwarding policy

iptables -P FORWARD ACCEPT

2.4 Install kubeadm and kubelet (v1.15.3)

# When this was written, the Alibaba Cloud yum mirror installed kubelet 1.15.3 by default

2.4.1 Add the Kubernetes yum repository

cat << EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

2.4.2 Install kubeadm and kubelet

# Install kubelet, kubectl and kubeadm 1.15.3; the version is pinned so that a newer release in the repository is not picked up
yum install -y kubelet-1.15.3 kubeadm-1.15.3 kubectl-1.15.3 --disableexcludes=kubernetes
# Enable and start the service
systemctl daemon-reload && systemctl enable kubelet && systemctl start kubelet

2.5 Pull the core component images

Run kubeadm config images list --kubernetes-version=v1.15.3 to see exactly which images are needed:

[root@k8s-master ~]# kubeadm config images list --kubernetes-version=v1.15.3
k8s.gcr.io/kube-apiserver:v1.15.3
k8s.gcr.io/kube-controller-manager:v1.15.3
k8s.gcr.io/kube-scheduler:v1.15.3
k8s.gcr.io/kube-proxy:v1.15.3
k8s.gcr.io/pause:3.1
k8s.gcr.io/etcd:3.3.10
k8s.gcr.io/coredns:1.3.1
[root@k8s-master ~]#

# Download from the Alibaba Cloud registry
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.15.3
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.15.3
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.15.3
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.15.3
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.3.10
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.3.1

# Retag the images under the k8s.gcr.io names that kubeadm expects
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.15.3 k8s.gcr.io/kube-apiserver:v1.15.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.15.3 k8s.gcr.io/kube-controller-manager:v1.15.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.15.3 k8s.gcr.io/kube-scheduler:v1.15.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.15.3 k8s.gcr.io/kube-proxy:v1.15.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.3.10 k8s.gcr.io/etcd:3.3.10
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1

# Remove the old mirror tags
docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.15.3
docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.15.3
docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.15.3
docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.15.3
docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1
docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.3.10
docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.3.1

# List the images
docker images | grep k8s

[root@k8s-master ~]# docker images | grep k8s
k8s.gcr.io/kube-proxy                v1.15.3   5cd54e388aba   5 months ago    82.1MB
k8s.gcr.io/kube-controller-manager   v1.15.3   b95b1efa0436   5 months ago    158MB
k8s.gcr.io/kube-scheduler            v1.15.3   00638a24688b   5 months ago    81.6MB
k8s.gcr.io/coredns                   1.3.1     eb516548c180   7 months ago    40.3MB
k8s.gcr.io/etcd                      3.3.10    2c4adeb21b4f   9 months ago    258MB
k8s.gcr.io/pause                     3.1       da86e6ba6ca1   20 months ago   742kB
[root@k8s-master ~]#
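The pull, tag and remove steps repeat the same three commands for seven images, so they can be collapsed into a loop. The following is only a sketch of that idea using the image names and mirror from this section. The function name `mirror_k8s_images` and the `DOCKER` override are assumptions added for illustration.

```shell
#!/usr/bin/env bash
# Pull each control-plane image from the Aliyun mirror, retag it under
# k8s.gcr.io, and drop the mirror tag. DOCKER is a hypothetical override:
# DOCKER="echo docker" prints the commands instead of running them.
mirror_k8s_images() {
    local docker_cmd="${DOCKER:-docker}"
    local mirror="registry.cn-hangzhou.aliyuncs.com/google_containers"
    local image
    for image in \
        kube-apiserver:v1.15.3 \
        kube-controller-manager:v1.15.3 \
        kube-scheduler:v1.15.3 \
        kube-proxy:v1.15.3 \
        pause:3.1 \
        etcd:3.3.10 \
        coredns:1.3.1
    do
        # $docker_cmd is left unquoted on purpose so "echo docker" splits into two words
        $docker_cmd pull "$mirror/$image" &&
        $docker_cmd tag "$mirror/$image" "k8s.gcr.io/$image" &&
        $docker_cmd image rm "$mirror/$image" || return 1
    done
}
```

Running `DOCKER="echo docker" mirror_k8s_images` first shows the 21 commands without touching the local image store; plain `mirror_k8s_images` then executes them and stops at the first failure.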

2.6 Initialize the master node with kubeadm

# cat kubeadm.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.6.76.25
  bindPort: 6443
nodeRegistration:
  taints:
  - effect: PreferNoSchedule
    key: node-role.kubernetes.io/master
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.15.3
networking:
  podSubnet: 10.244.0.0/16

kubeadm init --config kubeadm.yaml

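On success the output looks similar to this:

Your Kubernetes master has initialized successfully!

If the installation fails, clean up the environment with the command below, then run kubeadm init again:

kubeadm reset

Save the kubeadm join command printed at the end of the output; it is needed later to add the node machines with kubeadm:

kubeadm join 10.6.76.25:6443 --token 3qgs8w.lgpsouxdipjubzn1 \
    --discovery-token-ca-cert-hash sha256:f189c434272e76a42e6d274ccb908aa7cfc68dc46e3b7e282e3f879d7b6d635e

If you lose it, run kubeadm token create --print-join-command on the master; it creates a new token and prints a complete join command.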
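If a valid token still exists and only the hash was lost, the hash can be recomputed from the cluster CA certificate. The pipeline below follows the openssl recipe given in the kubeadm documentation. The wrapper name `ca_cert_hash` is made up, and the sketch assumes the CA uses an RSA key, which is the kubeadm default.

```shell
#!/usr/bin/env bash
# Print the SHA-256 hash of the CA certificate's public key, i.e. the hex
# part of --discovery-token-ca-cert-hash sha256:<hash>.
# Assumes an RSA CA key (the kubeadm default).
ca_cert_hash() {
    local ca_cert="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -noout -in "$ca_cert" |
        openssl rsa -pubin -outform der 2>/dev/null |
        openssl dgst -sha256 -hex |
        sed 's/^.* //'        # keep only the hex digest
}
```

On the master, `echo "sha256:$(ca_cert_hash)"` gives the value for the join command, and `kubeadm token list` shows which tokens are still valid.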

Also note the following commands from the init output and run them, so that kubectl can reach the cluster:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

2.7 Check the cluster status

[root@k8s-master ~]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}
[root@k8s-master ~]#

[root@k8s-master ~]# kubectl get nodes
NAME         STATUS     ROLES    AGE     VERSION
k8s-master   NotReady   master   3m25s   v1.15.3
[root@k8s-master ~]#

The master node is NotReady at this point because no pod network has been configured yet.

2.8 Configure the flannel network

kubectl get nodes

The node shows NotReady because no network plugin has been installed for k8s yet. There are several network plugins to choose from; this guide uses flannel.

The usual approach is:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
kubectl apply -f kube-flannel.yml

# kubectl get pod -n kube-system | grep kube-flannel
kube-flannel-ds-amd64-sqf6w   1/1   Running   0   3m9s

The older approach below is no longer used:

wget https://raw.githubusercontent.com/coreos/flannel/v0.10.0/Documentation/kube-flannel.yml

vim kube-flannel.yml

Line 76: set "Network" to the pod network CIDR used at kubeadm init; the two must match. The kubeadm.yaml above uses podSubnet: 10.244.0.0/16, so the value is "Network": "10.244.0.0/16".

Below line 03, add the following 3 lines:

- key: node.kubernetes.io/not-ready
  operator: Exists
  effect: NoSchedule

Lines 110 and 124: registry.cn-shanghai.aliyuncs.com/gcr-k8s/flannel:v0.10.0-amd64 # switch to a registry in China, which downloads faster

Add one line at line 130:

- --iface=ens33 # use the name of your own physical network interface

# Apply

kubectl apply -f kube-flannel.yml

# Check the status
kubectl get pods --namespace kube-system | grep flannel

[root@k8s-master ~]# kubectl get pods --namespace kube-system
NAME                                 READY   STATUS    RESTARTS   AGE
coredns-5c98db65d4-7rnqf             0/1     Pending   0          10m
coredns-5c98db65d4-cmw9k             0/1     Pending   0          10m
etcd-k8s-master                      1/1     Running   0          9m57s
kube-apiserver-k8s-master            1/1     Running   0          10m
kube-controller-manager-k8s-master   1/1     Running   0          10m
kube-flannel-ds-gq5pt                1/1     Running   0          21s
kube-proxy-5h5wv                     1/1     Running   0          10m
kube-scheduler-k8s-master            1/1     Running   0          10m
[root@k8s-master ~]# kubectl get pods --namespace kube-system | grep flannel
kube-flannel-ds-gq5pt                1/1     Running   0          28s
[root@k8s-master ~]#

# Verify
[root@k8s-master ~]# kubectl get nodes
NAME         STATUS   ROLES    AGE   VERSION
k8s-master   Ready    master   12m   v1.15.3
[root@k8s-master ~]#

3 Installing the node machines

3.1 Environment preparation

Same as section 1; repeat those steps on every node.

3.2 Install Docker

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
yum install -y yum-utils device-mapper-persistent-data lvm2
wget -O /etc/yum.repos.d/docker-ce.repo https://download.docker.com/linux/centos/docker-ce.repo
sed -i 's+download.docker.com+mirrors.tuna.tsinghua.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo
yum install -y --setopt=obsoletes=0 docker-ce-18.09.8-3.el7
systemctl enable docker.service
systemctl start docker.service

3.3 Install kubelet and kubeadm

3.3.1 Add the Kubernetes yum repository

cat << EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

3.3.2 Install kubeadm and kubelet

# On the node; versions are pinned to match the master
yum install -y kubelet-1.15.3 kubeadm-1.15.3 kubectl-1.15.3 ipvsadm
systemctl enable kubelet.service
# On the master: copy admin.conf to the node (repeat for k8s-node-2)
scp /etc/kubernetes/admin.conf k8s-node-1:/etc/kubernetes/admin.conf

3.3.3 On the master, pack the 8 images above and send them to the nodes

docker save k8s.gcr.io/kube-proxy k8s.gcr.io/kube-controller-manager k8s.gcr.io/kube-scheduler k8s.gcr.io/kube-apiserver k8s.gcr.io/etcd k8s.gcr.io/coredns registry.cn-shanghai.aliyuncs.com/gcr-k8s/flannel k8s.gcr.io/pause -o k8s_all_image.tar
# Alternatively, download the images on each node by following the earlier steps

3.4 Join the k8s cluster

kubeadm join 10.6.76.25:6443 --token 3qgs8w.lgpsouxdipjubzn1 \
    --discovery-token-ca-cert-hash sha256:f189c434272e76a42e6d274ccb908aa7cfc68dc46e3b7e282e3f879d7b6d635e

[root@k8s-node-1 ~]# kubeadm join 10.6.76.25:6443 --token 3qgs8w.lgpsouxdipjubzn1 \
> --discovery-token-ca-cert-hash sha256:f189c434272e76a42e6d274ccb908aa7cfc68dc46e3b7e282e3f879d7b6d635e
[preflight] Running pre-flight checks
[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Activating the kubelet service
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8s-node-1 ~]#

3.5 Create the kubectl configuration file

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
export KUBECONFIG=/etc/kubernetes/admin.conf
systemctl restart kubelet

3.6 On the master, give the k8s nodes a role

kubectl label nodes k8s-node-1 node-role.kubernetes.io/node=

[root@k8s-master ~]# kubectl get nodes
NAME         STATUS     ROLES    AGE   VERSION
k8s-master   Ready      master   52m   v1.15.3
k8s-node-2   NotReady   <none>   35s   v1.15.3
[root@k8s-master ~]# kubectl label nodes k8s-node-1 node-role.kubernetes.io/node=
[root@k8s-master ~]# kubectl get nodes
NAME         STATUS     ROLES    AGE   VERSION
k8s-master   Ready      master   88m   v1.15.3
k8s-node-1   NotReady   node     85s   v1.15.3
k8s-node-2   NotReady   node     36m   v1.15.3
[root@k8s-master ~]#

3.7 Get flannel and kube-proxy running on the nodes

[root@k8s-master ~]# kubectl get nodes
NAME         STATUS     ROLES    AGE   VERSION
k8s-master   Ready      master   88m   v1.15.3
k8s-node-1   NotReady   node     85s   v1.15.3
k8s-node-2   NotReady   node     36m   v1.15.3
[root@k8s-master ~]#

The nodes stay NotReady: they need the network set up as well, as was done on the master.

The pod list shows that kube-flannel and kube-proxy have not started on either node. Neither node has the images for these two pods yet, so they cannot start. The next step is to copy the images from the master to the nodes.

[root@k8s-master ~]# kubectl get pods --namespace kube-system
NAME                                 READY   STATUS              RESTARTS   AGE
coredns-5c98db65d4-7rqxc             1/1     Running             0          3h
coredns-5c98db65d4-8w45r             1/1     Running             0          3h
etcd-k8s-master                      1/1     Running             0          179m
kube-apiserver-k8s-master            1/1     Running             0          179m
kube-controller-manager-k8s-master   1/1     Running             0          179m
kube-flannel-ds-amd64-7bxr2          0/1     Init:0/1            0          6m29s
kube-flannel-ds-amd64-dhhw2          0/1     Init:0/1            0          6m29s
kube-flannel-ds-amd64-sx7gc          1/1     Running             0          6m29s
kube-proxy-6ql7d                     0/1     ContainerCreating   0          135m
kube-proxy-9f9rq                     0/1     ContainerCreating   0          131m
kube-proxy-zkhjc                     1/1     Running             0          3h
kube-scheduler-k8s-master            1/1     Running             0          179m

Export these images and copy them to the nodes:

docker save -o all.tar k8s.gcr.io/kube-proxy:v1.15.3 quay.io/coreos/flannel:v0.11.0-amd64 k8s.gcr.io/pause:3.1
scp all.tar k8s-node-1:~
scp all.tar k8s-node-2:~
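With more than a couple of nodes, the copy and the import on each node can be done in one loop. This is a sketch under the assumption that the master can reach every node over ssh as a user allowed to run docker. The function name `push_images_to_nodes` and the `RUN` override are made up for illustration.

```shell
#!/usr/bin/env bash
# Copy an image archive to each node and load it into Docker there.
# RUN is a hypothetical override: RUN=echo prints the commands instead.
push_images_to_nodes() {
    local archive="$1"
    shift
    local node
    for node in "$@"; do
        # ${RUN:-} expands to nothing when RUN is unset, leaving plain scp/ssh
        ${RUN:-} scp "$archive" "$node:~/" &&
        ${RUN:-} ssh "$node" "docker load -i ~/$(basename "$archive")" || return 1
    done
}
```

`RUN=echo push_images_to_nodes all.tar k8s-node-1 k8s-node-2` previews the four commands; dropping `RUN=echo` executes them.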

Load the images on the nodes:

[root@k8s-node-1 ~]# docker load -i all.tar
fe9a8b4f1dcc: Loading layer [==================================================>] 43.87MB/43.87MB
15c9248be8a9: Loading layer [==================================================>] 3.403MB/3.403MB
d01dcbdc596d: Loading layer [==================================================>] 36.99MB/36.99MB
Loaded image: k8s.gcr.io/kube-proxy:v1.15.3
7bff100f35cb: Loading layer [==================================================>] 4.672MB/4.672MB
5d3f68f6da8f: Loading layer [==================================================>] 9.526MB/9.526MB
9b48060f404d: Loading layer [==================================================>] 5.912MB/5.912MB
3f3a4ce2b719: Loading layer [==================================================>] 35.25MB/35.25MB
9ce0bb155166: Loading layer [==================================================>] 5.12kB/5.12kB
Loaded image: quay.io/coreos/flannel:v0.11.0-amd64
e17133b79956: Loading layer [==================================================>] 744.4kB/744.4kB
Loaded image: k8s.gcr.io/pause:3.1

Kubernetes notices the images on its own and the pods recover without further action:

[root@k8s-master ~]# kubectl get pods -n kube-system
NAME                                 READY   STATUS    RESTARTS   AGE
coredns-5c98db65d4-7rqxc             1/1     Running   0          3h13m
coredns-5c98db65d4-8w45r             1/1     Running   0          3h13m
etcd-k8s-master                      1/1     Running   0          3h12m
kube-apiserver-k8s-master            1/1     Running   0          3h12m
kube-controller-manager-k8s-master   1/1     Running   0          3h12m
kube-flannel-ds-amd64-7bxr2          1/1     Running   1          19m
kube-flannel-ds-amd64-dhhw2          1/1     Running   1          19m
kube-flannel-ds-amd64-sx7gc          1/1     Running   0          19m
kube-proxy-6ql7d                     1/1     Running   0          148m
kube-proxy-9f9rq                     1/1     Running   0          144m
kube-proxy-zkhjc                     1/1     Running   0          3h13m
kube-scheduler-k8s-master            1/1     Running   0          3h12m
[root@k8s-master ~]# kubectl get nodes
NAME         STATUS   ROLES    AGE     VERSION
k8s-master   Ready    master   3h13m   v1.15.3
k8s-node-1   Ready    node     148m    v1.15.3
k8s-node-2   Ready    node     144m    v1.15.3
[root@k8s-master ~]#
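Rather than rerunning `kubectl get nodes` by hand until everything turns Ready, the output can be filtered for the nodes that are still lagging. The following is a minimal sketch. The function name `not_ready_nodes` is made up, and it assumes the default table output with STATUS in the second column.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes` output on stdin and print the name of every
# node whose STATUS column is not exactly "Ready". The header is skipped.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

A simple wait loop built on it would be `while [ -n "$(kubectl get nodes | not_ready_nodes)" ]; do sleep 5; done`. Note that a cordoned node reports `Ready,SchedulingDisabled` and is therefore also listed.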

4 Command completion for Docker and kubectl

yum install -y epel-release bash-completion
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc

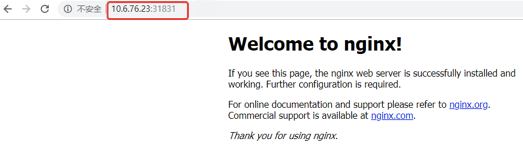

5 Run an nginx container in the k8s cluster and reach it from outside

[root@k8s-master ~]# kubectl create deployment nginx --image=nginx:latest
deployment.apps/nginx created
[root@k8s-master ~]# kubectl get pods
NAME                     READY   STATUS              RESTARTS   AGE
nginx-76546c5b7d-5sf8k   0/1     ContainerCreating   0          18s
[root@k8s-master ~]# kubectl get pods
NAME                     READY   STATUS    RESTARTS   AGE
nginx-76546c5b7d-5sf8k   1/1     Running   0          38s
[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort
service/nginx exposed
[root@k8s-master ~]#
[root@k8s-master ~]# kubectl get all
NAME                         READY   STATUS    RESTARTS   AGE
pod/nginx-76546c5b7d-5sf8k   1/1     Running   0          61s

NAME                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        3h15m
service/nginx        NodePort    10.107.48.185   <none>        80:31831/TCP   20s

NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx   1/1     1            1           61s

NAME                               DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-76546c5b7d   1         1         1       61s
[root@k8s-master ~]# curl -I 10.6.76.23:31831
HTTP/1.1 200 OK
Server: nginx/1.17.3
Date: Thu, 29 Aug 2019 04:37:37 GMT
Content-Type: text/html
Content-Length: 612
Last-Modified: Tue, 13 Aug 2019 08:50:00 GMT
Connection: keep-alive
ETag: "5d5279b8-264"
Accept-Ranges: bytes

[root@k8s-master ~]# curl -I 10.6.76.24:31831
HTTP/1.1 200 OK
Server: nginx/1.17.3
Date: Thu, 29 Aug 2019 04:37:41 GMT
Content-Type: text/html
Content-Length: 612
Last-Modified: Tue, 13 Aug 2019 08:50:00 GMT
Connection: keep-alive
ETag: "5d5279b8-264"
Accept-Ranges: bytes

[root@k8s-master ~]#
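The node port (31831 here) is assigned at random, so it differs on every cluster. As a sketch, the two helpers below pull the port out of a PORT(S) value such as `80:31831/TCP` and probe it on each node. Both function names and the `CURL` override are assumptions added for illustration.

```shell
#!/usr/bin/env bash
# Extract the node port from a PORT(S) value such as "80:31831/TCP".
node_port_of() {
    local ports="$1"
    ports="${ports#*:}"             # drop the service port and the colon
    printf '%s\n' "${ports%%/*}"    # drop the protocol suffix
}

# Request the service on every given node; stop at the first failure.
# CURL is a hypothetical override, e.g. CURL=echo for a dry run.
check_node_port() {
    local port="$1"
    shift
    local node
    for node in "$@"; do
        ${CURL:-curl} -fsS -o /dev/null --max-time 5 "http://$node:$port/" || return 1
    done
}
```

With the values from the output above this becomes `check_node_port "$(node_port_of 80:31831/TCP)" 10.6.76.23 10.6.76.24`. The port can also be read directly with `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`.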

Zhejiang Public Network Security Registration No. 33010602011771