ELK (12): ELK + Kafka (moderate log volume)

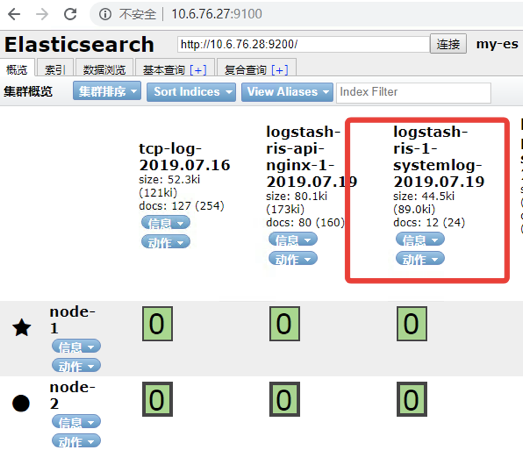

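We first use Logstash to read the Nginx logs and system logs and write them to Kafka, then use another Logstash to read them back out of Kafka and write them to Elasticsearch. This architecture suits moderate log volumes.

For very large log volumes, Filebeat is recommended.

Redis would also work in place of Kafka. Redis does not need snapshots or persistence enabled here, because its job is done once the data has been written to Elasticsearch. It does consume a lot of Redis memory, though; 8-16 GB is typical.

On the back end there may be dozens of Logstash instances writing to Kafka. If Kafka's memory usage stays high, the front-end Logstash is reading too slowly; add more Logstash consumers until the write and read rates balance.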
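The balance described above can be made measurable as consumer lag: the gap between the newest offset in each partition and the offset the consuming Logstash group has committed. The following is a minimal Python sketch, assuming the offsets have already been collected (for example from the `LOG-END-OFFSET` and `CURRENT-OFFSET` columns of Kafka's `kafka-consumer-groups.sh --describe`). The function names, the threshold, and the rule "lag stays above the threshold and never shrinks" are illustrative assumptions, not part of the original setup.

```python
from typing import Dict, List


def total_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> int:
    """Sum of (log-end offset - committed offset) across partitions."""
    lag = 0
    for partition, end in end_offsets.items():
        # Assumption: a partition with no committed offset is treated as unread from 0.
        lag += max(end - committed.get(partition, 0), 0)
    return lag


def should_add_consumer(lag_samples: List[int], threshold: int) -> bool:
    """True when every sample exceeds the threshold and lag never shrinks."""
    if len(lag_samples) < 2:
        return False  # one sample cannot show a trend
    above = all(sample > threshold for sample in lag_samples)
    not_shrinking = all(b >= a for a, b in zip(lag_samples, lag_samples[1:]))
    return above and not_shrinking
```

Within one consumer group Kafka assigns each partition to at most one consumer, so adding Logstash consumers only helps while the topic has at least as many partitions as consumers.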

Nginx logs

1. Logstash writes Nginx logs to Kafka

1.1 Configure Logstash

    # [admin@ris-1 conf.d]$ pwd
    # /etc/logstash/conf.d
    # [admin@ris-1 conf.d]$ cat nginx_kafka.conf
    input {
      file {
        type => "ris-api-nginx-1"
        path => "/home/admin/webserver/logs/api/api.log"
        start_position => "beginning"
        stat_interval => "2"
        codec => "json"
      }
    }
    output {
      if [type] == "ris-api-nginx-1" {
        kafka {
          bootstrap_servers => "10.6.76.27:9092"
          topic_id => "ris-api-nginx-1"
          batch_size => "5"
          codec => "json"
        }
        stdout {
          codec => "rubydebug"
        }
      }
    }
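Because the `file` input above uses `codec => "json"`, every line of `api.log` has to be a complete JSON object, which in turn means Nginx must be writing its access log in a JSON `log_format` (not shown in this post). A quick way to sanity-check a log line before starting Logstash is sketched below in Python. The helper name and the choice of which fields to require are assumptions; the field names themselves are taken from the sample event in section 1.5.

```python
import json

# Field names copied from the sample event in section 1.5; adjust to your log_format.
EXPECTED_FIELDS = {"clientip", "status", "url", "responsetime", "upstreamhost"}


def missing_fields(line: str) -> set:
    """Return the expected fields that are absent from one JSON log line.

    Raises ValueError if the line is not valid JSON or not a JSON object.
    """
    event = json.loads(line)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(event, dict):
        raise ValueError("log line is not a JSON object")
    return EXPECTED_FIELDS - set(event)
```

An empty result means the line carries all the required fields; a `ValueError` points to a log line that the `json` codec would not be able to decode into separate fields.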

1.2 Test the configuration file

sudo /usr/share/logstash/bin/logstash -f nginx_kafka.conf -t

1.3 Start Logstash in the foreground

sudo /usr/share/logstash/bin/logstash -f nginx_kafka.conf

1.4 List the Kafka topics

    [admin@pe-jira ~]$ /home/admin/elk/kafka/bin/kafka-topics.sh --list --zookeeper kafka1:2181,kafka2:2181,kafka3:2181
    __consumer_offsets
    messagetest
    ris-api-nginx-1
    [admin@pe-jira ~]$

1.5 Foreground Logstash output

    {
      "@version" => "1",
      "@timestamp" => 2019-07-18T09:16:29.000Z,
      "clientip" => "10.6.0.11",
      "xff" => "-",
      "responsetime" => 0.001,
      "upstreamhost" => "10.6.75.172:8080",
      "request_method" => "GET",
      "domain" => "api.erp.zhaonongzi.com",
      "status" => "200",
      "host" => "10.6.75.171",
      "http_user_agent" => "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.11; rv:67.0) Gecko/20100101 Firefox/67.0",
      "upstreamtime" => "0.001",
      "url" => "/APICenter/purchase_snapshot_single.wn",
      "referer" => "-",
      "type" => "ris-api-nginx-1",
      "size" => 66,
      "remote_user" => "-",
      "path" => "/home/admin/webserver/logs/api/api.log"
    }

1.6 Then disable the foreground output

Comment out the following block in the output section:

    stdout {
      codec => "rubydebug"
    }

1.7 Start Logstash as a service

sudo systemctl start logstash

2. Configure Logstash to read Nginx logs from Kafka and write them to Elasticsearch

2.1 Configure Logstash

    # [admin@pe-jira conf.d]$ pwd
    # /etc/logstash/conf.d
    # [admin@pe-jira conf.d]$ cat kafka-es.conf
    input {
      kafka {
        bootstrap_servers => "10.6.76.27:9092"  # Kafka broker address
        topics => "ris-api-nginx-1"
        group_id => "ris-api-nginx-logs"
        decorate_events => true                 # add Kafka metadata to each event
        consumer_threads => 1
        codec => "json"                         # the shipping Logstash wrote the events as JSON, so decode them as JSON
      }
    }
    output {
      stdout {
        codec => "rubydebug"
      }
      # if [type] == "ris-api-nginx-1" {
      #   elasticsearch {
      #     hosts => ["10.6.76.27:9200"]
      #     index => "logstash-ris-api-nginx-1-%{+YYYY.MM.dd}"
      #   }
      # }
    }

2.2 Test the configuration file

sudo /usr/share/logstash/bin/logstash -f kafka-es.conf -t

2.3 Start Logstash in the foreground

sudo /usr/share/logstash/bin/logstash -f kafka-es.conf

2.4 Foreground Logstash output

    {
      "domain" => "XXXX.com",
      "upstreamtime" => "0.001",
      "host" => "10.6.75.171",
      "remote_user" => "-",
      "status" => "200",
      "xff" => "-",
      "request_method" => "GET",
      "@timestamp" => 2019-07-19T02:10:46.000Z,
      "upstreamhost" => "10.6.75.172:8080",
      "path" => "/home/admin/webserver/logs/api/api.log",
      "http_user_agent" => "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.11; rv:67.0) Gecko/20100101 Firefox/67.0",
      "responsetime" => 0.001,
      "size" => 66,
      "@version" => "1",
      "type" => "ris-api-nginx-1",
      "referer" => "-",
      "clientip" => "10.6.0.11",
      "url" => "/APICenter/purchase_snapshot_single.wn"
    }

2.5 Then disable the foreground output and write directly to Elasticsearch

    input {
      kafka {
        bootstrap_servers => "10.6.76.27:9092"  # Kafka broker address
        topics => "ris-api-nginx-1"
        group_id => "ris-api-nginx-logs"
        decorate_events => true                 # add Kafka metadata to each event
        consumer_threads => 1
        codec => "json"                         # the shipping Logstash wrote the events as JSON, so decode them as JSON
      }
    }
    output {
      # stdout {
      #   codec => "rubydebug"
      # }
      if [type] == "ris-api-nginx-1" {
        elasticsearch {
          hosts => ["10.6.76.27:9200"]
          # The index name must start with "logstash-"; the map visualization needs it.
          index => "logstash-ris-api-nginx-1-%{+YYYY.MM.dd}"
        }
      }
    }
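The `%{+YYYY.MM.dd}` suffix in the index name is filled in from each event's `@timestamp`, which Logstash keeps in UTC, so the output creates one index per UTC day. The Python sketch below approximates that naming for ordinary dates so you can predict which index an event lands in; `daily_index` is a hypothetical helper, not a Logstash API.

```python
from datetime import datetime, timezone


def daily_index(prefix: str, timestamp: str) -> str:
    """Approximate Logstash's %{+YYYY.MM.dd} suffix for an ISO-8601 UTC timestamp."""
    # Parse timestamps shaped like 2019-07-19T02:10:46.000Z and mark them as UTC.
    ts = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ").replace(tzinfo=timezone.utc)
    return f"{prefix}-{ts:%Y.%m.%d}"
```

For the sample event in section 2.4, `daily_index("logstash-ris-api-nginx-1", "2019-07-19T02:10:46.000Z")` gives `logstash-ris-api-nginx-1-2019.07.19`. Events logged shortly after local midnight in a timezone ahead of UTC will therefore still go into the previous day's index.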

2.6 Start Logstash as a service

sudo systemctl start logstash

2.7 Add the index to Kibana
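Before creating the index pattern in Kibana, it can help to confirm that Elasticsearch actually holds a matching index. The following is a minimal Python sketch using only the standard library and Elasticsearch's `_cat/indices` API with `format=json`. The function names are hypothetical, and the sketch assumes the cluster is reachable over plain HTTP without authentication, as the configuration in this post suggests.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen


def cat_indices_url(es_host: str, pattern: str) -> str:
    """Build the _cat/indices URL for an index pattern, asking for JSON output."""
    # Keep the wildcard literal; everything else unsafe is percent-encoded.
    return f"http://{es_host}/_cat/indices/{quote(pattern, safe='*')}?format=json"


def list_indices(es_host: str, pattern: str) -> list:
    """Return the names of indices matching the pattern (needs a reachable cluster)."""
    with urlopen(cat_indices_url(es_host, pattern), timeout=5) as resp:
        return [row["index"] for row in json.load(resp)]
```

For example, `list_indices("10.6.76.27:9200", "logstash-ris-api-nginx-1-*")` should return at least one name once events have flowed through; an empty list suggests the consumer side has not written anything yet.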

System logs

3. Logstash writes system logs to Kafka

3.1 Change the log file permissions

sudo chmod 644 /var/log/messages
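The `chmod 644` above makes `/var/log/messages` readable by users other than root, which matters when Logstash runs as a service under an unprivileged account (an assumption about this setup; the post does not name the service user). The small Python check below tests that permission bit; `readable_by_others` is a hypothetical helper name.

```python
import os
import stat


def readable_by_others(path: str) -> bool:
    """True if the file mode grants read permission to 'other' users (as 644 does)."""
    return bool(os.stat(path).st_mode & stat.S_IROTH)
```

Running `readable_by_others("/var/log/messages")` after a log rotation is a cheap way to notice that the mode has been reset and the file input has silently stopped reading.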

3.2 Agent Logstash configuration

    # [admin@ris-1 conf.d]$ pwd
    # /etc/logstash/conf.d
    # [admin@ris-1 conf.d]$ cat syslog-kafka.conf
    input {
      file {
        type => "ris-1-systemlog"
        path => "/var/log/messages"
        start_position => "beginning"
        stat_interval => "2"
      }
    }
    output {
      if [type] == "ris-1-systemlog" {
        # stdout {
        #   codec => "rubydebug"
        # }
        kafka {
          bootstrap_servers => "10.6.76.27:9092"
          topic_id => "ris-1-systemlog"
          batch_size => "5"
          codec => "json"
        }
      }
    }

3.3 Start the service

sudo systemctl restart logstash

4. Configure Logstash to read system logs from Kafka and write them to Elasticsearch

4.1 Logstash configuration

    [admin@pe-jira conf.d]$ cat sys-kafka-es.conf
    input {
      kafka {
        bootstrap_servers => "10.6.76.27:9092"  # Kafka broker address
        topics => "ris-1-systemlog"
        group_id => "ris-1-systemlog"
        decorate_events => true                 # add Kafka metadata to each event
        consumer_threads => 1
        codec => "json"                         # the shipping Logstash wrote the events as JSON, so decode them as JSON
      }
    }
    output {
      stdout {
        codec => "rubydebug"
      }
      if [type] == "ris-1-systemlog" {
        elasticsearch {
          hosts => ["10.6.76.27:9200"]
          index => "logstash-ris-1-systemlog-%{+YYYY.MM.dd}"
        }
      }
    }

4.2 Start the service

sudo systemctl restart logstash

4.3 Add the index to Kibana
