ELK (6): Collecting Nginx Logs with Logstash


For now I collect the logs with Logstash directly. Switching to Filebeat comes in a later post, so no rush.

In my setup, Nginx and Logstash are installed on the same machine.

Write the Nginx log as JSON

In nginx.conf, define the log format as follows (log_format belongs in the http block):

    log_format logstash_json '{"@timestamp":"$time_iso8601",'
                             '"host":"$server_addr",'
                             '"clientip":"$remote_addr",'
                             '"remote_user":"$remote_user",'
                             # '"request":"$request",'   # full request line: method, original URI with query string, protocol
                             '"http_user_agent":"$http_user_agent",'
                             '"size":$body_bytes_sent,'
                             '"responsetime":$request_time,'
                             '"upstreamtime":"$upstream_response_time",'
                             '"upstreamhost":"$upstream_addr",'
                             '"request_method": "$request_method",'
                             # '"requesturi":"$request_uri",'   # like url, but unnormalized and including the query string
                             '"url":"$uri",'
                             '"domain":"$host",'
                             '"xff":"$http_x_forwarded_for",'
                             '"referer":"$http_referer",'
                             '"status":"$status"}';
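To see what this format produces for anything downstream, here is a small Python sketch that decodes one line the way a JSON parser would. Both sample lines are hypothetical, with invented values. The sketch shows three things:

* The unquoted `size` and `responsetime` come out as numbers.
* The quoted `status` stays a string.
* A value containing a double quote breaks the line. With nginx's default log escaping, such a quote is written as `\x22`, which is not a legal JSON escape.

On nginx 1.11.8 or newer, the `escape=json` parameter of `log_format` is meant to avoid this. Verify that against your own nginx version.

```python
import json

# Hypothetical line shaped like the logstash_json format; all values are invented.
GOOD_LINE = (
    '{"@timestamp":"2019-05-20T07:30:00+08:00","host":"10.0.0.5",'
    '"clientip":"10.0.0.8","remote_user":"-",'
    '"http_user_agent":"curl/7.29.0","size":612,"responsetime":0.004,'
    '"upstreamtime":"0.004","upstreamhost":"127.0.0.1:5601",'
    '"request_method": "GET","url":"/app/kibana","domain":"kibana.example.com",'
    '"xff":"-","referer":"-","status":"200"}'
)

# With default escaping nginx writes a double quote inside a value as \x22,
# which JSON does not allow, so this hypothetical line cannot be decoded.
BAD_LINE = '{"http_user_agent":"Mozilla \\x22quoted\\x22","status":"200"}'


def parse_access_line(line):
    """Decode one access-log line; return None if it is not valid JSON."""
    try:
        return json.loads(line)
    except json.JSONDecodeError:
        return None


fields = parse_access_line(GOOD_LINE)
# size/responsetime are unquoted in log_format, so they decode as numbers;
# status is quoted, so it stays a string.
print(type(fields["size"]).__name__)          # int
print(type(fields["responsetime"]).__name__)  # float
print(type(fields["status"]).__name__)        # str
print(parse_access_line(BAD_LINE))            # None
```

Broken lines like the second one are exactly what the Logstash json filter's `skip_on_invalid_json` option lets through without parsing.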

Access-log setting in the virtual host (server block):

    access_log logs/kibana.log logstash_json;

Logstash configuration file

    # [admin@pe-jira conf.d]$ pwd
    # /etc/logstash/conf.d
    # [admin@pe-jira conf.d]$ cat logstash.conf
    input {
        file {
            type => "pe-jira-kibana"
            path => "/home/admin/webserver/logs/kibana.log"
            start_position => "beginning"
            stat_interval => "2"
        }
    }
    # I tried adding codec => json to the input, but it had no effect for me,
    # so I parse the JSON with the json filter instead. In principle the codec should work too.
    filter {
        json {
            source => "message"
            skip_on_invalid_json => true
        }
    }
    output {
        if [type] == "pe-jira-kibana" {
            elasticsearch {
                hosts => ["10.6.76.27:9200"]
                index => "logstash-pe-jira-nginx-kibana-%{+YYYY.MM.dd}"
            }
        }
    }
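The following Python sketch is a rough model of what this pipeline does to a single event. It is not Logstash's actual code. It covers three behaviours:

* The json filter parses `message` and merges its keys into the event, including `@timestamp`.
* Invalid JSON passes through untouched because of `skip_on_invalid_json`.
* The `%{+YYYY.MM.dd}` part of the index name is computed from `@timestamp` in UTC.

The sample event is hypothetical. The UTC detail matters in a +08:00 time zone, because a request logged at 07:30 local time lands in the previous day's index.

```python
import json
from datetime import datetime, timezone


def json_filter(event, source="message", skip_on_invalid_json=True):
    """Rough model of the json filter: parse event[source] and merge the
    resulting keys (including @timestamp) into the event."""
    try:
        parsed = json.loads(event[source])
    except json.JSONDecodeError:
        if not skip_on_invalid_json:
            # without skip_on_invalid_json the event is tagged instead
            event.setdefault("tags", []).append("_jsonparsefailure")
        return event  # with skip_on_invalid_json the event passes through unchanged
    if isinstance(parsed, dict):  # this sketch only merges JSON objects
        event.update(parsed)
    return event


def index_name(event, prefix="logstash-pe-jira-nginx-kibana-"):
    """Model of %{+YYYY.MM.dd}: the date is taken from @timestamp in UTC."""
    ts = datetime.fromisoformat(event["@timestamp"]).astimezone(timezone.utc)
    return prefix + ts.strftime("%Y.%m.%d")


event = json_filter({
    "type": "pe-jira-kibana",
    "message": '{"@timestamp":"2019-05-20T07:30:00+08:00","status":"200"}',
})
print(index_name(event))  # logstash-pe-jira-nginx-kibana-2019.05.19
```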

Check the configuration files, then restart

I'm sure you already know how to do this.
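For reference, here is the check-then-restart step as a minimal Python sketch. Several things are assumptions to adjust for your machine:

* `nginx` is on PATH.
* Logstash came from the RPM/DEB package, so its binary is `/usr/share/logstash/bin/logstash`.
* Logstash runs as a systemd service named `logstash`.

The restarts run only if both configuration checks exit with status 0.

```python
import subprocess

# Assumptions, not from the post: nginx is on PATH, Logstash was installed from
# the RPM/DEB package (binary under /usr/share/logstash), and it runs as a
# systemd service named "logstash".
LOGSTASH_BIN = "/usr/share/logstash/bin/logstash"
LOGSTASH_CONF = "/etc/logstash/conf.d/logstash.conf"

CHECKS = [
    ["nginx", "-t"],  # test nginx.conf syntax
    [LOGSTASH_BIN, "-f", LOGSTASH_CONF, "--config.test_and_exit"],  # test the pipeline
]
RESTARTS = [
    ["nginx", "-s", "reload"],             # make nginx pick up the new log_format
    ["systemctl", "restart", "logstash"],  # restart Logstash with the new pipeline
]


def check_then_restart(run=subprocess.run):
    """Run every check; issue the restarts only if all checks exit with 0.
    Returns True if the restarts were issued."""
    for cmd in CHECKS:
        if run(cmd).returncode != 0:
            print("check failed:", " ".join(cmd))
            return False
    for cmd in RESTARTS:
        run(cmd, check=True)  # raises CalledProcessError if a restart fails
    return True
```

Call `check_then_restart()` after editing both files. The `run` parameter exists only so the command sequence can be exercised without touching the real services.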

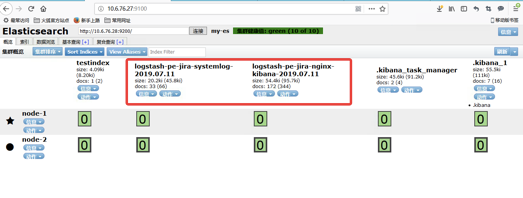

Testing

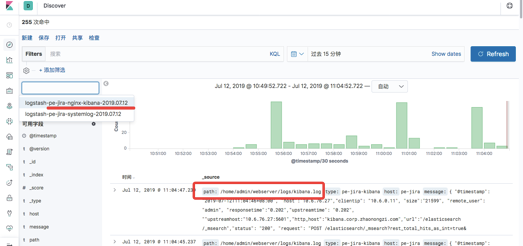

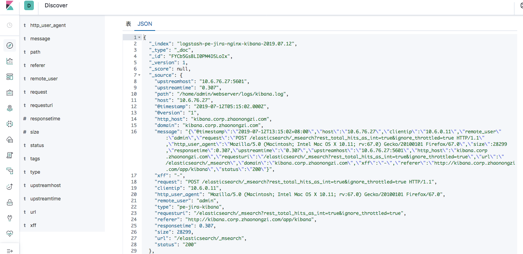

Viewing the JSON data

Viewing by field
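Besides browsing in Kibana, you can query a single field directly through Elasticsearch's `_search` API. Below is a minimal Python sketch. It assumes the ES address and index prefix from the Logstash output above, and an unauthenticated HTTP endpoint. The helper names are hypothetical. It uses a `match` query, which works whether `status` ends up mapped as text or as keyword.

```python
import json
import urllib.request

# Assumptions: address and index prefix copied from the Logstash output above;
# Elasticsearch is reachable over plain HTTP without authentication.
ES_URL = "http://10.6.76.27:9200"
INDEX_PATTERN = "logstash-pe-jira-nginx-kibana-*"


def build_field_query(field, value, size=10):
    """Search body matching one field, newest events first."""
    return {
        "size": size,
        "query": {"match": {field: value}},
        "sort": [{"@timestamp": {"order": "desc"}}],
    }


def search_by_field(field, value, size=10):
    """POST the query to <index>/_search and return the _source of each hit."""
    body = json.dumps(build_field_query(field, value, size)).encode("utf-8")
    req = urllib.request.Request(
        f"{ES_URL}/{INDEX_PATTERN}/_search",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        result = json.load(resp)
    return [hit["_source"] for hit in result["hits"]["hits"]]
```

For example, `for doc in search_by_field("status", "404"): print(doc["url"], doc["clientip"])` would list the most recent 404s with their URL and client IP.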

