(转) Supercharging Style Transfer

Wednesday, October 26, 2016

Posted by Vincent Dumoulin*, Jonathon Shlens and Manjunath Kudlur, Google Brain Team

Pastiche. A French word, it designates a work of art that imitates the style of another one (not to be confused with its more humorous Greek cousin, parody). Although it has been used for a long time in visual art, music and literature, pastiche has been getting mass attention lately with online forums dedicated to images that have been modified to be in the style of famous paintings. Using a technique known as style transfer, these images are generated by phone or web apps that allow a user to render their favorite picture in the style of a well known work of art.

Although users have already produced gorgeous pastiches using the current technology, we feel that it could be made even more engaging. Right now, each painting is its own island, so to speak: the user provides a content image, selects an artistic style and gets a pastiche back. But what if one could combine many different styles, exploring unique mixtures of well known artists to create an entirely unique pastiche?

Learning a representation for artistic style

In our recent paper titled “A Learned Representation for Artistic Style”, we introduce a simple method to allow a single deep convolutional style transfer network to learn multiple styles at the same time. The network, having learned multiple styles, is able to do style interpolation, where the pastiche varies smoothly from one style to another. Our method enables style interpolation in real-time as well, allowing this to be applied not only to static images, but also videos.

In the video above, multiple styles are combined in real-time and the resulting style is applied using a single style transfer network. The user is provided with a set of 13 different painting styles and adjusts their relative strengths in the final style via sliders. In this demonstration, the user is an active participant in producing the pastiche.

A Quick History of Style Transfer

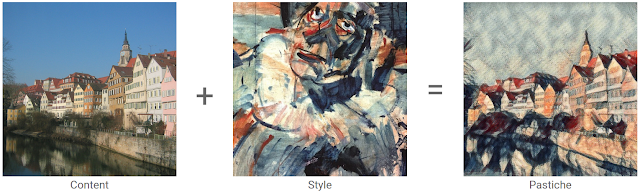

While transferring the style of one image to another has existed for nearly 15 years [1] [2], leveraging neural networks to accomplish it is both very recent and very fascinating. In “A Neural Algorithm of Artistic Style” [3], researchers Gatys, Ecker & Bethge introduced a method that uses deep convolutional neural network (CNN) classifiers. The pastiche image is found via optimization: the algorithm looks for an image which elicits the same kind of activations in the CNN’s lower layers - which capture the overall rough aesthetic of the style input (broad brushstrokes, cubist patterns, etc.) - yet produces activations in the higher layers - which capture the things that make the subject recognizable - that are close to those produced by the content image. From some starting point (e.g. random noise, or the content image itself), the pastiche image is progressively refined until these requirements are met.

The pastiches produced via this algorithm look spectacular:

This work is considered a breakthrough in the field of deep learning research because it provided the first proof of concept for neural network-based style transfer. Unfortunately this method for stylizing an individual image is computationally demanding. For instance, in the first demos available on the web, one would upload a photo to a server, and then still have plenty of time to go grab a cup of coffee before a result was available.

This process was sped up significantly by subsequent research [4, 5] that recognized that this optimization problem may be recast as an image transformation problem, where one wishes to apply a single, fixed painting style to an arbitrary content image (e.g. a photograph). The problem can then be solved by teaching a feed-forward, deep convolutional neural network to alter a corpus of content images to match the style of a painting. The goal of the trained network is two-fold: maintain the content of the original image while matching the visual style of the painting.

The end result of this was that what once took a few minutes for a single static image, could now be run real time (e.g. applying style transfer to a live video). However, the increase in speed that allowed real-time style transfer came with a cost - a given style transfer network is tied to the style of a single painting, losing some flexibility of the original algorithm, which was not tied to any one style. This means that to build a style transfer system capable of modeling 100 paintings, one has to train and store 100 separate style transfer networks.

Our Contribution: Learning and Combining Multiple Styles

We started from the observation that many artists from the impressionist period employ similar brush stroke techniques and color palettes. Furthermore, painting by say, Monet, are even more visually similar.

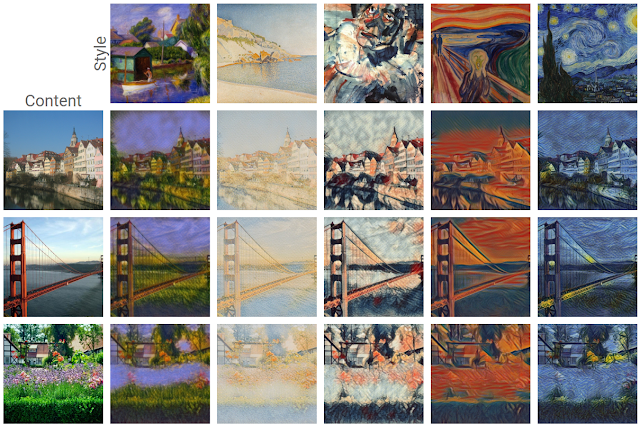

We leveraged this observation in our training of a machine learning system. That is, we trained a single system that is able to capture and generalize across many Monet paintings or even a diverse array of artists across genres. The pastiches produced are qualitatively comparable to those produced in previous work, while originating from the same style transfer network.

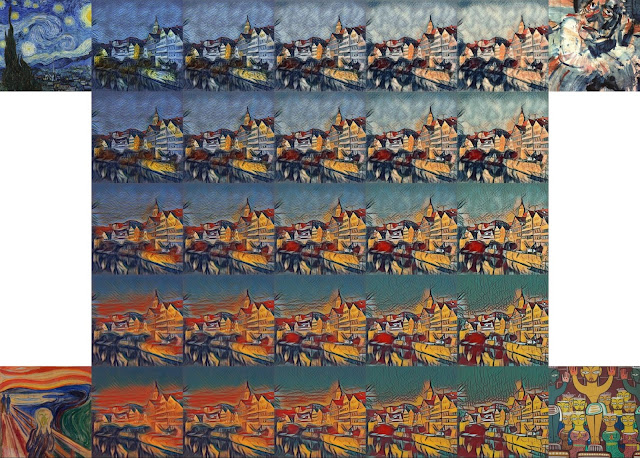

The technique we developed is simple to implement and is not memory intensive. Furthermore, our network, trained on several artistic styles, permits arbitrary combining multiple painting styles in real-time, as shown in the video above. Here are four styles being combined in different proportions on a photograph of Tübingen:

Unlike previous approaches to fast style transfer, we feel that this method of modeling multiple styles at the same time opens the door to exciting new ways for users to interact with style transfer algorithms, not only allowing the freedom to create new styles based on the mixture of several others, but to do it in real-time. Stay tuned for a future post on the Magenta blog, in which we will describe the algorithm in more detail and release the TensorFlow source code to run this model and demo yourself. We also recommend that you check out Nat & Lo’s fantastic video explanationon the subject of style transfer.

References

[1] Efros, Alexei A., and William T. Freeman. Image quilting for texture synthesis and transfer (2001).

[2] Hertzmann, Aaron, Charles E. Jacobs, Nuria Oliver, Brian Curless, and David H. Salesin. Image analogies (2001).

[3] Gatys, Leon A., Alexander S. Ecker, and Matthias Bethge. A Neural Algorithm of Artistic Style(2015).

[4] Ulyanov, Dmitry, Vadim Lebedev, Andrea Vedaldi, and Victor Lempitsky. Texture Networks: Feed-forward Synthesis of Textures and Stylized Images (2016).

[5] Johnson, Justin, Alexandre Alahi, and Li Fei-Fei. Perceptual Losses for Real-Time Style Transfer and Super-Resolution (2016).

Pastiche. A French word, it designates a work of art that imitates the style of another one (not to be confused with its more humorous Greek cousin, parody). Although it has been used for a long time in visual art, music and literature, pastiche has been getting mass attention lately with online forums dedicated to images that have been modified to be in the style of famous paintings. Using a technique known as style transfer, these images are generated by phone or web apps that allow a user to render their favorite picture in the style of a well known work of art.

Although users have already produced gorgeous pastiches using the current technology, we feel that it could be made even more engaging. Right now, each painting is its own island, so to speak: the user provides a content image, selects an artistic style and gets a pastiche back. But what if one could combine many different styles, exploring unique mixtures of well known artists to create an entirely unique pastiche?

Learning a representation for artistic style

In our recent paper titled “A Learned Representation for Artistic Style”, we introduce a simple method to allow a single deep convolutional style transfer network to learn multiple styles at the same time. The network, having learned multiple styles, is able to do style interpolation, where the pastiche varies smoothly from one style to another. Our method enables style interpolation in real-time as well, allowing this to be applied not only to static images, but also videos.

Here is a video from YouTube.

| Credit: awesome dog role played by Google Brain team office dog Picabo. |

A Quick History of Style Transfer

While transferring the style of one image to another has existed for nearly 15 years [1] [2], leveraging neural networks to accomplish it is both very recent and very fascinating. In “A Neural Algorithm of Artistic Style” [3], researchers Gatys, Ecker & Bethge introduced a method that uses deep convolutional neural network (CNN) classifiers. The pastiche image is found via optimization: the algorithm looks for an image which elicits the same kind of activations in the CNN’s lower layers - which capture the overall rough aesthetic of the style input (broad brushstrokes, cubist patterns, etc.) - yet produces activations in the higher layers - which capture the things that make the subject recognizable - that are close to those produced by the content image. From some starting point (e.g. random noise, or the content image itself), the pastiche image is progressively refined until these requirements are met.

|

| Content image: The Tübingen Neckarfront by Andreas Praefcke, Style painting: “Head of a Clown”, by Georges Rouault. |

|

| Figure adapted from L. Gatys et al. "A Neural Algorithm of Artistic Style" (2015). |

This process was sped up significantly by subsequent research [4, 5] that recognized that this optimization problem may be recast as an image transformation problem, where one wishes to apply a single, fixed painting style to an arbitrary content image (e.g. a photograph). The problem can then be solved by teaching a feed-forward, deep convolutional neural network to alter a corpus of content images to match the style of a painting. The goal of the trained network is two-fold: maintain the content of the original image while matching the visual style of the painting.

The end result of this was that what once took a few minutes for a single static image, could now be run real time (e.g. applying style transfer to a live video). However, the increase in speed that allowed real-time style transfer came with a cost - a given style transfer network is tied to the style of a single painting, losing some flexibility of the original algorithm, which was not tied to any one style. This means that to build a style transfer system capable of modeling 100 paintings, one has to train and store 100 separate style transfer networks.

Our Contribution: Learning and Combining Multiple Styles

We started from the observation that many artists from the impressionist period employ similar brush stroke techniques and color palettes. Furthermore, painting by say, Monet, are even more visually similar.

|

| Poppy Field (left) and Impression, Sunrise (right) by Claude Monet. Images from Wikipedia |

|

| Pastiches produced by our single network, trained on 32 varied styles. These pastiches are qualitatively equivalent to those created by single-style networks: Image Credit: (from top to bottom) content photographs by Andreas Praefcke, Rich Niewiroski Jr. and J.-H. Janßen, (from left to right) style paintings by William Glackens, Paul Signac, Georges Rouault, Edvard Munch and Vincent van Gogh. |

Unlike previous approaches to fast style transfer, we feel that this method of modeling multiple styles at the same time opens the door to exciting new ways for users to interact with style transfer algorithms, not only allowing the freedom to create new styles based on the mixture of several others, but to do it in real-time. Stay tuned for a future post on the Magenta blog, in which we will describe the algorithm in more detail and release the TensorFlow source code to run this model and demo yourself. We also recommend that you check out Nat & Lo’s fantastic video explanationon the subject of style transfer.

References

[1] Efros, Alexei A., and William T. Freeman. Image quilting for texture synthesis and transfer (2001).

[2] Hertzmann, Aaron, Charles E. Jacobs, Nuria Oliver, Brian Curless, and David H. Salesin. Image analogies (2001).

[3] Gatys, Leon A., Alexander S. Ecker, and Matthias Bethge. A Neural Algorithm of Artistic Style(2015).

[4] Ulyanov, Dmitry, Vadim Lebedev, Andrea Vedaldi, and Victor Lempitsky. Texture Networks: Feed-forward Synthesis of Textures and Stylized Images (2016).

[5] Johnson, Justin, Alexandre Alahi, and Li Fei-Fei. Perceptual Losses for Real-Time Style Transfer and Super-Resolution (2016).

Stay Hungry,Stay Foolish ...

浙公网安备 33010602011771号

浙公网安备 33010602011771号