The Decade of Deep Learning

2020-01-01 12:10:25

Source: https://leogao.dev/2019/12/31/The-Decade-of-Deep-Learning/

As the 2010’s draw to a close, it’s worth taking a look back at the monumental progress that has been made in Deep Learning in this decade. Driven by the development of ever-more powerful compute and the increased availability of big data, Deep Learning has successfully tackled many previously intractable problems, especially in Computer Vision and Natural Language Processing. Deep Learning has also begun to see real-world application everywhere around us, from the high-impact to the frivolous, from autonomous vehicles and medical imaging to virtual assistants and deepfakes.

This post is an overview of some of the most influential Deep Learning papers of the last decade. My hope is to provide a jumping-off point into many disparate areas of Deep Learning by providing succinct and dense summaries that go slightly deeper than a surface-level exposition, with many references to the relevant resources.

Given the nature of research, assigning credit is very difficult—the same ideas are pursued by many simultaneously, and the most influential paper is often neither the first nor the best. I try my best to tiptoe the line between influence and first/best works by listing the most influential papers as main entries and the other relevant papers that precede or improve upon the main entry as honorable mentions.[1] Of course, as such a list will always be subjective, this is not meant to be final, exhaustive, or authoritative. If you feel that the ordering, omission, or description of any paper is incorrect, please let me know—I would be more than glad to improve this list by making it more complete and accurate.

2011

Deep Sparse Rectifier Neural Networks (4071 citations)

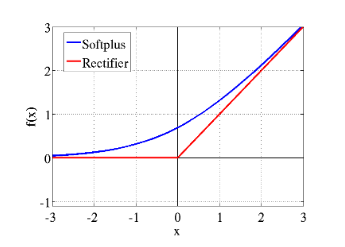

ReLU and Softplus (Source)

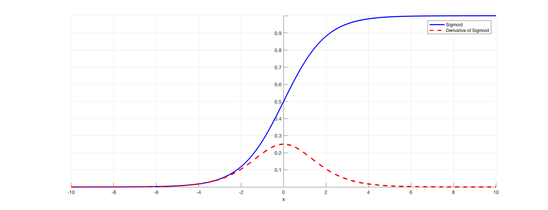

Most neural networks, from the earliest MLPs up until many networks toward the middle of the decade, used sigmoids for intermediate activations. Sigmoids (most commonly the logistic and hyperbolic tangent functions) have the advantages of being differentiable everywhere and having a bounded output. They also provide a satisfying analogy to biological neurons’ all-or-none law. However, as the derivative of sigmoid functions decays quickly away from zero, the gradient is often diminished rapidly as more layers are added. This is known as the vanishing gradient problem and is one of the reasons that networks were difficult to scale depthwise. This paper found that using ReLUs helped solve the vanishing gradient problem and paved the way for deeper networks.

Sigmoid and its derivative (Source)

Despite this, however, ReLUs still have some flaws: they are non-differentiable at zero[2], they can grow unbounded, and neurons could “die” and become inactive due to the saturated half of the activation. Since 2011, many improved activations have been proposed to solve the problem, but vanilla ReLUs are still competitive, as the efficacy of many new activations has been under question.
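To make the vanishing gradient argument concrete, here is a minimal sketch that multiplies the same local derivative across many layers. It ignores the weights entirely (an assumption made purely for illustration), and the helper names are hypothetical, not taken from the paper.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    # The logistic derivative s * (1 - s) peaks at 0.25 when x == 0.
    s = sigmoid(x)
    return s * (1.0 - s)


def relu_grad(x: float) -> float:
    # Common convention: treat the derivative at exactly zero as 0.
    return 1.0 if x > 0 else 0.0


def chained_grad(local_grad, pre_activation: float, depth: int) -> float:
    """Product of `depth` identical local derivatives (weights ignored)."""
    return local_grad(pre_activation) ** depth


# Even in the best case for the sigmoid (x == 0), 20 layers shrink the signal
# by a factor of 0.25 ** 20, while an active ReLU passes it through unchanged.
sigmoid_chain = chained_grad(sigmoid_grad, 0.0, 20)
relu_chain = chained_grad(relu_grad, 1.0, 20)
print(sigmoid_chain, relu_chain)
```

The same sketch also shows the dying ReLU issue: for a negative pre-activation, `relu_grad` returns zero and nothing flows backward at all.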

The paper Digital selection and analogue amplification coexist in a cortex-inspired silicon circuit (2000) is generally credited to be the first paper to establish the biological plausibility of the ReLU, and What is the Best Multi-Stage Architecture for Object Recognition? (2009) was the earliest paper that I was able to find that explored using the ReLU (referred to as the positive part in this paper) for neural networks.

Honorable Mentions

- Rectifier Nonlinearities Improve Neural Network Acoustic Models: This paper introduced the Leaky ReLU, which, instead of outputting zero, “leaks” with a small gradient on the negative half. This is to help prevent the dying ReLU problem. Leaky ReLUs still have a discontinuity in the derivative at zero, though.

- Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs): ELUs are similar to Leaky ReLUs but are smoother and saturate to -1 on the negative side.

- Self-Normalizing Neural Networks: SELUs aim to remove the need for batch normalization by scaling an ELU to create a fixed point and push the distribution towards zero mean and unit variance.

- Gaussian Error Linear Units (GELUs): The GELU activation is based on the expected value of dropping out neurons according to a Gaussian distribution. To be more concrete, the probability of a certain value $x$ being kept (i.e. not zeroed out) is the CDF of the standard normal distribution: $\Phi(x) = P(Z \leq x)$, where $Z \sim \mathcal{N}(0, 1)$. Thus, the expected value of a variable after this probabilistic dropout procedure is $\mathrm{GELU}(x) = x\Phi(x)$.[3] GELUs are used in many state-of-the-art Transformer models, like BERT and GPT/GPT2.
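The activations in this list are short enough to write out directly. The following is a rough sketch using only the standard library; the default `negative_slope` and `alpha` values are assumptions chosen for illustration, and the GELU uses the exact normal CDF via `math.erf` rather than any approximation.

```python
import math


def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    # Instead of outputting zero, "leak" a small slope on the negative half.
    return x if x > 0 else negative_slope * x


def elu(x: float, alpha: float = 1.0) -> float:
    # Smooth on the negative side and saturates to -alpha (here -1).
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def std_normal_cdf(x: float) -> float:
    # Phi(x) = P(Z <= x) for Z ~ N(0, 1), written in terms of erf.
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu(x: float) -> float:
    # Expected value of x after keeping it with probability Phi(x).
    return x * std_normal_cdf(x)


for value in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(value, leaky_relu(value), elu(value), gelu(value))
```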

2012

ImageNet Classification with Deep Convolutional Neural Networks (52025 citations)

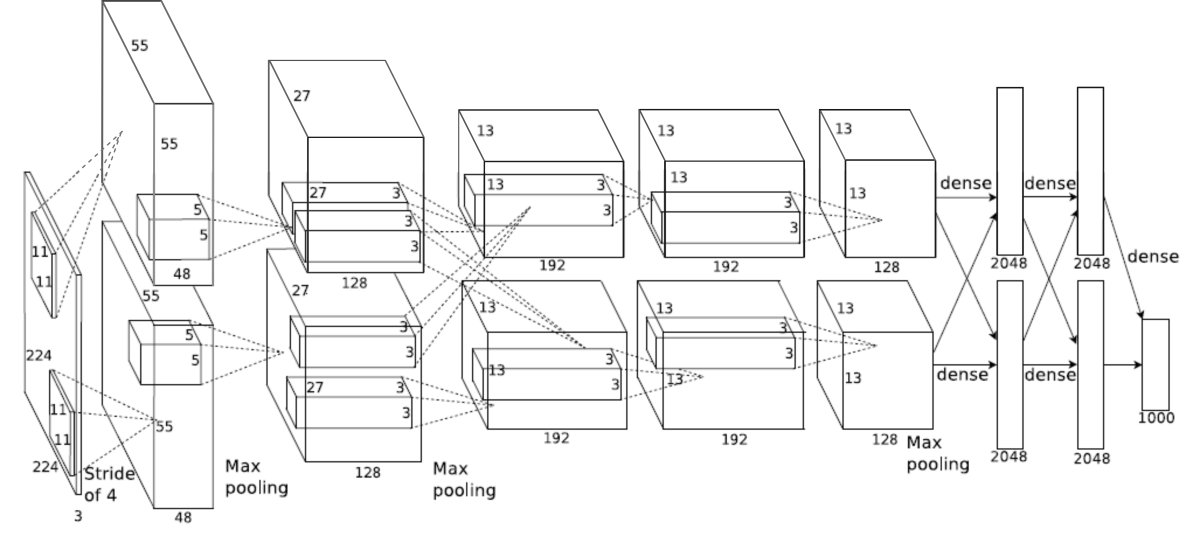

AlexNet architecture (Source, Untruncated)

AlexNet is an 8-layer Convolutional Neural Network using the ReLU activation function and 60 million parameters. The crucial contribution of AlexNet was demonstrating the power of deeper networks, as its architecture was, in essence, a deeper version of previous networks (e.g. LeNet).

The AlexNet paper is generally recognized as the paper that sparked the field of Deep Learning. AlexNet was also one of the first networks to leverage the massively parallel processing power of GPUs to train much deeper convolutional networks than before. The result was astounding, lowering the top-5 error rate on ImageNet from 26.2% to 15.3%, and beating every other contender in ILSVRC 2012 by a wide margin. This massive improvement in error attracted a lot of attention to the field of Deep Learning, and made the AlexNet paper one of the most cited papers in Deep Learning.

Honorable Mentions:

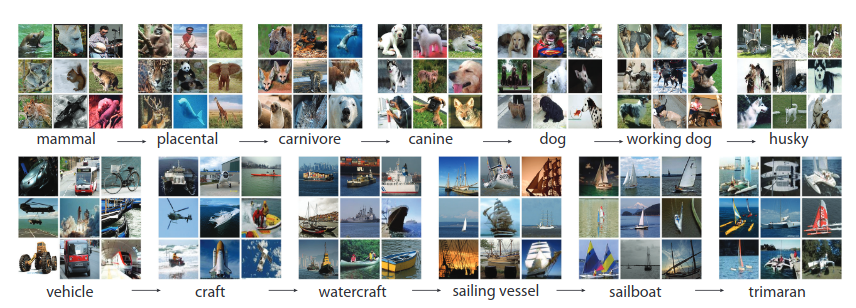

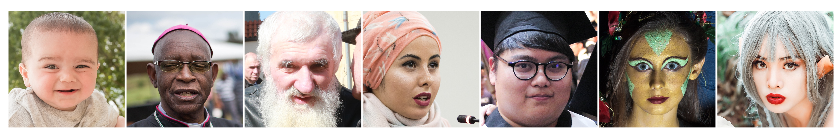

An example of images from the ImageNet hierarchy (Source)

- ImageNet: A Large-Scale Hierarchical Image Database: The ImageNet dataset itself is also in large part responsible for the boom in Deep Learning. With 15050 citations, it is also one of the most cited papers in all of Deep Learning (as it was published in 2009, I have decided to list it as an honorable mention). The dataset was constructed using Amazon Mechanical Turk to outsource the classification task to workers, which made this astronomically-sized dataset possible. The ImageNet Large Scale Visual Recognition Challenge (ILSVRC), the contest for image classification algorithms spawned by the ImageNet database, was also responsible for driving the development of many other innovations in Computer Vision.

- Flexible, High Performance Convolutional Neural Networks for Image Classification: This paper predates AlexNet and has much in common with it: both papers leveraged GPU acceleration for training deeper networks, and both use the ReLU activation that solved the vanishing gradient problem of deep networks. Some argue that this paper has been unfairly snubbed of its place, receiving far fewer citations than AlexNet.

![LeNet architecture (<a href='http://vision.stanford.edu/cs598_spring07/papers/Lecun98.pdf'>Source</a>)]() LeNet architecture (Source)

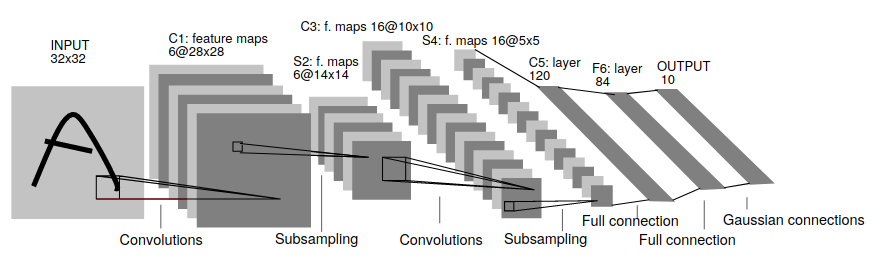

LeNet architecture (Source) - Gradient-Based Learning Applied to Document Recognition: This paper from 1998, with a whopping 23110 citations, is the oft-cited pioneer of CNNs for image recognition. Indeed, modern CNNs are almost exactly scaled up versions of this early work! Even earlier is LeCun’s less cited (though, with 5697 citations, it’s nothing to scoff at) 1989 paper Backpropagation Applied to Handwritten Zip Codes, arguably the first gradient descent CNN.[4]

2013

Distributed Representations of Words and Phrases and their Compositionality (16923 citations)

This paper (and the slightly earlier Efficient Estimation of Word Representations in Vector Space by the same authors) introduced word2vec, which became the dominant way to encode text for Deep Learning NLP models. It is based on the idea that words which appear in similar contexts likely have similar meanings, and that this context can therefore be used to embed words into vectors (hence the name) to be used downstream in other models. Word2vec, in particular, trains a network to predict the context around a word given the word itself, and then extracts the latent vector from the network.[5]
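The "predict the context given the word" setup boils down to a set of (center, context) training pairs. Here is a small sketch of how such pairs could be generated; the function name and the fixed symmetric `window` are assumptions for illustration, and the network that consumes the pairs is omitted.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every token and its neighbours."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


pairs = skipgram_pairs(["the", "cat", "sat"], window=1)
print(pairs)
```

Each pair becomes one training example: the model sees the center word and is asked to score the context word highly.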

Honorable Mentions

- GloVe: Global Vectors for Word Representation: GloVe is an improved model based on the same core ideas of word2vec, except realized slightly differently. It is hotly debated whether either of these models is better in general.

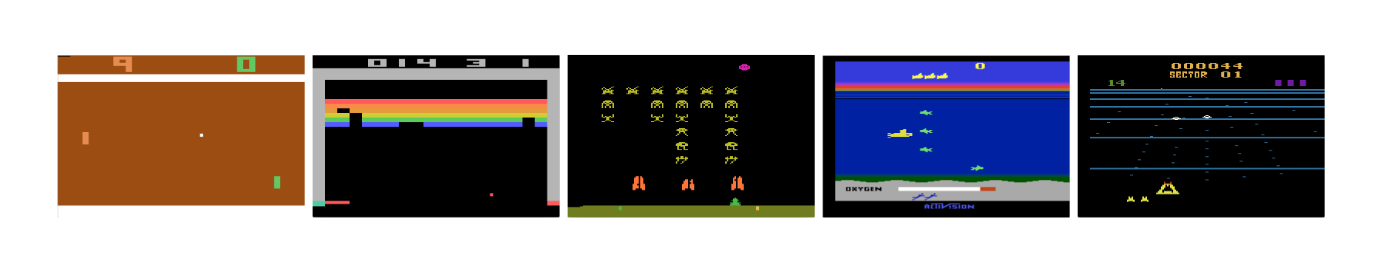

Playing Atari with Deep Reinforcement Learning (3251 citations)

DeepMind Atari DQN (Source)

The results of DeepMind’s Atari DQN kickstarted the field of Deep Reinforcement Learning. Reinforcement Learning was previously used mostly on low-dimensional environments such as gridworlds, and was difficult to apply to more complex environments. Atari was the first successful application of reinforcement learning to a high-dimensional environment, which brought Reinforcement Learning from obscurity to an important subfield of AI.

The paper uses Deep Q-Learning in particular, a form of value-based Reinforcement Learning. Value-based means that the goal is to learn how much reward the agent can expect to obtain at each state (or, in the case of Q-learning, each state-action pair) by following the policy implicitly defined by the Q-value function. The policy used in this paper is the $\epsilon$-greedy policy: it takes the greedy (highest-scored) action as estimated by the Q-function with probability $1 - \epsilon$ and a completely random action with probability $\epsilon$. This is to allow for exploration of the state space. The objective for training the Q-value function is derived from the Bellman equation, which decomposes the Q-value function into the current reward plus the maximum (discounted) Q-value of the next state ($Q(s, a) = r + \gamma \max_{a'} Q(s', a')$), which lets us update the Q-value function using its own estimates. This technique of updating the value function based on the current reward plus future value function is known in general as Temporal Difference learning.
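The $\epsilon$-greedy policy and the Bellman-style update can be sketched in a few lines. Note that this is a tabular version with a dictionary in place of the neural network used in the paper, and the learning rate `alpha`, the state names, and the function names are all hypothetical choices made for this illustration.

```python
import random
from collections import defaultdict


def epsilon_greedy(q, state, actions, epsilon, rng):
    # With probability epsilon explore; otherwise take the highest-scored action.
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q[(state, a)])


def q_update(q, state, action, reward, next_state, actions,
             alpha=0.5, gamma=0.9, done=False):
    # TD target: r + gamma * max_a' Q(s', a'), using the table's own estimates.
    best_next = 0.0 if done else max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


q = defaultdict(float)  # unseen state-action pairs default to 0.0
actions = ["left", "right"]
q[("s1", "right")] = 2.0
new_value = q_update(q, "s0", "right", reward=1.0, next_state="s1", actions=actions)
greedy_action = epsilon_greedy(q, "s0", actions, epsilon=0.0, rng=random.Random(0))
print(new_value, greedy_action)
```

Roughly speaking, the deep variant replaces the table lookup `q[(state, action)]` with a network's output and turns the bracketed difference into a regression loss.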

Honorable Mentions

- Learning from Delayed Rewards: Christopher Watkins’ thesis in 1989 introduced Q-Learning.

2014

Generative Adversarial Networks (13917 citations)

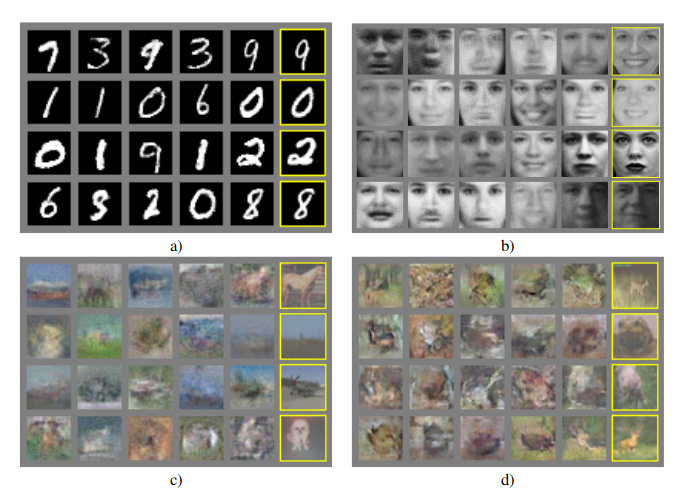

GAN images (Source)

Generative Adversarial Networks have been successful in no small part due to the stunning visuals they produce. Relying on a minimax game between a Generator and a Discriminator, GANs are able to model complex, high dimensional distributions (most often, images[6]). The objective of the Generator is to minimize the log-probability $\log(1 - D(G(\mathbf{z})))$ of the Discriminator being correct on fake samples, while the Discriminator’s goal is to minimize its classification error between real and fake samples by maximizing $\log D(x) + \log(1 - D(G(\mathbf{z})))$.

The cost used for the generator in the minimax game is useful for theoretical analysis, but does not perform especially well in practice. —Goodfellow, 2016

In practice, the Generator is often trained to maximize the log-probability $\log D(G(\mathbf{z}))$ of the Discriminator being incorrect (see NIPS 2016 Tutorial: Generative Adversarial Networks, section 3.2.3). This minor change reduces gradient saturation and improves training stability.
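To see why the alternative objective saturates less, it helps to compare the gradient of each Generator loss with respect to the Discriminator's logit. The sketch below assumes the Discriminator ends in a sigmoid, $D = \sigma(a)$ (an assumption for illustration), and the function names are hypothetical.

```python
import math


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


def saturating_grad(logit: float) -> float:
    # d/da of log(1 - sigmoid(a)), the minimax Generator loss: equals -sigmoid(a).
    return -sigmoid(logit)


def non_saturating_grad(logit: float) -> float:
    # d/da of -log(sigmoid(a)), the alternative Generator loss: equals -(1 - sigmoid(a)).
    return -(1.0 - sigmoid(logit))


# Early in training the Discriminator confidently rejects fakes (very negative logit).
confident_fake_logit = -10.0
print(saturating_grad(confident_fake_logit))      # nearly zero: little learning signal
print(non_saturating_grad(confident_fake_logit))  # close to -1: strong learning signal
```

When the Discriminator is winning, the minimax loss gives the Generator almost no gradient, whereas the alternative loss gives it the most.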

Honorable Mentions:

- Wasserstein GAN & Improved Training of Wasserstein GANs: Vanilla GANs are plagued with difficulties, especially in training stability. Even with many tweaks, vanilla GANs often fail to train, or experience mode collapse (where the Generator produces only a few distinct images). Due to their improved training stability, Wasserstein GANs with Gradient Penalty have become the de facto base GAN implementation for many GANs today. Unlike the Jensen-Shannon divergence used by vanilla GANs, which saturates and provides an unusable gradient when there is little overlap between the distributions, WGANs use the Earth Mover’s distance.[7] The original WGAN paper enforced a Lipschitz continuity constraint (gradient norm bounded by a constant everywhere) via weight clipping, which introduced problems of its own that the gradient penalty helped solve.

StyleGAN images ([Source](https://arxiv.org/abs/1812.04948))

- StyleGAN: StyleGAN is able to generate stunning high-resolution images that are nearly indistinguishable[8] from real images. Among the most important techniques used in such high-resolution GANs is progressively growing the image size, which is incorporated into StyleGAN. StyleGAN also allows for modification of the latent spaces at each of these different image scales to manipulate only features at certain levels of detail in the generated image.

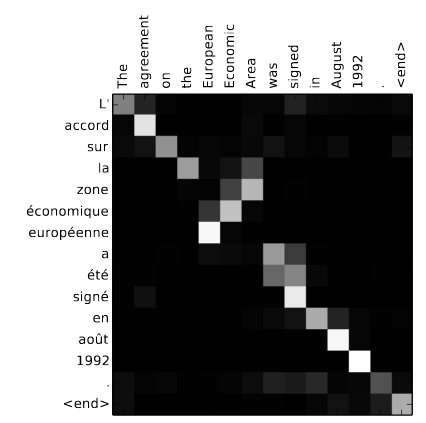

Neural Machine Translation by Jointly Learning to Align and Translate (9882 citations)

A visualization of the attention (Source)

This paper introduced the idea of attention: instead of compressing information down into a latent space in an RNN, one could instead keep the entire context in memory and allow every element of the output to attend to every element of the input, using $\mathcal{O}(nm)$ operations. Despite requiring quadratically-increasing compute[9], attention is far more performant than fixed-state RNNs and has become an integral part of not only textual tasks like translation and language modeling, but also of models as distant as GANs.
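The $\mathcal{O}(nm)$ cost is easy to see in code: each of the $n$ output positions scores all $m$ input positions. The sketch below uses a plain dot product as the scoring function, which is an assumption made for brevity; the paper itself learns a small alignment network for scoring, and the names `queries`, `keys`, and `values` are borrowed from later terminology rather than from this paper.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attend(queries, keys, values):
    """For each of n queries, return a weighted average of the m values."""
    contexts = []
    for query in queries:                                      # n output positions
        weights = softmax([dot(query, key) for key in keys])   # m scores each
        width = len(values[0])
        context = [sum(w * value[d] for w, value in zip(weights, values))
                   for d in range(width)]
        contexts.append(context)
    return contexts


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[2.0, 0.0], [0.0, 4.0]]
# A zero query scores every input equally, so the context is the plain average.
print(attend([[0.0, 0.0]], keys, values))
```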

Adam: A Method for Stochastic Optimization (34082 citations)

Adam has become a very popular adaptive optimizer due to its ease of tuning. Adam is based on the idea of adapting separate learning rates for each parameter. While more recent papers have cast doubt on the performance of Adam, it remains one of the most popular optimization algorithms in Deep Learning.
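As a rough sketch of what "separate learning rates for each parameter" means, here is a single Adam step over plain Python lists. The default hyperparameters follow the values suggested in the paper; the function name and the list-based state are assumptions for illustration, not any library's API.

```python
import math


def adam_step(params, grads, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; `t` is the 1-based step count, `m` and `v` the running moments."""
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        m_i = beta1 * m_i + (1 - beta1) * g        # running mean of gradients
        v_i = beta2 * v_i + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m_i / (1 - beta1 ** t)             # bias correction for the zero init
        v_hat = v_i / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v


# Two parameters whose gradients differ by a factor of 100.
params, m, v = [1.0, 1.0], [0.0, 0.0], [0.0, 0.0]
params, m, v = adam_step(params, [10.0, 0.1], m, v, t=1)
print(params)
```

On the first step both parameters move by roughly `lr`, regardless of gradient scale, which is part of why Adam is comparatively forgiving to tune.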

Honorable Mentions

- Decoupled Weight Decay Regularization: This paper claims to have discovered an error[10] in the implementation of Adam with weight decay in popular implementations, proposing instead an alternative AdamW optimizer to alleviate these problems.

- RMSProp: Another popular adaptive optimizer (especially for RNNs, although whether it is actually better or worse than Adam is unclear). RMSProp is notorious for being perhaps the most cited lecture slide in Deep Learning.

2015

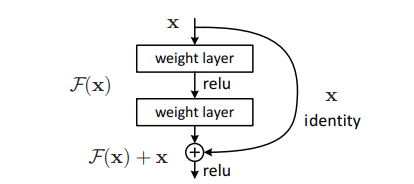

Deep Residual Learning for Image Recognition (34635 citations)

Residual Block Architecture (Source)

Initially designed to deal with the problem of vanishing/exploding gradients in deep CNNs, the residual block has become the elementary building block for almost all CNNs today. The idea is very simple: add the input from before each block of convolutional layers to the output. The inspiration behind residual networks is that neural networks should theoretically never degrade with more layers, as additional layers could, in the worst case, be set simply as identity mappings. However, in practice, deeper networks often experience difficulties training. Residual networks made it easier for layers to learn an identity mapping, and also reduced the issue of vanishing gradients. Despite the simplicity, however, residual networks vastly outperform regular CNNs, especially for deeper networks.
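The "add the input to the output" idea can be sketched with fully connected layers standing in for the convolutions (a simplification assumed here for brevity; normalization and the final activation are also left out). All names are hypothetical.

```python
def linear(x, weights):
    # Matrix-vector product with `weights` given as a list of rows.
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(x):
    return [max(0.0, xi) for xi in x]


def residual_block(x, w1, w2):
    # F(x) is the learned residual; the skip connection adds x back on top.
    fx = linear(relu(linear(x, w1)), w2)
    return [xi + fi for xi, fi in zip(x, fx)]


x = [1.0, -2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
# With all-zero weights the residual vanishes and the block is exactly the identity.
print(residual_block(x, zeros, zeros))
```

This is the sense in which an identity mapping becomes easy to learn: the block only has to push its weights toward zero, rather than reproduce its input through a stack of nonlinear layers.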

Honorable Mentions:

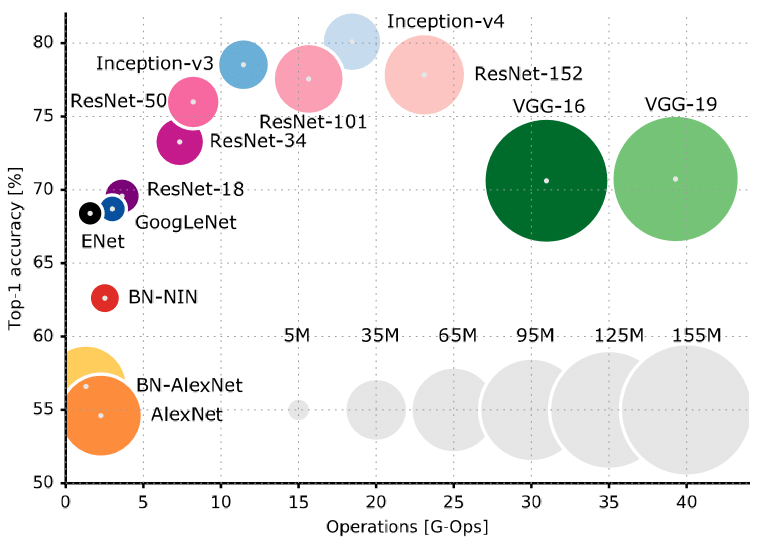

A comparison of many different CNNs (Source)

Many other, more complex CNN architectures have vied for the top spot[11]. The following is a small sampling of historically significant networks.

- Highway Networks: Residual networks are a special case of the earlier Highway Networks, which use a similar but more complex gated design to channel gradients in deeper networks.

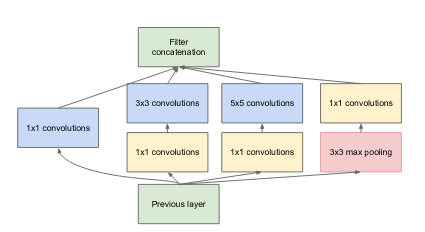

Inceptionv1 architecture ([Source](https://arxiv.org/abs/1409.4842))

- Going Deeper with Convolutions: The Inception architecture is based on the idea of factoring the convolutions to reduce the number of parameters and make activations sparser. This allows deeper nesting of layers, which helped GoogLeNet, also introduced in this paper, become the SOTA network in ILSVRC 2014. Many future iterations of the Inception module were subsequently published, and Inception modules were finally integrated with ResNets in Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning.

- Very Deep Convolutional Networks for Large-Scale Image Recognition: Another very significant work in the history of CNNs, this paper introduced the VGG networks. This paper is significant for exploring the use of only 3x3 convolutions, instead of larger convolutions as used in most other networks. This reduces the number of parameters significantly.

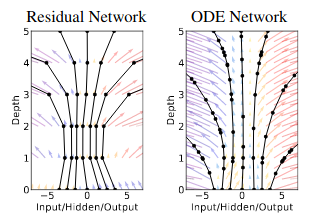

Neural ODE diagram ([Source](https://arxiv.org/abs/1806.07366))

- Neural Ordinary Differential Equations: Neural ODEs, which won the best paper award at NIPS 2018, draw a parallel between residuals and Differential Equations. The core idea is to view residual networks as a discretization of a continuous transformation. One can then define the residual network as parameterized by an ODE, which can be solved using off-the-shelf solvers.
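The parallel between residual updates and differential equations mentioned for Neural ODEs can be illustrated with the simplest possible solver. In this sketch each Euler step has the same form as a residual block, $x \leftarrow x + h f(x, t)$; the fixed toy dynamics and the function names are assumptions for illustration, whereas a Neural ODE would learn `f` and use a more sophisticated solver.

```python
import math


def euler_integrate(f, x0, t0, t1, steps):
    """Integrate dx/dt = f(x, t) from t0 to t1 with fixed-size Euler steps."""
    h = (t1 - t0) / steps
    x, t = x0, t0
    for _ in range(steps):
        x = x + h * f(x, t)  # same shape as a residual update: input plus a correction
        t += h
    return x


def decay(x, t):
    # Toy dynamics dx/dt = -x, whose exact solution is x0 * exp(-t).
    return -x


approx = euler_integrate(decay, 1.0, 0.0, 1.0, steps=1000)
print(approx, math.exp(-1.0))
```

More steps correspond loosely to a deeper residual network, and the approximation approaches the continuous solution as the step size shrinks.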

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift (14384 citations)

Batch normalization is another mainstay of nearly all neural networks today. Batch norm is based on another simple but powerful idea: keeping mean and variance statistics during training, and using them to normalize activations to zero mean and unit variance. The exact reasons for the effectiveness of Batch norm are disputed, but it is undeniably effective empirically.[12]
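A minimal sketch of the training-time computation for a single feature, assuming a batch of scalar activations: the `gamma` and `beta` parameters stand for the learned scale and shift, `eps` is a small stability constant, and the running statistics used at inference time are omitted.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a batch to roughly zero mean and unit variance."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n  # biased (population) variance
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


normalized = batch_norm([1.0, 2.0, 3.0, 4.0])
print(normalized)
```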

Honorable Mentions

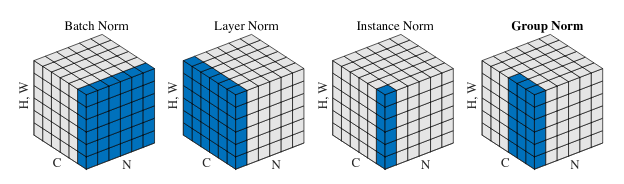

A visualization of the different normalization techniques (Source)

- Layer Normalization, Instance Normalization, and Group Normalization: Many other alternatives have sprung up based on different ways of aggregating the statistics: across the channels of a single sample, within a single sample and channel, or within a single sample and a group of channels, respectively. These techniques are useful when it is undesirable for different samples in a batch and/or channel to interfere with each other; a prime example of this is in GANs.

2016

Mastering the game of Go with deep neural networks and tree search (6310 citations)

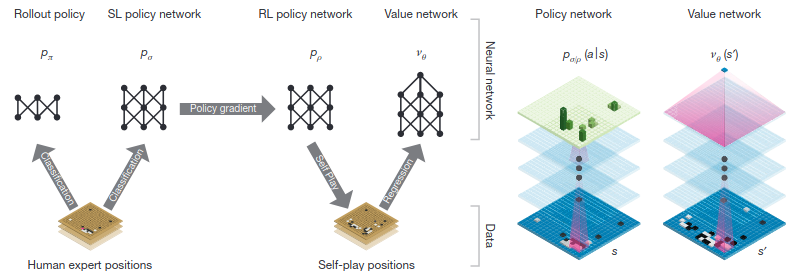

Supervised Learning and Reinforcement Learning pipeline; Policy/Value Network Architectures (Source)

After the defeat of Kasparov by Deep Blue, Go became the next goal for the AI community, thanks to its properties: a much larger state space than chess and a greater reliance on intuition among human players. Up until AlphaGo, the most successful Go systems such as Crazy Stone and Zen were a combination of a Monte-Carlo tree search with many handcrafted heuristics to guide the tree search. Judging from the progress of these systems, defeating human grandmasters was considered at the time to be many years away. Although previous attempts at applying neural networks to Go do exist, none have reached the level of success of AlphaGo, which combines many of these previous techniques with big compute. Specifically, AlphaGo consists of a policy network and a value network that narrow the search tree and allow for truncation of the search tree, respectively. These networks were first trained with standard Supervised Learning and then further tuned with Reinforcement Learning.

AlphaGo has made, of all the advancements listed here, possibly the biggest impact on the public mind, with an estimated 100 million people globally (especially from China, Japan, and Korea, where Go is very popular) tuning in to the AlphaGo vs. Lee Sedol match. The games from this match and the other later AlphaGo Zero matches have influenced human strategy in Go. One example of a very influential move by AlphaGo is the 37th move in the second game; AlphaGo played very unconventionally, baffling many analysts. This move later turned out to be crucial in securing the win for AlphaGo.[13]

Honorable Mentions

- Mastering the Game of Go without Human Knowledge: This follow-up paper, which introduced AlphaGo Zero, removed the supervised learning phase and trained the policy and value networks purely through self-play. Despite not being imbued with any human biases, AlphaGo Zero was able to rediscover many human strategies, as well as invent superior strategies that challenged many long-held assumptions in common Go wisdom.

2017

Attention Is All You Need (5059 citations)

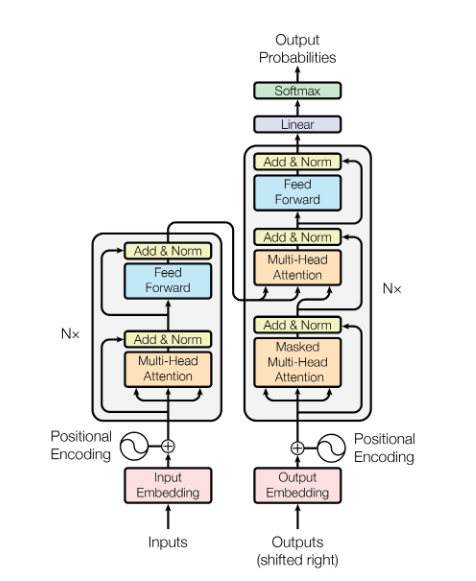

Transformer Architecture (Source)

The Transformer architecture, making use of the aforementioned attention mechanism at scale, has become the backbone of nearly all state-of-the-art NLP models today. Transformer models beat RNNs in large part due to the computational benefits in very large networks; in RNNs, gradients need to be propagated through the entire “unrolled” graph, which makes memory access a large bottleneck. This also exacerbates the exploding/vanishing gradients problem, necessitating more complex (and more computationally expensive!) LSTM and GRU models. Instead, Transformer models are optimized for highly parallel processing. The most computationally expensive components are the feed forward networks after the attention layers, which can be applied in parallel, and the attention itself, which is a large matrix multiplication and is also easily optimized.

Neural Architecture Search with Reinforcement Learning (1186 citations)

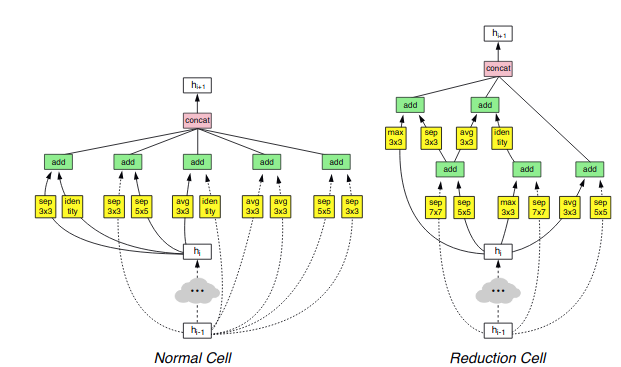

The architecture of NASNet, a network designed using NAS techniques (Source)

Neural Architecture Search has become common practice in the field for squeezing every drop of performance out of networks. Instead of designing architectures painstakingly by hand, NAS allows this process to be automated. In this paper, a controller network is trained using RL[14] to produce performant network architectures, which has created many SOTA networks. Other approaches, such as Regularized Evolution for Image Classifier Architecture Search (AmoebaNet), use evolutionary algorithms instead.

2018

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (3025 citations)

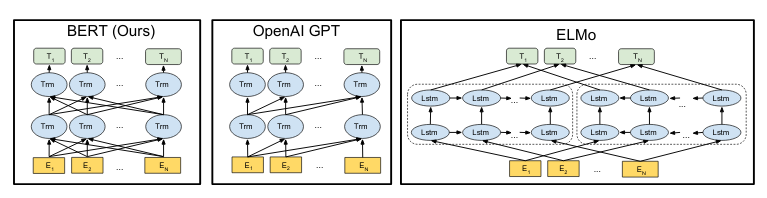

BERT compared to GPT and ELMo (Source)

BERT is a bidirectional contextual text embedding model. Like word2vec, it is based on assigning each word (or, rather, sub-word token) a vector. However, these vectors in BERT are contextual, allowing homographs to be properly distinguished. Furthermore, BERT is deeply bidirectional, with each latent vector in each layer depending on all latent vectors from the previous layer, unlike earlier works like GPT (which is forward-only) and ELMo (which has separate forward and backward LMs that are only combined at the end). In unidirectional LMs like GPT, the model is trained to predict the next token at each time step, which works because the states at each time step can only depend on previous states. (With ELMo, both the forward and backward models are trained independently in this way and optimized jointly.) However, in a deeply bidirectional network, a state $S^L_t$ for step $t$ and layer $L$ must depend on all states $S^{L-1}_{t'}$, each of which could, in turn, depend on $S^{L-2}_{t}$, allowing the network to “cheat” on the language modeling. To solve this problem, BERT uses a reconstruction task to recover masked tokens, which are never seen by the network.[15]
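The masked reconstruction task can be sketched as a data-preparation step: hide some tokens from the input and keep the originals as prediction targets. This is a simplified illustration, and the `mask_prob` default, the seeded generator, and the function name are assumptions; the paper's actual masking procedure has further details that are omitted here.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (inputs, labels): masked inputs plus the hidden originals as targets."""
    rng = random.Random(seed)
    inputs, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)   # the network never sees this token...
            labels.append(token)  # ...but is trained to recover it
        else:
            inputs.append(token)
            labels.append(None)   # no loss is computed at unmasked positions
    return inputs, labels


sentence = "the bank raised its interest rates".split()
inputs, labels = mask_tokens(sentence, mask_prob=0.5, seed=1)
print(inputs)
print(labels)
```

Because the target token is absent from the input at every layer, no amount of bidirectional mixing lets the network simply copy the answer.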

Honorable Mentions

Since the publication of BERT, there has been an explosion of other transformer-based language models. As they are all quite similar, I will list some of them here instead of as their own entries. Of course, since this field is so fast-moving, it is impossible to be comprehensive; moreover, many of these papers have yet to stand the test of time, and so it is difficult to conclude which papers will have the most impact.

- Deep contextualized word representations: The aforementioned ELMo paper. ELMo is arguably the first contextual text embedding model; however, BERT has become much more popular in practice.

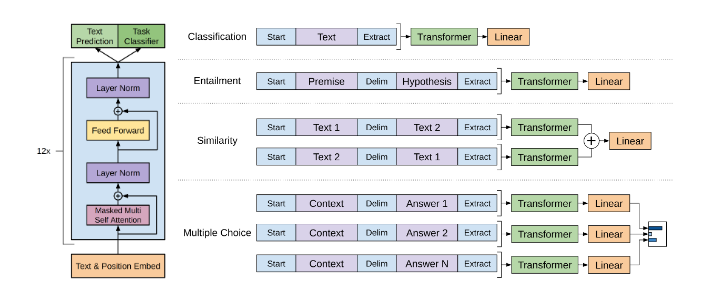

GPT used for different tasks ([Source](https://s3-us-west-2.amazonaws.com/openai-assets/research-covers/language-unsupervised/language_understanding_paper.pdf))

- Improving Language Understanding by Generative Pre-Training: This, the aforementioned GPT paper by OpenAI, explores the idea of using the same pretrained LM downstream for many different types of problems, with only minor fine-tuning. Especially considering the price of training a modern language model from scratch, this idea has become very pervasive.

- Language Models are Unsupervised Multitask Learners: GPT2, a follow-up to GPT by OpenAI, is in many senses simply a scaled-up version of GPT. It has more parameters (up to 1.5 billion!), more training data, and much better test perplexity across the board. It also exhibits an impressive level of generalization across datasets, and provides further evidence for the generalization capacity of extremely large networks. Its claim to fame, however, is its impressive text generating ability; I have a more in-depth discussion of text generation here that (I hope!) would be of interest. GPT2 has drawn some criticism for its release strategy, which some purport is designed to maximize hype.[16]

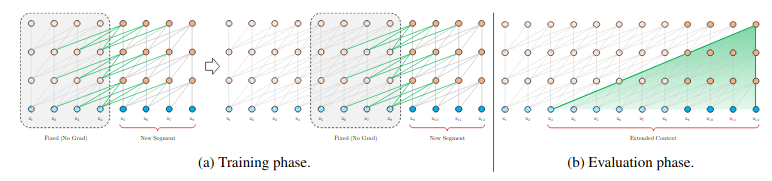

Transformer-XL context ([Source](https://arxiv.org/abs/1901.02860))

- Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context: Transformer-based models have a fixed attention window, which prevents attending to longer-term context. Transformer-XL attempts to fix this by attending to some context from the previous window (albeit without propagating gradients, for computational feasibility), which allows a much longer effective attention window.

- XLNet: Generalized Autoregressive Pretraining for Language Understanding: XLNet solves the “cheating” dilemma that BERT faces in a different way. XLNet is unidirectional; however, tokens are permuted in an arbitrary order, taking advantage of transformers’ inbuilt invariance to input order. This allows the network to act effectively bidirectional while retaining the computational benefits of unidirectionality. XLNet also integrates ideas from Transformer-XL for a larger effective window.

- Neural Machine Translation of Rare Words with Subword Units: Better tokenization techniques have also been a core part of the recent boom in language modeling. These eliminate the necessity for out-of-vocab tokens by ensuring that all words are tokenizable in pieces.
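The subword idea in the last entry can be sketched as a byte-pair-encoding style merge loop: start from characters and repeatedly merge the most frequent adjacent pair. This is a minimal illustration on a hypothetical toy corpus; details such as end-of-word markers are left out, and the function name is an assumption.

```python
from collections import Counter


def learn_bpe(word_counts, num_merges):
    """Learn merge rules from a {word: frequency} dict, starting from characters."""
    vocab = {tuple(word): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, count in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += count
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        new_vocab = {}
        for symbols, count in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])  # fuse the pair
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            key = tuple(merged)
            new_vocab[key] = new_vocab.get(key, 0) + count
        vocab = new_vocab
    return merges, vocab


merges, vocab = learn_bpe({"hug": 10, "pug": 5, "hugs": 5}, num_merges=2)
print(merges)
print(vocab)
```

Since single characters always remain available as a fallback, any word built from known characters can be tokenized in pieces, so no out-of-vocab token is needed for it.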

2019

Deep Double Descent: Where Bigger Models and More Data Hurt

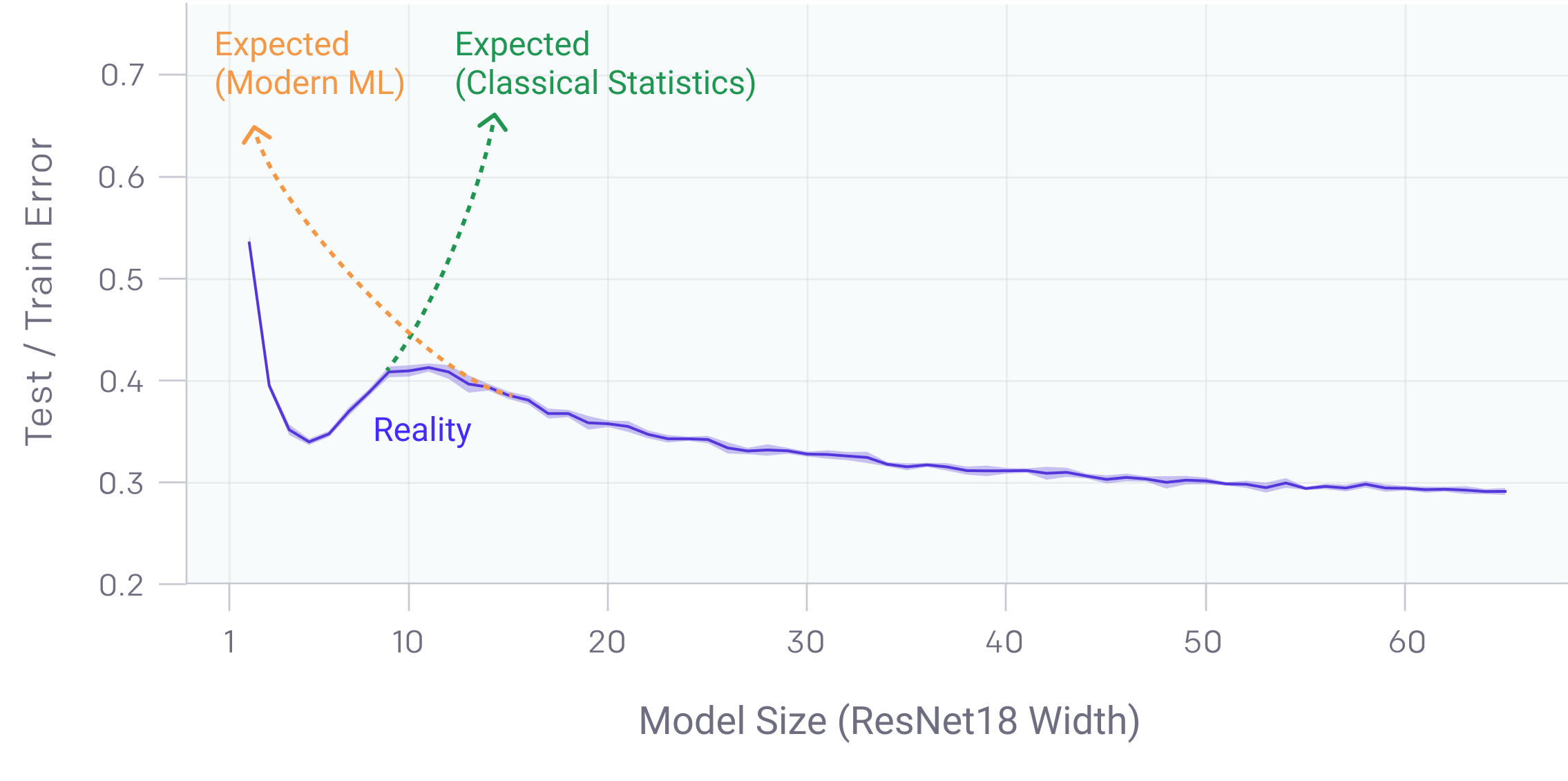

Deep Double Descent (Source)

The phenomenon of (Deep) Double Descent explored in this paper runs contrary to popular wisdom in both classical machine learning and modern Deep Learning. In classical machine learning, model complexity follows the bias-variance tradeoff. Too weak a model is unable to fully capture the structure of the data, while too powerful a model can overfit and capture spurious patterns that don’t generalize. Because of this, in classical machine learning it is expected that test error will decrease as models get larger, but then start to increase again once the models begin to overfit. In practice, however, in Deep Learning, models are very often massively overparameterized and yet still seem to improve on test performance with larger models. This conflict is the motivation behind (deep) double descent. Deep Double Descent expanded on the original Double Descent paper by Belkin et al. by showing empirically the effects of Double Descent on a much wider variety of Deep Learning models, and its applicability to not only the model size but also training time and dataset size.

By considering larger function classes, which contain more candidate predictors compatible with the data, we are able to find interpolating functions that have smaller norm and are thus “simpler”. Thus increasing function class capacity improves performance of classifiers. —Belkin et al. 2018

As the capacity of the models approaches the “interpolation threshold,” the demarcation between the classical ML and Deep Learning regimes, it becomes possible for gradient descent to find models that achieve near-zero training error, which are likely to be overfit. However, as the model capacity is increased even further, the number of different models that can achieve zero training error increases, and the likelihood that some of them fit the data smoothly (i.e. without overfitting) increases. Double Descent posits that gradient descent is more likely to find these smoother zero-training-error networks, which generalize well despite being overparameterized.[17]

The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks

A randomly-initialized, dense neural network contains a subnetwork that is initialized such that—when trained in isolation—it can match the test accuracy of the original network after training for at most the same number of iterations.

Another paper about the training characteristics of deep neural networks was the Lottery Ticket Hypothesis paper. The Lottery Ticket Hypothesis asserts that most of a network’s performance comes from a certain subnetwork due to a lucky initialization (hence the name, “lottery ticket,” to refer to these subnetworks), and that larger networks are more performant because of a higher chance of lottery tickets occurring. This not only allows us to prune the irrelevant weights (which is already well established in the literature), but also to retrain from scratch using only the “lottery ticket” weights, reset to their original initial values, which, surprisingly, obtains close to the original loss.
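The prune-then-reset step can be sketched in a few lines. This is a minimal illustration and not the paper's code: it assumes one-shot magnitude pruning over a flat list of weights, the function names are hypothetical, and the `initial` and `trained` values are made up for the example (no training actually happens here).

```python
def magnitude_mask(trained, keep_fraction):
    """Return a 0/1 mask that keeps the largest-magnitude trained weights."""
    n_keep = max(1, round(len(trained) * keep_fraction))
    ranked = sorted(range(len(trained)), key=lambda i: abs(trained[i]), reverse=True)
    kept = set(ranked[:n_keep])
    return [1 if i in kept else 0 for i in range(len(trained))]

def reset_to_init(initial, mask):
    """Surviving weights return to their initial values; pruned weights are zeroed."""
    return [w0 if m else 0.0 for w0, m in zip(initial, mask)]

# Illustrative numbers only: weights at initialization and after (imagined) training.
initial = [0.10, -0.30, 0.05, 0.20, -0.15, 0.02]
trained = [0.90, -0.05, 0.01, -1.20, 0.40, 0.03]

mask = magnitude_mask(trained, keep_fraction=0.5)
ticket = reset_to_init(initial, mask)
print("mask:  ", mask)
print("ticket:", ticket)
```

The candidate “lottery ticket” is the pair of mask and initial values. Retraining would start from `ticket` and keep the masked-out positions at zero throughout.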

Conclusion and Future Prospects

The past decade has marked an incredibly fast-paced and innovative period in the history of AI, driven by the start of the Deep Learning Revolution, the Renaissance of gradient-based networks. Spurred in large part by the ever-increasing computing power available, neural networks have gotten much larger and thus more powerful, displacing traditional AI techniques across the board, from Computer Vision to Natural Language Processing. Neural networks do have their weaknesses though: they require a large amount of data to train, have inexplicable failure modes, and cannot generalize beyond individual tasks. As the massive improvements in the field have begun to make the limits of Deep Learning with respect to advancing AI apparent, attention has shifted towards a deeper understanding of Deep Learning. The next decade is likely to be marked by an increased understanding of many of the empirical characteristics of neural networks observed today. Personally, I am optimistic about the prospects of AI; Deep Learning is an invaluable tool in the toolkit of AI that brings us yet another step closer to understanding intelligence.

Here’s to a fruitful 2020’s.

-

This also helps clump similar papers together; if listed strictly chronologically, it would be much more difficult to follow developments along any particular thread of research. ↩︎

-

In practice, the derivative is simply set to an arbitrary value at zero. The discontinuity is more a theoretical concern than anything else. ↩︎

-

Interestingly, there are several other activation functions with very similar shapes: Swish and Mish, which can be written as $x\sigma(x)$ and $x\tanh(\ln(1 + e^x))$, respectively.

GELU, Swish, and Mish all follow the same basic pattern of $x$ times some sigmoidal function (in fact, GELU is often approximated as $x\sigma(1.702x)$). ↩︎
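To see how close these functions are, here is a small sketch using only the standard library. The Swish, Mish, and sigmoid-approximation formulas are the ones given in this footnote; the exact GELU is written as x times the standard normal CDF, which is its usual definition. The function names are my own.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def swish(x):
    return x * sigmoid(x)

def mish(x):
    # log1p(exp(x)) is softplus(x) = ln(1 + e^x)
    return x * math.tanh(math.log1p(math.exp(x)))

def gelu(x):
    # x * Phi(x), where Phi is the standard normal CDF
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def gelu_sigmoid_approx(x):
    return x * sigmoid(1.702 * x)

for x in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(f"x={x:+.1f}  swish={swish(x):+.4f}  mish={mish(x):+.4f}  "
          f"gelu={gelu(x):+.4f}  approx={gelu_sigmoid_approx(x):+.4f}")
```

All three are zero at the origin, dip slightly below zero for negative inputs, and approach the identity for large positive inputs, which is the shared "x times a sigmoidal gate" shape.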

-

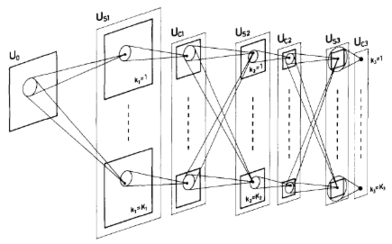

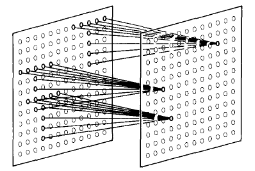

![Layers in a neocognitron. (<a href='https://www.rctn.org/bruno/public/papers/Fukushima1980.pdf'>Source</a>)]() Layers in a neocognitron. (Source)…but not the first CNN-like network ever; that honor would go to the Neocognitron from 1980 (though the preliminary report was published in 1979).

Layers in a neocognitron. (Source)…but not the first CNN-like network ever; that honor would go to the Neocognitron from 1980 (though the preliminary report was published in 1979).![Connections between layers in a neocognitron. (<a href='https://www.rctn.org/bruno/public/papers/Fukushima1980.pdf'>Source</a>)]() Connections between layers in a neocognitron. (Source)The Neocognitron bears striking resemblance to a CNN; the neurons are arranged in layers with multiple filters (called S-planes in the paper) per layer and connected locally. ↩︎

Connections between layers in a neocognitron. (Source)The Neocognitron bears striking resemblance to a CNN; the neurons are arranged in layers with multiple filters (called S-planes in the paper) per layer and connected locally. ↩︎ -

The reference C implementation of word2vec actually does things slightly differently from the description in the paper by having separate vectors for context and focus words. ↩︎

-

GANs for other modalities do exist, though! GANs have been applied to audio, unpaired image-to-image translation, and even text (though difficulties exist due to the discrete nature of text; a good overview of the challenges can be found here) ↩︎

-

The EM distance is, intuitively, the amount of “work” (mass * distance) it would take to move a certain volume of material to another arrangement (hence the name) if the optimal transportation strategy were used. ↩︎
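In one dimension the optimal transport plan is easy to compute, which makes for a compact illustration. The sketch below assumes two histograms over the same unit-spaced bins with equal total mass; `emd_1d` is a hypothetical helper name, and this is not how WGAN estimates the distance in practice.

```python
def emd_1d(p, q):
    """Earth mover's distance between two histograms on the same unit-spaced bins.

    Assumes p and q have equal total mass. In 1D, the optimal plan just sweeps
    left to right, carrying any surplus (or deficit) across each bin boundary.
    """
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    work = 0.0
    carried = 0.0  # net mass that has to cross the boundary after the current bin
    for p_i, q_i in zip(p, q):
        carried += p_i - q_i
        work += abs(carried)  # mass * distance, with distance = 1 bin
    return work

print(emd_1d([1.0, 0.0, 0.0], [0.0, 0.0, 1.0]))
print(emd_1d([0.5, 0.5, 0.0], [0.0, 0.5, 0.5]))
```

Moving one unit of mass across two bins costs 2.0, while shifting half a unit across two bins costs 1.0, matching the "mass * distance" intuition.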

-

Want to put yourself to the test? whichfaceisreal.com lets you test yourself on distinguishing between GAN-generated and real images. It’s possible to tell very consistently if you know what to look for, but it’s still incredible that we’ve gone from images that only look real if you squint at them to images that only look fake if you squint at them. ↩︎

-

A recent paper, Reformer: The Efficient Transformer, could potentially solve this problem using locality-sensitive hashing to compute the attention matrix sparsely. ↩︎

-

The issue revolves mainly around how weight decay, which should be subtracted directly from the weights, is usually factored into the gradient instead. This doesn’t make a difference for optimizers without momentum, but for optimizers with momentum the gradients go through the momentum step before being applied to the weights, which means that previous weights will also affect the weight decay. A great discussion of this topic can be found here on fast.ai. ↩︎
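The difference is easiest to see on a single scalar weight. This is a minimal sketch using SGD with momentum rather than Adam, and the gradient sequence and hyperparameters (`lr`, `wd`, `momentum`) are arbitrary values chosen for illustration.

```python
def sgd_l2(w, grads, lr, wd, momentum):
    """Weight decay folded into the gradient (L2 regularization)."""
    v = 0.0
    for g in grads:
        v = momentum * v + (g + wd * w)  # decay term passes through the momentum buffer
        w = w - lr * v
    return w

def sgd_decoupled(w, grads, lr, wd, momentum):
    """Weight decay subtracted directly from the weights."""
    v = 0.0
    for g in grads:
        v = momentum * v + g             # momentum buffer sees only the loss gradient
        w = w - lr * v - lr * wd * w     # decay uses the current weight only
    return w

grads = [0.5, -0.2, 0.1]  # made-up gradients
for momentum in (0.0, 0.9):
    a = sgd_l2(1.0, grads, lr=0.1, wd=0.01, momentum=momentum)
    b = sgd_decoupled(1.0, grads, lr=0.1, wd=0.01, momentum=momentum)
    print(f"momentum={momentum}: l2={a:.6f}  decoupled={b:.6f}  diff={abs(a - b):.2e}")
```

With `momentum=0.0` the two updates are algebraically identical. With `momentum=0.9` they drift apart, because in the L2 version the decay terms from earlier weights stay in the momentum buffer.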

-

A good place to explore the SOTA over time is Papers With Code. ↩︎

-

“Confounding theoretically but successful empirically” is perhaps the modus operandi of Deep Learning. This is not necessarily a bad thing though; theoretical developments are often spurred by previously unexplainable empirical observations, and many have already shifted their focus to plumbing the theoretical side of deep networks. ↩︎

-

There is an amusing parallel to Deep Blue to be drawn here. In the 36th move of the second game of the 1997 rematch, Deep Blue famously made an unexpected move, which was lauded by analysts. Kasparov himself accused the Deep Blue team of cheating. Only in 2012 was it revealed that the move in question was actually made because Deep Blue was unable to decide and thus chose a random move as a fallback. ↩︎

-

Some more recent work has brought into question the effectiveness of RL-based NAS techniques entirely, suggesting that a simple random search can achieve similar results. ↩︎

-

An excellent in-depth exposition of BERT is “The Illustrated BERT, ELMo, and co.”. ↩︎

-

Despite having attracted a lot of attention recently, I have chosen not to give GPT2 its own entry because I have already covered GPT2 and related autoregressive models in the aforementioned text generation post. ↩︎

-

There is debate over the exact causes of this phenomenon; this LessWrong post discusses several potential explanations. ↩︎
