cuda原子操作进行直方图计算

首先在cpu上进行计算

#include <iostream> #include <chrono> #define DATA_LEN (100 * 1024 * 1024) inline int rnd(float x) { return static_cast<int>(x * rand() / RAND_MAX); } int main(void) { // cpu hist unsigned char* buffer = new unsigned char[DATA_LEN]; for (int i = 0; i < DATA_LEN; ++i) { buffer[i] = rnd(255); if (buffer[i] > 255) { std::cout << "error" << std::endl; } } int hist[256]; for (int i = 0; i < 256; ++i) { hist[i] = 0; } auto start = std::chrono::system_clock::now(); for (int i = 0; i < DATA_LEN; ++i) { hist[buffer[i]]++; } auto end = std::chrono::system_clock::now(); auto duration = std::chrono::duration_cast<std::chrono::microseconds>(end-start); std::cout << double(duration.count()) * std::chrono::microseconds::period::num / std::chrono::microseconds::period::den << "s" << std::endl; long hist_count = 0; for (int i = 0; i < 256; ++i) { hist_count += hist[i]; } if (hist_count != DATA_LEN) { std::cout << "error" << std::endl; } delete[] buffer; return 0; }

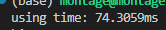

运行时间

接下来在gpu上使用全局内存进行计算

#include <iostream> #define DATA_LEN (100 * 1024 * 1024) inline int rnd(float x) { return static_cast<int>(x * rand() / RAND_MAX); } inline void check(cudaError_t call, const char* file, const int line) { if (call != cudaSuccess) { std::cout << "cuda error: " << cudaGetErrorName(call) << std::endl; std::cout << "error file: " << file << " error line: " << line << std::endl; std::cout << cudaGetErrorString(call) << std::endl; } } #define CHECK(call) (check(call, __FILE__, __LINE__)) __global__ void cal_hist(unsigned char* buffer, unsigned int* hist, long data_size) { int index = threadIdx.x + blockDim.x * blockIdx.x; int stride = blockDim.x * gridDim.x; while (index < data_size) { atomicAdd(&(hist[buffer[index]]), 1); index += stride; } } int main(void) { unsigned char* buffer = new unsigned char[DATA_LEN]; for (int i = 0; i < DATA_LEN; ++i) { buffer[i] = rnd(255); if (buffer[i] > 255) { std::cout << "error" << std::endl; } } cudaEvent_t start, end; CHECK(cudaEventCreate(&start)); CHECK(cudaEventCreate(&end)); CHECK(cudaEventRecord(start, 0)); unsigned int* d_hist; CHECK(cudaMalloc((void**)&d_hist, sizeof(unsigned int) * 256)); // new function CHECK(cudaMemset(d_hist, 0, sizeof(int))); unsigned char* d_buffer; CHECK(cudaMalloc((void**)&d_buffer, sizeof(unsigned char) * DATA_LEN)); CHECK(cudaMemcpy(d_buffer, buffer, sizeof(unsigned char) * DATA_LEN, cudaMemcpyHostToDevice)); cudaDeviceProp prop; CHECK(cudaGetDeviceProperties(&prop, 0)); int block_num = prop.multiProcessorCount; cal_hist<<<block_num, 256>>>(d_buffer, d_hist, DATA_LEN); unsigned int h_hist[256]; CHECK(cudaMemcpy(h_hist, d_hist, sizeof(unsigned int) * 256, cudaMemcpyDeviceToHost)); CHECK(cudaEventRecord(end, 0)); CHECK(cudaEventSynchronize(end)); float elapsed_time; CHECK(cudaEventElapsedTime(&elapsed_time, start, end)); std::cout << "using time: " << elapsed_time << "ms" << std::endl; long hist_count = 0; for (int i = 0; i <256; ++i) { hist_count += h_hist[i]; } std::cout << "histogram sum: " << hist_count << std::endl; for (int i = 0; i < DATA_LEN; ++i) { h_hist[buffer[i]]--; } for (int i = 0; i < 256; ++i) { if (h_hist[i] != 0) { std::cout << "cal error" << std::endl; } } CHECK(cudaEventDestroy(start)); CHECK(cudaEventDestroy(end)); CHECK(cudaFree(d_hist)); CHECK(cudaFree(d_buffer)); delete[] buffer; return 0; }

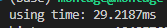

运行时间

这种做法要将所有待运算数据拷贝到gpu全局内存,然后直接在全局内存上进行读写操作。block数量的设置为处理器数量的二倍,这样能较好的发挥出gpu的性能。如果核函数分配的线程个数小于数组中元素的个数,就意味着一个线程要处理多条数据,此时可以使用跨步循环,跨步的步长stride我们就设置为核函数的线程数,即blockDim.x*gridDim.x,但这种方法并非最优解,把对全局内存的读写操作定义为原子操作就意味着读写全局内存这一过程中存在大量串行,这样反而会降低gpu性能,所以原子操作常常与共享内存结合起来使用,因为共享内存是block内部共享的,所以一个blcok内部的原子操作是串行的,而block和block之间是可以并行的,这样就可以优化gpu性能

使用共享内存原子操作代码如下,因为主函数部分没变,所以只放上核函数代码

__global__ void cal_hist(unsigned char* buffer, unsigned int* hist, long data_size) { // init __shared__ unsigned int temp[256]; temp[threadIdx.x] = 0; __syncthreads(); int index = threadIdx.x + blockIdx.x * blockDim.x; int stride = blockDim.x * gridDim.x; while (index < data_size) { atomicAdd(&temp[buffer[index]], 1); index += stride; } __syncthreads(); atomicAdd(&(hist[threadIdx.x]), temp[threadIdx.x]); }

首先将所有共享内存初始化为0,然后去做跨步循环,在执行写操作时候在共享内存上进行原子操作,然后将所有共享内存的对应位置相加,得到最终的直方图结果

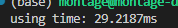

运行时间为29.22ms

无情的摸鱼机器