Implementing AlexNet in Keras (CIFAR-10 dataset)

Paper link

https://proceedings.neurips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf

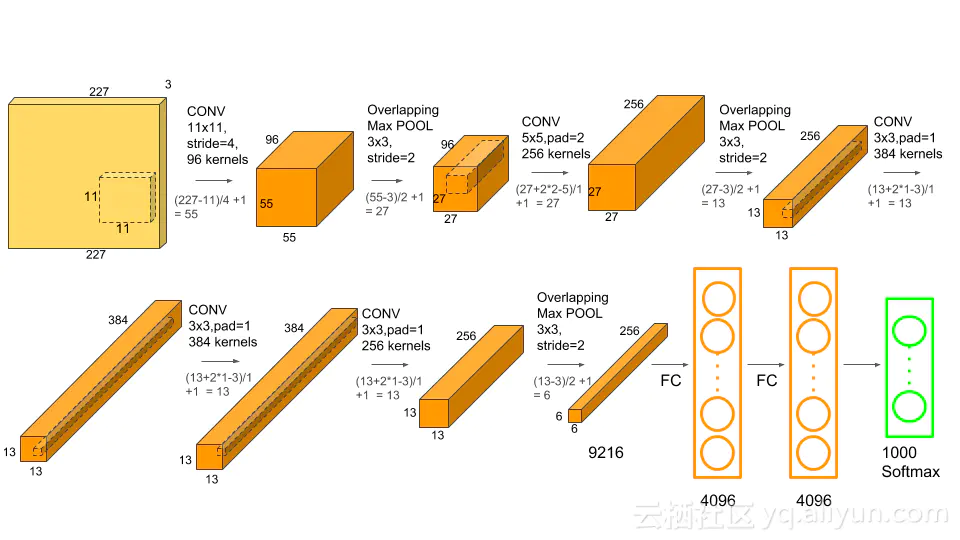

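Network architecture

The diagram in the paper is a bit cluttered; this one is clearer.

The CIFAR-10 dataset can be downloaded directly from its official website. Keras also ships a built-in loader, which works much like the one for MNIST.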

    from tensorflow.keras.datasets import cifar10
    # Public utils module, aliased to np_utils; it provides to_categorical
    from tensorflow.keras import utils as np_utils
    import numpy as np
    from tensorflow.keras import layers
    from tensorflow.keras import models, optimizers
    import matplotlib.pyplot as plt
    import cv2

With the imports in place, load the dataset and take a look at it.

    (x_train, y_train), (x_test, y_test) = cifar10.load_data()
    print(x_train.shape)
    print(y_train.shape)

    # One-hot encode the integer class labels
    y_train = np_utils.to_categorical(y_train)
    y_test = np_utils.to_categorical(y_test)
    # x_train = x_train.astype('float32') / 255
    # x_test = x_test.astype('float32') / 255
    print(y_train.shape, y_test.shape)

    x_train_mean = np.mean(x_train)
    x_test_mean = np.mean(x_test)
    # x_train -= x_train_mean
    # x_test -= x_test_mean

    # Show the first training image, then the same image upscaled to 224x224
    plt.imshow(x_train[0])
    tt = cv2.resize(x_train[0], (224, 224), interpolation=cv2.INTER_NEAREST)
    plt.imshow(tt)

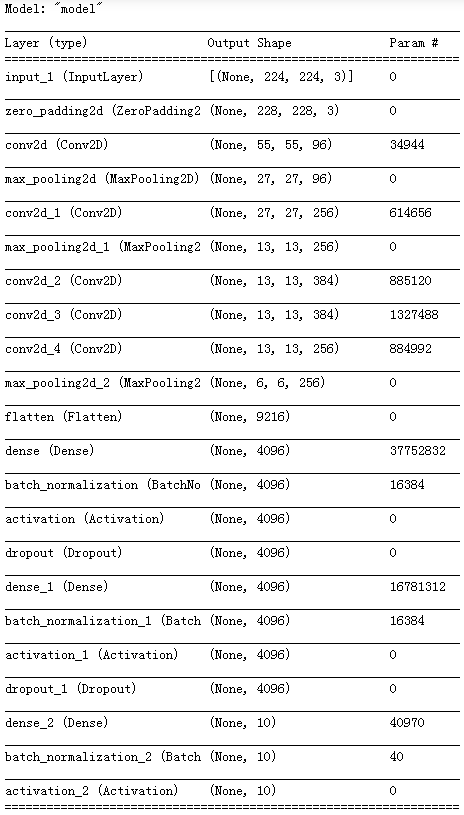

Define the network model

    def Alexnet():
        inp = layers.Input(shape=(224, 224, 3))

        # Convolutional layers; the trailing comments give the output width/height
        L1 = layers.ZeroPadding2D((2, 2))(inp)
        L1 = layers.Conv2D(96, (11, 11), strides=(4, 4), padding='valid', activation='relu')(L1)  # 55
        L1 = layers.MaxPooling2D((3, 3), strides=(2, 2))(L1)  # 27
        L2 = layers.Conv2D(256, (5, 5), activation='relu', padding='same')(L1)  # 27
        L2 = layers.MaxPooling2D((3, 3), strides=(2, 2))(L2)  # 13
        L3 = layers.Conv2D(384, (3, 3), activation='relu', padding='same')(L2)  # 13
        L4 = layers.Conv2D(384, (3, 3), activation='relu', padding='same')(L3)  # 13
        L5 = layers.Conv2D(256, (3, 3), activation='relu', padding='same')(L4)  # 13
        L5 = layers.MaxPooling2D((3, 3), strides=(2, 2))(L5)  # 6

        # Fully connected layers
        fc = layers.Flatten()(L5)
        fc1 = layers.Dense(4096)(fc)
        fc1 = layers.BatchNormalization()(fc1)
        fc1 = layers.Activation('relu')(fc1)
        fc1 = layers.Dropout(0.5)(fc1)
        fc2 = layers.Dense(4096)(fc1)
        fc2 = layers.BatchNormalization()(fc2)
        fc2 = layers.Activation('relu')(fc2)
        fc2 = layers.Dropout(0.5)(fc2)

        # 10-way softmax output, one unit per CIFAR-10 class
        pred = layers.Dense(10)(fc2)
        pred = layers.BatchNormalization()(pred)
        pred = layers.Activation('softmax')(pred)

        model = models.Model(inp, pred)
        # learning_rate replaces the deprecated lr argument
        omz = optimizers.Adam(learning_rate=0.01)
        model.compile(optimizer=omz, loss='categorical_crossentropy', metrics=['acc'])
        model.summary()
        return model
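The feature-map sizes noted in the model's comments (55, 27, 13) can be checked with the usual output-size formula, floor((size + 2*pad - kernel) / stride) + 1. The following is a minimal sketch in plain Python; `conv_out` is a hypothetical helper name used only for illustration, not a Keras function.

```python
def conv_out(size, kernel, stride=1, pad=0):
    """Output width of a conv or pooling layer with symmetric zero padding."""
    return (size + 2 * pad - kernel) // stride + 1

# ZeroPadding2D((2, 2)) followed by an 11x11 'valid' conv with stride 4
conv1 = conv_out(224, kernel=11, stride=4, pad=2)
# Each 3x3 max pool with stride 2 shrinks the map; the 'same'-padded,
# stride-1 convolutions in between leave the size unchanged.
pool1 = conv_out(conv1, kernel=3, stride=2)
pool2 = conv_out(pool1, kernel=3, stride=2)
pool3 = conv_out(pool2, kernel=3, stride=2)
# Number of values that Flatten() hands to the first Dense layer
flat = pool3 * pool3 * 256

print(conv1, pool1, pool2, pool3, flat)
```

The last pooling layer leaves a 6x6x256 volume, so the first Dense layer receives 9216 inputs.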

CIFAR-10 images are small (32x32), while AlexNet expects 224x224 input, so every sample has to be resized. This takes a while because there is a lot of data.

    from tensorflow.keras.preprocessing import image
    import cv2

    # Preallocate the resized arrays (np.zeros defaults to float64, so these are large)
    X_TRAIN = np.zeros((x_train.shape[0], 224, 224, x_train.shape[3]))
    X_TEST = np.zeros((x_test.shape[0], 224, 224, x_test.shape[3]))
    print(X_TRAIN.shape, X_TEST.shape)

    # Upscale every image to 224x224 with nearest-neighbour interpolation
    for i in range(x_train.shape[0]):
        X_TRAIN[i] = cv2.resize(x_train[i], (224, 224), interpolation=cv2.INTER_NEAREST)
    for i in range(x_test.shape[0]):
        X_TEST[i] = cv2.resize(x_test[i], (224, 224), interpolation=cv2.INTER_NEAREST)
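Besides taking time, resizing everything up front also takes a lot of memory. The rough estimate below is a sketch in plain Python, assuming the standard CIFAR-10 split of 50000 training and 10000 test images; `array_gib` is a hypothetical helper, not part of any library.

```python
def array_gib(n_images, height, width, channels, bytes_per_value):
    """Size of a dense image array in GiB."""
    return n_images * height * width * channels * bytes_per_value / 2**30

# np.zeros defaults to float64, i.e. 8 bytes per value
train_f64 = array_gib(50000, 224, 224, 3, 8)
test_f64 = array_gib(10000, 224, 224, 3, 8)
# The same training data stored as uint8 (1 byte per value), for comparison
train_u8 = array_gib(50000, 224, 224, 3, 1)

print(f"train float64: {train_f64:.1f} GiB")
print(f"test  float64: {test_f64:.1f} GiB")
print(f"train uint8:   {train_u8:.1f} GiB")
```

The float64 training array alone comes to roughly 56 GiB. If that does not fit in RAM, one option (an assumption, not something the original code does) is to pass `dtype=np.uint8` to `np.zeros`, or to resize batches on the fly instead of materialising the whole array.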

Build the data generators

See the official documentation for how to use ImageDataGenerator:

https://keras.io/zh/preprocessing/image/

    train_data_gen = image.ImageDataGenerator()
    train_gen = train_data_gen.flow(X_TRAIN, y_train, batch_size=32)
    test_data_gen = image.ImageDataGenerator()
    test_gen = test_data_gen.flow(X_TEST, y_test, batch_size=32)

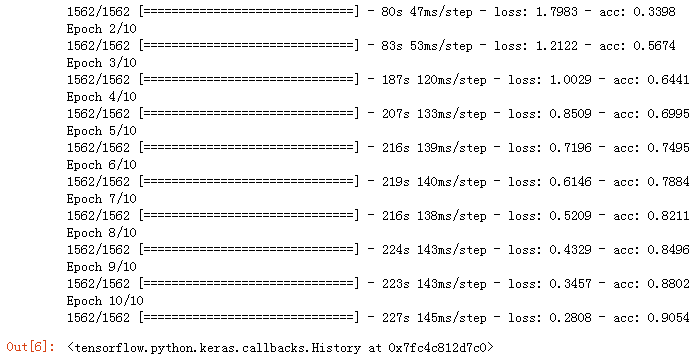

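Start training

    model1 = Alexnet()
    # fit accepts generators directly; fit_generator is deprecated.
    # steps_per_epoch must be an integer, hence the floor division.
    model1.fit(train_gen, steps_per_epoch=50000 // 32, epochs=10)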

50000 is the number of training samples; it can also be written as x_train.shape[0].
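Since 50000 is not a multiple of 32, the number of steps per epoch depends on whether the last, partial batch is counted. A small sketch of the arithmetic (the variable names are illustrative only):

```python
import math

n_train = 50000   # number of training samples, i.e. x_train.shape[0]
batch_size = 32

steps_floor = n_train // batch_size            # full batches only
steps_ceil = math.ceil(n_train / batch_size)   # also counts the final partial batch
leftover = n_train - steps_floor * batch_size  # samples in that partial batch

print(steps_floor, steps_ceil, leftover)
```

Rounding down skips the last 16 samples in each pass, while rounding up covers every sample once per epoch.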

Model structure:

Training results

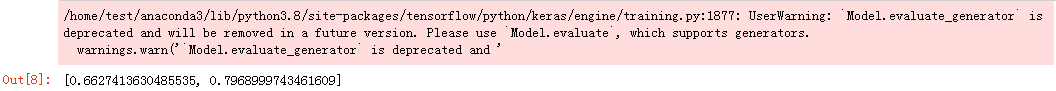

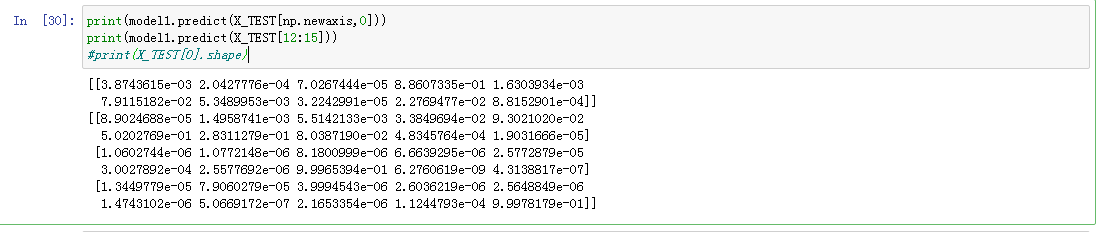

Evaluate on the test set

    # evaluate accepts generators directly; evaluate_generator is deprecated
    model1.evaluate(test_gen)

The model has overfit. Adding a validation set and callbacks.EarlyStopping during training should improve the results.
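One way to try this is sketched below. It is an untested suggestion, not what was run for this post: `fit_with_early_stopping` is a hypothetical helper, and the patience of 3 epochs and the 10% validation split are assumed values.

```python
def fit_with_early_stopping(model, x, y, epochs=10, batch_size=32,
                            patience=3, val_fraction=0.1):
    """Train with a held-out validation split and stop once val_loss stalls."""
    # Imported lazily so that defining this helper does not require TensorFlow
    from tensorflow.keras import callbacks

    stopper = callbacks.EarlyStopping(
        monitor="val_loss",          # watch the validation loss
        patience=patience,           # epochs without improvement before stopping
        restore_best_weights=True,   # roll back to the best epoch seen
    )
    return model.fit(
        x, y,
        validation_split=val_fraction,  # hold out the last fraction of the arrays
        epochs=epochs,
        batch_size=batch_size,
        callbacks=[stopper],
    )
```

Here `validation_split` only works with in-memory arrays, so the call would be `fit_with_early_stopping(model1, X_TRAIN, y_train)` rather than passing `train_gen`.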

Whatever, it works well enough.

By the way, PyTorch next door has an official version; I could not find a TensorFlow one on GitHub.

https://pytorch.org/hub/pytorch_vision_alexnet/

Signed, a ruthless slacking-off machine