本篇文章主要针对《周志华西瓜书》、《南瓜书》的笔记总结,思路梳理。

线性模型:属性的线性组合

\[f(\boldsymbol{x})=w_{1} x_{1}+w_{2} x_{2}+\ldots+w_{d} x_{d}+b=\omega^Tx+b

\]

蕴含的基本思想:

数据集的表示:

- 数据集 \(D = {(x^{(1)},y^{(1)}),(x^{(2)},y^{(2)},\dots,(x^{(i)},y^{(i)}))}\) 用右上角代表样本

- 对离散属性的处理

- 有序属性:插值离散化 (例如:高,中,低,可转化为 {1.0,0.5,0.0} )

- 无序属性:one-hot 化(例如:西瓜,南瓜,冬瓜,可转化为 (0,0,1),(0,1,0),(1,0,0) )

单元线性回归:

对于回归问题:目标就是让 \(Loss function\) 最小化

\(Loss \ function:\) 最小二乘误差

\[\begin{aligned}\left(w^{*}, b^{*}\right) &=\underset{(w, b)}{\arg \min } \sum_{i=1}^{m}\left(f\left(x_{i}\right)-y_{i}\right)^{2} \\ &=\underset{(w, b)}{\arg \min } \sum_{i=1}^{m}\left(y_{i}-w x_{i}-b\right)^{2} \end{aligned}

\]

先对\(b\)求偏导:

\[2\sum_{i=1}^m(y_i-\omega x_i-b)(-1)=0\\

\Rightarrow b={} \frac{1}{m} \sum_{i=1}^{m}\left(y_{i}-w x_{i}\right)

\]

再对\(\omega\)求偏导:

\[\begin{aligned} 0 &=w \sum_{i=1}^{m} x_{i}^{2}-\sum_{i=1}^{m}\left(y_{i}-b\right) x_{i} \\

\Rightarrow & w \sum_{i=1}^{m} x_{i}^{2}\sum_{i=1}^{m} y_{i} x_{i}-\sum_{i=1}^{m} b x_{i} \end{aligned}\\

\]

将\(b\)代入上式中

\[\Rightarrow w \sum_{i=1}^{m} x_{i}^{2}=\sum_{i=1}^{m} y_{i} x_{i}-\sum_{i=1}^{m}(\bar{y}-w \bar{x}) x_{i}\\

\Rightarrow w\left(\sum_{i=1}^{m} x_{i}^{2}-\bar{x} \sum_{i=1}^{m} x_{i}\right)=\sum_{i=1}^{m} y_{i} x_{i}-\bar{y} \sum_{i=1}^{m} x_{i} \\

\Rightarrow w=\frac{\sum_{i=1}^{m} y_{i} x_{i}-\bar{y} \sum_{i=1}^{m} x_{i}}{\sum_{i=1}^{m} x_{i}^{2}-\bar{x} \sum_{i=1}^{m} x_{i}}

\]

由以下两个等式可以转换为西瓜书上的公式:【技巧】

\[\begin{aligned}

\bar{y} \sum_{i=1}^{m} x_{i} ={} & \frac{1}{m} \sum_{i=1}^{m} y_{i} \sum_{i=1}^{m} x_{i}=\bar{x} \sum_{i=1}^{m} y_{i} \\

\bar{x} \sum_{i=1}^{m} x_{i} ={} & \frac{1}{m} \sum_{i=1}^{m} x_{i} \sum_{i=1}^{m} x_{i}=\frac{1}{m}\left(\sum_{i=1}^{m} x_{i}\right)^{2}

\end{aligned}

\]

最终可得:

\[\Rightarrow w=\frac{\sum_{i=1}^{m} y_{i}\left(x_{i}-\bar{x}\right)}{\sum_{i=1}^{m} x_{i}^{2}-\frac{1}{m}\left(\sum_{i=1}^{m} x_{i}\right)^{2}}

\]

可以求解得:\(\omega\) 和 \(b\) 最优解的闭式解

\[\begin{aligned}

w={} &\frac{\sum_{i=1}^{m} y_{i}\left(x_{i}-\bar{x}\right)}{\sum_{i=1}^{m} x_{i}^{2}-\frac{1}{m}\left(\sum_{i=1}^{m} x_{i}\right)^{2}}\\

b={} &\frac{1}{m} \sum_{i=1}^{m}\left(y_{i}-w x_{i}\right)

\end{aligned}

\]

进一步的可以将\(\omega\)向量化【方便编程】

将\(\frac{1}{m}\left(\sum_{i=1}^{m} x_{i}\right)^{2}=\bar{x} \sum_{i=1}^{m} x_{i}\)代入分母可得:

\[\begin{aligned} w &=\frac{\sum_{i=1}^{m} y_{i}\left(x_{i}-\bar{x}\right)}{\sum_{i=1}^{m} x_{i}^{2}-\bar{x} \sum_{i=1}^{m} x_{i}} \\ &=\frac{\sum_{i=1}^{m}\left(y_{i} x_{i}-y_{i} \bar{x}\right)}{\sum_{i=1}^{m}\left(x_{i}^{2}-x_{i} \bar{x}\right)} \end{aligned}

\]

由以下两个等式:【技巧】

\[\bar{y} \sum_{i=1}^{m} x_{i}=\bar{x} \sum_{i=1}^{m} y_{i}=\sum_{i=1}^{m} \bar{y} x_{i}=\sum_{i=1}^{m} \bar{x} y_{i}=m \bar{x} \bar{y}=\sum_{i=1}^{m} \bar{x} \bar{y} \\

\sum_{i=1}^mx_i\bar{x}=\bar{x} \sum_{i=1}^{m} x_{i}=\bar{x} \cdot m \cdot \frac{1}{m} \cdot \frac{1}{m} \cdot \sum_{i=1}^{m} x_{i}=m \bar{x}^{2}=\sum_{i=1}^{m} \bar{x}^{2}

\]

可将\(\omega\)的表达式化为:

\[\begin{aligned} w &=\frac{\sum_{i=1}^{m}\left(y_{i} x_{i}-y_{i} \bar{x}-x_{i} \bar{y}+\bar{x} \bar{y}\right)}{\sum_{i=1}^{m}\left(x_{i}^{2}-x_{i} \bar{x}-x_{i} \bar{x}+\bar{x}^{2}\right)} \\ &=\frac{\sum_{i=1}^{m}\left(x_{i}-\bar{x}\right)\left(y_{i}-\bar{y}\right)}{\sum_{i=1}^{m}\left(x_{i}-\bar{x}\right)^{2}} \end{aligned}

\]

令\(\boldsymbol{x}_{d}=\left(x_{1}-\bar{x}, x_{2}-\bar{x}, \ldots, x_{m}-\bar{x}\right)^{T}\),\(\boldsymbol{y}_{d}=\left(y_{1}-\bar{y}, y_{2}-\bar{y}, \dots, y_{m}-\bar{y}\right)^{T}\)

向量化结果为:

\[w=\frac{\boldsymbol{x}_{d}^{T} \boldsymbol{y}_{d}}{\boldsymbol{x}_{d}^{T} \boldsymbol{x}_{d}}

\]

多元线性回归

推导过程:对于单元线性回归,我们是先对\(b\)求偏导,再将\(b\)代入对\(\omega\)的偏导中

而对于多元无法通过偏导直接求解出\(Loss\ function\)的极值,改写表达式

\[f(x_{i})=\omega^T x_i +b = \beta^T X

\]

其中

\[\beta = (\omega,b) \\

X = (x_i,1)

\]

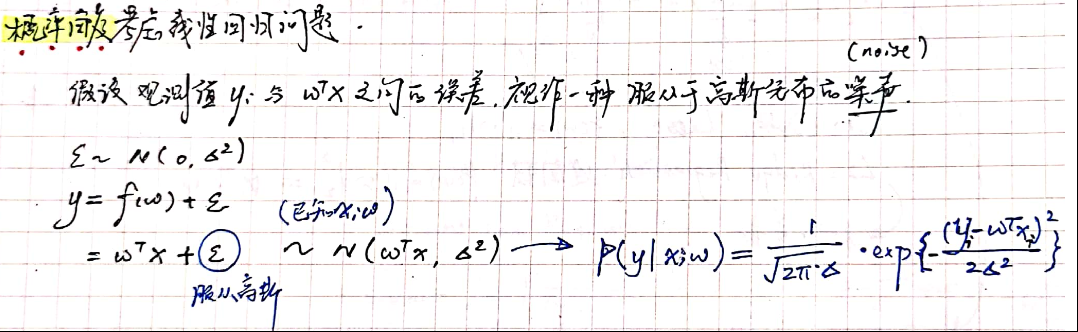

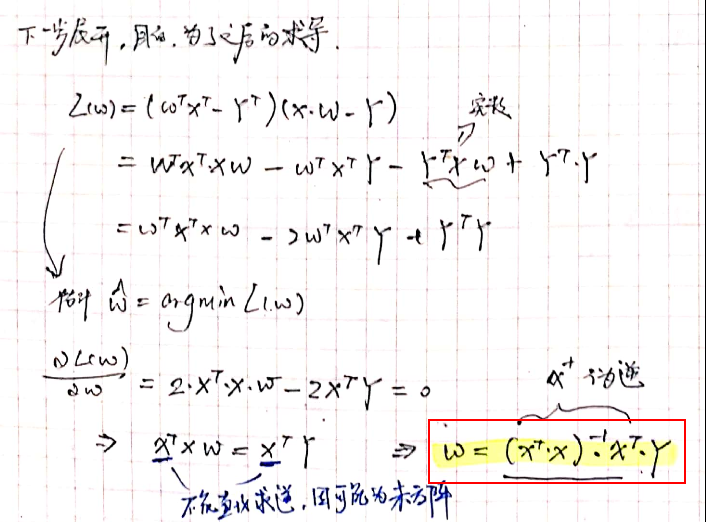

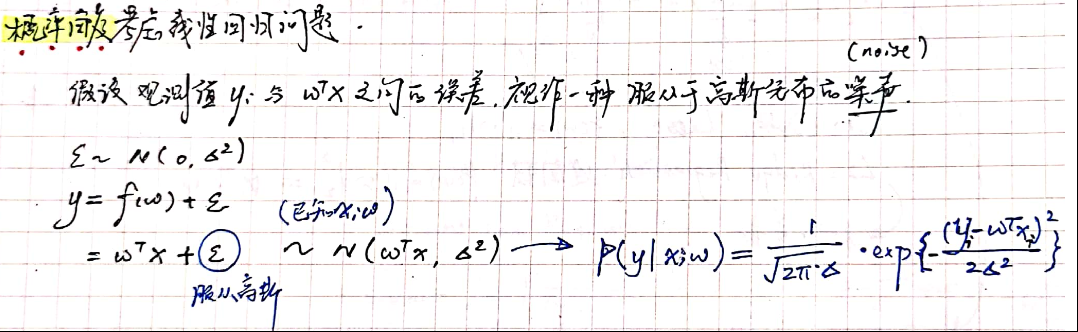

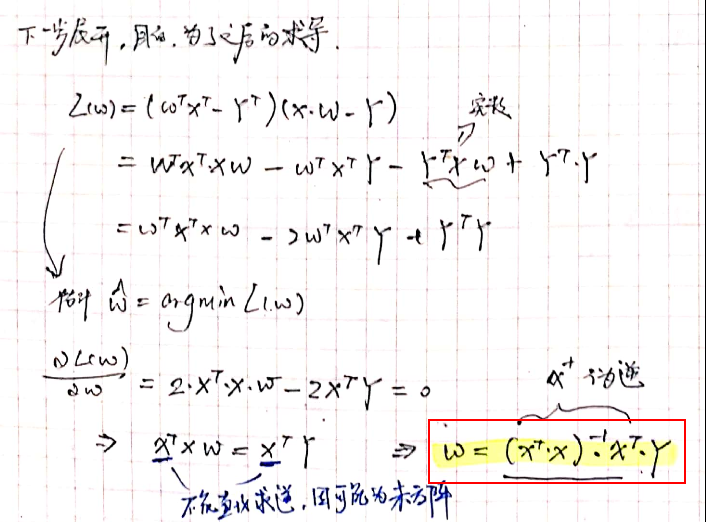

详细推导如下:(手写懒得手打了)

将损失函数求导:

对于上式中若\((X^TX)\)是满秩矩阵或正定矩阵,则存在逆矩阵

例如,生物信息学的基因芯片数据中常有成千上万个属性,但样例仅为几十或上百。此时可解出多个\(\hat{\omega}\),它们都能使均方误差最小化。常见的做法是引入正则项解决这问题。

高阶认知(来源于白板推导)

几何角度[LSE]

概率角度:(即从模型生成角度考虑问题)