Setting up and testing OVN on CentOS (Part 1: logical switch)

References:

https://github.com/openvswitch/ovs/blob/v2.10.0/Documentation/intro/install/general.rst

https://www.jianshu.com/p/1121fcd5ab82

# ovn-trace reference

https://www.man7.org/linux/man-pages/man8/ovn-trace.8.html

CentOS version: CentOS Linux release 7.8.2003 (Core)

Install the packages needed to build RPMs:

yum install -y @'Development Tools' rpm-build yum-utils

Install the build dependencies:

yum install -y git wget firewalld-filesystem net-tools desktop-file-utils groff graphviz selinux-policy-devel python-sphinx python-twisted-core python-zope-interface python-six libcap-ng-devel unbound unbound-devel openssl-devel gcc make python-devel openssl-devel kernel-devel kernel-debug-devel autoconf automake rpm-build redhat-rpm-config libtool python-openvswitch libpcap-devel numactl-devel dpdk-devel

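# Download the source and configure the build
git clone https://github.com/openvswitch/ovs.git
# Enter the cloned tree before checking out the release tag
cd ovs
git checkout v2.12.0
./boot.sh
./configure --prefix=/usr --localstatedir=/var --sysconfdir=/etc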

Build the RPMs:

make rpm-fedora RPMBUILD_OPT="--without check"
make rpm-fedora-ovn RPMBUILD_OPT="--without check"
make rpm-fedora-kmod RPMBUILD_OPT="--without check"

Install the RPMs:

cd rpm/rpmbuild/RPMS/x86_64
yum install ovn-* ../noarch/python-openvswitch-2.12.0-1.el7.noarch.rpm openvswitch-2.12.0-1.el7.x86_64.rpm -y
yum install openvswitch-kmod-2.12.0-1.el7.x86_64.rpm -y

# Start the OVS and OVN services

# On the master node
systemctl enable openvswitch
systemctl enable ovn-northd
systemctl enable ovn-controller
systemctl start openvswitch
systemctl start ovn-northd
systemctl start ovn-controller
systemctl status openvswitch
systemctl status ovn-northd
systemctl status ovn-controller

The slave node does not need to run the ovn-northd service.

# On the slave node

systemctl enable openvswitch

systemctl enable ovn-controller

systemctl start openvswitch

systemctl start ovn-controller

systemctl status openvswitch

systemctl status ovn-controller
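Reading three `systemctl status` screens per node is easy to get wrong, so a small loop over `systemctl is-active` may be handy. This is only a sketch: the function name `check_ovn_services` is made up for illustration, and the unit names are the ones used above.

```shell
# Hypothetical helper: print the state of each named systemd unit and
# return non-zero if any of them is not "active".
check_ovn_services() {
    rc=0
    for unit in "$@"; do
        # is-active exits non-zero for inactive units, so ignore its status
        # and look at the printed state instead.
        state=$(systemctl is-active "$unit" 2>/dev/null) || true
        [ -n "$state" ] || state=unknown
        printf '%s: %s\n' "$unit" "$state"
        [ "$state" = "active" ] || rc=1
    done
    return "$rc"
}

# Usage on the master node:
#   check_ovn_services openvswitch ovn-northd ovn-controller
# Usage on the slave node:
#   check_ovn_services openvswitch ovn-controller
```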

#master:192.168.1.200

#slaver:192.168.1.199

# Master node configuration
# Make ovsdb-server listen on ports 6641 and 6642 (this is what lets the OVS nodes connect to OVN)
ovn-nbctl set-connection ptcp:6641:192.168.1.200
ovn-sbctl set-connection ptcp:6642:192.168.1.200

ovs-vsctl set open . external-ids:ovn-remote=tcp:192.168.1.200:6642

ovs-vsctl set open . external-ids:ovn-encap-type=geneve

ovs-vsctl set open . external-ids:ovn-encap-ip=192.168.1.200

Port 6641 is the listening port of the OVN northbound database.

Port 6642 is the listening port of the OVN southbound database.
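To confirm that both databases are really listening after `set-connection`, the output of `ss -ltn` can be checked. The sketch below assumes `ss` (from iproute2) is installed; the function name `port_is_listening` is hypothetical, and it reads the `ss` output from stdin so it can also be tried on saved output.

```shell
# Hypothetical helper: succeed if the given TCP port shows up as a local
# listening port in `ss -ltn` output read from stdin.
port_is_listening() {
    port=$1
    # Column 4 of `ss -ltn` is "Local Address:Port"; match a trailing :PORT.
    awk -v p="$port" 'NR > 1 && $4 ~ (":" p "$") { found = 1 } END { exit !found }'
}

# Usage on the master node:
#   ss -ltn | port_is_listening 6641 && echo "northbound DB is listening"
#   ss -ltn | port_is_listening 6642 && echo "southbound DB is listening"
```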

# Slave node configuration

ovs-vsctl set open . external-ids:ovn-remote=tcp:192.168.1.200:6642

ovs-vsctl set open . external-ids:ovn-encap-type=geneve

ovs-vsctl set open . external-ids:ovn-encap-ip=192.168.1.199
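The three settings are identical on every chassis except for the encapsulation IP, which must be the node's own address. As a sketch, the commands can be generated from the two IPs; the function name `chassis_config_cmds` is an assumption for illustration, and it only prints the commands rather than running them.

```shell
# Hypothetical helper: print the ovs-vsctl commands that register a chassis.
#   $1 = IP of the node hosting the southbound DB (the master)
#   $2 = this chassis's own tunnel endpoint IP
chassis_config_cmds() {
    sb_ip=$1
    encap_ip=$2
    printf 'ovs-vsctl set open . external-ids:ovn-remote=tcp:%s:6642\n' "$sb_ip"
    printf 'ovs-vsctl set open . external-ids:ovn-encap-type=geneve\n'
    printf 'ovs-vsctl set open . external-ids:ovn-encap-ip=%s\n' "$encap_ip"
}

# Usage (slave node): review the output, then pipe it to sh to apply it.
#   chassis_config_cmds 192.168.1.200 192.168.1.199
#   chassis_config_cmds 192.168.1.200 192.168.1.199 | sh
```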

# Check the status

[root@master ~]# ovs-vsctl show
2402b6df-301b-4e1b-a2d6-0b2c0ebaa56e
    Bridge br-int
        fail_mode: secure
        Port "ovn-e530c5-0"
            Interface "ovn-e530c5-0"
                type: geneve
                options: {csum="true", key=flow, remote_ip="192.168.1.199"}
        Port br-int
            Interface br-int
                type: internal
    ovs_version: "2.12.0"

[root@slaver ~]# ovs-vsctl show
f4461800-e732-40ab-84da-d106ef666041
    Bridge br-int
        fail_mode: secure
        Port "ovn-32b404-0"
            Interface "ovn-32b404-0"
                type: geneve
                options: {csum="true", key=flow, remote_ip="192.168.1.200"}
        Port br-int
            Interface br-int
                type: internal
    ovs_version: "2.12.0"
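In the output above, each node has one geneve port on br-int whose remote_ip is the other node. A quick way to list the tunnel peers is to pull the `remote_ip` values out of `ovs-vsctl show`. This is a sketch; the function name `tunnel_remote_ips` is hypothetical, and it reads the output from stdin.

```shell
# Hypothetical helper: print the remote_ip of every tunnel interface found
# in `ovs-vsctl show` output read from stdin, one per line.
tunnel_remote_ips() {
    sed -n 's/.*remote_ip="\([^"]*\)".*/\1/p'
}

# Usage:
#   ovs-vsctl show | tunnel_remote_ips
```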

Functional test

# Create a logical switch and test port connectivity

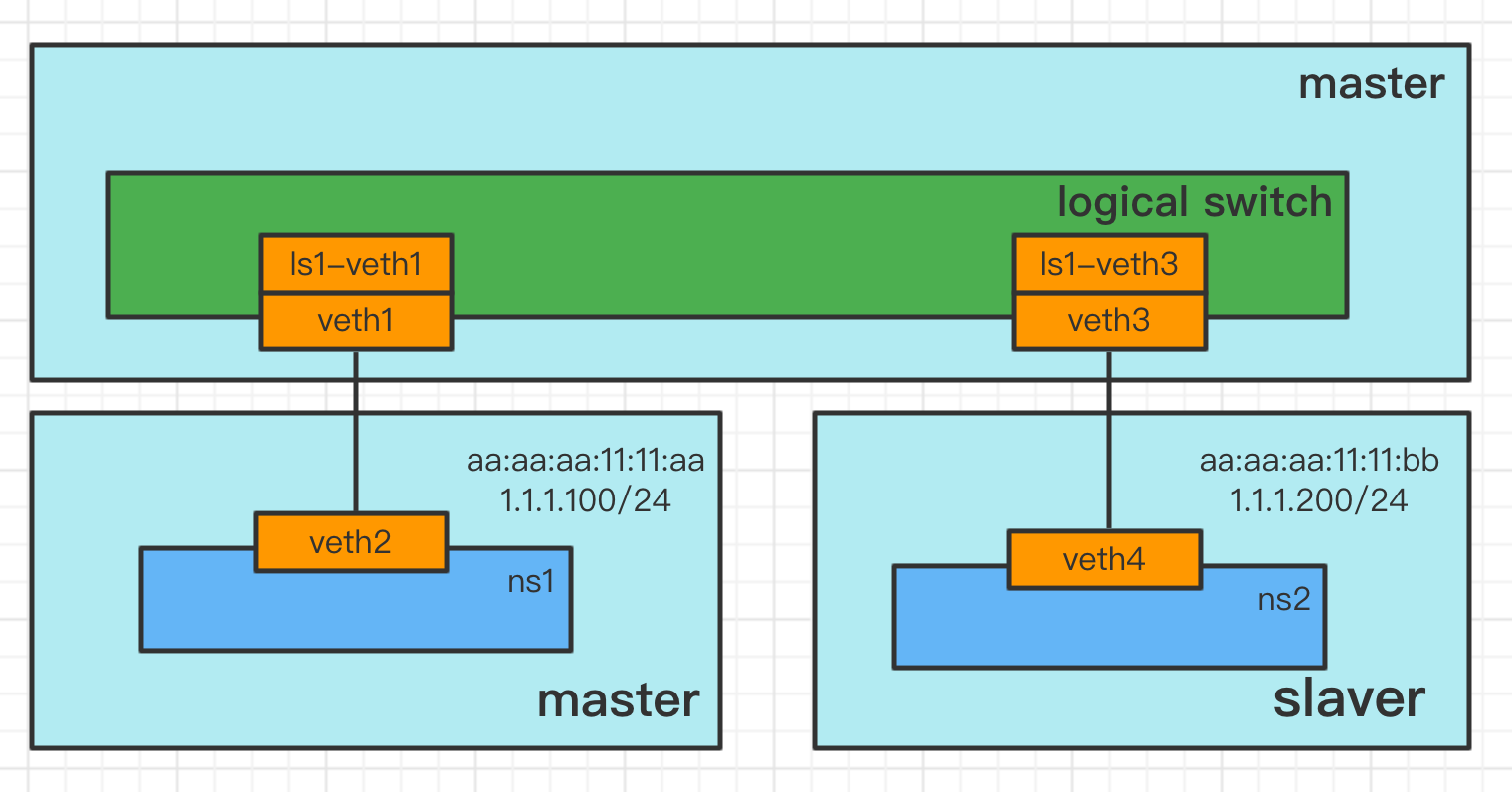

# On the master node
# Create the logical switch
ovn-nbctl ls-add ls1
# Create logical port ls1-veth1
ovn-nbctl lsp-add ls1 ls1-veth1
ovn-nbctl lsp-set-addresses ls1-veth1 "aa:aa:aa:11:11:aa 1.1.1.100"
ovn-nbctl lsp-set-port-security ls1-veth1 aa:aa:aa:11:11:aa
# Create logical port ls1-veth3
ovn-nbctl lsp-add ls1 ls1-veth3
ovn-nbctl lsp-set-addresses ls1-veth3 "aa:aa:aa:11:11:bb 1.1.1.200"
ovn-nbctl lsp-set-port-security ls1-veth3 aa:aa:aa:11:11:bb
# Inspect the result
[root@master ~]# ovn-nbctl show
switch 636b7ee7-08c7-4bb4-814c-e3db26cf194d (ls1)
    port ls1-veth1
        addresses: ["aa:aa:aa:11:11:aa 1.1.1.100"]
    port ls1-veth3
        addresses: ["aa:aa:aa:11:11:bb 1.1.1.200"]
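Each logical port takes the same three `ovn-nbctl` calls, so they can be wrapped in one function. The following is a sketch, not part of OVN: the name `add_lsp` and the `OVN_NBCTL` override variable are assumptions introduced here so the function can be dry-run with `echo`.

```shell
# Hypothetical helper: add a logical switch port with a fixed MAC and IP,
# then restrict it to that MAC with port security.
#   $1 = logical switch, $2 = port name, $3 = MAC, $4 = IP
add_lsp() {
    ls=$1; port=$2; mac=$3; ip=$4
    nbctl=${OVN_NBCTL:-ovn-nbctl}   # assumption: overridable for dry runs
    "$nbctl" lsp-add "$ls" "$port" &&
    "$nbctl" lsp-set-addresses "$port" "$mac $ip" &&
    "$nbctl" lsp-set-port-security "$port" "$mac"
}

# Usage on the master node:
#   ovn-nbctl ls-add ls1
#   add_lsp ls1 ls1-veth1 aa:aa:aa:11:11:aa 1.1.1.100
#   add_lsp ls1 ls1-veth3 aa:aa:aa:11:11:bb 1.1.1.200
# Dry run that only prints the arguments:
#   OVN_NBCTL=echo add_lsp ls1 ls1-veth1 aa:aa:aa:11:11:aa 1.1.1.100
```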

# Configure the network namespaces
# master:
ip netns add ns1
ip link add veth1 type veth peer name veth2
ifconfig veth1 up
ifconfig veth2 up
ip link set veth2 netns ns1
ip netns exec ns1 ip link set veth2 address aa:aa:aa:11:11:aa
ip netns exec ns1 ip addr add 1.1.1.100/24 dev veth2
ip netns exec ns1 ip link set veth2 up
ovs-vsctl add-port br-int veth1
ovs-vsctl set Interface veth1 external_ids:iface-id=ls1-veth1
ip netns exec ns1 ip addr show
# slaver:
ip netns add ns2
ip link add veth3 type veth peer name veth4
ifconfig veth3 up
ifconfig veth4 up
ip link set veth4 netns ns2
ip netns exec ns2 ip link set veth4 address aa:aa:aa:11:11:bb
ip netns exec ns2 ip addr add 1.1.1.200/24 dev veth4
ip netns exec ns2 ip link set veth4 up
ovs-vsctl add-port br-int veth3
ovs-vsctl set Interface veth3 external_ids:iface-id=ls1-veth3
ip netns exec ns2 ip addr show
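The step that ties an OVS interface to a logical port is the `external_ids:iface-id` setting, so it is worth checking before testing connectivity. The sketch below reads the value back with `ovs-vsctl get`; the function name `iface_bound_to` and the `OVS_VSCTL` override variable are assumptions for illustration. Quotes are stripped because `ovs-vsctl get` may or may not quote the string.

```shell
# Hypothetical helper: succeed if the OVS interface carries the expected
# iface-id, i.e. it is mapped to the intended logical switch port.
#   $1 = OVS interface name, $2 = expected logical port name
iface_bound_to() {
    iface=$1; expected=$2
    vsctl=${OVS_VSCTL:-ovs-vsctl}   # assumption: overridable for testing
    actual=$("$vsctl" get Interface "$iface" external_ids:iface-id | tr -d '"')
    [ "$actual" = "$expected" ]
}

# Usage:
#   iface_bound_to veth1 ls1-veth1 && echo "veth1 is bound"   # on master
#   iface_bound_to veth3 ls1-veth3 && echo "veth3 is bound"   # on slaver
```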

# Verify connectivity
[root@slaver ~]# ip netns exec ns2 ping 1.1.1.100
PING 1.1.1.100 (1.1.1.100) 56(84) bytes of data.
64 bytes from 1.1.1.100: icmp_seq=1 ttl=64 time=0.207 ms
64 bytes from 1.1.1.100: icmp_seq=2 ttl=64 time=0.220 ms
64 bytes from 1.1.1.100: icmp_seq=3 ttl=64 time=0.242 ms

# ARP request: flow table analysis
# Analyze with the ovs-appctl ofproto/trace tool
# ovs-appctl ofproto/trace br-int in_port=veth3,arp,arp_tpa=1.1.1.100,arp_op=1,dl_src=aa:aa:aa:11:11:bb,arp_sha=aa:aa:aa:11:11:bb

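arp_op: 1 = ARP request, 2 = ARP reply

dl_src: source MAC address in the packet's Ethernet header

arp_sha: sender hardware address (source MAC) carried inside the ARP request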
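The flow description passed to `ofproto/trace` is just a comma-separated list of these fields, so it can be assembled from the port, the source MAC and the target IP. This is a sketch; the function name `arp_request_flow` is hypothetical.

```shell
# Hypothetical helper: build the flow description of an ARP request.
#   $1 = OVS ingress port, $2 = sender MAC, $3 = target IP being resolved
arp_request_flow() {
    in_port=$1; src_mac=$2; target_ip=$3
    # arp_op=1 marks a request; dl_src and arp_sha are both the sender MAC.
    printf 'in_port=%s,arp,arp_tpa=%s,arp_op=1,dl_src=%s,arp_sha=%s\n' \
        "$in_port" "$target_ip" "$src_mac" "$src_mac"
}

# Usage on the slave node:
#   ovs-appctl ofproto/trace br-int "$(arp_request_flow veth3 aa:aa:aa:11:11:bb 1.1.1.100)"
```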

The output of the trace command is as follows:

[root@slaver ~]# ovs-appctl ofproto/trace br-int in_port=veth3,arp,arp_tpa=1.1.1.100,arp_op=1,dl_src=aa:aa:aa:11:11:bb,arp_sha=aa:aa:aa:11:11:bb
Flow: arp,in_port=2,vlan_tci=0x0000,dl_src=aa:aa:aa:11:11:bb,dl_dst=00:00:00:00:00:00,arp_spa=0.0.0.0,arp_tpa=1.1.1.100,arp_op=1,arp_sha=aa:aa:aa:11:11:bb,arp_tha=00:00:00:00:00:00

bridge("br-int")
----------------
 0. in_port=2, priority 100
    set_field:0x1->reg13
    set_field:0x2->reg11
    set_field:0x3->reg12
    set_field:0x1->metadata
    set_field:0x2->reg14
    resubmit(,8)
 8. reg14=0x2,metadata=0x1,dl_src=aa:aa:aa:11:11:bb, priority 50, cookie 0x4516809e
    resubmit(,9)
 9. metadata=0x1, priority 0, cookie 0x69a434c9
    resubmit(,10)
10. arp,reg14=0x2,metadata=0x1,dl_src=aa:aa:aa:11:11:bb,arp_sha=aa:aa:aa:11:11:bb, priority 90, cookie 0x9c758ab1
    resubmit(,11)
11. metadata=0x1, priority 0, cookie 0xd11d6a1c
    resubmit(,12)
12. metadata=0x1, priority 0, cookie 0x5ea99228
    resubmit(,13)
13. metadata=0x1, priority 0, cookie 0x31645736
    resubmit(,14)
14. metadata=0x1, priority 0, cookie 0x9e43efb6
    resubmit(,15)
15. metadata=0x1, priority 0, cookie 0xdf5f1f28
    resubmit(,16)
16. metadata=0x1, priority 0, cookie 0x910724b6
    resubmit(,17)
17. metadata=0x1, priority 0, cookie 0xf3ea9e05
    resubmit(,18)
18. metadata=0x1, priority 0, cookie 0x6a1b10cc
    resubmit(,19)
19. arp,metadata=0x1,arp_tpa=1.1.1.100,arp_op=1, priority 50, cookie 0x2af6bc00
    move:NXM_OF_ETH_SRC[]->NXM_OF_ETH_DST[]
     -> NXM_OF_ETH_DST[] is now aa:aa:aa:11:11:bb
    set_field:aa:aa:aa:11:11:aa->eth_src
    set_field:2->arp_op
    move:NXM_NX_ARP_SHA[]->NXM_NX_ARP_THA[]
     -> NXM_NX_ARP_THA[] is now aa:aa:aa:11:11:bb
    set_field:aa:aa:aa:11:11:aa->arp_sha
    move:NXM_OF_ARP_SPA[]->NXM_OF_ARP_TPA[]
     -> NXM_OF_ARP_TPA[] is now 0.0.0.0
    set_field:1.1.1.100->arp_spa
    move:NXM_NX_REG14[]->NXM_NX_REG15[]
     -> NXM_NX_REG15[] is now 0x2
    load:0x1->NXM_NX_REG10[0]
    resubmit(,32)
32. priority 0
    resubmit(,33)
33. reg15=0x2,metadata=0x1, priority 100
    set_field:0x1->reg13
    set_field:0x2->reg11
    set_field:0x3->reg12
    resubmit(,34)
34. priority 0
    set_field:0->reg0
    set_field:0->reg1
    set_field:0->reg2
    set_field:0->reg3
    set_field:0->reg4
    set_field:0->reg5
    set_field:0->reg6
    set_field:0->reg7
    set_field:0->reg8
    set_field:0->reg9
    resubmit(,40)
40. metadata=0x1, priority 0, cookie 0x7a33311a
    resubmit(,41)
41. metadata=0x1, priority 0, cookie 0x9aa791f4
    resubmit(,42)
42. metadata=0x1, priority 0, cookie 0x1ebbfc83
    resubmit(,43)
43. metadata=0x1, priority 0, cookie 0x5623deb1
    resubmit(,44)
44. metadata=0x1, priority 0, cookie 0xfe796bb7
    resubmit(,45)
45. metadata=0x1, priority 0, cookie 0x30d4ee1b
    resubmit(,46)
46. metadata=0x1, priority 0, cookie 0x1f61291
    resubmit(,47)
47. metadata=0x1, priority 0, cookie 0x6baadad7
    resubmit(,48)
48. metadata=0x1, priority 0, cookie 0x37250958
    resubmit(,49)
49. reg15=0x2,metadata=0x1,dl_dst=aa:aa:aa:11:11:bb, priority 50, cookie 0xa5f7f156
    resubmit(,64)
64. reg10=0x1/0x1,reg15=0x2,metadata=0x1, priority 100
    push:NXM_OF_IN_PORT[]
    set_field:0->in_port
    resubmit(,65)
65. reg15=0x2,metadata=0x1, priority 100
    output:2
    pop:NXM_OF_IN_PORT[]
     -> NXM_OF_IN_PORT[] is now 2

Final flow: arp,reg10=0x1,reg11=0x2,reg12=0x3,reg13=0x1,reg14=0x2,reg15=0x2,metadata=0x1,in_port=2,vlan_tci=0x0000,dl_src=aa:aa:aa:11:11:aa,dl_dst=aa:aa:aa:11:11:bb,arp_spa=1.1.1.100,arp_tpa=0.0.0.0,arp_op=2,arp_sha=aa:aa:aa:11:11:aa,arp_tha=aa:aa:aa:11:11:bb
Megaflow: recirc_id=0,eth,arp,in_port=2,vlan_tci=0x0000/0x1000,dl_src=aa:aa:aa:11:11:bb,dl_dst=00:00:00:00:00:00,arp_spa=0.0.0.0,arp_tpa=1.1.1.100,arp_op=1,arp_sha=aa:aa:aa:11:11:bb,arp_tha=00:00:00:00:00:00
Datapath actions: set(eth(src=aa:aa:aa:11:11:aa,dst=aa:aa:aa:11:11:bb)),set(arp(sip=1.1.1.100,tip=0.0.0.0,op=2/0xff,sha=aa:aa:aa:11:11:aa,tha=aa:aa:aa:11:11:bb)),3
This flow is handled by the userspace slow path because it:
  - Uses action(s) not supported by datapath.
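A trace this long is easier to follow once it is reduced to the sequence of OpenFlow tables the packet visited. The sketch below assumes the trace is in the tool's normal line-per-entry layout, where each table entry starts with its number followed by a dot; the function name `trace_tables` is hypothetical.

```shell
# Hypothetical helper: print, in order, the OpenFlow table numbers visited
# in `ovs-appctl ofproto/trace` output read from stdin.
trace_tables() {
    awk '$1 ~ /^[0-9]+\.$/ {
             sub(/\.$/, "", $1)          # "19." -> "19"
             printf "%s%s", sep, $1
             sep = " "
         }
         END { print "" }'
}

# Usage on the slave node:
#   ovs-appctl ofproto/trace br-int in_port=veth3,arp,arp_tpa=1.1.1.100,arp_op=1,dl_src=aa:aa:aa:11:11:bb,arp_sha=aa:aa:aa:11:11:bb | trace_tables
```

For the ARP trace shown here, this should list table 0, tables 8 through 19, 32 through 34, 40 through 49, and finally 64 and 65.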

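The flow table analysis shows that a cross-node ARP request is never encapsulated and sent to the other node. Instead, the ARP reply is constructed directly in the flow table (table 19 rewrites the request into a reply) and is sent straight back out of the source port.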

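In table 64, the flow works around an OVS design rule: traffic that came in on veth3 cannot be output straight back to veth3, because a packet whose output port equals its in_port is dropped. The flow rule therefore pushes NXM_OF_IN_PORT onto the stack, sets in_port to 0, lets table 65 output the packet, and then pops NXM_OF_IN_PORT to restore the original value. This way OVS does not drop the packet.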
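The push, zero, output, pop sequence can be illustrated with a toy model. This is not OVS code, only a sketch of the rule that an output to the packet's own in_port is skipped; the function names `output_allowed` and `hairpin_output` are made up for illustration.

```shell
# Toy model, not OVS code: an OpenFlow "output:N" is skipped when N equals
# the packet's current in_port.
output_allowed() {
    cur_in_port=$1; out_port=$2
    [ "$cur_in_port" != "$out_port" ]
}

# Mimics tables 64 and 65 in the trace: save in_port, zero it, output,
# then restore it.
hairpin_output() {
    in_port=$1; out_port=$2
    saved=$in_port                      # push:NXM_OF_IN_PORT[]
    in_port=0                           # set_field:0->in_port
    if output_allowed "$in_port" "$out_port"; then
        echo "output:$out_port"         # table 65: output:2
    else
        echo "drop"
    fi
    in_port=$saved                      # pop:NXM_OF_IN_PORT[]
}

# Usage:
#   output_allowed 2 2 || echo "plain output:2 would be dropped"
#   hairpin_output 2 2
```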

# ICMP packet: flow table analysis
[root@slaver ~]# ovs-appctl ofproto/trace br-int in_port=veth3,icmp,dl_dst=aa:aa:aa:11:11:aa,dl_src=aa:aa:aa:11:11:bb,nw_dst=1.1.1.100
Flow: icmp,in_port=2,vlan_tci=0x0000,dl_src=aa:aa:aa:11:11:bb,dl_dst=aa:aa:aa:11:11:aa,nw_src=0.0.0.0,nw_dst=1.1.1.100,nw_tos=0,nw_ecn=0,nw_ttl=0,icmp_type=0,icmp_code=0

bridge("br-int")
----------------
 0. in_port=2, priority 100
    set_field:0x1->reg13
    set_field:0x2->reg11
    set_field:0x3->reg12
    set_field:0x1->metadata
    set_field:0x2->reg14
    resubmit(,8)
 8. reg14=0x2,metadata=0x1,dl_src=aa:aa:aa:11:11:bb, priority 50, cookie 0x4516809e
    resubmit(,9)
 9. metadata=0x1, priority 0, cookie 0x69a434c9
    resubmit(,10)
10. metadata=0x1, priority 0, cookie 0xef0328e4
    resubmit(,11)
11. metadata=0x1, priority 0, cookie 0xd11d6a1c
    resubmit(,12)
12. metadata=0x1, priority 0, cookie 0x5ea99228
    resubmit(,13)
13. metadata=0x1, priority 0, cookie 0x31645736
    resubmit(,14)
14. metadata=0x1, priority 0, cookie 0x9e43efb6
    resubmit(,15)
15. metadata=0x1, priority 0, cookie 0xdf5f1f28
    resubmit(,16)
16. metadata=0x1, priority 0, cookie 0x910724b6
    resubmit(,17)
17. metadata=0x1, priority 0, cookie 0xf3ea9e05
    resubmit(,18)
18. metadata=0x1, priority 0, cookie 0x6a1b10cc
    resubmit(,19)
19. metadata=0x1, priority 0, cookie 0xbade306a
    resubmit(,20)
20. metadata=0x1, priority 0, cookie 0x635ad2c5
    resubmit(,21)
21. metadata=0x1, priority 0, cookie 0x501ccd49
    resubmit(,22)
22. metadata=0x1, priority 0, cookie 0x48021e7
    resubmit(,23)
23. metadata=0x1, priority 0, cookie 0xd219abd1
    resubmit(,24)
24. metadata=0x1, priority 0, cookie 0x18ca6e2a
    resubmit(,25)
25. metadata=0x1,dl_dst=aa:aa:aa:11:11:aa, priority 50, cookie 0x16a625f2
    set_field:0x1->reg15
    resubmit(,32)
32. reg15=0x1,metadata=0x1, priority 100
    load:0x1->NXM_NX_TUN_ID[0..23]
    set_field:0x1->tun_metadata0
    move:NXM_NX_REG14[0..14]->NXM_NX_TUN_METADATA0[16..30]
     -> NXM_NX_TUN_METADATA0[16..30] is now 0x2
    output:1
     -> output to kernel tunnel

Final flow: icmp,reg11=0x2,reg12=0x3,reg13=0x1,reg14=0x2,reg15=0x1,tun_id=0x1,metadata=0x1,in_port=2,vlan_tci=0x0000,dl_src=aa:aa:aa:11:11:bb,dl_dst=aa:aa:aa:11:11:aa,nw_src=0.0.0.0,nw_dst=1.1.1.100,nw_tos=0,nw_ecn=0,nw_ttl=0,icmp_type=0,icmp_code=0
Megaflow: recirc_id=0,eth,ip,tun_id=0/0xffffff,tun_metadata0=NP,in_port=2,vlan_tci=0x0000/0x1000,dl_src=aa:aa:aa:11:11:bb,dl_dst=aa:aa:aa:11:11:aa,nw_ecn=0,nw_frag=no
Datapath actions: set(tunnel(tun_id=0x1,dst=192.168.1.200,ttl=64,tp_dst=6081,geneve({class=0x102,type=0x80,len=4,0x20001}),flags(df|csum|key))),2
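In table 32 of the ICMP trace, the egress port (reg15 = 0x1) is written into tun_metadata0 and the ingress port (reg14 = 0x2) is moved into bits 16..30, which is why the Geneve option in the datapath actions carries the value 0x20001. As a sketch, the value can be split back into the two logical port keys with shell arithmetic; the function name `decode_geneve_opt` is hypothetical, and the bit layout is read off the `move` action in the trace.

```shell
# Hypothetical helper: split the 32-bit OVN Geneve option value into the
# logical ingress port (bits 16..30) and logical egress port (bits 0..15).
decode_geneve_opt() {
    opt=$(( $1 ))
    printf 'ingress=%d egress=%d\n' \
        $(( (opt >> 16) & 0x7fff )) \
        $(( opt & 0xffff ))
}

# Usage with the value from the trace:
#   decode_geneve_opt 0x20001
#   # prints: ingress=2 egress=1
```

The result matches the registers in the final flow: reg14=0x2 is the logical ingress port (ls1-veth3 on the slave node) and reg15=0x1 is the logical egress port on the master node.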