cephadm RBD block devices

1 Working directory

root@cephadm-deploy:~# cephadm shell

Inferring fsid 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

Using recent ceph image quay.io/ceph/ceph@sha256:bb6a71f7f481985f6d3b358e3b9ef64c6755b3db5aa53198e0aac38be5c8ae54

root@cephadm-deploy:/# mkdir ceph

root@cephadm-deploy:/# cd ceph/

2 Storage pool
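The steps in this section (create the pool, enable the rbd application on it, initialize it) can be collected into one helper. What follows is a minimal sketch rather than part of the original walkthrough: the names `run`, `setup_rbd_pool` and `DRY_RUN` are made up for illustration, and with `DRY_RUN=1` (the default here) the commands are only printed, so nothing touches the cluster until the switch is set to 0.

```shell
#!/usr/bin/env bash
# Sketch: prepare an RBD pool with the same commands used in sections 2.1-2.4.
# run, setup_rbd_pool and DRY_RUN are illustrative names, not part of Ceph.

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Usage: setup_rbd_pool <pool> <pg_num>
setup_rbd_pool() {
    local pool="$1" pg_num="$2"
    # pg_num is passed twice, as pg_num and pgp_num, like in section 2.1.
    run ceph osd pool create "$pool" "$pg_num" "$pg_num" || return 1
    run ceph osd pool application enable "$pool" rbd || return 1
    run rbd pool init -p "$pool"
}

setup_rbd_pool wgsrbd 64
```

Running it with `DRY_RUN=0` inside `cephadm shell` would execute the three commands in order and stop at the first failure.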

2.1 Create the storage pool

root@cephadm-deploy:/ceph# ceph osd pool create wgsrbd 64 64

pool 'wgsrbd' created

2.2 List the storage pool

root@cephadm-deploy:/ceph# ceph osd pool ls | grep wgsrbd

wgsrbd

2.3 Enable the rbd application on the pool

root@cephadm-deploy:/ceph# ceph osd pool application enable wgsrbd rbd

enabled application 'rbd' on pool 'wgsrbd'

2.4 Initialize the storage pool

root@cephadm-deploy:/ceph# rbd pool init -p wgsrbd

3 RBD images
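The figures that `rbd ls` and `rbd info` report in this section are related by simple arithmetic: `--size 3G` is 3 × 2^30 = 3221225472 bytes, `order 22` means objects of 2^22 bytes (4 MiB), and the image therefore spans 768 objects. A small sketch of that calculation follows; the function name `rbd_object_count` is hypothetical and exists only for this illustration.

```shell
#!/usr/bin/env bash
# Usage: rbd_object_count <size_in_bytes> <order>
# Prints how many objects of 2^order bytes are needed to cover the image,
# rounding up when the size is not a multiple of the object size.
rbd_object_count() {
    local size="$1" order="$2"
    local obj_size=$(( 1 << order ))
    echo $(( (size + obj_size - 1) / obj_size ))
}

# 3 GiB image with 4 MiB objects, as created in section 3.1.
rbd_object_count $(( 3 * 1024 * 1024 * 1024 )) 22   # prints 768
```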

3.1 Create an image

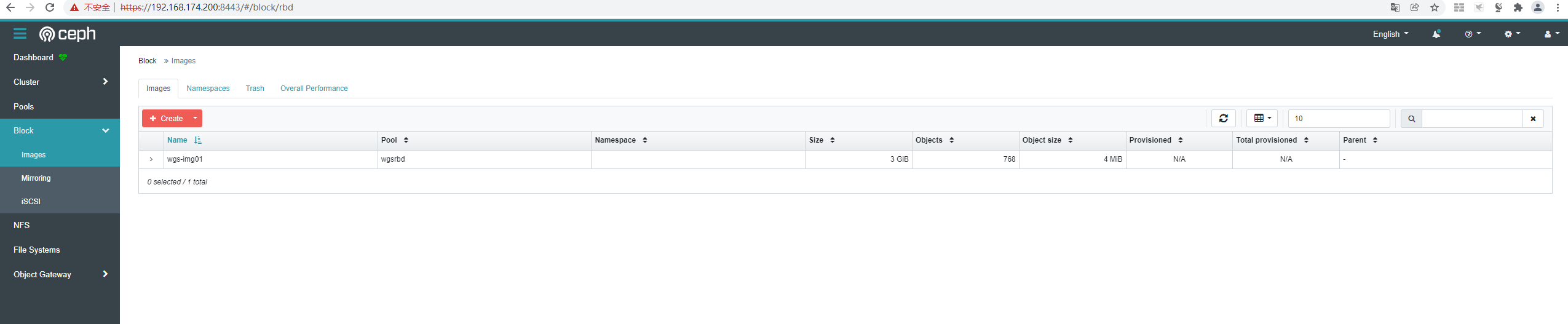

root@cephadm-deploy:/ceph# rbd create wgs-img01 --size 3G --pool wgsrbd --image-format 2 --image-feature layering

3.2 View image information

root@cephadm-deploy:/ceph# rbd ls --pool wgsrbd -l --format json --pretty-format

[

{

"image": "wgs-img01",

"id": "1e604ed5c5f19",

"size": 3221225472,

"format": 2

}

]

3.3 View image features

root@cephadm-deploy:/ceph# rbd --image wgs-img01 -p wgsrbd info

rbd image 'wgs-img01':

size 3 GiB in 768 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 1e604ed5c5f19

block_name_prefix: rbd_data.1e604ed5c5f19

format: 2

features: layering

op_features:

flags:

create_timestamp: Fri Dec 10 02:24:57 2021

access_timestamp: Fri Dec 10 02:24:57 2021

modify_timestamp: Fri Dec 10 02:24:57 2021

4 RBD user
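This section creates the user with `ceph auth add` and exports its keyring in later steps. As a hedged alternative, `ceph auth get-or-create` creates the user only if it does not yet exist and can write the keyring to a file with `-o` in the same call. The sketch below prints the command unless `DRY_RUN=0` is set; `run`, `create_rbd_user` and `DRY_RUN` are illustrative names, and the capabilities are the ones used in this walkthrough.

```shell
#!/usr/bin/env bash
# Sketch: create a client user and write its keyring in one step.
# run, create_rbd_user and DRY_RUN are illustrative names, not part of Ceph.

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
# Note: the printed form drops the quoting around multi-word arguments.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Usage: create_rbd_user <user> <pool>
create_rbd_user() {
    local user="$1" pool="$2"
    local keyring="ceph.client.${user}.keyring"
    run ceph auth get-or-create "client.${user}" \
        mon 'allow r' \
        osd "allow rwx pool=${pool}" \
        -o "$keyring"
}

create_rbd_user wgsrbd wgsrbd
```

The resulting file has the same name as the keyring copied to the client in section 4.6.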

4.1 Create the RBD user

root@cephadm-deploy:/ceph# ceph auth add client.wgsrbd mon 'allow r' osd 'allow rwx pool=wgsrbd'

added key for client.wgsrbd

4.2 Verify the RBD user

root@cephadm-deploy:/ceph# ceph auth get client.wgsrbd

[client.wgsrbd]

key = AQDUvLJhXTxNGxAAhYKUR84if5s97cjiE0iFbQ==

caps mon = "allow r"

caps osd = "allow rwx pool=wgsrbd"

exported keyring for client.wgsrbd

4.3 Create the keyring file for the RBD user

root@cephadm-deploy:/ceph# ceph-authtool --create-keyring ceph.client.wgsrbd.keyring

creating ceph.client.wgsrbd.keyring

4.4 Export the RBD user's keyring

root@cephadm-deploy:/ceph# ceph auth get client.wgsrbd -o ceph.client.wgsrbd.keyring

exported keyring for client.wgsrbd

4.5 Verify the keyring file

root@cephadm-deploy:/ceph# cat ceph.client.wgsrbd.keyring

[client.wgsrbd]

key = AQDUvLJhXTxNGxAAhYKUR84if5s97cjiE0iFbQ==

caps mon = "allow r"

caps osd = "allow rwx pool=wgsrbd"

4.6 Distribute the keyring to the client

root@cephadm-deploy:/ceph# scp ceph.client.wgsrbd.keyring root@ceph-client01:/etc/ceph

5 Configure the client

5.1 Install ceph-common

root@ceph-client01:~# apt -y install ceph-common

5.2 Verify client access

root@ceph-client01:~# ceph --id wgsrbd -s

cluster:

id: 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

health: HEALTH_OK

services:

mon: 5 daemons, quorum cephadm-deploy,ceph-node01,ceph-node02,ceph-node03,ceph-node04 (age 45m)

mgr: cephadm-deploy.jgiulj(active, since 46m), standbys: ceph-node01.anwvfy

mds: 1/1 daemons up, 2 standby

osd: 15 osds: 15 up (since 45m), 15 in (since 37h)

rgw: 6 daemons active (3 hosts, 1 zones)

rgw-nfs: 2 daemons active (2 hosts, 1 zones)

data:

volumes: 1/1 healthy

pools: 11 pools, 305 pgs

objects: 279 objects, 5.7 MiB

usage: 491 MiB used, 299 GiB / 300 GiB avail

pgs: 305 active+clean
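For `ceph --id wgsrbd -s` to succeed, the client has to locate both the monitors and the user's key. A minimal preflight sketch follows, assuming the default locations `/etc/ceph/ceph.conf` and `/etc/ceph/ceph.client.<id>.keyring`. The walkthrough copies only the keyring, so the presence of `ceph.conf` on the client is an assumption here, and `check_client_files` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Usage: check_client_files <client-id> [conf-dir]
# Reports whether the config file and the keyring are readable.
# Returns 0 if both are present, 1 otherwise.
check_client_files() {
    local id="$1" dir="${2:-/etc/ceph}"
    local rc=0 f
    for f in "$dir/ceph.conf" "$dir/ceph.client.${id}.keyring"; do
        if [ -r "$f" ]; then
            echo "ok: $f"
        else
            echo "missing: $f"
            rc=1
        fi
    done
    return "$rc"
}

check_client_files wgsrbd || echo "copy the missing files before running 'ceph --id wgsrbd -s'"
```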

5.3 Map the RBD image on the client

root@ceph-client01:~# rbd --id wgsrbd -p wgsrbd map wgs-img01

/dev/rbd0

5.4 Verify the mapping

root@ceph-client01:~# rbd showmapped

id  pool    namespace  image      snap  device

0   wgsrbd             wgs-img01  -     /dev/rbd0

5.5 Format the RBD image

A newly created RBD device has no filesystem, so it must be formatted once before it can be mounted.

root@ceph-client01:~# mkfs.xfs /dev/rbd0

meta-data=/dev/rbd0 isize=512 agcount=8, agsize=98304 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1

data = bsize=4096 blocks=786432, imaxpct=25

= sunit=16 swidth=16 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=16 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

5.6 Mount the RBD device on the client
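Because formatting is a one-time step, a mount helper should not run `mkfs.xfs` on a device that already holds a filesystem. The sketch below asks `blkid` for the filesystem type first and formats only when none is reported; `mkfs.xfs` itself also refuses to overwrite an existing filesystem unless `-f` is given, which acts as a second safety net. The names `run`, `probe_fstype`, `prepare_and_mount`, `PROBE` and `DRY_RUN` are illustrative, and by default the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: format an RBD device only if it is blank, then mount it.
# All function and variable names here are illustrative.

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Print the filesystem type found on a device (empty if none is detected).
# PROBE is a hypothetical override so the logic can be tried without a device.
probe_fstype() {
    if [ -n "${PROBE:-}" ]; then
        "$PROBE" "$1"
    else
        blkid -o value -s TYPE "$1" 2>/dev/null
    fi
}

# Usage: prepare_and_mount <device> <mountpoint>   (run as root)
prepare_and_mount() {
    local dev="$1" mnt="$2" fstype
    fstype="$(probe_fstype "$dev" || true)"
    if [ -z "$fstype" ]; then
        run mkfs.xfs "$dev" || return 1
    else
        echo "# $dev already contains $fstype, skipping mkfs"
    fi
    run mkdir -p "$mnt" || return 1
    run mount "$dev" "$mnt"
}

# Example: DRY_RUN=0 prepare_and_mount /dev/rbd0 /data/rbd_data
```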

5.6.1 Create the mount point

root@ceph-client01:~# mkdir -pv /data/rbd_data

mkdir: created directory '/data/rbd_data'

5.6.2 Mount the RBD device

root@ceph-client01:~# mount /dev/rbd0 /data/rbd_data/

5.7 Verify the mount

root@ceph-client01:~# df -TH

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 982M 0 982M 0% /dev

tmpfs tmpfs 206M 2.0M 204M 1% /run

/dev/mapper/ubuntu--vg-ubuntu--lv ext4 20G 11G 8.9G 54% /

tmpfs tmpfs 1.1G 0 1.1G 0% /dev/shm

tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock

tmpfs tmpfs 1.1G 0 1.1G 0% /sys/fs/cgroup

/dev/rbd0 xfs 3.3G 57M 3.2G 2% /data/rbd_data

5.8 Mount RBD automatically at boot

root@ceph-client01:~# cat /etc/rc.d/rc.local

rbd --user wgsrbd -p wgsrbd map wgs-img01

mount /dev/rbd0 /data/rbd_data

5.9 Unmount RBD

root@ceph-client01:~# umount /data/rbd_data

5.10 Unmap the RBD image

root@ceph-client01:~# rbd --user wgsrbd -p wgsrbd unmap wgs-img01

5.11 View current RBD mappings

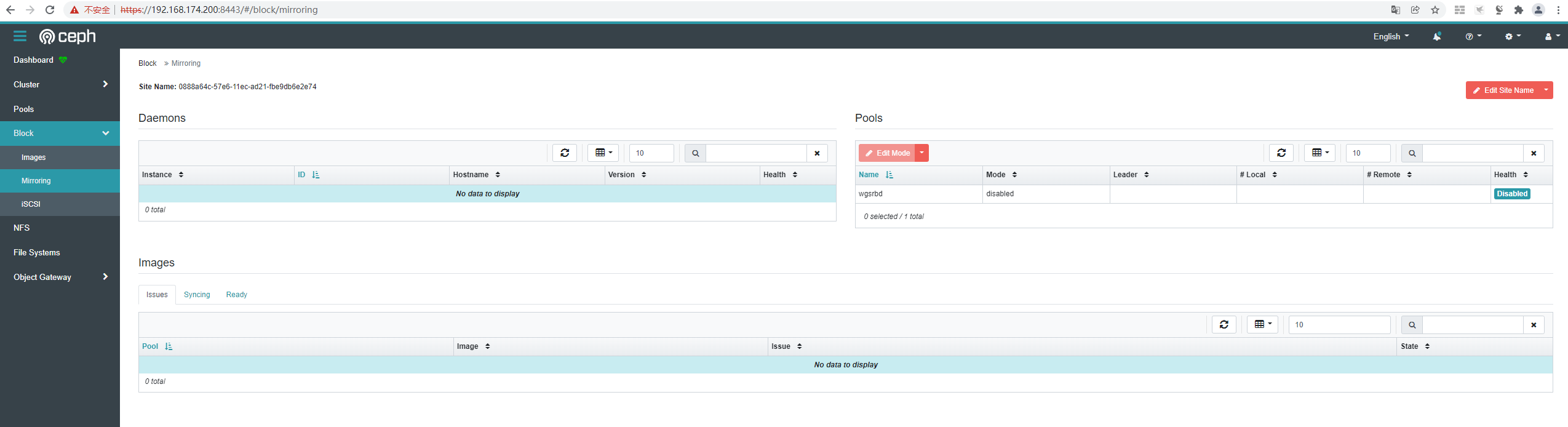

root@ceph-client01:~# rbd showmapped

6 View the Ceph dashboard
