Installing a Ceph Cluster with cephadm

1 System Planning

1.1 OS version

~# cat /etc/issue

Ubuntu 20.04.3 LTS \n \l

1.2 Time synchronization

~# apt -y install chrony

~# systemctl start chrony

~# systemctl enable chrony

1.3 Server plan

| Hostname | IP | Roles |
| --- | --- | --- |
| cephadm-deploy | 192.168.174.200 | cephadm,monitor,mgr,rgw,mds,osd,nfs |
| ceph-node01 | 192.168.174.103 | monitor,mgr,rgw,mds,osd,nfs |
| ceph-node02 | 192.168.174.104 | monitor,mgr,rgw,mds,osd,nfs |
| ceph-node03 | 192.168.174.105 | monitor,mgr,rgw,mds,osd,nfs |
| ceph-node04 | 192.168.174.120 | monitor,mgr,rgw,mds,osd,nfs |

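1.4 Install Docker

The docker-ce package comes from Docker's own apt repository, which must already be configured on each host.

~# apt -y install docker-ce

~# systemctl enable docker

1.5 Configure host name resolution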

~# cat /etc/hosts

192.168.174.200 cephadm-deploy

192.168.174.103 ceph-node01

192.168.174.104 ceph-node02

192.168.174.105 ceph-node03

192.168.174.120 ceph-node04

1.6 Software versions

ceph: 16.2.7 pacific (stable)

cephadm: 16.2.7
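Before moving on, it can help to confirm that every host's /etc/hosts really contains the mappings planned in 1.3 and 1.5. The following is a minimal sketch, not part of the original procedure: the function name `missing_hosts` is made up for this example, and the host list is copied from the table above.

```shell
# Planned hosts from section 1.3, one "IP name" pair per line.
PLANNED_HOSTS='192.168.174.200 cephadm-deploy
192.168.174.103 ceph-node01
192.168.174.104 ceph-node02
192.168.174.105 ceph-node03
192.168.174.120 ceph-node04'

# missing_hosts FILE: print every planned "IP name" pair that FILE lacks.
# Prints nothing when all pairs are present. (Hypothetical helper name.)
missing_hosts() {
    hosts_file=$1
    printf '%s\n' "$PLANNED_HOSTS" | while read -r ip name; do
        # A pair counts as present when a line starts with the IP
        # and lists the name as one of its remaining fields.
        if ! awk -v ip="$ip" -v name="$name" '
                $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
                END { exit !found }' "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name"
        fi
    done
}
```

Running `missing_hosts /etc/hosts` on each node only reads the file; an empty result means the node can resolve all five names.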

2 Install cephadm

2.1 Install with curl

2.1.1 Download the cephadm script

root@cephadm-deploy:~/cephadm# curl --silent --remote-name --location https://github.com/ceph/ceph/raw/pacific/src/cephadm/cephadm

2.1.2 Make the script executable

root@cephadm-deploy:~/cephadm# chmod +x cephadm

2.1.3 Add the Ceph repository

root@cephadm-deploy:~/cephadm# ./cephadm add-repo --release pacific

Installing repo GPG key from https://download.ceph.com/keys/release.gpg...

Installing repo file at /etc/apt/sources.list.d/ceph.list...

Updating package list...

Completed adding repo.

2.1.4 Check the available cephadm versions

root@cephadm-deploy:~/cephadm# apt-cache madison cephadm

cephadm | 16.2.7-1focal | https://download.ceph.com/debian-pacific focal/main amd64 Packages

cephadm | 15.2.14-0ubuntu0.20.04.1 | http://mirrors.aliyun.com/ubuntu focal-updates/universe amd64 Packages

cephadm | 15.2.12-0ubuntu0.20.04.1 | http://mirrors.aliyun.com/ubuntu focal-security/universe amd64 Packages

cephadm | 15.2.1-0ubuntu1 | http://mirrors.aliyun.com/ubuntu focal/universe amd64 Packages

2.1.5 Install cephadm

root@cephadm-deploy:~/cephadm# ./cephadm install

2.1.6 Check where cephadm was installed

root@cephadm-deploy:~/cephadm# which cephadm

/usr/sbin/cephadm

2.2 Install with the package manager

2.2.1 Add the repository

root@cephadm-deploy:~/cephadm# wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -

OK

root@cephadm-deploy:~/cephadm# echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific $(lsb_release -cs) main" >> /etc/apt/sources.list

root@cephadm-deploy:~/cephadm# apt update

2.2.2 Check the available cephadm versions

root@cephadm-deploy:~/cephadm# apt-cache madison cephadm

cephadm | 16.2.7-1focal | https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific focal/main amd64 Packages

cephadm | 15.2.14-0ubuntu0.20.04.1 | http://mirrors.aliyun.com/ubuntu focal-updates/universe amd64 Packages

cephadm | 15.2.12-0ubuntu0.20.04.1 | http://mirrors.aliyun.com/ubuntu focal-security/universe amd64 Packages

cephadm | 15.2.1-0ubuntu1 | http://mirrors.aliyun.com/ubuntu focal/universe amd64 Packages

2.2.3 Install cephadm

root@cephadm-deploy:~/cephadm# apt -y install cephadm

2.3 cephadm help


~# cephadm -h

usage: cephadm [-h] [--image IMAGE] [--docker] [--data-dir DATA_DIR] [--log-dir LOG_DIR] [--logrotate-dir LOGROTATE_DIR] [--sysctl-dir SYSCTL_DIR] [--unit-dir UNIT_DIR] [--verbose] [--timeout TIMEOUT] [--retry RETRY] [--env ENV] [--no-container-init] {version,pull,inspect-image,ls,list-networks,adopt,rm-daemon,rm-cluster,run,shell,enter,ceph-volume,zap-osds,unit,logs,bootstrap,deploy,check-host,prepare-host,add-repo,rm-repo,install,registry-login,gather-facts,exporter,host-maintenance} ...

Bootstrap Ceph daemons with systemd and containers.

positional arguments:

{version,pull,inspect-image,ls,list-networks,adopt,rm-daemon,rm-cluster,run,shell,enter,ceph-volume,zap-osds,unit,logs,bootstrap,deploy,check-host,prepare-host,add-repo,rm-repo,install,registry-login,gather-facts,exporter,host-maintenance}

sub-command

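version # get the Ceph version from the container

pull # pull the latest image version

inspect-image # inspect the local image

ls # list daemon instances on this host

list-networks # list IP networks

adopt # adopt a daemon deployed with a different tool

rm-daemon # remove a daemon instance

rm-cluster # remove all daemons of a cluster

run # run a Ceph daemon in a container, in the foreground

shell # run an interactive shell inside a daemon container

enter # run an interactive shell inside a running daemon container

ceph-volume # run ceph-volume inside a container

zap-osds # zap all OSDs associated with a particular fsid

unit # operate on a daemon's systemd unit

logs # print the logs of a daemon container

bootstrap # bootstrap a cluster (mon + mgr daemons)

deploy # deploy a daemon

check-host # check the host configuration

prepare-host # prepare a host for use with cephadm

add-repo # configure the package repository

rm-repo # remove the package repository configuration

install # install Ceph packages

registry-login # log the host in to an authenticated registry

gather-facts # gather and return host-related information (JSON format)

exporter # start cephadm in exporter mode (web service), providing host/daemon/disk metadata

host-maintenance # manage the maintenance state of a host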

optional arguments:

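-h, --help # show this help message and exit

--image IMAGE # container image; can also be set through the "CEPHADM_IMAGE" environment variable (default: None)

--docker # use docker instead of podman (default: False)

--data-dir DATA_DIR # base directory for daemon data (default: /var/lib/ceph)

--log-dir LOG_DIR # base directory for daemon logs (default: /var/log/ceph)

--logrotate-dir LOGROTATE_DIR # location of logrotate configuration files (default: /etc/logrotate.d)

--sysctl-dir SYSCTL_DIR # location of sysctl configuration files (default: /usr/lib/sysctl.d)

--unit-dir UNIT_DIR # base directory for systemd units (default: /etc/systemd/system)

--verbose, -v # show debug-level log messages (default: False)

--timeout TIMEOUT # timeout in seconds (default: None)

--retry RETRY # maximum number of retries (default: 15)

--env ENV, -e ENV # set an environment variable (default: [])

--no-container-init # do not run podman/docker with "--init" (default: False)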
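The `apt-cache madison cephadm` listings in 2.1.4 and 2.2.2 mix the Pacific build from the Ceph repository with older 15.2.x builds from the Ubuntu mirrors. If you want to name the Pacific version explicitly instead of relying on apt's default choice, the version string can be picked out of that listing. This is only a sketch: `pacific_candidate` is a hypothetical helper, and it assumes that Pacific packages always carry a version starting with `16.`.

```shell
# pacific_candidate: read `apt-cache madison cephadm` output on stdin and
# print the first version whose major number is 16 (Pacific).
# (Hypothetical helper name, not part of cephadm or apt.)
pacific_candidate() {
    awk -F'|' '
        {
            version = $2
            gsub(/^[ \t]+|[ \t]+$/, "", version)   # trim padding around the field
            if (version ~ /^16\./) { print version; exit }
        }'
}

# Possible use on the deploy host (not run here):
#   apt -y install cephadm="$(apt-cache madison cephadm | pacific_candidate)"
```

The `package=version` form is standard apt syntax; whether pinning is needed at all depends on how the repositories on your hosts are prioritized.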

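3 Bootstrap the Ceph cluster

Official documentation: https://docs.ceph.com/en/pacific/install/

3.1 What cephadm bootstrap does

The cephadm bootstrap command:

- Creates a monitor and a manager daemon for the new cluster on the local host.

- Generates a new SSH key for the Ceph cluster and adds it to the root user's /root/.ssh/authorized_keys file.

- Writes a copy of the public key to /etc/ceph/ceph.pub.

- Writes a minimal configuration file to /etc/ceph/ceph.conf. This file is needed to communicate with the new cluster.

- Writes a copy of the client.admin administrative (privileged!) secret key to /etc/ceph/ceph.client.admin.keyring.

- Adds the _admin label to the bootstrap host. By default, any host with this label will (also) get a copy of /etc/ceph/ceph.conf and /etc/ceph/ceph.client.admin.keyring.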
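Three of the items above are files under /etc/ceph, so a quick way to confirm that a bootstrap run finished its file-writing steps is to check that they exist. The helper below is a sketch under that assumption; `check_bootstrap_files` is a made-up name, and the check says nothing about whether the daemons themselves are healthy.

```shell
# check_bootstrap_files [DIR]: report which of the files written by
# `cephadm bootstrap` exist in DIR (default: /etc/ceph).
# Returns non-zero if any of them is missing. (Hypothetical helper name.)
check_bootstrap_files() {
    dir=${1:-/etc/ceph}
    status=0
    for f in ceph.conf ceph.pub ceph.client.admin.keyring; do
        if [ -f "$dir/$f" ]; then
            printf 'ok       %s\n' "$dir/$f"
        else
            printf 'missing  %s\n' "$dir/$f"
            status=1
        fi
    done
    return "$status"
}
```

Called without arguments on the bootstrap host, it inspects /etc/ceph; passing another directory is mainly useful for trying the function out.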

3.2 Bootstrap the cluster


root@cephadm-deploy:~/cephadm# ./cephadm bootstrap --mon-ip 192.168.174.200

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit chrony.service is enabled and running

Repeating the final host check...

docker is present

systemctl is present

lvcreate is present

Unit chrony.service is enabled and running

Host looks OK

Cluster fsid: 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

Verifying IP 192.168.174.200 port 3300 ...

Verifying IP 192.168.174.200 port 6789 ...

Mon IP `192.168.174.200` is in CIDR network `192.168.174.0/24` - internal network (--cluster-network) has not been provided, OSD replication will default to the public_network

Pulling container image quay.io/ceph/ceph:v16...

Ceph version: ceph version 16.2.7 (dd0603118f56ab514f133c8d2e3adfc983942503) pacific (stable)

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting mon public_network to 192.168.174.0/24

Wrote config to /etc/ceph/ceph.conf

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Creating mgr...

Verifying port 9283 ...

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/15)...

mgr not available, waiting (2/15)...

mgr not available, waiting (3/15)...

mgr not available, waiting (4/15)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for mgr epoch 4...

mgr epoch 4 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to /etc/ceph/ceph.pub

Adding key to root@localhost authorized_keys...

Adding host cephadm-deploy...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for mgr epoch 8...

mgr epoch 8 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

Ceph Dashboard is now available at:

URL: https://ceph-deploy:8443/

User: admin

Password: pezc2ncdii

Enabling client.admin keyring and conf on hosts with "admin" label

You can access the Ceph CLI with:

sudo ./cephadm shell --fsid 0888a64c-57e6-11ec-ad21-fbe9db6e2e74 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/docs/pacific/mgr/telemetry/

Bootstrap complete.
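The bootstrap output is the only place where the cluster fsid and the initial dashboard password are printed together, so it is worth saving. Assuming the output was captured to a file (for example with `2>&1 | tee bootstrap.log`, a file name chosen only for this sketch), the fsid can be pulled back out as follows; `bootstrap_fsid` is a hypothetical helper.

```shell
# bootstrap_fsid: read saved bootstrap output on stdin and print the
# cluster fsid from the "Cluster fsid: ..." line.
# (Hypothetical helper name; relies on the log wording shown above.)
bootstrap_fsid() {
    sed -n 's/^.*Cluster fsid: \([0-9a-f-]\{36\}\).*$/\1/p' | head -n 1
}

# Possible use (not run here):
#   ./cephadm bootstrap --mon-ip 192.168.174.200 2>&1 | tee bootstrap.log
#   bootstrap_fsid < bootstrap.log
```

The extracted value is what later commands pass to `cephadm shell --fsid`.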

3.3 View the Ceph containers

root@cephadm-deploy:~/cephadm# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0fa08c0335a7 quay.io/prometheus/alertmanager:v0.20.0 "/bin/alertmanager -…" 17 minutes ago Up 17 minutes ceph-0888a64c-57e6-11ec-ad21-fbe9db6e2e74-alertmanager-cephadm-deploy

fb58cbd1517b quay.io/prometheus/prometheus:v2.18.1 "/bin/prometheus --c…" 17 minutes ago Up 17 minutes ceph-0888a64c-57e6-11ec-ad21-fbe9db6e2e74-prometheus-cephadm-deploy

a37f6d4e5696 quay.io/prometheus/node-exporter:v0.18.1 "/bin/node_exporter …" 17 minutes ago Up 17 minutes ceph-0888a64c-57e6-11ec-ad21-fbe9db6e2e74-node-exporter-cephadm-deploy

331cf9c8b544 quay.io/ceph/ceph "/usr/bin/ceph-crash…" 17 minutes ago Up 17 minutes ceph-0888a64c-57e6-11ec-ad21-fbe9db6e2e74-crash-cephadm-deploy

1fb47f7aba04 quay.io/ceph/ceph:v16 "/usr/bin/ceph-mgr -…" 18 minutes ago Up 18 minutes ceph-0888a64c-57e6-11ec-ad21-fbe9db6e2e74-mgr-cephadm-deploy-jgiulj

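c1ac511761ec quay.io/ceph/ceph:v16 "/usr/bin/ceph-mon -…" 18 minutes ago Up 18 minutes ceph-0888a64c-57e6-11ec-ad21-fbe9db6e2e74-mon-cephadm-deploy

The components are:

- ceph-mgr: the Ceph manager daemon

- ceph-monitor: the Ceph monitor daemon

- ceph-crash: the crash-report collection module

- prometheus: the Prometheus monitoring component

- grafana: the dashboard that visualizes the monitoring data

- alertmanager: the Prometheus alerting component

- node_exporter: the Prometheus per-node metrics collector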

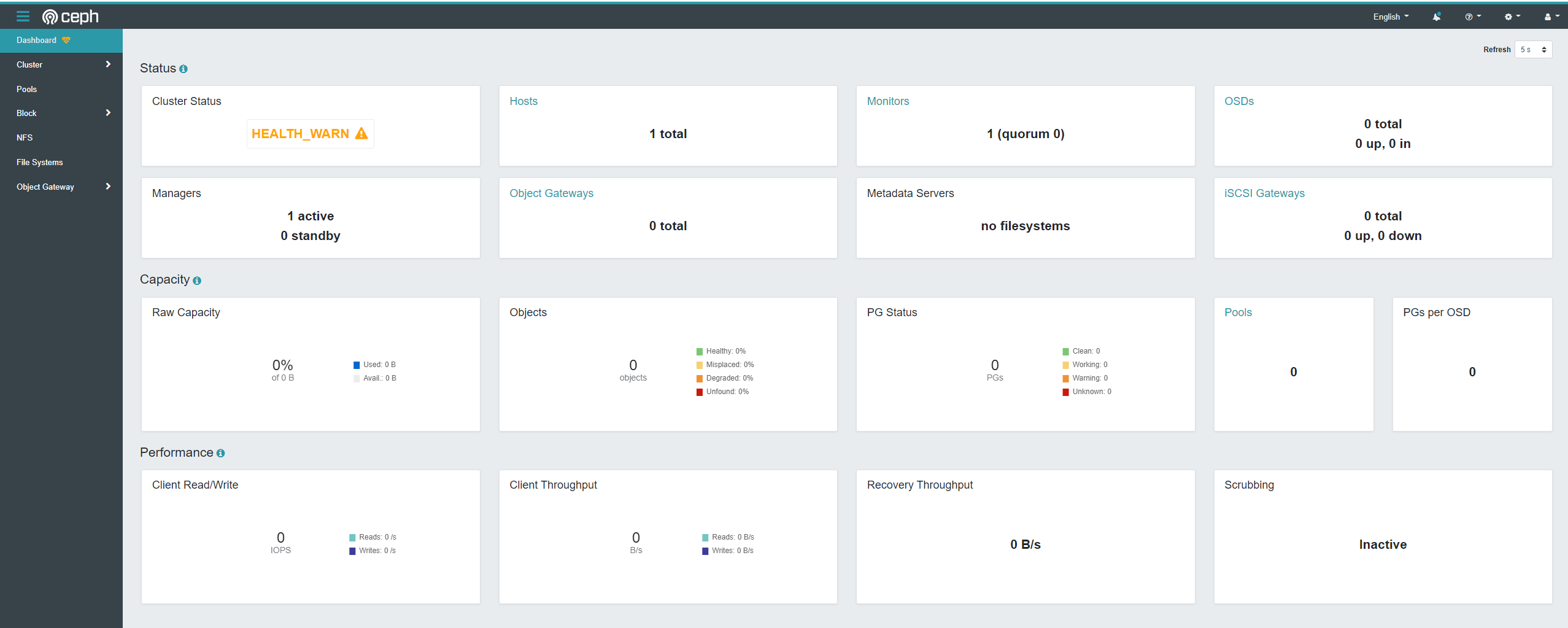

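3.4 Access the Ceph Dashboard

Log in with the URL and initial credentials printed at the end of the bootstrap output in 3.2: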

User: admin

Password: pezc2ncdii

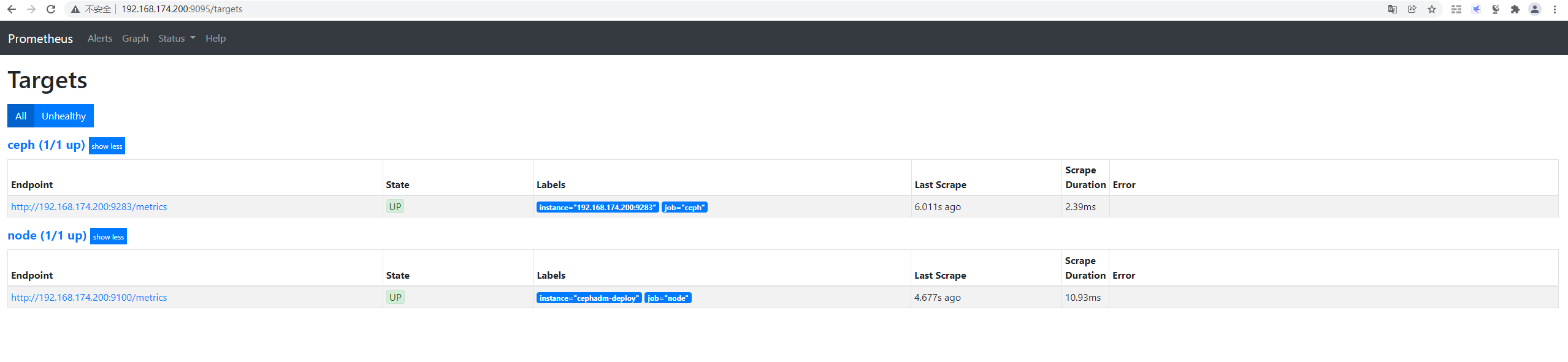

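3.5 Access the Prometheus web UI

In this deployment Prometheus listens on port 9095 of cephadm-deploy (see the PORTS column of ceph orch ps in 3.9).

3.6 Access the Grafana web UI

Grafana listens on port 3000 (see the ceph orch ls output in 3.9).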

3.7 Enable the Ceph CLI

root@cephadm-deploy:~/cephadm# sudo ./cephadm shell --fsid 0888a64c-57e6-11ec-ad21-fbe9db6e2e74 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Using recent ceph image quay.io/ceph/ceph@sha256:bb6a71f7f481985f6d3b358e3b9ef64c6755b3db5aa53198e0aac38be5c8ae54

3.8 Verify the Ceph cluster information

root@cephadm-deploy:/# ceph version

ceph version 16.2.7 (dd0603118f56ab514f133c8d2e3adfc983942503) pacific (stable)

root@cephadm-deploy:/# ceph fsid

0888a64c-57e6-11ec-ad21-fbe9db6e2e74

root@cephadm-deploy:/# ceph -s

cluster:

id: 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum cephadm-deploy (age 22m)

mgr: cephadm-deploy.jgiulj(active, since 20m)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

3.9 Check the status of the components


root@cephadm-deploy:/# ceph orch ps

NAME HOST PORTS STATUS REFRESHED AGE MEM USE MEM LIM VERSION IMAGE ID CONTAINER ID

alertmanager.cephadm-deploy cephadm-deploy *:9093,9094 running (23m) 2m ago 23m 14.7M - 0.20.0 0881eb8f169f 0fa08c0335a7

crash.cephadm-deploy cephadm-deploy running (23m) 2m ago 23m 10.2M - 16.2.7 cc266d6139f4 331cf9c8b544

mgr.cephadm-deploy.jgiulj cephadm-deploy *:9283 running (24m) 2m ago 24m 433M - 16.2.7 cc266d6139f4 1fb47f7aba04

mon.cephadm-deploy cephadm-deploy running (24m) 2m ago 24m 61.5M 2048M 16.2.7 cc266d6139f4 c1ac511761ec

node-exporter.cephadm-deploy cephadm-deploy *:9100 running (23m) 2m ago 23m 17.3M - 0.18.1 e5a616e4b9cf a37f6d4e5696

prometheus.cephadm-deploy cephadm-deploy *:9095 running (23m) 2m ago 23m 64.9M - 2.18.1 de242295e225 fb58cbd1517b

root@cephadm-deploy:/# ceph orch ps --daemon_type mgr

NAME HOST PORTS STATUS REFRESHED AGE MEM USE MEM LIM VERSION IMAGE ID CONTAINER ID

mgr.cephadm-deploy.jgiulj cephadm-deploy *:9283 running (45m) 2m ago 45m 419M - 16.2.7 cc266d6139f4 1fb47f7aba04

root@cephadm-deploy:/# ceph orch ls

NAME PORTS RUNNING REFRESHED AGE PLACEMENT

alertmanager ?:9093,9094 1/1 2m ago 45m count:1

crash 1/1 2m ago 45m *

grafana ?:3000 1/1 2m ago 45m count:1

mgr 1/2 2m ago 45m count:2

mon 1/5 2m ago 45m count:5

node-exporter ?:9100 1/1 2m ago 45m *

prometheus ?:9095 1/1 2m ago 45m count:1

root@cephadm-deploy:/# ceph orch ls mgr

NAME PORTS RUNNING REFRESHED AGE PLACEMENT

mgr 1/2 2m ago 45m count:2
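In the listings above, the RUNNING column shows `mgr 1/2` and `mon 1/5`: with a single host, the default placements ask for more daemons than can be scheduled. A small filter makes such services easy to spot as the cluster grows. This is a sketch that assumes the column layout printed above; `underfilled_services` is a hypothetical helper.

```shell
# underfilled_services: read `ceph orch ls` output on stdin and print
# "name running/wanted" for each service that runs fewer daemons than
# its placement asks for. (Hypothetical helper name.)
underfilled_services() {
    awk '
        {
            for (i = 2; i <= NF; i++) {
                # RUNNING is the first field shaped like N/M; its position
                # shifts because the PORTS column may be empty.
                if ($i ~ /^[0-9]+\/[0-9]+$/) {
                    split($i, count, "/")
                    if (count[1] + 0 < count[2] + 0) print $1, $i
                    break
                }
            }
        }'
}

# Possible use inside the cephadm shell (not run here):
#   ceph orch ls | underfilled_services
```

Header and banner lines contain no `N/M` field, so they pass through without producing output.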

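3.10 Exit the Ceph CLI

root@cephadm-deploy:/# exit

exit

3.11 Install the ceph-common package

root@cephadm-deploy:~/cephadm# cephadm add-repo --release pacific

root@cephadm-deploy:~/cephadm# ./cephadm install ceph-common

Installing packages ['ceph-common']...

4 Cluster host management

Official documentation: https://docs.ceph.com/en/pacific/cephadm/host-management/#cephadm-adding-hosts

After hosts are added to the cluster, cephadm automatically scales out the monitors and managers, up to the default placement counts shown in 3.9 (count:5 for mon, count:2 for mgr).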
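If you would rather decide yourself where the monitors run instead of accepting the default placement, a service specification can name the hosts explicitly. The snippet below only writes such a file; the choice of three hosts and the file name `mon.yaml` are assumptions made for this example, and the spec should be reviewed against the official service specification documentation before it is applied.

```shell
# Write a service spec that pins monitors to three named hosts.
# The host names come from the plan in section 1.3; which three to use
# and the file name mon.yaml are arbitrary choices for this sketch.
cat > mon.yaml <<'EOF'
service_type: mon
placement:
  hosts:
    - cephadm-deploy
    - ceph-node01
    - ceph-node02
EOF

# Apply it from a host that has the admin keyring (not run here):
#   ceph orch apply -i mon.yaml
```

The same `ceph orch apply -i` mechanism is used for the mgr spec in 6.3.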

4.1 List the cluster hosts

root@cephadm-deploy:~/cephadm# ./cephadm shell ceph orch host ls

Inferring fsid 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

Using recent ceph image quay.io/ceph/ceph@sha256:bb6a71f7f481985f6d3b358e3b9ef64c6755b3db5aa53198e0aac38be5c8ae54

HOST ADDR LABELS STATUS

cephadm-deploy 192.168.174.200 _admin

4.2 Add hosts

4.2.1 Install the cluster's SSH public key on the new hosts

root@cephadm-deploy:~/cephadm# ssh-copy-id -f -i /etc/ceph/ceph.pub ceph-node01

root@cephadm-deploy:~/cephadm# ssh-copy-id -f -i /etc/ceph/ceph.pub ceph-node02

root@cephadm-deploy:~/cephadm# ssh-copy-id -f -i /etc/ceph/ceph.pub ceph-node03

root@cephadm-deploy:~/cephadm# ssh-copy-id -f -i /etc/ceph/ceph.pub ceph-node04

4.2.2 Add the hosts to the cluster

root@cephadm-deploy:~/cephadm# ./cephadm shell ceph orch host add ceph-node01 192.168.174.103

root@cephadm-deploy:~/cephadm# ./cephadm shell ceph orch host add ceph-node02 192.168.174.104

root@cephadm-deploy:~/cephadm# ./cephadm shell ceph orch host add ceph-node03 192.168.174.105

root@cephadm-deploy:~/cephadm# ./cephadm shell ceph orch host add ceph-node04 192.168.174.120

4.3 Verify the host list

root@cephadm-deploy:~/cephadm# ./cephadm shell ceph orch host ls

Inferring fsid 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

Using recent ceph image quay.io/ceph/ceph@sha256:bb6a71f7f481985f6d3b358e3b9ef64c6755b3db5aa53198e0aac38be5c8ae54

HOST ADDR LABELS STATUS

ceph-node01 192.168.174.103

ceph-node02 192.168.174.104

ceph-node03 192.168.174.105

ceph-node04 192.168.174.120

cephadm-deploy 192.168.174.200 _admin

4.4 View the services across the cluster nodes

root@cephadm-deploy:~/cephadm# ./cephadm shell ceph orch ls

Inferring fsid 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

Using recent ceph image quay.io/ceph/ceph@sha256:bb6a71f7f481985f6d3b358e3b9ef64c6755b3db5aa53198e0aac38be5c8ae54

NAME PORTS RUNNING REFRESHED AGE PLACEMENT

alertmanager ?:9093,9094 1/1 - 72m count:1

crash 5/5 9m ago 72m *

grafana ?:3000 1/1 9m ago 72m count:1

mgr 2/2 9m ago 72m count:2

mon 5/5 9m ago 72m count:5

node-exporter ?:9100 5/5 9m ago 72m *

prometheus ?:9095 1/1 - 72m count:1

4.5 Verify the current cluster status

root@cephadm-deploy:~/cephadm# ./cephadm shell ceph -s

Inferring fsid 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

Using recent ceph image quay.io/ceph/ceph@sha256:bb6a71f7f481985f6d3b358e3b9ef64c6755b3db5aa53198e0aac38be5c8ae54

cluster:

id: 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 5 daemons, quorum cephadm-deploy,ceph-node01,ceph-node02,ceph-node03,ceph-node04 (age 3m)

mgr: cephadm-deploy.jgiulj(active, since 75m), standbys: ceph-node01.anwvfy

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

4.6 Verify the containers on the other nodes

root@ceph-node01:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

05349d266ffa quay.io/prometheus/node-exporter:v0.18.1 "/bin/node_exporter …" 7 minutes ago Up 7 minutes ceph-0888a64c-57e6-11ec-ad21-fbe9db6e2e74-node-exporter-ceph-node01

76b58ef1b83a quay.io/ceph/ceph "/usr/bin/ceph-mon -…" 7 minutes ago Up 7 minutes ceph-0888a64c-57e6-11ec-ad21-fbe9db6e2e74-mon-ceph-node01

e673814b6a59 quay.io/ceph/ceph "/usr/bin/ceph-mgr -…" 7 minutes ago Up 7 minutes ceph-0888a64c-57e6-11ec-ad21-fbe9db6e2e74-mgr-ceph-node01-anwvfy

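60a34da66725 quay.io/ceph/ceph "/usr/bin/ceph-crash…" 7 minutes ago Up 7 minutes ceph-0888a64c-57e6-11ec-ad21-fbe9db6e2e74-crash-ceph-node01

5 OSD service

Official documentation: https://docs.ceph.com/en/pacific/cephadm/services/osd/#cephadm-deploy-osds

5.1 Deployment requirements

A storage device is considered available for an OSD only if all of the following conditions are met:

- The device must have no partitions.

- The device must not have any LVM state.

- The device must not be mounted.

- The device must not contain a file system.

- The device must not contain a Ceph BlueStore OSD.

- The device must be larger than 5 GB.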
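Most of these conditions can be pre-checked on a host from `lsblk` output before asking the orchestrator. The sketch below approximates them: it keeps whole disks that have no child devices (partitions or LVM volumes), no file system or LVM signature, no mount point, and enough capacity. It does not detect every case (a BlueStore label in particular), `osd_candidates` is a hypothetical helper, and reading "5 GB" as 5 GiB is an assumption. `ceph orch device ls` remains the authoritative view.

```shell
MIN_BYTES=5368709120   # 5 GiB; assumes the "5 GB" limit is measured in binary units

# osd_candidates: read the output of
#   lsblk -b -P -o NAME,PKNAME,SIZE,TYPE,FSTYPE,MOUNTPOINT
# on stdin and print the disks that look usable for an OSD.
# (Hypothetical helper name; an approximation of the rules above.)
osd_candidates() {
    awk -v min_bytes="$MIN_BYTES" '
        # field(key): value of KEY="value" on the current line, or "".
        function field(key,    pat, s) {
            pat = "(^| )" key "=\"[^\"]*\""
            if (!match($0, pat)) return ""
            s = substr($0, RSTART, RLENGTH)
            sub(/^[^"]*"/, "", s)   # drop everything up to the opening quote
            sub(/"$/, "", s)        # drop the closing quote
            return s
        }
        {
            # Remember every device that has a child (partition, LVM volume).
            parent = field("PKNAME")
            if (parent != "") has_child[parent] = 1
        }
        field("TYPE") == "disk" &&
        field("FSTYPE") == "" &&
        field("MOUNTPOINT") == "" &&
        field("SIZE") + 0 > min_bytes + 0 {
            candidate[++n] = field("NAME")
        }
        END {
            for (i = 1; i <= n; i++)
                if (!(candidate[i] in has_child)) print "/dev/" candidate[i]
        }'
}

# Possible use on a storage node (not run here):
#   lsblk -b -P -o NAME,PKNAME,SIZE,TYPE,FSTYPE,MOUNTPOINT | osd_candidates
```

The `-P` option makes `lsblk` print `KEY="value"` pairs, which keeps empty columns unambiguous when parsing.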

5.2 List the storage devices on the cluster hosts


root@cephadm-deploy:~/cephadm# ./cephadm shell ceph orch device ls

Inferring fsid 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

Using recent ceph image quay.io/ceph/ceph@sha256:bb6a71f7f481985f6d3b358e3b9ef64c6755b3db5aa53198e0aac38be5c8ae54

HOST PATH TYPE DEVICE ID SIZE AVAILABLE REJECT REASONS

ceph-node01 /dev/sdb hdd 21.4G Yes

ceph-node01 /dev/sdc hdd 21.4G Yes

ceph-node01 /dev/sdd hdd 21.4G Yes

ceph-node02 /dev/sdb hdd 21.4G Yes

ceph-node02 /dev/sdc hdd 21.4G Yes

ceph-node02 /dev/sdd hdd 21.4G Yes

ceph-node03 /dev/sdb hdd 21.4G Yes

ceph-node03 /dev/sdc hdd 21.4G Yes

ceph-node03 /dev/sdd hdd 21.4G Yes

ceph-node04 /dev/sdb hdd 21.4G Yes

ceph-node04 /dev/sdc hdd 21.4G Yes

ceph-node04 /dev/sdd hdd 21.4G Yes

cephadm-deploy /dev/sdb hdd 21.4G Yes

cephadm-deploy /dev/sdc hdd 21.4G Yes

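cephadm-deploy /dev/sdd hdd 21.4G Yes

5.3 Ways to create OSDs

5.3.1 Create an OSD from a specific device on a specific host

~# ceph orch daemon add osd ceph-node01:/dev/sdd

5.3.2 Create OSDs from a YAML spec file

~# ceph orch apply -i spec.yml

5.3.3 Create OSDs on all available devices

~# ceph orch apply osd --all-available-devices

After running the command above:

- If you add new disks to the cluster, they are automatically used to create new OSDs.

- If you remove an OSD and clean its LVM physical volume, a new OSD is created automatically.

To avoid this behavior (that is, to disable automatic OSD creation on available devices), pass the --unmanaged=true parameter.

Notes:

- The default behavior of ceph orch apply makes cephadm reconcile continuously. This means that cephadm creates OSDs as soon as it detects new drives.

- Setting unmanaged: True disables the creation of OSDs. With unmanaged: True set, nothing happens even if you apply a new OSD service.

- ceph orch daemon add creates OSDs but does not add an OSD service.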
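Section 5.3.2 applies a `spec.yml` without showing one. The sketch below writes a possible OSD spec that targets rotational disks on the `ceph-node*` hosts. The `service_id` is an invented name, and nesting `data_devices` under `spec:` follows the layout of recent upstream documentation; older examples put `data_devices` at the top level, so treat the exact layout as an assumption and preview the result before applying it.

```shell
# Write an example OSD service spec. All values are illustrative.
cat > spec.yml <<'EOF'
service_type: osd
service_id: all_hdd_on_nodes      # hypothetical name for this sketch
# unmanaged: true                 # uncomment to stop automatic OSD creation
placement:
  host_pattern: 'ceph-node*'
spec:
  data_devices:
    rotational: 1                 # spinning disks only
EOF

# Preview, then apply (both need a running cluster, so not run here):
#   ceph orch apply -i spec.yml --dry-run
#   ceph orch apply -i spec.yml
```

Unlike `ceph orch daemon add`, a spec applied this way creates an OSD service, so the reconciliation behavior described in the notes applies to it.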

5.3.4 Check the created OSDs

root@cephadm-deploy:~/cephadm# ./cephadm shell ceph orch device ls

Inferring fsid 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

Using recent ceph image quay.io/ceph/ceph@sha256:bb6a71f7f481985f6d3b358e3b9ef64c6755b3db5aa53198e0aac38be5c8ae54

HOST PATH TYPE DEVICE ID SIZE AVAILABLE REJECT REASONS

ceph-node01 /dev/sdb hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

ceph-node01 /dev/sdc hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

ceph-node01 /dev/sdd hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

ceph-node02 /dev/sdb hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

ceph-node02 /dev/sdc hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

ceph-node02 /dev/sdd hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

ceph-node03 /dev/sdb hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

ceph-node03 /dev/sdc hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

ceph-node03 /dev/sdd hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

ceph-node04 /dev/sdb hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

ceph-node04 /dev/sdc hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

ceph-node04 /dev/sdd hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

cephadm-deploy /dev/sdb hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

cephadm-deploy /dev/sdc hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

cephadm-deploy /dev/sdd hdd 21.4G Insufficient space (<10 extents) on vgs, LVM detected, locked

5.3.5 Check the cluster status

root@cephadm-deploy:~/cephadm# cephadm shell ceph -s

Inferring fsid 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

Using recent ceph image quay.io/ceph/ceph@sha256:bb6a71f7f481985f6d3b358e3b9ef64c6755b3db5aa53198e0aac38be5c8ae54

cluster:

id: 0888a64c-57e6-11ec-ad21-fbe9db6e2e74

health: HEALTH_OK

services:

mon: 5 daemons, quorum cephadm-deploy,ceph-node01,ceph-node02,ceph-node03,ceph-node04 (age 37m)

mgr: cephadm-deploy.jgiulj(active, since 109m), standbys: ceph-node01.anwvfy

osd: 15 osds: 15 up (since 82s), 15 in (since 104s)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 82 MiB used, 300 GiB / 300 GiB avail

pgs: 1 active+clean
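The numbers in this status output can be cross-checked with a little arithmetic: 5 hosts with 3 data disks each give 15 OSDs, and the reported 300 GiB matches 15 disks of 20 GiB. The disk size is an assumption here (the `21.4G` in the device list is read as a 20 GiB disk displayed in decimal units), and the usable figure is only a rough estimate that ignores overhead and full-ratio limits.

```shell
hosts=5
disks_per_host=3
disk_gib=20      # assumption: "21.4G" is a 20 GiB disk shown in decimal GB
replicas=3       # osd_pool_default_size from the earlier health warning

osd_count=$((hosts * disks_per_host))
raw_gib=$((osd_count * disk_gib))
usable_gib=$((raw_gib / replicas))

printf 'OSDs: %d\nraw: %d GiB\nusable at size=%d: about %d GiB\n' \
    "$osd_count" "$raw_gib" "$replicas" "$usable_gib"
```

With three-way replication, roughly a third of the raw capacity is available for data, which is worth keeping in mind when sizing pools on a lab cluster like this one.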

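6 Removing services

6.1 Automatic removal

ceph orch rm <service-name>

6.2 Manual removal

ceph orch daemon rm <daemon name>... [--force]

6.3 Disable automatic management of daemons

cat mgr.yaml

service_type: mgr

unmanaged: true

placement:

  label: mgr

ceph orch apply -i mgr.yaml

After this change is applied in the service specification, cephadm no longer deploys any new daemons (even if the placement specification matches additional hosts).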