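k8s: Deploying a Kubernetes Cluster

1. Setting up the Kubernetes base environment

1.1 Kubernetes cluster planning

1.1.1 Deployment architecture

Omitted.

1.1.2 Server planning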

| Role | Server IP | Hostname | Spec | VIP |
|---|---|---|---|---|
| k8s-deploy | 192.168.174.200 | k8s-deploy | | |
| k8s-master-01 | 192.168.174.100 | k8s-master-01 | 16C 16G 200G | 192.168.174.20 |
| k8s-master-02 | 192.168.174.101 | k8s-master-02 | | 192.168.174.20 |
| k8s-master-03 | 192.168.174.102 | k8s-master-03 | | 192.168.174.20 |
| k8s-etcd-01 | 192.168.174.103 | k8s-etcd-01 | 8C 16G 200G/ssd | |
| k8s-etcd-02 | 192.168.174.104 | k8s-etcd-02 | | |
| k8s-etcd-03 | 192.168.174.105 | k8s-etcd-03 | | |
| k8s-node-01 | 192.168.174.106 | k8s-node-01 | 48C 256G 2T/ssd | |
| k8s-node-02 | 192.168.174.107 | k8s-node-02 | | |
| k8s-node-03 | 192.168.174.108 | k8s-node-03 | | |

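1.2 Preparing the base environment

1.2.1 Configure time synchronization

Configure time synchronization on every node in the cluster.

apt -y install chrony

systemctl enable chrony

1.2.2 Kernel parameter settings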

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# sysctl params required by setup, params persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply sysctl params without reboot

sudo sysctl --system

2. Installing ansible on the deploy node and preparing passwordless SSH login

2.1 Configure passwordless login on the ansible control node

root@k8s-deploy:~# apt -y install sshpass

root@k8s-deploy:~# ssh-keygen

root@k8s-deploy:~# sshpass -p 123456 ssh-copy-id 192.168.174.100 -o StrictHostKeyChecking=no

root@k8s-deploy:~# sshpass -p 123456 ssh-copy-id 192.168.174.101 -o StrictHostKeyChecking=no

root@k8s-deploy:~# sshpass -p 123456 ssh-copy-id 192.168.174.102 -o StrictHostKeyChecking=no

root@k8s-deploy:~# sshpass -p 123456 ssh-copy-id 192.168.174.103 -o StrictHostKeyChecking=no

root@k8s-deploy:~# sshpass -p 123456 ssh-copy-id 192.168.174.104 -o StrictHostKeyChecking=no

root@k8s-deploy:~# sshpass -p 123456 ssh-copy-id 192.168.174.105 -o StrictHostKeyChecking=no

root@k8s-deploy:~# sshpass -p 123456 ssh-copy-id 192.168.174.106 -o StrictHostKeyChecking=no

root@k8s-deploy:~# sshpass -p 123456 ssh-copy-id 192.168.174.107 -o StrictHostKeyChecking=no

root@k8s-deploy:~# sshpass -p 123456 ssh-copy-id 192.168.174.108 -o StrictHostKeyChecking=no

2.2 Install ansible

root@k8s-deploy:~# apt -y install python3-pip

root@k8s-deploy:~# pip3 install ansible -i https://mirrors.aliyun.com/pypi/simple/

root@k8s-deploy:~# ansible --version

ansible [core 2.11.6]

config file = None

configured module search path = ['/root/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']

ansible python module location = /usr/local/lib/python3.8/dist-packages/ansible

ansible collection location = /root/.ansible/collections:/usr/share/ansible/collections

executable location = /usr/local/bin/ansible

python version = 3.8.10 (default, Sep 28 2021, 16:10:42) [GCC 9.3.0]

jinja version = 2.10.1

libyaml = True

3. Orchestrating the k8s installation from the deploy node

3.1 Download the deployment tool on the deploy node

https://github.com/easzlab/kubeasz

root@k8s-deploy:/opt# export release=3.1.1

root@k8s-deploy:/opt# wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

root@k8s-deploy:/opt# chmod +x ./ezdown

3.2 Edit the ezdown file as needed

root@k8s-deploy:/opt# cat ezdown

DOCKER_VER=20.10.8

KUBEASZ_VER=3.1.1

K8S_BIN_VER=v1.21.0

EXT_BIN_VER=0.9.5

SYS_PKG_VER=0.4.1

HARBOR_VER=v2.1.3

REGISTRY_MIRROR=CN

calicoVer=v3.19.2

flannelVer=v0.13.0-amd64

dnsNodeCacheVer=1.17.0

corednsVer=1.8.4

dashboardVer=v2.3.1

dashboardMetricsScraperVer=v1.0.6

metricsVer=v0.5.0

pauseVer=3.5

nfsProvisionerVer=v4.0.1

export ciliumVer=v1.4.1

export kubeRouterVer=v0.3.1

export kubeOvnVer=v1.5.3

export promChartVer=12.10.6

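export traefikChartVer=9.12.3

3.3 Download the project source, binaries, and offline images

Once the download finishes, all files (kubeasz code, binaries, offline images) are organized under the directory /etc/kubeasz.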

root@k8s-deploy:/opt# ./ezdown -D

root@k8s-deploy:~# ls -l /etc/kubeasz/

total 108

-rw-rw-r-- 1 root root 6222 Sep 25 11:41 README.md

-rw-rw-r-- 1 root root 20304 Sep 25 11:41 ansible.cfg

drwxr-xr-x 3 root root 4096 Nov 10 17:12 bin

drwxrwxr-x 8 root root 4096 Sep 25 15:30 docs

drwxr-xr-x 2 root root 4096 Nov 10 17:18 down

drwxrwxr-x 2 root root 4096 Sep 25 15:30 example

-rwxrwxr-x 1 root root 24629 Sep 25 11:41 ezctl

-rwxrwxr-x 1 root root 15031 Sep 25 11:41 ezdown

drwxrwxr-x 10 root root 4096 Sep 25 15:30 manifests

drwxrwxr-x 2 root root 4096 Sep 25 15:30 pics

drwxrwxr-x 2 root root 4096 Sep 25 15:30 playbooks

drwxrwxr-x 22 root root 4096 Sep 25 15:30 roles

drwxrwxr-x 2 root root 4096 Sep 25 15:30 tools

root@k8s-deploy:/opt# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

easzlab/kubeasz 3.1.1 a9da15e59bfc 6 weeks ago 164MB

easzlab/kubeasz-ext-bin 0.9.5 60e52b5c3e9f 2 months ago 402MB

calico/node v3.19.2 7aa1277761b5 3 months ago 169MB

calico/pod2daemon-flexvol v3.19.2 6a1186da14d9 3 months ago 21.7MB

calico/cni v3.19.2 05bf027c9836 3 months ago 146MB

calico/kube-controllers v3.19.2 779aa7e4e93c 3 months ago 60.6MB

kubernetesui/dashboard v2.3.1 e1482a24335a 4 months ago 220MB

coredns/coredns 1.8.4 8d147537fb7d 5 months ago 47.6MB

easzlab/metrics-server v0.5.0 1c655933b9c5 5 months ago 63.5MB

easzlab/kubeasz-k8s-bin v1.21.0 a23b83929702 7 months ago 499MB

easzlab/pause-amd64 3.5 ed210e3e4a5b 7 months ago 683kB

easzlab/nfs-subdir-external-provisioner v4.0.1 686d3731280a 8 months ago 43.8MB

easzlab/k8s-dns-node-cache 1.17.0 3a187183b3a8 9 months ago 123MB

kubernetesui/metrics-scraper v1.0.6 48d79e554db6 12 months ago 34.5MB

easzlab/flannel v0.13.0-amd64 e708f4bb69e3 13 months ago 57.2MB

3.4 ezctl usage help

root@k8s-deploy:~# /etc/kubeasz/ezctl -help

Usage: ezctl COMMAND [args]

-------------------------------------------------------------------------------------

Cluster setups:

list to list all of the managed clusters

checkout <cluster> to switch default kubeconfig of the cluster

new <cluster> to start a new k8s deploy with name 'cluster'

setup <cluster> <step> to setup a cluster, also supporting a step-by-step way

start <cluster> to start all of the k8s services stopped by 'ezctl stop'

stop <cluster> to stop all of the k8s services temporarily

upgrade <cluster> to upgrade the k8s cluster

destroy <cluster> to destroy the k8s cluster

backup <cluster> to backup the cluster state (etcd snapshot)

restore <cluster> to restore the cluster state from backups

start-aio to quickly setup an all-in-one cluster with 'default' settings

Cluster ops:

add-etcd <cluster> <ip> to add a etcd-node to the etcd cluster

add-master <cluster> <ip> to add a master node to the k8s cluster

add-node <cluster> <ip> to add a work node to the k8s cluster

del-etcd <cluster> <ip> to delete a etcd-node from the etcd cluster

del-master <cluster> <ip> to delete a master node from the k8s cluster

del-node <cluster> <ip> to delete a work node from the k8s cluster

Extra operation:

kcfg-adm <cluster> <args> to manage client kubeconfig of the k8s cluster

Use "ezctl help <command>" for more information about a given command.

3.5 Create a cluster configuration instance

root@k8s-deploy:~# /etc/kubeasz/ezctl new k8s-01

2021-11-10 17:31:57 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-01

2021-11-10 17:31:57 DEBUG set version of common plugins

2021-11-10 17:31:57 DEBUG cluster k8s-01: files successfully created.

2021-11-10 17:31:57 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-01/hosts'

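2021-11-10 17:31:57 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-01/config.yml'

3.6 Edit the cluster configuration files

As the output suggests, configure '/etc/kubeasz/clusters/k8s-01/hosts' and '/etc/kubeasz/clusters/k8s-01/config.yml'. Edit the hosts file according to the node plan above, together with the other main cluster-level options it contains; settings for the remaining cluster components can be changed in config.yml.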
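The generated hosts file notes that the etcd group should have an odd number of members. The following is a minimal sketch of a pre-flight check for that, assuming the INI-style layout of the hosts file in this article; `count_group_hosts` is a hypothetical helper, not part of kubeasz or ansible.

```shell
#!/usr/bin/env bash
# Sketch: count active (uncommented) hosts in one group of an INI-style inventory.
# count_group_hosts is a hypothetical helper, not part of kubeasz or ansible.
count_group_hosts() {
  local file="$1" group="$2"
  awk -v g="[$group]" '
    /^\[/                { in_group = ($1 == g); next }  # a section header line
    in_group && /^[0-9]/ { n++ }                         # a line starting with an IP
    END                  { print n + 0 }
  ' "$file"
}

# Usage (path taken from this article):
#   n=$(count_group_hosts /etc/kubeasz/clusters/k8s-01/hosts etcd)
#   [ $((n % 2)) -eq 1 ] || echo "etcd should have an odd number of members" >&2
```

Commented-out entries such as `#192.168.174.102` are not counted, so the result reflects the hosts that the deployment will actually touch.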

3.6.1 Edit the cluster hosts file

root@k8s-deploy:~# cat /etc/kubeasz/clusters/k8s-01/hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)

[etcd]

192.168.174.103

192.168.174.104

192.168.174.105

# master node(s)

[kube_master]

192.168.174.100

192.168.174.101

#192.168.174.102

# work node(s)

[kube_node]

192.168.174.106

192.168.174.107

#192.168.174.108

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

#192.168.174.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside

[ex_lb]

192.168.174.121 LB_ROLE=backup EX_APISERVER_VIP=192.168.174.20 EX_APISERVER_PORT=8443

192.168.174.120 LB_ROLE=master EX_APISERVER_VIP=192.168.174.20 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

[chrony]

#192.168.174.1

[all:vars]

# --------- Main Variables ---------------

# Secure port for apiservers

SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd

CONTAINER_RUNTIME="docker"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico" # calico for private clouds, flannel for public clouds

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.100.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.200.0.0/16"

# NodePort Range

NODE_PORT_RANGE="30000-65000"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="wgs.local"

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/usr/local/bin"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz"

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s-01"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"

3.6.2 Edit the cluster service configuration file

root@k8s-deploy:~# cat /etc/kubeasz/clusters/k8s-01/config.yml

.......

SANDBOX_IMAGE: "192.168.174.120/baseimages/pause-amd64:3.5" # adjust as needed

INSECURE_REG: '["http://easzlab.io.local:5000","http://192.168.174.20"]' # add the harbor address

.......

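# maximum number of pods per node; adjust as needed

MAX_PODS: 300

CALICO_IPV4POOL_IPIP: "Always" # allow traffic across subnets

# automatic coredns installation

dns_install: "no"

ENABLE_LOCAL_DNS_CACHE: false # true is recommended in production; requires the registry.k8s.io/dns/k8s-dns-node-cache:xxx image

metricsserver_install: "no"

dashboard_install: "no"

4. Deploying the k8s cluster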

4.1 View the deployment help

root@k8s-deploy:~# /etc/kubeasz/ezctl help setup

Usage: ezctl setup <cluster> <step>

available steps:

01 prepare to prepare CA/certs & kubeconfig & other system settings

02 etcd to setup the etcd cluster

03 container-runtime to setup the container runtime(docker or containerd)

04 kube-master to setup the master nodes

05 kube-node to setup the worker nodes

06 network to setup the network plugin

07 cluster-addon to setup other useful plugins

90 all to run 01~07 all at once

10 ex-lb to install external loadbalance for accessing k8s from outside

11 harbor to install a new harbor server or to integrate with an existed one

examples: ./ezctl setup test-k8s 01 (or ./ezctl setup test-k8s prepare)

./ezctl setup test-k8s 02 (or ./ezctl setup test-k8s etcd)

./ezctl setup test-k8s all

./ezctl setup test-k8s 04 -t restart_master

4.2 Initialize the cluster environment

Playbook path: /etc/kubeasz/playbooks/01.prepare.yml

root@k8s-deploy:~# /etc/kubeasz/ezctl setup k8s-01 01

4.3 Deploy the etcd cluster

4.3.1 Deploy the etcd cluster

root@k8s-deploy:~# /etc/kubeasz/ezctl setup k8s-01 02

4.3.2 Verify the etcd cluster status

root@k8s-etcd-01:~# export NODE_IPS="192.168.174.103 192.168.174.104 192.168.174.105"

root@k8s-etcd-01:~# for ip in ${NODE_IPS}; do ETCDCTL_API=3 etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done

https://192.168.174.103:2379 is healthy: successfully committed proposal: took = 7.561336ms

https://192.168.174.104:2379 is healthy: successfully committed proposal: took = 10.208395ms

https://192.168.174.105:2379 is healthy: successfully committed proposal: took = 8.879535ms

4.4 Install docker on the cluster

Install docker manually and use the self-hosted harbor registry.

4.4.1 Copy daemon.json to each node

root@harbor-01:~# cat /etc/docker/daemon.json

{

"insecure-registries" : ["192.168.174.120","192.168.174.121"],

"registry-mirrors":["https://7590njbk.mirror.aliyuncs.com","https://reg-mirror.qiniu.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"max-concurrent-downloads": 10,

"live-restore": true,

"log-driver": "json-file",

"log-level": "warn",

"log-opts": {

"max-size": "50m",

"max-file": "1"

},

"storage-driver": "overlay2"

}

root@harbor-01:~# scp /etc/docker/daemon.json 192.168.174.101:/etc/docker

root@harbor-01:~# scp /etc/docker/daemon.json 192.168.174.102:/etc/docker

root@harbor-01:~# scp /etc/docker/daemon.json 192.168.174.100:/etc/docker

root@harbor-01:~# scp /etc/docker/daemon.json 192.168.174.106:/etc/docker

root@harbor-01:~# scp /etc/docker/daemon.json 192.168.174.107:/etc/docker

root@harbor-01:~# scp /etc/docker/daemon.json 192.168.174.108:/etc/docker

4.4.2 Restart docker

Restart docker on every node:

systemctl restart docker
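Restarting each node by hand works, but the same step can be driven from a single host. The following is a minimal sketch, assuming root SSH key login to the nodes (as set up in section 2.1) and the master and worker IPs from the server plan; `restart_docker_all` and the `RUN` switch are hypothetical names introduced here. By default it only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch: restart docker on every master and worker node from one host.
# Assumes root SSH key login and the node IPs from the server plan above.
NODES="192.168.174.100 192.168.174.101 192.168.174.102 192.168.174.106 192.168.174.107 192.168.174.108"

# Dry run by default: print one command per node. Set RUN=1 to execute over SSH.
restart_docker_all() {
  local ip
  for ip in $NODES; do
    if [ "${RUN:-0}" = "1" ]; then
      ssh "root@${ip}" "systemctl restart docker"
    else
      echo "ssh root@${ip} systemctl restart docker"
    fi
  done
}

restart_docker_all
```

Reviewing the dry-run output first makes it easy to confirm the node list before anything is restarted.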

4.4.3 Create the docker symlink

root@k8s-master-01:~# ln -sv /usr/bin/docker /usr/local/bin/docker

root@k8s-master-02:~# ln -sv /usr/bin/docker /usr/local/bin/docker

root@k8s-master-03:~# ln -sv /usr/bin/docker /usr/local/bin/docker

root@k8s-node-01:~# ln -sv /usr/bin/docker /usr/local/bin/docker

root@k8s-node-02:~# ln -sv /usr/bin/docker /usr/local/bin/docker

root@k8s-node-03:~# ln -sv /usr/bin/docker /usr/local/bin/docker

4.5 Deploy the master nodes

4.5.1 Deploy the master nodes

root@k8s-deploy:~# /etc/kubeasz/ezctl setup k8s-01 04

4.5.2 Verify the master nodes

root@k8s-master-01:~# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.174.100 Ready,SchedulingDisabled master 15m v1.21.0

192.168.174.101 Ready,SchedulingDisabled master 15m v1.21.0

4.5.3 Check process status

systemctl status kube-apiserver

systemctl status kube-controller-manager

systemctl status kube-scheduler

4.6 Deploy the worker nodes

4.6.1 Deploy the worker nodes

root@k8s-deploy:~# /etc/kubeasz/ezctl setup k8s-01 05

4.6.2 View node information

root@k8s-master-01:~# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.174.100 Ready,SchedulingDisabled master 72m v1.21.0

192.168.174.101 Ready,SchedulingDisabled master 72m v1.21.0

192.168.174.106 Ready node 69m v1.21.0

192.168.174.107 Ready node 69m v1.21.0

4.7 Deploy the calico component

4.7.1 Change the calico component images

4.7.1.1 Find the default calico images

root@k8s-deploy:~# grep image /etc/kubeasz/roles/calico/templates/calico-v3.19.yaml.j2

image: docker.io/calico/cni:v3.19.2

image: docker.io/calico/pod2daemon-flexvol:v3.19.2

image: docker.io/calico/node:v3.19.2

image: docker.io/calico/kube-controllers:v3.19.2

4.7.1.2 Re-tag the images

root@k8s-harbor-01:~# docker pull docker.io/calico/cni:v3.19.2

root@k8s-harbor-01:~# docker tag docker.io/calico/cni:v3.19.2 192.168.174.120/baseimages/calico-cni:v3.19.2

root@k8s-harbor-01:~# docker pull docker.io/calico/pod2daemon-flexvol:v3.19.2

root@k8s-harbor-01:~# docker tag docker.io/calico/pod2daemon-flexvol:v3.19.2 192.168.174.120/baseimages/calico-pod2daemon-flexvol:v3.19.2

root@k8s-harbor-01:~# docker pull docker.io/calico/node:v3.19.2

root@k8s-harbor-01:~# docker tag docker.io/calico/node:v3.19.2 192.168.174.120/baseimages/calico-node:v3.19.2

root@k8s-harbor-01:~# docker pull docker.io/calico/kube-controllers:v3.19.2

root@k8s-harbor-01:~# docker tag docker.io/calico/kube-controllers:v3.19.2 192.168.174.120/baseimages/calico-kube-controllers:v3.19.2

4.7.1.3 Push the images to harbor

root@k8s-harbor-01:~# docker login 192.168.174.120

root@k8s-harbor-01:~# docker push 192.168.174.120/baseimages/calico-cni:v3.19.2

root@k8s-harbor-01:~# docker push 192.168.174.120/baseimages/calico-pod2daemon-flexvol:v3.19.2

root@k8s-harbor-01:~# docker push 192.168.174.120/baseimages/calico-node:v3.19.2

root@k8s-harbor-01:~# docker push 192.168.174.120/baseimages/calico-kube-controllers:v3.19.2

4.7.1.4 Replace the images

root@k8s-deploy:~# sed -i 's@docker.io/calico/cni:v3.19.2@192.168.174.120/baseimages/calico-cni:v3.19.2@g' /etc/kubeasz/roles/calico/templates/calico-v3.19.yaml.j2

root@k8s-deploy:~# sed -i 's@docker.io/calico/pod2daemon-flexvol:v3.19.2@192.168.174.120/baseimages/calico-pod2daemon-flexvol:v3.19.2@g' /etc/kubeasz/roles/calico/templates/calico-v3.19.yaml.j2

root@k8s-deploy:~# sed -i 's@docker.io/calico/node:v3.19.2@192.168.174.120/baseimages/calico-node:v3.19.2@g' /etc/kubeasz/roles/calico/templates/calico-v3.19.yaml.j2

root@k8s-deploy:~# sed -i 's@docker.io/calico/kube-controllers:v3.19.2@192.168.174.120/baseimages/calico-kube-controllers:v3.19.2@g' /etc/kubeasz/roles/calico/templates/calico-v3.19.yaml.j2

4.7.1.5 Verify the images

root@k8s-deploy:~# grep image /etc/kubeasz/roles/calico/templates/calico-v3.19.yaml.j2

image: 192.168.174.120/baseimages/calico-cni:v3.19.2

image: 192.168.174.120/baseimages/calico-pod2daemon-flexvol:v3.19.2

image: 192.168.174.120/baseimages/calico-node:v3.19.2

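image: 192.168.174.120/baseimages/calico-kube-controllers:v3.19.2

4.7.2 Notes on deploying calico

Note: the pod address range used by the calico template must match the CLUSTER_CIDR set in the hosts file.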

root@k8s-deploy:~# grep CLUSTER_CIDR /etc/kubeasz/roles/calico/templates/calico-v3.19.yaml.j2

value: "{{ CLUSTER_CIDR }}"

root@k8s-deploy:~# grep CLUSTER_CIDR /etc/kubeasz/clusters/k8s-01/hosts

CLUSTER_CIDR="10.200.0.0/16"

4.7.3 Deploy the calico component

root@k8s-deploy:~# /etc/kubeasz/ezctl setup k8s-01 06

4.7.4 Verify the calico network

root@k8s-master-01:~# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-6f8794d6c4-4pql8 1/1 Running 0 2m45s

kube-system calico-node-c9qnk 1/1 Running 0 2m45s

kube-system calico-node-dnspm 1/1 Running 0 2m46s

kube-system calico-node-jpr8q 1/1 Running 0 2m45s

kube-system calico-node-kljfr 1/1 Running 1 2m45s

4.7.5 Check calico node status

root@k8s-master-01:~# calicoctl node status

Calico process is running.

IPv4 BGP status

+-----------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+-----------------+-------------------+-------+----------+-------------+

| 192.168.174.101 | node-to-node mesh | up | 02:44:19 | Established |

| 192.168.174.106 | node-to-node mesh | up | 02:44:15 | Established |

| 192.168.174.107 | node-to-node mesh | up | 02:44:15 | Established |

+-----------------+-------------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.

4.7.6 View network interfaces and routes

root@k8s-master-01:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:0e:63:73 brd ff:ff:ff:ff:ff:ff

inet 192.168.174.100/24 brd 192.168.174.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe0e:6373/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:2f:d0:b8:f9 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

link/ether 8e:79:49:e7:47:c7 brd ff:ff:ff:ff:ff:ff

inet 10.100.0.1/32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

7: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 10.200.151.128/32 scope global tunl0

valid_lft forever preferred_lft forever

root@k8s-master-01:~# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.174.2 0.0.0.0 UG 0 0 0 ens33

10.200.44.192 192.168.174.107 255.255.255.192 UG 0 0 0 tunl0

10.200.95.0 192.168.174.101 255.255.255.192 UG 0 0 0 tunl0

10.200.151.128 0.0.0.0 255.255.255.192 U 0 0 0 *

10.200.154.192 192.168.174.106 255.255.255.192 UG 0 0 0 tunl0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.174.0 0.0.0.0 255.255.255.0 U 0 0 0 ens33

4.7.7 Test cross-node pod communication

4.7.7.1 Create test pods

root@k8s-node-01:~# kubectl run net-test1 --image=192.168.174.120/baseimages/alpine:latest sleep 300000

pod/net-test1 created

root@k8s-node-01:~# kubectl run net-test2 --image=192.168.174.120/baseimages/alpine:latest sleep 300000

pod/net-test2 created

root@k8s-node-01:~# kubectl run net-test3 --image=192.168.174.120/baseimages/alpine:latest sleep 300000

pod/net-test3 created

4.7.7.2 View the test pods

root@k8s-node-01:~# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default net-test1 1/1 Running 0 3m32s 10.200.154.193 192.168.174.106 <none> <none>

default net-test2 1/1 Running 0 3m28s 10.200.154.194 192.168.174.106 <none> <none>

default net-test3 1/1 Running 0 2s 10.200.44.193 192.168.174.107 <none> <none>

kube-system calico-kube-controllers-6f8794d6c4-4pql8 1/1 Running 0 25m 192.168.174.107 192.168.174.107 <none> <none>

kube-system calico-node-c9qnk 1/1 Running 0 25m 192.168.174.100 192.168.174.100 <none> <none>

kube-system calico-node-dnspm 1/1 Running 0 25m 192.168.174.107 192.168.174.107 <none> <none>

kube-system calico-node-jpr8q 1/1 Running 0 25m 192.168.174.101 192.168.174.101 <none> <none>

kube-system calico-node-kljfr

4.7.7.3 Log in to a test pod

root@k8s-node-01:~# kubectl exec -it net-test2 -- sh

/ #

4.7.7.4 Test cross-node pod communication

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

4: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 56:50:9c:c1:75:06 brd ff:ff:ff:ff:ff:ff

inet 10.200.154.194/32 brd 10.200.154.194 scope global eth0

valid_lft forever preferred_lft forever

/ # ping 10.200.44.193

PING 10.200.44.193 (10.200.44.193): 56 data bytes

64 bytes from 10.200.44.193: seq=0 ttl=62 time=0.614 ms

64 bytes from 10.200.44.193: seq=1 ttl=62 time=0.245 ms

64 bytes from 10.200.44.193: seq=2 ttl=62 time=0.446 ms

^C

--- 10.200.44.193 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.245/0.435/0.614 ms

/ # ping 223.6.6.6

PING 223.6.6.6 (223.6.6.6): 56 data bytes

64 bytes from 223.6.6.6: seq=0 ttl=127 time=4.147 ms

^C

--- 223.6.6.6 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 4.147/4.147/4.147 ms

4.8 Deploy the coredns component

https://github.com/coredns/coredns/

4.8.1 Prepare coredns.yaml

https://github.com/kubernetes/kubernetes/blob/master/cluster/addons/dns/coredns/coredns.yaml.base

Replace the following placeholders from coredns.yaml.base:

- __DNS__DOMAIN__: wgs.local

- __DNS__MEMORY__LIMIT__: 256M # adjust as needed

- __DNS__SERVER__: 10.100.0.2

root@k8s-master-01:~# sed -i 's@__DNS__DOMAIN__@wgs.local@g' coredns.yaml

root@k8s-master-01:~# sed -i 's@__DNS__MEMORY__LIMIT__@256M@g' coredns.yaml

root@k8s-master-01:~# sed -i 's@__DNS__SERVER__@10.100.0.2@g' coredns.yaml
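After the substitutions it can be useful to confirm that no template placeholder was missed before applying the manifest. The following is a minimal sketch, assuming the placeholders all follow the `__DNS__...__` pattern listed above; `check_placeholders` is a hypothetical helper, not part of kubectl or CoreDNS.

```shell
#!/usr/bin/env bash
# Sketch: fail if any __DNS__*__ template placeholder is still present in a file.
# check_placeholders is a hypothetical helper, not part of kubectl or CoreDNS.
check_placeholders() {
  local file="$1"
  # grep -n prints each leftover placeholder with its line number.
  if grep -n '__DNS__[A-Z_]*__' "$file"; then
    echo "unreplaced placeholders found in $file" >&2
    return 1
  fi
  echo "no placeholders left in $file"
}

# Usage: check_placeholders coredns.yaml
```

A non-zero return value means at least one placeholder survived, and the printed line numbers show where.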

root@k8s-master-01:~# cat coredns.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: coredns

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:coredns

rules:

- apiGroups:

- ""

resources:

- endpoints

- services

- pods

- namespaces

verbs:

- list

- watch

- apiGroups:

- discovery.k8s.io

resources:

- endpointslices

verbs:

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:coredns

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:coredns

subjects:

- kind: ServiceAccount

name: coredns

namespace: kube-system

---

apiVersion: v1

kind: ConfigMap

metadata:

name: coredns

namespace: kube-system

data:

Corefile: |

.:53 {

errors

health {

lameduck 5s

}

bind 0.0.0.0

ready

kubernetes wgs.local in-addr.arpa ip6.arpa {

fallthrough in-addr.arpa ip6.arpa

}

prometheus :9153

forward . /etc/resolv.conf { # DNS forwarding

max_concurrent 1000

}

cache 30

loop

reload

loadbalance

}

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: coredns

namespace: kube-system

labels:

k8s-app: kube-dns

kubernetes.io/name: "CoreDNS"

spec:

# replicas: not specified here:

# 1. Default is 1.

# 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

selector:

matchLabels:

k8s-app: kube-dns

template:

metadata:

labels:

k8s-app: kube-dns

spec:

priorityClassName: system-cluster-critical

serviceAccountName: coredns

tolerations:

- key: "CriticalAddonsOnly"

operator: "Exists"

nodeSelector:

kubernetes.io/os: linux

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchExpressions:

- key: k8s-app

operator: In

values: ["kube-dns"]

topologyKey: kubernetes.io/hostname

containers:

- name: coredns

image: 192.168.174.120/baseimages/coredns:v1.8.3

imagePullPolicy: IfNotPresent

resources:

limits:

memory: 170Mi

requests:

cpu: 100m

memory: 70Mi

args: [ "-conf", "/etc/coredns/Corefile" ]

volumeMounts:

- name: config-volume

mountPath: /etc/coredns

readOnly: true

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

- containerPort: 9153

name: metrics

protocol: TCP

securityContext:

allowPrivilegeEscalation: false

capabilities:

add:

- NET_BIND_SERVICE

drop:

- all

readOnlyRootFilesystem: true

livenessProbe:

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

readinessProbe:

httpGet:

path: /ready

port: 8181

scheme: HTTP

dnsPolicy: Default

volumes:

- name: config-volume

configMap:

name: coredns

items:

- key: Corefile

path: Corefile

---

apiVersion: v1

kind: Service

metadata:

name: kube-dns

namespace: kube-system

annotations:

prometheus.io/port: "9153"

prometheus.io/scrape: "true"

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

kubernetes.io/name: "CoreDNS"

spec:

type: NodePort # expose the port on the nodes

selector:

k8s-app: kube-dns

clusterIP: 10.100.0.2

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

protocol: TCP

- name: metrics

port: 9153

protocol: TCP

targetPort: 9153

nodePort: 30009 # port on the host

4.8.2 Main configuration parameters

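errors: writes error logs to stdout.

health: reports CoreDNS health at http://localhost:8080/health.

cache: enables the CoreDNS cache.

reload: reloads the configuration file automatically; a change to the ConfigMap takes effect about two minutes later.

loadbalance: when a name has multiple records, they are answered in round-robin order.

kubernetes: CoreDNS resolves names against Kubernetes Services for the specified cluster domain.

forward: queries for names outside the Kubernetes cluster domain are forwarded to the specified servers (/etc/resolv.conf).

prometheus: exposes CoreDNS metrics for Prometheus to collect at http://<coredns svc>:9153/metrics.

ready: once CoreDNS has started, the /ready URL path returns status code 200; until then it returns an error.

4.8.3 Deploy coredns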

root@k8s-master-01:~# kubectl apply -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

4.8.4 View pod information

root@k8s-master-01:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-6f8794d6c4-6hjzq 1/1 Running 2 3h20m

kube-system calico-node-ln496 1/1 Running 2 3h20m

kube-system calico-node-p4lq4 1/1 Running 0 3h20m

kube-system calico-node-s7n4q 1/1 Running 0 3h20m

kube-system calico-node-trpds 1/1 Running 2 3h20m

kube-system coredns-8568fcb45d-nj7tg 1/1 Running 0 4m26s

4.8.5 Test name resolution

4.8.5.1 Create a test pod

root@k8s-master-01:~# kubectl run net-test1 --image=192.168.174.120/baseimages/alpine:latest sleep 300000

pod/net-test1 created

4.8.5.2 Log in to the test pod

root@k8s-master-01:~# kubectl exec -it net-test1 -- sh

4.8.5.3 Resolve a domain name

/ # ping -c 2 www.baidu.com

PING www.baidu.com (110.242.68.3): 56 data bytes

64 bytes from 110.242.68.3: seq=0 ttl=127 time=9.480 ms

64 bytes from 110.242.68.3: seq=1 ttl=127 time=9.455 ms

--- www.baidu.com ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 9.455/9.467/9.480 ms

4.8.6 View coredns metrics

~# curl http://192.168.174.100:30009/metrics

# HELP coredns_build_info A metric with a constant '1' value labeled by version, revision, and goversion from which CoreDNS was built.

# TYPE coredns_build_info gauge

coredns_build_info{goversion="go1.18.2",revision="45b0a11",version="1.9.3"} 1

# HELP coredns_cache_entries The number of elements in the cache.

# TYPE coredns_cache_entries gauge

coredns_cache_entries{server="dns://:53",type="denial",zones="."} 0

coredns_cache_entries{server="dns://:53",type="success",zones="."} 1

# HELP coredns_cache_hits_total The count of cache hits.

# TYPE coredns_cache_hits_total counter

coredns_cache_hits_total{server="dns://:53",type="success",zones="."} 1593

# HELP coredns_cache_misses_total The count of cache misses. Deprecated, derive misses from cache hits/requests counters.

# TYPE coredns_cache_misses_total counter

coredns_cache_misses_total{server="dns://:53",zones="."} 1642

# HELP coredns_cache_requests_total The count of cache requests.

# TYPE coredns_cache_requests_total counter

coredns_cache_requests_total{server="dns://:53",zones="."} 3235

# HELP coredns_dns_request_duration_seconds Histogram of the time (in seconds) each request took per zone.

# TYPE coredns_dns_request_duration_seconds histogram

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.00025"} 1307

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.0005"} 1528

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.001"} 1586

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.002"} 1593

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.004"} 1593

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.008"} 1598

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.016"} 1615

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.032"} 1618

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.064"} 1618

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.128"} 1618

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.256"} 1618

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="0.512"} 1618

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="1.024"} 1618

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="2.048"} 1618

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="4.096"} 1618

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="8.192"} 3016

coredns_dns_request_duration_seconds_bucket{server="dns://:53",zone=".",le="+Inf"} 3235

coredns_dns_request_duration_seconds_sum{server="dns://:53",zone="."} 10839.5530131

coredns_dns_request_duration_seconds_count{server="dns://:53",zone="."} 3235

# HELP coredns_dns_request_size_bytes Size of the EDNS0 UDP buffer in bytes (64K for TCP) per zone and protocol.

# TYPE coredns_dns_request_size_bytes histogram

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="0"} 0

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="100"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="200"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="300"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="400"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="511"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="1023"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="2047"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="4095"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="8291"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="16000"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="32000"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="48000"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="64000"} 1617

coredns_dns_request_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="+Inf"} 1617

coredns_dns_request_size_bytes_sum{proto="tcp",server="dns://:53",zone="."} 109655

coredns_dns_request_size_bytes_count{proto="tcp",server="dns://:53",zone="."} 1617

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="0"} 0

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="100"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="200"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="300"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="400"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="511"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="1023"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="2047"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="4095"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="8291"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="16000"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="32000"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="48000"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="64000"} 1618

coredns_dns_request_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="+Inf"} 1618

coredns_dns_request_size_bytes_sum{proto="udp",server="dns://:53",zone="."} 27546

coredns_dns_request_size_bytes_count{proto="udp",server="dns://:53",zone="."} 1618

# HELP coredns_dns_requests_total Counter of DNS requests made per zone, protocol and family.

# TYPE coredns_dns_requests_total counter

coredns_dns_requests_total{family="1",proto="tcp",server="dns://:53",type="other",zone="."} 1617

coredns_dns_requests_total{family="1",proto="udp",server="dns://:53",type="NS",zone="."} 1617

coredns_dns_requests_total{family="1",proto="udp",server="dns://:53",type="other",zone="."} 1

# HELP coredns_dns_response_size_bytes Size of the returned response in bytes.

# TYPE coredns_dns_response_size_bytes histogram

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="0"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="100"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="200"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="300"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="400"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="511"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="1023"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="2047"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="4095"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="8291"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="16000"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="32000"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="48000"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="64000"} 1617

coredns_dns_response_size_bytes_bucket{proto="tcp",server="dns://:53",zone=".",le="+Inf"} 1617

coredns_dns_response_size_bytes_sum{proto="tcp",server="dns://:53",zone="."} 0

coredns_dns_response_size_bytes_count{proto="tcp",server="dns://:53",zone="."} 1617

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="0"} 0

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="100"} 1

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="200"} 1

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="300"} 1

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="400"} 1

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="511"} 1618

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="1023"} 1618

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="2047"} 1618

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="4095"} 1618

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="8291"} 1618

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="16000"} 1618

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="32000"} 1618

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="48000"} 1618

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="64000"} 1618

coredns_dns_response_size_bytes_bucket{proto="udp",server="dns://:53",zone=".",le="+Inf"} 1618

coredns_dns_response_size_bytes_sum{proto="udp",server="dns://:53",zone="."} 679197

coredns_dns_response_size_bytes_count{proto="udp",server="dns://:53",zone="."} 1618

# HELP coredns_dns_responses_total Counter of response status codes.

# TYPE coredns_dns_responses_total counter

coredns_dns_responses_total{plugin="",rcode="SERVFAIL",server="dns://:53",zone="."} 1617

coredns_dns_responses_total{plugin="loadbalance",rcode="NOERROR",server="dns://:53",zone="."} 1617

coredns_dns_responses_total{plugin="loadbalance",rcode="NXDOMAIN",server="dns://:53",zone="."} 1

# HELP coredns_forward_conn_cache_misses_total Counter of connection cache misses per upstream and protocol.

# TYPE coredns_forward_conn_cache_misses_total counter

coredns_forward_conn_cache_misses_total{proto="tcp",to="202.106.0.20:53"} 4474

coredns_forward_conn_cache_misses_total{proto="udp",to="202.106.0.20:53"} 25

# HELP coredns_forward_healthcheck_broken_total Counter of the number of complete failures of the healthchecks.

# TYPE coredns_forward_healthcheck_broken_total counter

coredns_forward_healthcheck_broken_total 0

# HELP coredns_forward_healthcheck_failures_total Counter of the number of failed healthchecks.

# TYPE coredns_forward_healthcheck_failures_total counter

coredns_forward_healthcheck_failures_total{to="202.106.0.20:53"} 20

# HELP coredns_forward_max_concurrent_rejects_total Counter of the number of queries rejected because the concurrent queries were at maximum.

# TYPE coredns_forward_max_concurrent_rejects_total counter

coredns_forward_max_concurrent_rejects_total 0

# HELP coredns_forward_request_duration_seconds Histogram of the time each request took.

# TYPE coredns_forward_request_duration_seconds histogram

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.00025"} 0

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.0005"} 0

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.001"} 0

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.002"} 0

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.004"} 0

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.008"} 5

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.016"} 21

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.032"} 24

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.064"} 24

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.128"} 24

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.256"} 24

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="0.512"} 24

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="1.024"} 24

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="2.048"} 24

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="4.096"} 24

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="8.192"} 24

coredns_forward_request_duration_seconds_bucket{rcode="NOERROR",to="202.106.0.20:53",le="+Inf"} 24

coredns_forward_request_duration_seconds_sum{rcode="NOERROR",to="202.106.0.20:53"} 0.246486645

coredns_forward_request_duration_seconds_count{rcode="NOERROR",to="202.106.0.20:53"} 24

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.00025"} 0

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.0005"} 0

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.001"} 0

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.002"} 0

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.004"} 0

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.008"} 0

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.016"} 1

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.032"} 1

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.064"} 1

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.128"} 1

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.256"} 1

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="0.512"} 1

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="1.024"} 1

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="2.048"} 1

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="4.096"} 1

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="8.192"} 1

coredns_forward_request_duration_seconds_bucket{rcode="NXDOMAIN",to="202.106.0.20:53",le="+Inf"} 1

coredns_forward_request_duration_seconds_sum{rcode="NXDOMAIN",to="202.106.0.20:53"} 0.012026914

coredns_forward_request_duration_seconds_count{rcode="NXDOMAIN",to="202.106.0.20:53"} 1

# HELP coredns_forward_requests_total Counter of requests made per upstream.

# TYPE coredns_forward_requests_total counter

coredns_forward_requests_total{to="202.106.0.20:53"} 25

# HELP coredns_forward_responses_total Counter of responses received per upstream.

# TYPE coredns_forward_responses_total counter

coredns_forward_responses_total{rcode="NOERROR",to="202.106.0.20:53"} 24

coredns_forward_responses_total{rcode="NXDOMAIN",to="202.106.0.20:53"} 1

# HELP coredns_health_request_duration_seconds Histogram of the time (in seconds) each request took.

# TYPE coredns_health_request_duration_seconds histogram

coredns_health_request_duration_seconds_bucket{le="0.00025"} 0

coredns_health_request_duration_seconds_bucket{le="0.0025"} 1363

coredns_health_request_duration_seconds_bucket{le="0.025"} 1375

coredns_health_request_duration_seconds_bucket{le="0.25"} 1375

coredns_health_request_duration_seconds_bucket{le="2.5"} 1375

coredns_health_request_duration_seconds_bucket{le="+Inf"} 1375

coredns_health_request_duration_seconds_sum 0.680876432

coredns_health_request_duration_seconds_count 1375

# HELP coredns_health_request_failures_total The number of times the health check failed.

# TYPE coredns_health_request_failures_total counter

coredns_health_request_failures_total 0

# HELP coredns_hosts_reload_timestamp_seconds The timestamp of the last reload of hosts file.

# TYPE coredns_hosts_reload_timestamp_seconds gauge

coredns_hosts_reload_timestamp_seconds 0

# HELP coredns_kubernetes_dns_programming_duration_seconds Histogram of the time (in seconds) it took to program a dns instance.

# TYPE coredns_kubernetes_dns_programming_duration_seconds histogram

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="0.001"} 0

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="0.002"} 0

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="0.004"} 0

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="0.008"} 0

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="0.016"} 0

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="0.032"} 0

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="0.064"} 0

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="0.128"} 0

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="0.256"} 0

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="0.512"} 9

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="1.024"} 22

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="2.048"} 22

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="4.096"} 22

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="8.192"} 22

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="16.384"} 22

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="32.768"} 22

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="65.536"} 22

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="131.072"} 22

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="262.144"} 22

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="524.288"} 22

coredns_kubernetes_dns_programming_duration_seconds_bucket{service_kind="headless_with_selector",le="+Inf"} 22

coredns_kubernetes_dns_programming_duration_seconds_sum{service_kind="headless_with_selector"} 13.512582529000001

coredns_kubernetes_dns_programming_duration_seconds_count{service_kind="headless_with_selector"} 22

# HELP coredns_local_localhost_requests_total Counter of localhost.<domain> requests.

# TYPE coredns_local_localhost_requests_total counter

coredns_local_localhost_requests_total 0

# HELP coredns_panics_total A metrics that counts the number of panics.

# TYPE coredns_panics_total counter

coredns_panics_total 0

# HELP coredns_plugin_enabled A metric that indicates whether a plugin is enabled on per server and zone basis.

# TYPE coredns_plugin_enabled gauge

coredns_plugin_enabled{name="cache",server="dns://:53",zone="."} 1

coredns_plugin_enabled{name="errors",server="dns://:53",zone="."} 1

coredns_plugin_enabled{name="forward",server="dns://:53",zone="."} 1

coredns_plugin_enabled{name="kubernetes",server="dns://:53",zone="."} 1

coredns_plugin_enabled{name="loadbalance",server="dns://:53",zone="."} 1

coredns_plugin_enabled{name="loop",server="dns://:53",zone="."} 1

coredns_plugin_enabled{name="prometheus",server="dns://:53",zone="."} 1

# HELP coredns_reload_failed_total Counter of the number of failed reload attempts.

# TYPE coredns_reload_failed_total counter

coredns_reload_failed_total 0

# HELP go_gc_duration_seconds A summary of the pause duration of garbage collection cycles.

# TYPE go_gc_duration_seconds summary

go_gc_duration_seconds{quantile="0"} 1.5259e-05

go_gc_duration_seconds{quantile="0.25"} 5.7333e-05

go_gc_duration_seconds{quantile="0.5"} 9.9297e-05

go_gc_duration_seconds{quantile="0.75"} 0.000146518

go_gc_duration_seconds{quantile="1"} 0.001997025

go_gc_duration_seconds_sum 0.005687375

go_gc_duration_seconds_count 17

# HELP go_goroutines Number of goroutines that currently exist.

# TYPE go_goroutines gauge

go_goroutines 41

# HELP go_info Information about the Go environment.

# TYPE go_info gauge

go_info{version="go1.18.2"} 1

# HELP go_memstats_alloc_bytes Number of bytes allocated and still in use.

# TYPE go_memstats_alloc_bytes gauge

go_memstats_alloc_bytes 5.909704e+06

# HELP go_memstats_alloc_bytes_total Total number of bytes allocated, even if freed.

# TYPE go_memstats_alloc_bytes_total counter

go_memstats_alloc_bytes_total 5.7328168e+07

# HELP go_memstats_buck_hash_sys_bytes Number of bytes used by the profiling bucket hash table.

# TYPE go_memstats_buck_hash_sys_bytes gauge

go_memstats_buck_hash_sys_bytes 1.466872e+06

# HELP go_memstats_frees_total Total number of frees.

# TYPE go_memstats_frees_total counter

go_memstats_frees_total 610116

# HELP go_memstats_gc_sys_bytes Number of bytes used for garbage collection system metadata.

# TYPE go_memstats_gc_sys_bytes gauge

go_memstats_gc_sys_bytes 5.423216e+06

# HELP go_memstats_heap_alloc_bytes Number of heap bytes allocated and still in use.

# TYPE go_memstats_heap_alloc_bytes gauge

go_memstats_heap_alloc_bytes 5.909704e+06

# HELP go_memstats_heap_idle_bytes Number of heap bytes waiting to be used.

# TYPE go_memstats_heap_idle_bytes gauge

go_memstats_heap_idle_bytes 7.733248e+06

# HELP go_memstats_heap_inuse_bytes Number of heap bytes that are in use.

# TYPE go_memstats_heap_inuse_bytes gauge

go_memstats_heap_inuse_bytes 7.995392e+06

# HELP go_memstats_heap_objects Number of allocated objects.

# TYPE go_memstats_heap_objects gauge

go_memstats_heap_objects 35408

# HELP go_memstats_heap_released_bytes Number of heap bytes released to OS.

# TYPE go_memstats_heap_released_bytes gauge

go_memstats_heap_released_bytes 4.3008e+06

# HELP go_memstats_heap_sys_bytes Number of heap bytes obtained from system.

# TYPE go_memstats_heap_sys_bytes gauge

go_memstats_heap_sys_bytes 1.572864e+07

# HELP go_memstats_last_gc_time_seconds Number of seconds since 1970 of last garbage collection.

# TYPE go_memstats_last_gc_time_seconds gauge

go_memstats_last_gc_time_seconds 1.6656554469519556e+09

# HELP go_memstats_lookups_total Total number of pointer lookups.

# TYPE go_memstats_lookups_total counter

go_memstats_lookups_total 0

# HELP go_memstats_mallocs_total Total number of mallocs.

# TYPE go_memstats_mallocs_total counter

go_memstats_mallocs_total 645524

# HELP go_memstats_mcache_inuse_bytes Number of bytes in use by mcache structures.

# TYPE go_memstats_mcache_inuse_bytes gauge

go_memstats_mcache_inuse_bytes 2400

# HELP go_memstats_mcache_sys_bytes Number of bytes used for mcache structures obtained from system.

# TYPE go_memstats_mcache_sys_bytes gauge

go_memstats_mcache_sys_bytes 15600

# HELP go_memstats_mspan_inuse_bytes Number of bytes in use by mspan structures.

# TYPE go_memstats_mspan_inuse_bytes gauge

go_memstats_mspan_inuse_bytes 126480

# HELP go_memstats_mspan_sys_bytes Number of bytes used for mspan structures obtained from system.

# TYPE go_memstats_mspan_sys_bytes gauge

go_memstats_mspan_sys_bytes 228480

# HELP go_memstats_next_gc_bytes Number of heap bytes when next garbage collection will take place.

# TYPE go_memstats_next_gc_bytes gauge

go_memstats_next_gc_bytes 9.551328e+06

# HELP go_memstats_other_sys_bytes Number of bytes used for other system allocations.

# TYPE go_memstats_other_sys_bytes gauge

go_memstats_other_sys_bytes 813104

# HELP go_memstats_stack_inuse_bytes Number of bytes in use by the stack allocator.

# TYPE go_memstats_stack_inuse_bytes gauge

go_memstats_stack_inuse_bytes 1.048576e+06

# HELP go_memstats_stack_sys_bytes Number of bytes obtained from system for stack allocator.

# TYPE go_memstats_stack_sys_bytes gauge

go_memstats_stack_sys_bytes 1.048576e+06

# HELP go_memstats_sys_bytes Number of bytes obtained from system.

# TYPE go_memstats_sys_bytes gauge

go_memstats_sys_bytes 2.4724488e+07

# HELP go_threads Number of OS threads created.

# TYPE go_threads gauge

go_threads 9

# HELP process_cpu_seconds_total Total user and system CPU time spent in seconds.

# TYPE process_cpu_seconds_total counter

process_cpu_seconds_total 3.66

# HELP process_max_fds Maximum number of open file descriptors.

# TYPE process_max_fds gauge

process_max_fds 1.048576e+06

# HELP process_open_fds Number of open file descriptors.

# TYPE process_open_fds gauge

process_open_fds 15

# HELP process_resident_memory_bytes Resident memory size in bytes.

# TYPE process_resident_memory_bytes gauge

process_resident_memory_bytes 4.9614848e+07

# HELP process_start_time_seconds Start time of the process since unix epoch in seconds.

# TYPE process_start_time_seconds gauge

process_start_time_seconds 1.66565412925e+09

# HELP process_virtual_memory_bytes Virtual memory size in bytes.

# TYPE process_virtual_memory_bytes gauge

process_virtual_memory_bytes 7.71104768e+08

# HELP process_virtual_memory_max_bytes Maximum amount of virtual memory available in bytes.

# TYPE process_virtual_memory_max_bytes gauge

process_virtual_memory_max_bytes 1.8446744073709552e+19

4.9 Deploying nodelocaldns

https://github.com/kubernetes/kubernetes/blob/master/cluster/addons/dns/nodelocaldns/nodelocaldns.yaml

4.9.1 Preparing nodelocaldns.yaml

# sed -i 's@__PILLAR__DNS__DOMAIN__@wgs.local@g' nodelocaldns.yaml

# sed -i 's@__PILLAR__LOCAL__DNS__ __PILLAR__DNS__SERVER__@169.254.20.10@g' nodelocaldns.yaml

# sed -i 's@__PILLAR__CLUSTER__DNS__@10.100.0.2@g' nodelocaldns.yaml

# sed -i 's@__PILLAR__LOCAL__DNS__@169.254.20.10@g' nodelocaldns.yaml
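The same substitutions can be driven from variables, which makes the placeholder names easier to check. The following is a minimal sketch, not the author's procedure: the variable names and the small sample file are assumptions made for illustration, and the sample stands in for the real nodelocaldns.yaml. Note that each placeholder ends in two underscores.

```shell
#!/bin/sh
# Sketch: fill the nodelocaldns placeholders from variables.
# The values mirror this cluster; the sample file is a stand-in for the manifest.
domain="wgs.local"
localdns="169.254.20.10"
kubedns="10.100.0.2"

manifest=$(mktemp)
cat > "$manifest" <<'EOF'
__PILLAR__DNS__DOMAIN__:53 {
    bind __PILLAR__LOCAL__DNS__ __PILLAR__DNS__SERVER__
    forward . __PILLAR__CLUSTER__DNS__ {
        force_tcp
    }
    health __PILLAR__LOCAL__DNS__:8080
}
EOF

# Expressions run in order on each line: the combined "local server" pair
# must be replaced before the bare local-address placeholder.
result=$(sed \
    -e "s@__PILLAR__DNS__DOMAIN__@${domain}@g" \
    -e "s@__PILLAR__LOCAL__DNS__ __PILLAR__DNS__SERVER__@${localdns}@g" \
    -e "s@__PILLAR__CLUSTER__DNS__@${kubedns}@g" \
    -e "s@__PILLAR__LOCAL__DNS__@${localdns}@g" \
    "$manifest")
rm -f "$manifest"

printf '%s\n' "$result"
```

Writing the result to a variable instead of editing in place keeps the sketch side-effect free; against the real file, `sed -i` with the same expressions would apply the changes directly.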

# Copyright 2018 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

apiVersion: v1
kind: ServiceAccount
metadata:
  name: node-local-dns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: v1
kind: Service
metadata:
  name: kube-dns-upstream
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "KubeDNSUpstream"
spec:
  ports:
  - name: dns
    port: 53
    protocol: UDP
    targetPort: 53
  - name: dns-tcp
    port: 53
    protocol: TCP
    targetPort: 53
  selector:
    k8s-app: kube-dns

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: node-local-dns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
data:
  Corefile: |
    wgs.local:53 {
        errors
        cache {
            success 9984 30
            denial 9984 5
        }
        reload
        loop
        bind 169.254.20.10
        forward . 10.100.0.2 {
            force_tcp
        }
        prometheus :9253
        health 169.254.20.10:8080
    }
    in-addr.arpa:53 {
        errors
        cache 30
        reload
        loop
        bind 169.254.20.10
        forward . 10.100.0.2 {
            force_tcp
        }
        prometheus :9253
    }
    ip6.arpa:53 {
        errors
        cache 30
        reload
        loop
        bind 169.254.20.10
        forward . 10.100.0.2 {
            force_tcp
        }
        prometheus :9253
    }
    .:53 {
        errors
        cache 30
        reload
        loop
        bind 169.254.20.10
        forward . __PILLAR__UPSTREAM__SERVERS__
        prometheus :9253
    }

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-local-dns
  namespace: kube-system
  labels:
    k8s-app: node-local-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 10%
  selector:
    matchLabels:
      k8s-app: node-local-dns
  template:
    metadata:
      labels:
        k8s-app: node-local-dns
      annotations:
        prometheus.io/port: "9253"
        prometheus.io/scrape: "true"
    spec:
      priorityClassName: system-node-critical
      serviceAccountName: node-local-dns
      hostNetwork: true
      dnsPolicy: Default # Don't use cluster DNS.
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      - effect: "NoExecute"
        operator: "Exists"
      - effect: "NoSchedule"
        operator: "Exists"
      containers:
      - name: node-cache
        # image: registry.k8s.io/dns/k8s-dns-node-cache:1.22.11
        image: 192.168.174.120/baseimages/k8s-dns-node-cache:1.22.11
        resources:
          requests:
            cpu: 25m
            memory: 5Mi
        args: [ "-localip", "169.254.20.10", "-conf", "/etc/Corefile", "-upstreamsvc", "kube-dns-upstream" ]
        securityContext:
          capabilities:
            add:
            - NET_ADMIN
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9253
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            host: 169.254.20.10
            path: /health
            port: 8080
          initialDelaySeconds: 60
          timeoutSeconds: 5
        volumeMounts:
        - mountPath: /run/xtables.lock
          name: xtables-lock
          readOnly: false
        - name: config-volume
          mountPath: /etc/coredns
        - name: kube-dns-config
          mountPath: /etc/kube-dns
      volumes:
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
      - name: kube-dns-config
        configMap:
          name: kube-dns
          optional: true
      - name: config-volume
        configMap:
          name: node-local-dns
          items:
            - key: Corefile
              path: Corefile.base

---

# A headless service is a service with a service IP but instead of load-balancing it will return the IPs of our associated Pods.

# We use this to expose metrics to Prometheus.

apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/port: "9253"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: node-local-dns
  name: node-local-dns
  namespace: kube-system
spec:
  clusterIP: None
  ports:
    - name: metrics
      port: 9253
      targetPort: 9253
  selector:
    k8s-app: node-local-dns

4.9.2 Deploying nodelocaldns.yaml
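Before applying the manifest, it can help to confirm which placeholders are still unfilled. The sketch below assumes a hypothetical helper named leftover_placeholders and runs it on a small sample file rather than the real manifest. In the manifest shown above, only `__PILLAR__UPSTREAM__SERVERS__` is left, and per the upstream NodeLocal DNSCache documentation that one is filled in by the node-local-dns pods at runtime.

```shell
#!/bin/sh
# leftover_placeholders FILE: print each distinct __PILLAR__ token still in FILE.
# (Hypothetical helper, not part of any Kubernetes tooling.)
leftover_placeholders() {
    grep -o '__PILLAR__[A-Z_]*__' "$1" | sort -u
}

# Sample input standing in for nodelocaldns.yaml after the sed commands.
sample=$(mktemp)
cat > "$sample" <<'EOF'
wgs.local:53 {
    forward . 10.100.0.2
}
.:53 {
    forward . __PILLAR__UPSTREAM__SERVERS__
}
EOF

leftover=$(leftover_placeholders "$sample")
rm -f "$sample"
echo "remaining placeholders: ${leftover:-none}"
```

Against the real file the call would be `leftover_placeholders nodelocaldns.yaml`; any other token in the output suggests a sed pattern that did not match.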

~# kubectl apply -f nodelocaldns.yaml

serviceaccount/node-local-dns unchanged

service/kube-dns-upstream unchanged

configmap/node-local-dns unchanged

daemonset.apps/node-local-dns configured

service/node-local-dns unchanged

4.9.3 Viewing pod information
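Because node-local-dns is a DaemonSet, one Running pod per node is expected (six in the listing in this subsection). A quick way to check is to count the matching rows of `kubectl get pods -A`. The sketch below assumes a hypothetical helper named count_running and feeds it a shortened sample of that output instead of calling kubectl.

```shell
#!/bin/sh
# count_running PREFIX: read `kubectl get pods -A` style text on stdin and print
# how many pods whose NAME starts with PREFIX have STATUS Running.
# (Hypothetical helper; column 2 is NAME and column 4 is STATUS.)
count_running() {
    awk -v prefix="$1" '
        index($2, prefix) == 1 && $4 == "Running" { n++ }
        END { print n + 0 }'
}

# Shortened sample of the listing, used here in place of a live cluster.
sample='NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system coredns-577746f8bf-xj8gf 1/1 Running 0 4m10s
kube-system node-local-dns-6d9n4 1/1 Running 0 3m55s
kube-system node-local-dns-8hs5l 1/1 Running 0 3m55s'

running=$(printf '%s\n' "$sample" | count_running node-local-dns-)
echo "node-local-dns pods running: $running"
```

On the deploy node the equivalent would be `kubectl get pods -A | count_running node-local-dns-`.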

~# kubectl get pods -A

NAMESPACE     NAME                                      READY   STATUS    RESTARTS   AGE
default       net-test1                                 1/1     Running   0          22m
kube-system   calico-kube-controllers-9dcb4cbf5-sl84w   1/1     Running   0          88m
kube-system   calico-node-2kp7f                         1/1     Running   0          88m
kube-system   calico-node-9q45x                         1/1     Running   0          88m
kube-system   calico-node-hvk5x                         1/1     Running   0          88m
kube-system   calico-node-nml46                         1/1     Running   0          88m
kube-system   calico-node-sr4mb                         1/1     Running   0          88m
kube-system   calico-node-vv6ks                         1/1     Running   0          88m
kube-system   coredns-577746f8bf-xj8gf                  1/1     Running   0          4m10s
kube-system   node-local-dns-6d9n4                      1/1     Running   0          3m55s
kube-system   node-local-dns-8hs5l                      1/1     Running   0          3m55s
kube-system   node-local-dns-hz2q6                      1/1     Running   0          3m55s
kube-system   node-local-dns-kkqj8                      1/1     Running   0          3m55s
kube-system   node-local-dns-nwpx9                      1/1     Running   0          3m55s
kube-system   node-local-dns-thr88                      1/1     Running   0          3m55s

4.9.4 DNS resolution test
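The test in this subsection prints the pod's /etc/resolv.conf, whose search list and `ndots:5` option decide which names are actually sent to 169.254.20.10. The sketch below is a simplified model of that expansion, using a hypothetical helper named expand_candidates. It covers only the common case of a name with fewer dots than ndots; real resolvers (glibc, musl) differ in how they treat names with more dots, so that branch here is an assumption.

```shell
#!/bin/sh
# expand_candidates NAME NDOTS DOMAIN...
# Print the candidate query names in the order a resolver would try them.
# Simplified model: a name with fewer than NDOTS dots is tried with each
# search domain first, then as-is; otherwise it is shown as tried as-is only.
expand_candidates() {
    name=$1
    ndots=$2
    shift 2
    # Count the dots by comparing the length with and without them.
    stripped=$(printf '%s' "$name" | tr -d '.')
    dots=$(( ${#name} - ${#stripped} ))
    if [ "$dots" -lt "$ndots" ]; then
        for domain in "$@"; do
            printf '%s.%s\n' "$name" "$domain"
        done
    fi
    printf '%s\n' "$name"
}

# Search list and ndots value taken from the resolv.conf shown in this test.
expand_candidates www.baidu.com 5 default.svc.wgs.local svc.wgs.local wgs.local
```

Under this model, www.baidu.com (two dots) is first tried under the three cluster search domains and only then as written, which is why a single external lookup can show up as several requests in the DNS metrics.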

~# kubectl run net-test1 --image=alpine:latest sleep 300000

pod/net-test1 created

~# kubectl exec -it net-test1 -- sh

/ # cat /etc/resolv.conf

search default.svc.wgs.local svc.wgs.local wgs.local

nameserver 169.254.20.10

options ndots:5

/ # ping -c 2 www.baidu.com

PING www.baidu.com (110.242.68.3): 56 data bytes

64 bytes from 110.242.68.3: seq=0 ttl=127 time=15.188 ms

64 bytes from 110.242.68.3: seq=1 ttl=127 time=15.393 ms

--- www.baidu.com ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 15.188/15.290/15.393 ms

/ # nslookup www.baidu.com

Server: 169.254.20.10

Address: 169.254.20.10:53

Non-authoritative answer:

www.baidu.com canonical name = www.a.shifen.com

Name: www.a.shifen.com

Address: 110.242.68.4

Name: www.a.shifen.com

Address: 110.242.68.3

Non-authoritative answer:

www.baidu.com canonical name = www.a.shifen.com

4.9.5 Viewing nodelocaldns metrics
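The metrics endpoint queried in this subsection returns Prometheus text format, so individual counters can be totalled with a small filter. The sketch below assumes a hypothetical helper named metric_total and runs it on a made-up three-line sample (the values are illustrative, not taken from this cluster). It also assumes samples carry no trailing timestamp, which matches the output shown here.

```shell
#!/bin/sh
# metric_total NAME: sum every sample of metric NAME from Prometheus text on stdin.
# (Hypothetical helper; the value is taken from the last field of each line.)
metric_total() {
    awk -v name="$1" '
        /^#/ { next }                  # skip HELP and TYPE comment lines
        {
            metric = $1
            sub(/[{].*/, "", metric)   # drop the {label="..."} part, if any
            if (metric == name) total += $NF
        }
        END { print total + 0 }'
}

# Made-up sample in the same format as the endpoint output.
sample='# TYPE coredns_nodecache_setup_errors_total counter
coredns_nodecache_setup_errors_total{errortype="configmap"} 0
coredns_nodecache_setup_errors_total{errortype="iptables"} 2
go_goroutines 33'

errors=$(printf '%s\n' "$sample" | metric_total coredns_nodecache_setup_errors_total)
echo "setup errors: $errors"
```

Against a node it could be used as `curl -s http://192.168.174.100:9353/metrics | metric_total coredns_nodecache_setup_errors_total`; a non-zero total points at interface or iptables setup problems in node-cache.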

# curl http://192.168.174.100:9353/metrics

# HELP coredns_nodecache_setup_errors_total The number of errors during periodic network setup for node-cache

# TYPE coredns_nodecache_setup_errors_total counter

coredns_nodecache_setup_errors_total{errortype="configmap"} 0

coredns_nodecache_setup_errors_total{errortype="interface_add"} 0

coredns_nodecache_setup_errors_total{errortype="interface_check"} 0

coredns_nodecache_setup_errors_total{errortype="iptables"} 0

coredns_nodecache_setup_errors_total{errortype="iptables_lock"} 0

# HELP go_gc_duration_seconds A summary of the pause duration of garbage collection cycles.

# TYPE go_gc_duration_seconds summary

go_gc_duration_seconds{quantile="0"} 4.3389e-05

go_gc_duration_seconds{quantile="0.25"} 4.3389e-05

go_gc_duration_seconds{quantile="0.5"} 4.715e-05

go_gc_duration_seconds{quantile="0.75"} 4.7611e-05

go_gc_duration_seconds{quantile="1"} 4.7611e-05

go_gc_duration_seconds_sum 0.00013815

go_gc_duration_seconds_count 3

# HELP go_goroutines Number of goroutines that currently exist.

# TYPE go_goroutines gauge

go_goroutines 33

# HELP go_info Information about the Go environment.

# TYPE go_info gauge

go_info{version="go1.18.6"} 1

# HELP go_memstats_alloc_bytes Number of bytes allocated and still in use.

# TYPE go_memstats_alloc_bytes gauge

go_memstats_alloc_bytes 4.505488e+06

# HELP go_memstats_alloc_bytes_total Total number of bytes allocated, even if freed.

# TYPE go_memstats_alloc_bytes_total counter

go_memstats_alloc_bytes_total 8.608488e+06

# HELP go_memstats_buck_hash_sys_bytes Number of bytes used by the profiling bucket hash table.

# TYPE go_memstats_buck_hash_sys_bytes gauge

go_memstats_buck_hash_sys_bytes 1.449147e+06

# HELP go_memstats_frees_total Total number of frees.

# TYPE go_memstats_frees_total counter

go_memstats_frees_total 38880

# HELP go_memstats_gc_cpu_fraction The fraction of this program's available CPU time used by the GC since the program started.

# TYPE go_memstats_gc_cpu_fraction gauge

go_memstats_gc_cpu_fraction 2.1545085571693077e-05

# HELP go_memstats_gc_sys_bytes Number of bytes used for garbage collection system metadata.

# TYPE go_memstats_gc_sys_bytes gauge

go_memstats_gc_sys_bytes 5.135344e+06

# HELP go_memstats_heap_alloc_bytes Number of heap bytes allocated and still in use.

# TYPE go_memstats_heap_alloc_bytes gauge

go_memstats_heap_alloc_bytes 4.505488e+06

# HELP go_memstats_heap_idle_bytes Number of heap bytes waiting to be used.

# TYPE go_memstats_heap_idle_bytes gauge

go_memstats_heap_idle_bytes 1.671168e+06

# HELP go_memstats_heap_inuse_bytes Number of heap bytes that are in use.

# TYPE go_memstats_heap_inuse_bytes gauge

go_memstats_heap_inuse_bytes 5.931008e+06

# HELP go_memstats_heap_objects Number of allocated objects.

# TYPE go_memstats_heap_objects gauge

go_memstats_heap_objects 25614

# HELP go_memstats_heap_released_bytes Number of heap bytes released to OS.

# TYPE go_memstats_heap_released_bytes gauge

go_memstats_heap_released_bytes 1.400832e+06

# HELP go_memstats_heap_sys_bytes Number of heap bytes obtained from system.

# TYPE go_memstats_heap_sys_bytes gauge

go_memstats_heap_sys_bytes 7.602176e+06

# HELP go_memstats_last_gc_time_seconds Number of seconds since 1970 of last garbage collection.

# TYPE go_memstats_last_gc_time_seconds gauge

go_memstats_last_gc_time_seconds 1.6656553792549582e+09

# HELP go_memstats_lookups_total Total number of pointer lookups.

# TYPE go_memstats_lookups_total counter

go_memstats_lookups_total 0

# HELP go_memstats_mallocs_total Total number of mallocs.

# TYPE go_memstats_mallocs_total counter

go_memstats_mallocs_total 64494

# HELP go_memstats_mcache_inuse_bytes Number of bytes in use by mcache structures.

# TYPE go_memstats_mcache_inuse_bytes gauge

go_memstats_mcache_inuse_bytes 2400

# HELP go_memstats_mcache_sys_bytes Number of bytes used for mcache structures obtained from system.

# TYPE go_memstats_mcache_sys_bytes gauge

go_memstats_mcache_sys_bytes 15600

# HELP go_memstats_mspan_inuse_bytes Number of bytes in use by mspan structures.

# TYPE go_memstats_mspan_inuse_bytes gauge

go_memstats_mspan_inuse_bytes 80784

# HELP go_memstats_mspan_sys_bytes Number of bytes used for mspan structures obtained from system.

# TYPE go_memstats_mspan_sys_bytes gauge

go_memstats_mspan_sys_bytes 81600

# HELP go_memstats_next_gc_bytes Number of heap bytes when next garbage collection will take place.

# TYPE go_memstats_next_gc_bytes gauge

go_memstats_next_gc_bytes 6.439056e+06

# HELP go_memstats_other_sys_bytes Number of bytes used for other system allocations.

# TYPE go_memstats_other_sys_bytes gauge

go_memstats_other_sys_bytes 479149

# HELP go_memstats_stack_inuse_bytes Number of bytes in use by the stack allocator.

# TYPE go_memstats_stack_inuse_bytes gauge

go_memstats_stack_inuse_bytes 786432

# HELP go_memstats_stack_sys_bytes Number of bytes obtained from system for stack allocator.

# TYPE go_memstats_stack_sys_bytes gauge

go_memstats_stack_sys_bytes 786432

# HELP go_memstats_sys_bytes Number of bytes obtained from system.

# TYPE go_memstats_sys_bytes gauge

go_memstats_sys_bytes 1.5549448e+07

# HELP go_threads Number of OS threads created.

# TYPE go_threads gauge

go_threads 8

# HELP process_cpu_seconds_total Total user and system CPU time spent in seconds.

# TYPE process_cpu_seconds_total counter

process_cpu_seconds_total 0.23

# HELP process_max_fds Maximum number of open file descriptors.

# TYPE process_max_fds gauge

process_max_fds 1.048576e+06

# HELP process_open_fds Number of open file descriptors.

# TYPE process_open_fds gauge

process_open_fds 15

# HELP process_resident_memory_bytes Resident memory size in bytes.

# TYPE process_resident_memory_bytes gauge

process_resident_memory_bytes 2.8246016e+07

# HELP process_start_time_seconds Start time of the process since unix epoch in seconds.

# TYPE process_start_time_seconds gauge

process_start_time_seconds 1.66565532142e+09

# HELP process_virtual_memory_bytes Virtual memory size in bytes.

# TYPE process_virtual_memory_bytes gauge

process_virtual_memory_bytes 7.52091136e+08

# HELP process_virtual_memory_max_bytes Maximum amount of virtual memory available in bytes.

# TYPE process_virtual_memory_max_bytes gauge

process_virtual_memory_max_bytes 1.8446744073709552e+19

# HELP promhttp_metric_handler_requests_in_flight Current number of scrapes being served.

# TYPE promhttp_metric_handler_requests_in_flight gauge

promhttp_metric_handler_requests_in_flight 1

# HELP promhttp_metric_handler_requests_total Total number of scrapes by HTTP status code.

# TYPE promhttp_metric_handler_requests_total counter

promhttp_metric_handler_requests_total{code="200"} 1

promhttp_metric_handler_requests_total{code="500"} 0

promhttp_metric_handler_requests_total{code="503"} 0

4.10 Deploy the dashboard

https://github.com/kubernetes/dashboard

4.10.1 Check dashboard version compatibility

https://github.com/kubernetes/dashboard/releases

4.10.2 Prepare the YAML file

root@k8s-deploy:~/yaml# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

4.10.3 Prepare the images
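The pull, tag, and push sequence in this step follows the same pattern for each image. As a minimal sketch, assuming the Harbor address 192.168.174.120 and the baseimages project used here, the target name can be derived from the upstream name. The function name mirror_ref is hypothetical, and the loop only prints the docker commands rather than running them.

```shell
# Hypothetical helper: map an upstream image reference to its Harbor target.
# REGISTRY and PROJECT mirror the values used in this step.
REGISTRY="192.168.174.120"
PROJECT="baseimages"

mirror_ref() {
  # Drop everything up to the last "/" so only "name:tag" remains.
  local src="$1"
  printf '%s/%s/%s\n' "$REGISTRY" "$PROJECT" "${src##*/}"
}

# Dry run: print the commands instead of executing them.
for img in kubernetesui/dashboard:v2.4.0 kubernetesui/metrics-scraper:v1.0.7; do
  dst="$(mirror_ref "$img")"
  echo "docker pull $img"
  echo "docker tag $img $dst"
  echo "docker push $dst"
done
```

Piping the printed lines to a shell on the Harbor host would run them; reviewing the output first guards against pushing to the wrong project.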

root@k8s-harbor-01:~# docker pull kubernetesui/dashboard:v2.4.0

root@k8s-harbor-01:~# docker tag kubernetesui/dashboard:v2.4.0 192.168.174.120/baseimages/dashboard:v2.4.0

root@k8s-harbor-01:~# docker pull kubernetesui/metrics-scraper:v1.0.7

root@k8s-harbor-01:~# docker tag kubernetesui/metrics-scraper:v1.0.7 192.168.174.120/baseimages/metrics-scraper:v1.0.7

root@k8s-harbor-01:~# docker push 192.168.174.120/baseimages/metrics-scraper:v1.0.7

root@k8s-harbor-01:~# docker push 192.168.174.120/baseimages/dashboard:v2.4.0

4.10.4 Update the image addresses in the YAML file
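This step points the manifest at the private registry with sed. A minimal sketch of the same substitution as a reusable function follows, assuming GNU sed (for -i without a suffix). The name rewrite_image is hypothetical, and the demonstration runs against a temporary file rather than the real recommended.yaml.

```shell
# Hypothetical helper: rewrite one image reference in a manifest, in place.
# "@" is used as the sed delimiter because image names contain "/".
rewrite_image() {
  local file="$1" from="$2" to="$3"
  sed -i "s@${from}@${to}@g" "$file"
}

# Demonstration on a throwaway file.
demo="$(mktemp)"
printf 'image: kubernetesui/dashboard:v2.4.0\n' > "$demo"
rewrite_image "$demo" 'kubernetesui/dashboard:v2.4.0' '192.168.174.120/baseimages/dashboard:v2.4.0'
cat "$demo"

# Confirm that no upstream reference is left behind.
if grep -q 'image: kubernetesui/' "$demo"; then
  echo "upstream image reference still present" >&2
fi
rm -f "$demo"
```

The search string is treated as a regular expression, so the dots in the version tag match any character; for these image names that makes no practical difference.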

root@k8s-deploy:~/yaml# sed -i 's@kubernetesui/dashboard:v2.4.0@192.168.174.120/baseimages/dashboard:v2.4.0@g' recommended.yaml

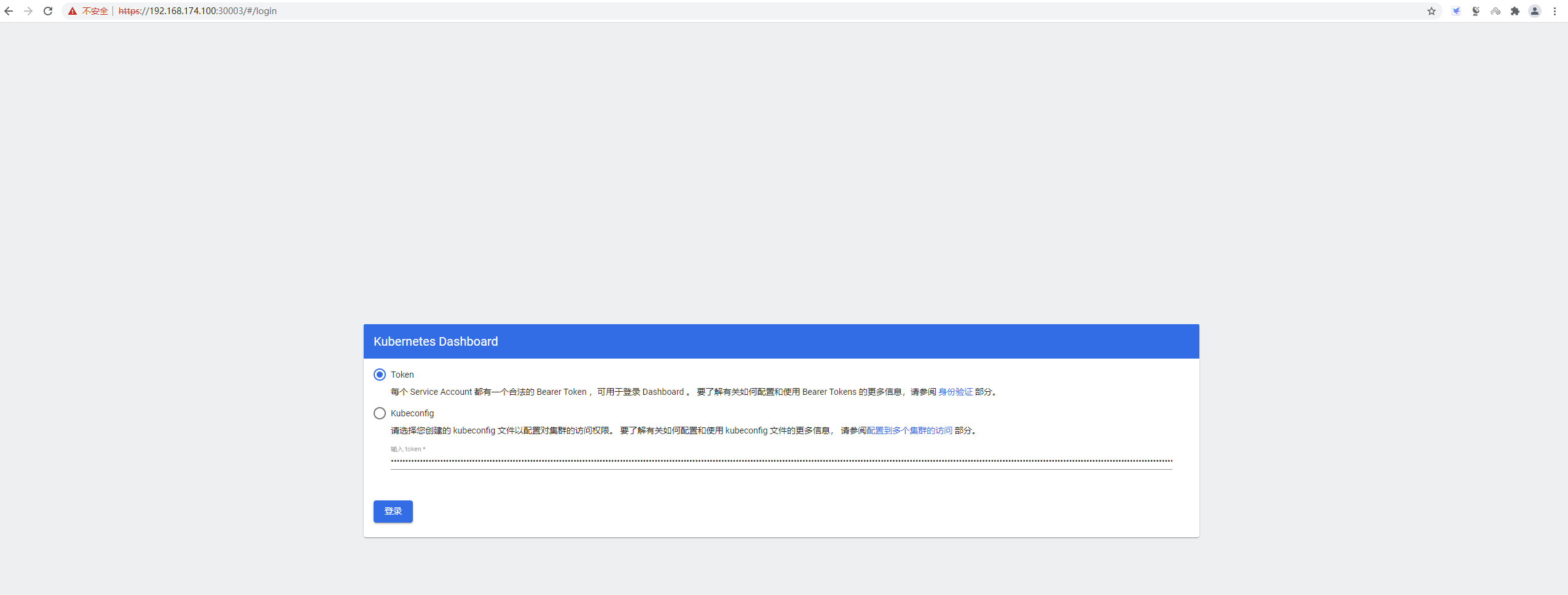

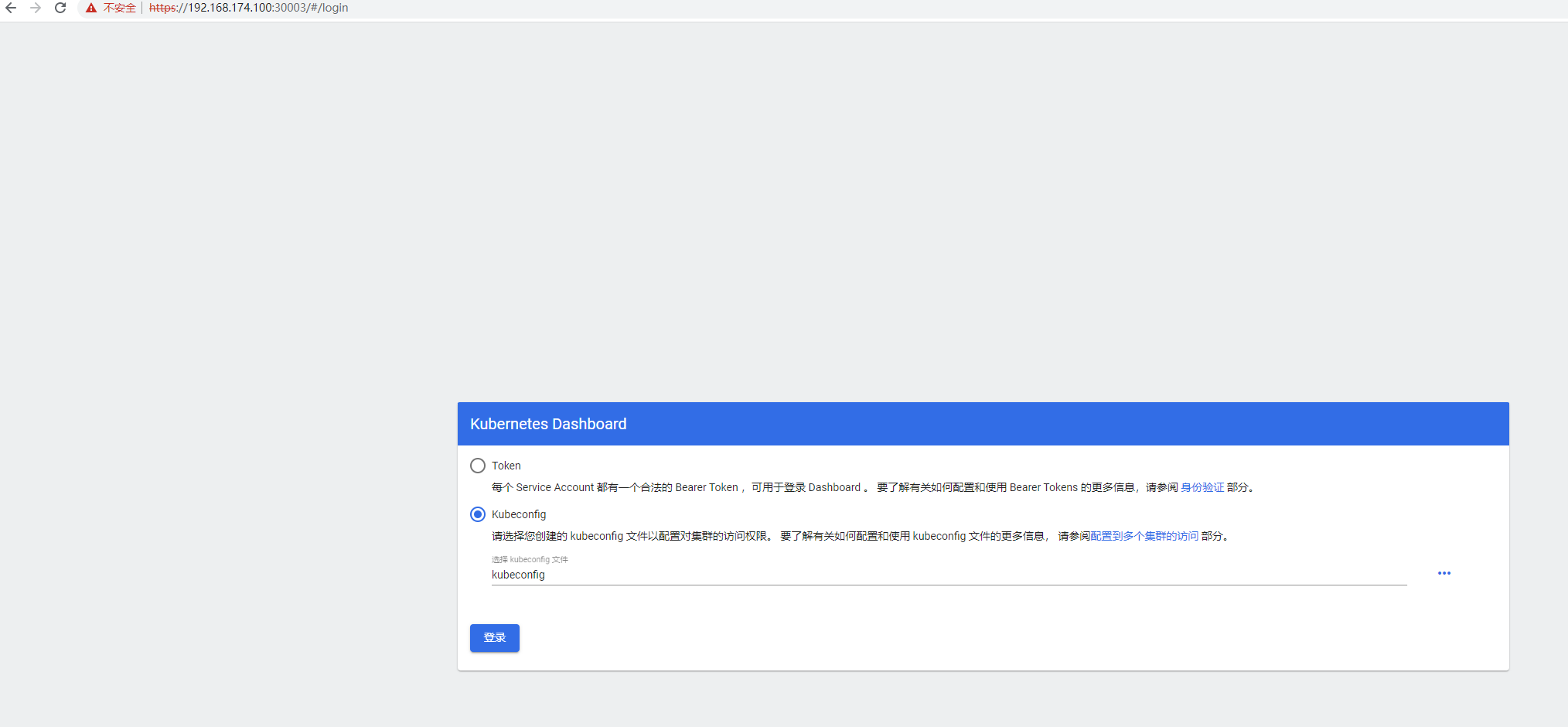

root@k8s-deploy:~/yaml# sed -i 's@kubernetesui/metrics-scraper:v1.0.7@192.168.174.120/baseimages/metrics-scraper:v1.0.7@g' recommended.yaml

4.10.5 Expose the access port

kind: Service

apiVersion: v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

spec:

  type: NodePort

  ports:

    - port: 443

      targetPort: 8443

      nodePort: 30003

  selector:

    k8s-app: kubernetes-dashboard

4.10.6 Deploy the dashboard
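Before applying the manifest, it can help to confirm that the nodePort chosen in 4.10.5 is one the API server will accept. The sketch below assumes the kube-apiserver default range of 30000-32767. A cluster started with a different --service-node-port-range needs other bounds, and the function name in_nodeport_range is hypothetical.

```shell
# Hypothetical check: is a port inside the NodePort range?
# 30000-32767 is the kube-apiserver default; the bounds can be overridden
# with the second and third arguments if the cluster uses another range.
in_nodeport_range() {
  local port="$1" low="${2:-30000}" high="${3:-32767}"
  [ "$port" -ge "$low" ] && [ "$port" -le "$high" ]
}

if in_nodeport_range 30003; then
  echo "nodePort 30003 is within 30000-32767"
else
  echo "nodePort 30003 is outside 30000-32767" >&2
fi
```

A port outside the configured range causes the Service to be rejected at apply time, so the check only saves a round trip.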

root@k8s-deploy:~/yaml# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

4.10.7 Check the pod status
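When checking pod status on a cluster with many pods, it can be easier to list only those that are not Running. The following is a minimal sketch, assuming the default column order of kubectl get pod -A (NAMESPACE, NAME, READY, STATUS, ...). The function name not_running is hypothetical, and the Pending row in the sample input is invented for illustration.

```shell
# Hypothetical filter: read `kubectl get pod -A` output on stdin and print
# "namespace/name status" for every pod whose STATUS column is not Running.
not_running() {
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2, $4 }'
}

# Demonstration on sample text instead of a live cluster.
not_running <<'EOF'
NAMESPACE              NAME                       READY   STATUS    RESTARTS   AGE
kube-system            coredns-8568fcb45d-nj7tg   1/1     Running   0          45m
kubernetes-dashboard   example-pending-pod        0/1     Pending   0          1m
EOF
```

On a real cluster the call would be kubectl get pod -A | not_running. Pods of finished Jobs show Completed and are listed too, which may or may not be wanted.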

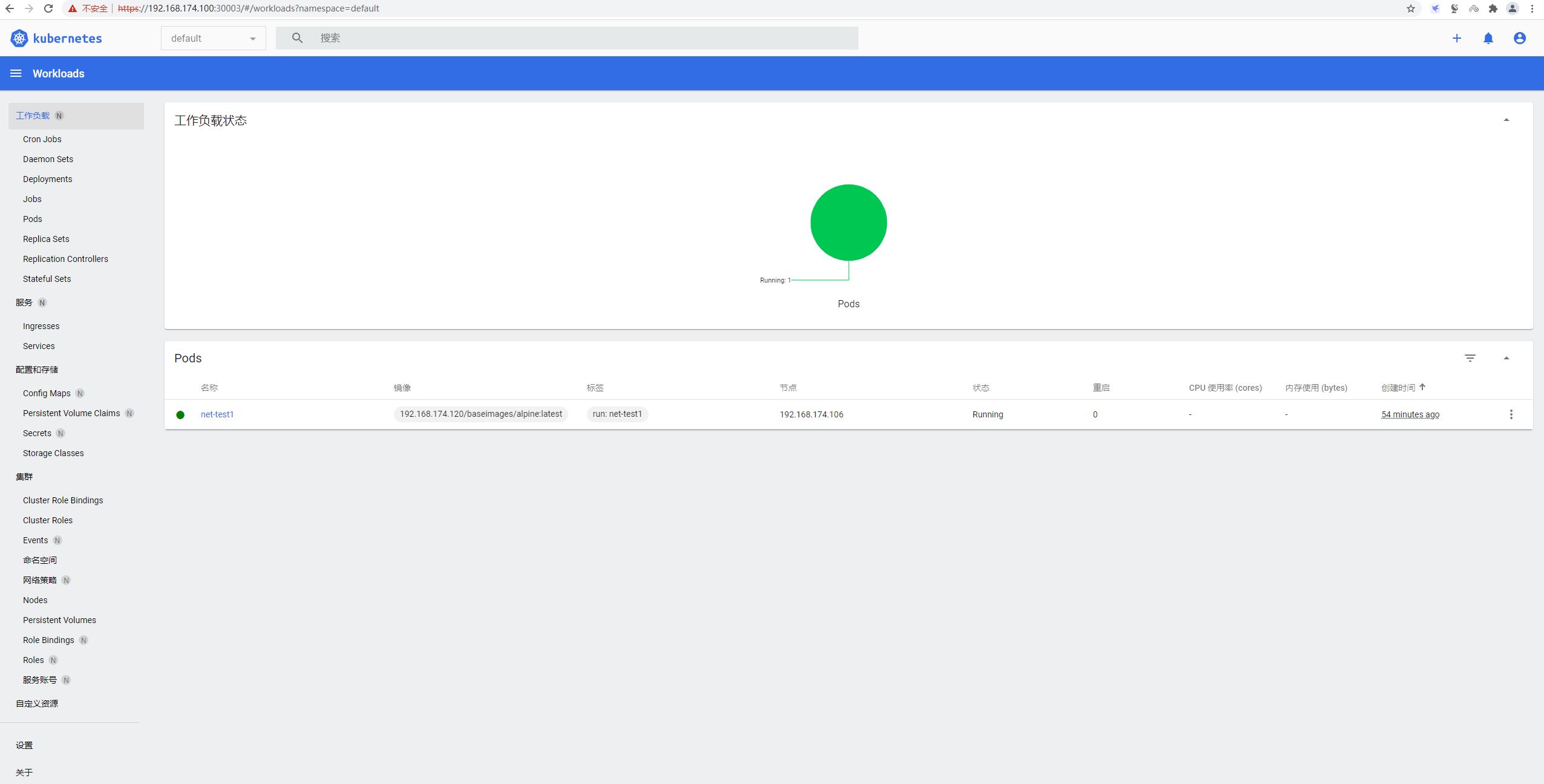

root@k8s-master-01:~# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default net-test1 1/1 Running 0 38m 10.200.154.198 192.168.174.106 <none> <none>

kube-system calico-kube-controllers-6f8794d6c4-6hjzq 1/1 Running 3 4h1m 192.168.174.107 192.168.174.107 <none> <none>

kube-system calico-node-ln496 1/1 Running 3 4h1m 192.168.174.107 192.168.174.107 <none> <none>

kube-system calico-node-p4lq4 1/1 Running 0 4h1m 192.168.174.101 192.168.174.101 <none> <none>

kube-system calico-node-s7n4q 1/1 Running 0 4h1m 192.168.174.106 192.168.174.106 <none> <none>

kube-system calico-node-trpds 1/1 Running 2 4h1m 192.168.174.100 192.168.174.100 <none> <none>

kube-system coredns-8568fcb45d-nj7tg 1/1 Running 0 45m 10.200.154.197 192.168.174.106 <none> <none>

kubernetes-dashboard dashboard-metrics-scraper-c5f49cc44-cdbsn 1/1 Running 0 4m7s 10.200.154.199 192.168.174.106 <none> <none>

kubernetes-dashboard kubernetes-dashboard-688994654d-mt7nl 1/1 Running 0 4m7s 10.200.44.193 192.168.174.107 <none> <none>

4.10.8 Create a login user

https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md

4.10.8.1 Review the user manifest

root@k8s-master-01:~/yaml# cat admin-user.yml

apiVersion: v1

kind: ServiceAccount

metadata:

  name: admin-user

  namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: admin-user

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user