Recommended mirrors

- https://mirrors.aliyun.com/ceph/ #Alibaba Cloud mirror

- http://mirrors.163.com/ceph/ #NetEase mirror

- https://mirrors.tuna.tsinghua.edu.cn/ceph/ #Tsinghua University (TUNA) mirror

Server plan

| Host | IP | Role | Specs |
| --- | --- | --- | --- |
| ceph-deploy | 172.16.10.156 | deploy | 4 cores, 4 GB RAM |
| ceph-mon-01 | 172.16.10.148 | mon | 4 cores, 4 GB RAM |
| ceph-mon-02 | 172.16.10.110 | mon | 4 cores, 4 GB RAM |
| ceph-mon-03 | 172.16.10.182 | mon | 4 cores, 4 GB RAM |
| ceph-mgr-01 | 172.16.10.225 | mgr | 4 cores, 4 GB RAM |
| ceph-mgr-02 | 172.16.10.248 | mgr | 4 cores, 4 GB RAM |
| ceph-node-01 | 172.16.10.126 | osd | 4 cores, 4 GB RAM |
| ceph-node-02 | 172.16.10.76 | osd | 4 cores, 4 GB RAM |
| ceph-node-03 | 172.16.10.44 | osd | 4 cores, 4 GB RAM |

Cluster plan

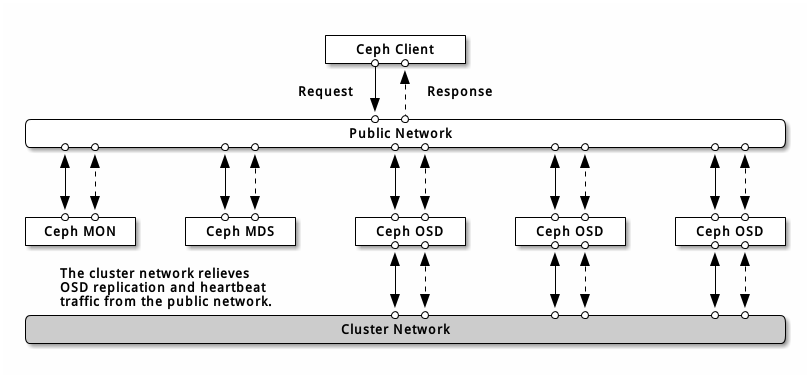

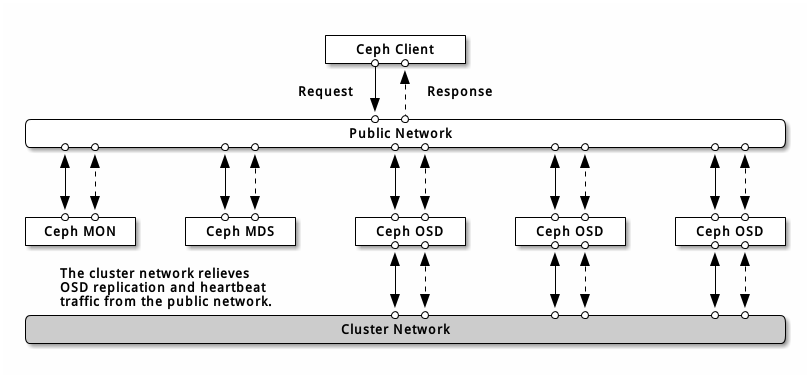

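Network plan

- The main reasons for running two separate networks (a public network and a cluster network) are:

  - Performance:

    - OSDs handle data replication on behalf of clients. When several replicas are written, the replication traffic between OSDs inevitably competes with the traffic between clients and the Ceph cluster, increasing latency and causing performance problems.

    - Recovery and rebalancing also significantly increase latency on the public network.

  - Security:

    - Protection against denial of service (DoS): when traffic between OSDs gets out of control, placement groups may fail to reach the active+clean state, and data can no longer be read or written. Keeping OSD-to-OSD traffic on a separate cluster network that is not reachable from the public side makes this harder to trigger.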
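Because public_network and cluster_network are given as CIDR subnets, it can help to check that each host's address really falls inside the subnet you intend to use before running ceph-deploy new. The following is a minimal bash sketch, IPv4 only; ip_to_int and ip_in_cidr are hypothetical helper names, not part of Ceph.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Ceph): IPv4 subnet membership check.

# ip_to_int A.B.C.D -> prints the address as a 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# ip_in_cidr IP NET/BITS -> exit status 0 if IP lies inside NET/BITS, 1 otherwise
ip_in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  # Build the netmask, e.g. /24 -> 0xFFFFFF00 (bash integers are 64-bit).
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example, `ip_in_cidr 172.16.10.148 172.16.10.0/24 && echo inside` prints inside for ceph-mon-01's address.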

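Data layout plan (BlueStore)

One disk

- HDD or SSD, holding everything:

  - data: the object data stored by Ceph

  - block-db: RocksDB data, i.e. the metadata

  - block-wal: the RocksDB write-ahead log (WAL)

Two disks

- SSD:

  - block-db: RocksDB data, i.e. the metadata

  - block-wal: the RocksDB write-ahead log (WAL)

- HDD:

  - data: the object data stored by Ceph

Three disks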
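The layouts above correspond to the --data, --block-db and --block-wal options of ceph-deploy osd create in ceph-deploy 2.0.x. When only --block-db is given, BlueStore keeps the WAL on the DB device, which matches the two-disk layout. The sketch below only prints the command for each layout; the device names /dev/sdb, /dev/sdc and /dev/sdd are placeholders, and the three-disk split shown (a separate device each for data, DB and WAL) is an assumed common choice, since the plan above does not spell it out.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) a ceph-deploy osd create command for
# the one-, two- and three-disk layouts. Device paths are placeholders.

# osd_create_cmd HOST DATA_DEV [DB_DEV] [WAL_DEV]
osd_create_cmd() {
  local host=$1 data=$2 db=${3:-} wal=${4:-}
  local cmd="ceph-deploy osd create --data ${data}"
  if [[ -n $db ]]; then
    cmd+=" --block-db ${db}"     # RocksDB metadata on the faster device
  fi
  if [[ -n $wal ]]; then
    cmd+=" --block-wal ${wal}"   # RocksDB WAL on its own device
  fi
  echo "${cmd} ${host}"
}

# One disk:    everything on /dev/sdb
#   osd_create_cmd ceph-node-01 /dev/sdb
# Two disks:   data on HDD /dev/sdb, DB (and implicitly WAL) on SSD /dev/sdc
#   osd_create_cmd ceph-node-01 /dev/sdb /dev/sdc
# Three disks: data on /dev/sdb, DB on /dev/sdc, WAL on /dev/sdd
#   osd_create_cmd ceph-node-01 /dev/sdb /dev/sdc /dev/sdd
```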

System environment

OS version


root@ceph-deploy:~# cat /etc/issue

Ubuntu 18.04.3 LTS \n \l

Configure hostname resolution


root@ceph-deploy:~# vim /etc/hosts

172.16.10.156 ceph-deploy

172.16.10.148 ceph-mon-01

172.16.10.110 ceph-mon-02

172.16.10.182 ceph-mon-03

172.16.10.225 ceph-mgr-01

172.16.10.248 ceph-mgr-02

172.16.10.126 ceph-node-01

172.16.10.76 ceph-node-02

172.16.10.44 ceph-node-03
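The same entries are needed on every node, not only on ceph-deploy: the later ceph-deploy logs print sudo: unable to resolve host ceph-mon-01, which is what sudo reports when a host cannot resolve its own name. A minimal bash sketch that adds the planned entries to a hosts file without creating duplicates, so it can be re-run on each node. The function names and the CEPH_HOSTS array are hypothetical; the OSD hosts use the ceph-node-0X names from the server plan and the shell prompts.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: idempotently add the cluster's hosts entries.

CEPH_HOSTS=(
  "172.16.10.156 ceph-deploy"
  "172.16.10.148 ceph-mon-01"
  "172.16.10.110 ceph-mon-02"
  "172.16.10.182 ceph-mon-03"
  "172.16.10.225 ceph-mgr-01"
  "172.16.10.248 ceph-mgr-02"
  "172.16.10.126 ceph-node-01"
  "172.16.10.76 ceph-node-02"
  "172.16.10.44 ceph-node-03"
)

# add_host_entry FILE IP NAME -> append "IP NAME" unless NAME already appears
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # -F: fixed string, -w: whole word only, -q: no output
  if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# add_all_hosts FILE -> add every planned host to FILE
add_all_hosts() {
  local entry ip name
  for entry in "${CEPH_HOSTS[@]}"; do
    read -r ip name <<< "$entry"
    add_host_entry "$1" "$ip" "$name"
  done
}
```

On each node, running `add_all_hosts /etc/hosts` as root would apply the entries; running it again leaves the file unchanged.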

Deploy the ceph-deploy node

Time synchronization


root@ceph-deploy:~# apt -y install chrony

root@ceph-deploy:~# systemctl start chrony

root@ceph-deploy:~# systemctl enable chrony
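Ceph monitors are sensitive to clock skew: by default they raise a warning when monitor clocks differ by more than mon_clock_drift_allowed (0.05 seconds). A sketch that checks the offset reported by chronyc tracking against that value; clock_offset_ok is a hypothetical name, and it assumes the usual "System time : N seconds fast/slow of NTP time" line in the chronyc tracking output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check the clock offset reported by `chronyc tracking`.
# Reads the tracking output on stdin; exit status 0 = offset within MAX
# seconds, 1 = offset too large, 2 = no "System time" line found.

# clock_offset_ok [MAX_SECONDS]   (default 0.05, Ceph's mon_clock_drift_allowed)
clock_offset_ok() {
  local max=${1:-0.05}
  awk -v max="$max" '
    # e.g. "System time     : 0.000012345 seconds fast of NTP time"
    $1 == "System" && $2 == "time" { off = $4 + 0; found = 1 }
    END {
      if (!found) exit 2
      if (off < 0) off = -off
      exit (off <= max + 0 ? 0 : 1)
    }'
}
```

Usage: `chronyc tracking | clock_offset_ok && echo "clock offset OK"`.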

Prepare the repository

Import the release key


root@ceph-deploy:~# wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -

OK

Add the repository


root@ceph-deploy:~# echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific $(lsb_release -cs) main" >> /etc/apt/sources.list

root@ceph-deploy:~# apt -y update && apt -y upgrade
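Appending with >> adds another identical deb line every time the step is repeated, and apt then warns that the target is configured multiple times. A sketch that writes the Ceph source to its own file under /etc/apt/sources.list.d instead; the file name ceph.list, the CEPH_MIRROR variable and the function names are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: manage the Ceph APT source as a separate file.

CEPH_MIRROR=${CEPH_MIRROR:-https://mirrors.tuna.tsinghua.edu.cn/ceph}

# ceph_repo_line RELEASE CODENAME -> e.g. "deb .../debian-pacific bionic main"
ceph_repo_line() {
  echo "deb ${CEPH_MIRROR}/debian-$1 $2 main"
}

# write_ceph_repo FILE RELEASE [CODENAME]
# CODENAME defaults to the output of lsb_release -cs (bionic on Ubuntu 18.04).
write_ceph_repo() {
  local file=$1 release=$2 codename=${3:-$(lsb_release -cs)}
  # ">" overwrites the file, so running this twice still leaves one line.
  ceph_repo_line "$release" "$codename" > "$file"
}
```

On a node, as root: `write_ceph_repo /etc/apt/sources.list.d/ceph.list pacific && apt -y update`.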

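Set up the ceph user

The ceph user (uid 64045, home directory /var/lib/ceph) is created by the ceph-common package, so install ceph-common first if it is not yet present on this host.

Check the ceph user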


root@ceph-deploy:~# id ceph

uid=64045(ceph) gid=64045(ceph) groups=64045(ceph)

Set the ceph user's login shell

root@ceph-deploy:~# usermod -s /bin/bash ceph

Set a password for the ceph user

root@ceph-deploy:~# passwd ceph

Allow the ceph user to run privileged commands with sudo

root@ceph-deploy:~# echo "ceph ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers
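Appending to /etc/sudoers with echo works, but a typo there can break sudo entirely, and repeating the command adds duplicate rules. A commonly used alternative is a drop-in file under /etc/sudoers.d (Ubuntu's default /etc/sudoers includes that directory), checked with visudo -c before it is installed. Minimal sketch; the function name and its optional directory argument are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: grant passwordless sudo through a drop-in file instead
# of appending to /etc/sudoers. The rule is written to a temporary file and,
# when visudo is available, syntax-checked before being moved into place.

# grant_nopasswd_sudo USER [DIR]   (DIR defaults to /etc/sudoers.d)
grant_nopasswd_sudo() {
  local user=$1 dir=${2:-/etc/sudoers.d} tmp
  tmp=$(mktemp) || return 1
  printf '%s ALL=(ALL) NOPASSWD: ALL\n' "$user" > "$tmp"
  # visudo -c -f checks the syntax of the given file only.
  if command -v visudo >/dev/null 2>&1 && ! visudo -cf "$tmp" >/dev/null; then
    rm -f "$tmp"
    return 1
  fi
  chmod 0440 "$tmp"          # sudoers files must not be writable
  mv "$tmp" "${dir}/${user}"
}
```

Run as root: `grant_nopasswd_sudo ceph`.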

Install the ceph-deploy tool

Check available ceph-deploy versions


root@ceph-deploy:~# apt-cache madison ceph-deploy

ceph-deploy | 2.0.1-0ubuntu1 | http://mirrors.ucloud.cn/ubuntu focal/universe amd64 Packages

ceph-deploy | 2.0.1 | https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific focal/main amd64 Packages

ceph-deploy | 2.0.1-0ubuntu1 | http://mirrors.ucloud.cn/ubuntu focal/universe Sources

Install ceph-deploy


root@ceph-deploy:~# apt install ceph-deploy -y

ceph-deploy usage


root@ceph-deploy:~# ceph-deploy --help

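new: Start deploying a new Ceph storage cluster and generate the CLUSTER.conf cluster configuration file and the keyring authentication file.

install: Install Ceph packages on remote hosts; the version to install can be chosen with --release.

rgw: Manage RGW daemons (RADOSGW, the object storage gateway).

mgr: Manage MGR daemons (ceph-mgr, the Ceph Manager daemon).

mds: Manage MDS daemons (Ceph Metadata Server).

mon: Manage MON daemons (ceph-mon, the Ceph monitor).

gatherkeys: Fetch from the specified host the authentication keys used to provision new nodes; these keys are used when new MON/OSD/MDS daemons join the cluster.

disk: Manage disks on remote hosts.

osd: Prepare data disks on remote hosts, i.e. add the specified disk on the specified remote host to the Ceph cluster as an OSD.

repo: Manage package repositories on remote hosts.

admin: Push the Ceph cluster configuration file and the client.admin keyring to remote hosts.

config: Push the ceph.conf configuration file to remote hosts, or copy it back from them.

uninstall: Remove packages from remote hosts.

purgedata: Delete Ceph data under /var/lib/ceph; this also deletes the contents of /etc/ceph.

purge: Remove packages and all data from remote hosts.

forgetkeys: Delete all authentication keyrings from the local host, including the client.admin, monitor and bootstrap keyrings.

pkg: Manage packages on remote hosts.

calamari: Install and configure a Calamari web node; Calamari is a web-based monitoring platform.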

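Deploy the Ceph cluster management component

About ceph-common

- ceph-common provides the command-line tools (such as ceph) used to manage a Ceph cluster

- installing ceph-common creates the ceph user

Check available ceph-common versions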


root@ceph-deploy:~# apt-cache madison ceph-common

ceph-common | 16.2.5-1bionic | https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 Packages

ceph-common | 12.2.13-0ubuntu0.18.04.8 | http://mirrors.ucloud.cn/ubuntu bionic-updates/main amd64 Packages

ceph-common | 12.2.13-0ubuntu0.18.04.4 | http://mirrors.ucloud.cn/ubuntu bionic-security/main amd64 Packages

ceph-common | 12.2.4-0ubuntu1 | http://mirrors.ucloud.cn/ubuntu bionic/main amd64 Packages

ceph | 12.2.4-0ubuntu1 | http://mirrors.ucloud.cn/ubuntu bionic/main Sources

ceph | 12.2.13-0ubuntu0.18.04.4 | http://mirrors.ucloud.cn/ubuntu bionic-security/main Sources

ceph | 12.2.13-0ubuntu0.18.04.8 | http://mirrors.ucloud.cn/ubuntu bionic-updates/main Sources

Install ceph-common


root@ceph-deploy:~# apt install ceph-common -y

Verify the ceph-common version


root@ceph-deploy:~# ceph --version

ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
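The apt-cache madison output lists both 16.2.5 from the Ceph mirror and 12.2.x (Luminous) from the Ubuntu archive, so it is worth confirming that the installed package really is Pacific. A small sketch; ceph_major_version and require_pacific are hypothetical names, and the parsing assumes the "ceph version X.Y.Z (...)" format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: check which Ceph release is installed.

# ceph_major_version [VERSION_LINE] -> e.g. 16
# Without an argument, the line is taken from `ceph --version`.
ceph_major_version() {
  local line=${1:-$(ceph --version)} ver
  ver=$(awk '{ print $3 }' <<< "$line")   # third field, e.g. 16.2.5
  echo "${ver%%.*}"                        # keep the part before the first dot
}

# require_pacific [VERSION_LINE] -> exit status 0 only for a 16.x release
require_pacific() {
  [[ $(ceph_major_version "$@") == 16 ]]
}
```

Usage: `require_pacific || echo "not Pacific, check the apt sources"`.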

Configure the mon node (ceph-mon-01)

Time synchronization

root@ceph-mon-01:~# apt -y install chrony

root@ceph-mon-01:~# systemctl start chrony

root@ceph-mon-01:~# systemctl enable chrony

Prepare the repository

Import the release key

root@ceph-mon-01:~# wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -

OK

Add the repository

root@ceph-mon-01:~# echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific $(lsb_release -cs) main" >> /etc/apt/sources.list

root@ceph-mon-01:~# apt -y update && apt -y upgrade

Install ceph-mon

Check available ceph-mon versions

root@ceph-mon-01:~# apt-cache madison ceph-mon

ceph-mon | 16.2.5-1bionic | https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 Packages

ceph-mon | 12.2.13-0ubuntu0.18.04.8 | http://mirrors.ucloud.cn/ubuntu bionic-updates/main amd64 Packages

ceph-mon | 12.2.13-0ubuntu0.18.04.4 | http://mirrors.ucloud.cn/ubuntu bionic-security/main amd64 Packages

ceph-mon | 12.2.4-0ubuntu1 | http://mirrors.ucloud.cn/ubuntu bionic/main amd64 Packages

ceph | 12.2.4-0ubuntu1 | http://mirrors.ucloud.cn/ubuntu bionic/main Sources

ceph | 12.2.13-0ubuntu0.18.04.4 | http://mirrors.ucloud.cn/ubuntu bionic-security/main Sources

ceph | 12.2.13-0ubuntu0.18.04.8 | http://mirrors.ucloud.cn/ubuntu bionic-updates/main Sources

Install ceph-mon

root@ceph-mon-01:~# apt install ceph-mon -y

Verify the version

root@ceph-mon-01:~# ceph-mon --version

ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)

root@ceph-mon-01:~# ceph --version

ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)

Set up the ceph user

Check the ceph user

root@ceph-mon-01:~# id ceph

uid=64045(ceph) gid=64045(ceph) groups=64045(ceph)

Set the ceph user's login shell

root@ceph-mon-01:~# usermod -s /bin/bash ceph

Set a password for the ceph user

root@ceph-mon-01:~# passwd ceph

Allow the ceph user to run privileged commands with sudo

root@ceph-mon-01:~# echo "ceph ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers

Initialize the ceph-mon-01 node


root@ceph-deploy:~# su - ceph

ceph@ceph-deploy:~$ mkdir ceph-cluster

ceph@ceph-deploy:~$ cd ceph-cluster/

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy new --cluster-network 172.16.10.0/24 --public-network 172.16.10.0/24 ceph-mon-01

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy new --cluster-network 172.16.10.0/24 --public-network 172.16.10.0/24 ceph-mon-01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f5d028d3dc0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : True

[ceph_deploy.cli][INFO ] mon : ['ceph-mon-01']

[ceph_deploy.cli][INFO ] func : <function new at 0x7f5cffccbc50>

[ceph_deploy.cli][INFO ] public_network : 172.16.10.0/24

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : 172.16.10.0/24

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph-mon-01][DEBUG ] connected to host: ceph-deploy

[ceph-mon-01][INFO ] Running command: ssh -CT -o BatchMode=yes ceph-mon-01

[ceph_deploy.new][WARNIN] could not connect via SSH

[ceph_deploy.new][INFO ] will connect again with password prompt

ceph@ceph-mon-01's password:

[ceph-mon-01][DEBUG ] connected to host: ceph-mon-01

[ceph-mon-01][DEBUG ] detect platform information from remote host

[ceph-mon-01][DEBUG ] detect machine type

[ceph-mon-01][WARNIN] .ssh/authorized_keys does not exist, will skip adding keys

ceph@ceph-mon-01's password:

[ceph-mon-01][DEBUG ] connection detected need for sudo

ceph@ceph-mon-01's password:

sudo: unable to resolve host ceph-mon-01

[ceph-mon-01][DEBUG ] connected to host: ceph-mon-01

[ceph-mon-01][DEBUG ] detect platform information from remote host

[ceph-mon-01][DEBUG ] detect machine type

[ceph-mon-01][DEBUG ] find the location of an executable

[ceph-mon-01][INFO ] Running command: sudo /bin/ip link show

[ceph-mon-01][INFO ] Running command: sudo /bin/ip addr show

[ceph-mon-01][DEBUG ] IP addresses found: [u'172.16.10.148']

[ceph_deploy.new][DEBUG ] Resolving host ceph-mon-01

[ceph_deploy.new][DEBUG ] Monitor ceph-mon-01 at 172.16.10.148

[ceph_deploy.new][DEBUG ] Monitor initial members are ['ceph-mon-01']

[ceph_deploy.new][DEBUG ] Monitor addrs are [u'172.16.10.148']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...
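The log above shows ceph-deploy falling back to password prompts (could not connect via SSH, .ssh/authorized_keys does not exist), and every later ceph-deploy run asks for the ceph user's password several times. Installing an SSH key for the ceph user on ceph-deploy avoids this. A sketch using the standard OpenSSH tools ssh-keygen and ssh-copy-id, meant to be run as the ceph user on ceph-deploy; the NODES list, the run wrapper and the DRY_RUN switch are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: passwordless SSH from ceph-deploy (as the ceph user)
# to every other node. DRY_RUN=1 prints the commands instead of running them.

NODES=(ceph-mon-01 ceph-mon-02 ceph-mon-03 ceph-mgr-01 ceph-mgr-02
       ceph-node-01 ceph-node-02 ceph-node-03)

# run CMD... -> execute, or only print when DRY_RUN=1
run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

distribute_ssh_key() {
  local key=${HOME}/.ssh/id_rsa node
  run mkdir -p -m 700 "${HOME}/.ssh"
  # Generate a passphrase-less key pair only if none exists yet.
  if [[ ! -f $key ]]; then
    run ssh-keygen -t rsa -N '' -f "$key"
  fi
  for node in "${NODES[@]}"; do
    # Asks once for ceph@node's password, then appends the key to authorized_keys.
    run ssh-copy-id -i "${key}.pub" "ceph@${node}"
  done
}
```

`DRY_RUN=1 distribute_ssh_key` lists what would be executed; without DRY_RUN it asks for each node's ceph password once.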

Verify the ceph-mon-01 initialization


ceph@ceph-deploy:~/ceph-cluster$ ls -l

total 28

-rw-rw-r-- 1 ceph ceph 267 Aug 27 16:55 ceph.conf #auto-generated configuration file

-rw-rw-r-- 1 ceph ceph 18394 Aug 27 16:55 ceph-deploy-ceph.log #initialization log

-rw------- 1 ceph ceph 73 Aug 27 16:55 ceph.mon.keyring #keyring used to authenticate internal communication between ceph mon nodes

ceph@ceph-deploy:~/ceph-cluster$ cat ceph.conf

[global]

fsid = 6e521054-1532-4bc8-9971-7f8ae93e8430

public_network = 172.16.10.0/24

cluster_network = 172.16.10.0/24

mon_initial_members = ceph-mon-01

mon_host = 172.16.10.148

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx
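Before running ceph-deploy mon create-initial it is worth checking that the generated ceph.conf contains the networks and monitor address you expect. A small awk-based sketch that reads one key from the [global] section; conf_get is a hypothetical name. It treats spaces and underscores in option names as equivalent, as Ceph does (public network and public_network name the same option).

```shell
#!/usr/bin/env bash
# Hypothetical helper: read KEY from the [global] section of a ceph.conf file.

# conf_get FILE KEY   e.g. conf_get ceph.conf public_network
conf_get() {
  awk -v key="$2" '
    BEGIN { gsub(/[ \t]+/, "_", key) }              # "mon host" == "mon_host"
    /^[ \t]*\[/ { in_global = ($0 ~ /^[ \t]*\[global\]/); next }
    in_global && index($0, "=") > 0 {
      name = substr($0, 1, index($0, "=") - 1)
      value = substr($0, index($0, "=") + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", name)             # trim the option name
      gsub(/[ \t]+/, "_", name)
      gsub(/^[ \t]+|[ \t]+$/, "", value)            # trim the value
      if (name == key) { print value; exit }
    }' "$1"
}
```

For the file above, `conf_get ceph.conf mon_host` should print 172.16.10.148.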

Configure the ceph-mon-01 node


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy mon create-initial

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mon create-initial

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create-initial

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f47d95762d0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mon at 0x7f47d9553a50>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] keyrings : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts ceph-mon-01

[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-mon-01 ...

ceph@ceph-mon-01's password:

[ceph-mon-01][DEBUG ] connection detected need for sudo

ceph@ceph-mon-01's password:

sudo: unable to resolve host ceph-mon-01

[ceph-mon-01][DEBUG ] connected to host: ceph-mon-01

[ceph-mon-01][DEBUG ] detect platform information from remote host

[ceph-mon-01][DEBUG ] detect machine type

[ceph-mon-01][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: Ubuntu 18.04 bionic

[ceph-mon-01][DEBUG ] determining if provided host has same hostname in remote

[ceph-mon-01][DEBUG ] get remote short hostname

[ceph-mon-01][DEBUG ] deploying mon to ceph-mon-01

[ceph-mon-01][DEBUG ] get remote short hostname

[ceph-mon-01][DEBUG ] remote hostname: ceph-mon-01

[ceph-mon-01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mon-01][DEBUG ] create the mon path if it does not exist

[ceph-mon-01][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph-mon-01/done

[ceph-mon-01][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-ceph-mon-01/done

[ceph-mon-01][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-ceph-mon-01.mon.keyring

[ceph-mon-01][DEBUG ] create the monitor keyring file

[ceph-mon-01][INFO ] Running command: sudo ceph-mon --cluster ceph --mkfs -i ceph-mon-01 --keyring /var/lib/ceph/tmp/ceph-ceph-mon-01.mon.keyring --setuser 64045 --setgroup 64045

[ceph-mon-01][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-ceph-mon-01.mon.keyring

[ceph-mon-01][DEBUG ] create a done file to avoid re-doing the mon deployment

[ceph-mon-01][DEBUG ] create the init path if it does not exist

[ceph-mon-01][INFO ] Running command: sudo systemctl enable ceph.target

[ceph-mon-01][INFO ] Running command: sudo systemctl enable ceph-mon@ceph-mon-01

[ceph-mon-01][INFO ] Running command: sudo systemctl start ceph-mon@ceph-mon-01

[ceph-mon-01][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-mon-01.asok mon_status

[ceph-mon-01][DEBUG ] ********************************************************************************

[ceph-mon-01][DEBUG ] status for monitor: mon.ceph-mon-01

[ceph-mon-01][DEBUG ] {

[ceph-mon-01][DEBUG ] "election_epoch": 3,

[ceph-mon-01][DEBUG ] "extra_probe_peers": [],

[ceph-mon-01][DEBUG ] "feature_map": {

[ceph-mon-01][DEBUG ] "mon": [

[ceph-mon-01][DEBUG ] {

[ceph-mon-01][DEBUG ] "features": "0x3f01cfb9fffdffff",

[ceph-mon-01][DEBUG ] "num": 1,

[ceph-mon-01][DEBUG ] "release": "luminous"

[ceph-mon-01][DEBUG ] }

[ceph-mon-01][DEBUG ] ]

[ceph-mon-01][DEBUG ] },

[ceph-mon-01][DEBUG ] "features": {

[ceph-mon-01][DEBUG ] "quorum_con": "4540138297136906239",

[ceph-mon-01][DEBUG ] "quorum_mon": [

[ceph-mon-01][DEBUG ] "kraken",

[ceph-mon-01][DEBUG ] "luminous",

[ceph-mon-01][DEBUG ] "mimic",

[ceph-mon-01][DEBUG ] "osdmap-prune",

[ceph-mon-01][DEBUG ] "nautilus",

[ceph-mon-01][DEBUG ] "octopus",

[ceph-mon-01][DEBUG ] "pacific",

[ceph-mon-01][DEBUG ] "elector-pinging"

[ceph-mon-01][DEBUG ] ],

[ceph-mon-01][DEBUG ] "required_con": "2449958747317026820",

[ceph-mon-01][DEBUG ] "required_mon": [

[ceph-mon-01][DEBUG ] "kraken",

[ceph-mon-01][DEBUG ] "luminous",

[ceph-mon-01][DEBUG ] "mimic",

[ceph-mon-01][DEBUG ] "osdmap-prune",

[ceph-mon-01][DEBUG ] "nautilus",

[ceph-mon-01][DEBUG ] "octopus",

[ceph-mon-01][DEBUG ] "pacific",

[ceph-mon-01][DEBUG ] "elector-pinging"

[ceph-mon-01][DEBUG ] ]

[ceph-mon-01][DEBUG ] },

[ceph-mon-01][DEBUG ] "monmap": {

[ceph-mon-01][DEBUG ] "created": "2021-08-29T06:36:59.023456Z",

[ceph-mon-01][DEBUG ] "disallowed_leaders: ": "",

[ceph-mon-01][DEBUG ] "election_strategy": 1,

[ceph-mon-01][DEBUG ] "epoch": 1,

[ceph-mon-01][DEBUG ] "features": {

[ceph-mon-01][DEBUG ] "optional": [],

[ceph-mon-01][DEBUG ] "persistent": [

[ceph-mon-01][DEBUG ] "kraken",

[ceph-mon-01][DEBUG ] "luminous",

[ceph-mon-01][DEBUG ] "mimic",

[ceph-mon-01][DEBUG ] "osdmap-prune",

[ceph-mon-01][DEBUG ] "nautilus",

[ceph-mon-01][DEBUG ] "octopus",

[ceph-mon-01][DEBUG ] "pacific",

[ceph-mon-01][DEBUG ] "elector-pinging"

[ceph-mon-01][DEBUG ] ]

[ceph-mon-01][DEBUG ] },

[ceph-mon-01][DEBUG ] "fsid": "6e521054-1532-4bc8-9971-7f8ae93e8430",

[ceph-mon-01][DEBUG ] "min_mon_release": 16,

[ceph-mon-01][DEBUG ] "min_mon_release_name": "pacific",

[ceph-mon-01][DEBUG ] "modified": "2021-08-29T06:36:59.023456Z",

[ceph-mon-01][DEBUG ] "mons": [

[ceph-mon-01][DEBUG ] {

[ceph-mon-01][DEBUG ] "addr": "172.16.10.148:6789/0",

[ceph-mon-01][DEBUG ] "crush_location": "{}",

[ceph-mon-01][DEBUG ] "name": "ceph-mon-01",

[ceph-mon-01][DEBUG ] "priority": 0,

[ceph-mon-01][DEBUG ] "public_addr": "172.16.10.148:6789/0",

[ceph-mon-01][DEBUG ] "public_addrs": {

[ceph-mon-01][DEBUG ] "addrvec": [

[ceph-mon-01][DEBUG ] {

[ceph-mon-01][DEBUG ] "addr": "172.16.10.148:3300",

[ceph-mon-01][DEBUG ] "nonce": 0,

[ceph-mon-01][DEBUG ] "type": "v2"

[ceph-mon-01][DEBUG ] },

[ceph-mon-01][DEBUG ] {

[ceph-mon-01][DEBUG ] "addr": "172.16.10.148:6789",

[ceph-mon-01][DEBUG ] "nonce": 0,

[ceph-mon-01][DEBUG ] "type": "v1"

[ceph-mon-01][DEBUG ] }

[ceph-mon-01][DEBUG ] ]

[ceph-mon-01][DEBUG ] },

[ceph-mon-01][DEBUG ] "rank": 0,

[ceph-mon-01][DEBUG ] "weight": 0

[ceph-mon-01][DEBUG ] }

[ceph-mon-01][DEBUG ] ],

[ceph-mon-01][DEBUG ] "stretch_mode": false

[ceph-mon-01][DEBUG ] },

[ceph-mon-01][DEBUG ] "name": "ceph-mon-01",

[ceph-mon-01][DEBUG ] "outside_quorum": [],

[ceph-mon-01][DEBUG ] "quorum": [

[ceph-mon-01][DEBUG ] 0

[ceph-mon-01][DEBUG ] ],

[ceph-mon-01][DEBUG ] "quorum_age": 2,

[ceph-mon-01][DEBUG ] "rank": 0,

[ceph-mon-01][DEBUG ] "state": "leader",

[ceph-mon-01][DEBUG ] "stretch_mode": false,

[ceph-mon-01][DEBUG ] "sync_provider": []

[ceph-mon-01][DEBUG ] }

[ceph-mon-01][DEBUG ] ********************************************************************************

[ceph-mon-01][INFO ] monitor: mon.ceph-mon-01 is running

[ceph-mon-01][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-mon-01.asok mon_status

[ceph_deploy.mon][INFO ] processing monitor mon.ceph-mon-01

ceph@ceph-mon-01's password:

[ceph-mon-01][DEBUG ] connection detected need for sudo

ceph@ceph-mon-01's password:

sudo: unable to resolve host ceph-mon-01

[ceph-mon-01][DEBUG ] connected to host: ceph-mon-01

[ceph-mon-01][DEBUG ] detect platform information from remote host

[ceph-mon-01][DEBUG ] detect machine type

[ceph-mon-01][DEBUG ] find the location of an executable

[ceph-mon-01][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-mon-01.asok mon_status

[ceph_deploy.mon][INFO ] mon.ceph-mon-01 monitor has reached quorum!

[ceph_deploy.mon][INFO ] all initial monitors are running and have formed quorum

[ceph_deploy.mon][INFO ] Running gatherkeys...

[ceph_deploy.gatherkeys][INFO ] Storing keys in temp directory /tmp/tmptXvt5K

ceph@ceph-mon-01's password:

[ceph-mon-01][DEBUG ] connection detected need for sudo

ceph@ceph-mon-01's password:

sudo: unable to resolve host ceph-mon-01

[ceph-mon-01][DEBUG ] connected to host: ceph-mon-01

[ceph-mon-01][DEBUG ] detect platform information from remote host

[ceph-mon-01][DEBUG ] detect machine type

[ceph-mon-01][DEBUG ] get remote short hostname

[ceph-mon-01][DEBUG ] fetch remote file

[ceph-mon-01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --admin-daemon=/var/run/ceph/ceph-mon.ceph-mon-01.asok mon_status

[ceph-mon-01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-mon-01/keyring auth get client.admin

[ceph-mon-01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-mon-01/keyring auth get client.bootstrap-mds

[ceph-mon-01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-mon-01/keyring auth get client.bootstrap-mgr

[ceph-mon-01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-mon-01/keyring auth get client.bootstrap-osd

[ceph-mon-01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph-mon-01/keyring auth get client.bootstrap-rgw

[ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring

[ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring

[ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmptXvt5K

Verify the ceph-mon-01 node

root@ceph-mon-01:/etc/ceph# ps -ef |grep ceph-mon

ceph 28546 1 0 14:36 ? 00:00:00 /usr/bin/ceph-mon -f --cluster ceph --id ceph-mon-01 --setuser ceph --setgroup ceph

ceph-mon-01 service management

Check the ceph-mon-01 service status


root@ceph-mon-01:/etc/ceph# systemctl status ceph-mon@ceph-mon-01

● ceph-mon@ceph-mon-01.service - Ceph cluster monitor daemon

Loaded: loaded (/lib/systemd/system/ceph-mon@.service; indirect; vendor preset: enabled)

Active: active (running) since Sun 2021-08-29 14:36:59 CST; 3min 58s ago

Main PID: 28546 (ceph-mon)

Tasks: 26

CGroup: /system.slice/system-ceph\x2dmon.slice/ceph-mon@ceph-mon-01.service

└─28546 /usr/bin/ceph-mon -f --cluster ceph --id ceph-mon-01 --setuser ceph --setgroup ceph

Aug 29 14:36:59 ceph-mon-01 systemd[1]: Started Ceph cluster monitor daemon.

Restart the ceph-mon-01 service

root@ceph-mon-01:~# systemctl restart ceph-mon@ceph-mon-01

Distribute the admin keyring to manage the cluster

Push the admin keyring to the ceph-deploy node


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy admin ceph-deploy

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy admin ceph-deploy

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f8b71aa7320>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['ceph-deploy']

[ceph_deploy.cli][INFO ] func : <function admin at 0x7f8b723ab9d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-deploy

[ceph-deploy][DEBUG ] connection detected need for sudo

[ceph-deploy][DEBUG ] connected to host: ceph-deploy

[ceph-deploy][DEBUG ] detect platform information from remote host

[ceph-deploy][DEBUG ] detect machine type

[ceph-deploy][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

Grant the ceph user access to the admin keyring

root@ceph-deploy:~# setfacl -m u:ceph:rw /etc/ceph/ceph.client.admin.keyring

Verify the Ceph cluster status

Check the Ceph cluster versions


ceph@ceph-deploy:~/ceph-cluster$ ceph versions

{

"mon": {

"ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)": 1

},

"mgr": {},

"osd": {},

"mds": {},

"overall": {

"ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)": 1

}

}

Check the cluster status


ceph@ceph-deploy:~/ceph-cluster$ ceph -s

cluster:

id: 6e521054-1532-4bc8-9971-7f8ae93e8430

health: HEALTH_WARN

mon is allowing insecure global_id reclaim

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph-mon-01 (age 5m)

mgr: no daemons active

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

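Resolve the HEALTH_WARN warnings

mon is allowing insecure global_id reclaim

This warning means the monitors still let clients reclaim their global_id in an insecure way, a behaviour kept only for compatibility with unpatched clients. On a new cluster whose clients are all up to date, it can be disabled: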

ceph@ceph-deploy:~/ceph-cluster$ ceph config set mon auth_allow_insecure_global_id_reclaim false

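OSD count 0 < osd_pool_default_size 3

This warning is expected at this stage: it clears once the number of OSDs reaches osd_pool_default_size (3 here), i.e. after the OSD nodes are deployed.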

Verify the HEALTH_WARN status


ceph@ceph-deploy:~/ceph-cluster$ ceph -s

cluster:

id: 6e521054-1532-4bc8-9971-7f8ae93e8430

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph-mon-01 (age 7m)

mgr: no daemons active

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:
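The remaining OSD count warning is expected until OSDs are added. When the later steps are scripted, it can be convenient to poll ceph health until the cluster reaches a given state. A sketch; wait_for_health and the CEPH_CMD override (there only so the function can be pointed at a stub) are hypothetical, and it assumes that the first word of the ceph health output is the status, as in HEALTH_WARN followed by the check summaries.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll `ceph health` until it reports WANTED or a timeout
# expires. CEPH_CMD can point at another command (e.g. a stub for testing).

CEPH_CMD=${CEPH_CMD:-ceph}

# wait_for_health WANTED [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
wait_for_health() {
  local wanted=$1 timeout=${2:-300} interval=${3:-5}
  local deadline=$(( SECONDS + timeout )) status
  while :; do
    # First word of `ceph health`, e.g. HEALTH_OK / HEALTH_WARN / HEALTH_ERR
    status=$($CEPH_CMD health 2>/dev/null | awk '{ print $1; exit }')
    if [[ $status == "$wanted" ]]; then
      return 0
    fi
    if (( SECONDS >= deadline )); then
      echo "timed out, last status: ${status:-unknown}" >&2
      return 1
    fi
    sleep "$interval"
  done
}
```

After the OSDs are created, `wait_for_health HEALTH_OK 600 10` would wait up to ten minutes, checking every ten seconds.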

Deploy the manager node (ceph-mgr-01)

Time synchronization

root@ceph-mgr-01:~# apt -y install chrony

root@ceph-mgr-01:~# systemctl start chrony

root@ceph-mgr-01:~# systemctl enable chrony

Prepare the repository

Import the release key

root@ceph-mgr-01:~# wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -

OK

Add the repository

root@ceph-mgr-01:~# echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific $(lsb_release -cs) main" >> /etc/apt/sources.list

root@ceph-mgr-01:~# apt -y update && apt -y upgrade

Install ceph-mgr

Check available ceph-mgr versions

root@ceph-mgr-01:~# apt-cache madison ceph-mgr

ceph-mgr | 16.2.5-1bionic | https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 Packages

ceph-mgr | 12.2.13-0ubuntu0.18.04.8 | http://mirrors.ucloud.cn/ubuntu bionic-updates/main amd64 Packages

ceph-mgr | 12.2.13-0ubuntu0.18.04.4 | http://mirrors.ucloud.cn/ubuntu bionic-security/main amd64 Packages

ceph-mgr | 12.2.4-0ubuntu1 | http://mirrors.ucloud.cn/ubuntu bionic/main amd64 Packages

ceph | 12.2.4-0ubuntu1 | http://mirrors.ucloud.cn/ubuntu bionic/main Sources

ceph | 12.2.13-0ubuntu0.18.04.4 | http://mirrors.ucloud.cn/ubuntu bionic-security/main Sources

ceph | 12.2.13-0ubuntu0.18.04.8 | http://mirrors.ucloud.cn/ubuntu bionic-updates/main Sources

Install ceph-mgr

root@ceph-mgr-01:~# apt -y install ceph-mgr

Verify the ceph-mgr version

root@ceph-mgr-01:~# ceph-mgr --version

ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)

Set up the ceph user

Check the ceph user

root@ceph-mgr-01:~# id ceph

uid=64045(ceph) gid=64045(ceph) groups=64045(ceph)

Set the ceph user's login shell

root@ceph-mgr-01:~# usermod -s /bin/bash ceph

Set a password for the ceph user

root@ceph-mgr-01:~# passwd ceph

Allow the ceph user to run privileged commands with sudo

root@ceph-mgr-01:~# echo "ceph ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers

Initialize the ceph-mgr-01 node


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy mgr create ceph-mgr-01

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mgr create ceph-mgr-01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] mgr : [('ceph-mgr-01', 'ceph-mgr-01')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fe20cd96e60>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mgr at 0x7fe20d1f70d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts ceph-mgr-01:ceph-mgr-01

The authenticity of host 'ceph-mgr-01 (172.16.10.225)' can't be established.

ECDSA key fingerprint is SHA256:FSg4Sx1V2Vrn4ZHy3xng6Cx0V0k8ctGe4Bh60m8IsAU.

Are you sure you want to continue connecting (yes/no)?

Warning: Permanently added 'ceph-mgr-01,172.16.10.225' (ECDSA) to the list of known hosts.

ceph@ceph-mgr-01's password:

[ceph-mgr-01][DEBUG ] connection detected need for sudo

ceph@ceph-mgr-01's password:

sudo: unable to resolve host ceph-mgr-01

[ceph-mgr-01][DEBUG ] connected to host: ceph-mgr-01

[ceph-mgr-01][DEBUG ] detect platform information from remote host

[ceph-mgr-01][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to ceph-mgr-01

[ceph-mgr-01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mgr-01][WARNIN] mgr keyring does not exist yet, creating one

[ceph-mgr-01][DEBUG ] create a keyring file

[ceph-mgr-01][DEBUG ] create path recursively if it doesn't exist

[ceph-mgr-01][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.ceph-mgr-01 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-ceph-mgr-01/keyring

[ceph-mgr-01][INFO ] Running command: sudo systemctl enable ceph-mgr@ceph-mgr-01

[ceph-mgr-01][WARNIN] Created symlink /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@ceph-mgr-01.service → /lib/systemd/system/ceph-mgr@.service.

[ceph-mgr-01][INFO ] Running command: sudo systemctl start ceph-mgr@ceph-mgr-01

[ceph-mgr-01][INFO ] Running command: sudo systemctl enable ceph.target

Verify the ceph-mgr-01 node

root@ceph-mgr-01:~# ps -ef |grep ceph-mgr

ceph 3585 1 4 16:00 ? 00:00:07 /usr/bin/ceph-mgr -f --cluster ceph --id ceph-mgr-01 --setuser ceph --setgroup ceph

ceph-mgr-01 service management

Check the ceph-mgr-01 service status


root@ceph-mgr-01:~# systemctl status ceph-mgr@ceph-mgr-01

● ceph-mgr@ceph-mgr-01.service - Ceph cluster manager daemon

Loaded: loaded (/lib/systemd/system/ceph-mgr@.service; indirect; vendor preset: enabled)

Active: active (running) since Sun 2021-08-29 16:00:02 CST; 4min 45s ago

Main PID: 3585 (ceph-mgr)

Tasks: 62 (limit: 1032)

CGroup: /system.slice/system-ceph\x2dmgr.slice/ceph-mgr@ceph-mgr-01.service

└─3585 /usr/bin/ceph-mgr -f --cluster ceph --id ceph-mgr-01 --setuser ceph --setgroup ceph

Aug 29 16:00:02 ceph-mgr-01 systemd[1]: Started Ceph cluster manager daemon.

Aug 29 16:00:02 ceph-mgr-01 systemd[1]: /lib/systemd/system/ceph-mgr@.service:21: Unknown lvalue 'ProtectHostname' in section 'Service'

Aug 29 16:00:02 ceph-mgr-01 systemd[1]: /lib/systemd/system/ceph-mgr@.service:22: Unknown lvalue 'ProtectKernelLogs' in section 'Service'

Restart the ceph-mgr-01 service

root@ceph-mgr-01:~# systemctl restart ceph-mgr@ceph-mgr-01

Verify the Ceph cluster status


ceph@ceph-deploy:~/ceph-cluster$ ceph -s

cluster:

id: 6e521054-1532-4bc8-9971-7f8ae93e8430

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph-mon-01 (age 90m)

mgr: ceph-mgr-01(active, since 68s)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

Check the Ceph cluster versions


ceph@ceph-deploy:~/ceph-cluster$ ceph versions

{

"mon": {

"ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)": 1

},

"mgr": {

"ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)": 1

},

"osd": {},

"mds": {},

"overall": {

"ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)": 2

}

}

Deploy the OSD nodes

Time synchronization

ceph-node-01

root@ceph-node-01:~# apt -y install chrony

root@ceph-node-01:~# systemctl start chrony

root@ceph-node-01:~# systemctl enable chrony

ceph-node-02

root@ceph-node-02:~# apt -y install chrony

root@ceph-node-02:~# systemctl start chrony

root@ceph-node-02:~# systemctl enable chrony

ceph-node-03

root@ceph-node-03:~# apt -y install chrony

root@ceph-node-03:~# systemctl start chrony

root@ceph-node-03:~# systemctl enable chrony

Prepare the repository

Import the release key

root@ceph-node-01:~# wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -

root@ceph-node-02:~# wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -

root@ceph-node-03:~# wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -

Add the repository

root@ceph-node-01:~# echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific $(lsb_release -cs) main" >> /etc/apt/sources.list

root@ceph-node-02:~# echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific $(lsb_release -cs) main" >> /etc/apt/sources.list

root@ceph-node-03:~# echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific $(lsb_release -cs) main" >> /etc/apt/sources.list

Update the package index and upgrade packages

root@ceph-node-01:~# apt -y update && apt -y upgrade

root@ceph-node-02:~# apt -y update && apt -y upgrade

root@ceph-node-03:~# apt -y update && apt -y upgrade

Install the common component ceph-common

root@ceph-node-01:~# apt -y install ceph-common

root@ceph-node-02:~# apt -y install ceph-common

root@ceph-node-03:~# apt -y install ceph-common

Set up the ceph user

Check the ceph user

root@ceph-node-01:~# id ceph

uid=64045(ceph) gid=64045(ceph) groups=64045(ceph)

root@ceph-node-02:~# id ceph

uid=64045(ceph) gid=64045(ceph) groups=64045(ceph)

root@ceph-node-03:~# id ceph

uid=64045(ceph) gid=64045(ceph) groups=64045(ceph)

Set the ceph user's login shell

root@ceph-node-01:~# usermod -s /bin/bash ceph

root@ceph-node-02:~# usermod -s /bin/bash ceph

root@ceph-node-03:~# usermod -s /bin/bash ceph

Set a password for the ceph user

root@ceph-node-01:~# passwd ceph

root@ceph-node-02:~# passwd ceph

root@ceph-node-03:~# passwd ceph

Allow the ceph user to run privileged commands with sudo

root@ceph-node-01:~# echo "ceph ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers

root@ceph-node-02:~# echo "ceph ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers

root@ceph-node-03:~# echo "ceph ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers
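Appending directly to `/etc/sudoers` works, but a syntax error in that file can break `sudo` entirely. A common safer pattern is to put the rule in a drop-in file under `/etc/sudoers.d/` and validate it with `visudo -c` before installing it. This sketch assumes the stock Ubuntu `#includedir /etc/sudoers.d` line is present. The helper `install_sudoers_dropin` is hypothetical. It takes the target directory as an argument so it can be tried on a scratch directory first.

```shell
#!/bin/bash
# install_sudoers_dropin DIR USER
# Write "USER ALL=(ALL) NOPASSWD: ALL" to DIR/USER with mode 0440,
# validating the syntax with visudo first when visudo is available.
install_sudoers_dropin() {
    local dir="$1" user="$2" tmp
    tmp=$(mktemp) || return 1
    echo "${user} ALL=(ALL) NOPASSWD: ALL" > "$tmp"
    if command -v visudo >/dev/null 2>&1; then
        visudo -c -f "$tmp" >/dev/null || { rm -f "$tmp"; return 1; }
    fi
    install -m 0440 "$tmp" "${dir}/${user}"
    rm -f "$tmp"
}

# Usage on each node (as root):
# install_sudoers_dropin /etc/sudoers.d ceph
```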

Initialize the OSD nodes

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy install --no-adjust-repos --nogpgcheck ceph-node-01 ceph-node-02 ceph-node-03

Install the Ceph runtime packages on the OSD nodes

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy install --release pacific ceph-node-01

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy install --release pacific ceph-node-02

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy install --release pacific ceph-node-03

List the disks on the OSD nodes

Disk list of ceph-node-01


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node-01

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk list ceph-node-01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : list

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fdf5e1122d0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ['ceph-node-01']

[ceph_deploy.cli][INFO ] func : <function disk at 0x7fdf5e0e8250>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

[ceph-node-01][DEBUG ] connection detected need for sudo

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

sudo: unable to resolve host ceph-osd-01

[ceph-node-01][DEBUG ] connected to host: ceph-node-01

[ceph-node-01][DEBUG ] detect platform information from remote host

[ceph-node-01][DEBUG ] detect machine type

[ceph-node-01][DEBUG ] find the location of an executable

[ceph-node-01][INFO ] Running command: sudo fdisk -l

[ceph-node-01][INFO ] Disk /dev/vda: 20 GiB, 21474836480 bytes, 41943040 sectors

[ceph-node-01][INFO ] Disk /dev/vdb: 20 GiB, 21474836480 bytes, 41943040 sectors

[ceph-node-01][INFO ] Disk /dev/vdc: 20 GiB, 21474836480 bytes, 41943040 sectors

[ceph-node-01][INFO ] Disk /dev/vdd: 20 GiB, 21474836480 bytes, 41943040 sectors
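The log above shows two recurring problems. First, OpenSSH warns that the ECDSA host key stored for `ceph-node-01` differs from the one stored for `172.16.10.126`, because entry 5 of `/var/lib/ceph/.ssh/known_hosts` is stale. Second, every connection prompts for the `ceph` user's password. The usual fix, run as the `ceph` user on ceph-deploy, is to drop the stale entries with `ssh-keygen -R` and then push the deploy key with `ssh-copy-id`. This sketch assumes the `ceph` user already has a key pair; the `DRY_RUN` switch and the helper names are illustrative. The IP addresses come from the server planning table.

```shell
#!/bin/bash
# run: print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# fix_node_ssh HOST IP
# 1. Remove stale known_hosts entries for both the IP and the host name.
# 2. Copy the ceph user's public key so later ceph-deploy runs are passwordless.
fix_node_ssh() {
    local host="$1" ip="$2"
    run ssh-keygen -f "$HOME/.ssh/known_hosts" -R "$ip"
    run ssh-keygen -f "$HOME/.ssh/known_hosts" -R "$host"
    run ssh-copy-id "ceph@${host}"
}

# Usage (as the ceph user on ceph-deploy; prefix with DRY_RUN=1 to only print):
# fix_node_ssh ceph-node-01 172.16.10.126
# fix_node_ssh ceph-node-02 172.16.10.76
# fix_node_ssh ceph-node-03 172.16.10.44
```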

Disk list of ceph-node-02


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node-02

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk list ceph-node-02

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : list

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fa7eae632d0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ['ceph-node-02']

[ceph_deploy.cli][INFO ] func : <function disk at 0x7fa7eae39250>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

ceph@ceph-node-02's password:

[ceph-node-02][DEBUG ] connection detected need for sudo

ceph@ceph-node-02's password:

sudo: unable to resolve host ceph-osd-02

[ceph-node-02][DEBUG ] connected to host: ceph-node-02

[ceph-node-02][DEBUG ] detect platform information from remote host

[ceph-node-02][DEBUG ] detect machine type

[ceph-node-02][DEBUG ] find the location of an executable

[ceph-node-02][INFO ] Running command: sudo fdisk -l

[ceph-node-02][INFO ] Disk /dev/vda: 20 GiB, 21474836480 bytes, 41943040 sectors

[ceph-node-02][INFO ] Disk /dev/vdb: 20 GiB, 21474836480 bytes, 41943040 sectors

[ceph-node-02][INFO ] Disk /dev/vdc: 20 GiB, 21474836480 bytes, 41943040 sectors

[ceph-node-02][INFO ] Disk /dev/vdd: 20 GiB, 21474836480 bytes, 41943040 sectors
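The line `sudo: unable to resolve host ceph-osd-0X` means the node's own hostname is missing from its `/etc/hosts`. The name reported in the first run (`ceph-osd-01`) also differs from `ceph-node-01` used elsewhere, so the hostnames and the `/etc/hosts` entries should be made consistent. This sketch assumes Ubuntu's convention of mapping the local hostname to `127.0.1.1`. The helper `ensure_self_resolves` is hypothetical and takes the hosts file path as an argument, so it can be tried on a copy first.

```shell
#!/bin/bash
# ensure_self_resolves HOSTS_FILE HOSTNAME
# Append "127.0.1.1<TAB>HOSTNAME" unless HOSTNAME already appears as a
# whole word on a non-comment line of HOSTS_FILE.
ensure_self_resolves() {
    local file="$1" name="$2"
    if ! grep -v '^[[:space:]]*#' "$file" | grep -qw -- "$name"; then
        printf '127.0.1.1\t%s\n' "$name" >> "$file"
    fi
}

# Usage on each OSD node (as root):
# hostnamectl set-hostname ceph-node-01    # optional: align the hostname first
# ensure_self_resolves /etc/hosts "$(hostname)"
```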

Disk list of ceph-node-03


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node-03

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk list ceph-node-03

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : list

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7febdfcf62d0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ['ceph-node-03']

[ceph_deploy.cli][INFO ] func : <function disk at 0x7febdfccc250>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

ceph@ceph-node-03's password:

[ceph-node-03][DEBUG ] connection detected need for sudo

ceph@ceph-node-03's password:

sudo: unable to resolve host ceph-osd-03

[ceph-node-03][DEBUG ] connected to host: ceph-node-03

[ceph-node-03][DEBUG ] detect platform information from remote host

[ceph-node-03][DEBUG ] detect machine type

[ceph-node-03][DEBUG ] find the location of an executable

[ceph-node-03][INFO ] Running command: sudo fdisk -l

[ceph-node-03][INFO ] Disk /dev/vda: 20 GiB, 21474836480 bytes, 41943040 sectors

[ceph-node-03][INFO ] Disk /dev/vdb: 20 GiB, 21474836480 bytes, 41943040 sectors

[ceph-node-03][INFO ] Disk /dev/vdc: 20 GiB, 21474836480 bytes, 41943040 sectors

[ceph-node-03][INFO ] Disk /dev/vdd: 20 GiB, 21474836480 bytes, 41943040 sectors
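All three nodes report the same layout: the system disk `/dev/vda` plus three 20 GiB data disks, `/dev/vdb`, `/dev/vdc` and `/dev/vdd`. With more nodes or disks, the data-disk names can be pulled out of the `disk list` output instead of being typed by hand. This sketch assumes the system disk is `/dev/vda`, as on these VMs, and that it runs before any OSD exists; afterwards `fdisk -l` also lists `/dev/mapper/...` devices. The helper `data_disks` is hypothetical.

```shell
#!/bin/bash
# data_disks [SYSTEM_DISK]
# Read `ceph-deploy disk list` (or `fdisk -l`) output on stdin and print
# every "Disk /dev/..." name except SYSTEM_DISK (default /dev/vda), one per line.
data_disks() {
    local sys="${1:-/dev/vda}"
    sed -n 's/.*Disk \(\/dev\/[^:]*\):.*/\1/p' | grep -vxF -- "$sys"
}

# Usage on ceph-deploy (xargs joins the names into one line for `disk zap`):
# ceph-deploy disk list ceph-node-01 2>&1 | data_disks | xargs
```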

Wipe (zap) the data disks on the ceph-node hosts

Zap the data disks on ceph-node-01


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy disk zap ceph-node-01 /dev/vdb /dev/vdc /dev/vdd

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk zap ceph-node-01 /dev/vdb /dev/vdc /dev/vdd

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : zap

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f84ae4f22d0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ceph-node-01

[ceph_deploy.cli][INFO ] func : <function disk at 0x7f84ae4c8250>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] disk : ['/dev/vdb', '/dev/vdc', '/dev/vdd']

[ceph_deploy.osd][DEBUG ] zapping /dev/vdb on ceph-node-01

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

[ceph-node-01][DEBUG ] connection detected need for sudo

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

sudo: unable to resolve host ceph-node-01

[ceph-node-01][DEBUG ] connected to host: ceph-node-01

[ceph-node-01][DEBUG ] detect platform information from remote host

[ceph-node-01][DEBUG ] detect machine type

[ceph-node-01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph-node-01][DEBUG ] zeroing last few blocks of device

[ceph-node-01][DEBUG ] find the location of an executable

[ceph-node-01][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/vdb

[ceph-node-01][WARNIN] --> Zapping: /dev/vdb

[ceph-node-01][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-node-01][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/vdb bs=1M count=10 conv=fsync

[ceph-node-01][WARNIN] stderr: 10+0 records in

[ceph-node-01][WARNIN] 10+0 records out

[ceph-node-01][WARNIN] stderr: 10485760 bytes (10 MB, 10 MiB) copied, 0.203791 s, 51.5 MB/s

[ceph-node-01][WARNIN] --> Zapping successful for: <Raw Device: /dev/vdb>

[ceph_deploy.osd][DEBUG ] zapping /dev/vdc on ceph-node-01

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

[ceph-node-01][DEBUG ] connection detected need for sudo

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

sudo: unable to resolve host ceph-node-01

[ceph-node-01][DEBUG ] connected to host: ceph-node-01

[ceph-node-01][DEBUG ] detect platform information from remote host

[ceph-node-01][DEBUG ] detect machine type

[ceph-node-01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph-node-01][DEBUG ] zeroing last few blocks of device

[ceph-node-01][DEBUG ] find the location of an executable

[ceph-node-01][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/vdc

[ceph-node-01][WARNIN] --> Zapping: /dev/vdc

[ceph-node-01][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-node-01][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/vdc bs=1M count=10 conv=fsync

[ceph-node-01][WARNIN] stderr: 10+0 records in

[ceph-node-01][WARNIN] 10+0 records out

[ceph-node-01][WARNIN] stderr: 10485760 bytes (10 MB, 10 MiB) copied, 0.0703279 s, 149 MB/s

[ceph-node-01][WARNIN] --> Zapping successful for: <Raw Device: /dev/vdc>

[ceph_deploy.osd][DEBUG ] zapping /dev/vdd on ceph-node-01

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

[ceph-node-01][DEBUG ] connection detected need for sudo

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

sudo: unable to resolve host ceph-node-01

[ceph-node-01][DEBUG ] connected to host: ceph-node-01

[ceph-node-01][DEBUG ] detect platform information from remote host

[ceph-node-01][DEBUG ] detect machine type

[ceph-node-01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph-node-01][DEBUG ] zeroing last few blocks of device

[ceph-node-01][DEBUG ] find the location of an executable

[ceph-node-01][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/vdd

[ceph-node-01][WARNIN] --> Zapping: /dev/vdd

[ceph-node-01][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-node-01][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/vdd bs=1M count=10 conv=fsync

[ceph-node-01][WARNIN] stderr: 10+0 records in

[ceph-node-01][WARNIN] 10+0 records out

[ceph-node-01][WARNIN] stderr: 10485760 bytes (10 MB, 10 MiB) copied, 0.0935676 s, 112 MB/s

[ceph-node-01][WARNIN] --> Zapping successful for: <Raw Device: /dev/vdd>

Zap the data disks on ceph-node-02


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy disk zap ceph-node-02 /dev/vdb /dev/vdc /dev/vdd

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk zap ceph-node-02 /dev/vdb /dev/vdc /dev/vdd

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : zap

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fb883d762d0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ceph-node-02

[ceph_deploy.cli][INFO ] func : <function disk at 0x7fb883d4c250>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] disk : ['/dev/vdb', '/dev/vdc', '/dev/vdd']

[ceph_deploy.osd][DEBUG ] zapping /dev/vdb on ceph-node-02

ceph@ceph-node-02's password:

[ceph-node-02][DEBUG ] connection detected need for sudo

ceph@ceph-node-02's password:

sudo: unable to resolve host ceph-node-02

[ceph-node-02][DEBUG ] connected to host: ceph-node-02

[ceph-node-02][DEBUG ] detect platform information from remote host

[ceph-node-02][DEBUG ] detect machine type

[ceph-node-02][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph-node-02][DEBUG ] zeroing last few blocks of device

[ceph-node-02][DEBUG ] find the location of an executable

[ceph-node-02][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/vdb

[ceph-node-02][WARNIN] --> Zapping: /dev/vdb

[ceph-node-02][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-node-02][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/vdb bs=1M count=10 conv=fsync

[ceph-node-02][WARNIN] stderr: 10+0 records in

[ceph-node-02][WARNIN] 10+0 records out

[ceph-node-02][WARNIN] 10485760 bytes (10 MB, 10 MiB) copied, 0.0419579 s, 250 MB/s

[ceph-node-02][WARNIN] --> Zapping successful for: <Raw Device: /dev/vdb>

[ceph_deploy.osd][DEBUG ] zapping /dev/vdc on ceph-node-02

ceph@ceph-node-02's password:

[ceph-node-02][DEBUG ] connection detected need for sudo

ceph@ceph-node-02's password:

sudo: unable to resolve host ceph-node-02

[ceph-node-02][DEBUG ] connected to host: ceph-node-02

[ceph-node-02][DEBUG ] detect platform information from remote host

[ceph-node-02][DEBUG ] detect machine type

[ceph-node-02][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph-node-02][DEBUG ] zeroing last few blocks of device

[ceph-node-02][DEBUG ] find the location of an executable

[ceph-node-02][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/vdc

[ceph-node-02][WARNIN] --> Zapping: /dev/vdc

[ceph-node-02][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-node-02][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/vdc bs=1M count=10 conv=fsync

[ceph-node-02][WARNIN] --> Zapping successful for: <Raw Device: /dev/vdc>

[ceph_deploy.osd][DEBUG ] zapping /dev/vdd on ceph-node-02

ceph@ceph-node-02's password:

[ceph-node-02][DEBUG ] connection detected need for sudo

ceph@ceph-node-02's password:

sudo: unable to resolve host ceph-node-02

[ceph-node-02][DEBUG ] connected to host: ceph-node-02

[ceph-node-02][DEBUG ] detect platform information from remote host

[ceph-node-02][DEBUG ] detect machine type

[ceph-node-02][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph-node-02][DEBUG ] zeroing last few blocks of device

[ceph-node-02][DEBUG ] find the location of an executable

[ceph-node-02][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/vdd

[ceph-node-02][WARNIN] --> Zapping: /dev/vdd

[ceph-node-02][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-node-02][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/vdd bs=1M count=10 conv=fsync

[ceph-node-02][WARNIN] stderr: 10+0 records in

[ceph-node-02][WARNIN] 10+0 records out

[ceph-node-02][WARNIN] 10485760 bytes (10 MB, 10 MiB) copied, 0.547056 s, 19.2 MB/s

[ceph-node-02][WARNIN] --> Zapping successful for: <Raw Device: /dev/vdd>

Zap the data disks on ceph-node-03


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy disk zap ceph-node-03 /dev/vdb /dev/vdc /dev/vdd

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk zap ceph-node-03 /dev/vdb /dev/vdc /dev/vdd

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : zap

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fe6d2ba62d0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ceph-node-03

[ceph_deploy.cli][INFO ] func : <function disk at 0x7fe6d2b7c250>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] disk : ['/dev/vdb', '/dev/vdc', '/dev/vdd']

[ceph_deploy.osd][DEBUG ] zapping /dev/vdb on ceph-node-03

ceph@ceph-node-03's password:

[ceph-node-03][DEBUG ] connection detected need for sudo

ceph@ceph-node-03's password:

sudo: unable to resolve host ceph-node-03

[ceph-node-03][DEBUG ] connected to host: ceph-node-03

[ceph-node-03][DEBUG ] detect platform information from remote host

[ceph-node-03][DEBUG ] detect machine type

[ceph-node-03][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph-node-03][DEBUG ] zeroing last few blocks of device

[ceph-node-03][DEBUG ] find the location of an executable

[ceph-node-03][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/vdb

[ceph-node-03][WARNIN] --> Zapping: /dev/vdb

[ceph-node-03][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-node-03][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/vdb bs=1M count=10 conv=fsync

[ceph-node-03][WARNIN] --> Zapping successful for: <Raw Device: /dev/vdb>

[ceph_deploy.osd][DEBUG ] zapping /dev/vdc on ceph-node-03

ceph@ceph-node-03's password:

[ceph-node-03][DEBUG ] connection detected need for sudo

ceph@ceph-node-03's password:

sudo: unable to resolve host ceph-node-03

[ceph-node-03][DEBUG ] connected to host: ceph-node-03

[ceph-node-03][DEBUG ] detect platform information from remote host

[ceph-node-03][DEBUG ] detect machine type

[ceph-node-03][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph-node-03][DEBUG ] zeroing last few blocks of device

[ceph-node-03][DEBUG ] find the location of an executable

[ceph-node-03][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/vdc

[ceph-node-03][WARNIN] --> Zapping: /dev/vdc

[ceph-node-03][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-node-03][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/vdc bs=1M count=10 conv=fsync

[ceph-node-03][WARNIN] --> Zapping successful for: <Raw Device: /dev/vdc>

[ceph_deploy.osd][DEBUG ] zapping /dev/vdd on ceph-node-03

ceph@ceph-node-03's password:

[ceph-node-03][DEBUG ] connection detected need for sudo

ceph@ceph-node-03's password:

sudo: unable to resolve host ceph-node-03

[ceph-node-03][DEBUG ] connected to host: ceph-node-03

[ceph-node-03][DEBUG ] detect platform information from remote host

[ceph-node-03][DEBUG ] detect machine type

[ceph-node-03][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph-node-03][DEBUG ] zeroing last few blocks of device

[ceph-node-03][DEBUG ] find the location of an executable

[ceph-node-03][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/vdd

[ceph-node-03][WARNIN] --> Zapping: /dev/vdd

[ceph-node-03][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-node-03][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/vdd bs=1M count=10 conv=fsync

[ceph-node-03][WARNIN] --> Zapping successful for: <Raw Device: /dev/vdd>
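As the log shows, `ceph-volume lvm zap` on a raw device writes zeros over the first 10 MiB (`dd if=/dev/zero ... bs=1M count=10`), and the warning notes that this removes the partition table. To double-check a disk on the node itself, compare the start of the device against `/dev/zero`. In this sketch the helper `is_zeroed` is hypothetical, and its 10 MiB default simply mirrors the `dd` call in the log. It relies on GNU `cmp -n`, which is what Ubuntu provides.

```shell
#!/bin/bash
# is_zeroed DEVICE [BYTES]
# Succeed if the first BYTES (default 10 MiB, matching the dd in the zap log)
# of DEVICE are all zero bytes.
is_zeroed() {
    local dev="$1" bytes="${2:-10485760}"
    cmp -s -n "$bytes" "$dev" /dev/zero
}

# Usage on an OSD node (as root, before creating the OSDs):
# for d in /dev/vdb /dev/vdc /dev/vdd; do
#     is_zeroed "$d" && echo "$d: zeroed" || echo "$d: NOT zeroed"
# done
```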

3.9.9 Add OSDs

How to add OSDs


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy osd -h

usage: ceph-deploy osd [-h] {list,create} ...

Create OSDs from a data disk on a remote host:

ceph-deploy osd create {node} --data /path/to/device

For bluestore, optional devices can be used::

ceph-deploy osd create {node} --data /path/to/data --block-db /path/to/db-device

ceph-deploy osd create {node} --data /path/to/data --block-wal /path/to/wal-device

ceph-deploy osd create {node} --data /path/to/data --block-db /path/to/db-device --block-wal /path/to/wal-device

For filestore, the journal must be specified, as well as the objectstore::

ceph-deploy osd create {node} --filestore --data /path/to/data --journal /path/to/journal

For data devices, it can be an existing logical volume in the format of:

vg/lv, or a device. For other OSD components like wal, db, and journal, it

can be logical volume (in vg/lv format) or it must be a GPT partition.

positional arguments:

{list,create}

list List OSD info from remote host(s)

create Create new Ceph OSD daemon by preparing and activating a

device

optional arguments:

-h, --help show this help message and exit
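The walkthrough below adds each disk with its own `ceph-deploy osd create` call. With three nodes and three disks each, that means nine near-identical commands, which a loop can generate. This sketch uses only the plain `--data` form from the help text above. The `NODES` and `DISKS` lists match this cluster, and the `DRY_RUN` switch and function name are illustrative.

```shell
#!/bin/bash
# Node and disk lists for this cluster (adjust as needed).
NODES="ceph-node-01 ceph-node-02 ceph-node-03"
DISKS="/dev/vdb /dev/vdc /dev/vdd"

# create_all_osds: run (or, with DRY_RUN=1, just print) one
# `ceph-deploy osd create NODE --data DISK` per node/disk pair,
# stopping at the first failure.
create_all_osds() {
    local node disk
    for node in $NODES; do
        for disk in $DISKS; do
            if [ "${DRY_RUN:-0}" = "1" ]; then
                echo "ceph-deploy osd create $node --data $disk"
            else
                ceph-deploy osd create "$node" --data "$disk" || return 1
            fi
        done
    done
}

# Usage (as the ceph user in ~/ceph-cluster on ceph-deploy):
# DRY_RUN=1 create_all_osds   # review the commands first
# create_all_osds
```

Running the OSDs one at a time keeps each OSD ID easy to map back to its node and disk in the log.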

Add the OSDs on ceph-node-01

Add /dev/vdb on ceph-node-01


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy osd create ceph-node-01 --data /dev/vdb

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create ceph-node-01 --data /dev/vdb

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f088b6145f0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] journal : None

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] host : ceph-node-01

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] func : <function osd at 0x7f088b6651d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] data : /dev/vdb

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/vdb

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

[ceph-node-01][DEBUG ] connection detected need for sudo

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

sudo: unable to resolve host ceph-node-01

[ceph-node-01][DEBUG ] connected to host: ceph-node-01

[ceph-node-01][DEBUG ] detect platform information from remote host

[ceph-node-01][DEBUG ] detect machine type

[ceph-node-01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph_deploy.osd][DEBUG ] Deploying osd to ceph-node-01

[ceph-node-01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-node-01][WARNIN] osd keyring does not exist yet, creating one

[ceph-node-01][DEBUG ] create a keyring file

[ceph-node-01][DEBUG ] find the location of an executable

[ceph-node-01][INFO ] Running command: sudo /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/vdb

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 43200fd2-1472-4b6c-b4ec-c384580fe631

[ceph-node-01][WARNIN] Running command: /sbin/vgcreate --force --yes ceph-d6fc82f0-6bad-4b75-8cc5-73f86b33c9de /dev/vdb

[ceph-node-01][WARNIN] stdout: Physical volume "/dev/vdb" successfully created.

[ceph-node-01][WARNIN] stdout: Volume group "ceph-d6fc82f0-6bad-4b75-8cc5-73f86b33c9de" successfully created

[ceph-node-01][WARNIN] Running command: /sbin/lvcreate --yes -l 5119 -n osd-block-43200fd2-1472-4b6c-b4ec-c384580fe631 ceph-d6fc82f0-6bad-4b75-8cc5-73f86b33c9de

[ceph-node-01][WARNIN] stdout: Logical volume "osd-block-43200fd2-1472-4b6c-b4ec-c384580fe631" created.

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key

[ceph-node-01][WARNIN] Running command: /bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0

[ceph-node-01][WARNIN] --> Executable selinuxenabled not in PATH: /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin

[ceph-node-01][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/ceph-d6fc82f0-6bad-4b75-8cc5-73f86b33c9de/osd-block-43200fd2-1472-4b6c-b4ec-c384580fe631

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-0

[ceph-node-01][WARNIN] Running command: /bin/ln -s /dev/ceph-d6fc82f0-6bad-4b75-8cc5-73f86b33c9de/osd-block-43200fd2-1472-4b6c-b4ec-c384580fe631 /var/lib/ceph/osd/ceph-0/block

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap

[ceph-node-01][WARNIN] stderr: 2021-09-06T14:09:11.118+0800 7f72337a7700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory

[ceph-node-01][WARNIN] 2021-09-06T14:09:11.118+0800 7f72337a7700 -1 AuthRegistry(0x7f722c05b408) no keyring found at /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx

[ceph-node-01][WARNIN] stderr: got monmap epoch 1

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQCGsDVhhhXOAxAAD7GOy2/7BctI1wRwHK17OA==

[ceph-node-01][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-0/keyring

[ceph-node-01][WARNIN] added entity osd.0 auth(key=AQCGsDVhhhXOAxAAD7GOy2/7BctI1wRwHK17OA==)

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid 43200fd2-1472-4b6c-b4ec-c384580fe631 --setuser ceph --setgroup ceph

[ceph-node-01][WARNIN] stderr: 2021-09-06T14:09:11.590+0800 7f8edfe14f00 -1 bluestore(/var/lib/ceph/osd/ceph-0/) _read_fsid unparsable uuid

[ceph-node-01][WARNIN] --> ceph-volume lvm prepare successful for: /dev/vdb

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-d6fc82f0-6bad-4b75-8cc5-73f86b33c9de/osd-block-43200fd2-1472-4b6c-b4ec-c384580fe631 --path /var/lib/ceph/osd/ceph-0 --no-mon-config

[ceph-node-01][WARNIN] Running command: /bin/ln -snf /dev/ceph-d6fc82f0-6bad-4b75-8cc5-73f86b33c9de/osd-block-43200fd2-1472-4b6c-b4ec-c384580fe631 /var/lib/ceph/osd/ceph-0/block

[ceph-node-01][WARNIN] Running command: /bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-0

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0

[ceph-node-01][WARNIN] Running command: /bin/systemctl enable ceph-volume@lvm-0-43200fd2-1472-4b6c-b4ec-c384580fe631

[ceph-node-01][WARNIN] stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-43200fd2-1472-4b6c-b4ec-c384580fe631.service → /lib/systemd/system/ceph-volume@.service.

[ceph-node-01][WARNIN] Running command: /bin/systemctl enable --runtime ceph-osd@0

[ceph-node-01][WARNIN] stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@0.service → /lib/systemd/system/ceph-osd@.service.

[ceph-node-01][WARNIN] Running command: /bin/systemctl start ceph-osd@0

[ceph-node-01][WARNIN] --> ceph-volume lvm activate successful for osd ID: 0

[ceph-node-01][WARNIN] --> ceph-volume lvm create successful for: /dev/vdb

[ceph-node-01][INFO ] checking OSD status...

[ceph-node-01][DEBUG ] find the location of an executable

[ceph-node-01][INFO ] Running command: sudo /usr/bin/ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host ceph-node-01 is now ready for osd use.

Unhandled exception in thread started by
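The trailing `Unhandled exception in thread started by` line is printed only after ceph-volume reported `lvm create successful` and ceph-deploy reported the host ready. Even so, it is worth confirming the OSD state independently with the same `ceph osd stat --format=json` call that ceph-deploy ran. This sketch assumes the JSON has top-level integer fields `num_osds`, `num_up_osds` and `num_in_osds`, so check that against your own output. The helpers `json_int` and `osds_all_up_in` are hypothetical and use `sed` so that `jq` is not required.

```shell
#!/bin/bash
# json_int FIELD: print the integer value of "FIELD" from JSON on stdin.
json_int() {
    sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p" | head -n 1
}

# osds_all_up_in [EXPECTED]
# Read `ceph osd stat --format=json` on stdin; succeed if every OSD is up
# and in and, when EXPECTED is given, there are exactly EXPECTED OSDs.
osds_all_up_in() {
    local json total up_n in_n
    json=$(cat)
    total=$(echo "$json" | json_int num_osds)
    up_n=$(echo "$json" | json_int num_up_osds)
    in_n=$(echo "$json" | json_int num_in_osds)
    [ -n "$total" ] && [ "$total" = "$up_n" ] && [ "$total" = "$in_n" ] || return 1
    [ -z "$1" ] || [ "$total" = "$1" ]
}

# Usage on a host that has the admin keyring, once all nine OSDs are added:
# ceph osd stat --format=json | osds_all_up_in 9 && echo "all OSDs up and in"
```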

Add /dev/vdc on ceph-node-01


ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy osd create ceph-node-01 --data /dev/vdc

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create ceph-node-01 --data /dev/vdc

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f2beaa6d5f0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] journal : None

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] host : ceph-node-01

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] func : <function osd at 0x7f2beaabe1d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] data : /dev/vdc

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/vdc

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

[ceph-node-01][DEBUG ] connection detected need for sudo

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

sudo: unable to resolve host ceph-node-01

[ceph-node-01][DEBUG ] connected to host: ceph-node-01

[ceph-node-01][DEBUG ] detect platform information from remote host

[ceph-node-01][DEBUG ] detect machine type

[ceph-node-01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph_deploy.osd][DEBUG ] Deploying osd to ceph-node-01

[ceph-node-01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-node-01][DEBUG ] find the location of an executable

[ceph-node-01][INFO ] Running command: sudo /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/vdc

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 000e303a-cafd-4a2b-96d8-17f556921569

[ceph-node-01][WARNIN] Running command: /sbin/vgcreate --force --yes ceph-67d74ac1-ac6a-4712-80b8-742da52d1c1e /dev/vdc

[ceph-node-01][WARNIN] stdout: Physical volume "/dev/vdc" successfully created.

[ceph-node-01][WARNIN] stdout: Volume group "ceph-67d74ac1-ac6a-4712-80b8-742da52d1c1e" successfully created

[ceph-node-01][WARNIN] Running command: /sbin/lvcreate --yes -l 5119 -n osd-block-000e303a-cafd-4a2b-96d8-17f556921569 ceph-67d74ac1-ac6a-4712-80b8-742da52d1c1e

[ceph-node-01][WARNIN] stdout: Logical volume "osd-block-000e303a-cafd-4a2b-96d8-17f556921569" created.

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key

[ceph-node-01][WARNIN] Running command: /bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-1

[ceph-node-01][WARNIN] --> Executable selinuxenabled not in PATH: /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin

[ceph-node-01][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/ceph-67d74ac1-ac6a-4712-80b8-742da52d1c1e/osd-block-000e303a-cafd-4a2b-96d8-17f556921569

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-1

[ceph-node-01][WARNIN] Running command: /bin/ln -s /dev/ceph-67d74ac1-ac6a-4712-80b8-742da52d1c1e/osd-block-000e303a-cafd-4a2b-96d8-17f556921569 /var/lib/ceph/osd/ceph-1/block

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-1/activate.monmap

[ceph-node-01][WARNIN] stderr: 2021-09-06T14:11:34.199+0800 7f05fca57700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory

[ceph-node-01][WARNIN] stderr: 2021-09-06T14:11:34.199+0800 7f05fca57700 -1 AuthRegistry(0x7f05f805b408) no keyring found at /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx

[ceph-node-01][WARNIN] stderr: got monmap epoch 1

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-1/keyring --create-keyring --name osd.1 --add-key AQAVsTVhp80wCxAA5nJA080MT3BjWtEzgjjwTQ==

[ceph-node-01][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-1/keyring

[ceph-node-01][WARNIN] added entity osd.1 auth(key=AQAVsTVhp80wCxAA5nJA080MT3BjWtEzgjjwTQ==)

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1/keyring

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1/

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 1 --monmap /var/lib/ceph/osd/ceph-1/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-1/ --osd-uuid 000e303a-cafd-4a2b-96d8-17f556921569 --setuser ceph --setgroup ceph

[ceph-node-01][WARNIN] stderr: 2021-09-06T14:11:34.675+0800 7f3cda5c7f00 -1 bluestore(/var/lib/ceph/osd/ceph-1/) _read_fsid unparsable uuid

[ceph-node-01][WARNIN] --> ceph-volume lvm prepare successful for: /dev/vdc

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1

[ceph-node-01][WARNIN] Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-67d74ac1-ac6a-4712-80b8-742da52d1c1e/osd-block-000e303a-cafd-4a2b-96d8-17f556921569 --path /var/lib/ceph/osd/ceph-1 --no-mon-config

[ceph-node-01][WARNIN] Running command: /bin/ln -snf /dev/ceph-67d74ac1-ac6a-4712-80b8-742da52d1c1e/osd-block-000e303a-cafd-4a2b-96d8-17f556921569 /var/lib/ceph/osd/ceph-1/block

[ceph-node-01][WARNIN] Running command: /bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-1/block

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-1

[ceph-node-01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1

[ceph-node-01][WARNIN] Running command: /bin/systemctl enable ceph-volume@lvm-1-000e303a-cafd-4a2b-96d8-17f556921569

[ceph-node-01][WARNIN] stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-1-000e303a-cafd-4a2b-96d8-17f556921569.service → /lib/systemd/system/ceph-volume@.service.

[ceph-node-01][WARNIN] Running command: /bin/systemctl enable --runtime ceph-osd@1

[ceph-node-01][WARNIN] stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@1.service → /lib/systemd/system/ceph-osd@.service.

[ceph-node-01][WARNIN] Running command: /bin/systemctl start ceph-osd@1

[ceph-node-01][WARNIN] --> ceph-volume lvm activate successful for osd ID: 1

[ceph-node-01][WARNIN] --> ceph-volume lvm create successful for: /dev/vdc

[ceph-node-01][INFO ] checking OSD status...

[ceph-node-01][DEBUG ] find the location of an executable

[ceph-node-01][INFO ] Running command: sudo /usr/bin/ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host ceph-node-01 is now ready for osd use.

Unhandled exception in thread started by
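The /dev/vdc run above hit three avoidable problems:

- ssh warned that the stored ECDSA key for 172.16.10.126 (line 5 of /var/lib/ceph/.ssh/known_hosts) no longer matches.
- ceph-deploy asked for the `ceph` user's password on every connection.
- sudo on the node could not resolve its own hostname.

The hostname list in the planning section maps 172.16.10.126 to `ceph-osd-01`, not to the node's actual hostname `ceph-node-01`. This suggests the node's /etc/hosts has no `ceph-node-01` entry. The sketch below shows the fixes. It assumes the `ceph` user's home is /var/lib/ceph, as the log shows, and that this user already has an SSH key pair (create one with `ssh-keygen` if not). The function names are hypothetical. `ssh-keygen -R` and `ssh-copy-id` are the standard OpenSSH tools.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the three problems visible in the log above.
# Assumptions: deploy user "ceph" with home /var/lib/ceph, and
# ceph-node-01 = 172.16.10.126 as in this cluster's plan.

# 1) Drop the stale known_hosts entry for the node's IP so ssh stops
#    warning that the ECDSA key "differs from the key for the IP address".
refresh_known_host() {
  local known_hosts="$1" ip="$2"
  ssh-keygen -f "$known_hosts" -R "$ip"
}

# 2) Install the deploy user's public key on the node so ceph-deploy
#    no longer prompts for "ceph@ceph-node-01's password".
push_ssh_key() {
  ssh-copy-id "$1"
}

# 3) Append "IP NAME" to a hosts file unless NAME is already listed
#    (comment lines are ignored). Run it against the node's /etc/hosts
#    to silence "sudo: unable to resolve host ceph-node-01".
ensure_hosts_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
    return 0
  fi
  # Add a newline first if the file does not end with one.
  if [ -s "$hosts_file" ] && [ -n "$(tail -c 1 "$hosts_file")" ]; then
    printf '\n' >> "$hosts_file"
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}
```

To apply the fixes:

- As `ceph` on ceph-deploy, run `refresh_known_host /var/lib/ceph/.ssh/known_hosts 172.16.10.126`, then `push_ssh_key ceph@ceph-node-01`.
- As root on ceph-node-01, run `ensure_hosts_entry /etc/hosts 172.16.10.126 ceph-node-01`.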

Add /dev/vdd on node ceph-node-01
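This step repeats the `ceph-deploy osd create <host> --data <device>` call already run for /dev/vdb and /dev/vdc. The sketch below loops over a node's data disks instead. It assumes it runs as the `ceph` user in `~/ceph-cluster` on ceph-deploy, and that every listed disk is blank, since the command does not zap it. `create_osds` and the `DRY_RUN` switch are hypothetical names. With `DRY_RUN=1` the function only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create one BlueStore OSD per data disk on a node,
# mirroring the manual "ceph-deploy osd create <host> --data <dev>" calls.
# Assumes it runs as the ceph user from ~/ceph-cluster on ceph-deploy and
# that every listed disk is blank (ceph-deploy is not asked to zap it).
# Set DRY_RUN=1 to print the commands instead of executing them.

create_osds() {
  if [ "$#" -lt 2 ]; then
    echo "usage: create_osds <host> <device>..." >&2
    return 2
  fi
  local node="$1" dev
  shift
  for dev in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "ceph-deploy osd create $node --data $dev"
    else
      # Stop at the first failure so a problem disk is noticed immediately.
      ceph-deploy osd create "$node" --data "$dev" || return 1
    fi
  done
}
```

For example, `DRY_RUN=1 create_osds ceph-node-02 /dev/vdb /dev/vdc /dev/vdd` previews the commands for another OSD node. This assumes that node has the same disk layout, so check it with `lsblk` first.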

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy osd create ceph-node-01 --data /dev/vdd

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create ceph-node-01 --data /dev/vdd

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fcaa652d5f0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] journal : None

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] host : ceph-node-01

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] func : <function osd at 0x7fcaa657e1d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] data : /dev/vdd

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/vdd

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

[ceph-node-01][DEBUG ] connection detected need for sudo

Warning: the ECDSA host key for 'ceph-node-01' differs from the key for the IP address '172.16.10.126'

Offending key for IP in /var/lib/ceph/.ssh/known_hosts:5

Matching host key in /var/lib/ceph/.ssh/known_hosts:6

Are you sure you want to continue connecting (yes/no)? yes

ceph@ceph-node-01's password:

sudo: unable to resolve host ceph-node-01