3. Delivering Dubbo Microservices to a Kubernetes Cluster

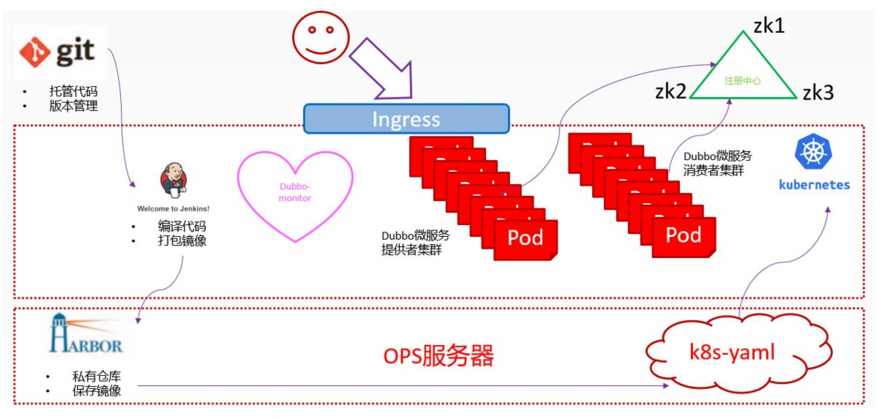

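1. Infrastructure

1.1. Architecture diagram

- ZooKeeper is the registry center for the Dubbo microservice cluster.

- Its high-availability mechanism works the same way as that of the k8s etcd cluster.

- It is written in Java and needs a JDK environment.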
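The comparison with etcd comes down to majority quorum: both stay available only while more than half of the members are alive. A minimal sketch of that arithmetic follows; the function names `quorum_size` and `tolerated_failures` are illustrative helpers, not part of ZooKeeper or etcd.

```shell
#!/bin/sh
# Majority-quorum arithmetic for an ensemble of N voting members.
# Both function names are hypothetical helpers for illustration.

# Smallest number of members that forms a majority.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# How many members may fail while a majority still remains.
tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 3 4 5; do
    echo "members=$n quorum=$(quorum_size "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

For the three-node ensemble planned below, one node can be lost. A fourth node would not raise that number, which is why odd ensemble sizes are the usual choice.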

1.2. Node plan

| Hostname | Role | IP |
|---|---|---|
| hdss7-11.host.com | k8s proxy node 1, zk1 | 10.4.7.11 |
| hdss7-12.host.com | k8s proxy node 2, zk2 | 10.4.7.12 |
| hdss7-21.host.com | k8s compute node 1, zk3 | 10.4.7.21 |
| hdss7-22.host.com | k8s compute node 2, jenkins | 10.4.7.22 |
| hdss7-200.host.com | k8s ops node (Docker registry) | 10.4.7.200 |

2. Deploy ZooKeeper

2.1. Install JDK 1.8 (on all three zk nodes)

// Extract the archive and create a symlink

[root@hdss7-11 src]# mkdir /usr/java

[root@hdss7-11 src]# tar xf jdk-8u221-linux-x64.tar.gz -C /usr/java/

[root@hdss7-11 src]# ln -s /usr/java/jdk1.8.0_221/ /usr/java/jdk

[root@hdss7-11 src]# cd /usr/java/

[root@hdss7-11 java]# ll

total 0

lrwxrwxrwx 1 root root 23 Nov 30 17:38 jdk -> /usr/java/jdk1.8.0_221/

drwxr-xr-x 7 10 143 245 Jul 4 19:37 jdk1.8.0_221

// Set the environment variables

[root@hdss7-11 java]# vi /etc/profile

export JAVA_HOME=/usr/java/jdk

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

// Source the profile and verify

[root@hdss7-11 java]# source /etc/profile

[root@hdss7-11 java]# java -version

java version "1.8.0_221"

Java(TM) SE Runtime Environment (build 1.8.0_221-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.221-b11, mixed mode)

2.2. Install ZooKeeper (on all three nodes)

2.2.1. Extract the archive and create a symlink

[root@hdss7-11 src]# tar xf zookeeper-3.4.14.tar.gz -C /opt/

[root@hdss7-11 src]# ln -s /opt/zookeeper-3.4.14/ /opt/zookeeper

2.2.2. Create the data and log directories

[root@hdss7-11 opt]# mkdir -pv /data/zookeeper/data /data/zookeeper/logs

mkdir: created directory ‘/data’

mkdir: created directory ‘/data/zookeeper’

mkdir: created directory ‘/data/zookeeper/data’

mkdir: created directory ‘/data/zookeeper/logs’

2.2.3. Configure

// Same on every node

[root@hdss7-11 opt]# vi /opt/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper/data

dataLogDir=/data/zookeeper/logs

clientPort=2181

server.1=zk1.od.com:2888:3888

server.2=zk2.od.com:2888:3888

server.3=zk3.od.com:2888:3888

myid

// Different on each node

[root@hdss7-11 opt]# vi /data/zookeeper/data/myid

1

[root@hdss7-12 opt]# vi /data/zookeeper/data/myid

2

[root@hdss7-21 opt]# vi /data/zookeeper/data/myid

3
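Each node's myid must equal the N of its own `server.N` line in zoo.cfg. As a rough sketch, the value can be looked up from the config instead of typed by hand; `myid_for` is a hypothetical helper, and the demo writes the server lines from this guide to a temporary file rather than touching the real config.

```shell
#!/bin/sh
# Look up the myid for a host from the server.N lines of a zoo.cfg-style file.
# myid_for is a hypothetical helper. Dots in the host name are escaped first
# so they match literally in the sed pattern.
myid_for() {
    host_re=$(printf '%s' "$1" | sed 's/\./\\./g')
    sed -n "s/^server\.\([0-9][0-9]*\)=${host_re}:.*/\1/p" "$2"
}

# Demo against a temporary copy of the server lines used in this guide.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
server.1=zk1.od.com:2888:3888
server.2=zk2.od.com:2888:3888
server.3=zk3.od.com:2888:3888
EOF

# Prints the value that belongs in /data/zookeeper/data/myid on hdss7-12.
myid_for zk2.od.com "$cfg"
```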

2.2.4. Add DNS records

[root@hdss7-11 opt]# vi /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2019111006 ; serial (roll the serial number forward by 1)

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

harbor A 10.4.7.200

k8s-yaml A 10.4.7.200

traefik A 10.4.7.10

dashboard A 10.4.7.10

zk1 A 10.4.7.11

zk2 A 10.4.7.12

zk3 A 10.4.7.21

[root@hdss7-11 opt]# systemctl restart named

[root@hdss7-11 opt]# dig -t A zk1.od.com @10.4.7.11 +short

10.4.7.11
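Every zone edit in this guide rolls the SOA serial forward by one. A small sketch of that step as a text filter is shown here; `bump_serial` is a hypothetical helper and assumes the serial sits on its own line followed by `; serial`, as in the zone file above.

```shell
#!/bin/sh
# Increment the SOA serial in zone-file text read from stdin.
# Assumes the serial is the first number on a line that carries "; serial".
# bump_serial is a hypothetical helper, not a BIND tool.
bump_serial() {
    awk '/;[ \t]*serial/ { sub(/[0-9]+/, $1 + 1) } { print }'
}

printf '2019111006 ; serial\n' | bump_serial   # prints: 2019111007 ; serial
```

To use it on a real zone, write the output to a new file, review it, and only then replace the original and restart named.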

2.2.5. Start the nodes one by one and verify

Start

[root@hdss7-11 opt]# /opt/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@hdss7-12 opt]# /opt/zookeeper/bin/zkServer.sh start

[root@hdss7-21 opt]# /opt/zookeeper/bin/zkServer.sh start

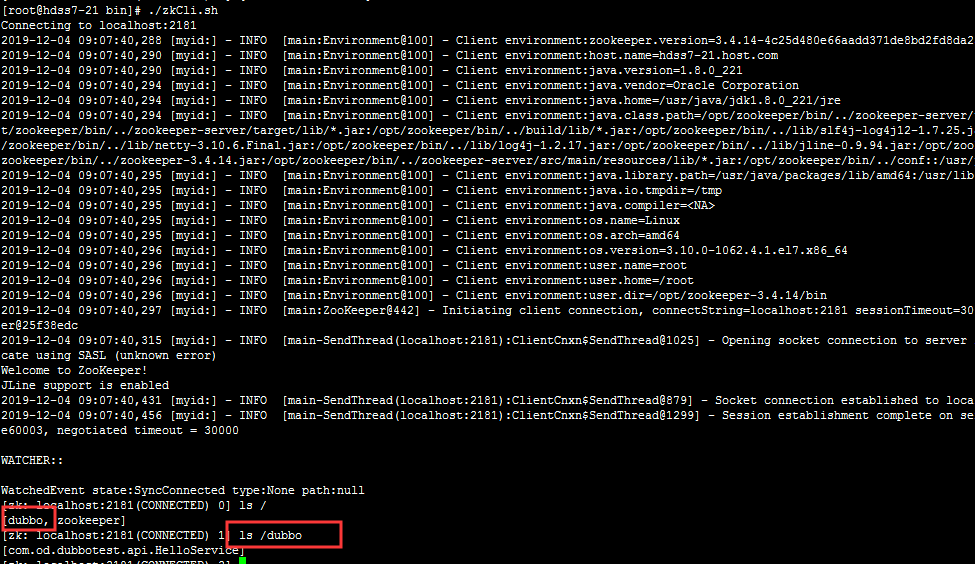

Verify

[root@hdss7-11 opt]# netstat -ntlup|grep 2181

tcp6 0 0 :::2181 :::* LISTEN 69157/java

[root@hdss7-11 opt]# zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: follower

[root@hdss7-12 opt]# zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: leader
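A healthy ensemble reports exactly one leader and the rest as followers. The following sketch pulls the role out of the status output and checks that rule; both function names are hypothetical, and the demo feeds in sample text rather than calling zkServer.sh.

```shell
#!/bin/sh
# Extract the role from `zkServer.sh status` output read on stdin.
zk_mode() {
    awk -F': ' '/^Mode:/ { print $2 }'
}

# Given one role per line on stdin, succeed only if exactly one is "leader".
has_single_leader() {
    [ "$(grep -c '^leader$')" -eq 1 ]
}

# Demo with sample text shaped like the output shown above.
printf 'Using config: /opt/zookeeper/bin/../conf/zoo.cfg\nMode: follower\n' | zk_mode
printf 'follower\nleader\nfollower\n' | has_single_leader && echo "ensemble has one leader"
```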

3. Deploy Jenkins

3.1. Prepare the image

On hdss7-200:

[root@hdss7-200 ~]# docker pull jenkins/jenkins:2.190.3

[root@hdss7-200 ~]# docker images |grep jenkins

[root@hdss7-200 ~]# docker tag 22b8b9a84dbe harbor.od.com/public/jenkins:v2.190.3

[root@hdss7-200 ~]# docker push harbor.od.com/public/jenkins:v2.190.3

3.2. Prepare the files for the custom image

3.2.1. Generate an SSH key pair

[root@hdss7-200 ~]# ssh-keygen -t rsa -b 2048 -C "8614610@qq.com" -N "" -f /root/.ssh/id_rsa

- Use your own email address here.

3.2.2. Prepare the get-docker.sh file

[root@hdss7-200 ~]# curl -fsSL get.docker.com -o get-docker.sh

[root@hdss7-200 ~]# chmod +x get-docker.sh

3.2.3. Prepare the config.json file

cp /root/.docker/config.json .

cat /root/.docker/config.json

{

"auths": {

"harbor.od.com": {

"auth": "YWRtaW46SGFyYm9yMTIzNDU="

}

},

"HttpHeaders": {

"User-Agent": "Docker-Client/19.03.4 (linux)"

}

}
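The `auth` value in config.json is only the base64 encoding of `username:password`, not an encrypted secret. A minimal sketch that decodes it (the helper name `decode_auth` is illustrative):

```shell
#!/bin/sh
# Decode the "auth" value of a Docker config.json registry entry.
# The value is base64 of "username:password".
decode_auth() {
    printf '%s' "$1" | base64 -d
}

decode_auth 'YWRtaW46SGFyYm9yMTIzNDU='
echo
```

Because the credential is recoverable this easily, an image that embeds this file should be treated as sensitive and kept in a private project.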

3.2.4. Create the directory and prepare the Dockerfile

[root@hdss7-200 ~]# mkdir /data/dockerfile/jenkins -p

[root@hdss7-200 ~]# cd /data/dockerfile/jenkins/

[root@hdss7-200 jenkins]# vi Dockerfile

FROM harbor.od.com/public/jenkins:v2.190.3

USER root

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

echo 'Asia/Shanghai' >/etc/timezone

ADD id_rsa /root/.ssh/id_rsa

ADD config.json /root/.docker/config.json

ADD get-docker.sh /get-docker.sh

RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config &&\

/get-docker.sh

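- Set the container user to root.

- Set the time zone inside the container.

- Add the generated SSH private key (needed when pulling code with git; the matching public key is configured in GitLab).

- Add the config file used to log in to the self-hosted Harbor registry.

- Change the SSH client configuration to skip host key (fingerprint) verification.

- Install a Docker client. // If the build fails, append --mirror=Aliyun after get-docker.sh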

3.3. Build the custom image

// Prepare the required files and copy them to /data/dockerfile/jenkins

[root@hdss7-200 jenkins]# pwd

/data/dockerfile/jenkins

[root@hdss7-200 jenkins]# ll

total 32

-rw------- 1 root root 151 Nov 30 18:35 config.json

-rw-r--r-- 1 root root 349 Nov 30 18:31 Dockerfile

-rwxr-xr-x 1 root root 13216 Nov 30 18:31 get-docker.sh

-rw------- 1 root root 1675 Nov 30 18:35 id_rsa

// Run the build

docker build . -t harbor.od.com/infra/jenkins:v2.190.3

// Upload the public key to Gitee and test whether this image can connect successfully

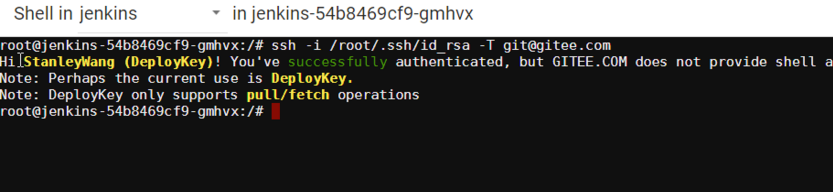

[root@hdss7-200 harbor]# docker run --rm harbor.od.com/infra/jenkins:v2.190.3 ssh -i /root/.ssh/id_rsa -T git@gitee.com

Warning: Permanently added 'gitee.com,212.64.62.174' (ECDSA) to the list of known hosts.

Hi StanleyWang (DeployKey)! You've successfully authenticated, but GITEE.COM does not provide shell access.

Note: Perhaps the current use is DeployKey.

Note: DeployKey only supports pull/fetch operations

3.4. Create the infra project in Harbor

3.5. Create the Kubernetes namespace and create the secret in it

[root@hdss7-21 ~]# kubectl create namespace infra

[root@hdss7-21 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n infra

3.6. Push the image

[root@hdss7-200 jenkins]# docker push harbor.od.com/infra/jenkins:v2.190.3

3.7. Prepare shared storage

On the ops host hdss7-200 and on all compute nodes (here hdss7-21 and hdss7-22):

3.7.1. Install nfs-utils

[root@hdss7-200 jenkins]# yum install nfs-utils -y

3.7.2. Configure the NFS service

On the ops host hdss7-200:

[root@hdss7-200 jenkins]# vi /etc/exports

/data/nfs-volume 10.4.7.0/24(rw,no_root_squash)

3.7.3. Start the NFS service

On the ops host hdss7-200:

[root@hdss7-200 ~]# mkdir -p /data/nfs-volume

[root@hdss7-200 ~]# systemctl start nfs

[root@hdss7-200 ~]# systemctl enable nfs

3.8. Prepare the resource manifests

On the ops host hdss7-200:

[root@hdss7-200 ~]# cd /data/k8s-yaml/

[root@hdss7-200 k8s-yaml]# mkdir /data/k8s-yaml/jenkins && mkdir /data/nfs-volume/jenkins_home && cd jenkins

dp.yaml

[root@hdss7-200 jenkins]# vi dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
  labels:
    name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      name: jenkins
  template:
    metadata:
      labels:
        app: jenkins
        name: jenkins
    spec:
      volumes:
      - name: data
        nfs:
          server: hdss7-200
          path: /data/nfs-volume/jenkins_home
      - name: docker
        hostPath:
          path: /run/docker.sock
          type: ''
      containers:
      - name: jenkins
        image: harbor.od.com/infra/jenkins:v2.190.3
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -Xmx512m -Xms512m
        volumeMounts:
        - name: data
          mountPath: /var/jenkins_home
        - name: docker
          mountPath: /run/docker.sock
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

svc.yaml

[root@hdss7-200 jenkins]# vi svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: jenkins
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080
  selector:
    app: jenkins

ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
spec:
  rules:
  - host: jenkins.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: jenkins
          servicePort: 80

3.9. Apply the resource manifests

On any compute node:

[root@hdss7-21 etcd]# kubectl apply -f http://k8s-yaml.od.com/jenkins/dp.yaml

[root@hdss7-21 etcd]# kubectl apply -f http://k8s-yaml.od.com/jenkins/svc.yaml

[root@hdss7-21 etcd]# kubectl apply -f http://k8s-yaml.od.com/jenkins/ingress.yaml

[root@hdss7-21 etcd]# kubectl get pods -n infra

NAME READY STATUS RESTARTS AGE

jenkins-54b8469cf9-v46cc 1/1 Running 0 168m

[root@hdss7-21 etcd]# kubectl get all -n infra

NAME READY STATUS RESTARTS AGE

pod/jenkins-54b8469cf9-v46cc 1/1 Running 0 169m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/jenkins ClusterIP 192.168.183.210 <none> 80/TCP 2d21h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/jenkins 1/1 1 1 2d21h

NAME DESIRED CURRENT READY AGE

replicaset.apps/jenkins-54b8469cf9 1 1 1 2d18h

replicaset.apps/jenkins-6b6d76f456 0 0 0 2d21h

3.10. Add the DNS record

On hdss7-11:

[root@hdss7-11 ~]# vi /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2019111007 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

...

...

jenkins A 10.4.7.10

[root@hdss7-11 ~]# systemctl restart named

[root@hdss7-11 ~]# dig -t A jenkins.od.com @10.4.7.11 +short

10.4.7.10

3.11. Access from a browser

Open http://jenkins.od.com; it asks for the initial password.

To view the initial password (it also appears in the log):

[root@hdss7-200 jenkins]# cat /data/nfs-volume/jenkins_home/secrets/initialAdminPassword

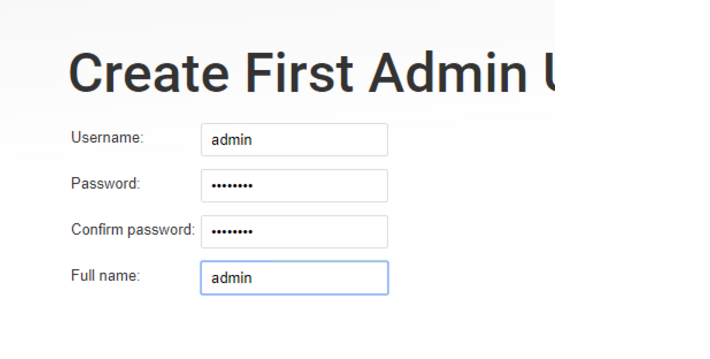

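3.12. Configure Jenkins in the web UI

3.12.1. Set the username and password

Username: admin  Password: admin123 // Later steps depend on this password, so be sure to set exactly this one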

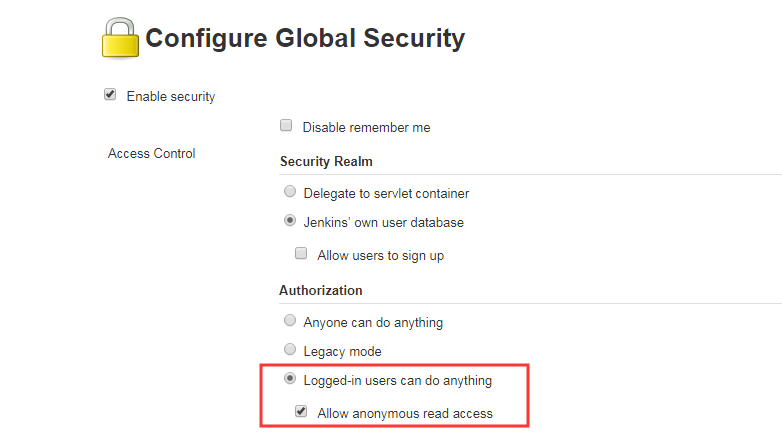

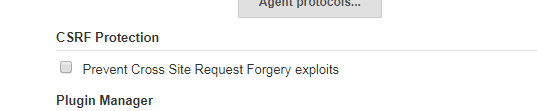

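3.12.2. Configure Global Security

Allow access for anonymous users.

Uncheck "Prevent Cross Site Request Forgery exploits".

3.12.3. Install the pipeline plugin Blue Ocean

Note: if plugin installation is slow, switch to the Tsinghua University mirror.

On hdss7-200: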

cd /data/nfs-volume/jenkins_home/updates

sed -i 's/http:\/\/updates.jenkins-ci.org\/download/https:\/\/mirrors.tuna.tsinghua.edu.cn\/jenkins/g' default.json && sed -i 's/http:\/\/www.google.com/https:\/\/www.baidu.com/g' default.json
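The escaped slashes make the command above hard to read. As a sketch, the same two substitutions can be written with `#` as the sed delimiter and tried on sample text first; `use_tuna_mirror` is a hypothetical helper, the plugin path in the demo is made up, and unlike the original the dots in the host names are escaped so they match literally.

```shell
#!/bin/sh
# Same two substitutions as the command above, with '#' as the sed delimiter
# so the slashes in the URLs need no escaping. Reads text on stdin.
# use_tuna_mirror is a hypothetical helper name.
use_tuna_mirror() {
    sed -e 's#http://updates\.jenkins-ci\.org/download#https://mirrors.tuna.tsinghua.edu.cn/jenkins#g' \
        -e 's#http://www\.google\.com#https://www.baidu.com#g'
}

# Sample input; the plugin path is invented for the demo.
printf '%s\n' '{"url":"http://updates.jenkins-ci.org/download/plugins/demo/1.0/demo.hpi"}' | use_tuna_mirror
```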

4. Final preparations

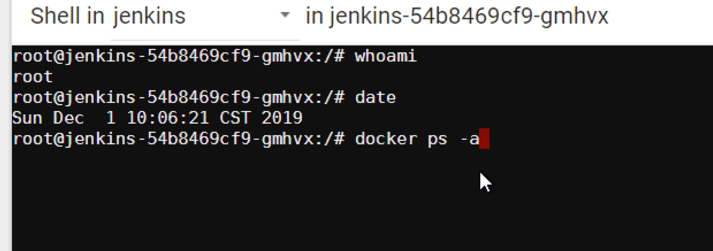

4.1. Check the Docker client inside the Jenkins container

Verify the current user, the time zone, and whether the docker.sock file is usable.

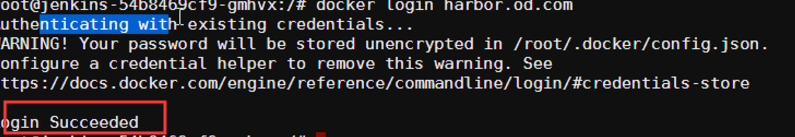

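Verify that the secret created in the Kubernetes namespace can log in to the Harbor registry.

4.2. Check the SSH key inside the Jenkins container

Verify that the private key can log in to Gitee and pull code.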

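4.3. Deploy Maven

Java build tooling has evolved over the years: javac --> Ant --> Maven --> Gradle.

Maven is deployed from the binary package on the ops host hdss7-200; this guide deploys maven-3.6.1.

The mvn command is a script; if you need to build with JDK 7, you can change that inside the script.

4.3.1. Download the installation package

4.3.2. Create the directory and extract

The 8u232 in the directory name matches the JDK version of the Jenkins running in the Docker container; follow this naming exactly.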

[root@hdss7-200 src]# mkdir /data/nfs-volume/jenkins_home/maven-3.6.1-8u232

[root@hdss7-200 src]# tar xf apache-maven-3.6.1-bin.tar.gz -C /data/nfs-volume/jenkins_home/maven-3.6.1-8u232

[root@hdss7-200 src]# cd /data/nfs-volume/jenkins_home/maven-3.6.1-8u232

[root@hdss7-200 maven-3.6.1-8u232]# ls

apache-maven-3.6.1

[root@hdss7-200 maven-3.6.1-8u232]# mv apache-maven-3.6.1/ ../ && mv ../apache-maven-3.6.1/* .

[root@hdss7-200 maven-3.6.1-8u232]# ll

total 28

drwxr-xr-x 2 root root 97 Dec 3 19:04 bin

drwxr-xr-x 2 root root 42 Dec 3 19:04 boot

drwxr-xr-x 3 501 games 63 Apr 5 2019 conf

drwxr-xr-x 4 501 games 4096 Dec 3 19:04 lib

-rw-r--r-- 1 501 games 13437 Apr 5 2019 LICENSE

-rw-r--r-- 1 501 games 182 Apr 5 2019 NOTICE

-rw-r--r-- 1 501 games 2533 Apr 5 2019 README.txt

4.3.3. Point settings.xml at a mirror in China

Search and replace:

[root@hdss7-200 maven-3.6.1-8u232]# vi /data/nfs-volume/jenkins_home/maven-3.6.1-8u232/conf/settings.xml

<mirror>

<id>nexus-aliyun</id>

<mirrorOf>*</mirrorOf>

<name>Nexus aliyun</name>

<url>http://maven.aliyun.com/nexus/content/groups/public</url>

</mirror>

4.4. Build the base image for the Dubbo microservices

On the ops host hdss7-200:

4.4.1. Write the custom Dockerfile

[root@hdss7-200 dockerfile]# mkdir jre8

[root@hdss7-200 dockerfile]# cd jre8/

[root@hdss7-200 jre8]# pwd

/data/dockerfile/jre8

[root@hdss7-200 jre8]# vi Dockerfile

FROM harbor.od.com/public/jre:8u112

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

echo 'Asia/Shanghai' >/etc/timezone

ADD config.yml /opt/prom/config.yml

ADD jmx_javaagent-0.3.1.jar /opt/prom/

WORKDIR /opt/project_dir

ADD entrypoint.sh /entrypoint.sh

CMD ["/entrypoint.sh"]

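- config.yml: the Prometheus monitoring match rules.

- jmx_javaagent-0.3.1.jar: the Java agent jar that collects JVM information.

- entrypoint.sh: the default startup script run by Docker.

4.4.2. Prepare the JRE base image (for Java 7 there is a 7u80 image)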

[root@hdss7-200 jre8]# docker pull docker.io/stanleyws/jre8:8u112

[root@hdss7-200 jre8]# docker images |grep jre

stanleyws/jre8 8u112 fa3a085d6ef1 2 years ago 363MB

[root@hdss7-200 jre8]# docker tag fa3a085d6ef1 harbor.od.com/public/jre:8u112

[root@hdss7-200 jre8]# docker push harbor.od.com/public/jre:8u112

4.4.3. Prepare the Java agent jar

[root@hdss7-200 jre8]# wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O jmx_javaagent-0.3.1.jar

4.4.4. Prepare config.yml and entrypoint.sh

[root@hdss7-200 jre8]# vi config.yml

---

rules:

- pattern: '.*'

[root@hdss7-200 jre8]# vi entrypoint.sh

#!/bin/sh

M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$(hostname -i):${M_PORT:-"12346"}:/opt/prom/config.yml"

C_OPTS=${C_OPTS}

JAR_BALL=${JAR_BALL}

exec java -jar ${M_OPTS} ${C_OPTS} ${JAR_BALL}

[root@hdss7-200 jre8]# chmod +x entrypoint.sh
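entrypoint.sh relies on the shell's `${VAR:-default}` expansion so that the metrics port falls back to 12346 when M_PORT is not provided. A minimal sketch of that behaviour on its own (the function name `metrics_port` is illustrative):

```shell
#!/bin/sh
# ${VAR:-default} expands to $VAR when it is set and non-empty,
# otherwise to the default value.
metrics_port() {
    echo "${M_PORT:-12346}"
}

unset M_PORT
metrics_port                    # prints 12346
( M_PORT=9100; metrics_port )   # prints 9100 (set only inside the subshell)
```

In a Deployment, the same override would be done by adding M_PORT to the container's `env` list, just as JAR_BALL is passed in.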

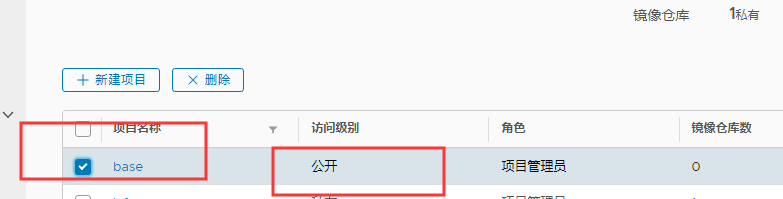

4.4.5. Create the base project in Harbor

4.4.6. Build the Dubbo microservice base image and push it to the Harbor registry

[root@hdss7-200 jre8]# docker build . -t harbor.od.com/base/jre8:8u112

[root@hdss7-200 jre8]# docker push harbor.od.com/base/jre8:8u112

5. Deliver the Dubbo microservices to the Kubernetes cluster

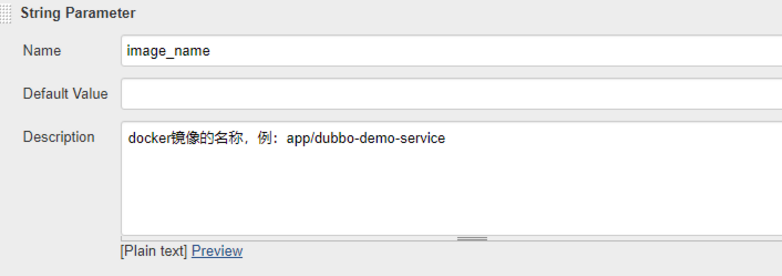

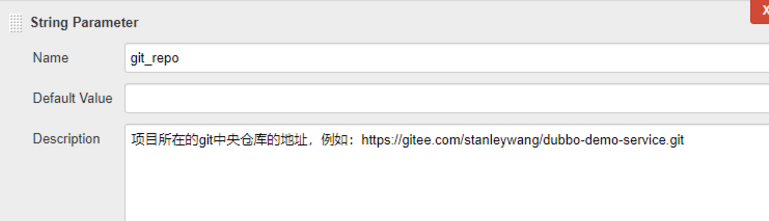

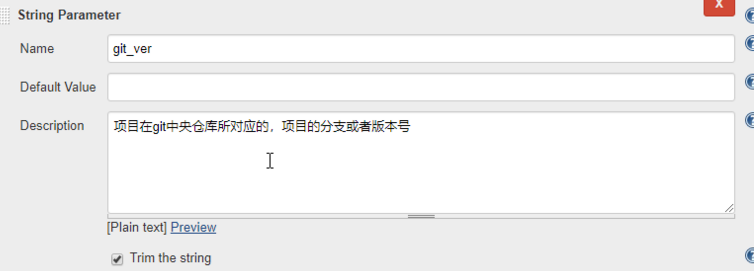

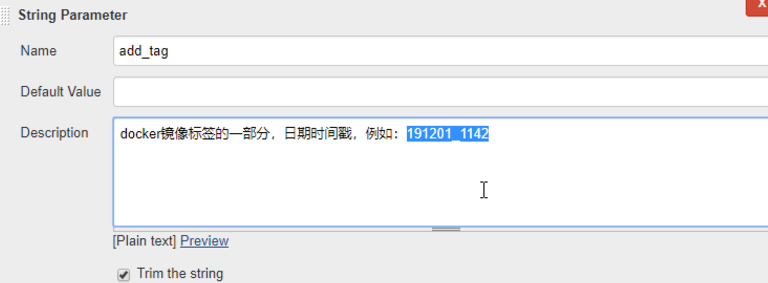

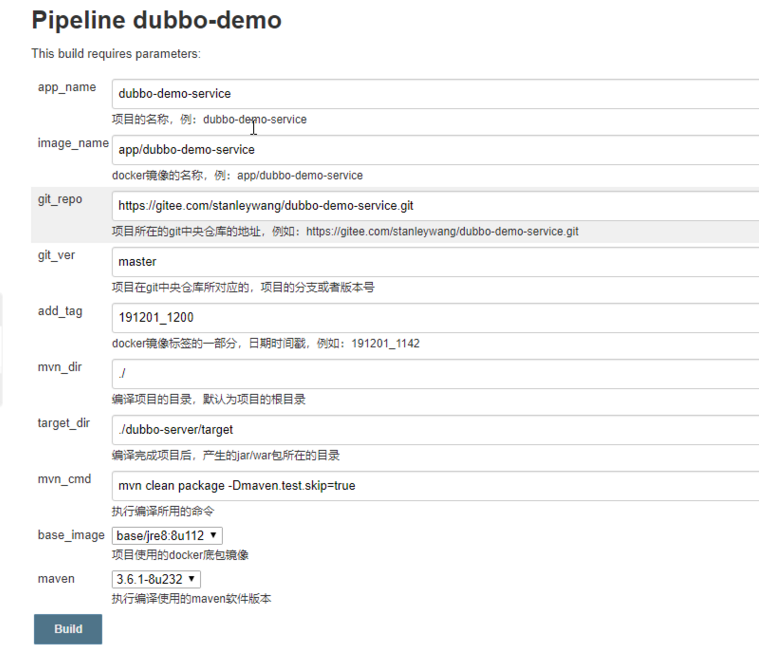

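5.1. Configure the new pipeline job

Add the build parameters:

// The parameters below were distilled by the instructor (Wang) from years of production experience: the ops "blame-deflection" method (look sharp, move fast)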

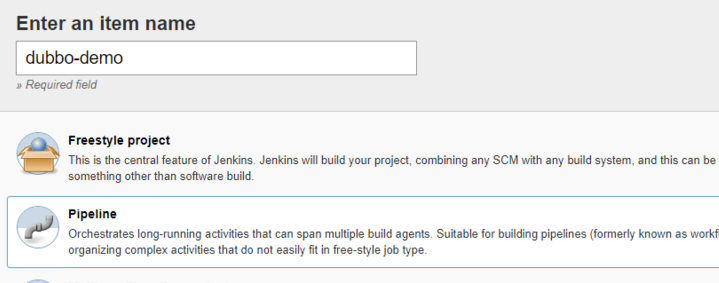

Log in to Jenkins --> New Item --> item name: dubbo-demo --> Pipeline --> OK

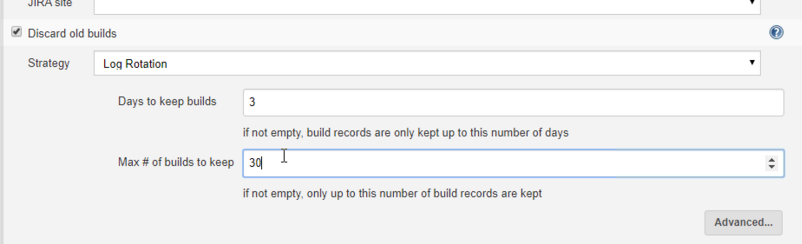

Set how many old builds to keep here: keep them for 3 days, at most 30 builds.

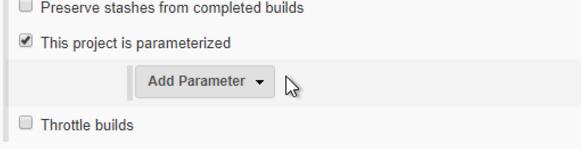

Check "This project is parameterized" to make the Jenkins build parameterized.

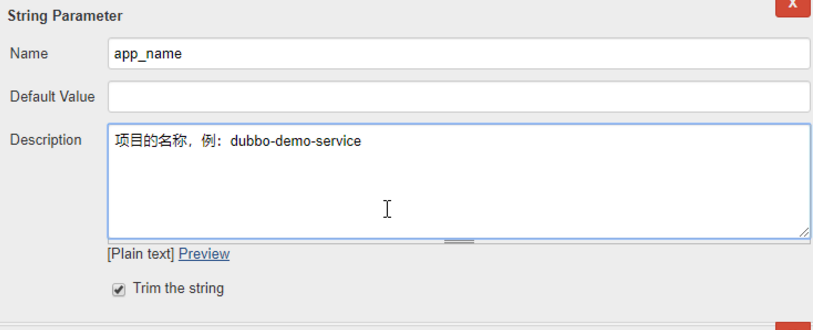

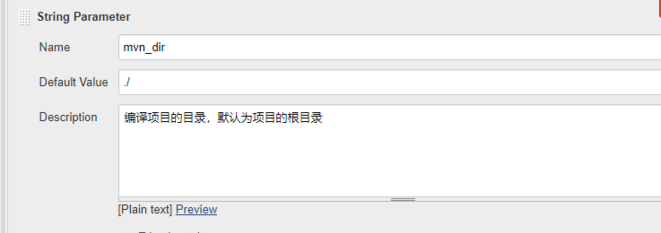

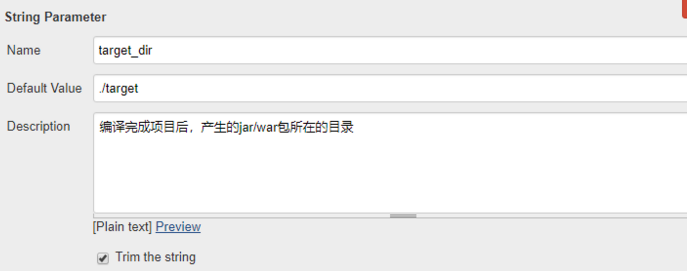

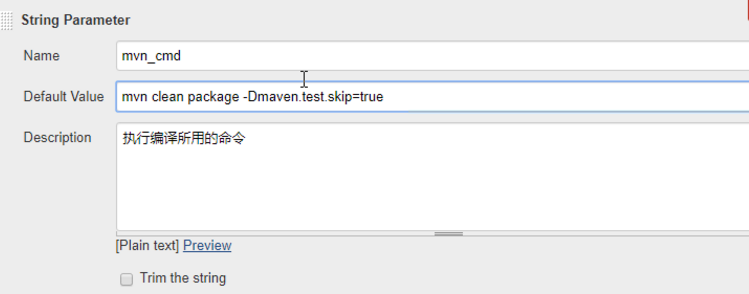

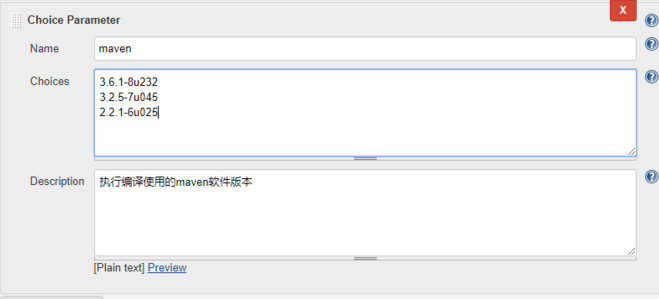

Add 8 String Parameters, with "Trim the string" checked for each:

app_name

image_name

git_repo

git_ver

add_tag

mvn_dir

target_dir

mvn_cmd

Add 2 Choice Parameters:

base_image

maven

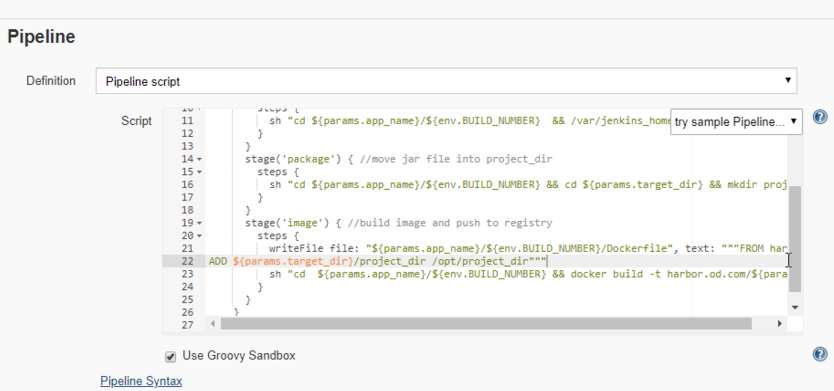

5.2. Pipeline Script

pipeline {
  agent any
  stages {
    stage('pull') { //get project code from repo
      steps {
        sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"
      }
    }
    stage('build') { //exec mvn cmd
      steps {
        sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"
      }
    }
    stage('package') { //move jar file into project_dir
      steps {
        sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && mv *.jar ./project_dir"
      }
    }
    stage('image') { //build image and push to registry
      steps {
        writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.od.com/${params.base_image}
ADD ${params.target_dir}/project_dir /opt/project_dir"""
        sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag}"
      }
    }
  }
}
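The image stage names the result `harbor.od.com/<image_name>:<git_ver>_<add_tag>`. A small sketch of that composition is shown here, useful for working out which tag to put into a manifest; `image_ref` is a hypothetical helper, and the sample values are inferred from the image name used in the dubbo-demo-service manifest later in this guide.

```shell
#!/bin/sh
# Compose the image reference that the pipeline's image stage builds and pushes:
#   harbor.od.com/<image_name>:<git_ver>_<add_tag>
# image_ref is a hypothetical helper; arguments: image_name, git_ver, add_tag.
image_ref() {
    echo "harbor.od.com/${1}:${2}_${3}"
}

# Sample values inferred from the manifest's image, not taken from a real build.
image_ref app/dubbo-demo-service master 191201_1200
```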

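5.3. Create the app project in Harbor to manage the Dubbo service images

5.4. Create the app namespace and add the secret resource

On any compute node:

The secret is needed because images will be pulled from the private app project.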

[root@hdss7-21 bin]# kubectl create ns app

namespace/app created

[root@hdss7-21 bin]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n app

secret/harbor created

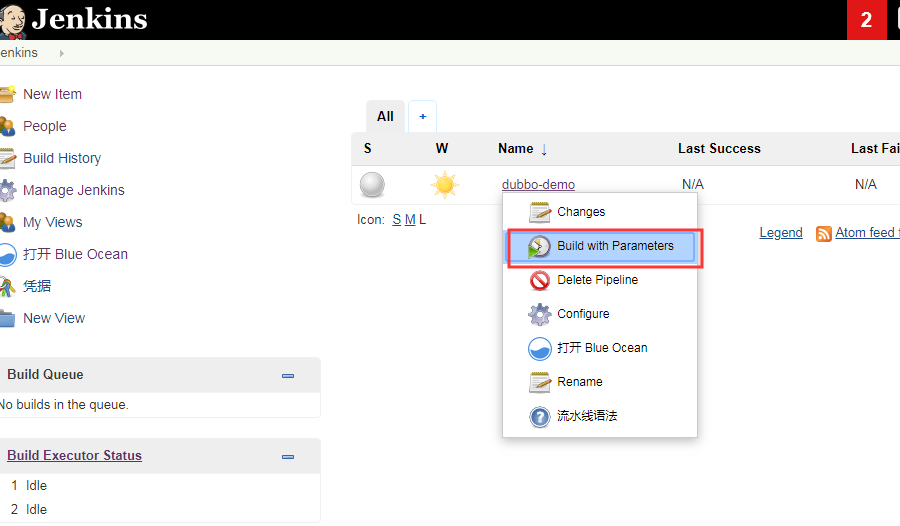

5.5. Deliver dubbo-demo-service

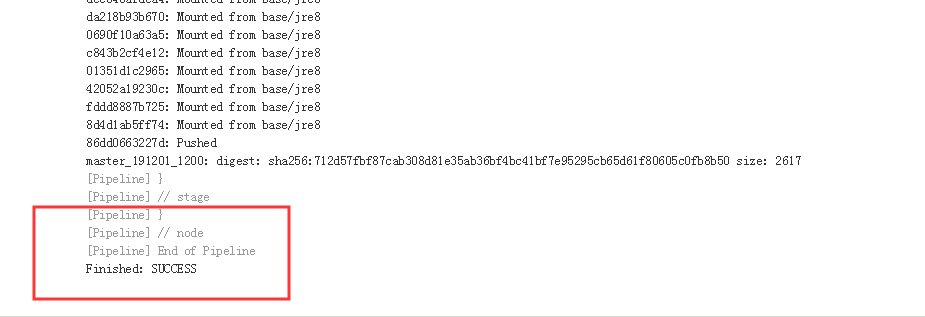

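5.5.1. Pass the parameters in Jenkins, build the dubbo-demo-service image, and push it to Harbor

5.5.2. Create the resource manifest for dubbo-demo-service

Important: replace the image in dp.yaml with the name of the image you built yourself.

On hdss7-200: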

[root@hdss7-200 dubbo-demo-service]# vi dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-service
  namespace: app
  labels:
    name: dubbo-demo-service
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-service
  template:
    metadata:
      labels:
        app: dubbo-demo-service
        name: dubbo-demo-service
    spec:
      containers:
      - name: dubbo-demo-service
        image: harbor.od.com/app/dubbo-demo-service:master_191201_1200
        ports:
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-server.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

5.5.3. Apply the dubbo-demo-service resource manifest

On any compute node:

[root@hdss7-21 bin]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-service/dp.yaml

deployment.extensions/dubbo-demo-service created

5.5.4. Check startup status

View the logs in the dashboard.

Check the zk registry.

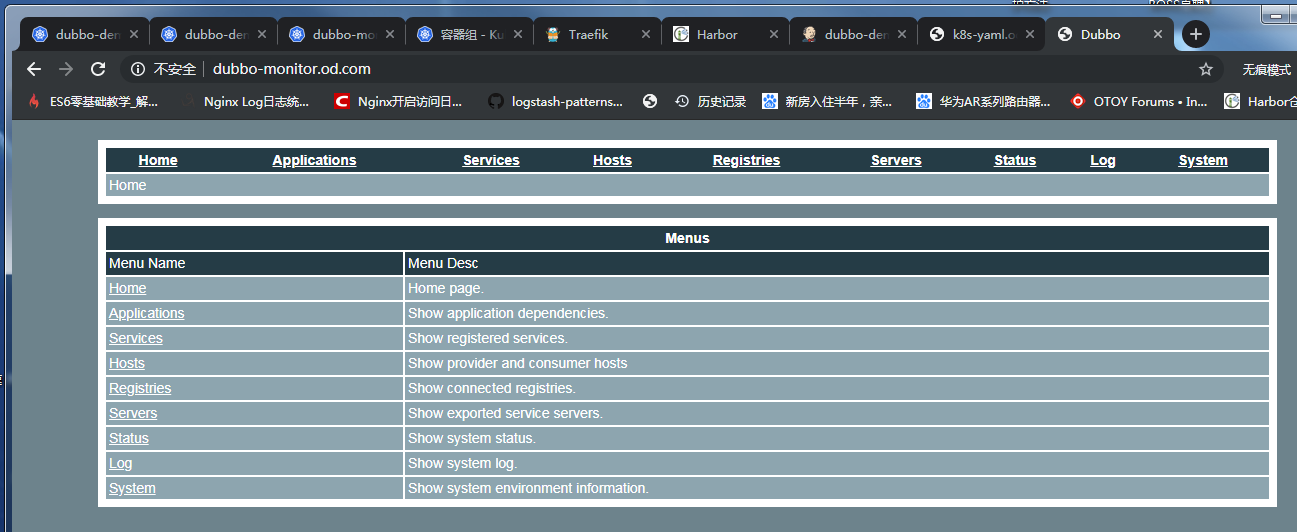

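5.6. Deliver dubbo-monitor

dubbo-monitor is essentially a tool that fetches data from the registry and displays it.

There are two open-source options: 1. dubbo-admin 2. dubbo-monitor. Here we use dubbo-monitor.

5.6.1. Prepare the Docker image

5.6.1.1. Download the source package and extract it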

[root@hdss7-200 src]# ll|grep dubbo

-rw-r--r-- 1 root root 23468109 Dec 4 11:50 dubbo-monitor-master.zip

[root@hdss7-200 src]# unzip dubbo-monitor-master.zip

[root@hdss7-200 src]# ll|grep dubbo

drwxr-xr-x 3 root root 69 Jul 27 2016 dubbo-monitor-master

-rw-r--r-- 1 root root 23468109 Dec 4 11:50 dubbo-monitor-master.zip

5.6.1.2. Change the following settings

[root@hdss7-200 conf]# pwd

/opt/src/dubbo-monitor-master/dubbo-monitor-simple/conf

[root@hdss7-200 conf]# vi dubbo_origin.properties

dubbo.registry.address=zookeeper://zk1.od.com:2181?backup=zk2.od.com:2181,zk3.od.com:2181

dubbo.protocol.port=20880

dubbo.jetty.port=8080

dubbo.jetty.directory=/dubbo-monitor-simple/monitor

dubbo.statistics.directory=/dubbo-monitor-simple/statistics

dubbo.charts.directory=/dubbo-monitor-simple/charts

dubbo.log4j.file=logs/dubbo-monitor.log
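The registry address lists one primary ZooKeeper node plus the others after `?backup=`. As a sketch, the string can be split into one `host:port` per line, for example to check that every node named in it resolves; `registry_hosts` is a hypothetical helper and only handles the `zookeeper://...?backup=...` shape used above.

```shell
#!/bin/sh
# Split a Dubbo registry address of the form
#   zookeeper://host1:port?backup=host2:port,host3:port
# into one host:port per line. registry_hosts is a hypothetical helper.
registry_hosts() {
    printf '%s\n' "$1" | sed -e 's%^zookeeper://%%' -e 's%?backup=%,%' | tr ',' '\n'
}

registry_hosts 'zookeeper://zk1.od.com:2181?backup=zk2.od.com:2181,zk3.od.com:2181'
```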

5.6.1.3.制作镜像

5.6.1.3.1.准备环境

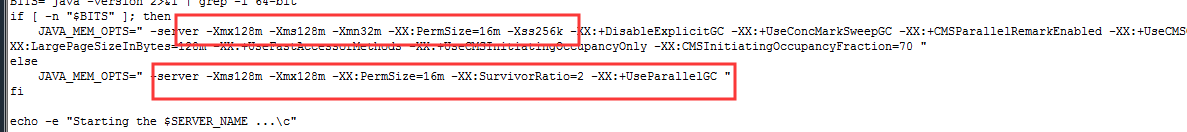

- 由于是虚拟机环境,这里java给的内存太大,需要给小一些,nohup 替换成exec,要在前台跑,去掉结尾&符,删除nohup 行下所有行

[root@hdss7-200 bin]# vi /opt/src/dubbo-monitor-master/dubbo-monitor-simple/bin/start.sh

JAVA_MEM_OPTS=" -server -Xmx2g -Xms2g -Xmn256m -XX:PermSize=128m -Xss256k -XX:+DisableExplicitGC -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:LargePageSizeInBytes=128m -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70 "

else

JAVA_MEM_OPTS=" -server -Xms1g -Xmx1g -XX:PermSize=128m -XX:SurvivorRatio=2 -XX:+UseParallelGC "

fi

echo -e "Starting the $SERVER_NAME ...\c"

nohup java $JAVA_OPTS $JAVA_MEM_OPTS $JAVA_DEBUG_OPTS $JAVA_JMX_OPTS -classpath $CONF_DIR:$LIB_JARS com.alibaba.dubbo.container.Main > $STDOUT_FILE 2>&1 &

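- Do the replacement with sed; this uses the sed pattern space.

sed -r -i -e '/^nohup/{p;:a;N;$!ba;d}' /opt/src/dubbo-monitor-master/dubbo-monitor-simple/bin/start.sh && sed -r -i -e "s%^nohup(.*)%exec \1%" /opt/src/dubbo-monitor-master/dubbo-monitor-simple/bin/start.sh

// Reduce the memory settings, then remove the & at the end of the nohup line!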
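To see what the pattern-space trick does before running it on start.sh, the sketch below applies it to sample text on stdin. It assumes GNU sed; `trim_start_script` is a hypothetical helper, and its second expression goes one step further than the command above by also stripping the trailing `&`, which the guide otherwise leaves as a manual edit.

```shell
#!/bin/sh
# Assumes GNU sed. trim_start_script is a hypothetical helper that reads a
# script on stdin and writes the trimmed version to stdout.
#
# First sed: on the nohup line, print it (p), then keep appending the remaining
# lines to the pattern space (N in a loop until the last line) and delete them
# all (d), so nothing after the nohup line is printed.
# Second sed: turn "nohup <cmd> &" into "exec <cmd>" so the JVM runs in the
# foreground as the container's main process.
trim_start_script() {
    sed -r -e '/^nohup/{p;:a;N;$!ba;d}' |
        sed -r -e 's%^nohup (.*[^[:space:]])[[:space:]]*&[[:space:]]*$%exec \1%'
}

# Sample input shaped like the tail of start.sh (contents invented for the demo).
printf 'echo start\nnohup java -jar app.jar > out.log 2>&1 &\nsleep 1\necho done\n' | trim_start_script
```

The loop needs at least one line after the nohup line, which is the case in start.sh.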

- To keep things tidy, copy it under /data.

[root@hdss7-200 src]# mv dubbo-monitor-master dubbo-monitor

[root@hdss7-200 src]# cp -a dubbo-monitor /data/dockerfile/

[root@hdss7-200 src]# cd /data/dockerfile/dubbo-monitor/

5.6.1.3.2. Prepare the Dockerfile

[root@hdss7-200 dubbo-monitor]# pwd

/data/dockerfile/dubbo-monitor

[root@hdss7-200 dubbo-monitor]# cat Dockerfile

FROM jeromefromcn/docker-alpine-java-bash

MAINTAINER Jerome Jiang

COPY dubbo-monitor-simple/ /dubbo-monitor-simple/

CMD /dubbo-monitor-simple/bin/start.sh

5.6.1.3.3. Build the image and push it to the Harbor registry

[root@hdss7-200 dubbo-monitor]# docker build . -t harbor.od.com/infra/dubbo-monitor:latest

[root@hdss7-200 dubbo-monitor]# docker push harbor.od.com/infra/dubbo-monitor:latest

5.6.2. Add the DNS record

[root@hdss7-11 ~]# vi /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2019111008 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

... (omitted)

dubbo-monitor A 10.4.7.10

[root@hdss7-11 ~]# systemctl restart named

[root@hdss7-11 ~]# dig -t A dubbo-monitor.od.com @10.4.7.11 +short

10.4.7.10

5.6.3. Prepare the k8s resource manifests

- dp.yaml

[root@hdss7-200 k8s-yaml]# pwd

/data/k8s-yaml

[root@hdss7-200 k8s-yaml]# mkdir dubbo-monitor

[root@hdss7-200 k8s-yaml]# cd dubbo-monitor/

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
  labels:
    name: dubbo-monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-monitor
  template:
    metadata:
      labels:
        app: dubbo-monitor
        name: dubbo-monitor
    spec:
      containers:
      - name: dubbo-monitor
        image: harbor.od.com/infra/dubbo-monitor:latest
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

- svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: dubbo-monitor
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: dubbo-monitor

- ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
spec:
  rules:
  - host: dubbo-monitor.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-monitor
          servicePort: 8080

5.6.4. Apply the resource manifests

[root@hdss7-21 bin]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/dp.yaml

deployment.extensions/dubbo-monitor created

[root@hdss7-21 bin]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/svc.yaml

service/dubbo-monitor created

[root@hdss7-21 bin]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/ingress.yaml

ingress.extensions/dubbo-monitor created

5.6.5. Access from a browser

5.7. Deliver dubbo-demo-consumer

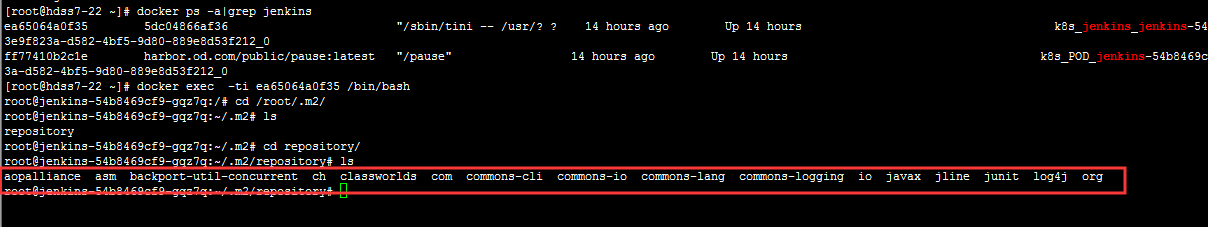

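5.7.1. Pass the parameters in Jenkins, build the dubbo-demo-consumer image, and push it to Harbor

Jenkins keeps a local cache of the jar packages.

5.7.2. Create the resource manifests for dubbo-demo-consumer

On the ops host hdss7-200:

Important: replace the image in dp.yaml with the name of the image you built yourself.

dp.yaml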

[root@hdss7-200 k8s-yaml]# pwd

/data/k8s-yaml

[root@hdss7-200 k8s-yaml]# mkdir dubbo-demo-consumer

[root@hdss7-200 k8s-yaml]# cd dubbo-demo-consumer/

[root@hdss7-200 dubbo-demo-consumer]# vi dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: app
  labels:
    name: dubbo-demo-consumer
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-consumer
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
    spec:
      containers:
      - name: dubbo-demo-consumer
        image: harbor.od.com/app/dubbo-demo-consumer:master_191204_1307
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-client.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: dubbo-demo-consumer
  namespace: app
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: dubbo-demo-consumer

ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: app
spec:
  rules:
  - host: demo.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-demo-consumer
          servicePort: 8080

5.7.3. Apply the dubbo-demo-consumer resource manifests

On any compute node:

[root@hdss7-21 bin]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/dp.yaml

deployment.extensions/dubbo-demo-consumer created

[root@hdss7-21 bin]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/svc.yaml

service/dubbo-demo-consumer created

[root@hdss7-21 bin]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/ingress.yaml

ingress.extensions/dubbo-demo-consumer created

5.7.4. Add the DNS record

On hdss7-11:

[root@hdss7-11 ~]# vi /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2019111009 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

...

...

demo A 10.4.7.10

[root@hdss7-11 ~]# systemctl restart named

[root@hdss7-11 ~]# dig -t A demo.od.com @10.4.7.11 +short

10.4.7.10

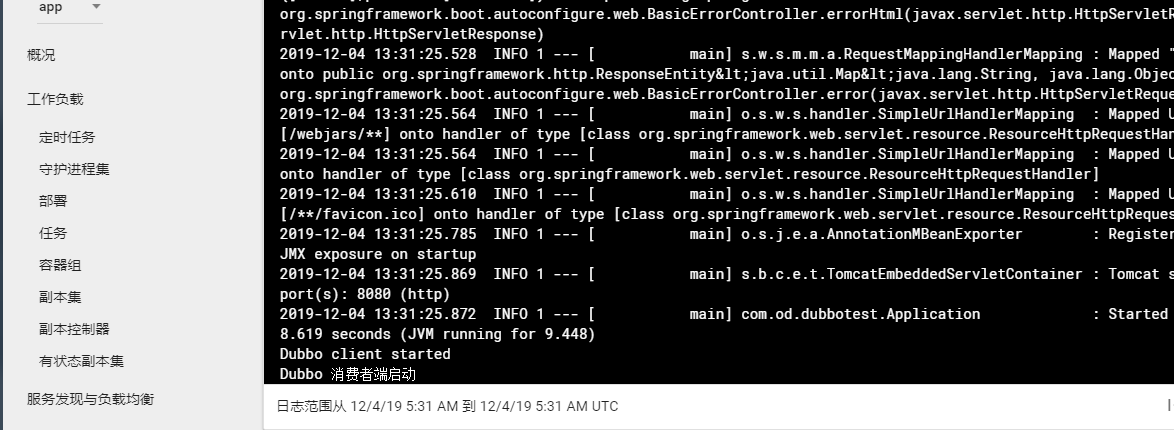

5.7.5. Check startup status

- Check in the dashboard:

http://dashboard.od.com

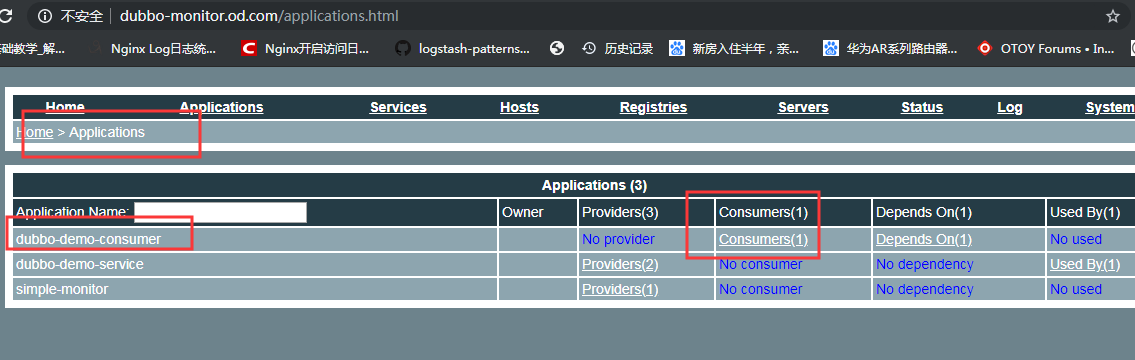

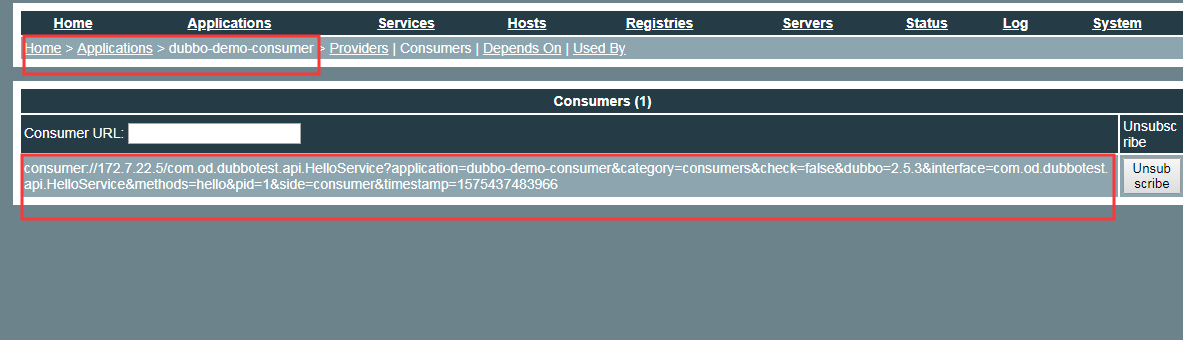

- Check in dubbo-monitor:

http://dubbo-monitor.od.com

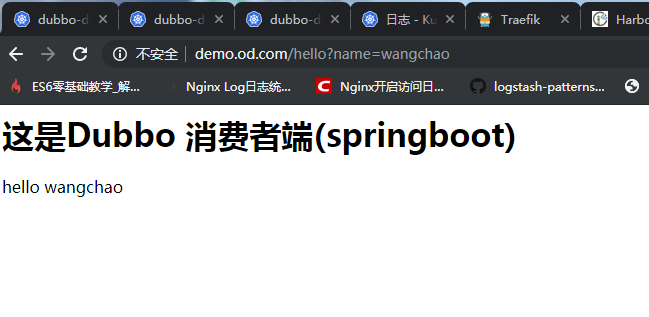

- Check by visiting demo.od.com:

http://demo.od.com/hello?name=wang

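6. Maintaining the Dubbo microservice cluster in practice

6.1. Updating (rolling update)

- Modify the code and commit it to Git (release).

- Run CI (continuous integration) with Jenkins.

- Modify and apply the k8s resource manifest.

- Or change the Harbor image address in the YAML directly on k8s.

6.2. Scaling

- Operate directly in the k8s dashboard: log in to the dashboard --> Deployments --> Scale --> change the count --> OK.

- Scale from the command line, as in the following examples: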

* Examples:

# Scale a replicaset named 'foo' to 3.

kubectl scale --replicas=3 rs/foo

# Scale a resource identified by type and name specified in "foo.yaml" to 3.

kubectl scale --replicas=3 -f foo.yaml

# If the deployment named mysql's current size is 2, scale mysql to 3.

kubectl scale --current-replicas=2 --replicas=3 deployment/mysql

# Scale multiple replication controllers.

kubectl scale --replicas=5 rc/foo rc/bar rc/baz

# Scale statefulset named 'web' to 3.

kubectl scale --replicas=3 statefulset/web
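Applied to this guide's own workloads, the deployment form of the command is the relevant one. The sketch below only prints the command so it can be reviewed before it is run; `scale_cmd` is a hypothetical helper and the replica count of 2 is an arbitrary example.

```shell
#!/bin/sh
# Print (not run) a kubectl scale command for a deployment.
# scale_cmd is a hypothetical helper; arguments: namespace, deployment, replicas.
scale_cmd() {
    echo "kubectl scale --replicas=$3 deployment/$2 -n $1"
}

scale_cmd app dubbo-demo-service 2
# To execute after reviewing:  scale_cmd app dubbo-demo-service 2 | sh
```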

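6.3. Handling a sudden host failure

Suppose hdss7-21 fails suddenly and goes offline.

- On another compute node: first delete the failed node so that k8s triggers self-healing and the pods are started again on healthy nodes.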

[root@hdss7-22 ~]# kubectl delete node hdss7-21.host.com

node "hdss7-21.host.com" deleted

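- On the front-end proxy, edit the configuration files and comment out the node so that traffic is no longer sent to the failed node (hdss7-21).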

[root@hdss7-11 ~]# vi /etc/nginx/nginx.conf

[root@hdss7-11 ~]# vi /etc/nginx/conf.d/od.com.conf

[root@hdss7-11 ~]# nginx -t

[root@hdss7-11 ~]# nginx -s reload

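- Once the node is repaired, simply start it and it rejoins the cluster on its own; then set its labels again and add the node back to the front-end load balancer.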

[root@hdss7-21 bin]# kubectl label node hdss7-21.host.com node-role.kubernetes.io/master=

node/hdss7-21.host.com labeled

[root@hdss7-21 bin]# kubectl label node hdss7-21.host.com node-role.kubernetes.io/node=

node/hdss7-21.host.com labeled

[root@hdss7-21 bin]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

hdss7-21.host.com Ready master,node 8d v1.15.4

hdss7-22.host.com Ready master,node 10d v1.15.4

6.4.FAQ

6.4.1. supervisor restart does not succeed?

Append to /etc/supervisord.d/xxx.ini:

killasgroup=true

stopasgroup=true
