【Objectives】

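- Understand how neural networks work, including forward inference and backpropagation;

- Learn how to train and run inference with a fully connected neural network in the PyTorch framework.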
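To make "forward inference and backpropagation" concrete, here is a minimal NumPy sketch of what `model(images)` and `loss.backward()` compute for a one-hidden-layer ReLU network with softmax cross-entropy loss. The tiny sizes, random data, and the helper names `forward`, `backward`, and `numeric_grad` are illustrative assumptions, not part of the experiment code. The hand-derived gradients are checked against central finite differences.

```python
import numpy as np

rng = np.random.default_rng(0)

# Tiny illustrative sizes; the experiment itself uses 784 -> 500 -> 10
n, d_in, d_h, d_out = 4, 6, 5, 3
X = rng.normal(size=(n, d_in))
y = rng.integers(0, d_out, size=n)
W1 = rng.normal(scale=0.5, size=(d_in, d_h))
b1 = np.zeros(d_h)
W2 = rng.normal(scale=0.5, size=(d_h, d_out))
b2 = np.zeros(d_out)


def forward(W1, b1, W2, b2):
    """Forward inference: fc1 -> ReLU -> fc2 -> mean softmax cross-entropy."""
    z1 = X @ W1 + b1
    h = np.maximum(z1, 0.0)
    logits = h @ W2 + b2
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    loss = -log_probs[np.arange(n), y].mean()
    return loss, (z1, h, log_probs)


def backward(W2, cache):
    """Backpropagation: apply the chain rule layer by layer, output to input."""
    z1, h, log_probs = cache
    dlogits = np.exp(log_probs)          # softmax probabilities p
    dlogits[np.arange(n), y] -= 1.0      # dL/dlogits = (p - one_hot(y)) / n
    dlogits /= n
    dW2 = h.T @ dlogits
    db2 = dlogits.sum(axis=0)
    dz1 = (dlogits @ W2.T) * (z1 > 0)    # ReLU passes gradient only where z1 > 0
    dW1 = X.T @ dz1
    db1 = dz1.sum(axis=0)
    return dW1, db1, dW2, db2


def numeric_grad(param, eps=1e-6):
    """Central finite differences; perturbs `param` in place and restores it."""
    grad = np.zeros_like(param)
    for idx in np.ndindex(param.shape):
        old = param[idx]
        param[idx] = old + eps
        loss_plus, _ = forward(W1, b1, W2, b2)
        param[idx] = old - eps
        loss_minus, _ = forward(W1, b1, W2, b2)
        param[idx] = old
        grad[idx] = (loss_plus - loss_minus) / (2 * eps)
    return grad


loss, cache = forward(W1, b1, W2, b2)
dW1, db1, dW2, db2 = backward(W2, cache)
print("loss:", loss)
print("max |dW1 - numeric|:", np.abs(dW1 - numeric_grad(W1)).max())
print("max |dW2 - numeric|:", np.abs(dW2 - numeric_grad(W2)).max())
```

The differences should be close to zero, which is the same check one could use to confirm that PyTorch's autograd and a hand derivation agree.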

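【Experiment Content】

- Using the PyTorch framework, design a fully connected neural network that is trained on, and recognizes, the MNIST handwritten digit dataset.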

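【Report Requirements】

- Modify the network structure by changing the number of layers, and observe how depth affects training and testing time, accuracy, and other metrics;

- Modify the learning rate and observe its effect on training and test performance;

- Modify the network structure by increasing or decreasing the number of neurons, and observe the effect on training and test performance.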
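For the depth and width experiments, it can help to build the network from a list of hidden-layer sizes instead of editing the class each time. The sketch below assumes a hypothetical helper `build_mlp` (not part of the original code) that stacks one `nn.Linear` + `nn.ReLU` pair per hidden layer, and reports the parameter count for a few example configurations.

```python
import torch
import torch.nn as nn


def build_mlp(input_size, hidden_sizes, output_size):
    """Hypothetical helper: one Linear + ReLU pair per entry in hidden_sizes."""
    layers = []
    prev = input_size
    for h in hidden_sizes:
        layers += [nn.Linear(prev, h), nn.ReLU()]
        prev = h
    layers.append(nn.Linear(prev, output_size))  # final layer outputs logits
    return nn.Sequential(*layers)


def count_params(model):
    """Total number of trainable weights and biases."""
    return sum(p.numel() for p in model.parameters())


# Example configurations (chosen for illustration only)
configs = {
    "1 hidden layer, 500": [500],
    "1 hidden layer, 100": [100],
    "2 hidden layers, 500-250": [500, 250],
    "3 hidden layers, 500-250-100": [500, 250, 100],
}
for name, hidden in configs.items():
    model = build_mlp(784, hidden, 10)
    out = model(torch.zeros(2, 784))  # dummy batch of 2 flattened images
    print(f"{name}: {count_params(model)} parameters, output shape {tuple(out.shape)}")
```

Such a model expects the same flattened `(N, 784)` input as `NeuralNet`. For the learning-rate experiment, only the `lr` argument passed to `torch.optim.Adam` changes; wall-clock training and test time can be measured by wrapping each loop with `time.perf_counter()`.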

【Procedure】

1. Import packages

```python
import torch
import torch.nn as nn
import torchvision
import torchvision.transforms as transforms
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
%matplotlib inline

# Use the GPU when available, otherwise fall back to the CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
device
```

2. Set the hyperparameters

```python
input_size = 784       # 28 x 28 pixels, flattened
hidden_size = 500      # neurons in the hidden layer
output_size = 10       # one class per digit 0-9
num_epochs = 5
batch_size = 100
learning_rate = 0.001
```
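These values fix the amount of work per run. A quick arithmetic sketch, assuming the standard 60,000-image MNIST training split, gives the number of optimizer steps and the number of trainable parameters in the 784 → 500 → 10 network:

```python
input_size, hidden_size, output_size = 784, 500, 10
batch_size, num_epochs = 100, 5
num_train = 60000  # size of the MNIST training split

steps_per_epoch = num_train // batch_size              # batches per epoch
total_steps = steps_per_epoch * num_epochs             # optimizer updates overall
fc1_params = input_size * hidden_size + hidden_size    # weights + biases of fc1
fc2_params = hidden_size * output_size + output_size   # weights + biases of fc2
print(steps_per_epoch, total_steps, fc1_params + fc2_params)  # 600 3000 397510
```

The 600 steps per epoch match the `Step [.../600]` counter in the training log.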

3. Download the dataset

```python
# Training split (60,000 images); downloaded to ./data/ if not already present
train_dataset = torchvision.datasets.MNIST('./data/',
                                           train=True,
                                           transform=transforms.ToTensor(),
                                           download=True)
print(train_dataset)

# Test split (10,000 images)
test_dataset = torchvision.datasets.MNIST('./data/',
                                          train=False,
                                          transform=transforms.ToTensor())
print(test_dataset)

# Shuffle the training data every epoch; keep the test order fixed
train_loader = torch.utils.data.DataLoader(train_dataset,
                                           batch_size=batch_size,
                                           shuffle=True)

test_loader = torch.utils.data.DataLoader(test_dataset,
                                          batch_size=batch_size,
                                          shuffle=False)
```

```text
Dataset MNIST
    Number of datapoints: 60000
    Root location: ./data/
    Split: Train
    StandardTransform
Transform: ToTensor()
Dataset MNIST
    Number of datapoints: 10000
    Root location: ./data/
    Split: Test
    StandardTransform
Transform: ToTensor()
```

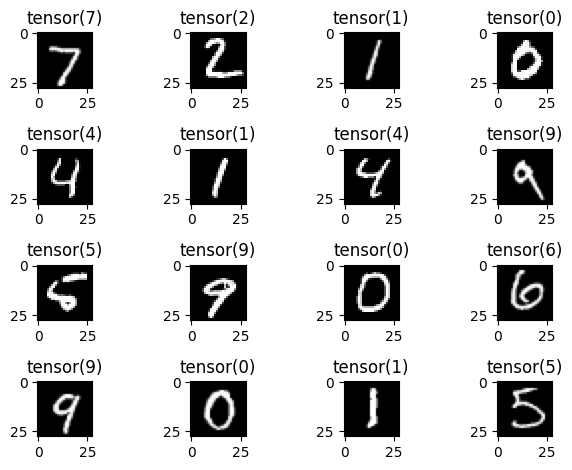

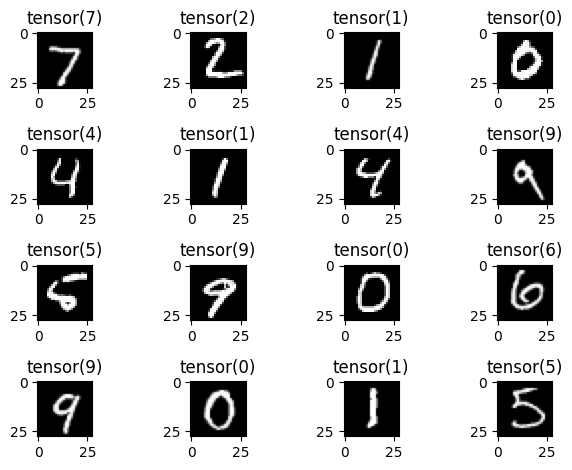
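`transforms.ToTensor()` turns each 8-bit grayscale MNIST image into a float tensor of shape 1 x 28 x 28 with pixel values scaled from [0, 255] to [0, 1]. The NumPy sketch below mimics that conversion on a synthetic image; `to_tensor_like` is a hypothetical stand-in written for illustration, not the torchvision implementation.

```python
import numpy as np


def to_tensor_like(img_uint8):
    """Hypothetical mimic of ToTensor() for a 2-D uint8 grayscale image:
    scale to [0, 1] and add a leading channel axis."""
    arr = np.asarray(img_uint8, dtype=np.float32) / 255.0
    return arr[np.newaxis, :, :]  # (H, W) -> (C=1, H, W)


img = np.zeros((28, 28), dtype=np.uint8)
img[10:18, 12:16] = 255              # a bright vertical stroke
x = to_tensor_like(img)
print(x.shape, x.min(), x.max())     # (1, 28, 28) 0.0 1.0

flat = x.reshape(-1, 28 * 28)        # the same flattening applied before fc1
print(flat.shape)                    # (1, 784)
```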

4. View sample data

```python
examples = iter(test_loader)
example_data, example_target = next(examples)  # first test batch of 100 images

# Show the first 16 images with their true labels as titles
for i in range(16):
    plt.subplot(4, 4, i+1).set_title(example_target[i].item())
    plt.imshow(example_data[i][0], 'gray')
plt.tight_layout()
plt.show()
```
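The indexing `example_data[i][0]` works because each batch from the loader has shape `(batch_size, 1, 28, 28)`. The sketch below illustrates this with a synthetic `TensorDataset` of random images standing in for MNIST (an assumption made so the example runs without downloading data):

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Synthetic stand-in for MNIST: 250 random 1x28x28 images with labels 0-9
images = torch.rand(250, 1, 28, 28)
labels = torch.randint(0, 10, (250,))
loader = DataLoader(TensorDataset(images, labels), batch_size=100, shuffle=False)

example_data, example_target = next(iter(loader))
print(example_data.shape)        # torch.Size([100, 1, 28, 28])
print(example_data[0][0].shape)  # torch.Size([28, 28]), what plt.imshow receives
print(len(loader))               # 3 batches: 100 + 100 + 50 (last batch kept)
```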

5. Build the network

```python
class NeuralNet(nn.Module):
    # Fully connected network: input -> hidden (ReLU) -> output logits
    def __init__(self, input_size, hidden_size, output_size):
        super(NeuralNet, self).__init__()
        self.fc1 = nn.Linear(input_size, hidden_size)
        self.relu = nn.ReLU()
        self.fc2 = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        out = self.fc1(x)
        out = self.relu(out)
        out = self.fc2(out)  # raw scores; CrossEntropyLoss applies softmax internally
        return out


model = NeuralNet(input_size, hidden_size, output_size).to(device)
```
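The same architecture can also be written with `nn.Sequential`. The sketch below is an alternative layout, assumed equivalent in structure to `NeuralNet(784, 500, 10)`; here `nn.Flatten` takes over the manual `images.reshape(-1, 28*28)` done in the training loop, so it accepts image batches directly.

```python
import torch
import torch.nn as nn

seq_model = nn.Sequential(
    nn.Flatten(),          # (N, 1, 28, 28) -> (N, 784)
    nn.Linear(784, 500),   # corresponds to fc1
    nn.ReLU(),
    nn.Linear(500, 10),    # corresponds to fc2: one logit per digit class
)

x = torch.rand(8, 1, 28, 28)   # a dummy batch of 8 images
logits = seq_model(x)
print(logits.shape)            # torch.Size([8, 10])
```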

6. Define the loss function and optimizer

```python
# Cross-entropy loss for 10-class classification
criterion = nn.CrossEntropyLoss()
# Adam optimizer over all model parameters
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)
```

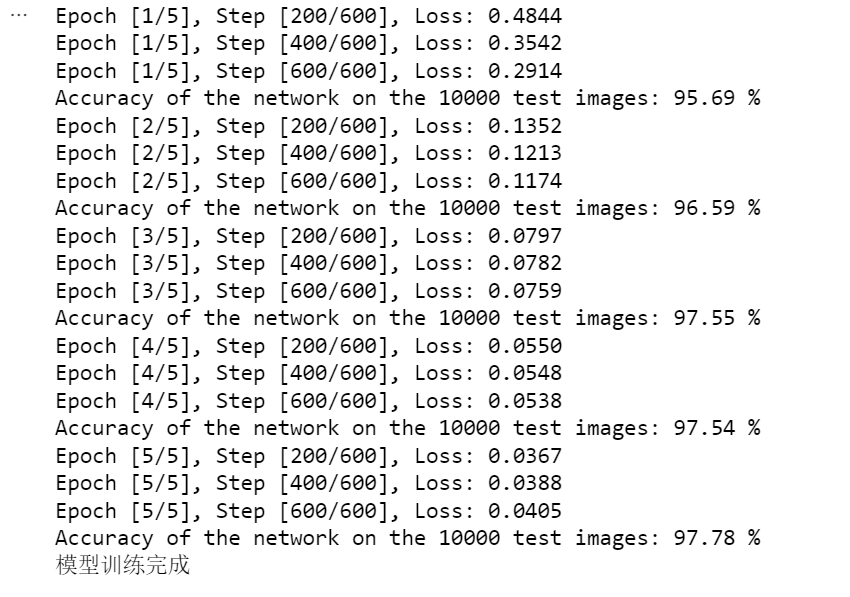

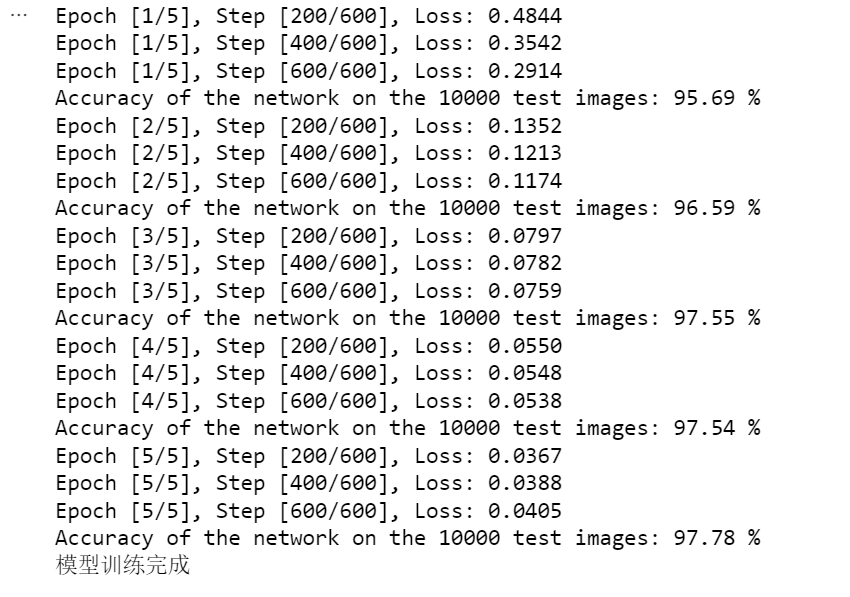
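`nn.CrossEntropyLoss` combines a log-softmax over the logits with the negative log-likelihood of the true class, averaged over the batch, which is why `NeuralNet.forward` returns raw scores without a softmax. A small check on random logits (an illustrative assumption, not MNIST outputs):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)           # raw fc2 outputs for 4 samples
targets = torch.tensor([3, 0, 9, 1])  # true digit labels

loss_builtin = nn.CrossEntropyLoss()(logits, targets)

# Same value computed by hand: mean of -log softmax(logits)[true class]
log_probs = F.log_softmax(logits, dim=1)
loss_manual = -log_probs[torch.arange(4), targets].mean()

print(loss_builtin.item(), loss_manual.item())
```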

7. Train and test the model

| |

| total_step = len(train_loader) |

| |

| LossList = [] |

| AccuryList = [] |

| |

| for epoch in range(num_epochs): |

| totalLoss = 0 |

| for i, (images, labels) in enumerate(train_loader): |

| |

| |

| images = images.reshape(-1, 28*28).to(device) |

| labels = labels.to(device) |

| |

| |

| outputs = model(images) |

| loss = criterion(outputs, labels) |

| totalLoss = totalLoss + loss.item() |

| |

| |

| optimizer.zero_grad() |

| loss.backward() |

| optimizer.step() |

| |

| if (i+1) % 200 == 0: |

| print('Epoch [{}/{}], Step [{}/{}], Loss: {:.4f}' |

| .format(epoch+1, num_epochs, i+1, total_step, totalLoss/(i+1))) |

| |

| LossList.append(totalLoss/(i+1)) |

| |

| |

| |

| with torch.no_grad(): |

| correct = 0 |

| total = 0 |

| for images, labels in test_loader: |

| images = images.reshape(-1, 28*28).to(device) |

| labels = labels.to(device) |

| outputs = model(images) |

| |

| |

| _, predicted = torch.max(outputs.data, 1) |

| |

| |

| |

| total += labels.size(0) |

| correct += (predicted == labels).sum().item() |

| acc = 100.0 * correct / total |

| AccuryList.append(acc) |

| print('Accuracy of the network on the {} test images: {} %'.format(total, acc)) |

| print("模型训练完成") |

| |

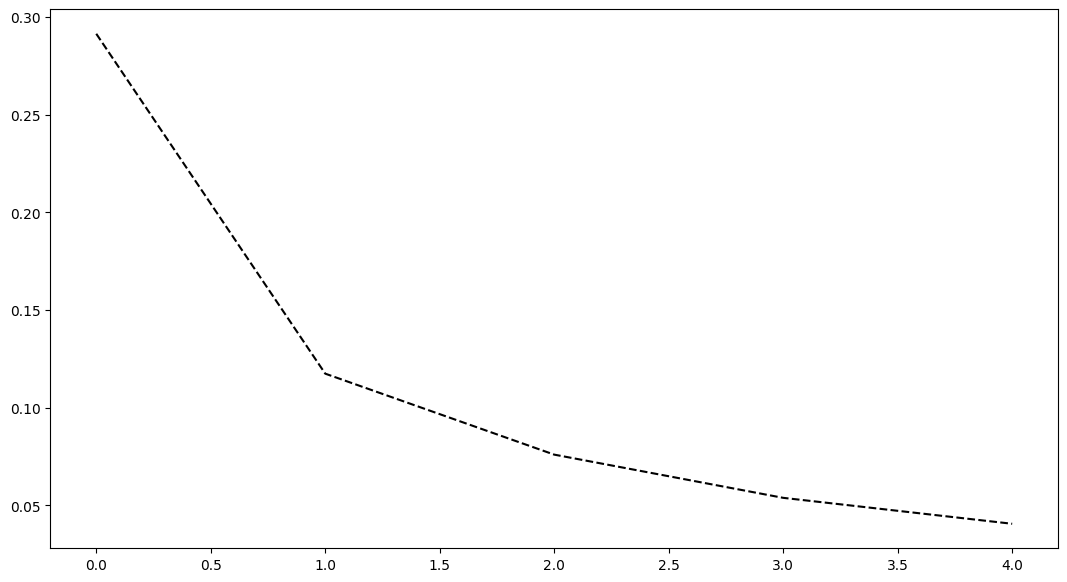

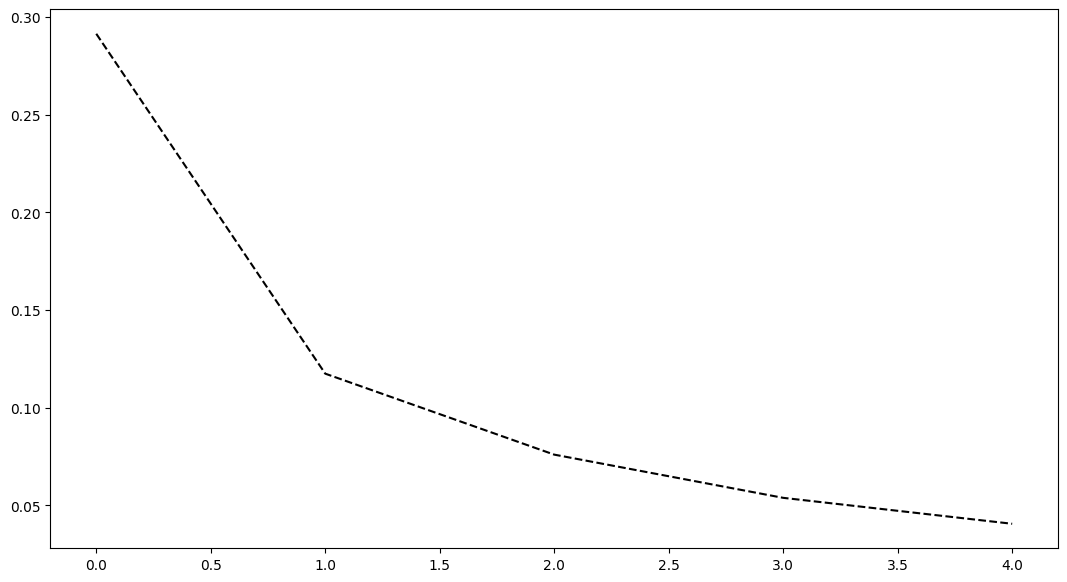

| Epoch [1/5], Step [200/600], Loss: 0.4844 |

| Epoch [1/5], Step [400/600], Loss: 0.3542 |

| Epoch [1/5], Step [600/600], Loss: 0.2914 |

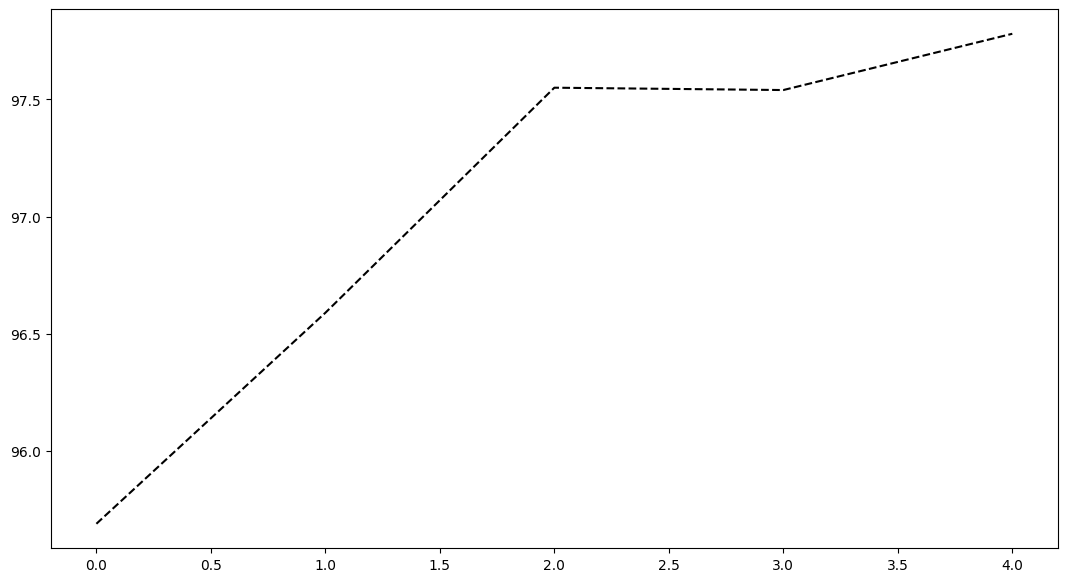

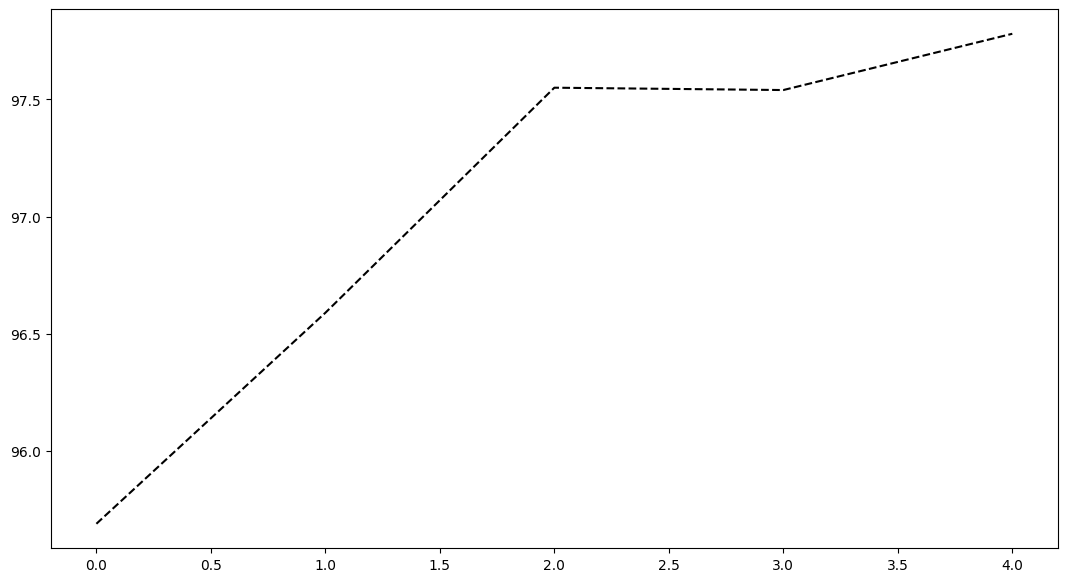

| Accuracy of the network on the 10000 test images: 95.69 % |

| Epoch [2/5], Step [200/600], Loss: 0.1352 |

| Epoch [2/5], Step [400/600], Loss: 0.1213 |

| Epoch [2/5], Step [600/600], Loss: 0.1174 |

| Accuracy of the network on the 10000 test images: 96.59 % |

| Epoch [3/5], Step [200/600], Loss: 0.0797 |

| Epoch [3/5], Step [400/600], Loss: 0.0782 |

| Epoch [3/5], Step [600/600], Loss: 0.0759 |

| Accuracy of the network on the 10000 test images: 97.55 % |

| Epoch [4/5], Step [200/600], Loss: 0.0550 |

| Epoch [4/5], Step [400/600], Loss: 0.0548 |

| Epoch [4/5], Step [600/600], Loss: 0.0538 |

| Accuracy of the network on the 10000 test images: 97.54 % |

| Epoch [5/5], Step [200/600], Loss: 0.0367 |

| Epoch [5/5], Step [400/600], Loss: 0.0388 |

| Epoch [5/5], Step [600/600], Loss: 0.0405 |

| Accuracy of the network on the 10000 test images: 97.78 % |

| 模型训练完成 |
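The test-set evaluation inside the epoch loop can be factored into a reusable function, which makes it easier to compare the different depths, widths, and learning rates asked for in the report. The sketch below assumes a hypothetical helper `evaluate` (not in the original code) and exercises it on random data with a single-layer stand-in model.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model, loader, device):
    """Hypothetical helper: accuracy (%) of a flattened-input MLP on `loader`."""
    model.eval()                     # no effect on this model, but a good habit
    correct, total = 0, 0
    with torch.no_grad():            # no computation graph needed for evaluation
        for images, labels in loader:
            images = images.reshape(-1, 28 * 28).to(device)
            labels = labels.to(device)
            predicted = model(images).argmax(dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    model.train()                    # switch back to training mode before returning
    return 100.0 * correct / total


# Usage on random data with an untrained single-layer model (illustration only)
device = torch.device("cpu")
torch.manual_seed(0)
model = nn.Sequential(nn.Linear(784, 10))
data = TensorDataset(torch.rand(50, 1, 28, 28), torch.randint(0, 10, (50,)))
acc = evaluate(model, DataLoader(data, batch_size=20), device)
print(f"{acc:.2f} %")
```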

8. Plot the loss and accuracy curves

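```python
# Mean training loss per epoch
fig, axes = plt.subplots(nrows=1, ncols=1, figsize=(13, 7))
axes.plot(LossList, 'k--')
axes.set_xlabel('epoch (0-based)')
axes.set_ylabel('mean training loss')

# Test accuracy per epoch
fig, axes = plt.subplots(nrows=1, ncols=1, figsize=(13, 7))
axes.plot(AccuryList, 'k--')
axes.set_xlabel('epoch (0-based)')
axes.set_ylabel('test accuracy (%)')
```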

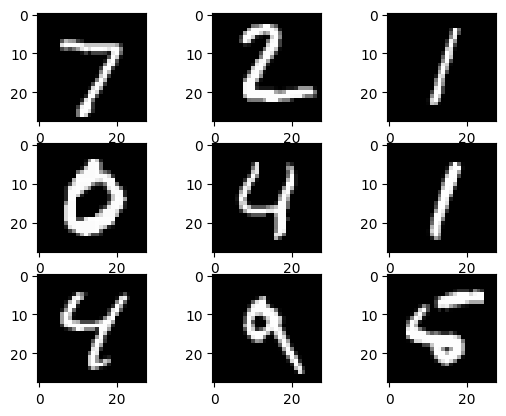

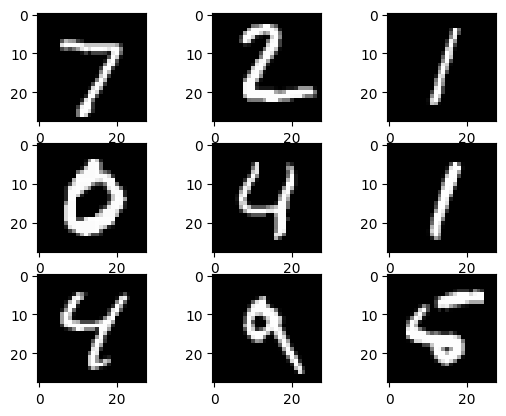
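Since the per-epoch values are also printed in the training log, the two curves can be reproduced side by side without rerunning training. This sketch copies the step-600 running losses (which equal the per-epoch means stored in `LossList`) and the test accuracies from the log, and assumes a non-interactive Matplotlib backend that saves to a hypothetical file `mnist_curves.png`. Note the small dip in accuracy at epoch 4.

```python
import matplotlib
matplotlib.use("Agg")  # render without a display
import matplotlib.pyplot as plt

# Per-epoch values copied from the training log
epochs = [1, 2, 3, 4, 5]
epoch_loss = [0.2914, 0.1174, 0.0759, 0.0538, 0.0405]  # running mean at step 600/600
test_acc = [95.69, 96.59, 97.55, 97.54, 97.78]

fig, (ax_loss, ax_acc) = plt.subplots(1, 2, figsize=(12, 4))
ax_loss.plot(epochs, epoch_loss, "k--o")
ax_loss.set(xlabel="epoch", ylabel="mean training loss", title="Loss")
ax_acc.plot(epochs, test_acc, "k--o")
ax_acc.set(xlabel="epoch", ylabel="test accuracy (%)", title="Accuracy")
ax_acc.set_xticks(epochs)
fig.tight_layout()
fig.savefig("mnist_curves.png")
```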

9. Verify the model on real examples

```python
examples = iter(test_loader)
example_data, example_targets = next(examples)

# Display the first 9 test images in a 3x3 grid
for i in range(9):
    plt.subplot(3, 3, i+1)
    plt.imshow(example_data[i][0], cmap='gray')
plt.show()

images = example_data.reshape(-1, 28*28).to(device)
labels = example_targets.to(device)

# Predict without tracking gradients
with torch.no_grad():
    outputs = model(images)
```

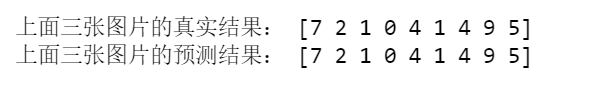

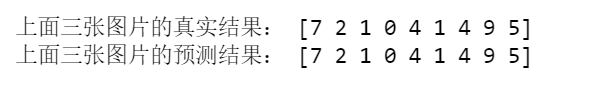

```python
print("True labels of the 9 images above:", example_targets[0:9].detach().numpy())
print("Predicted labels of the 9 images above:", np.argmax(outputs[0:9].cpu().detach().numpy(), axis=1))
```

```text
True labels of the 9 images above: [7 2 1 0 4 1 4 9 5]
Predicted labels of the 9 images above: [7 2 1 0 4 1 4 9 5]
```
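The prediction step above takes the argmax of the raw logits. If a confidence score is also wanted, a softmax can be applied first; since softmax is monotonic, the argmax does not change. The sketch below uses random logits as a stand-in for `outputs[0:9]` (an assumption so it runs on its own):

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
outputs = torch.randn(9, 10)  # stand-in for model(images)[0:9]

probs = F.softmax(outputs, dim=1)         # each row sums to 1
confidence, predicted = probs.max(dim=1)  # same class as argmax on raw logits
top3 = probs.topk(3, dim=1)               # three most likely digits per image
print(predicted.tolist())
print([round(c, 3) for c in confidence.tolist()])
print(top3.indices[0].tolist())
```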
