Running Python code on Spark in standalone mode

1. Official documentation

http://spark.apache.org/docs/latest/submitting-applications.html

2. Bundling Your Application’s Dependencies

If your code depends on other projects, you will need to package them alongside your application in order to distribute the code to a Spark cluster. To do this, create an assembly jar (or “uber” jar) containing your code and its dependencies.

For Python, you can use the --py-files argument of spark-submit to add .py, .zip or .egg files to be distributed with your application. If you depend on multiple Python files we recommend packaging them into a .zip or .egg.

For example:

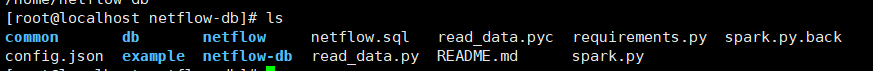

zip -qr netflow.zip netflow-db/
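The same archive can also be built from Python, which may be handy when packaging is part of a script. This is only a minimal sketch using the standard library: the helper name `zip_directory` is hypothetical, and it assumes the goal is the layout that `zip -r` produces, with the top-level folder kept inside the archive.

```python
import os
import zipfile


def zip_directory(src_dir, zip_path):
    """Pack src_dir into zip_path, keeping the top-level folder name (like `zip -r`)."""
    src_dir = os.path.abspath(src_dir)
    parent = os.path.dirname(src_dir)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for root, _dirs, files in os.walk(src_dir):
            for name in sorted(files):
                full_path = os.path.join(root, name)
                # Store paths relative to the parent so entries look like "netflow-db/...".
                archive.write(full_path, os.path.relpath(full_path, parent))
    return zip_path
```

Calling `zip_directory("netflow-db", "netflow.zip")` from the directory that contains `netflow-db/` should give an archive equivalent to the one from the zip command above.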

3. Launching Applications with spark-submit

    ./bin/spark-submit \
      --class <main-class> \
      --master <master-url> \
      --deploy-mode <deploy-mode> \
      --conf <key>=<value> \
      ... # other options
      <application-jar> \
      [application-arguments]

Some of the commonly used options are:

--class: The entry point for your application (e.g. org.apache.spark.examples.SparkPi)

--master: The master URL for the cluster (e.g. spark://23.195.26.187:7077)

--deploy-mode: Whether to deploy your driver on the worker nodes (cluster) or locally as an external client (client) (default: client)

--conf: Arbitrary Spark configuration property in key=value format. For values that contain spaces, wrap “key=value” in quotes.

application-jar: Path to a bundled jar including your application and all dependencies. The URL must be globally visible inside of your cluster, for instance, an hdfs:// path or a file:// path that is present on all nodes.

application-arguments: Arguments passed to the main method of your main class, if any

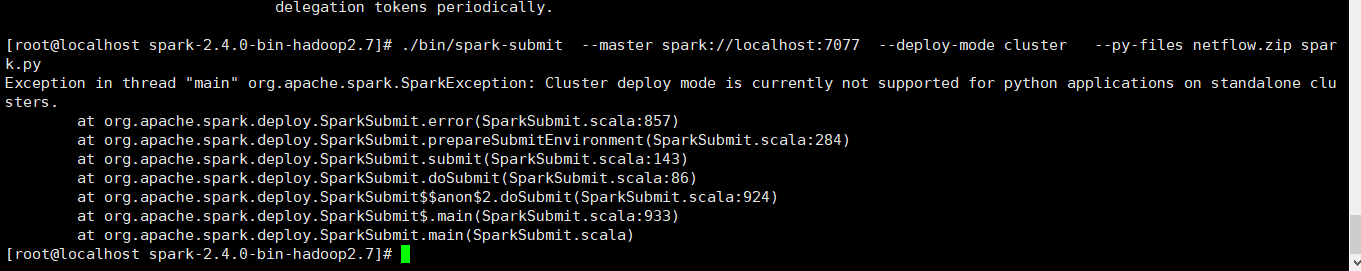

    ./bin/spark-submit \
      --master spark://localhost:7077 \
      --deploy-mode cluster \
      --py-files netflow.zip \
      spark.py

For Python applications, simply pass a .py file in the place of <application-jar> instead of a JAR, and add Python .zip, .egg or .py files to the search path with --py-files.
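To make the argument order concrete, here is a small sketch that assembles a spark-submit command line for a Python application. The function name `build_submit_command` and its parameters are hypothetical; only the flags `--master`, `--deploy-mode` and `--py-files` are real spark-submit options. The .py file takes the place of <application-jar>, so it goes after all options and before any application arguments.

```python
import shlex


def build_submit_command(app_py, master, py_files=(), deploy_mode=None, app_args=()):
    """Return the spark-submit argument list for a Python application."""
    cmd = ["./bin/spark-submit", "--master", master]
    if deploy_mode is not None:
        cmd += ["--deploy-mode", deploy_mode]
    if py_files:
        # --py-files takes a single comma-separated list.
        cmd += ["--py-files", ",".join(py_files)]
    # The .py file takes the place of <application-jar>; its arguments follow it.
    cmd.append(app_py)
    cmd += [str(arg) for arg in app_args]
    return cmd


command = build_submit_command(
    "spark.py",
    master="spark://localhost:7077",
    py_files=["netflow.zip"],
)
# Print a shell-safe version of the command for copy and paste.
print(" ".join(shlex.quote(part) for part in command))
```

Returning a list rather than one string keeps the result usable with `subprocess.run` without extra quoting.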

    # Run application locally on 8 cores
    ./bin/spark-submit \
      --class org.apache.spark.examples.SparkPi \
      --master local[8] \
      /path/to/examples.jar \
      100

    # Run on a Spark standalone cluster in client deploy mode
    ./bin/spark-submit \
      --class org.apache.spark.examples.SparkPi \
      --master spark://207.184.161.138:7077 \
      --executor-memory 20G \
      --total-executor-cores 100 \
      /path/to/examples.jar \
      1000

    # Run on a Spark standalone cluster in cluster deploy mode with supervise
    ./bin/spark-submit \
      --class org.apache.spark.examples.SparkPi \
      --master spark://207.184.161.138:7077 \
      --deploy-mode cluster \
      --supervise \
      --executor-memory 20G \
      --total-executor-cores 100 \
      /path/to/examples.jar \
      1000

    # Run on a YARN cluster in cluster deploy mode
    # (use --deploy-mode client for client mode)
    export HADOOP_CONF_DIR=XXX
    ./bin/spark-submit \
      --class org.apache.spark.examples.SparkPi \
      --master yarn \
      --deploy-mode cluster \
      --executor-memory 20G \
      --num-executors 50 \
      /path/to/examples.jar \
      1000

    # Run a Python application on a Spark standalone cluster
    ./bin/spark-submit \
      --master spark://207.184.161.138:7077 \
      examples/src/main/python/pi.py \
      1000

    # Run on a Mesos cluster in cluster deploy mode with supervise
    ./bin/spark-submit \
      --class org.apache.spark.examples.SparkPi \
      --master mesos://207.184.161.138:7077 \
      --deploy-mode cluster \
      --supervise \
      --executor-memory 20G \
      --total-executor-cores 100 \
      http://path/to/examples.jar \
      1000

    # Run on a Kubernetes cluster in cluster deploy mode
    ./bin/spark-submit \
      --class org.apache.spark.examples.SparkPi \
      --master k8s://xx.yy.zz.ww:443 \
      --deploy-mode cluster \
      --executor-memory 20G \
      --num-executors 50 \
      http://path/to/examples.jar \
      1000

4. Result

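It fails: "Cluster deploy mode is currently not supported for python applications on standalone clusters." On a standalone cluster, a Python application has to be submitted in client deploy mode, which is the default, so the fix is to drop --deploy-mode cluster.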
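Since the failure only shows up at submit time, a tiny pre-flight check can catch this combination earlier. The sketch below is hypothetical (the function `check_submit` is not part of Spark). It encodes just the one rule from the error message, assuming a standalone master is recognized by the spark:// prefix and a Python application by its .py extension.

```python
def check_submit(master, deploy_mode, application):
    """Return an error message for an unsupported combination, or None if it looks fine."""
    is_standalone = master.startswith("spark://")
    is_python = application.endswith(".py")
    if is_standalone and is_python and deploy_mode == "cluster":
        return ("Cluster deploy mode is currently not supported for "
                "python applications on standalone clusters.")
    return None
```

For example, `check_submit("spark://localhost:7077", "cluster", "spark.py")` returns the message, while the same call with `"client"` returns `None`.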

There is nothing you can't learn by writing it ten thousand times; if there is, write it another ten thousand times.