Cloud Native Journey - 4) Infrastructure as Code: Creating Kubernetes with Terraform

Preface

In the previous article we gave a quick introduction to Terraform. This article shows how to use Terraform to create Kubernetes resources on GCP and AWS.

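The importance of Kubernetes in the cloud-native era goes without saying: it is effectively the operating system of this era. Once a cluster is in place, you can run the vast majority of applications on it, including databases. Many teams even run big-data workloads such as Spark and Flink on Kubernetes.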

- GCP Kubernetes = GKE

- AWS Kubernetes = EKS

- Azure Kubernetes = AKS

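This article covers the Terraform code for the first two. With today's official modules this is far easier than it used to be, and even a newcomer can get the resources up quickly. A deeper understanding, of course, still takes time and effort.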

Keywords: IaC, Infrastructure as Code, Terraform, creating GKE with Terraform, creating EKS with Terraform

Environment:

Creating GKE with Terraform

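First, create a GCS bucket to hold the Terraform state, and enable object versioning on it:

```shell
# Multi-region LOCATION values are `asia`, `eu` or `us` (a single region such as us-central1 also works)
gsutil mb -l $LOCATION gs://$BUCKET_NAME
gsutil versioning set on gs://$BUCKET_NAME
```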

Prepare the following .tf files. First, the GCS backend, which must point at the bucket created above:

```hcl
terraform {
  backend "gcs" {
    bucket = "sre-dev-terraform-test"
    prefix = "demo/state"
  }
}
```

providers.tf

```hcl
terraform {
  required_version = ">= 1.2.9"

  required_providers {
    google = {
      source  = "hashicorp/google"
      version = "~> 4.0"
    }
    google-beta = {
      source  = "hashicorp/google-beta"
      version = "~> 4.0"
    }
  }
}

provider "google" {
  project = local.project.project_id
  region  = local.project.region
}

provider "google-beta" {
  project = local.project.project_id
  region  = local.project.region
}
```

Using the Terraform Google module makes this much easier. The code is as follows:

gke-cluster.tf

```hcl
data "google_compute_zones" "available" {
  region = "us-central1"
  status = "UP"
}

resource "google_compute_network" "default" {
  project                 = local.project.project_id
  name                    = local.project.network_name
  auto_create_subnetworks = false
  routing_mode            = "GLOBAL"
}

resource "google_compute_subnetwork" "wade-gke" {
  project       = local.project.project_id
  network       = google_compute_network.default.name
  name          = local.wade_cluster.subnet_name
  ip_cidr_range = local.wade_cluster.subnet_range
  region        = local.wade_cluster.region

  secondary_ip_range {
    range_name    = format("%s-secondary1", local.wade_cluster.cluster_name)
    ip_cidr_range = local.wade_cluster.secondary_ip_range_pods
  }

  secondary_ip_range {
    range_name    = format("%s-secondary2", local.wade_cluster.cluster_name)
    ip_cidr_range = local.wade_cluster.secondary_ip_range_services
  }

  private_ip_google_access = true
}

resource "google_service_account" "sa-wade-test" {
  account_id   = "sa-wade-test"
  display_name = "sa-wade-test"
}

module "wade-gke" {
  source  = "terraform-google-modules/kubernetes-engine/google//modules/beta-private-cluster"
  version = "23.1.0"

  project_id                      = local.project.project_id
  name                            = local.wade_cluster.cluster_name
  kubernetes_version              = local.wade_cluster.cluster_version
  region                          = local.wade_cluster.region
  network                         = google_compute_network.default.name
  subnetwork                      = google_compute_subnetwork.wade-gke.name
  master_ipv4_cidr_block          = "10.1.0.0/28"
  ip_range_pods                   = google_compute_subnetwork.wade-gke.secondary_ip_range[0].range_name
  ip_range_services               = google_compute_subnetwork.wade-gke.secondary_ip_range[1].range_name
  service_account                 = google_service_account.sa-wade-test.email
  master_authorized_networks      = local.wade_cluster.master_authorized_networks
  master_global_access_enabled    = false
  istio                           = false
  issue_client_certificate        = false
  enable_private_endpoint         = false
  enable_private_nodes            = true
  remove_default_node_pool        = true
  enable_shielded_nodes           = false
  identity_namespace              = "enabled"
  node_metadata                   = "GKE_METADATA"
  horizontal_pod_autoscaling      = true
  enable_vertical_pod_autoscaling = false

  node_pools              = local.wade_cluster.node_pools
  node_pools_oauth_scopes = local.wade_cluster.oauth_scopes
  node_pools_labels       = local.wade_cluster.node_pools_labels
  node_pools_metadata     = local.wade_cluster.node_pools_metadata
  node_pools_taints       = local.wade_cluster.node_pools_taints
  node_pools_tags         = local.wade_cluster.node_pools_tags
}
```

Variables: locals.tf

Replace master_authorized_networks with your own allowlist. Only IPs in the allowlist can reach the cluster API endpoint. For security, do not use 0.0.0.0/0.

```hcl
locals {
  # project details
  project = {
    project_id   = "sre-eng-cn-dev"
    region       = "us-central1"
    network_name = "wade-test-network"
  }

  # cluster details
  wade_cluster = {
    cluster_name                = "wade-gke"
    cluster_version             = "1.22.12-gke.500"
    subnet_name                 = "wade-gke"
    subnet_range                = "10.254.71.0/24"
    secondary_ip_range_pods     = "172.20.72.0/21"
    secondary_ip_range_services = "10.127.8.0/24"
    region                      = "us-central1"

    node_pools = [
      {
        name               = "app-pool"
        machine_type       = "n1-standard-2"
        node_locations     = join(",", slice(data.google_compute_zones.available.names, 0, 3))
        initial_node_count = 1
        min_count          = 1
        max_count          = 10
        max_pods_per_node  = 64
        disk_size_gb       = 100
        disk_type          = "pd-standard"
        image_type         = "COS"
        auto_repair        = true
        auto_upgrade       = false
        preemptible        = false
        max_surge          = 1
        max_unavailable    = 0
      }
    ]

    node_pools_labels = {
      all = {}
    }

    node_pools_tags = {
      all = ["k8s-nodes"]
    }

    node_pools_metadata = {
      all = {
        disable-legacy-endpoints = "true"
      }
    }

    node_pools_taints = {
      all = []
    }

    oauth_scopes = {
      all = [
        "https://www.googleapis.com/auth/monitoring",
        "https://www.googleapis.com/auth/compute",
        "https://www.googleapis.com/auth/devstorage.full_control",
        "https://www.googleapis.com/auth/logging.write",
        "https://www.googleapis.com/auth/service.management",
        "https://www.googleapis.com/auth/servicecontrol",
      ]
    }

    master_authorized_networks = [
      {
        display_name = "Whitelist 1"
        cidr_block   = "4.14.xxx.xx/32"
      },
      {
        display_name = "Whitelist 2"
        cidr_block   = "64.124.xxx.xx/32"
      },
    ]
  }
}
```
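You may prefer Terraform to reject an open allowlist outright rather than rely on code review. One option is to move the networks into an input variable with a `validation` block. This is a minimal sketch that assumes a root-module variable named `master_authorized_networks` (hypothetical; the original keeps this list in `local.wade_cluster`):

```hcl
# Hypothetical input variable replacing local.wade_cluster.master_authorized_networks
variable "master_authorized_networks" {
  description = "CIDR blocks allowed to reach the GKE control plane endpoint"
  type = list(object({
    display_name = string
    cidr_block   = string
  }))

  validation {
    # Every entry must be a valid IPv4 CIDR and must not open the endpoint to the whole internet
    condition = alltrue([
      for n in var.master_authorized_networks :
      can(cidrnetmask(n.cidr_block)) && n.cidr_block != "0.0.0.0/0"
    ])
    error_message = "Each cidr_block must be a valid IPv4 CIDR and must not be 0.0.0.0/0."
  }
}
```

The module call would then use `master_authorized_networks = var.master_authorized_networks`, with the real values supplied in a `terraform.tfvars` file. Placeholders such as `4.14.xxx.xx/32` fail this check until you replace them with real addresses.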

```hcl
output "cluster_id" {
  description = "GKE cluster ID"
  value       = module.wade-gke.cluster_id
}

output "cluster_endpoint" {
  description = "Endpoint for GKE control plane"
  value       = module.wade-gke.endpoint
  sensitive   = true
}

output "cluster_name" {
  description = "Google Kubernetes Cluster Name"
  value       = module.wade-gke.name
}

output "region" {
  description = "GKE region"
  value       = module.wade-gke.region
}

output "project_id" {
  description = "GCP Project ID"
  value       = local.project.project_id
}
```

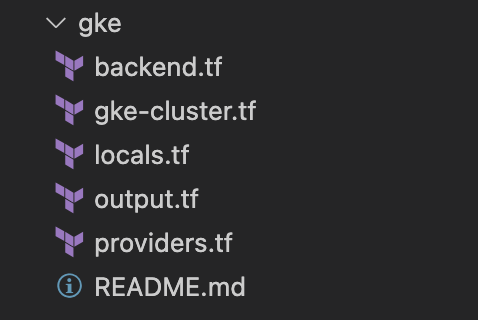

The tf file structure is as follows:

Deployment

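Make sure your GCP account is logged in and has sufficient permissions on the GCP project. The Terraform Google provider and the gcs backend authenticate with Application Default Credentials rather than the credentials stored by `gcloud auth login`. You therefore also need to run `gcloud auth application-default login`, or set `GOOGLE_APPLICATION_CREDENTIALS`.

```shell
gcloud auth login
gcloud auth list
# Credentials used by Terraform (provider and gcs backend)
gcloud auth application-default login
```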

```shell
terraform init
terraform plan
terraform apply
```

Configure access to the GKE cluster

```shell
# Adding the cluster to your context
gcloud container clusters get-credentials $(terraform output -raw cluster_name) \
  --region $(terraform output -raw region) \
  --project $(terraform output -raw project_id)
```
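Instead of assembling the command from three `terraform output` calls, a convenience output can build it for you. This is a sketch that assumes it is added next to the outputs shown earlier. The output name `kubeconfig_command` is hypothetical:

```hcl
# Hypothetical convenience output: the get-credentials command for this cluster
output "kubeconfig_command" {
  description = "Command that adds the GKE cluster to the local kubeconfig"
  value = format(
    "gcloud container clusters get-credentials %s --region %s --project %s",
    module.wade-gke.name,
    module.wade-gke.region,
    local.project.project_id
  )
}
```

After `terraform apply`, running `eval "$(terraform output -raw kubeconfig_command)"` executes the same command as the one above.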

Usage

Creating EKS with Terraform

```hcl
terraform {
  backend "s3" {
    bucket = "sre-dev-terraform"
    key    = "test/eks.tfstate"
    region = "cn-north-1"
  }

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.25.0"
    }
  }
}

provider "aws" {
  region = local.region
}

# https://github.com/terraform-aws-modules/terraform-aws-eks/issues/2009
provider "kubernetes" {
  host                   = module.wade-eks.cluster_endpoint
  cluster_ca_certificate = base64decode(module.wade-eks.cluster_certificate_authority_data)

  exec {
    api_version = "client.authentication.k8s.io/v1beta1"
    command     = "aws"
    # This requires the awscli to be installed locally where Terraform is executed
    args = ["eks", "get-token", "--cluster-name", module.wade-eks.cluster_id]
  }
}
```

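Similarly, we use the Terraform AWS module. One small hiccup: during creation I got an error saying the zone cn-north-1d did not have enough capacity, so I excluded that zone in the availability zones data source.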

```hcl
data "aws_availability_zones" "available" {
  # Cannot create cluster because cn-north-1d, the targeted availability zone,
  # does not currently have sufficient capacity to support the cluster.
  exclude_names = ["cn-north-1d"]
}

module "wade-eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "18.27.1"

  cluster_name    = local.cluster_name
  cluster_version = local.cluster_version

  cluster_endpoint_private_access = true
  cluster_endpoint_public_access  = true
  # api server authorized network list
  cluster_endpoint_public_access_cidrs = local.master_authorized_networks

  cluster_addons = {
    coredns = {
      resolve_conflicts = "OVERWRITE"
    }
    kube-proxy = {}
    vpc-cni = {
      resolve_conflicts = "OVERWRITE"
    }
  }

  vpc_id     = module.vpc.vpc_id
  subnet_ids = module.vpc.private_subnets

  # Extend cluster security group rules
  cluster_security_group_additional_rules = local.cluster_security_group_additional_rules

  eks_managed_node_group_defaults = {
    ami_type     = local.node_group_default.ami_type
    min_size     = local.node_group_default.min_size
    max_size     = local.node_group_default.max_size
    desired_size = local.node_group_default.desired_size
  }

  eks_managed_node_groups = {
    # dmz = {
    #   name = "dmz-pool"
    # }
    app = {
      name           = "app-pool"
      instance_types = local.app_group.instance_types
      # Use the default launch template provided by EKS, so that disk_size takes effect
      create_launch_template = false
      launch_template_name   = ""
      disk_size              = local.app_group.disk_size

      create_security_group          = true
      security_group_name            = "app-node-group-sg"
      security_group_use_name_prefix = false
      security_group_description     = "EKS managed app node group security group"
      security_group_rules           = local.app_group.security_group_rules

      update_config = {
        max_unavailable_percentage = 50
      }
    }
  }

  # aws-auth configmap
  # create_aws_auth_configmap = true
  manage_aws_auth_configmap = true

  aws_auth_roles = [
    {
      rolearn  = "arn:aws-cn:iam::9935108xxxxx:role/CN-SRE" # replace me
      username = "sre"
      groups   = ["system:masters"]
    },
  ]

  aws_auth_users = [
    {
      userarn  = "arn:aws-cn:iam::9935108xxxxx:user/wadexu" # replace me
      username = "wadexu"
      groups   = ["system:masters"]
    },
  ]

  tags = {
    Environment = "dev"
    Terraform   = "true"
  }

  # aws china only because https://github.com/terraform-aws-modules/terraform-aws-eks/pull/1905
  cluster_iam_role_dns_suffix = "amazonaws.com"
}
```
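Hard-coding the excluded zone works, but a zone's capacity situation can change over time. One alternative is to make the exclusion list an input. This sketch uses a hypothetical variable `excluded_azs` and would replace the `aws_availability_zones` data source above:

```hcl
# Hypothetical variable: zones to skip, e.g. because of insufficient EKS capacity
variable "excluded_azs" {
  description = "Availability zones to exclude when placing the VPC subnets"
  type        = list(string)
  default     = ["cn-north-1d"]
}

data "aws_availability_zones" "available" {
  # Only consider zones currently reported as available
  state         = "available"
  exclude_names = var.excluded_azs
}
```

Because vpc.tf takes the first two names from this data source, changing `excluded_azs` changes which zones the subnets land in. Review the plan carefully before applying such a change to an existing cluster.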

locals.tf: for security, it is best to restrict access to the cluster API server endpoint with an allowlist. Otherwise 0.0.0.0/0 means the endpoint is fully open.

```hcl
locals {
  cluster_name    = "test-eks-2022"
  cluster_version = "1.22"
  region          = "cn-north-1"

  vpc = {
    cidr            = "10.0.0.0/16"
    private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
    public_subnets  = ["10.0.4.0/24", "10.0.5.0/24"]
  }

  master_authorized_networks = [
    "4.14.xxx.xx/32",   # allow office 1
    "64.124.xxx.xx/32", # allow office 2
    "0.0.0.0/0"         # allow all access to the API server; remove this entry to enforce the allowlist above
  ]

  # Extend cluster security group rules example
  cluster_security_group_additional_rules = {
    egress_nodes_ephemeral_ports_tcp = {
      description                = "To node 1025-65535"
      protocol                   = "tcp"
      from_port                  = 1025
      to_port                    = 65535
      type                       = "egress"
      source_node_security_group = true
    }
  }

  node_group_default = {
    ami_type     = "AL2_x86_64"
    min_size     = 1
    max_size     = 5
    desired_size = 1
  }

  dmz_group = {
  }

  app_group = {
    instance_types = ["t3.small"]
    disk_size      = 50

    # example rules added for app node group
    security_group_rules = {
      egress_1 = {
        description = "Hello CloudFlare"
        protocol    = "udp"
        from_port   = 53
        to_port     = 53
        type        = "egress"
        cidr_blocks = ["1.1.1.1/32"]
      }
    }
  }
}
```

vpc.tf

```hcl
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "3.14.2"

  name = "wade-test-vpc"
  cidr = local.vpc.cidr
  azs  = slice(data.aws_availability_zones.available.names, 0, 2)

  private_subnets = local.vpc.private_subnets
  public_subnets  = local.vpc.public_subnets

  enable_nat_gateway   = true
  single_nat_gateway   = true
  enable_dns_hostnames = true

  public_subnet_tags = {
    "kubernetes.io/cluster/${local.cluster_name}" = "shared"
    "kubernetes.io/role/elb"                      = 1
  }

  private_subnet_tags = {
    "kubernetes.io/cluster/${local.cluster_name}" = "shared"
    "kubernetes.io/role/internal-elb"             = 1
  }
}
```

output.tf

```hcl
output "cluster_id" {
  description = "EKS cluster ID"
  value       = module.wade-eks.cluster_id
}

output "cluster_endpoint" {
  description = "Endpoint for EKS control plane"
  value       = module.wade-eks.cluster_endpoint
}

output "region" {
  description = "EKS region"
  value       = local.region
}

output "cluster_name" {
  description = "AWS Kubernetes Cluster Name"
  value       = local.cluster_name
}
```

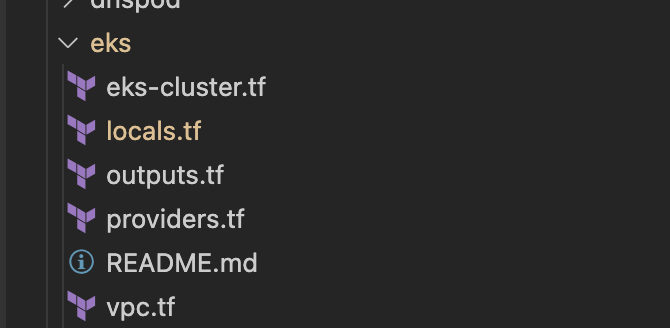

The file structure is as follows:

### This article was first published on cnblogs (博客园): https://www.cnblogs.com/wade-xu/p/16839468.html

Deployment

Configure the AWS access key and secret

Option 1: Export the AWS access key ID and secret access key as environment variables (`AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`)

Option 2: Add a profile to your AWS credentials file

```
aws configure
# or edit the credentials file directly
vim ~/.aws/credentials

# ~/.aws/credentials
[default]
aws_access_key_id=xxx
aws_secret_access_key=xxx
```
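If you use Option 2 with a named profile other than `[default]`, you can point the AWS provider at it explicitly. This is a sketch with a hypothetical profile name `sre-dev`. It would replace the `provider "aws"` block shown earlier:

```hcl
provider "aws" {
  region = local.region
  # Hypothetical profile name; it must match a [section] header in ~/.aws/credentials
  profile = "sre-dev"
}
```

Two other places resolve credentials independently: the S3 backend, which has its own `profile` setting, and the `aws eks get-token` call in the `kubernetes` provider. For that reason, exporting `AWS_PROFILE=sre-dev` in the shell may be the simpler way to keep all three consistent.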

You can verify which credentials are currently in use with the following command:

```shell
aws sts get-caller-identity
```

Deploy the tf resources

```shell
terraform init
terraform plan
terraform apply
```

Once it succeeds, you get output like the following:

Configure access to the EKS cluster

```shell
# Adding the cluster to your context
aws eks --region $(terraform output -raw region) update-kubeconfig \
  --name $(terraform output -raw cluster_name)
```

Usage

As above, you need to install kubectl.

Example commands:

```shell
kubectl cluster-info
kubectl get nodes
```
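Besides kubectl, the `kubernetes` provider configured in the EKS provider block can also manage objects inside the cluster. This is a minimal sketch that creates a namespace. The name `demo` and the label are hypothetical:

```hcl
# Namespace created through the kubernetes provider, which authenticates via `aws eks get-token`
resource "kubernetes_namespace" "demo" {
  metadata {
    name = "demo"
    labels = {
      managed-by = "terraform"
    }
  }
}
```

After `terraform apply`, `kubectl get namespace demo` should list it. The provider documentation advises against creating a cluster and its in-cluster resources in the same configuration. For anything beyond small examples like this, keep workloads in a separate Terraform root module.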

Thanks for reading. If you found this article helpful, feel free to tip or recommend it. Your encouragement is my motivation to keep writing.
