《利用python进行数据分析》读书笔记--第七章 数据规整化:清理、转换、合并、重塑(一)

http://www.cnblogs.com/batteryhp/p/5023330.html

数据分析和建模的大量编程工作都是在数据准备上的(深表同意):加载、清理、转换以及重塑。pandas和Python标准库提供了一组高级的、灵活的、高效的核心函数和算法,他们能够轻松地将数据规整化为正确的形式。

1、合并数据集

pandas对象中的数据可以通过一些内置的方式进行合并

-

pandas.merge可以根据一个或者多个键值连接起来,就是SQL中的数据库连接工作。

-

pandas.concat可以沿着一条轴将多个对象堆叠在一起

-

实例方法combine_first可以讲重复数据编接在一起 ,用一个对象中的值填充另一个对象中的缺失值(注:译者说就是数据库中的外连接)。

由于太常用,给出一些例子。

数据库风格的DataFrame合并

#-*- encoding: utf-8 -*-

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from pandas import Series,DataFrame

#数据集的合并(merge)或者连接(join)运算是通过一个或者多个键将行链接起来。这是关系型数据库的核心。

df1 = DataFrame({'key':['b','b','a','c','a','a','b'],'data1':range(7)})

df2 = DataFrame({'key':['a','b','d'],'data2':range(3)})

print df1

print df2

#没有指定用哪些列进行合并时,默认用重复的列名进行合并,并且只保留合并列中的交集,其他舍去

#即merge默认的是“内连接”

print pd.merge(df1,df2)

#不过,最好显示指定一下:

print pd.merge(df1,df2,on = 'key')

#如果两个对象列明不同,也可以分别指定,当然,原则是这两列得有相同的值

df3 = DataFrame({'lkey':['b','b','a','c','a','a','b'],'data1':range(7)})

df4 = DataFrame({'rkey':['a','b','d'],'data2':range(3)})

print pd.merge(df3,df4,left_on = 'lkey',right_on = 'rkey')

#如果两列没有相同值,返回一个空DataFrame

print pd.merge(df3,df4,left_on = 'lkey',right_on = 'data2')

#merge选项有inner、left、right、outer几种,分别表示 内、左、右、外连接

print pd.merge(df1,df2,how = 'outer')

#下面看多对多(即两个对象中每个键值对应不同的值)

df1 = DataFrame({'key':list('bbacab'),'data1':range(6)})

df2 = DataFrame({'key':list('ababd'),'data2':range(5)})

#下面是多对多的合并,结果是笛卡尔积也就是针对一个键值,两个对象对应值的所有组合

print pd.merge(df1,df2,on = 'key',how = 'left')

#对多个键进行合并,传入一个由列名组成的列表即可

left = DataFrame({'key1':['foo','foo','bar'],'key2':['one','two','one'],'lval':[1,2,3]})

right = DataFrame({'key1':['foo','foo','bar','bar'],'key2':['one','one','one','two'],'rval':[4,5,6,7]})

#多个键进行合并就是将多个键组合成元组,当作单个键值使用(实际上并不是这么回事)

#注意要“不忘初心”,根据键值是对其他列的值进行合并

print pd.merge(left,right,on = ['key1','key2'],how = 'outer')

#警告:列与列合并时,会把DataFrame的索引丢弃

#下面处理重复列名的问题,这里的重复列名是说,依据一列进行合并时两个对象剩下的列中有的列名字重复

#pandas会自动添加后缀

print pd.merge(left,right,on = 'key1')

#后缀可以通过suffixes选项来指定

print pd.merge(left,right,on = 'key1',suffixes = ('_left','_right'))

>>>

data1 key

0 0 b

1 1 b

2 2 a

3 3 c

4 4 a

5 5 a

6 6 b

data2 key

0 0 a

1 1 b

2 2 d

data1 key data2

0 2 a 0

1 4 a 0

2 5 a 0

3 0 b 1

4 1 b 1

5 6 b 1

data1 key data2

0 2 a 0

1 4 a 0

2 5 a 0

3 0 b 1

4 1 b 1

5 6 b 1

data1 lkey data2 rkey

0 2 a 0 a

1 4 a 0 a

2 5 a 0 a

3 0 b 1 b

4 1 b 1 b

5 6 b 1 b

Empty DataFrame

Columns: array([data1, lkey, data2, rkey], dtype=object)

Index: array([], dtype=int64)

data1 key data2

0 2 a 0

1 4 a 0

2 5 a 0

3 0 b 1

4 1 b 1

5 6 b 1

6 3 c NaN

7 NaN d 2

data1 key data2

0 2 a 0

1 2 a 2

2 4 a 0

3 4 a 2

4 0 b 1

5 0 b 3

6 1 b 1

7 1 b 3

8 5 b 1

9 5 b 3

10 3 c NaN

key1 key2 lval rval

0 bar one 3 6

1 bar two NaN 7

2 foo one 1 4

3 foo one 1 5

4 foo two 2 NaN

key1 key2_x lval key2_y rval

0 bar one 3 one 6

1 bar one 3 two 7

2 foo one 1 one 4

3 foo one 1 one 5

4 foo two 2 one 4

5 foo two 2 one 5

key1 key2_left lval key2_right rval

0 bar one 3 one 6

1 bar one 3 two 7

2 foo one 1 one 4

3 foo one 1 one 5

4 foo two 2 one 4

5 foo two 2 one 5

[Finished in 0.7s]

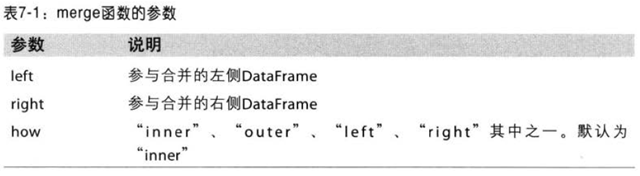

merge的选项有:

索引上的合并

#-*- encoding: utf-8 -*-

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from pandas import Series,DataFrame

#索引上的合并

#DataFrame中连接键有时候在索引中。这时可以传入left_index = True或者right_index = True

left1 = DataFrame({'key':list('abaabc'),'value':range(6)})

right1 = DataFrame({'group_val':[3.5,7],'index':['a','b']})

print right1

#注意上面的right1的索引值和ledt1中的值是同类型的,也就是说相当于对右边的进行转置并且索引跟随改变再进行合并

print pd.merge(left1,right1,left_on = 'key',right_index = True,how = 'inner')

#对于层次化索引,事情就有点复杂了

lefth = DataFrame({'key1':['Ohio','Ohio','Ohio','Nevada','Nevada'],

'key2':[2000,2001,2002,2001,2002],'data':np.arange(5.)})

righth = DataFrame(np.arange(12.).reshape((6,2)),index = [['Nevada','Nevada','Ohio','Ohio','Ohio','Ohio',],

[2001,2000,2000,2000,2001,2002]],columns = ['event1','event2'])

print lefth

print righth

#这种情况下,必须指明用作合并键的多个列(注意对重复索引值的处理)

#注意得到的结果的index是跟左边对象的index一致

print pd.merge(lefth,righth,left_on = ['key1','key2'],right_index = True,how = 'outer')

#同时使用合并双方的索引也没问题

left2 = DataFrame([[1.,2.],[3.,4.],[5.,6.]],index = ['a','c','e'],columns = ['Ohio','Nevada'])

right2 = DataFrame([[7.,8.],[9.,10.],[11.,12.],[13,14]],index = ['b','c','d','e'],columns = ['Missouri','Alabama'])

print left2

print right2

#注意下面的方式,利用index进行合并

print pd.merge(left2,right2,how = 'outer',left_index = True,right_index = True)

#DataFrame有一个join实例方法,它能更方便地实现按索引合并。还可以用作合并多个带有相同或者相似索引的

#DataFrame对象,而不管有没有重叠的列

print left2.join(right2,how = 'outer')

#由于一些历史原因,DataFrame的join方法是在连接键上做左连接。它还支持参数DataFrame的索引跟

#调用者DataFrame的某个列之间的连接(这个方法有点像merge中的left_index这样的参数)

print left1.join(right1,on = 'key') #这个函数现在已经跟书上的不一样了

#最后,对于简单的索引合并,还可以向join传入多个DataFrame

another = DataFrame([[7.,8.],[9.,10.],[11.,12.],[16.,17.]],index = ['a','c','e','f'],columns = ['New York','Oregon'])

print left2.join([right2,another],how = 'outer')

>>>

group_val index

0 3.5 a

1 7.0 b

Empty DataFrame

Columns: array([key, value, group_val, index], dtype=object)

Index: array([], dtype=int64)

data key1 key2

0 0 Ohio 2000

1 1 Ohio 2001

2 2 Ohio 2002

3 3 Nevada 2001

4 4 Nevada 2002

event1 event2

Nevada 2001 0 1

2000 2 3

Ohio 2000 4 5

2000 6 7

2001 8 9

2002 10 11

data key1 key2 event1 event2

4 NaN Nevada 2000 2 3

3 3 Nevada 2001 0 1

4 4 Nevada 2002 NaN NaN

0 0 Ohio 2000 4 5

0 0 Ohio 2000 6 7

1 1 Ohio 2001 8 9

2 2 Ohio 2002 10 11

Ohio Nevada

a 1 2

c 3 4

e 5 6

Missouri Alabama

b 7 8

c 9 10

d 11 12

e 13 14

Ohio Nevada Missouri Alabama

a 1 2 NaN NaN

b NaN NaN 7 8

c 3 4 9 10

d NaN NaN 11 12

e 5 6 13 14

Ohio Nevada Missouri Alabama

a 1 2 NaN NaN

b NaN NaN 7 8

c 3 4 9 10

d NaN NaN 11 12

e 5 6 13 14

key value group_val index

0 a 0 NaN NaN

1 b 1 NaN NaN

2 a 2 NaN NaN

3 a 3 NaN NaN

4 b 4 NaN NaN

5 c 5 NaN NaN

Ohio Nevada Missouri Alabama New York Oregon

a 1 2 NaN NaN 7 8

b NaN NaN 7 8 NaN NaN

c 3 4 9 10 9 10

d NaN NaN 11 12 NaN NaN

e 5 6 13 14 11 12

f NaN NaN NaN NaN 16 17

[Finished in 0.8s]

下面是轴向连接

#-*- encoding: utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt from pandas import Series,DataFrame #另一种合并运算为连接(concatenation),绑定(binding)或者堆叠(stacking)。 #Numpy有一个用于合并原始Numpy数组的concatenation函数: arr = np.arange(12).reshape((3,4)) print arr print np.concatenate([arr,arr],axis = 1)

对于pandas对象,需要考虑:

-

如果各对象其他轴上的索引不同,那些轴应该是并集还是交集?

-

结果对象中的分组需要各不相同吗?

-

用于连接的轴重要吗?

下面介绍concat函数:

#-*- encoding: utf-8 -*-

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from pandas import Series,DataFrame

'''

#另一种合并运算为连接(concatenation),绑定(binding)或者堆叠(stacking)。

#Numpy有一个用于合并原始Numpy数组的concatenation函数:

arr = np.arange(12).reshape((3,4))

print arr

print np.concatenate([arr,arr],axis = 1)

'''

s1 = Series([0,1],index = ['a','b'])

s2 = Series([2,3,4],index = ['c','d','e'])

s3 = Series([5,6],index = ['f','g'])

print pd.concat([s1,s2,s3])

#注意下面的方式,产生的是一个DataFrame,index是所有index合并起来,列是每个Series占一列,其他位置N啊N

print pd.concat([s1,s2,s3],axis = 1)

#如果Series有重复值的情况下

s4 = pd.concat([s1 * 5,s3])

print s4

#下面的inner是取交集

print pd.concat([s1,s4],axis = 1,join = 'inner')

#通过join_axes指定要在“其他轴”上使用的索引

print pd.concat([s1,s4],axis = 1,join_axes = [['a','c','b','e']])

#现在有个问题,参与连接的各个部分在最后的结果中不能区分,可以设置层次化索引解决此问题

result = pd.concat([s1,s2,s3],keys = ['one','two','three'])

print result

print result.unstack()

#如果沿着axis=1进行合并,则当然的key成为DataFrame的列头(列名):

result1 = pd.concat([s1,s2,s3],axis = 1,keys = ['one','two','three'])

print result1

print result1.columns

#下面看DataFrame的合并方式,行列数量不同也能合并,比R语言好

df1 = DataFrame(np.arange(6).reshape(3,2),index = ['a','b','c'],columns = ['one','two'])

df2 = DataFrame(5 + np.arange(4).reshape(2,2),index = ['a','c'],columns = ['three','four'])

print pd.concat([df1,df2])#默认将行合并

print pd.concat([df1,df2],axis = 1,keys = ['level1','level2'])

#下面的这种合并方式更加科学,字典的形式

print pd.concat({'level1':df1,'level2':df2},axis = 0)

print pd.concat([df1,df2],axis = 1,keys = ['level1','level2'],names = ['upper','lower'])

#最后需要考虑的问题是,跟当前分析工作无关的DataFrame行索引,也就是说,原来的行索引没有意义了

df1 = DataFrame(np.random.randn(3,4),columns = [list('abcd')])

df2 = DataFrame(np.random.randn(2,3),columns = ['b','d','a'])

#只要加上ignore_index = True 即可

print pd.concat([df1,df2],ignore_index = True)

>>>

a 0

b 1

c 2

d 3

e 4

f 5

g 6

0 1 2

a 0 NaN NaN

b 1 NaN NaN

c NaN 2 NaN

d NaN 3 NaN

e NaN 4 NaN

f NaN NaN 5

g NaN NaN 6

a 0

b 5

f 5

g 6

0 1

a 0 0

b 1 5

0 1

a 0 0

c NaN NaN

b 1 5

e NaN NaN

one a 0

b 1

two c 2

d 3

e 4

three f 5

g 6

a b c d e f g

one 0 1 NaN NaN NaN NaN NaN

two NaN NaN 2 3 4 NaN NaN

three NaN NaN NaN NaN NaN 5 6

one two three

a 0 NaN NaN

b 1 NaN NaN

c NaN 2 NaN

d NaN 3 NaN

e NaN 4 NaN

f NaN NaN 5

g NaN NaN 6

array([one, two, three], dtype=object)

four one three two

a NaN 0 NaN 1

b NaN 2 NaN 3

c NaN 4 NaN 5

a 6 NaN 5 NaN

c 8 NaN 7 NaN

level1 level2

one two three four

a 0 1 5 6

b 2 3 NaN NaN

c 4 5 7 8

four one three two

level1 a NaN 0 NaN 1

b NaN 2 NaN 3

c NaN 4 NaN 5

level2 a 6 NaN 5 NaN

c 8 NaN 7 NaN

upper level1 level2

lower one two three four

a 0 1 5 6

b 2 3 NaN NaN

c 4 5 7 8

a b c d

0 2.277611 0.597990 2.128480 -0.467747

1 2.450508 -0.682617 1.129313 1.174447

2 -0.106422 0.590667 1.015706 0.712673

3 -1.323742 0.060791 NaN 1.095113

4 0.586082 -0.849976 NaN -0.320739

[Finished in 1.9s]

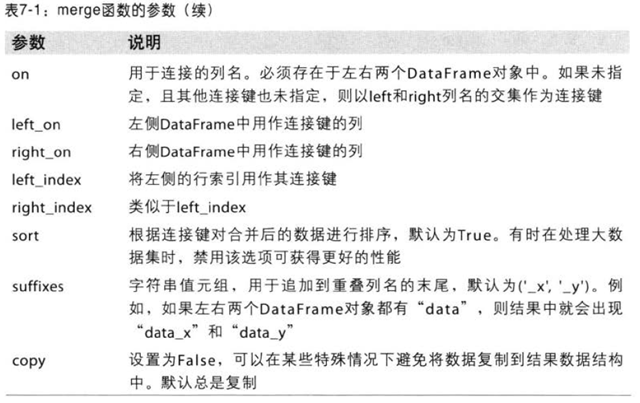

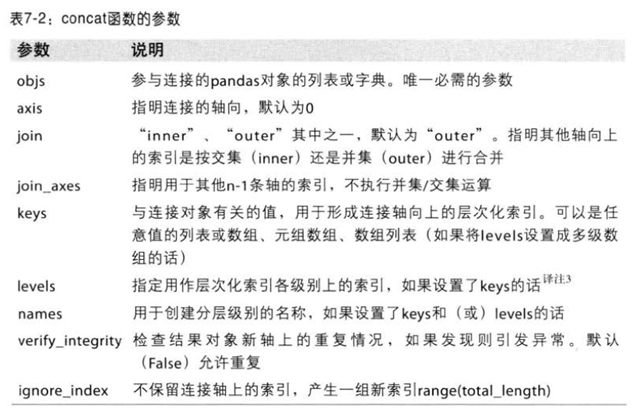

concat函数的参数如下:

合并重叠数据

还有一种数据是不能简单通过merge、concatenation解决的。比如,有可能部分或者全部索引重叠的两个数据集。

#-*- encoding: utf-8 -*-

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from pandas import Series,DataFrame

a = Series([np.nan,2.5,np.nan,3.5,4.5,np.nan],

index = ['f','e','d','c','b','a'])

b = Series(np.arange(len(a),dtype = np.float64),

index = ['f','e','d','c','b','a'])

b[-1] = np.nan

print a,'\n'

print b,'\n'

#print a + b #注意这里的自动对齐

#c用来按照索引取a、b的值:

c = np.where(pd.isnull(a),b,a)

print c,'\n'

#numpy中也有这样一个方法combine_first

print b[:-2].combine_first(a[2:]) #注意两者都不为空时,保留b的值

#对于DataFrame而言,combine_first也是做同样的事,可以看作用参数对象中的数据

#为调用者对象的确实数据“打补丁”

df1 = DataFrame({'a':[1.,np.nan,5.,np.nan],

'b':[np.nan,2.,np.nan,6.],

'c':range(2,18,4)})

df2 = DataFrame({'a':[5.,4.,np.nan,3.,7.],

'b':[np.nan,3.,4.,6.,8.]})

#要特别注意下面的应用,df1比df2 少一行,运行以后df1就比原来多了一行,这有时候对数据处理是个隐藏bug啊!

print df1.combine_first(df2)

>>>

f NaN

e 2.5

d NaN

c 3.5

b 4.5

a NaN

f 0

e 1

d 2

c 3

b 4

a NaN

f 0.0

e 2.5

d 2.0

c 3.5

b 4.5

a NaN

a NaN

b 4.5

c 3.0

d 2.0

e 1.0

f 0.0

a b c

0 1 NaN 2

1 4 2 6

2 5 4 10

3 3 6 14

4 7 8 NaN

[Finished in 0.9s]

2、重塑和轴向旋转

#-*- encoding: utf-8 -*-

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from pandas import Series,DataFrame

#reshape(重塑)、pivot(轴向旋转)可以对表格型数据进行基础运算

#重塑层次化索引

#stack:将数据的列“旋转”为行

#unstack:将数据的行“旋转”为列

data = DataFrame(np.arange(6).reshape((2,3)),index = pd.Index(['Ohio','Colorado'],name = 'state'),

columns = pd.Index(['one','two','three'],name = 'number'))

print data

result = data.stack()

print result #这里就是将列名作为了层次化索引(内层索引),得到了一个Series

print result.unstack() #将层次化索引转换为二维表,得到DataFrame

#默认情况下,unstack处理的是内层的索引,若想别的层次,传入编号或者名称即可,注意最外一层编号为0

result1 = result.unstack(0)

print result1

print result1.stack(0),'\n' #默认,列为内层

print result1.unstack(1) ,'\n' #列为外层

#下面看有缺失值的情况,unstack()会标示出缺失值

s1 = Series([0,1,2,3],index = [list('abcd')])

s2 = Series([4,5,6],index = ['c','d','e'])

data2 = pd.concat([s1,s2],keys = ['one','two'])

print data2

print data2.unstack(),'\n'

#stack会滤除缺失数据

print data2.unstack().stack(),'\n'

print data2.unstack().stack(dropna = False) ,'\n' #保留缺失值

#对DataFrame进行unstack时,作为旋转轴的级别成为结果中最低的,弄到最内层

df = DataFrame({'left':result,'right':result + 5},columns = pd.Index(['left','right'],name = 'side'))

print 'df is \n',df

print 'df.unstack is \n',df.unstack('state')

print 'df.unstack.stack \n',df.unstack('state').stack('side')

将“长格式”转换为“宽格式”

#-*- encoding: utf-8 -*-

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from pandas import Series,DataFrame

#时间序列中的数据通常是以所谓“长格式”(long)或“堆叠格式”(stacked)存储在数据库和csv中

#由于没有找到数据,自己动手写一点

ldata = DataFrame({'date':['03-31','03-31','03-31','06-30','06-30','06-30'],

'item':['real','infl','unemp','real','infl','unemp'],'value':['2710.','000.','5.8','2778.','2.34','5.1']})

print 'ldata is \n',ldata

#下面就是将data、item作为行、列名,value填充进二维表

pivoted = ldata.pivot('date','item','value')

print 'pivoted is \n',pivoted

ldata['value2'] = np.random.randn(len(ldata))

print 'ldata is \n',ldata

#看一下下面的结果,得到的列就有了层次化列表

pivoted = ldata.pivot('date','item')

print pivoted

print 'pivoted is \n',pivoted['value'],'\n'

#换一种试试,下面的就将value2填充,value就丢弃了

pivoted1 = ldata.pivot('date','item','value2')

print pivoted1

#注意,pivot其实只是一个“快捷方式而已”,用set_index创建层次化索引,再用unstack重塑

unstacked = ldata.set_index(['date','item']).unstack('item') #unstack标明展开的轴

print unstacked

浙公网安备 33010602011771号

浙公网安备 33010602011771号