Hadoop 数据仓库工具——Hive

1.安装Mysql

a.在官网下载 Mysql 8.0 (mysql-8.0.16-winx64.zip)并解压,地址:https://dev.mysql.com/downloads/mysql/

b.在 Mysql 根目录下 my.ini 文件和 data 文件夹,my.ini 内容如下:

[mysqld] # 设置3306端口 port=3306 # 设置mysql的安装目录 basedir=D:\Tools\mysql-8.0.16-winx64 # 设置mysql数据库的数据的存放目录 datadir=D:\Tools\mysql-8.0.16-winx64\data # 允许最大连接数 max_connections=200 # 允许连接失败的次数。这是为了防止有人从该主机试图攻击数据库系统 max_connect_errors=10 # 服务端使用的字符集默认为UTF8 character-set-server=utf8 # 创建新表时将使用的默认存储引擎 default-storage-engine=INNODB [mysql] # 设置mysql客户端默认字符集 default-character-set=utf8 [client] # 设置mysql客户端连接服务端时默认使用的端口 port=3306 default-character-set=utf8

c.新增系统环境变量 MYSQL_HOME:D:\Tools\mysql-8.0.16-winx64,并在 Path 变量中添加 %MYSQL_HOME%\bin

d.以管理员的身份打开cmd窗口,并跳转到 Mysql 的 bin 目录下

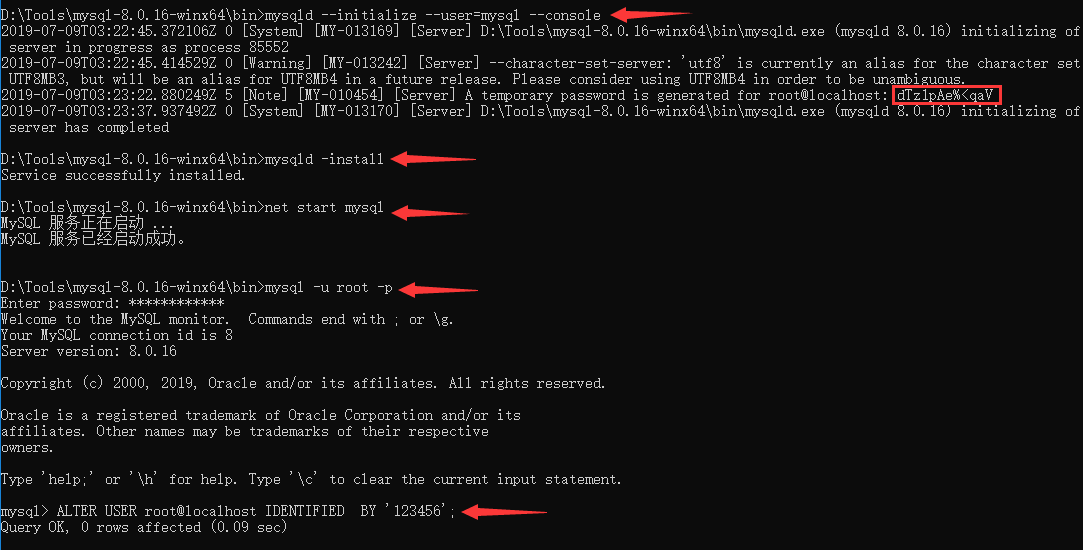

①执行初始化命令:mysqld --initialize --user=mysql --console,并记住临时密码

②执行安装服务命令:mysqld -install

③执行启动服务命令:net start mysql

④执行修改密码命令:mysql -u root -p (此时需要输入①中的临时密码)

⑤执行修改密码语句:ALTER USER root@localhost IDENTIFIED BY '123456';

2.安装Hive

a.在官网下载 Hive(apache-hive-3.1.1-bin.tar.gz)并解压,地址:http://mirror.bit.edu.cn/apache/hive/

b.新增系统环境变量 HIVE_HOME:D:\Tools\apache-hive-3.1.1-bin,并在 Path 变量中添加 %HIVE_HOME%\bin

c.在 Hive 的 conf 目录下创建 hive-site.xml

<?xml version="1.0"?> <?xml-stylesheet type="text/xsl" href="configuration.xsl"?> <!-- Licensed to the Apache Software Foundation (ASF) under one or more contributor license agreements. See the NOTICE file distributed with this work for additional information regarding copyright ownership. The ASF licenses this file to you under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. --> <configuration> <property> <name>hive.metastore.warehouse.dir</name> <value>/user/hive/warehouse</value> <description>location of default database for the warehouse</description> </property> <property> <name>hive.exec.scratchdir</name> <value>/tmp/hive</value> <description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir: ${hive.exec.scratchdir}/<username> is created, with ${hive.scratch.dir.permission}.</description> </property> <property> <name>hive.exec.local.scratchdir</name> <value>D:/Tools/apache-hive-3.1.1-bin/scratch_dir</value> <description>Local scratch space for Hive jobs</description> </property> <property> <name>hive.downloaded.resources.dir</name> <value>D:/Tools/apache-hive-3.1.1-bin/resources_dir/${hive.session.id}_resources</value> <description>Temporary local directory for added resources in the remote file system.</description> </property> <property> <name>hive.querylog.location</name> <value>D:/Tools/apache-hive-3.1.1-bin/querylog_dir</value> <description>Location of Hive run time structured log file</description> </property> <property> <name>hive.server2.logging.operation.log.location</name> <value>D:/Tools/apache-hive-3.1.1-bin/operation_dir</value> <description>Top level directory where operation logs are stored if logging functionality is enabled</description> </property> <property> <name>javax.jdo.option.ConnectionURL</name> <value>jdbc:mysql://127.0.0.1:3306/hive?serverTimezone=UTC&createDatabaseIfNotExist=true</value> <description> JDBC connect string for a JDBC metastore. To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL. For example, jdbc:postgresql://myhost/db?ssl=true for postgres database. </description> </property> <property> <name>javax.jdo.option.ConnectionDriverName</name> <value>com.mysql.jdbc.Driver</value> <description>Driver class name for a JDBC metastore</description> </property> <property> <name>javax.jdo.option.ConnectionUserName</name> <value>root</value> <description>Username to use against metastore database</description> </property> <property> <name>javax.jdo.option.ConnectionPassword</name> <value>123456</value> <description>password to use against metastore database</description> </property> <property> <name>hive.metastore.schema.verification</name> <value>false</value> <description> Enforce metastore schema version consistency. True: Verify that version information stored in is compatible with one from Hive jars. Also disable automatic schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures proper metastore schema migration. (Default) False: Warn if the version information stored in metastore doesn't match with one from in Hive jars. </description> </property> <!-- 设置hiveserver2外部访问端口,默认为10000 --> <property> <name>hive.server2.thrift.port</name> <value>10010</value> </property> <!-- 使用本地mr,解决使用Hadoop的mr时报错的问题 --> <property> <name>hive.exec.mode.local.auto</name> <value>true</value> <description>Let Hive determine whether to run in local mode automatically</description> </property> </configuration>

注意:hive.exec.mode.local.auto 配置是为了解决 return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask 的问题

d.在 Hive 的根目录下创建 scratch_dir、resources_dir、querylog_dir、operation_dir 四个文件夹

e.添加对windows的支持:在官网下载 apache-hive-1.2.2-src.tar.gz,解压后将 bin 目录及子目录下的 cmd 文件复制到 Hive 对应的 bin 目录及子目录下

f.下载 mysql-connector-java-8.0.16.jar 驱动包,放到 Hive 的 lib 目录下

g.新建cmd窗口进入 Hive 的 bin 目录执行命令初始化数据库表:hive --service schematool -dbType mysql -initSchema

h.在cmd窗口启动metastore:hive --service metastore

i.在cmd窗口启动hiveserver2:hive --service hiveserver2

j.在cmd窗口输入 hive 即可进入 Hive 的控制台

k.问题及解决:

①问题一:执行DDL时出现 (org.apache.hadoop.security.authorize.AuthorizationException): User: A(A为windows管理员名称) is not allowed to impersonate root 的权限问题

①解决:在 Hadoop 的 hdfs-site.xml 配置文件中加入

<property> <name>dfs.permissions</name> <value>false</value> </property>

在 Hadoop 的 core-site.xml 配置文件中加入

<property> <name>hadoop.proxyuser.zwj.hosts</name> <value>*</value> </property> <property> <name>hadoop.proxyuser.zwj.groups</name> <value>*</value> </property>

②问题二:在执行查询操作时,报出 com.google.common.collect.ImmutableSortedMap 类冲突的异常

②解决:将 Hive 的 lib 目录下的 guava-19.0.jar 替换掉 Hadoop 的 share\hadoop 目录的子目录下的 guava-11.0.2.jar (共两处需要替换),注意做好备份

l.以后使用:先进入 Hadoop 的 sbin 目录执行cmd启动命令:start-all.cmd

在执行cmd命令:hive --service metastore

最后执行cmd命令:hive --service hiveserver2

3.使用 hive-jdbc 操作 Hive

a.加入pom依赖

<!-- Hive --> <dependency> <groupId>org.apache.hive</groupId> <artifactId>hive-jdbc</artifactId> <version>2.3.0</version> </dependency>

b.使用

public class HiveDao { private static String driverName = "org.apache.hive.jdbc.HiveDriver"; private static String url = "jdbc:hive2://localhost:10010/default"; private static String user = "root"; private static String password = "123456"; private static Connection conn = null; private static Statement stmt = null; private static ResultSet rs = null; // 加载驱动、创建连接 public static void init() throws Exception { Class.forName(driverName); conn = DriverManager.getConnection(url,user,password); stmt = conn.createStatement(); } // 创建数据库 public static void createDatabase() throws Exception { String sql = "create database hive_jdbc_test"; System.out.println("Running: " + sql); stmt.execute(sql); } // 查询所有数据库 public static void showDatabases() throws Exception { String sql = "show databases"; System.out.println("Running: " + sql); rs = stmt.executeQuery(sql); while (rs.next()) { System.out.println(rs.getString(1)); } } // 创建表 public static void createTable() throws Exception { String sql = "create table cmdty(" + "cmdtyCode int," + "cmdtyName string," + "firstPrice double" + ")" + "row format delimited fields terminated by '\\t'"; System.out.println("Running: " + sql); stmt.execute(sql); } // 查询所有表 public static void showTables() throws Exception { String sql = "show tables"; System.out.println("Running: " + sql); rs = stmt.executeQuery(sql); while (rs.next()) { System.out.println(rs.getString(1)); } } // 查看表结构 public static void descTable() throws Exception { String sql = "desc cmdty"; System.out.println("Running: " + sql); rs = stmt.executeQuery(sql); while (rs.next()) { System.out.println(rs.getString(1) + "\t" + rs.getString(2)); } } // 加载数据 public static void loadData() throws Exception { //先创建文件夹 Configuration conf = new Configuration(); conf.set("fs.defaultFS", "hdfs://localhost:9527"); // 对应 core-site.xml 中配置的端口 // 拿到操作HDFS的一个实例,并且设置其用户(由于windows权限问题"zwj"需替换为管理员账号) FileSystem fs = FileSystem.get(new URI("hdfs://localhost:9527"),conf,"zwj"); fs.mkdirs(new Path("/user/hive/warehouse/cmdty")); fs.close(); //在导入数据 String filePath = "E:/tmp/cmdty.txt"; String sql = "load data local inpath '" + filePath + "' overwrite into table cmdty"; System.out.println("Running: " + sql); stmt.execute(sql); } // 查询数据 public static void selectData() throws Exception { String sql = "select * from cmdty"; System.out.println("Running: " + sql); rs = stmt.executeQuery(sql); System.out.println("员工编号" + "\t" + "员工姓名" + "\t" + "工作岗位"); while (rs.next()) { System.out.println(rs.getInt("cmdtyCode") + "\t\t" + rs.getString("cmdtyName") + "\t\t" + rs.getDouble("firstPrice")); } } // 统计查询(会运行mapreduce作业) public static void countData() throws Exception { String sql = "select count(1) from cmdty"; System.out.println("Running: " + sql); rs = stmt.executeQuery(sql); while (rs.next()) { System.out.println(rs.getInt(1) ); } } // 删除数据库 public static void dropDatabase() throws Exception { String sql = "drop database if exists hive_jdbc_test"; System.out.println("Running: " + sql); stmt.execute(sql); } // 删除数据库表 public static void deopTable() throws Exception { String sql = "drop table if exists cmdty"; System.out.println("Running: " + sql); stmt.execute(sql); } // 释放资源 public static void destory() throws Exception { if ( rs != null) { rs.close(); } if (stmt != null) { stmt.close(); } if (conn != null) { conn.close(); } } public static void main(String[] args) throws Exception { init(); // createDatabase(); // showDatabases(); // createTable(); // showTables(); // descTable(); // loadData(); // selectData(); countData(); // dropDatabase(); // deopTable(); destory(); } }

注意:在加载数据到HDFS和数据库表中时,需要提前在HDFS中建好对应文件夹,不然后会报错

4.遗留问题

a.在启动hiveserver2,会报 java.lang.ClassNotFoundException: org.apache.tez.dag.api.TezConfiguration 的异常,是因为没有集成tez的原因,但暂时不影响 hive-jdbc 操作 Hive。

参考文章:https://www.cnblogs.com/tangyb/p/8971658.html

https://www.cnblogs.com/maria-ld/p/10171780.html

https://www.cnblogs.com/takemybreathaway/articles/9750175.html