[Repost] What is a WebRTC Gateway anyway? (Lorenzo Miniero)

https://webrtchacks.com/webrtc-gw/

As I mentioned in my ‘WebRTC meets telecom’ article a couple of weeks ago, at Quobis we’re currently involved in 30+ WebRTC field trials/POCs which involve a telco network in one way or another. In most cases service providers are trying to provide WebRTC-based access to their existing/legacy infrastructure and services (fortunately, in some cases it’s not limited to only that). To achieve all this, one of the pieces they need to deploy is a WebRTC Gateway. But what is a WebRTC Gateway anyway? A year ago I had the chance to provide a first answer during the Kamailio World Conference 2013 (see my presentation WebRTC and VoIP: bridging the gap) but, since Lorenzo Miniero has recently released an open source, modular and general purpose WebRTC gateway called Janus, I thought it would be great to get him to share his experience here.

I’ve known Lorenzo for some years now. He is the co-founder of a small but great startup called Meetecho. Meetecho is an academic spinoff of the University of Napoli Federico II, where Lorenzo is currently also a Ph.D student. He has been involved in real-time multimedia applications over the Internet for years, especially from a standardisation point of view. Within the IETF, in particular, he worked in XCON on Centralized Conferencing and in MEDIACTRL on the interactions between Application Servers and Media Servers. He is currently working on WebRTC-related applications, in particular on conferencing and large scale streaming as part of his Ph.D, focusing on the interaction with legacy infrastructures, which is where WebRTC gateways play an interesting role. As part of the Meetecho team he also provides remote participation services on a regular basis to all IETF meetings. Most recently, he also spent some time reviewing Simon P. and Salvatore L.’s new WebRTC book.

{“intro-by” : “victor“}

What is a WebRTC Gateway anyway? (by Lorenzo Miniero)

Since day one, WebRTC has been seen as a great opportunity by two different worlds: those who envisaged the chance to create innovative and new applications based on a new paradigm, and those who basically just envisioned a new client to legacy services and applications. Whether you belong to the former or the latter (or anywhere in between, like me), chances are good that, sooner or later, you have faced the need for some kind of component to be placed between two or more WebRTC peers, thus going beyond (or simply breaking) the end-to-end approach WebRTC is based upon. I, for one, did, and have devoted my WebRTC-related efforts in that direction since WebRTC first saw the light.

A different kind of peer

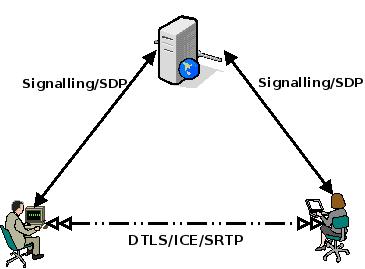

As you probably already know (and if you don’t, head here and do your homework!), WebRTC has been conceived as a peer-to-peer solution: that is, while signalling goes through a web server/application, the media flow is peer-to-peer.

I won’t go into the details of how this paradigm may change, especially considering this has been the subject of a previous blog post. What’s important to point out is that, even in a simple peer-to-peer scenario, one of the two involved parties (or maybe even both) doesn’t need to be a browser, but may very well be an application. There may be several reasons for having such an application: it may be acting as an MCU, a media recorder, an IVR application, a bridge towards a more or less different technology (e.g., SIP, RTMP, or any legacy streaming platform) or something else. Such an application, which should implement most, if not all, of the WebRTC protocols and technologies, is what is usually called a WebRTC Gateway: one side talks WebRTC, while the other talks either WebRTC as well or something entirely different (in which case the gateway translates signalling protocols and/or transcodes media packets).

Gateways? Why??

As anticipated, there are several reasons why a gateway can be useful. Technically speaking, MCUs and server-side stacks can be seen as gateways as well, which means that, even when you don’t step outside the WebRTC world and just want to extend the one-to-one/full-mesh paradigm among peers, having such a component can definitely help according to the scenario you want to achieve.

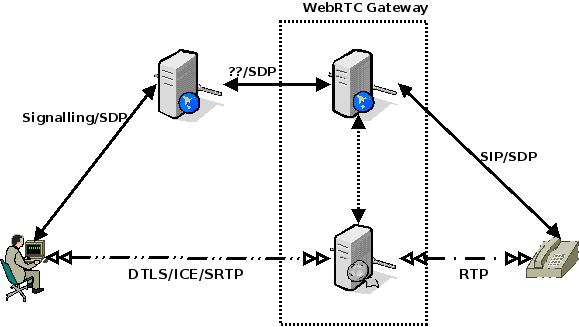

Nevertheless, the main motivation comes from the tons of existing, so-called legacy infrastructures out there that may benefit from a WebRTC-enabled kind of access. In fact, one would assume that the re-use of existing protocols like SDP, RTP and others in WebRTC would make this trivial. Unfortunately, most of the time that is not the case. If for instance we refer to existing SIP infrastructures, even when SIP is used as the signalling protocol in WebRTC there are too many differences between the standards WebRTC endpoints implement and those available in currently deployed systems.

Just to make a simple example, most legacy components don’t support media encryption, and when they do they usually only support SDES. On the other hand, for security reasons WebRTC mandates the use of DTLS-SRTP as the only way to establish a secure media connection, a mechanism that has been around for a while but that has seen little or no deployment in the existing communication frameworks so far. The same incompatibilities between the two worlds emerge in other aspects as well, like the extensive use WebRTC endpoints make of ICE for NAT traversal, RTCP feedback messages for managing the status of a connection or RTP/RTCP muxing, whereas existing infrastructures usually rely on simpler approaches like Hosted NAT Traversal (HNT) in SBCs, separate even/odd ports for RTP and RTCP, and more or less basic RFC3550 RTCP statistics and messages. Things get even wilder when we think of the additional stuff, mandatory or not, that is being added to WebRTC right now, such as BUNDLE, Trickle ICE, new codecs the existing media servers will most likely not support and so on, not to mention Data Channels and WebSockets and the way they could be used in a WebRTC environment to transport protocols like BFCP or MSRP, which SBCs or other legacy components would usually expect on TCP and/or UDP and negotiated the old fashioned way.
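
To make the mismatch a bit more tangible, here is a minimal Python sketch that scans a session description and reports, per media section, which of the attributes mentioned above it advertises. Everything in it is an assumption made for illustration: the names `MediaProfile` and `inspect_sdp`, the `looks_like_webrtc` heuristic and the two sample descriptions (whose key and fingerprint values are placeholders) are not taken from any real gateway.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MediaProfile:
    """Features advertised by one m= section (hypothetical helper type)."""
    media: str
    transport: str
    dtls_fingerprint: bool = False
    sdes_crypto: bool = False
    ice: bool = False
    rtcp_mux: bool = False

    def looks_like_webrtc(self) -> bool:
        # Rough heuristic only: DTLS fingerprint, ICE credentials and rtcp-mux.
        return self.dtls_fingerprint and self.ice and self.rtcp_mux


def inspect_sdp(sdp: str) -> List[MediaProfile]:
    """Return one MediaProfile per m= line found in the description."""
    session_fingerprint = False   # a=fingerprint may appear at session level
    session_ice = False           # so may a=ice-ufrag
    profiles: List[MediaProfile] = []
    current: Optional[MediaProfile] = None
    for raw in sdp.splitlines():
        line = raw.strip()
        if line.startswith("m="):
            # m=<media> <port> <proto> <fmt> ...
            fields = line[2:].split()
            if len(fields) < 3:
                raise ValueError("malformed m= line: " + line)
            current = MediaProfile(fields[0], fields[2],
                                   dtls_fingerprint=session_fingerprint,
                                   ice=session_ice)
            profiles.append(current)
        elif line.startswith("a="):
            name = line[2:].split(":", 1)[0]
            if name == "fingerprint":
                if current is None:
                    session_fingerprint = True
                else:
                    current.dtls_fingerprint = True
            elif name == "ice-ufrag":
                if current is None:
                    session_ice = True
                else:
                    current.ice = True
            elif name == "crypto" and current is not None:
                current.sdes_crypto = True
            elif name == "rtcp-mux" and current is not None:
                current.rtcp_mux = True
    return profiles


# Made-up samples; the key and fingerprint values are placeholders.
LEGACY_SDP = "\r\n".join([
    "v=0", "o=- 1 1 IN IP4 192.0.2.10", "s=-", "c=IN IP4 192.0.2.10", "t=0 0",
    "m=audio 49170 RTP/SAVP 0",
    "a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:PLACEHOLDERKEY",
]) + "\r\n"

WEBRTC_SDP = "\r\n".join([
    "v=0", "o=- 2 1 IN IP4 127.0.0.1", "s=-", "t=0 0",
    "a=fingerprint:sha-256 AA:BB:CC:DD",
    "m=audio 9 UDP/TLS/RTP/SAVPF 111",
    "c=IN IP4 0.0.0.0",
    "a=ice-ufrag:abcd", "a=ice-pwd:0123456789abcdefghijkl",
    "a=rtcp-mux", "a=rtpmap:111 opus/48000/2",
]) + "\r\n"

if __name__ == "__main__":
    for sample in (LEGACY_SDP, WEBRTC_SDP):
        for profile in inspect_sdp(sample):
            print(profile, profile.looks_like_webrtc())
```

A gateway bridging the two samples would have to terminate DTLS-SRTP and ICE on one side and SDES, or no encryption at all, on the other, which is exactly the kind of work the rest of this post is about.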

Ok, we need a gateway… what now?

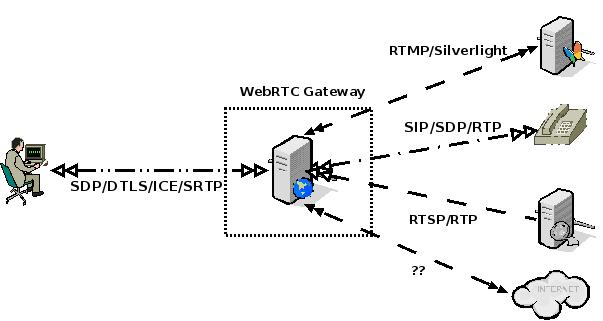

Luckily for you (and for us all!), several people have worked on gateways since the first WebRTC browsers were made available. Even focusing on open source efforts alone, a lot of work has been done on platforms like Asterisk or Kamailio to make the interaction with existing SIP infrastructures easier, and new components like Doubango, Kurento, Licode or the Jitsi stack have been released in recent months. Each application usually addresses different requirements, depending on whether you just need a WebRTC-to-SIP gateway, a conferencing MCU, a WebRTC-compliant streaming server, a more generic stack/media server and so on.

Since I’ve recently worked on an open source WebRTC gateway implementation called Janus myself, and considering its more general purpose approach to gatewaying, I’ll try to guide you through the common requirements and challenges such a WebRTC-driven project can confront you with.

Where to start?

When it comes to gateways, the hardest step is always the first one. Where should you start? The easiest way is to start by addressing the functional requirements, which usually are:

- architectural, as in “should the gateway be monolithic, or somehow decomposed between signalling and media planes?”;

- protocols, as you’ll need to be able to talk WebRTC and probably something else too, if you’re going to translate to a more or less different technology;

- media management, depending on whether you’re only going to relay media around or handle it directly (e.g., transcoding, mixing, recording, etc.);

- signalling, that is, how you’re going to set up and manage media sessions on either side;

- putting this all together, as, especially in WebRTC, all current implementations have expectations on how the involved technologies should behave, and may not work if those expectations are not met.

The first point in particular is quite important, as it will obviously impact the way the gateway is subsequently going to be designed and implemented. In fact, while a monolithic approach (where signalling and media planes are handled together) might be easier to design, a decomposed gateway (with signalling and media planes handled separately, and the two interacting somehow) would allow for a separate management of scalability concerns. There is a middle ground, if for instance one relies on a more hybrid modular architecture. That said, all of them have pros and cons, and if properly designed each of them can be scaled as needed.

Apart from that, at least from a superficial point of view there’s nothing in the requirements that is really different from those of a WebRTC-compliant endpoint in general. Of course, there are differences to take into account: for one, a gateway is most likely going to handle many more sessions than a single endpoint; besides, no media needs to be played locally, which makes things easier on one side, but presents different complications when it comes to what must happen to the media itself. The following paragraphs try to go a bit deeper into the genesis of a gateway.

Protocols

The first thing you need to ask when choosing or writing a WebRTC implementation is: can I avoid re-inventing the wheel? This is a very common question we ask ourselves every day in several different contexts. Yet, it is even more important when talking about WebRTC, as it does partly re-use existing technologies and protocols, even if “on steroids” as I explained before.

The answer, luckily, is “mostly”. You may, of course, just take the Chrome stack and start from there. As I anticipated, a gateway is, after all, a compliant WebRTC implementation, and so a complete codebase like that can definitely help. For several different reasons, I chose a different approach, that is, trying to write something new from scratch. Whatever the programming language, there are several open source libraries you can re-use for the purpose, like openssl (C/C++) or BouncyCastle (Java) for DTLS-SRTP, libnice (C/C++), pjnath (C/C++) or ice4j (Java) for everything related to ICE/STUN/TURN, libsrtp for SRTP and so on. Of course, a stack is only half of the solution: you’ll need to prepare yourself for every situation, e.g., acting as either a DTLS server or client, handling heterogeneous NAT traversal scenarios, and basically being able to interact with all compliant implementations according to the WebRTC specs.
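
As an example of “acting as either a DTLS server or client”, the role is negotiated in SDP through the `a=setup` attribute (RFC 4145, as used for DTLS-SRTP in RFC 5763): the side that ends up `active` starts the handshake as the DTLS client. The following is a minimal Python sketch of how an answering gateway might pick its role; the function names and the `prefer_active` switch are assumptions for illustration, not part of any library.

```python
def choose_dtls_setup(offered_setup: str, prefer_active: bool = True) -> str:
    """Return the a=setup value to put in the answer for a given offer value."""
    if offered_setup == "actpass":
        # The offerer accepts either role, so the answerer is free to choose.
        return "active" if prefer_active else "passive"
    if offered_setup == "active":
        # The offerer insists on initiating, so we must wait for its ClientHello.
        return "passive"
    if offered_setup == "passive":
        # The offerer will only listen, so we must initiate the handshake.
        return "active"
    raise ValueError("unsupported a=setup value: " + repr(offered_setup))


def is_dtls_client(local_setup: str) -> bool:
    """The 'active' endpoint sends the ClientHello, i.e. it is the DTLS client."""
    return local_setup == "active"


if __name__ == "__main__":
    for offered in ("actpass", "active", "passive"):
        answer = choose_dtls_setup(offered)
        role = "client" if is_dtls_client(answer) else "server"
        print(offered, "->", answer, "(we act as DTLS", role + ")")
```

Since a gateway cannot control which value the remote peer offers, its DTLS stack has to be ready to play both roles.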

As you may imagine (especially if you read Tim’s rant), things do get a bit harder when it comes to SDP: while there are libraries that allow you to parse, manipulate and generate SDP, the several attributes and features that are needed for WebRTC are quite likely not supported, unless you put a lot of work into the library yourself. For instance, for Janus I personally chose a relatively lightweight approach: I used Sofia-SDP as a stack for parsing session descriptions, while manually generating them instead of relying on a library for the purpose. Considering the mangling we all already do in JavaScript, until a WebRTC-specific SDP library comes out it looked like the safest course of action. What’s important to point out is that, since the gateway is going to terminate the media connections somehow, the session descriptions must be prepared correctly, and in a way that all compliant implementations are able to process. It also means you should be prepared to handle whatever you may receive, as your gateway will need to understand it!
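
To give an idea of what “manually generating” a session description can look like, here is a minimal Python sketch that assembles an audio-only description line by line. It is an illustration under stated assumptions, not how Janus does it: the function name, the choice of Opus on payload type 111 and the `UDP/TLS/RTP/SAVPF` profile string are mine, and a real browser will typically expect more than this (e.g., `a=mid`, candidates, additional codecs).

```python
def build_audio_description(session_id: int, ice_ufrag: str, ice_pwd: str,
                            fingerprint: str, setup: str = "active",
                            payload_type: int = 111) -> str:
    """Assemble a minimal audio-only session description by hand (sketch)."""
    if setup not in ("active", "passive", "actpass"):
        raise ValueError("unexpected a=setup value: " + repr(setup))
    lines = [
        # Session-level part
        "v=0",
        "o=- %d 1 IN IP4 127.0.0.1" % session_id,
        "s=-",
        "t=0 0",
        # Media-level part; port 9 is a placeholder, ICE provides the real address
        "m=audio 9 UDP/TLS/RTP/SAVPF %d" % payload_type,
        "c=IN IP4 0.0.0.0",
        "a=ice-ufrag:" + ice_ufrag,
        "a=ice-pwd:" + ice_pwd,
        "a=fingerprint:sha-256 " + fingerprint,
        "a=setup:" + setup,
        "a=rtcp-mux",
        "a=rtpmap:%d opus/48000/2" % payload_type,
        "a=sendrecv",
    ]
    # SDP lines are terminated by CRLF, including the last one.
    return "\r\n".join(lines) + "\r\n"


if __name__ == "__main__":
    # Placeholder credentials and fingerprint, for illustration only.
    print(build_audio_description(42, "abcd", "0123456789abcdefghijkl",
                                  "AA:BB:CC:DD", setup="passive"))
```

Generating text this way is crude, but it keeps full control over attributes that a generic SDP library may not know about.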

Media

Once you deal with the protocols, you’re left with the media, and again, there are tons of RTP/RTCP libraries you can re-use for the purpose. Once you’re at the media level, you can do what you want: you may want to record the frames a peer is sending, reflect them around for a webinar/conference, transcode them and send them somewhere else, translate RTP and the transported media to and from a different protocol/format, receive some from an external source and send them to a WebRTC endpoint, and so on.

RTCP in particular, though, needs special care, especially if you’re bridging WebRTC peers through the gateway: in fact, RTCP messages are tightly coupled with the RTP session they’re related to, which means you have to translate the messages going back and forth if you want them to keep their meaning. Considering a gateway is a WebRTC-compliant endpoint, you may also want to take care of the RTCP messages yourself: e.g., retransmitting RTP packets when you get a NACK, adapting the bandwidth on reception of a REMB, or keeping the WebRTC peer up-to-date on the status of the connection by sending proper feedback. Some more details are available in draft-ietf-straw-b2bua-rtcp, which is currently under discussion in the IETF.
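
As an illustration of handling one of these messages yourself, here is a minimal Python sketch that decodes a single RTCP generic NACK (RFC 4585: packet type 205, FMT 1, each entry made of a 16-bit packet ID plus a 16-bit bitmask of following lost packets) and looks up the requested packets in a small history. The names `parse_generic_nack` and `RetransmissionBuffer`, and the default capacity, are assumptions made for this example; a real gateway would also have to split compound RTCP packets and deal with SRTP.

```python
import struct
from collections import OrderedDict
from typing import Iterable, List

RTPFB = 205        # RTCP packet type: transport layer feedback (RFC 4585)
GENERIC_NACK = 1   # FMT value identifying a generic NACK


def parse_generic_nack(packet: bytes) -> List[int]:
    """Return the RTP sequence numbers reported lost by one generic NACK."""
    if len(packet) < 12:
        raise ValueError("packet too short for RTCP feedback")
    first, packet_type, length_words = struct.unpack("!BBH", packet[:4])
    version, fmt = first >> 6, first & 0x1F
    if version != 2 or packet_type != RTPFB or fmt != GENERIC_NACK:
        raise ValueError("not a generic NACK")
    end = 4 * (length_words + 1)   # length field counts 32-bit words minus one
    if end > len(packet):
        raise ValueError("truncated packet")
    lost: List[int] = []
    # Skip header (4 bytes), sender SSRC (4) and media SSRC (4).
    for offset in range(12, end, 4):
        pid, blp = struct.unpack("!HH", packet[offset:offset + 4])
        lost.append(pid)
        for bit in range(16):
            if blp & (1 << bit):
                # Bit i set means packet pid + i + 1 is lost (mod 2^16).
                lost.append((pid + bit + 1) & 0xFFFF)
    return lost


class RetransmissionBuffer:
    """Keeps the most recent outgoing RTP packets, indexed by sequence number."""

    def __init__(self, capacity: int = 512) -> None:
        self._capacity = capacity
        self._packets: "OrderedDict[int, bytes]" = OrderedDict()

    def store(self, seq: int, packet: bytes) -> None:
        self._packets[seq] = packet
        self._packets.move_to_end(seq)
        while len(self._packets) > self._capacity:
            self._packets.popitem(last=False)   # evict the oldest entry

    def lookup(self, seqs: Iterable[int]) -> List[bytes]:
        """Return the stored packets for the requested sequence numbers."""
        return [self._packets[s] for s in seqs if s in self._packets]
```

On reception of a NACK, the gateway would call `lookup(parse_generic_nack(packet))` and send the returned packets again; anything that has already been evicted from the buffer is simply skipped.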

Signalling

Last, but not least: what kind of signalling should your gateway employ? WebRTC doesn’t mandate any, which means you’re free to choose the one that fits your requirements. Several implementations rely on SIP, which looks like the natural choice when bridging to existing SIP infrastructures. Others make use of alternative protocols like XMPP/Jingle.

That said, there is no perfect candidate, as it mostly depends on what you want your gateway to do and what you’re most comfortable with in the first place. If you want it to be as generic as possible, as I did, an alternative approach may be relying on an ad-hoc protocol, e.g., based on JSON or XML, which leaves you the greatest freedom when it comes to designing a bridge to other technologies.
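
To show what an ad-hoc JSON protocol could look like, here is a minimal Python sketch of a request/response exchange in which each request carries a transaction identifier that the gateway echoes back. The message fields (`request`, `transaction`, `body`), the request names and the `SignallingHandler` class are all hypothetical and invented for this example; this is not the Janus API.

```python
import json
import uuid
from typing import Any, Dict, Optional


def make_request(kind: str, body: Optional[Dict[str, Any]] = None,
                 transaction: Optional[str] = None) -> str:
    """Serialise a request; a random transaction id is used if none is given."""
    return json.dumps({
        "request": kind,
        "transaction": transaction or uuid.uuid4().hex,
        "body": body or {},
    })


class SignallingHandler:
    """Toy gateway-side dispatcher for the hypothetical protocol above."""

    def __init__(self) -> None:
        self._sessions: Dict[int, Dict[str, Any]] = {}
        self._next_id = 1

    def handle(self, raw: str) -> str:
        try:
            message = json.loads(raw)
        except json.JSONDecodeError:
            return self._reply(None, "error", {"reason": "invalid JSON"})
        if not isinstance(message, dict):
            return self._reply(None, "error", {"reason": "not an object"})
        transaction = message.get("transaction")
        kind = message.get("request")
        body = message.get("body") or {}
        if kind == "create":
            session_id = self._next_id
            self._next_id += 1
            self._sessions[session_id] = {}
            return self._reply(transaction, "success", {"session": session_id})
        if kind in ("offer", "destroy"):
            session_id = body.get("session")
            if session_id not in self._sessions:
                return self._reply(transaction, "error",
                                   {"reason": "unknown session"})
            if kind == "destroy":
                del self._sessions[session_id]
                return self._reply(transaction, "success", {})
            # A real gateway would start ICE/DTLS and build an answer here.
            self._sessions[session_id]["remote_sdp"] = body.get("sdp", "")
            return self._reply(transaction, "ack", {"session": session_id})
        return self._reply(transaction, "error", {"reason": "unknown request"})

    @staticmethod
    def _reply(transaction: Optional[str], kind: str,
               body: Dict[str, Any]) -> str:
        return json.dumps({"response": kind, "transaction": transaction,
                           "body": body})
```

Because the protocol says nothing about SIP, XMPP or any other technology, the same messages can be used to drive very different bridges behind the gateway.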

Long story short…

As you might have guessed, writing a gateway is not easy. You need to implement all the protocols and use them in a way that allows you to seamlessly interact with all compliant implementations, maybe even fixing what may cause them not to interact with each other as they are, while at the same time taking into account the requirements on the legacy side. You need to tame the SDP beast, be careful with RTCP, and take care of any possible issue that may arise when bridging WebRTC to a different technology. Besides, as you know, WebRTC is a moving target, and so what works today in the gateways world may not work tomorrow, which means that keeping up to date is of paramount importance.

Anyway, this doesn’t need to scare you. Several good implementations are already available that address different scenarios, so if all you need is an MCU or a way to simply talk to well known legacy technologies, chances are good that one or more of the existing platforms can do it for you. Some implementations, like Janus itself, are even conceived as more or less extensible, which means that, in case no gateway currently supports what you need, you probably don’t need to write a new one from scratch anyway. And besides, as time goes by the so-called legacy implementations will hopefully start aligning with the stuff WebRTC is mandating right now, so that gateways won’t be needed anymore for bridging technologies but only to allow for more complex WebRTC scenarios.

That said, make sure you follow the Server-oriented stack topic on discuss-webrtc for more information!

{“author” : “Lorenzo Miniero“}

