Spark: Setting the Log Level

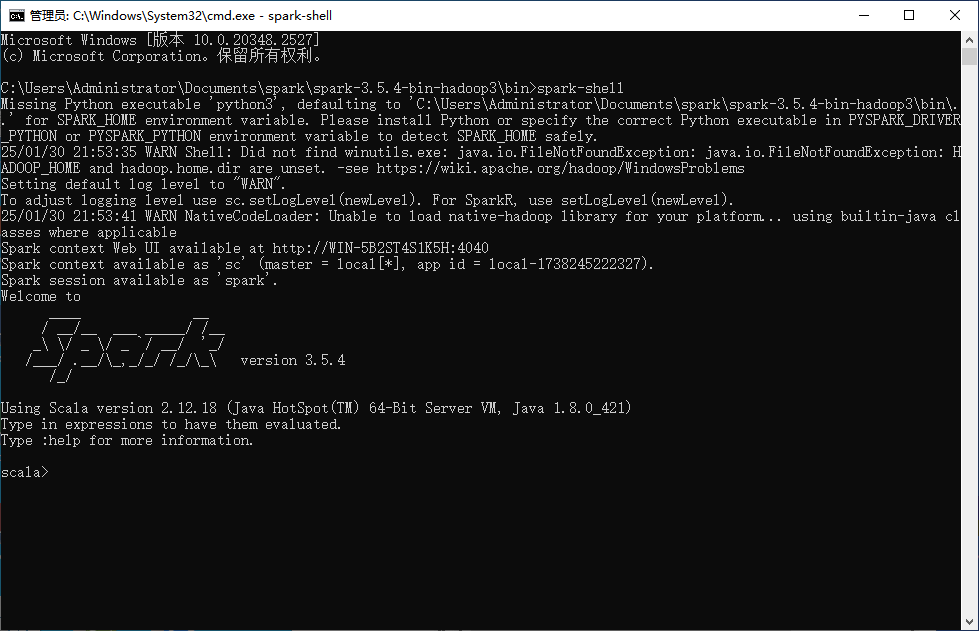

Before the change:

Windows:

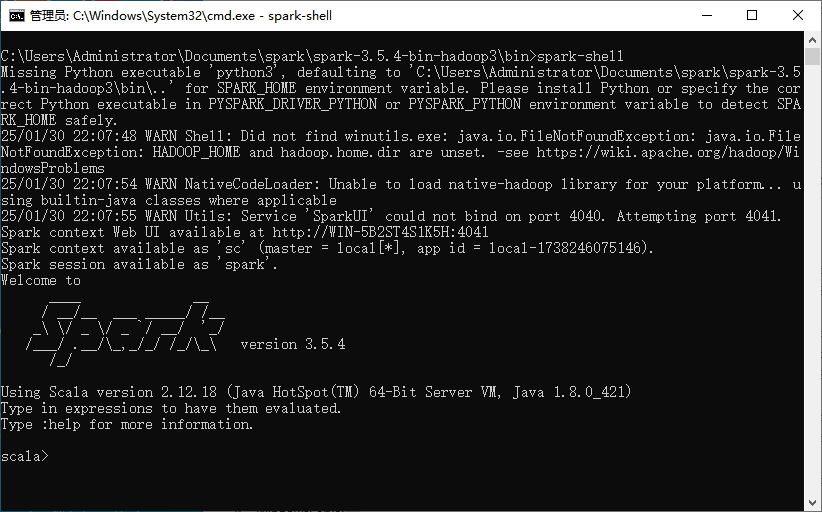

After the change:

Windows:

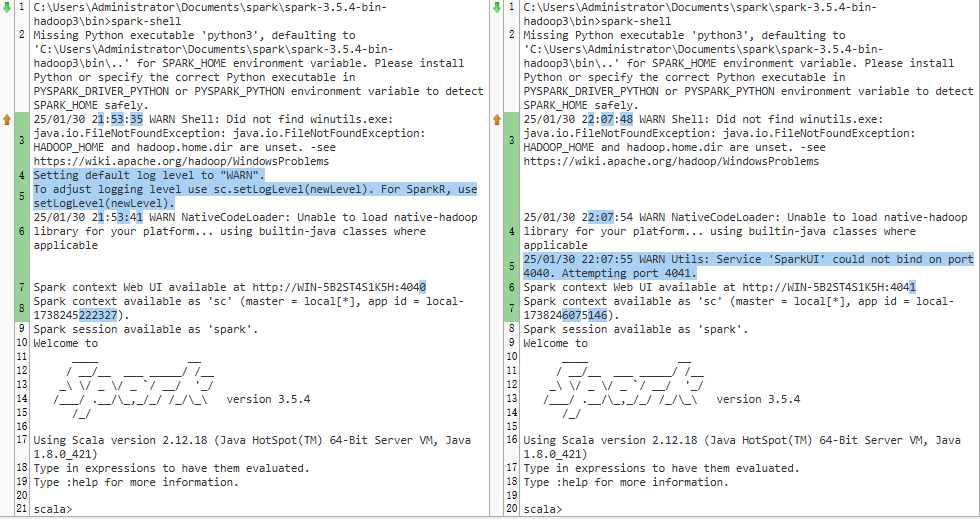

Comparison:

Windows:

Steps:

Windows:

C:\Users\Administrator\Documents\spark\spark-3.5.4-bin-hadoop3>copy conf\log4j2.properties.template conf\log4j2.properties

1 file(s) copied.

Then, in conf\log4j2.properties, find these lines:

rootLogger.level = info

rootLogger.appenderRef.stdout.ref = console

and change them to:

rootLogger.level = warn

rootLogger.appenderRef.stdout.ref = console
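The copy-and-edit step above can also be scripted. The following is a minimal Python sketch, not part of the original procedure: the function names set_root_log_level and apply_to_spark_conf are hypothetical, and it assumes the template contains a single rootLogger.level line as shown above.

```python
import re
import shutil
from pathlib import Path

# Matches the root logger level line, e.g. "rootLogger.level = info",
# and captures everything up to (but not including) the level value.
_ROOT_LEVEL = re.compile(r"^([ \t]*rootLogger\.level[ \t]*=[ \t]*)\S+", re.MULTILINE)


def set_root_log_level(properties_text: str, level: str) -> str:
    """Return the properties text with rootLogger.level set to `level`."""
    new_text, count = _ROOT_LEVEL.subn(lambda m: m.group(1) + level, properties_text)
    if count == 0:
        raise ValueError("no rootLogger.level line found")
    return new_text


def apply_to_spark_conf(spark_home: str, level: str = "warn") -> Path:
    """Create conf/log4j2.properties from the template if needed, then set the level.

    `spark_home` is the Spark installation directory (an assumption of this sketch).
    """
    conf = Path(spark_home) / "conf"
    target = conf / "log4j2.properties"
    if not target.exists():
        # Same effect as: copy conf\log4j2.properties.template conf\log4j2.properties
        shutil.copyfile(conf / "log4j2.properties.template", target)
    text = target.read_text(encoding="utf-8")
    target.write_text(set_root_log_level(text, level), encoding="utf-8")
    return target
```

Calling apply_to_spark_conf(r"C:\Users\Administrator\Documents\spark\spark-3.5.4-bin-hadoop3") would perform the same change as the manual steps. Only the level value is rewritten; the rootLogger.appenderRef.stdout.ref line is left untouched.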

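Notes:

1. The Log4j 1.x setting log4j.rootCategory=WARN, console does not exist in log4j2.properties. Its counterparts there are two separate keys, rootLogger.level and rootLogger.appenderRef.stdout.ref.

2. With the level set to error, spark-shell prints the following at startup: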

C:\Users\Administrator\Documents\spark\spark-3.5.4-bin-hadoop3\bin>spark-shell

Missing Python executable 'python3', defaulting to 'C:\Users\Administrator\Documents\spark\spark-3.5.4-bin-hadoop3\bin\..' for SPARK_HOME environment variable. Please install Python or specify the correct Python executable in PYSPARK_DRIVER_PYTHON or PYSPARK_PYTHON environment variable to detect SPARK_HOME safely.

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://WIN-5B2ST4S1K5H:4041

Spark context available as 'sc' (master = local[*], app id = local-1738247066941).

Spark session available as 'spark'.

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 3.5.4
      /_/

Using Scala version 2.12.18 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_421)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

3. Text comparison site used for the before/after comparison: https://text-compare.com/zh-hans/
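If you prefer to compare the two console outputs locally rather than on a website, Python's standard difflib module can produce a similar diff. This is only a sketch of an alternative, not what the post itself used; the function name diff_logs is hypothetical, and the two inputs are assumed to be console outputs you saved yourself.

```python
import difflib
from typing import List


def diff_logs(before: str, after: str) -> List[str]:
    """Return unified-diff lines between two captured console outputs."""
    return list(
        difflib.unified_diff(
            before.splitlines(),
            after.splitlines(),
            fromfile="before",
            tofile="after",
            lineterm="",
        )
    )
```

Lines that start with "-" appear only in the first output (for example, INFO messages that are gone once the level is warn), and lines that start with "+" appear only in the second.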

Tags:

Spark
