Hadoop Pseudo-Distributed Mode

1. Download, upload, extract, and configure environment variables

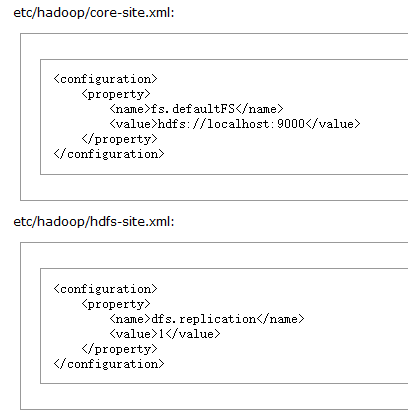
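The function below is a sketch of the environment-variable part of this step. The name `add_hadoop_env` is hypothetical. It appends `HADOOP_HOME` and the `PATH` entry to a profile file such as /etc/profile, but only when they are not already there, so running it twice does not duplicate lines. It assumes Hadoop was unpacked to /usr/local/src/hadoop, which is the path the script at the end of this post uses.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add HADOOP_HOME and the Hadoop bin/sbin PATH entries
# to a profile file, skipping any line that is already present.
add_hadoop_env() {
    local profile="$1"                                # e.g. /etc/profile
    local hadoop_home="${2:-/usr/local/src/hadoop}"   # assumed install dir
    touch "$profile"
    if ! grep -q '^export HADOOP_HOME=' "$profile"; then
        echo "export HADOOP_HOME=$hadoop_home" >> "$profile"
    fi
    if ! grep -q 'HADOOP_HOME/sbin' "$profile"; then
        # Single quotes keep $PATH and $HADOOP_HOME unexpanded in the file
        echo 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin' >> "$profile"
    fi
}
```

Typical use is `add_hadoop_env /etc/profile && source /etc/profile`. After that, `hadoop version` should work in the current shell.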

2. Edit the configuration files

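2.1 HDFS address

`$HADOOP_HOME/etc/hadoop/core-site.xml`, where `fs.defaultFS` sets the default file system (the NameNode URI):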

```xml
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
</configuration>
```
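You can also skip the hand edit and write core-site.xml in one go with a here-document. This is a minimal sketch. It uses a hypothetical function name `write_core_site` and assumes the stock file holds nothing else you need, because the function overwrites it. The URI defaults to the hdfs://localhost:9000 used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: (over)write core-site.xml in the given config directory
# with a single fs.defaultFS property.
write_core_site() {
    local conf_dir="$1"                          # e.g. $HADOOP_HOME/etc/hadoop
    local fs_uri="${2:-hdfs://localhost:9000}"   # NameNode URI
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>$fs_uri</value>
    </property>
</configuration>
EOF
}
```

Because the file is rewritten each time, repeated runs always leave exactly one property, unlike appending with sed.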

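2.2 Replication factor

`$HADOOP_HOME/etc/hadoop/hdfs-site.xml`. A single-node setup has only one DataNode, so set `dfs.replication` to 1 (the default is 3):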

```xml
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>
```

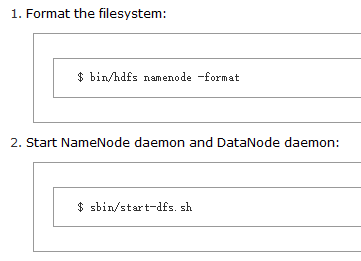
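The script at the end of this post builds these properties with chains of sed one-liners. A more compact approach inserts one whole property just before `</configuration>` and skips it if the name is already present. This is a sketch with a hypothetical helper `set_property`. It assumes GNU sed (for `-i` and `\n` in the replacement) and values that contain no `|` or `&` characters.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add <property> NAME/VALUE to a Hadoop *-site.xml file
# unless a property with that name already exists. Requires GNU sed.
set_property() {
    local file="$1" name="$2" value="$3"
    if grep -q "<name>$name</name>" "$file"; then
        echo "$name already set in $file" >&2
        return 0
    fi
    # Replace the closing tag with the new property block plus the closing tag
    sed -i "s|</configuration>|  <property>\n    <name>$name</name>\n    <value>$value</value>\n  </property>\n</configuration>|" "$file"
}
```

For this section the call would be `set_property "$HADOOP_HOME/etc/hadoop/hdfs-site.xml" dfs.replication 1`.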

3. Start

3.1 Initialize (format the NameNode; this is only needed before the first start)

```bash
hdfs namenode -format
```
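Reformatting generates a new clusterID. A DataNode directory that still carries the old ID then typically fails to start, so it helps to format only once. The sketch below assumes the default metadata location, `/tmp/hadoop-<user>/dfs/name`. That default applies here because this post sets neither `hadoop.tmp.dir` nor `dfs.namenode.name.dir`. The sketch treats a `current/VERSION` file in that directory as "already formatted". The function names are hypothetical. `-nonInteractive` is an option of `hdfs namenode -format` that aborts instead of prompting when the directory already exists.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the NameNode metadata dir looks formatted.
namenode_formatted() {
    local name_dir="${1:-/tmp/hadoop-$(whoami)/dfs/name}"   # assumed default
    [ -f "$name_dir/current/VERSION" ]
}

# Hypothetical wrapper: format only when no metadata exists yet.
format_once() {
    local name_dir="${1:-/tmp/hadoop-$(whoami)/dfs/name}"
    if namenode_formatted "$name_dir"; then
        echo "already formatted: $name_dir (skipping)"
    else
        hdfs namenode -format -nonInteractive
    fi
}
```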

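3.2 Start the services

start-dfs.sh starts the daemons by logging in to localhost over SSH, so passwordless SSH must already work (the script below sets it up).

```bash
start-dfs.sh
```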

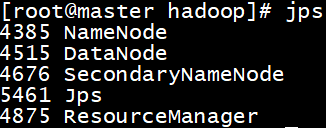
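On the first run, ssh asks "Are you sure you want to continue connecting" for each new host, such as localhost and 0.0.0.0 for the SecondaryNameNode. That is why the script below wraps start-dfs.sh in expect. An alternative, assuming Hadoop 2.x's `slaves.sh` passes `$HADOOP_SSH_OPTS` to ssh, is to disable the host-key prompt in hadoop-env.sh. This is a sketch with a hypothetical function name. It still requires passwordless SSH, and skipping host-key checks is only reasonable on a single lab machine.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make Hadoop's start/stop scripts call ssh without the
# interactive host-key confirmation. Appends the line only once.
add_ssh_opts() {
    local env_file="$1"   # e.g. $HADOOP_HOME/etc/hadoop/hadoop-env.sh
    local line='export HADOOP_SSH_OPTS="-o StrictHostKeyChecking=no"'
    grep -qxF "$line" "$env_file" || echo "$line" >> "$env_file"
}
```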

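3.3 Check the daemons

Run jps. For HDFS the list should include NameNode, DataNode, and SecondaryNameNode.

```bash
jps
```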
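To check the jps output automatically, for example at the end of a script, you can parse the second column, since jps prints one `<pid> <name>` pair per line. This is a sketch with a hypothetical function name `check_hdfs_daemons`. It matches names exactly so that "NameNode" is not satisfied by "SecondaryNameNode".

```bash
#!/usr/bin/env bash
# Hypothetical helper: report which HDFS daemons are missing from jps output.
# Returns 0 if all three are present, 1 otherwise.
check_hdfs_daemons() {
    local jps_output="$1" missing=0 d
    for d in NameNode DataNode SecondaryNameNode; do
        if ! printf '%s\n' "$jps_output" | awk '{print $2}' | grep -qx "$d"; then
            echo "missing: $d"
            missing=1
        fi
    done
    return "$missing"
}
```

Usage: `check_hdfs_daemons "$(jps)" || echo "check the logs under \$HADOOP_HOME/logs"`.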

Script

The following script automates all of the steps above, including the passwordless SSH setup.


```bash
#!/bin/bash
# Automated pseudo-distributed setup for Hadoop 2.7.6. Run as root.
# Expects hadoop-2.7.6.tar.gz and the tcl/expect RPMs in /opt/software
# and a JDK unpacked at /usr/local/src/java.

# Install expect (and its dependency tcl) if it is missing
if ! test -e /usr/bin/expect; then
    cd /opt/software/
    rpm -i /opt/software/tcl-8.4.13-4.el5.x86_64.rpm
    rpm -i /opt/software/expect-5.43.0-5.1.x86_64.rpm
fi

# Generate a new RSA key pair (WARNING: deletes any existing /root/.ssh)
test -d /root/.ssh && rm -rf /root/.ssh
expect <<ooff
set timeout 60
spawn ssh-keygen -t rsa
expect {
    "Enter file in which to save the key" {send "\r"; exp_continue}
    "Enter passphrase (empty for no passphrase):" {send "\r"; exp_continue}
    "Enter same passphrase again:" {send "\r"}
}
expect eof
ooff

# Passwordless SSH to this host: authorize our own public key
cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys
chmod 600 /root/.ssh/authorized_keys

# Clean up any previous run: stop the daemons, answering "yes" to
# first-time host-key prompts (Tcl strings need double quotes, not single)
for script in stop-dfs.sh stop-yarn.sh; do
expect <<ooff
set timeout 60
spawn $script
expect {
    "continue connecting" {send "yes\r"; exp_continue}
    eof
}
ooff
done
rm -rf /usr/local/src/hadoop*
sed -i '/^export HADOOP_HOME=.*/d' /etc/profile
sed -i '/^export PATH=\$PATH:\$HADOOP_HOME.*/d' /etc/profile
rm -rf /root/input

# Unpack Hadoop and rename the directory
tar -zxf /opt/software/hadoop-2.7.6.tar.gz -C /usr/local/src
mv /usr/local/src/hadoop-2.7.6 /usr/local/src/hadoop

# Add Hadoop to the global environment
echo 'export HADOOP_HOME=/usr/local/src/hadoop' >> /etc/profile
echo 'export PATH=$PATH:$HADOOP_HOME/bin/:$HADOOP_HOME/sbin/' >> /etc/profile
source /etc/profile

conf=/usr/local/src/hadoop/etc/hadoop

# Tell Hadoop where Java is: replace the default JAVA_HOME line in hadoop-env.sh
sed -i '/# The java implementation to use./,/# The jsvc implementation to use. Jsvc is required to run secure datanodes/{/export JAVA_HOME=${JAVA_HOME}/d}' "$conf/hadoop-env.sh"
sed -i '/# The java implementation to use./aexport JAVA_HOME=/usr/local/src/java/' "$conf/hadoop-env.sh"

# core-site.xml: default file system (NameNode address)
sed -i '/<configuration>/a<property>' "$conf/core-site.xml"
sed -i '/<property>/a<name>fs.defaultFS</name>' "$conf/core-site.xml"
sed -i '/<name>fs.defaultFS/a<value>hdfs://localhost:9000</value>' "$conf/core-site.xml"
sed -i '/<value>hdfs:\/\/localhost:9000<\/value>/a</property>' "$conf/core-site.xml"

# hdfs-site.xml: replication factor 1 (single DataNode)
sed -i '/<configuration>/a<property>' "$conf/hdfs-site.xml"
sed -i '/<property>/a<name>dfs.replication</name>' "$conf/hdfs-site.xml"
sed -i '/<name>dfs.replication<\/name>/a<value>1</value>' "$conf/hdfs-site.xml"
sed -i '/<value>1<\/value>/a</property>' "$conf/hdfs-site.xml"

# Format the NameNode, confirming a re-format if asked
expect <<ooff
set timeout 60
spawn hdfs namenode -format
expect {
    "Re-format filesystem in Storage Directory" {send "y\r"; exp_continue}
    eof
}
ooff

# Start HDFS and YARN, answering "yes" to each first-time host-key prompt
# (for example localhost and 0.0.0.0)
for script in start-dfs.sh start-yarn.sh; do
expect <<ooff
set timeout 120
spawn $script
expect {
    "continue connecting" {send "yes\r"; exp_continue}
    eof
}
ooff
done

jps

# Optional smoke test. With fs.defaultFS=hdfs://localhost:9000 the wordcount
# paths are HDFS paths, so the input must be uploaded to HDFS first:
#mkdir -p /root/input
#printf 'Hello World\nHello Hadoop\nHello Java\n' > /root/input/data.txt
#hdfs dfs -mkdir -p /root/input
#hdfs dfs -put /root/input/data.txt /root/input/
#hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar wordcount /root/input/data.txt /root/output
```
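The script assumes several files are already in place and never checks for them. A missing tarball or JDK therefore only shows up as errors halfway through the run. A small pre-flight check could run first. This is a sketch with a hypothetical function name `preflight`, using the same paths and file names as the script. Like the script, it only requires the RPMs when /usr/bin/expect is absent.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for the install script above.
# Prints each missing prerequisite and returns 1 if anything is missing.
preflight() {
    local sw_dir="${1:-/opt/software}"
    local java_home="${2:-/usr/local/src/java}"
    local status=0 rpm
    if [ ! -f "$sw_dir/hadoop-2.7.6.tar.gz" ]; then
        echo "missing: $sw_dir/hadoop-2.7.6.tar.gz"; status=1
    fi
    if [ ! -x "$java_home/bin/java" ]; then
        echo "missing: $java_home/bin/java"; status=1
    fi
    # The RPMs are only needed when expect is not installed yet
    if [ ! -e /usr/bin/expect ]; then
        for rpm in tcl-8.4.13-4.el5.x86_64.rpm expect-5.43.0-5.1.x86_64.rpm; do
            if [ ! -f "$sw_dir/$rpm" ]; then
                echo "missing: $sw_dir/$rpm"; status=1
            fi
        done
    fi
    return "$status"
}
```

Running `preflight || exit 1` at the top of the script would stop it before it deletes /root/.ssh or the old installation.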

Download link (Oracle Linux 5 package repository):

https://yum.oracle.com/repo/OracleLinux/OL5/latest/x86_64/index.html

Reference (official Apache Hadoop documentation):

https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/SingleCluster.html
