HOG Feature Extraction: Principles and Implementation

HOG Feature Extraction

1. Background:

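HOG stands for Histogram of Oriented Gradients. It is a feature descriptor used for object detection in computer vision and image processing. It can be combined with a classifier, such as the SVM in OpenCV, for image recognition.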

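2. Feature extraction pipeline:

HOG feature extraction can be divided into six steps:

- choosing the detection window;
- normalizing the image;
- computing the gradients;
- building the histograms;
- normalizing the gradient histograms;
- producing the HOG feature vector.

Each step is described below.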

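(1) Detection window:

HOG partitions the image into windows and blocks, and performs its computations on the pixel values of each region in units of cells. This section first introduces windows, blocks and cells, and then explains how they relate to one another.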

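Window: the image is covered by windows of equal size, and the window slides across the image.

Block: each window is divided into blocks of equal size, and the block slides within the window.

Cell: each window is divided into cells of equal size. The cell is the basic unit of feature extraction, and cells stay fixed.

Image -> detection window (win) -> block -> cell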

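The figure below illustrates this partition.

In the figure:

- black lines mark the division into windows;
- blue lines mark the division into blocks;
- yellow lines mark the division into cells.

In the detection-window step, the image is first divided into windows. Each window is then divided into blocks, and each block is divided into cells. A window moves by the window stride and a block moves by the block stride; the calculation in step (6) below makes this concrete.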
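The number of positions a sliding window (or block) can take follows from integer division. The sketch below is a minimal C++ version of that count. `slidingPositions` and `GridCount` are hypothetical names, not OpenCV functions. The formula is the one used for block positions in `getDescriptorSize()` in the source listing further down.

```cpp
#include <cassert>

// Hypothetical helper (not an OpenCV API): how many positions a window of size
// (winW, winH) can take when it slides over a region of size (imgW, imgH) with
// step (strideW, strideH). Passing a window as the "region" and a block as the
// "window" gives the number of block positions inside a window instead.
struct GridCount {
    int cols;  // positions along x
    int rows;  // positions along y
};

GridCount slidingPositions(int imgW, int imgH, int winW, int winH,
                           int strideW, int strideH) {
    assert(winW <= imgW && winH <= imgH);
    assert(strideW > 0 && strideH > 0);
    // Integer division drops a trailing partial step, so the window never
    // extends past the region's border.
    return GridCount{(imgW - winW) / strideW + 1, (imgH - winH) / strideH + 1};
}
```

Two examples:

- For a 640×480 image, a 64×128 window and an 8×8 stride, the count is 73 × 45 window positions.
- For 16×16 blocks with stride 8 inside a 64×128 window, it is 7 × 15 block positions.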

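(2) Image normalization:

Normalization covers both gamma-space and color-space normalization. To reduce the influence of illumination, the whole image is normalized first, for example with min-max normalization $y=(x-\text{MinValue})/(\text{MaxValue}-\text{MinValue})$. Normalization also keeps local surface exposure from carrying too much weight in the image's texture strength. The gamma compression formula is $I(x,y)=I(x,y)^{\gamma}$, where γ is chosen according to the desired effect, e.g. γ = 1/2.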
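A minimal C++ sketch of the two formulas, applied to a flat array of gray values. The name `normalizeAndGamma` is illustrative only. OpenCV's `gammaCorrection` option, visible in the source listing below, works differently: it takes the square root of the raw 0-255 values through a lookup table and skips min-max normalization.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Min-max normalization y = (x - min) / (max - min), followed by gamma
// compression I' = I^gamma (gamma = 0.5 is the square root).
std::vector<float> normalizeAndGamma(const std::vector<float>& pixels, float gamma) {
    assert(!pixels.empty() && gamma > 0.f);
    auto mm = std::minmax_element(pixels.begin(), pixels.end());
    const float minV = *mm.first;
    const float maxV = *mm.second;
    const float range = maxV - minV;
    std::vector<float> out(pixels.size());
    for (std::size_t i = 0; i < pixels.size(); ++i) {
        // A constant image has range 0; map it to 0 instead of dividing by zero.
        float y = range > 0.f ? (pixels[i] - minV) / range : 0.f;
        out[i] = std::pow(y, gamma);
    }
    return out;
}
```

With the input {0, 64, 256} and γ = 0.5, the result is {0, 0.5, 1}.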

(3) Computing gradients:

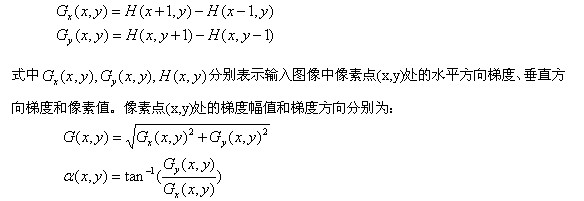

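Compute the gradients of the image along the horizontal (x) and vertical (y) directions, and from them the gradient magnitude and orientation. The formulas are shown in the figure; written out, they are:

$$G_x(x,y)=I(x+1,y)-I(x-1,y),\qquad G_y(x,y)=I(x,y+1)-I(x,y-1)$$

$$G(x,y)=\sqrt{G_x(x,y)^2+G_y(x,y)^2},\qquad \alpha(x,y)=\arctan\frac{G_y(x,y)}{G_x(x,y)}$$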

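In practice, the x-gradient is usually obtained by convolving the image with the kernel [-1, 0, 1], and the y-gradient by convolving with its transpose [-1, 0, 1]^T. The gradient magnitude and orientation are then computed with the formulas above.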
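The per-pixel gradient computation can be sketched in C++ as follows, assuming a row-major grayscale image stored in a `std::vector<float>`:

- `Gradient` and `gradientAt` are illustrative names, not OpenCV APIs.
- Border pixels are rejected for brevity; OpenCV instead reflects the border (`BORDER_REFLECT_101`).
- The orientation is folded into [0°, 180°), matching the unsigned orientation used in step (4).

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Gradient {
    float magnitude;
    float angleDeg;  // unsigned orientation, 0 <= angleDeg < 180
};

// Central differences: [-1, 0, 1] along x and its transpose along y.
Gradient gradientAt(const std::vector<float>& img, int width, int height, int x, int y) {
    assert(static_cast<int>(img.size()) == width * height);
    assert(x > 0 && x < width - 1 && y > 0 && y < height - 1);  // interior pixels only
    float gx = img[y * width + (x + 1)] - img[y * width + (x - 1)];
    float gy = img[(y + 1) * width + x] - img[(y - 1) * width + x];
    const float kPi = 3.14159265358979f;
    float angle = std::atan2(gy, gx) * 180.f / kPi;  // range (-180, 180]
    if (angle < 0.f) angle += 180.f;                 // opposite directions share a bin
    if (angle >= 180.f) angle -= 180.f;
    return Gradient{std::sqrt(gx * gx + gy * gy), angle};
}
```

A horizontal ramp gives an orientation of 0°. An intensity that decreases downwards gives −90°, which is folded to 90°.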

(4) Building the gradient histogram:

HOG builds the histogram of oriented gradients within each cell:

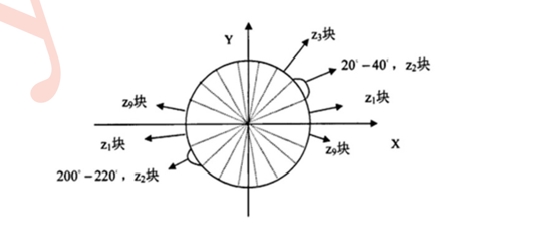

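The number of bins (the number of intervals) determines how orientations are divided. Usually 9 bins are used, which splits the gradient orientation into 9 intervals.

(Note on the intervals: some bloggers divide 360°. According to the OpenCV book I consulted, the division should indeed be over 180°, because the values of arctan span a range of 180°.)

For example, suppose a cell is 6×6. For each of its 36 pixels, we first determine which interval the pixel's gradient orientation falls into. We then add a vote to that interval, weighted according to the pixel's gradient magnitude and orientation. (The weighting can be linear, square-root, or some other more sophisticated scheme.)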
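The simplest form of this vote is hard assignment: each pixel adds its gradient magnitude to the one bin its orientation falls into. A minimal C++ sketch, assuming 9 bins of 20° over [0°, 180°) (`cellHistogram` is an illustrative name). The interpolated vote described in section 3 refines this scheme.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

constexpr int kBins = 9;

// Hard-assignment histogram of one cell: magnitude-weighted votes per orientation bin.
std::array<float, kBins> cellHistogram(const std::vector<float>& magnitudes,
                                       const std::vector<float>& anglesDeg) {
    assert(magnitudes.size() == anglesDeg.size());
    const float binWidth = 180.f / kBins;  // 20 degrees per bin
    std::array<float, kBins> hist{};       // zero-initialized
    for (std::size_t i = 0; i < magnitudes.size(); ++i) {
        assert(anglesDeg[i] >= 0.f && anglesDeg[i] < 180.f);
        int bin = static_cast<int>(anglesDeg[i] / binWidth);
        if (bin >= kBins) bin = kBins - 1;  // guard against rounding just below 180
        hist[bin] += magnitudes[i];
    }
    return hist;
}
```

For example, pixels at 5°, 25° and 179° with magnitudes 1, 2 and 3 vote into bins 0, 1 and 8 respectively.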

The figure below shows how the angles are divided into 9 intervals.

(5) Normalizing the cell histograms within each block:

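Reason: local changes in illumination and in foreground-background contrast make the gradient strength vary over a very wide range, so normalization is needed. Commonly used normalization functions include L2-norm, L2-Hys, L1-norm and L1-sqrt. Normalization further compresses the effects of lighting, shadows and edges.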
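L2-Hys can be sketched as follows, assuming the usual definition: L2-normalize, clip each entry, then L2-normalize again. The clipping threshold 0.2 matches the default `L2HysThreshold` of OpenCV's `HOGDescriptor`. The small epsilon terms OpenCV adds differ from the one used here, so treat `eps` as an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// L2-Hys normalization of one block histogram, in place.
void l2HysNormalize(std::vector<float>& v, float clip = 0.2f, float eps = 1e-3f) {
    assert(clip > 0.f && eps > 0.f);
    auto l2norm = [&v]() {
        double sum = 0.0;
        for (float x : v) sum += static_cast<double>(x) * x;
        return std::sqrt(sum);
    };
    // Step 1: L2 normalization (eps avoids division by zero for empty blocks).
    const double n1 = l2norm() + eps;
    // Step 2: clip large entries (histogram entries are non-negative).
    for (float& x : v) x = std::min(static_cast<float>(x / n1), clip);
    // Step 3: renormalize after clipping.
    const double n2 = l2norm() + eps;
    for (float& x : v) x = static_cast<float>(x / n2);
}
```

Clipping limits the influence of a few very strong gradients. For the vector {3, 4}, both entries are clipped to 0.2 and end up equal (about 0.705 each).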

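(6) Generating the HOG feature vector:

Finally, the histograms of all blocks are concatenated into the feature vector. Take a 64×128 window as an example:

- Every 8×8 pixels form a cell, and every 2×2 cells form a block, so each block has 9×4 = 36 features.
- With a block stride of 8 pixels, there are (64−16)/8+1 = 7 block positions horizontally and (128−16)/8+1 = 15 vertically.

A 64×128 window therefore has 36×7×15 = 3780 features. In the code, one HOG descriptor corresponds to one detection window.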
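The same arithmetic can be written as a small C++ function that mirrors `HOGDescriptor::getDescriptorSize()` from the source listing below. `HogParams` and `descriptorSize` are illustrative names.

```cpp
#include <cassert>

struct HogParams {
    int winW, winH;        // detection window
    int blockW, blockH;    // block
    int strideW, strideH;  // block stride
    int cellW, cellH;      // cell
    int nbins;             // orientation bins
};

// Features per block times the number of block positions in the window.
long descriptorSize(const HogParams& p) {
    // A block must contain a whole number of cells...
    assert(p.blockW % p.cellW == 0 && p.blockH % p.cellH == 0);
    // ...and must move through the window in a whole number of strides.
    assert((p.winW - p.blockW) % p.strideW == 0 && (p.winH - p.blockH) % p.strideH == 0);
    long perBlock = static_cast<long>(p.nbins) * (p.blockW / p.cellW) * (p.blockH / p.cellH);
    long blocksX = (p.winW - p.blockW) / p.strideW + 1;
    long blocksY = (p.winH - p.blockH) / p.strideH + 1;
    return perBlock * blocksX * blocksY;
}
```

With the default parameters `HogParams{64, 128, 16, 16, 8, 8, 8, 8, 9}`, this returns 36 × 7 × 15 = 3780.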

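3. HOG weighting:

HOG uses trilinear interpolation. Weighting is applied in two places:

- when building the orientation histogram;
- when distributing each pixel's vote among the cells of a block.

Each is described below.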

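When building the orientation histogram, suppose a pixel's gradient orientation is 25°. It lies between the centers of the 0-20° bin (center 10°) and the 20-40° bin (center 30°). With linear weighting, the pixel's magnitude is split between these two neighboring bins:

- the 20-40° bin receives weight (25−10)/20 = 0.75;
- the 0-20° bin receives weight (30−25)/20 = 0.25.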
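A C++ sketch of this two-bin split. `interpolateBins` and `BinVote` are illustrative names. The arithmetic follows the same convention as OpenCV's `computeGradient()` in the source listing below (`angle*angleScale - 0.5f`, then floor), where bin centers sit at 10°, 30°, … The bins also wrap around, because 0° and 180° describe the same orientation.

```cpp
#include <cassert>
#include <cmath>

struct BinVote {
    int lowBin, highBin;          // the two bins sharing the vote
    float lowWeight, highWeight;  // sum to 1; multiply by the magnitude to vote
};

// Linear interpolation between the two orientation bins nearest to angleDeg.
BinVote interpolateBins(float angleDeg, int nbins = 9) {
    assert(angleDeg >= 0.f && angleDeg < 180.f && nbins > 0);
    const float binWidth = 180.f / nbins;
    // Position in units of bins, measured from the center of bin 0.
    float pos = angleDeg / binWidth - 0.5f;
    int low = static_cast<int>(std::floor(pos));
    float frac = pos - low;  // how far past the lower bin center, in [0, 1)
    int high = low + 1;
    if (low < 0) low += nbins;  // e.g. 5 degrees also votes into the last bin
    if (high >= nbins) high -= nbins;
    return BinVote{low, high, 1.f - frac, frac};
}
```

For 25°, this gives bin 0 a weight of 0.25 and bin 1 a weight of 0.75, matching the example above. For 5°, bin 0 receives 0.75 and the wrapped-around bin 8 (center 170°) receives 0.25.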

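In addition, collecting the orientation histograms within a block relies on an assumption: a pixel projects only onto the cell it belongs to and has no effect on the surrounding cells. For pixels at cell boundaries, and given that blocks slide, this assumption is rather weak, because such pixels are related to all of their neighboring cells.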

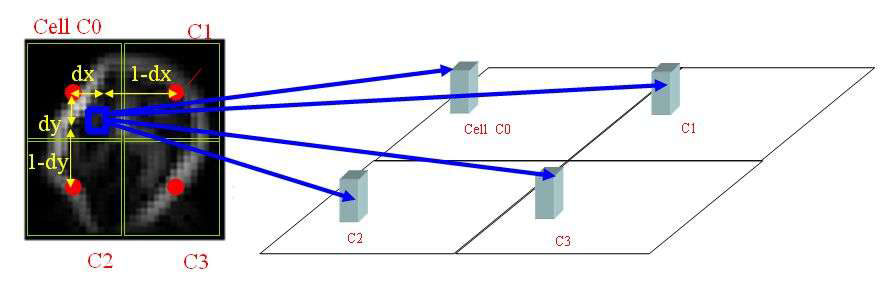

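In the figure, the square in the left image marks the pixel being processed; it lies in cell C0 of the block. Under the assumption that a pixel projects only onto its own cell, it would affect only C0, and its contributions to C1, C2 and C3 would be ignored.

To compensate, linear interpolation is applied at each pixel position. Weights are computed from the distances between the pixel and the centers of the four cells (the four dots in the figure). The pixel's gradient magnitude is then accumulated, with these weights, into the corresponding histograms of C0, C1, C2 and C3.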
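A C++ sketch of the spatial (bilinear) part for a block of 2×2 cells:

- It uses the cell coordinate `(px + 0.5)/cellW - 0.5` from OpenCV's `HOGCache::init` (source listing below).
- A neighbor that would fall outside the block is simply dropped, as in that code.
- OpenCV additionally multiplies each vote by a Gaussian weight, which is left out here.
- `cellWeights` is an illustrative name, and the C0..C3 ordering is an assumption for readability.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Weights of pixel (px, py) inside a 2x2-cell block for the cells
// C0 (top-left), C1 (top-right), C2 (bottom-left), C3 (bottom-right).
std::array<float, 4> cellWeights(int px, int py, int cellW, int cellH) {
    assert(px >= 0 && px < 2 * cellW && py >= 0 && py < 2 * cellH);
    // Continuous cell coordinate: 0 at the center of cell column 0, 1 at column 1.
    float cx = (px + 0.5f) / cellW - 0.5f;
    float cy = (py + 0.5f) / cellH - 0.5f;
    int x0 = static_cast<int>(std::floor(cx));
    int y0 = static_cast<int>(std::floor(cy));
    float fx = cx - x0, fy = cy - y0;
    // Per-axis weights for cell column/row 0 and 1; indices -1 and 2 lie outside the block.
    float wx[2] = {0.f, 0.f}, wy[2] = {0.f, 0.f};
    auto add = [](float* w, int idx, float value) {
        if (idx == 0 || idx == 1) w[idx] += value;
    };
    add(wx, x0, 1.f - fx);
    add(wx, x0 + 1, fx);
    add(wy, y0, 1.f - fy);
    add(wy, y0 + 1, fy);
    return {wx[0] * wy[0], wx[1] * wy[0], wx[0] * wy[1], wx[1] * wy[1]};
}
```

The behavior depends on where the pixel sits:

- A pixel between the four cell centers, e.g. (4, 4) with 8×8 cells, splits its vote so the four weights sum to 1.
- A corner pixel such as (0, 0) only keeps the share that falls on C0.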

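Taken together, trilinear interpolation is performed over two position coordinates (x, y) and one orientation coordinate (θ). The key questions are which bins receive a weighted vote and how large each weight is. The gradient magnitude of a pixel is distributed over the 4 cells and, in each cell, over the 2 bins adjacent to the pixel's gradient orientation. The histogram vector is updated according to formula (7), where the x and y axes represent the pixel's spatial position and the z axis represents its gradient orientation (i.e. θ).

For a pixel (x, y), let:

- ω be its gradient magnitude and z its gradient orientation;
- z1 and z2 be the centers of the two nearest bins;
- x1, x2 and y1, y2 be the coordinates of the centers of the neighboring cells.

Then x2 = x1 + bx, y2 = y1 + by and z2 = z1 + bz. The bandwidths of the histogram h along x, y and z are b = [bx, by, bz], with bx = by = 8 and bz = 180°/9 = 20°.

Figure 6 illustrates the trilinear interpolation of the orientation histogram vector; the square in the left image is the pixel being processed. For every cell of the block, the weights of the 2 bins adjacent to the pixel's orientation (8 histogram bins in total) are computed. The pixel's gradient magnitude is accumulated into these bins with those weights, forming the block's orientation histogram [5]:

$$
\begin{aligned}
h(x_1,y_1,z_1) &\leftarrow h(x_1,y_1,z_1)+\omega\left(1-\frac{x-x_1}{b_x}\right)\left(1-\frac{y-y_1}{b_y}\right)\left(1-\frac{z-z_1}{b_z}\right)\\
h(x_1,y_1,z_2) &\leftarrow h(x_1,y_1,z_2)+\omega\left(1-\frac{x-x_1}{b_x}\right)\left(1-\frac{y-y_1}{b_y}\right)\frac{z-z_1}{b_z}\\
h(x_1,y_2,z_1) &\leftarrow h(x_1,y_2,z_1)+\omega\left(1-\frac{x-x_1}{b_x}\right)\frac{y-y_1}{b_y}\left(1-\frac{z-z_1}{b_z}\right)\\
h(x_2,y_1,z_1) &\leftarrow h(x_2,y_1,z_1)+\omega\,\frac{x-x_1}{b_x}\left(1-\frac{y-y_1}{b_y}\right)\left(1-\frac{z-z_1}{b_z}\right)\\
h(x_1,y_2,z_2) &\leftarrow h(x_1,y_2,z_2)+\omega\left(1-\frac{x-x_1}{b_x}\right)\frac{y-y_1}{b_y}\,\frac{z-z_1}{b_z}\\
h(x_2,y_1,z_2) &\leftarrow h(x_2,y_1,z_2)+\omega\,\frac{x-x_1}{b_x}\left(1-\frac{y-y_1}{b_y}\right)\frac{z-z_1}{b_z}\\
h(x_2,y_2,z_1) &\leftarrow h(x_2,y_2,z_1)+\omega\,\frac{x-x_1}{b_x}\,\frac{y-y_1}{b_y}\left(1-\frac{z-z_1}{b_z}\right)\\
h(x_2,y_2,z_2) &\leftarrow h(x_2,y_2,z_2)+\omega\,\frac{x-x_1}{b_x}\,\frac{y-y_1}{b_y}\,\frac{z-z_1}{b_z}
\end{aligned}
\tag{7}
$$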

Because interpolation is used, the problem caused by overlapping regions is overcome.
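Formula (7) for a single pixel can be sketched in C++ as follows. `trilinearWeights` is an illustrative name; `weights[i][j][k]` receives the amount added to h(x_{i+1}, y_{j+1}, z_{k+1}). To keep the sketch short, it omits two details a full implementation needs:

- wrap-around of the orientation axis;
- dropping of neighbors outside the block.

```cpp
#include <cassert>

// Splits the vote w of one pixel over the 2 x 2 x 2 corners of formula (7).
// (x1, y1) is the lower neighboring cell center, z1 the lower neighboring bin center;
// bx, by, bz are the bandwidths along x, y and z.
void trilinearWeights(float w, float x, float y, float z,
                      float x1, float y1, float z1,
                      float bx, float by, float bz,
                      float weights[2][2][2]) {
    const float tx = (x - x1) / bx, ty = (y - y1) / by, tz = (z - z1) / bz;
    assert(tx >= 0.f && tx <= 1.f && ty >= 0.f && ty <= 1.f && tz >= 0.f && tz <= 1.f);
    // Index 0 is the lower neighbor (weight 1 - t), index 1 the upper one (weight t).
    const float fx[2] = {1.f - tx, tx};
    const float fy[2] = {1.f - ty, ty};
    const float fz[2] = {1.f - tz, tz};
    for (int i = 0; i < 2; ++i)
        for (int j = 0; j < 2; ++j)
            for (int k = 0; k < 2; ++k)
                weights[i][j][k] = w * fx[i] * fy[j] * fz[k];
}
```

Because the three factor pairs each sum to 1, the eight shares always add up to ω. For ω = 2, a pixel a quarter of the way from x1 to x2, exactly on y1, and at z = 25° between bins centered at 10° and 30°:

- h(x1, y1, z2) receives 2 × 0.75 × 0.75 = 1.125;
- the eight shares total 2.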

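4. Advantages and disadvantages of HOG:

Advantages:

(1) HOG represents the structure of edges, so it can describe local shape information.

(2) Quantizing the position and orientation spaces suppresses, to some extent, the effects of translation and rotation.

(3) Normalization offsets changes in illumination.

Disadvantages:

(1) Generating the descriptor is a lengthy process, and the descriptor has a high dimensionality.

(2) It has difficulty handling occlusion.

(3) It is sensitive to noise.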

Using the HOG functions:

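```cpp
// Create the HOG descriptor. Arguments: window size (64,128), block size (16,16),
// block stride (8,8), cell size (8,8), number of histogram bins 9.
HOGDescriptor hog(Size(64,128), Size(16,16), Size(8,8), Size(8,8), 9);
std::vector<float> descriptors;

// Arguments: input image (trainImg, a cv::Mat loaded elsewhere), output HOG descriptor,
// window stride, and padding. The window stride and padding can be omitted.
hog.compute(trainImg, descriptors, Size(64, 48), Size(0, 0));
```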

HOG+SVM pedestrian detection demo:

```cpp
#include <iostream>
#include <fstream>
#include <cfloat>
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <opencv2/objdetect/objdetect.hpp>
#include <opencv2/ml/ml.hpp>

using namespace cv;
using namespace std;

#define PosSamNO 1126 // number of positive samples
#define NegSamNO 1210 // number of negative samples

// Building the detector for setSVMDetector() needs the SVM's decision_func, which is
// protected, so it can only be reached through a subclass.
// CvSVM is the OpenCV 2.x API (replaced by cv::ml::SVM in OpenCV 3).
class MySVM : public CvSVM
{
public:
    // Alpha array of the SVM decision function
    double * get_alpha_vector()
    {
        return this->decision_func->alpha;
    }

    // rho parameter (offset) of the SVM decision function
    float get_rho()
    {
        return this->decision_func->rho;
    }
};

int main()
{
    // Window (64,128), block (16,16), block stride (8,8), cell (8,8), 9 histogram bins
    HOGDescriptor hog(Size(64,128),Size(16,16),Size(8,8),Size(8,8),9);
    int DescriptorDim = 0; // HOG descriptor dimension, set by window, block and cell sizes and the number of bins
    MySVM svm;

    string ImgName;             // image file name
    ifstream finPos("pos.txt"); // list of positive sample file names
    ifstream finNeg("neg.txt"); // list of negative sample file names

    Mat sampleFeatureMat; // feature vectors of all samples: one row per sample, one column per descriptor dimension
    Mat sampleLabelMat;   // class labels: one row per sample, 1 column; 1 = person, -1 = no person

    // Read the positive samples one by one and compute their HOG descriptors
    for(int num=0; num<PosSamNO && getline(finPos,ImgName); num++)
    {
        ImgName = "E:\\INRIAPerson\\Posjpg64_128\\" + ImgName; // prepend the positive sample directory
        Mat src = imread(ImgName);
        vector<float> descriptors;
        hog.compute(src,descriptors,Size(8,8)); // window stride (8,8)

        // The feature matrix can only be allocated once the descriptor dimension is
        // known, so both matrices are initialized with the first sample
        if( 0 == num )
        {
            DescriptorDim = descriptors.size();
            sampleFeatureMat = Mat::zeros(PosSamNO+NegSamNO, DescriptorDim, CV_32FC1);
            sampleLabelMat = Mat::zeros(PosSamNO+NegSamNO, 1, CV_32FC1);
        }

        // Copy the descriptor into row num of sampleFeatureMat
        for(int i=0; i<DescriptorDim; i++)
            sampleFeatureMat.at<float>(num,i) = descriptors[i];
        sampleLabelMat.at<float>(num,0) = 1; // positive sample: person
    }

    // Negative samples are processed in almost the same way
    for(int num=0; num<NegSamNO && getline(finNeg,ImgName); num++)
    {
        ImgName = "E:\\INRIAPerson\\Negjpg_undesign\\" + ImgName; // prepend the negative sample directory
        Mat src = imread(ImgName);
        vector<float> descriptors;
        hog.compute(src,descriptors,Size(8,8)); // window stride (8,8)

        // Copy the descriptor into row PosSamNO+num of sampleFeatureMat
        for(int i=0; i<DescriptorDim; i++)
            sampleFeatureMat.at<float>(num+PosSamNO,i) = descriptors[i];
        sampleLabelMat.at<float>(num+PosSamNO,0) = -1; // negative sample: no person
    }

    // Write the HOG feature matrix of all samples to a file
    ofstream fout("SampleFeatureMat.txt");
    for(int i=0; i<PosSamNO+NegSamNO; i++)
    {
        fout<<i<<endl;
        for(int j=0; j<DescriptorDim; j++)
            fout<<sampleFeatureMat.at<float>(i,j)<<" ";
        fout<<endl;
    }

    // Stop after 1000 iterations or when the error drops below FLT_EPSILON
    CvTermCriteria criteria = cvTermCriteria(CV_TERMCRIT_ITER+CV_TERMCRIT_EPS, 1000, FLT_EPSILON);
    // SVM parameters: type C_SVC, linear kernel, slack parameter C = 0.01
    CvSVMParams param(CvSVM::C_SVC, CvSVM::LINEAR, 0, 1, 0, 0.01, 0, 0, 0, criteria);
    cout<<"Training the SVM classifier"<<endl;
    svm.train(sampleFeatureMat, sampleLabelMat, Mat(), Mat(), param);
    cout<<"Training finished"<<endl;
    svm.save("SVM_HOG.xml"); // save the trained model as an XML file

    DescriptorDim = svm.get_var_count(); // feature dimension, i.e. the HOG descriptor dimension
    cout<<"Descriptor dimension: "<<DescriptorDim<<endl;
    int supportVectorNum = svm.get_support_vector_count();
    cout<<"Number of support vectors: "<<supportVectorNum<<endl;

    Mat alphaMat = Mat::zeros(1, supportVectorNum, CV_32FC1);                    // one alpha per support vector
    Mat supportVectorMat = Mat::zeros(supportVectorNum, DescriptorDim, CV_32FC1); // one support vector per row
    Mat resultMat = Mat::zeros(1, DescriptorDim, CV_32FC1);                      // alphaMat * supportVectorMat

    // Copy the supportVectorNum support vectors (DescriptorDim values each) into supportVectorMat
    for(int i=0; i<supportVectorNum; i++)
    {
        const float * pSVData = svm.get_support_vector(i); // pointer to the i-th support vector
        for(int j=0; j<DescriptorDim; j++)
            supportVectorMat.at<float>(i,j) = pSVData[j];
    }

    // Copy the alpha vector of the decision function into alphaMat
    double * pAlphaData = svm.get_alpha_vector();
    for(int i=0; i<supportVectorNum; i++)
    {
        alphaMat.at<float>(0,i) = pAlphaData[i];
    }

    // resultMat = -(alphaMat * supportVectorMat).
    // svm.predict evaluates alpha*sv*x - rho and treats a negative value as positive,
    // while the HOG detector evaluates rho - alpha*sv*x and treats a positive value
    // as positive, so the weights are negated before being stored.
    resultMat = -1 * alphaMat * supportVectorMat;

    // Build the detector accepted by setSVMDetector(const vector<float>& detector)
    vector<float> myDetector;
    for(int i=0; i<DescriptorDim; i++)
    {
        myDetector.push_back(resultMat.at<float>(0,i));
    }
    myDetector.push_back(svm.get_rho()); // append the offset rho last
    cout<<"Detector dimension: "<<myDetector.size()<<endl;

    // Replace the default detector of HOGDescriptor with the trained one
    HOGDescriptor myHOG;
    myHOG.setSVMDetector(myDetector);

    // Save the detector parameters to a file
    ofstream foutDetector("HOGDetectorParagram.txt");
    for(size_t i=0; i<myDetector.size(); i++)
        foutDetector<<myDetector[i]<<endl;

    // Read a test image and run person detection
    Mat src = imread("test1.png");
    vector<Rect> found, found_filtered; // detected rectangles
    cout<<"Running multi-scale HOG person detection"<<endl;
    myHOG.detectMultiScale(src, found, 0, Size(8,8), Size(32,32), 1.05, 2);
    cout<<"Number of rectangles found: "<<found.size()<<endl;

    // Keep every rectangle that is not nested inside another one;
    // for nested rectangles, only the outermost one is kept
    for(size_t i=0; i < found.size(); i++)
    {
        Rect r = found[i];
        size_t j=0;
        for(; j < found.size(); j++)
            if(j != i && (r & found[j]) == r) // r lies inside found[j]: drop r
                break;
        if( j == found.size()) // r is not nested in any other rectangle
            found_filtered.push_back(r);
    }

    // Shrink the rectangles slightly before drawing them
    for(size_t i=0; i<found_filtered.size(); i++)
    {
        Rect r = found_filtered[i];
        r.x += cvRound(r.width*0.1);
        r.width = cvRound(r.width*0.8);
        r.y += cvRound(r.height*0.07);
        r.height = cvRound(r.height*0.8);
        rectangle(src, r.tl(), r.br(), Scalar(255,0,0), 2);
    }

    imwrite("ImgProcessed.jpg",src);
    namedWindow("src",0);
    imshow("src",src);
    waitKey();
}
```
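The key step of the demo is turning the trained linear SVM into the vector passed to `setSVMDetector()`: negated weights followed by rho. The sketch below reproduces that step without OpenCV, so the sign convention can be checked by hand. `buildDetector` and `detectorScore` are illustrative names. The scoring rule assumed here (dot product plus the trailing bias) is how `HOGDescriptor` uses the extra element.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// detector = -(sum_i alpha_i * sv_i), followed by rho as the last element.
std::vector<float> buildDetector(const std::vector<double>& alpha,
                                 const std::vector<std::vector<float>>& supportVectors,
                                 double rho) {
    assert(!supportVectors.empty() && alpha.size() == supportVectors.size());
    const std::size_t dim = supportVectors[0].size();
    std::vector<float> detector(dim, 0.f);
    for (std::size_t i = 0; i < supportVectors.size(); ++i) {
        assert(supportVectors[i].size() == dim);
        for (std::size_t j = 0; j < dim; ++j)
            detector[j] -= static_cast<float>(alpha[i] * supportVectors[i][j]);
    }
    detector.push_back(static_cast<float>(rho));  // bias term goes last
    return detector;
}

// Linear score of one window: bias + dot(weights, features).
// This equals rho - alpha*sv*x, i.e. the negated SVM decision value.
float detectorScore(const std::vector<float>& detector, const std::vector<float>& x) {
    assert(detector.size() == x.size() + 1);
    float s = detector.back();
    for (std::size_t j = 0; j < x.size(); ++j) s += detector[j] * x[j];
    return s;
}
```

With alphas {0.5, 1.0}, support vectors {(1, 0), (0, 2)} and rho = 0.5, the detector is {−0.5, −2, 0.5}. The score of the feature vector (1, 1) is then −2.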

HOG source code:

1 /*M/////////////////////////////////////////////////////////////////////////////////////// 2 // 3 // IMPORTANT: READ BEFORE DOWNLOADING, COPYING, INSTALLING OR USING. 4 // 5 // By downloading, copying, installing or using the software you agree to this license. 6 // If you do not agree to this license, do not download, install, 7 // copy or use the software. 8 // 9 // 10 // License Agreement 11 // For Open Source Computer Vision Library 12 // 13 // Copyright (C) 2000-2008, Intel Corporation, all rights reserved. 14 // Copyright (C) 2009, Willow Garage Inc., all rights reserved. 15 // Third party copyrights are property of their respective owners. 16 // 17 // Redistribution and use in source and binary forms, with or without modification, 18 // are permitted provided that the following conditions are met: 19 // 20 // * Redistribution's of source code must retain the above copyright notice, 21 // this list of conditions and the following disclaimer. 22 // 23 // * Redistribution's in binary form must reproduce the above copyright notice, 24 // this list of conditions and the following disclaimer in the documentation 25 // and/or other materials provided with the distribution. 26 // 27 // * The name of the copyright holders may not be used to endorse or promote products 28 // derived from this software without specific prior written permission. 29 // 30 // This software is provided by the copyright holders and contributors "as is" and 31 // any express or implied warranties, including, but not limited to, the implied 32 // warranties of merchantability and fitness for a particular purpose are disclaimed. 
33 // In no event shall the Intel Corporation or contributors be liable for any direct, 34 // indirect, incidental, special, exemplary, or consequential damages 35 // (including, but not limited to, procurement of substitute goods or services; 36 // loss of use, data, or profits; or business interruption) however caused 37 // and on any theory of liability, whether in contract, strict liability, 38 // or tort (including negligence or otherwise) arising in any way out of 39 // the use of this software, even if advised of the possibility of such damage. 40 // 41 //M*/ 42 43 #include "precomp.hpp" 44 #include <iterator> 45 #ifdef HAVE_IPP 46 #include "ipp.h" 47 #endif 48 /****************************************************************************************\ 49 The code below is implementation of HOG (Histogram-of-Oriented Gradients) 50 descriptor and object detection, introduced by Navneet Dalal and Bill Triggs. 51 52 The computed feature vectors are compatible with the 53 INRIA Object Detection and Localization Toolkit 54 (http://pascal.inrialpes.fr/soft/olt/) 55 \****************************************************************************************/ 56 57 namespace cv 58 { 59 60 size_t HOGDescriptor::getDescriptorSize() const 61 { 62 //下面2个语句是保证block中有整数个cell;保证block在窗口中能移动整数次 63 CV_Assert(blockSize.width % cellSize.width == 0 && 64 blockSize.height % cellSize.height == 0); 65 CV_Assert((winSize.width - blockSize.width) % blockStride.width == 0 && 66 (winSize.height - blockSize.height) % blockStride.height == 0 ); 67 //返回的nbins是每个窗口中检测到的hog向量的维数 68 return (size_t)nbins* 69 (blockSize.width/cellSize.width)* 70 (blockSize.height/cellSize.height)* 71 ((winSize.width - blockSize.width)/blockStride.width + 1)* 72 ((winSize.height - blockSize.height)/blockStride.height + 1); 73 } 74 75 //winSigma到底是什么作用呢? 76 double HOGDescriptor::getWinSigma() const 77 { 78 return winSigma >= 0 ? 
winSigma : (blockSize.width + blockSize.height)/8.; 79 } 80 81 //svmDetector是HOGDescriptor内的一个成员变量,数据类型为向量vector。 82 //用来保存hog特征用于svm分类时的系数的. 83 //该函数返回为真的实际含义是什么呢?保证与hog特征长度相同,或者相差1,但为什么 84 //相差1也可以呢? 85 bool HOGDescriptor::checkDetectorSize() const 86 { 87 size_t detectorSize = svmDetector.size(), descriptorSize = getDescriptorSize(); 88 return detectorSize == 0 || 89 detectorSize == descriptorSize || 90 detectorSize == descriptorSize + 1; 91 } 92 93 void HOGDescriptor::setSVMDetector(InputArray _svmDetector) 94 { 95 //这里的convertTo函数只是将图像Mat属性更改,比如说通道数,矩阵深度等。 96 //这里是将输入的svm系数矩阵全部转换成浮点型。 97 _svmDetector.getMat().convertTo(svmDetector, CV_32F); 98 CV_Assert( checkDetectorSize() ); 99 } 100 101 #define CV_TYPE_NAME_HOG_DESCRIPTOR "opencv-object-detector-hog" 102 103 //FileNode是opencv的core中的一个文件存储节点类,这个节点用来存储读取到的每一个文件元素。 104 //一般是读取XML和YAML格式的文件 105 //又因为该函数是把文件节点中的内容读取到其类的成员变量中,所以函数后面不能有关键字const 106 bool HOGDescriptor::read(FileNode& obj) 107 { 108 //isMap()是用来判断这个节点是不是一个映射类型,如果是映射类型,则每个节点都与 109 //一个名字对应起来。因此这里的if语句的作用就是需读取的文件node是一个映射类型 110 if( !obj.isMap() ) 111 return false; 112 //中括号中的"winSize"是指返回名为winSize的一个节点,因为已经知道这些节点是mapping类型 113 //也就是说都有一个对应的名字。 114 FileNodeIterator it = obj["winSize"].begin(); 115 //操作符>>为从节点中读入数据,这里是将it指向的节点数据依次读入winSize.width,winSize.height 116 //下面的几条语句功能类似 117 it >> winSize.width >> winSize.height; 118 it = obj["blockSize"].begin(); 119 it >> blockSize.width >> blockSize.height; 120 it = obj["blockStride"].begin(); 121 it >> blockStride.width >> blockStride.height; 122 it = obj["cellSize"].begin(); 123 it >> cellSize.width >> cellSize.height; 124 obj["nbins"] >> nbins; 125 obj["derivAperture"] >> derivAperture; 126 obj["winSigma"] >> winSigma; 127 obj["histogramNormType"] >> histogramNormType; 128 obj["L2HysThreshold"] >> L2HysThreshold; 129 obj["gammaCorrection"] >> gammaCorrection; 130 obj["nlevels"] >> nlevels; 131 132 //isSeq()是判断该节点内容是不是一个序列 133 FileNode vecNode = obj["SVMDetector"]; 134 if( vecNode.isSeq() ) 135 { 136 vecNode 
>> svmDetector; 137 CV_Assert(checkDetectorSize()); 138 } 139 //上面的都读取完了后就返回读取成功标志 140 return true; 141 } 142 143 void HOGDescriptor::write(FileStorage& fs, const String& objName) const 144 { 145 //将objName名字输入到文件fs中 146 if( !objName.empty() ) 147 fs << objName; 148 149 fs << "{" CV_TYPE_NAME_HOG_DESCRIPTOR 150 //下面几句依次将hog描述子内的变量输入到文件fs中,且每次输入前都输入 151 //一个名字与其对应,因此这些节点是mapping类型。 152 << "winSize" << winSize 153 << "blockSize" << blockSize 154 << "blockStride" << blockStride 155 << "cellSize" << cellSize 156 << "nbins" << nbins 157 << "derivAperture" << derivAperture 158 << "winSigma" << getWinSigma() 159 << "histogramNormType" << histogramNormType 160 << "L2HysThreshold" << L2HysThreshold 161 << "gammaCorrection" << gammaCorrection 162 << "nlevels" << nlevels; 163 if( !svmDetector.empty() ) 164 //svmDetector则是直接输入序列,也有对应的名字。 165 fs << "SVMDetector" << "[:" << svmDetector << "]"; 166 fs << "}"; 167 } 168 169 //从给定的文件中读取参数 170 bool HOGDescriptor::load(const String& filename, const String& objname) 171 { 172 FileStorage fs(filename, FileStorage::READ); 173 //一个文件节点有很多叶子,所以一个文件节点包含了很多内容,这里当然是包含的 174 //HOGDescriptor需要的各种参数了。 175 FileNode obj = !objname.empty() ? fs[objname] : fs.getFirstTopLevelNode(); 176 return read(obj); 177 } 178 179 //将类中的参数以文件节点的形式写入文件中。 180 void HOGDescriptor::save(const String& filename, const String& objName) const 181 { 182 FileStorage fs(filename, FileStorage::WRITE); 183 write(fs, !objName.empty() ? 
objName : FileStorage::getDefaultObjectName(filename)); 184 } 185 186 //复制HOG描述子到c中 187 void HOGDescriptor::copyTo(HOGDescriptor& c) const 188 { 189 c.winSize = winSize; 190 c.blockSize = blockSize; 191 c.blockStride = blockStride; 192 c.cellSize = cellSize; 193 c.nbins = nbins; 194 c.derivAperture = derivAperture; 195 c.winSigma = winSigma; 196 c.histogramNormType = histogramNormType; 197 c.L2HysThreshold = L2HysThreshold; 198 c.gammaCorrection = gammaCorrection; 199 //vector类型也可以用等号赋值 200 c.svmDetector = svmDetector; c.nlevels = nlevels; } 201 202 //计算图像img的梯度幅度图像grad和梯度方向图像qangle. 203 //paddingTL为需要在原图像img左上角扩增的尺寸,同理paddingBR 204 //为需要在img图像右下角扩增的尺寸。 205 void HOGDescriptor::computeGradient(const Mat& img, Mat& grad, Mat& qangle, 206 Size paddingTL, Size paddingBR) const 207 { 208 //该函数只能计算8位整型深度的单通道或者3通道图像. 209 CV_Assert( img.type() == CV_8U || img.type() == CV_8UC3 ); 210 211 //将图像按照输入参数进行扩充,这里不是为了计算边缘梯度而做的扩充,因为 212 //为了边缘梯度而扩充是在后面的代码完成的,所以这里为什么扩充暂时还不明白。 213 Size gradsize(img.cols + paddingTL.width + paddingBR.width, 214 img.rows + paddingTL.height + paddingBR.height); 215 grad.create(gradsize, CV_32FC2); // <magnitude*(1-alpha), magnitude*alpha> 216 qangle.create(gradsize, CV_8UC2); // [0..nbins-1] - quantized gradient orientation 217 Size wholeSize; 218 Point roiofs; 219 //locateROI在此处是如果img图像是从其它父图像中某一部分得来的,那么其父图像 220 //的大小尺寸就为wholeSize了,img图像左上角相对于父图像的位置点就为roiofs了。 221 //对于正样本,其父图像就是img了,所以这里的wholeSize就和img.size()是一样的, 222 //对应负样本,这2者不同;因为里面的关系比较不好懂,这里权且将wholesSize理解为 223 //img的size,所以roiofs就应当理解为Point(0, 0)了。 224 img.locateROI(wholeSize, roiofs); 225 226 int i, x, y; 227 int cn = img.channels(); 228 229 //_lut为行向量,用来作为浮点像素值的存储查找表 230 Mat_<float> _lut(1, 256); 231 const float* lut = &_lut(0,0); 232 233 //gamma校正指的是将0~256的像素值全部开根号,即范围缩小了,且变换范围都不成线性了, 234 if( gammaCorrection ) 235 for( i = 0; i < 256; i++ ) 236 _lut(0,i) = std::sqrt((float)i); 237 else 238 for( i = 0; i < 256; i++ ) 239 _lut(0,i) = (float)i; 240 241 
//创建长度为gradsize.width+gradsize.height+4的整型buffer 242 AutoBuffer<int> mapbuf(gradsize.width + gradsize.height + 4); 243 int* xmap = (int*)mapbuf + 1; 244 int* ymap = xmap + gradsize.width + 2; 245 246 //言外之意思borderType就等于4了,因为opencv的源码中是如下定义的。 247 //#define IPL_BORDER_REFLECT_101 4 248 //enum{...,BORDER_REFLECT_101=IPL_BORDER_REFLECT_101,...} 249 //borderType为边界扩充后所填充像素点的方式。 250 /* 251 Various border types, image boundaries are denoted with '|' 252 253 * BORDER_REPLICATE: aaaaaa|abcdefgh|hhhhhhh 254 * BORDER_REFLECT: fedcba|abcdefgh|hgfedcb 255 * BORDER_REFLECT_101: gfedcb|abcdefgh|gfedcba 256 * BORDER_WRAP: cdefgh|abcdefgh|abcdefg 257 * BORDER_CONSTANT: iiiiii|abcdefgh|iiiiiii with some specified 'i' 258 */ 259 const int borderType = (int)BORDER_REFLECT_101; 260 261 for( x = -1; x < gradsize.width + 1; x++ ) 262 /*int borderInterpolate(int p, int len, int borderType) 263 其中参数p表示的是扩充后图像的一个坐标,相对于对应的坐标轴而言; 264 len参数表示对应源图像的一个坐标轴的长度;borderType为扩充类型, 265 在上面已经有过介绍. 266 所以这个函数的作用是从扩充后的像素点坐标推断出源图像中对应该点 267 的坐标值。 268 */ 269 //这里的xmap和ymap实际含义是什么呢?其实xmap向量里面存的就是 270 //扩充后图像第一行像素点对应与原图像img中的像素横坐标,可以看 271 //出,xmap向量中有些元素的值是相同的,因为扩充图像肯定会对应 272 //到原图像img中的某一位置,而img本身尺寸内的像素也会对应该位置。 273 //同理,ymap向量里面存的是扩充后图像第一列像素点对应于原图想img 274 //中的像素纵坐标。 275 xmap[x] = borderInterpolate(x - paddingTL.width + roiofs.x, 276 wholeSize.width, borderType) - roiofs.x; 277 for( y = -1; y < gradsize.height + 1; y++ ) 278 ymap[y] = borderInterpolate(y - paddingTL.height + roiofs.y, 279 wholeSize.height, borderType) - roiofs.y; 280 281 // x- & y- derivatives for the whole row 282 int width = gradsize.width; 283 AutoBuffer<float> _dbuf(width*4); 284 float* dbuf = _dbuf; 285 //DX为水平梯度图,DY为垂直梯度图,Mag为梯度幅度图,Angle为梯度角度图 286 //该构造方法的第4个参数表示矩阵Mat的数据在内存中存放的位置。由此可以 287 //看出,这4幅图像在内存中是连续存储的。 288 Mat Dx(1, width, CV_32F, dbuf); 289 Mat Dy(1, width, CV_32F, dbuf + width); 290 Mat Mag(1, width, CV_32F, dbuf + width*2); 291 Mat Angle(1, width, CV_32F, dbuf + width*3); 292 293 int _nbins = nbins; 294 //angleScale==9/pi; 
295 float angleScale = (float)(_nbins/CV_PI); 296 #ifdef HAVE_IPP 297 Mat lutimg(img.rows,img.cols,CV_MAKETYPE(CV_32F,cn)); 298 Mat hidxs(1, width, CV_32F); 299 Ipp32f* pHidxs = (Ipp32f*)hidxs.data; 300 Ipp32f* pAngles = (Ipp32f*)Angle.data; 301 302 IppiSize roiSize; 303 roiSize.width = img.cols; 304 roiSize.height = img.rows; 305 306 for( y = 0; y < roiSize.height; y++ ) 307 { 308 const uchar* imgPtr = img.data + y*img.step; 309 float* imglutPtr = (float*)(lutimg.data + y*lutimg.step); 310 311 for( x = 0; x < roiSize.width*cn; x++ ) 312 { 313 imglutPtr[x] = lut[imgPtr[x]]; 314 } 315 } 316 317 #endif 318 for( y = 0; y < gradsize.height; y++ ) 319 { 320 #ifdef HAVE_IPP 321 const float* imgPtr = (float*)(lutimg.data + lutimg.step*ymap[y]); 322 const float* prevPtr = (float*)(lutimg.data + lutimg.step*ymap[y-1]); 323 const float* nextPtr = (float*)(lutimg.data + lutimg.step*ymap[y+1]); 324 #else 325 //imgPtr在这里指的是img图像的第y行首地址;prePtr指的是img第y-1行首地址; 326 //nextPtr指的是img第y+1行首地址; 327 const uchar* imgPtr = img.data + img.step*ymap[y]; 328 const uchar* prevPtr = img.data + img.step*ymap[y-1]; 329 const uchar* nextPtr = img.data + img.step*ymap[y+1]; 330 #endif 331 float* gradPtr = (float*)grad.ptr(y); 332 uchar* qanglePtr = (uchar*)qangle.ptr(y); 333 334 //输入图像img为单通道图像时的计算 335 if( cn == 1 ) 336 { 337 for( x = 0; x < width; x++ ) 338 { 339 int x1 = xmap[x]; 340 #ifdef HAVE_IPP 341 dbuf[x] = (float)(imgPtr[xmap[x+1]] - imgPtr[xmap[x-1]]); 342 dbuf[width + x] = (float)(nextPtr[x1] - prevPtr[x1]); 343 #else 344 //下面2句把Dx,Dy就计算出来了,因为其对应的内存都在dbuf中 345 dbuf[x] = (float)(lut[imgPtr[xmap[x+1]]] - lut[imgPtr[xmap[x-1]]]); 346 dbuf[width + x] = (float)(lut[nextPtr[x1]] - lut[prevPtr[x1]]); 347 #endif 348 } 349 } 350 //当cn==3时,也就是输入图像为3通道图像时的处理。 351 else 352 { 353 for( x = 0; x < width; x++ ) 354 { 355 //x1表示第y行第x1列的地址 356 int x1 = xmap[x]*3; 357 float dx0, dy0, dx, dy, mag0, mag; 358 #ifdef HAVE_IPP 359 const float* p2 = imgPtr + xmap[x+1]*3; 360 const float* p0 = imgPtr + 
xmap[x-1]*3; 361 362 dx0 = p2[2] - p0[2]; 363 dy0 = nextPtr[x1+2] - prevPtr[x1+2]; 364 mag0 = dx0*dx0 + dy0*dy0; 365 366 dx = p2[1] - p0[1]; 367 dy = nextPtr[x1+1] - prevPtr[x1+1]; 368 mag = dx*dx + dy*dy; 369 370 if( mag0 < mag ) 371 { 372 dx0 = dx; 373 dy0 = dy; 374 mag0 = mag; 375 } 376 377 dx = p2[0] - p0[0]; 378 dy = nextPtr[x1] - prevPtr[x1]; 379 mag = dx*dx + dy*dy; 380 #else 381 //p2为第y行第x+1列的地址 382 //p0为第y行第x-1列的地址 383 const uchar* p2 = imgPtr + xmap[x+1]*3; 384 const uchar* p0 = imgPtr + xmap[x-1]*3; 385 386 //计算第2通道的幅值 387 dx0 = lut[p2[2]] - lut[p0[2]]; 388 dy0 = lut[nextPtr[x1+2]] - lut[prevPtr[x1+2]]; 389 mag0 = dx0*dx0 + dy0*dy0; 390 391 //计算第1通道的幅值 392 dx = lut[p2[1]] - lut[p0[1]]; 393 dy = lut[nextPtr[x1+1]] - lut[prevPtr[x1+1]]; 394 mag = dx*dx + dy*dy; 395 396 //取幅值最大的那个通道 397 if( mag0 < mag ) 398 { 399 dx0 = dx; 400 dy0 = dy; 401 mag0 = mag; 402 } 403 404 //计算第0通道的幅值 405 dx = lut[p2[0]] - lut[p0[0]]; 406 dy = lut[nextPtr[x1]] - lut[prevPtr[x1]]; 407 mag = dx*dx + dy*dy; 408 #endif 409 //取幅值最大的那个通道 410 if( mag0 < mag ) 411 { 412 dx0 = dx; 413 dy0 = dy; 414 mag0 = mag; 415 } 416 417 //最后求出水平和垂直方向上的梯度图像 418 dbuf[x] = dx0; 419 dbuf[x+width] = dy0; 420 } 421 } 422 #ifdef HAVE_IPP 423 ippsCartToPolar_32f((const Ipp32f*)Dx.data, (const Ipp32f*)Dy.data, (Ipp32f*)Mag.data, pAngles, width); 424 for( x = 0; x < width; x++ ) 425 { 426 if(pAngles[x] < 0.f) 427 pAngles[x] += (Ipp32f)(CV_PI*2.); 428 } 429 430 ippsNormalize_32f(pAngles, pAngles, width, 0.5f/angleScale, 1.f/angleScale); 431 ippsFloor_32f(pAngles,(Ipp32f*)hidxs.data,width); 432 ippsSub_32f_I((Ipp32f*)hidxs.data,pAngles,width); 433 ippsMul_32f_I((Ipp32f*)Mag.data,pAngles,width); 434 435 ippsSub_32f_I(pAngles,(Ipp32f*)Mag.data,width); 436 ippsRealToCplx_32f((Ipp32f*)Mag.data,pAngles,(Ipp32fc*)gradPtr,width); 437 #else 438 //cartToPolar()函数是计算2个矩阵对应元素的幅度和角度,最后一个参数为是否 439 //角度使用度数表示,这里为false表示不用度数表示,即用弧度表示。 440 //如果只需计算2个矩阵对应元素的幅度图像,可以采用magnitude()函数。 441 //-pi/2<Angle<pi/2; 442 cartToPolar( Dx, Dy, 
Mag, Angle, false ); 443 #endif 444 for( x = 0; x < width; x++ ) 445 { 446 #ifdef HAVE_IPP 447 int hidx = (int)pHidxs[x]; 448 #else 449 //-5<angle<4 450 float mag = dbuf[x+width*2], angle = dbuf[x+width*3]*angleScale - 0.5f; 451 //cvFloor()返回不大于参数的最大整数 452 //hidx={-5,-4,-3,-2,-1,0,1,2,3,4}; 453 int hidx = cvFloor(angle); 454 //0<=angle<1;angle表示的意思是与其相邻的较小的那个bin的弧度距离(即弧度差) 455 angle -= hidx; 456 //gradPtr为grad图像的指针 457 //gradPtr[x*2]表示的是与x处梯度方向相邻较小的那个bin的幅度权重; 458 //gradPtr[x*2+1]表示的是与x处梯度方向相邻较大的那个bin的幅度权重 459 gradPtr[x*2] = mag*(1.f - angle); 460 gradPtr[x*2+1] = mag*angle; 461 #endif 462 if( hidx < 0 ) 463 hidx += _nbins; 464 else if( hidx >= _nbins ) 465 hidx -= _nbins; 466 assert( (unsigned)hidx < (unsigned)_nbins ); 467 468 qanglePtr[x*2] = (uchar)hidx; 469 hidx++; 470 //-1在补码中的表示为11111111,与-1相与的话就是自己本身了; 471 //0在补码中的表示为00000000,与0相与的结果就是0了. 472 hidx &= hidx < _nbins ? -1 : 0; 473 qanglePtr[x*2+1] = (uchar)hidx; 474 } 475 } 476 } 477 478 479 struct HOGCache 480 { 481 struct BlockData 482 { 483 BlockData() : histOfs(0), imgOffset() {} 484 int histOfs; 485 Point imgOffset; 486 }; 487 488 struct PixData 489 { 490 size_t gradOfs, qangleOfs; 491 int histOfs[4]; 492 float histWeights[4]; 493 float gradWeight; 494 }; 495 496 HOGCache(); 497 HOGCache(const HOGDescriptor* descriptor, 498 const Mat& img, Size paddingTL, Size paddingBR, 499 bool useCache, Size cacheStride); 500 virtual ~HOGCache() {}; 501 virtual void init(const HOGDescriptor* descriptor, 502 const Mat& img, Size paddingTL, Size paddingBR, 503 bool useCache, Size cacheStride); 504 505 Size windowsInImage(Size imageSize, Size winStride) const; 506 Rect getWindow(Size imageSize, Size winStride, int idx) const; 507 508 const float* getBlock(Point pt, float* buf); 509 virtual void normalizeBlockHistogram(float* histogram) const; 510 511 vector<PixData> pixData; 512 vector<BlockData> blockData; 513 514 bool useCache; 515 vector<int> ymaxCached; 516 Size winSize, cacheStride; 517 Size nblocks, ncells; 518 int 
blockHistogramSize; 519 int count1, count2, count4; 520 Point imgoffset; 521 Mat_<float> blockCache; 522 Mat_<uchar> blockCacheFlags; 523 524 Mat grad, qangle; 525 const HOGDescriptor* descriptor; 526 }; 527 528 //默认的构造函数,不使用cache,块的直方图向量大小为0等 529 HOGCache::HOGCache() 530 { 531 useCache = false; 532 blockHistogramSize = count1 = count2 = count4 = 0; 533 descriptor = 0; 534 } 535 536 //带参的初始化函数,采用内部的init函数进行初始化 537 HOGCache::HOGCache(const HOGDescriptor* _descriptor, 538 const Mat& _img, Size _paddingTL, Size _paddingBR, 539 bool _useCache, Size _cacheStride) 540 { 541 init(_descriptor, _img, _paddingTL, _paddingBR, _useCache, _cacheStride); 542 } 543 544 //HOGCache结构体的初始化函数 545 void HOGCache::init(const HOGDescriptor* _descriptor, 546 const Mat& _img, Size _paddingTL, Size _paddingBR, 547 bool _useCache, Size _cacheStride) 548 { 549 descriptor = _descriptor; 550 cacheStride = _cacheStride; 551 useCache = _useCache; 552 553 //首先调用computeGradient()函数计算输入图像的权值梯度幅度图和角度量化图 554 descriptor->computeGradient(_img, grad, qangle, _paddingTL, _paddingBR); 555 //imgoffset是Point类型,而_paddingTL是Size类型,虽然类型不同,但是2者都是 556 //一个二维坐标,所以是在opencv中是允许直接赋值的。 557 imgoffset = _paddingTL; 558 559 winSize = descriptor->winSize; 560 Size blockSize = descriptor->blockSize; 561 Size blockStride = descriptor->blockStride; 562 Size cellSize = descriptor->cellSize; 563 int i, j, nbins = descriptor->nbins; 564 //rawBlockSize为block中包含像素点的个数 565 int rawBlockSize = blockSize.width*blockSize.height; 566 567 //nblocks为Size类型,其长和宽分别表示一个窗口中水平方向和垂直方向上block的 568 //个数(需要考虑block在窗口中的移动) 569 nblocks = Size((winSize.width - blockSize.width)/blockStride.width + 1, 570 (winSize.height - blockSize.height)/blockStride.height + 1); 571 //ncells也是Size类型,其长和宽分别表示一个block中水平方向和垂直方向容纳下 572 //的cell个数 573 ncells = Size(blockSize.width/cellSize.width, blockSize.height/cellSize.height); 574 //blockHistogramSize表示一个block中贡献给hog描述子向量的长度 575 blockHistogramSize = ncells.width*ncells.height*nbins; 576 577 if( useCache ) 578 { 579 
//cacheStride= _cacheStride,即其大小是由参数传入的,表示的是窗口移动的大小 580 //cacheSize长和宽表示扩充后的图像cache中,block在水平方向和垂直方向出现的个数 581 Size cacheSize((grad.cols - blockSize.width)/cacheStride.width+1, 582 (winSize.height/cacheStride.height)+1); 583 //blockCache为一个float型的Mat,注意其列数的值 584 blockCache.create(cacheSize.height, cacheSize.width*blockHistogramSize); 585 //blockCacheFlags为一个uchar型的Mat 586 blockCacheFlags.create(cacheSize); 587 size_t cacheRows = blockCache.rows; 588 //ymaxCached为vector<int>类型 589 //Mat::resize()为矩阵的一个方法,只是改变矩阵的行数,与单独的resize()函数不相同。 590 ymaxCached.resize(cacheRows); 591 //ymaxCached向量内部全部初始化为-1 592 for(size_t ii = 0; ii < cacheRows; ii++ ) 593 ymaxCached[ii] = -1; 594 } 595 596 //weights为一个尺寸为blockSize的二维高斯表,下面的代码就是计算二维高斯的系数 597 Mat_<float> weights(blockSize); 598 float sigma = (float)descriptor->getWinSigma(); 599 float scale = 1.f/(sigma*sigma*2); 600 601 for(i = 0; i < blockSize.height; i++) 602 for(j = 0; j < blockSize.width; j++) 603 { 604 float di = i - blockSize.height*0.5f; 605 float dj = j - blockSize.width*0.5f; 606 weights(i,j) = std::exp(-(di*di + dj*dj)*scale); 607 } 608 609 //vector<BlockData> blockData;而BlockData为HOGCache的一个结构体成员 610 //nblocks.width*nblocks.height表示一个检测窗口中block的个数, 611 //而cacheSize.width*cacheSize.heigh表示一个已经扩充的图片中的block的个数 612 blockData.resize(nblocks.width*nblocks.height); 613 //vector<PixData> pixData;同理,Pixdata也为HOGCache中的一个结构体成员 614 //rawBlockSize表示每个block中像素点的个数 615 //resize表示将其转换成列向量 616 pixData.resize(rawBlockSize*3); 617 618 // Initialize 2 lookup tables, pixData & blockData. 619 // Here is why: 620 // 621 // The detection algorithm runs in 4 nested loops (at each pyramid layer): 622 // loop over the windows within the input image 623 // loop over the blocks within each window 624 // loop over the cells within each block 625 // loop over the pixels in each cell 626 // 627 // As each of the loops runs over a 2-dimensional array, 628 // we could get 8(!) nested loops in total, which is very-very slow. 
629 // 630 // To speed the things up, we do the following: 631 // 1. loop over windows is unrolled in the HOGDescriptor::{compute|detect} methods; 632 // inside we compute the current search window using getWindow() method. 633 // Yes, it involves some overhead (function call + couple of divisions), 634 // but it's tiny in fact. 635 // 2. loop over the blocks is also unrolled. Inside we use pre-computed blockData[j] 636 // to set up gradient and histogram pointers. 637 // 3. loops over cells and pixels in each cell are merged 638 // (since there is no overlap between cells, each pixel in the block is processed once) 639 // and also unrolled. Inside we use PixData[k] to access the gradient values and 640 // update the histogram 641 //count1,count2,count4分别表示block中同时对1个cell,2个cell,4个cell有贡献的像素点的个数。 642 count1 = count2 = count4 = 0; 643 for( j = 0; j < blockSize.width; j++ ) 644 for( i = 0; i < blockSize.height; i++ ) 645 { 646 PixData* data = 0; 647 //cellX和cellY表示的是block内该像素点所在的cell横坐标和纵坐标索引,以小数的形式存在。 648 float cellX = (j+0.5f)/cellSize.width - 0.5f; 649 float cellY = (i+0.5f)/cellSize.height - 0.5f; 650 //cvRound返回最接近参数的整数;cvFloor返回不大于参数的整数;cvCeil返回不小于参数的整数 651 //icellX0和icellY0表示所在cell坐标索引,索引值为该像素点相邻cell的那个较小的cell索引 652 //当然此处就是由整数的形式存在了。 653 //按照默认的系数的话,icellX0和icellY0只可能取值-1,0,1,且当i和j<3.5时对应的值才取-1 654 //当i和j>11.5时取值为1,其它时刻取值为0(注意i,j最大是15,从0开始的) 655 int icellX0 = cvFloor(cellX); 656 int icellY0 = cvFloor(cellY); 657 int icellX1 = icellX0 + 1, icellY1 = icellY0 + 1; 658 //此处的cellx和celly表示的是真实索引值与最近邻cell索引值之间的差, 659 //为后面计算同一像素对不同cell中的hist权重的计算。 660 cellX -= icellX0; 661 cellY -= icellY0; 662 663 //满足这个if条件说明icellX0只能为0,也就是说block横坐标在(3.5,11.5)之间时 664 if( (unsigned)icellX0 < (unsigned)ncells.width && 665 (unsigned)icellX1 < (unsigned)ncells.width ) 666 { 667 //满足这个if条件说明icellY0只能为0,也就是说block纵坐标在(3.5,11.5)之间时 668 if( (unsigned)icellY0 < (unsigned)ncells.height && 669 (unsigned)icellY1 < (unsigned)ncells.height ) 670 { 671 //同时满足上面2个if语句的像素对4个cell都有权值贡献 672 
                    // rawBlockSize is the number of pixels in one block,
                    // and pixData holds 3 times that many entries; it is defined as:
                    // pixData.resize(rawBlockSize*3);
                    // The first rawBlockSize entries of pixData store pixels that contribute to only one
                    // cell of the block; the middle rawBlockSize entries store pixels that contribute to 2
                    // cells; the last rawBlockSize entries store pixels that contribute to all 4
                    // cells
                    data = &pixData[rawBlockSize*2 + (count4++)];
                    // with the default parameters this evaluates to 0
                    data->histOfs[0] = (icellX0*ncells.height + icellY0)*nbins;
                    // the pixel's weight for cell 0
                    data->histWeights[0] = (1.f - cellX)*(1.f - cellY);
                    // evaluates to 18
                    data->histOfs[1] = (icellX1*ncells.height + icellY0)*nbins;
                    data->histWeights[1] = cellX*(1.f - cellY);
                    // evaluates to 9
                    data->histOfs[2] = (icellX0*ncells.height + icellY1)*nbins;
                    data->histWeights[2] = (1.f - cellX)*cellY;
                    // evaluates to 27
                    data->histOfs[3] = (icellX1*ncells.height + icellY1)*nbins;
                    data->histWeights[3] = cellX*cellY;
                }
                else
                // Reaching this else means icellY0 is -1 or 1, i.e. the pixel's y coordinate in the block is within (0, 3.5)
                // or (11.5, 15).
                // Such a pixel contributes to 2 adjacent cells
                {
                    data = &pixData[rawBlockSize + (count2++)];
                    if( (unsigned)icellY0 < (unsigned)ncells.height )
                    {
                        // (unsigned)-1 wraps around to UINT_MAX, which is > 2, so this branch is taken only when icellY0 == 1;
                        // after the assignment icellY1 == 1 as well
                        icellY1 = icellY0;
                        cellY = 1.f - cellY;
                    }
                    // Otherwise icellY0 == -1 and icellY1 == 0;
                    // in both cases icellX0 == 0 and icellX1 == 1
                    data->histOfs[0] = (icellX0*ncells.height + icellY1)*nbins;
                    data->histWeights[0] = (1.f - cellX)*cellY;
                    data->histOfs[1] = (icellX1*ncells.height + icellY1)*nbins;
                    data->histWeights[1] = cellX*cellY;
                    data->histOfs[2] = data->histOfs[3] = 0;
                    data->histWeights[2] = data->histWeights[3] = 0;
                }
            }
            // Reached when the pixel's x coordinate in the block is within (0, 3.5) or (11.5, 15), i.e.
            // icellX0 == -1 or icellX0 == 1
            else
            {
                if( (unsigned)icellX0 < (unsigned)ncells.width )
                {
                    // icellX1 = icellX0 = 1
                    icellX1 = icellX0;
                    cellX = 1.f - cellX;
                }
                // when icellY0 == 0 the pixel contributes to 2 cells
                if( (unsigned)icellY0 < (unsigned)ncells.height &&
                    (unsigned)icellY1 < (unsigned)ncells.height )
                {
                    data = &pixData[rawBlockSize + (count2++)];
                    data->histOfs[0] = (icellX1*ncells.height + icellY0)*nbins;
                    data->histWeights[0] = cellX*(1.f - cellY);
                    data->histOfs[1] = (icellX1*ncells.height + icellY1)*nbins;
                    data->histWeights[1] = cellX*cellY;
                    data->histOfs[2] = data->histOfs[3] = 0;
                    data->histWeights[2] = data->histWeights[3] = 0;
                }
                else
                // here the pixel contributes only to its own cell
                {
                    data = &pixData[count1++];
                    if( (unsigned)icellY0 < (unsigned)ncells.height )
                    {
                        icellY1 = icellY0;
                        cellY = 1.f - cellY;
                    }
                    data->histOfs[0] = (icellX1*ncells.height + icellY1)*nbins;
                    data->histWeights[0] = cellX*cellY;
                    data->histOfs[1] = data->histOfs[2] = data->histOfs[3] = 0;
                    data->histWeights[1] = data->histWeights[2] = data->histWeights[3] = 0;
                }
            }
            // Why are gradOfs and qangleOfs for position (i, j) the same for every block, and computed like this?
            // Because they are offsets relative to the block's top-left corner: getBlock() adds the block origin
            // through gradPtr and qanglePtr, so one table serves every block. The factor 2 is there because
            // grad and qangle both have 2 channels.
            data->gradOfs = (grad.cols*i + j)*2;
            data->qangleOfs = (qangle.cols*i + j)*2;
            // the Gaussian weight at position (i, j) is the same for every block
            data->gradWeight = weights(i,j);
        }

    // make sure every pixel of the block was visited exactly once
    assert( count1 + count2 + count4 == rawBlockSize );
    // defragment pixData
    // Pack the three regions of pixData contiguously at the front, so that afterwards only the
    // first rawBlockSize entries (one third of the buffer) are used
    for( j = 0; j < count2; j++ )
        pixData[j + count1] = pixData[j + rawBlockSize];
    for( j = 0; j < count4; j++ )
        pixData[j + count1 + count2] = pixData[j + rawBlockSize*2];
    // count2 now marks the end of the pixels that contribute to at most 2 cells
    count2 += count1;
    // count4 now marks the end of all pixels, i.e. those that contribute to at most 4 cells
    count4 += count2;

    // pixData is initialized above; blockData is initialized below
    // initialize blockData
    for( j = 0; j < nblocks.width; j++ )
        for( i = 0; i < nblocks.height; i++ )
        {
            BlockData& data = blockData[j*nblocks.height + i];
            // histOfs is the offset at which this block's histogram starts
            // inside the detection window's HOG descriptor
            data.histOfs = (j*nblocks.height + i)*blockHistogramSize;
            // imgOffset is the top-left corner of this block inside the detection window
            data.imgOffset = Point(j*blockStride.width,i*blockStride.height);
        }
    // One blockData table serves a whole detection window; one pixData table serves a block.
}


// pt is the block's top-left corner relative to the input image (imgoffset, the top-left padding, is added below);
// buf is the buffer that receives the block histogram when caching is off.
// Returns a pointer to the block's normalized histogram (its part of the descriptor).
const float* HOGCache::getBlock(Point pt, float* buf)
{
    float* blockHist = buf;
    assert(descriptor != 0);

    Size blockSize = descriptor->blockSize;
    pt += imgoffset;

    CV_Assert( (unsigned)pt.x <= (unsigned)(grad.cols - blockSize.width) &&
               (unsigned)pt.y <= (unsigned)(grad.rows - blockSize.height) );

    if( useCache )
    {
        // with the default parameters cacheStride equals blockStride
        // make sure the requested position lies on the cache grid, i.e. is a position the block can actually reach while sliding
        CV_Assert( pt.x % cacheStride.width == 0 &&
                   pt.y % cacheStride.height == 0 );
        // cacheIdx is the block's index in the cache: its column, and its row modulo the number of cached rows
        Point cacheIdx(pt.x/cacheStride.width,
                       (pt.y/cacheStride.height) % blockCache.rows);
        // ymaxCached has one entry per cached block row and records which y that row currently holds
        if( pt.y != ymaxCached[cacheIdx.y] )
        {
            // a new y maps to this cache row: take row cacheIdx.y of blockCacheFlags and reset it to 0
            Mat_<uchar> cacheRow = blockCacheFlags.row(cacheIdx.y);
            cacheRow = (uchar)0;
            ymaxCached[cacheIdx.y] = pt.y;
        }

        // blockHist points to this block's slot in the cache; it holds valid data only once computedFlag is set
        blockHist = &blockCache[cacheIdx.y][cacheIdx.x*blockHistogramSize];
        uchar& computedFlag = blockCacheFlags(cacheIdx.y, cacheIdx.x);
        if( computedFlag != 0 )
            return blockHist;
        computedFlag = (uchar)1; // set it at once, before actual computing
    }

    int k, C1 = count1, C2 = count2, C4 = count4;
    const float* gradPtr = (const float*)(grad.data + grad.step*pt.y) + pt.x*2;
    const uchar* qanglePtr = qangle.data + qangle.step*pt.y + pt.x*2;

    CV_Assert( blockHist != 0 );
#ifdef HAVE_IPP
    ippsZero_32f(blockHist,blockHistogramSize);
#else
    for( k = 0; k < blockHistogramSize; k++ )
        blockHist[k] = 0.f;
#endif

    const PixData* _pixData = &pixData[0];

    // the first C1 entries of pixData are pixels that contribute only to their own cell
    for( k = 0; k < C1; k++ )
    {
        const PixData& pk = _pixData[k];
        // a points to the gradient magnitudes
        const float* a = gradPtr + pk.gradOfs;
        float w = pk.gradWeight*pk.histWeights[0];
        // h points to the quantized orientations (bin indices)
        const uchar* h = qanglePtr + pk.qangleOfs;

        // the magnitude has 2 channels because each pixel's magnitude is split between its two nearest orientation bins;
        // the angle has 2 channels holding the indices of those two bins
        int h0 = h[0], h1 = h[1];
        float* hist = blockHist + pk.histOfs[0];
        float t0 = hist[h0] + a[0]*w;
        float t1 = hist[h1] + a[1]*w;
        // hist accumulates the weighted gradient magnitudes
        hist[h0] = t0; hist[h1] = t1;
    }

    for( ; k < C2; k++ )
    {
        const PixData& pk = _pixData[k];
        const float* a = gradPtr + pk.gradOfs;
        float w, t0, t1, a0 = a[0], a1 = a[1];
        const uchar* h = qanglePtr + pk.qangleOfs;
        int h0 = h[0], h1 = h[1];

        // the pixel contributes to 2 cells; this is the first cell's share
        float* hist = blockHist + pk.histOfs[0];
        w = pk.gradWeight*pk.histWeights[0];
        t0 = hist[h0] + a0*w;
        t1 = hist[h1] + a1*w;
        hist[h0] = t0; hist[h1] = t1;

        // the second cell's share
        hist = blockHist + pk.histOfs[1];
        w = pk.gradWeight*pk.histWeights[1];
        t0 = hist[h0] + a0*w;
        t1 = hist[h1] + a1*w;
        hist[h0] = t0; hist[h1] = t1;
    }

    // same as above, for pixels that contribute to 4 cells
    for( ; k < C4; k++ )
    {
        const PixData& pk = _pixData[k];
        const float* a = gradPtr + pk.gradOfs;
        float w, t0, t1, a0 = a[0], a1 = a[1];
        const uchar* h = qanglePtr + pk.qangleOfs;
        int h0 = h[0], h1 = h[1];

        float* hist = blockHist + pk.histOfs[0];
        w = pk.gradWeight*pk.histWeights[0];
        t0 = hist[h0] + a0*w;
        t1 = hist[h1] + a1*w;
        hist[h0] = t0; hist[h1] = t1;

        hist = blockHist + pk.histOfs[1];
        w = pk.gradWeight*pk.histWeights[1];
        t0 = hist[h0] + a0*w;
        t1 = hist[h1] + a1*w;
        hist[h0] = t0; hist[h1] = t1;

        hist = blockHist + pk.histOfs[2];
        w = pk.gradWeight*pk.histWeights[2];
        t0 = hist[h0] + a0*w;
        t1 = hist[h1] + a1*w;
        hist[h0] = t0; hist[h1] = t1;

        hist = blockHist + pk.histOfs[3];
        w = pk.gradWeight*pk.histWeights[3];
        t0 = hist[h0] + a0*w;
        t1 = hist[h1] + a1*w;
        hist[h0] = t0; hist[h1] = t1;
    }

    normalizeBlockHistogram(blockHist);

    return blockHist;
}


void HOGCache::normalizeBlockHistogram(float* _hist) const
{
    float* hist = &_hist[0];
#ifdef HAVE_IPP
    size_t sz = blockHistogramSize;
#else
    size_t i, sz = blockHistogramSize;
#endif

    float sum = 0;
#ifdef HAVE_IPP
    ippsDotProd_32f(hist,hist,sz,&sum);
#else
    // first normalization: compute the sum of squares
    for( i = 0; i < sz; i++ )
        sum += hist[i]*hist[i];
#endif
    // the denominator is the square root of the sum of squares plus 0.1*sz
    float scale = 1.f/(std::sqrt(sum)+sz*0.1f), thresh = (float)descriptor->L2HysThreshold;
#ifdef HAVE_IPP
    ippsMulC_32f_I(scale,hist,sz);
    ippsThreshold_32f_I( hist, sz, thresh, ippCmpGreater );
    ippsDotProd_32f(hist,hist,sz,&sum);
#else
    for( i = 0, sum = 0; i < sz; i++ )
    {
        // second pass: scale, clip at L2HysThreshold, and recompute the sum of squares of the result
        hist[i] = std::min(hist[i]*scale, thresh);
        sum += hist[i]*hist[i];
    }
#endif

    scale = 1.f/(std::sqrt(sum)+1e-3f);
#ifdef HAVE_IPP
    ippsMulC_32f_I(scale,hist,sz);
#else
    // final normalized result
    for( i = 0; i < sz; i++ )
        hist[i] *= scale;
#endif
}


// returns how many detection windows fit in the image horizontally and vertically
Size HOGCache::windowsInImage(Size imageSize, Size winStride) const
{
    return Size((imageSize.width - winSize.width)/winStride.width + 1,
                (imageSize.height - winSize.height)/winStride.height + 1);
}


// Given the image size, the window stride and the index of a detection window in the image,
// returns that window's rectangle (position and size)
Rect HOGCache::getWindow(Size imageSize, Size winStride, int idx) const
{
    int nwindowsX = (imageSize.width - winSize.width)/winStride.width + 1;
    int y = idx / nwindowsX; // quotient
    int x = idx - nwindowsX*y; // remainder
    return Rect( x*winStride.width, y*winStride.height, winSize.width, winSize.height );
}


void HOGDescriptor::compute(const Mat& img, vector<float>& descriptors,
                            Size winStride, Size padding,
                            const vector<Point>& locations) const
{
    // Size() has width and height 0, i.e. no stride was given
    if( winStride == Size() )
        winStride = cellSize;
    // gcd is the greatest common divisor; with the default parameters both strides are equal
    Size cacheStride(gcd(winStride.width, blockStride.width),
                     gcd(winStride.height, blockStride.height));
    size_t nwindows = locations.size();
    // alignSize(m, n) returns the smallest multiple of n that is greater than or equal to m
    padding.width = (int)alignSize(std::max(padding.width, 0), cacheStride.width);
    padding.height = (int)alignSize(std::max(padding.height, 0), cacheStride.height);
    Size paddedImgSize(img.cols + padding.width*2, img.rows + padding.height*2);

    HOGCache cache(this, img, padding, padding, nwindows == 0, cacheStride);

    if( !nwindows )
        // Size::area() returns width*height, here the total number of windows
        nwindows = cache.windowsInImage(paddedImgSize, winStride).area();

    const HOGCache::BlockData* blockData = &cache.blockData[0];

    int nblocks = cache.nblocks.area();
    int blockHistogramSize = cache.blockHistogramSize;
    size_t dsize = getDescriptorSize(); // length of one window's HOG descriptor
    // descriptors is a vector<float>; resize() changes its number of elements: shrinking keeps only
    // the leading elements, growing keeps all existing ones.
    // Here it is made large enough for the descriptors of all windows in the image
    descriptors.resize(dsize*nwindows);

    for( size_t i = 0; i < nwindows; i++ )
    {
        // descriptor points to the start of the i-th window's descriptor
        float* descriptor = &descriptors[i*dsize];

        Point pt0;
        // explicit locations were given
        if( !locations.empty() )
        {
            pt0 = locations[i];
            // skip invalid locations (window outside the padded image)
            if( pt0.x < -padding.width || pt0.x > img.cols + padding.width - winSize.width ||
                pt0.y < -padding.height || pt0.y > img.rows + padding.height - winSize.height )
                continue;
        }
        // locations is empty: scan all windows
        else
        {
            // pt0 is the top-left corner of the i-th window, in coordinates of the image before padding
            pt0 = cache.getWindow(paddedImgSize, winStride, (int)i).tl() - Point(padding);
            CV_Assert(pt0.x % cacheStride.width == 0 && pt0.y % cacheStride.height == 0);
        }

        for( int j = 0; j < nblocks; j++ )
        {
            const HOGCache::BlockData& bj = blockData[j];
            // pt is the block's top-left corner relative to the input image
            Point pt = pt0 + bj.imgOffset;

            // dst is where this block's histogram goes in the window's descriptor
            float* dst = descriptor + bj.histOfs;
            const float* src = cache.getBlock(pt, dst);
            if( src != dst )
#ifdef HAVE_IPP
                ippsCopy_32f(src,dst,blockHistogramSize);
#else
                for( int k = 0; k < blockHistogramSize; k++ )
                    dst[k] = src[k];
#endif
        }
    }
}


void HOGDescriptor::detect(const Mat& img,
    vector<Point>& hits, vector<double>& weights, double hitThreshold,
    Size winStride, Size padding, const vector<Point>& locations) const
{
    // hits receives the top-left corners of the windows classified as containing the target
    hits.clear();
    if( svmDetector.empty() )
        return;

    if( winStride == Size() )
        winStride = cellSize;
    Size cacheStride(gcd(winStride.width, blockStride.width),
                     gcd(winStride.height, blockStride.height));
    size_t nwindows = locations.size();
    padding.width = (int)alignSize(std::max(padding.width, 0), cacheStride.width);
    padding.height = (int)alignSize(std::max(padding.height, 0), cacheStride.height);
    Size paddedImgSize(img.cols + padding.width*2, img.rows + padding.height*2);

    HOGCache cache(this, img, padding, padding, nwindows == 0, cacheStride);

    if( !nwindows )
        nwindows = cache.windowsInImage(paddedImgSize, winStride).area();

    const HOGCache::BlockData* blockData = &cache.blockData[0];

    int nblocks = cache.nblocks.area();
    int blockHistogramSize = cache.blockHistogramSize;
    size_t dsize = getDescriptorSize();

    double rho = svmDetector.size() > dsize ? svmDetector[dsize] : 0;
    vector<float> blockHist(blockHistogramSize);

    for( size_t i = 0; i < nwindows; i++ )
    {
        Point pt0;
        if( !locations.empty() )
        {
            pt0 = locations[i];
            if( pt0.x < -padding.width || pt0.x > img.cols + padding.width - winSize.width ||
                pt0.y < -padding.height || pt0.y > img.rows + padding.height - winSize.height )
                continue;
        }
        else
        {
            pt0 = cache.getWindow(paddedImgSize, winStride, (int)i).tl() - Point(padding);
            CV_Assert(pt0.x % cacheStride.width == 0 && pt0.y % cacheStride.height == 0);
        }
        double s = rho;
        // svmVec points to the first element of svmDetector
        const float* svmVec = &svmDetector[0];
#ifdef HAVE_IPP
        int j;
#else
        int j, k;
#endif
        for( j = 0; j < nblocks; j++, svmVec += blockHistogramSize )
        {
            const HOGCache::BlockData& bj = blockData[j];
            Point pt = pt0 + bj.imgOffset;

            // vec points to the histogram of the block at pt
            const float* vec = cache.getBlock(pt, &blockHist[0]);
#ifdef HAVE_IPP
            Ipp32f partSum;
            ippsDotProd_32f(vec,svmVec,blockHistogramSize,&partSum);
            s += (double)partSum;
#else
            for( k = 0; k <= blockHistogramSize - 4; k += 4 )
                //const float* svmVec = &svmDetector[0];
                s += vec[k]*svmVec[k] + vec[k+1]*svmVec[k+1] +
                     vec[k+2]*svmVec[k+2] + vec[k+3]*svmVec[k+3];
            for( ; k < blockHistogramSize; k++ )
                s += vec[k]*svmVec[k];
#endif
        }
        if( s >= hitThreshold )
        {
            hits.push_back(pt0);
            weights.push_back(s);
        }
    }
}

// overload that does not return the detection confidences (weights)
void HOGDescriptor::detect(const Mat& img, vector<Point>& hits, double hitThreshold,
                           Size winStride, Size padding, const vector<Point>& locations) const
{
    vector<double> weightsV;
    detect(img, hits, weightsV, hitThreshold, winStride, padding, locations);
}

struct HOGInvoker
{
    HOGInvoker( const HOGDescriptor* _hog, const Mat& _img,
                double _hitThreshold, Size _winStride, Size _padding,
                const double* _levelScale, ConcurrentRectVector* _vec,
                ConcurrentDoubleVector* _weights=0, ConcurrentDoubleVector* _scales=0 )
    {
        hog = _hog;
        img = _img;
        hitThreshold = _hitThreshold;
        winStride = _winStride;
        padding = _padding;
        levelScale = _levelScale;
        vec = _vec;
        weights = _weights;
        scales = _scales;
    }

    void operator()( const BlockedRange& range ) const
    {
        int i, i1 = range.begin(), i2 = range.end();
        double minScale = i1 > 0 ? levelScale[i1] : i2 > 1 ? levelScale[i1+1] : std::max(img.cols, img.rows);
        // buffer size for the largest resized image needed in this range
        // (level 0, scale 1, uses img directly and needs no buffer)
        Size maxSz(cvCeil(img.cols/minScale), cvCeil(img.rows/minScale));
        Mat smallerImgBuf(maxSz, img.type());
        vector<Point> locations;
        vector<double> hitsWeights;

        for( i = i1; i < i2; i++ )
        {
            double scale = levelScale[i];
            Size sz(cvRound(img.cols/scale), cvRound(img.rows/scale));
            // smallerImg only wraps the memory of smallerImgBuf; no data is copied
            Mat smallerImg(sz, img.type(), smallerImgBuf.data);
            // no resizing at this level
            if( sz == img.size() )
                smallerImg = Mat(sz, img.type(), img.data, img.step);
            // resizing needed
            else
                resize(img, smallerImg, sz);
            // detect() writes its results into locations and hitsWeights;
            // locations holds the top-left corners of the detected windows
            hog->detect(smallerImg, locations, hitsWeights, hitThreshold, winStride, padding);
            Size scaledWinSize = Size(cvRound(hog->winSize.width*scale), cvRound(hog->winSize.height*scale));
            for( size_t j = 0; j < locations.size(); j++ )
            {
                // store the detected region, mapped back to the original image scale
                vec->push_back(Rect(cvRound(locations[j].x*scale),
                                    cvRound(locations[j].y*scale),
                                    scaledWinSize.width, scaledWinSize.height));
                // store the scale of this level
                if (scales) {
                    scales->push_back(scale);
                }
            }
            // store the SVM scores
            if (weights && (!hitsWeights.empty()))
            {
                for (size_t j = 0; j < locations.size(); j++)
                {
                    weights->push_back(hitsWeights[j]);
                }
            }
        }
    }

    const HOGDescriptor* hog;
    Mat img;
    double hitThreshold;
    Size winStride;
    Size padding;
    const double* levelScale;
    //typedef tbb::concurrent_vector<Rect> ConcurrentRectVector;
    ConcurrentRectVector* vec;
    //typedef tbb::concurrent_vector<double> ConcurrentDoubleVector;
    ConcurrentDoubleVector* weights;
    ConcurrentDoubleVector* scales;
};


void HOGDescriptor::detectMultiScale(
    const Mat& img, vector<Rect>& foundLocations, vector<double>& foundWeights,
    double hitThreshold, Size winStride, Size padding,
    double scale0, double finalThreshold, bool useMeanshiftGrouping) const
{
    double scale = 1.;
    int levels = 0;

    vector<double> levelScale;

    // nlevels defaults to 64
    for( levels = 0; levels < nlevels; levels++ )
    {
        levelScale.push_back(scale);
        if( cvRound(img.cols/scale) < winSize.width ||
            cvRound(img.rows/scale) < winSize.height ||
            scale0 <= 1 )
            break;
        // keep shrinking only while the scaled image is still at least as large as the detection window
        scale *= scale0;
    }
    levels = std::max(levels, 1);
    levelScale.resize(levels);

    ConcurrentRectVector allCandidates;
    ConcurrentDoubleVector tempScales;
    ConcurrentDoubleVector tempWeights;
    vector<double> foundScales;

    // process the pyramid levels in parallel with TBB
    parallel_for(BlockedRange(0, (int)levelScale.size()),
                 HOGInvoker(this, img, hitThreshold, winStride, padding, &levelScale[0], &allCandidates, &tempWeights, &tempScales));
    // copy tempScales into foundScales; back_inserter returns an iterator that appends to the given container
    std::copy(tempScales.begin(), tempScales.end(), back_inserter(foundScales));
    // clear() removes all elements from the container
    foundLocations.clear();
    // store the candidate windows in foundLocations
    std::copy(allCandidates.begin(), allCandidates.end(), back_inserter(foundLocations));
    foundWeights.clear();
    // store the candidates' confidences in foundWeights
    std::copy(tempWeights.begin(), tempWeights.end(), back_inserter(foundWeights));

    if ( useMeanshiftGrouping )
    {
        groupRectangles_meanshift(foundLocations, foundWeights, foundScales, finalThreshold, winSize);
    }
    else
    {
        // group (cluster) the overlapping rectangles
        groupRectangles(foundLocations, (int)finalThreshold, 0.2);
    }
}

// overload that does not return the detection confidences
void HOGDescriptor::detectMultiScale(const Mat& img, vector<Rect>& foundLocations,
                                     double hitThreshold, Size winStride, Size padding,
                                     double scale0, double finalThreshold, bool useMeanshiftGrouping) const
{
    vector<double> foundWeights;
    detectMultiScale(img, foundLocations, foundWeights, hitThreshold, winStride,
                     padding, scale0, finalThreshold, useMeanshiftGrouping);
}

typedef RTTIImpl<HOGDescriptor> HOGRTTI;

CvType hog_type( CV_TYPE_NAME_HOG_DESCRIPTOR, HOGRTTI::isInstance,
    HOGRTTI::release, HOGRTTI::read, HOGRTTI::write, HOGRTTI::clone);

vector<float> HOGDescriptor::getDefaultPeopleDetector()
{
    static const float detector[] = {
        0.05359386f, -0.14721455f, -0.05532170f, 0.05077307f,
        0.11547081f, -0.04268804f, 0.04635834f, ........
    };
    // return the whole detector array as a vector
    return vector<float>(detector, detector + sizeof(detector)/sizeof(detector[0]));
}
//This function returns 1981 SVM coeffs obtained from Daimler's base.
//To use these coeffs the detection window size should be (48,96)
vector<float> HOGDescriptor::getDaimlerPeopleDetector()
{
    static const float detector[] = {
        0.294350f, -0.098796f, -0.129522f, 0.078753f,
        0.387527f, 0.261529f, 0.145939f, 0.061520f,
        ........
    };
    // return the whole detector array as a vector
    return vector<float>(detector, detector + sizeof(detector)/sizeof(detector[0]));
}

}
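
The pixData table built in HOGCache::init can be checked in isolation. Below is a minimal standalone sketch, not OpenCV code, that recomputes for every pixel of a block which cells it contributes to and with what bilinear weight, using the same cellX/cellY formula and the same rule as the listing above (a neighbouring cell that falls outside the block is simply dropped). The struct `CellWeights` and the function `buildSpatialWeights` are hypothetical names chosen for illustration. With the default 16×16 block of 2×2 cells of 8×8 pixels it should reproduce count1 = 64, count2 = 128 and count4 = 64.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical struct, loosely mirroring OpenCV's PixData: up to 4 (cell, weight) pairs.
struct CellWeights {
    int cell[4];   // cell index inside the block, x-major like histOfs/nbins (x*ncellsY + y)
    float w[4];    // bilinear weight of the pixel for that cell
    int count;     // number of cells that receive a contribution (1, 2 or 4)
};

// Hypothetical helper: per-pixel spatial weights for a block of ncellsX x ncellsY cells,
// each cellW x cellH pixels, visited in the same (j outer, i inner) order as HOGCache::init.
std::vector<CellWeights> buildSpatialWeights(int ncellsX, int ncellsY, int cellW, int cellH) {
    assert(ncellsX > 0 && ncellsY > 0 && cellW > 0 && cellH > 0);
    const int bw = ncellsX * cellW, bh = ncellsY * cellH;
    std::vector<CellWeights> table;
    table.reserve(static_cast<std::size_t>(bw) * bh);
    for (int j = 0; j < bw; ++j)
        for (int i = 0; i < bh; ++i) {
            // Fractional cell coordinates; integer values fall on cell centres.
            float cx = (j + 0.5f) / cellW - 0.5f;
            float cy = (i + 0.5f) / cellH - 0.5f;
            int x0 = static_cast<int>(std::floor(cx));
            int y0 = static_cast<int>(std::floor(cy));
            float fx = cx - x0, fy = cy - y0;
            CellWeights cw{};
            // Visit the 2x2 neighbouring cells and keep those that lie inside the block.
            for (int dx = 0; dx <= 1; ++dx)
                for (int dy = 0; dy <= 1; ++dy) {
                    int x = x0 + dx, y = y0 + dy;
                    if (x < 0 || x >= ncellsX || y < 0 || y >= ncellsY) continue;
                    float wx = dx ? fx : 1.f - fx;
                    float wy = dy ? fy : 1.f - fy;
                    cw.cell[cw.count] = x * ncellsY + y;
                    cw.w[cw.count] = wx * wy;
                    ++cw.count;
                }
            table.push_back(cw);
        }
    return table;
}
```

As in OpenCV, only the pixels that reach all 4 cells have weights summing to 1; a pixel in the outer 4-pixel band keeps only its weight toward the cells inside the block, e.g. 0.5625 × 0.5625 ≈ 0.316 for the corner pixel (0, 0).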

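The non-IPP branch of normalizeBlockHistogram is the L2-Hys scheme: L2-normalize with an epsilon of 0.1·sz, clip every bin at L2HysThreshold (0.2 by default), then L2-normalize again with an epsilon of 1e-3. The sketch below repeats that arithmetic on a `std::vector<float>`; `l2HysNormalize` is a hypothetical helper, not part of OpenCV.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of OpenCV) repeating the arithmetic of the
// non-IPP branch of HOGCache::normalizeBlockHistogram (L2-Hys).
void l2HysNormalize(std::vector<float>& hist, float thresh = 0.2f) {
    assert(!hist.empty());
    const std::size_t sz = hist.size();
    float sum = 0.f;
    for (float v : hist) sum += v * v;                  // sum of squares
    float scale = 1.f / (std::sqrt(sum) + sz * 0.1f);   // same epsilon as OpenCV: 0.1 * sz
    sum = 0.f;
    for (float& v : hist) {
        v = std::min(v * scale, thresh);                // scale, then clip large bins
        sum += v * v;                                   // sum of squares after clipping
    }
    scale = 1.f / (std::sqrt(sum) + 1e-3f);             // second L2 normalization
    for (float& v : hist) v *= scale;
}
```

For a 36-bin histogram with one bin at 100 and the others at 1, the first pass scales the spike to about 0.96 and clips it to 0.2, so after renormalization it is only about 21 times larger than the other bins instead of 100 times, and the L2 norm of the result is about 0.995 (slightly below 1 because of the epsilon).
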
The HOG-related part of objdetect.hpp:

//////////////// HOG (Histogram-of-Oriented-Gradients) Descriptor and Object Detector //////////////

struct CV_EXPORTS_W HOGDescriptor
{
public:
    enum { L2Hys=0 };
    enum { DEFAULT_NLEVELS=64 };

    CV_WRAP HOGDescriptor() : winSize(64,128), blockSize(16,16), blockStride(8,8),
        cellSize(8,8), nbins(9), derivAperture(1), winSigma(-1),
        histogramNormType(HOGDescriptor::L2Hys), L2HysThreshold(0.2), gammaCorrection(true),
        nlevels(HOGDescriptor::DEFAULT_NLEVELS)
    {}

    // The constructor arguments initialize the members through the initializer list after the colon:
    // each member is initialized directly from its argument when it is constructed, instead of being
    // default-constructed first and assigned in the constructor body.
    CV_WRAP HOGDescriptor(Size _winSize, Size _blockSize, Size _blockStride,
                  Size _cellSize, int _nbins, int _derivAperture=1, double _winSigma=-1,
                  int _histogramNormType=HOGDescriptor::L2Hys,
                  double _L2HysThreshold=0.2, bool _gammaCorrection=false,
                  int _nlevels=HOGDescriptor::DEFAULT_NLEVELS)
    : winSize(_winSize), blockSize(_blockSize), blockStride(_blockStride), cellSize(_cellSize),
    nbins(_nbins), derivAperture(_derivAperture), winSigma(_winSigma),
    histogramNormType(_histogramNormType), L2HysThreshold(_L2HysThreshold),
    gammaCorrection(_gammaCorrection), nlevels(_nlevels)
    {}

    // can be initialized by loading the parameters from a file
    CV_WRAP HOGDescriptor(const String& filename)
    {
        load(filename);
    }

    HOGDescriptor(const HOGDescriptor& d)
    {
        d.copyTo(*this);
    }

    virtual ~HOGDescriptor() {}

    // size_t is an unsigned integer type whose width depends on the platform
    CV_WRAP size_t getDescriptorSize() const;
    CV_WRAP bool checkDetectorSize() const;
    CV_WRAP double getWinSigma() const;

    // virtual functions dispatch polymorphically when called through a pointer or reference
    CV_WRAP virtual void setSVMDetector(InputArray _svmdetector);

    virtual bool read(FileNode& fn);
    virtual void write(FileStorage& fs, const String& objname) const;

    CV_WRAP virtual bool load(const String& filename, const String& objname=String());
    CV_WRAP virtual void save(const String& filename, const String& objname=String()) const;
    virtual void copyTo(HOGDescriptor& c) const;

    CV_WRAP virtual void compute(const Mat& img,
                         CV_OUT vector<float>& descriptors,
                         Size winStride=Size(), Size padding=Size(),
                         const vector<Point>& locations=vector<Point>()) const;
    //with found weights output
    CV_WRAP virtual void detect(const Mat& img, CV_OUT vector<Point>& foundLocations,
                        CV_OUT vector<double>& weights,
                        double hitThreshold=0, Size winStride=Size(),
                        Size padding=Size(),
                        const vector<Point>& searchLocations=vector<Point>()) const;
    //without found weights output
    virtual void detect(const Mat& img, CV_OUT vector<Point>& foundLocations,
                        double hitThreshold=0, Size winStride=Size(),
                        Size padding=Size(),
                        const vector<Point>& searchLocations=vector<Point>()) const;
    //with result weights output
    CV_WRAP virtual void detectMultiScale(const Mat& img, CV_OUT vector<Rect>& foundLocations,
                                  CV_OUT vector<double>& foundWeights, double hitThreshold=0,
                                  Size winStride=Size(), Size padding=Size(), double scale=1.05,
                                  double finalThreshold=2.0,bool useMeanshiftGrouping = false) const;
    //without found weights output
    virtual void detectMultiScale(const Mat& img, CV_OUT vector<Rect>& foundLocations,
                                  double hitThreshold=0, Size winStride=Size(),
                                  Size padding=Size(), double scale=1.05,
                                  double finalThreshold=2.0, bool useMeanshiftGrouping = false) const;

    CV_WRAP virtual void computeGradient(const Mat& img, CV_OUT Mat& grad, CV_OUT Mat& angleOfs,
                                 Size paddingTL=Size(), Size paddingBR=Size()) const;

    CV_WRAP static vector<float> getDefaultPeopleDetector();
    CV_WRAP static vector<float> getDaimlerPeopleDetector();

    CV_PROP Size winSize;
    CV_PROP Size blockSize;
    CV_PROP Size blockStride;
    CV_PROP Size cellSize;
    CV_PROP int nbins;
    CV_PROP int derivAperture;
    CV_PROP double winSigma;
    CV_PROP int histogramNormType;
    CV_PROP double L2HysThreshold;
    CV_PROP bool gammaCorrection;
    CV_PROP vector<float> svmDetector;
    CV_PROP int nlevels;
};
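
With the defaults of the HOGDescriptor() constructor above, the window, block and cell sizes fix the descriptor length (the 3780 features computed earlier) and the number of windows scanned. A minimal sketch using a hypothetical plain struct `HogParams` instead of the OpenCV class: the descriptor length is taken as bins per cell × cells per block × blocks per window, the window count follows HOGCache::windowsInImage from the listing, and the sigma fallback assumes that getWinSigma() returns (blockSize.width + blockSize.height)/8 when winSigma < 0 (its body is not shown here).

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical plain struct holding the default values of the HOGDescriptor()
// constructor shown above; it is not the OpenCV class.
struct HogParams {
    int winW = 64, winH = 128;
    int blockW = 16, blockH = 16;
    int strideW = 8, strideH = 8;
    int cellW = 8, cellH = 8;
    int nbins = 9;
    double winSigma = -1;  // negative: derive sigma from the block size
};

// Length of one window's descriptor: bins per cell * cells per block * blocks per window.
std::size_t descriptorSize(const HogParams& p) {
    assert(p.cellW > 0 && p.cellH > 0 && p.strideW > 0 && p.strideH > 0);
    std::size_t cellsPerBlock = static_cast<std::size_t>(p.blockW / p.cellW) * (p.blockH / p.cellH);
    std::size_t blocksX = static_cast<std::size_t>((p.winW - p.blockW) / p.strideW + 1);
    std::size_t blocksY = static_cast<std::size_t>((p.winH - p.blockH) / p.strideH + 1);
    return static_cast<std::size_t>(p.nbins) * cellsPerBlock * blocksX * blocksY;
}

// Same arithmetic as HOGCache::windowsInImage: windows along x times windows along y.
int windowsInImage(int imgW, int imgH, const HogParams& p, int winStrideW, int winStrideH) {
    assert(winStrideW > 0 && winStrideH > 0);
    int nx = (imgW - p.winW) / winStrideW + 1;
    int ny = (imgH - p.winH) / winStrideH + 1;
    return nx * ny;
}

// Assumed to match HOGDescriptor::getWinSigma(): fall back to
// (blockW + blockH) / 8 when winSigma is negative.
double effectiveWinSigma(const HogParams& p) {
    return p.winSigma >= 0 ? p.winSigma : (p.blockW + p.blockH) / 8.0;
}
```

For a 640×480 image and an 8×8 window stride this gives 73 × 45 = 3285 windows. compute() and detect() call windowsInImage with the padded image size, so a non-zero padding increases the count.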

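The list of pyramid scales built at the start of detectMultiScale can also be reproduced on its own. A minimal sketch assuming the header defaults scale = 1.05 and nlevels = DEFAULT_NLEVELS = 64; `pyramidScales` is a hypothetical name, and std::lround stands in for cvRound (the two can differ only on exact .5 ties).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper that mirrors the level-selection loop of HOGDescriptor::detectMultiScale.
// std::lround replaces cvRound here (they may differ on exact .5 ties).
std::vector<double> pyramidScales(int imgW, int imgH, int winW, int winH,
                                  double scale0 = 1.05, int nlevels = 64) {
    assert(winW > 0 && winH > 0 && nlevels > 0);
    std::vector<double> levelScale;
    double scale = 1.0;
    int levels = 0;
    for (levels = 0; levels < nlevels; ++levels) {
        levelScale.push_back(scale);
        // Stop once the downscaled image can no longer hold one detection window
        // (or immediately if scale0 <= 1, i.e. no pyramid).
        if (std::lround(imgW / scale) < winW || std::lround(imgH / scale) < winH || scale0 <= 1)
            break;
        scale *= scale0;
    }
    levels = std::max(levels, 1);
    // Keep the first `levels` scales (at least one). After a size-triggered break at
    // levels > 0, this drops the last pushed scale, whose image is smaller than the window.
    levelScale.resize(static_cast<std::size_t>(levels));
    return levelScale;
}
```

For a 640×480 image and the default 64×128 window this yields 28 levels, from 1.0 up to 1.05^27 ≈ 3.73, where the image shrinks to about 171×129 pixels; the next factor (≈ 3.92) would leave fewer than 128 rows, so it is pushed and then discarded by levelScale.resize(levels).
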
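[2] Huang Dongli (黄冬丽), Dai Jianwen (戴健文), Feng Chao (冯超), et al. Trilinear interpolation algorithm in HOG feature extraction (HOG 特征提取中的三线性插值算法) [J]. Computer Knowledge and Technology (电脑知识与技术): Academic Exchange (学术交流), 2012, 8(11): 7548-7551.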

https://blog.csdn.net/gy429476195/article/details/50156813

https://blog.csdn.net/zhanghenan123/article/details/80853523

https://blog.csdn.net/huguohu2006/article/details/48681287

https://blog.csdn.net/sinat_34604992/article/details/53933004