PyTorch RNN: predicting cos from sin

A brief introduction to RNNs

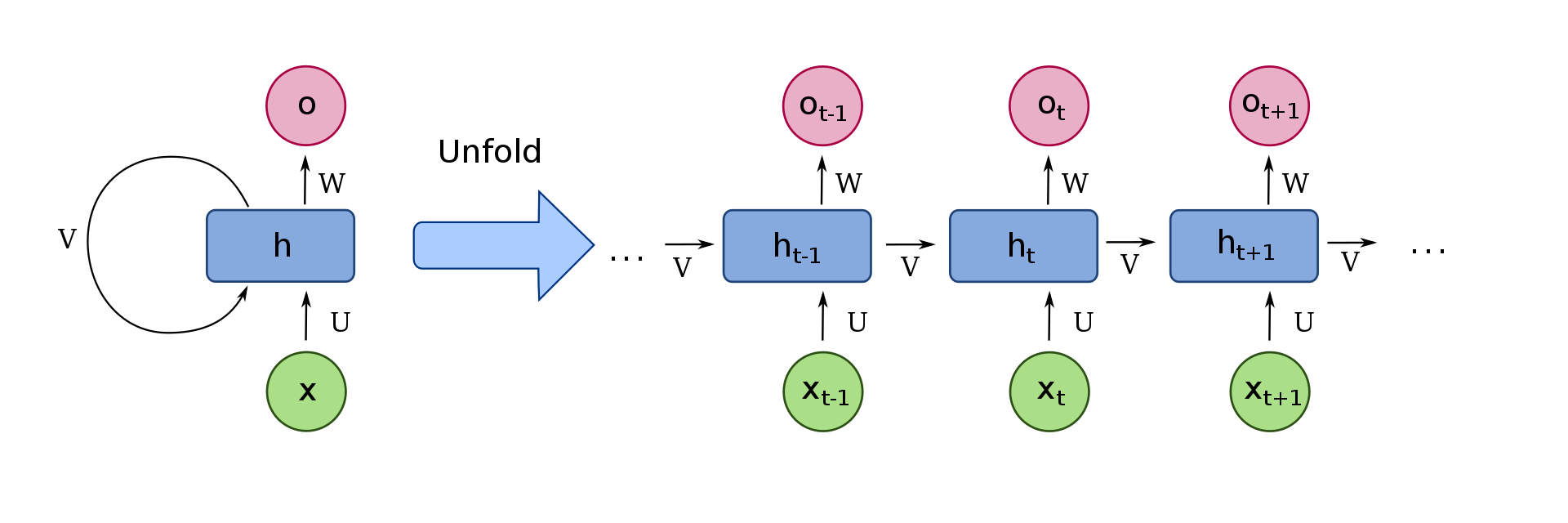

What is an RNN

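RNN stands for Recurrent Neural Network. Its defining features are memory (a hidden state carried from one time step to the next) and parameter sharing (the same weights are applied at every time step). This makes it well suited to sequence data, as in machine translation, language modeling, and speech recognition.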
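Parameter sharing is easy to see in PyTorch: one `nn.RNN` layer owns a fixed set of weight tensors that it reuses at every time step, so sequences of any length pass through the same parameters. A minimal sketch, using the same sizes as the model later in this post (`input_size=1`, `hidden_size=20`) and assuming a PyTorch version that accepts unbatched 2-D input (1.11 or later):

```python
import torch
from torch import nn

torch.manual_seed(0)
rnn = nn.RNN(input_size=1, hidden_size=20)

# the same four parameter tensors are applied at every time step
shapes = {name: tuple(p.shape) for name, p in rnn.named_parameters()}
print(shapes)

# 20*1 + 20*20 + 20 + 20 = 460, independent of the sequence length
n_params = sum(p.numel() for p in rnn.parameters())
print(n_params)

# unbatched input of shape (seq_len, input_size); no new parameters are needed
short_out, _ = rnn(torch.randn(5, 1))
long_out, _ = rnn(torch.randn(50, 1))
print(short_out.shape, long_out.shape)   # torch.Size([5, 20]) torch.Size([50, 20])
```

Whether the sequence has 5 or 50 steps, the module uses the same 460 numbers.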

Its structure

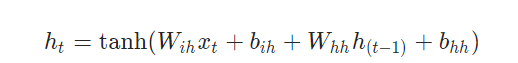

Its update formula

(From the PyTorch documentation: https://pytorch.org/docs/stable/generated/torch.nn.RNN.html?highlight=rnn#torch.nn.RNN)

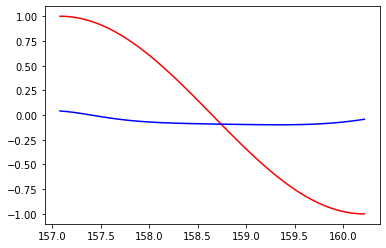

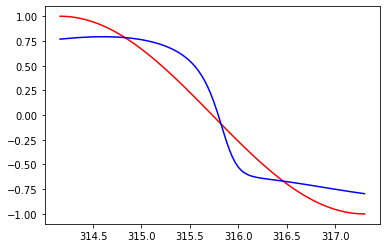
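For a single-layer `nn.RNN` with the default tanh nonlinearity, the documented update is h_t = tanh(x_t W_ih^T + b_ih + h_{t-1} W_hh^T + b_hh). The sketch below unrolls that recurrence by hand using the module's own weights (`weight_ih_l0`, `weight_hh_l0`, `bias_ih_l0`, `bias_hh_l0`) and compares the result with what `nn.RNN` returns. The sizes and the seed are arbitrary choices for illustration, and the unbatched call assumes PyTorch 1.11 or later.

```python
import torch
from torch import nn

torch.manual_seed(0)
rnn = nn.RNN(input_size=1, hidden_size=4)
x = torch.randn(6, 1)    # unbatched sequence: (seq_len, input_size)
h0 = torch.zeros(1, 4)   # initial hidden state: (num_layers, hidden_size)

out_ref, h_ref = rnn(x, h0)

W_ih, W_hh = rnn.weight_ih_l0, rnn.weight_hh_l0
b_ih, b_hh = rnn.bias_ih_l0, rnn.bias_hh_l0

h = h0[0]
outs = []
with torch.no_grad():
    for x_t in x:  # x_t has shape (input_size,)
        # h_t = tanh(x_t W_ih^T + b_ih + h_{t-1} W_hh^T + b_hh)
        h = torch.tanh(x_t @ W_ih.T + b_ih + h @ W_hh.T + b_hh)
        outs.append(h)
out_manual = torch.stack(outs)  # (seq_len, hidden_size)

print(torch.allclose(out_manual, out_ref.detach(), atol=1e-5))
```

The per-step `output` of `nn.RNN` is simply the sequence of hidden states h_1 … h_n, and the returned `hidden` is the last of them.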

sin to cos

We use a seq2seq setup here: first generate evenly spaced sample points steps = [s0, s1, s2, ..., sn] over the interval [a, b], then compute x = [x0, x1, x2, ..., xn] with xi = sin(si), and y = [y0, y1, y2, ..., yn] with yi = cos(si).
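The sampling described above fits in a small helper. `make_sin_cos` is a hypothetical name used only for this sketch; like `np.linspace`, it includes both endpoints a and b.

```python
import numpy as np

def make_sin_cos(a, b, n_points):
    """Hypothetical helper: n_points evenly spaced samples s_i on [a, b]
    (endpoints included), input x_i = sin(s_i), target y_i = cos(s_i)."""
    steps = np.linspace(a, b, n_points, dtype=np.float32)
    return steps, np.sin(steps), np.cos(steps)

steps, x, y = make_sin_cos(0.0, 2 * np.pi, 100)
print(steps.shape, x.shape, y.shape)   # (100,) (100,) (100,)
```

With (0, 2π, 100) this produces the same arrays that the plotting code in the Code section builds inline.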

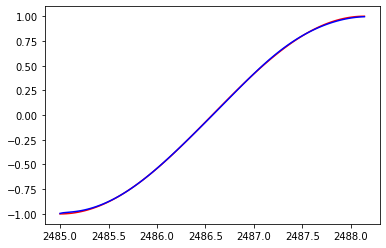

The RNN's task is to turn sequence x into sequence y, that is, to go from sin to cos (from blue to red in the figure).
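Why does this mapping need a model with memory at all? A single input value does not determine the output: sin takes the same value at π/6 and 5π/6, yet the cosines there have opposite signs. The previous sample in the sequence tells the two cases apart, because sin is rising where cos > 0 and falling where cos < 0. The step size `ds = 0.1` below is an arbitrary illustrative choice.

```python
import numpy as np

s1, s2 = np.pi / 6, 5 * np.pi / 6
same_sin = np.isclose(np.sin(s1), np.sin(s2))   # both sines are 0.5
cos1, cos2 = np.cos(s1), np.cos(s2)             # roughly +0.866 and -0.866

# looking one step back in the sequence separates the two cases
ds = 0.1
rising1 = np.sin(s1) > np.sin(s1 - ds)   # sin increasing near s1, where cos > 0
rising2 = np.sin(s2) > np.sin(s2 - ds)   # sin decreasing near s2, where cos < 0
print(same_sin, cos1, cos2, rising1, rising2)
```

An RNN can pick up this "direction of travel" through its hidden state, which a point-wise model looking at one x value at a time cannot.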

Within one sequence, y0 depends only on x0, y2 depends on [x0, x1, x2], and in general yn depends on [x0, ..., xn] (together with the initial hidden state h0).
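This dependency pattern can be checked empirically with an untrained `nn.RNN`: changing only x_k leaves outputs 0 to k-1 untouched and changes output k. The sizes, the seed, and the index `k = 4` are arbitrary assumptions for the sketch, which also assumes PyTorch 1.11 or later for unbatched input.

```python
import torch
from torch import nn

torch.manual_seed(0)
rnn = nn.RNN(input_size=1, hidden_size=8)
h0 = torch.zeros(1, 8)
x = torch.randn(10, 1)

k = 4
x_perturbed = x.clone()
x_perturbed[k] += 1.0   # change only the k-th input

with torch.no_grad():
    out, _ = rnn(x, h0)
    out_p, _ = rnn(x_perturbed, h0)

before_k_same = torch.allclose(out[:k], out_p[:k])    # outputs 0..k-1 do not see x_k
at_k_changed = not torch.allclose(out[k], out_p[k])   # output k does react
print(before_k_same, at_k_changed)
```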

Code

```python
import torch
from torch import nn
import numpy as np
import matplotlib.pyplot as plt

# 100 evenly spaced points on [0, 2π]
steps = np.linspace(0, np.pi * 2, 100, dtype=np.float32)
input_x = np.sin(steps)
target_y = np.cos(steps)

plt.plot(steps, input_x, 'b-', label='input:sin')
plt.plot(steps, target_y, 'r-', label='target:cos')
plt.legend(loc='best')
plt.show()


class RNN(nn.Module):
    def __init__(self, input_size, hidden_size):
        super(RNN, self).__init__()
        self.hidden_size = hidden_size
        self.rnn = nn.RNN(
            input_size=input_size,
            hidden_size=hidden_size,
            batch_first=True,  # only affects batched (3-D) input
        )
        # map each hidden state to one predicted value
        self.out = nn.Linear(hidden_size, 1)

    def forward(self, input, hidden):
        # output: hidden state at every time step; hidden: last hidden state
        output, hidden = self.rnn(input, hidden)
        output = self.out(output)
        return output, hidden

    def initHidden(self):
        # shape (num_layers, hidden_size) for an unbatched sequence
        # (unbatched input requires PyTorch 1.11 or later)
        hidden = torch.randn(1, self.hidden_size)
        return hidden
```
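To see which shapes flow through the model, here is a compact copy of the `RNN` class above, fed with both an unbatched sequence (what the training loop uses) and a batched one. One detail from the `nn.RNN` documentation is worth noting: `batch_first=True` changes the layout of the input and output only, not of the hidden state, which stays `(num_layers, batch, hidden_size)`. The batch size of 3 is an arbitrary choice, and the unbatched call assumes PyTorch 1.11 or later.

```python
import torch
from torch import nn

class RNN(nn.Module):
    # same structure as the class defined above
    def __init__(self, input_size, hidden_size):
        super().__init__()
        self.hidden_size = hidden_size
        self.rnn = nn.RNN(input_size=input_size, hidden_size=hidden_size, batch_first=True)
        self.out = nn.Linear(hidden_size, 1)

    def forward(self, input, hidden):
        output, hidden = self.rnn(input, hidden)
        return self.out(output), hidden

model = RNN(input_size=1, hidden_size=20)

# unbatched: input (seq_len, 1), hidden (num_layers, hidden_size)
x = torch.randn(100, 1)
y, h = model(x, torch.zeros(1, 20))
print(y.shape, h.shape)     # torch.Size([100, 1]) torch.Size([1, 20])

# batched with batch_first=True: input (batch, seq_len, 1),
# but hidden is still (num_layers, batch, hidden_size)
xb = torch.randn(3, 100, 1)
yb, hb = model(xb, torch.zeros(1, 3, 20))
print(yb.shape, hb.shape)   # torch.Size([3, 100, 1]) torch.Size([1, 3, 20])
```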

rnn = RNN(input_size = 1, hidden_size = 20)

hidden = rnn.initHidden()

optimizer = torch.optim.Adam(rnn.parameters(), lr=0.001)

loss_func = nn.MSELoss()

plt.figure(1, figsize=(12, 5))

plt.ion() # 开启交互模式

loss_list = []

for step in range(800):

start, end = step * np.pi, (step + 1) * np.pi

steps = np.linspace(start, end, 100, dtype=np.float32)

x_np = np.sin(steps)

y_np = np.cos(steps)

#(100, 1) 不加batch_size

x = torch.from_numpy(x_np).unsqueeze(-1)

y = torch.from_numpy(y_np).unsqueeze(-1)

y_predict, hidden = rnn(x, hidden)

hidden = hidden.data # 重新包装数据,断掉连接,不然会报错

loss = loss_func(y_predict, y)

optimizer.zero_grad() # 梯度清零

loss.backward() # 反向传播

optimizer.step() # 梯度下降

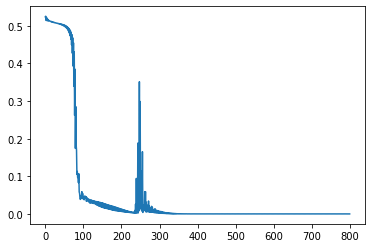

loss_list.append(loss.item())

if step % 10 == 0 or step % 10 == 1:

plt.plot(steps, y_np.flatten(), 'r-')

plt.plot(steps, y_predict.data.numpy().flatten(), 'b-')

plt.draw();

plt.pause(0.05)

plt.ioff()

plt.show()

plt.plot(loss_list)

效果
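The training loop carries the hidden state from one window to the next and detaches it every time. The sketch below reproduces why: after `backward()` has run on the first window, that window's graph is freed, so a second `backward()` that still reaches into it raises a `RuntimeError`. `two_windows` is a hypothetical helper written only for this demonstration, and the sizes and seed are arbitrary.

```python
import torch
from torch import nn

torch.manual_seed(0)
rnn = nn.RNN(input_size=1, hidden_size=4)
x1, x2 = torch.randn(10, 1), torch.randn(10, 1)

def two_windows(detach):
    """Run two consecutive windows, carrying the hidden state across them."""
    h = torch.zeros(1, 4)
    out, h = rnn(x1, h)
    out.sum().backward()   # frees the first window's graph
    if detach:
        h = h.detach()     # keep the value, drop the link to the old graph
    out, h = rnn(x2, h)
    out.sum().backward()   # fails if h still points into the freed graph
    return True

ok_with_detach = two_windows(detach=True)
try:
    two_windows(detach=False)
    failed_without_detach = False
except RuntimeError:
    failed_without_detach = True
print(ok_with_detach, failed_without_detach)   # True True
```

Detaching keeps the hidden state's value, so the model still carries information across windows, while gradients flow only within each 100-step window; this amounts to truncated backpropagation through time. `hidden.data`, as in the original version of the loop, has the same effect here, but `detach()` is generally preferred because `.data` bypasses autograd's safety checks.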
