Fitting sin(x) with a 3-layer neural network written in NumPy

Code

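# -*- coding: utf-8 -*-
"""
Created on Wed Feb 23 20:37:01 2022

@author: koneko
"""

import numpy as np
import matplotlib.pyplot as plt


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def mean_squared_error(y, t):
    # Half the summed squared error (not divided by the sample count),
    # so its gradient with respect to y is simply y - t.
    return 0.5 * np.sum((y-t)**2)


class Sigmoid:
    def __init__(self):
        self.out = None  # forward output, cached for use in backward

    def forward(self, x):
        out = sigmoid(x)
        self.out = out
        return out

    def backward(self, dout):
        # Derivative of the sigmoid: out * (1 - out)
        dx = dout * (1.0 - self.out) * self.out
        return dx


# Training data: 1000 points of sin(x) on [-pi, pi]
x = np.linspace(-np.pi, np.pi, 1000)
y = np.sin(x)
plt.plot(x,y)

# One sample per column: both arrays get shape (1, 1000)
x = x.reshape(1, x.size)
y = y.reshape(1, y.size)

# Initialize the weights (layer sizes 1 -> 3 -> 2 -> 1)
W1 = np.random.randn(3,1)
b1 = np.random.randn(3,1)
W2 = np.random.randn(2,3)
b2 = np.random.randn(2,1)
W3 = np.random.randn(1,2)
b3 = np.random.randn(1,1)

sig1 = Sigmoid()
sig2 = Sigmoid()

lr = 0.001  # learning rate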

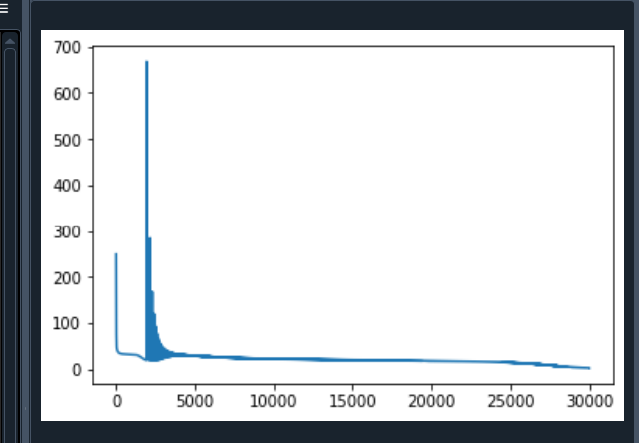
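As a side check on the setup above, the sketch below traces array shapes through one forward pass of the 1-3-2-1 network. It assumes the same one-sample-per-column layout as the script (inputs of shape `(1, N)`). The helper name `forward_shapes`, the seeded generator, and the small `N` are illustrative choices that do not appear in the original code.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def forward_shapes(n_samples=5, seed=0):
    """Hypothetical helper: return the shape of each array in one forward pass."""
    rng = np.random.default_rng(seed)
    x = np.linspace(-np.pi, np.pi, n_samples).reshape(1, n_samples)

    W1, b1 = rng.standard_normal((3, 1)), rng.standard_normal((3, 1))
    W2, b2 = rng.standard_normal((2, 3)), rng.standard_normal((2, 1))
    W3, b3 = rng.standard_normal((1, 2)), rng.standard_normal((1, 1))

    # Each bias has shape (units, 1) and is broadcast across the N sample columns.
    c1 = sigmoid(W1 @ x + b1)
    c2 = sigmoid(W2 @ c1 + b2)
    y_pred = W3 @ c2 + b3
    return {"x": x.shape, "c1": c1.shape, "c2": c2.shape, "y_pred": y_pred.shape}


shapes = forward_shapes()
for name, shape in shapes.items():
    print(name, shape)
```

With `n_samples=5` the hidden activations have shapes `(3, 5)` and `(2, 5)`, and the prediction has the same `(1, 5)` shape as the input, which is why `y_pred - y` in the training loop needs no reshaping.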

for i in range(30000):
    # Forward pass
    a1 = W1 @ x + b1
    c1 = sig1.forward(a1)
    a2 = W2 @ c1 + b2
    c2 = sig2.forward(a2)
    y_pred = W3 @ c2 + b3
    #y_pred = W2 @ c1 + b2

    Loss = mean_squared_error(y, y_pred)
    print(f"Loss[{i}]: {Loss}")

    # Backward pass: propagate dLoss/dy_pred back through the layers
    dy_pred = y_pred - y
    dc2 = W3.T @ dy_pred
    da2 = sig2.backward(dc2)
    dc1 = W2.T @ da2
    da1 = sig1.backward(dc1)

    # Partial derivatives of Loss with respect to each layer's parameters
    dW3 = dy_pred @ c2.T
    db3 = np.sum(dy_pred)
    dW2 = da2 @ c1.T
    db2 = np.sum(da2, axis=1)
    db2 = db2.reshape(db2.size, 1)
    dW1 = da1 @ x.T
    db1 = np.sum(da1, axis=1)
    db1 = db1.reshape(db1.size, 1)

    # Gradient descent update
    W3 -= lr*dW3
    b3 -= lr*db3
    W2 -= lr*dW2
    b2 -= lr*db2
    W1 -= lr*dW1
    b1 -= lr*db1

    # Every 100 iterations, redraw the target curve and the current fit
    if i % 100 == 99:
        plt.cla()
        plt.plot(x.T,y.T)
        plt.plot(x.T,y_pred.T)

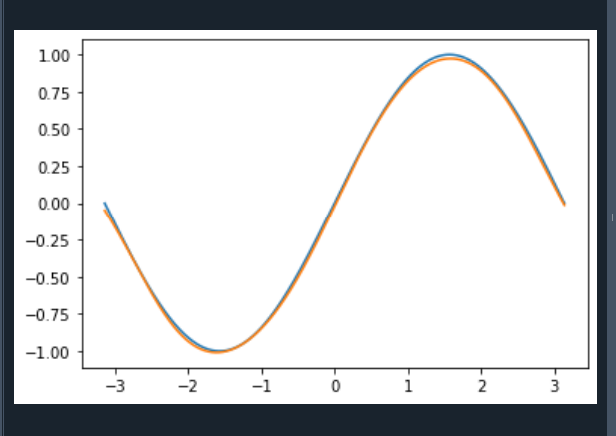
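One way to gain confidence in the hand-written backward pass is a finite-difference gradient check. The sketch below repeats the same forward and backward formulas as the training loop inside a function, then compares each analytic gradient entry against a central difference of the loss. The function names `loss_and_grads` and `max_gradient_error`, the step size `eps`, and the 20-point input are assumptions made for this illustration. The bias gradients use `keepdims=True`, which gives the same values as the `np.sum` plus `reshape` pattern in the script.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def loss_and_grads(params, x, y):
    """Forward and backward pass with the same formulas as the training loop."""
    W1, b1, W2, b2, W3, b3 = params
    c1 = sigmoid(W1 @ x + b1)
    c2 = sigmoid(W2 @ c1 + b2)
    y_pred = W3 @ c2 + b3
    loss = 0.5 * np.sum((y_pred - y) ** 2)

    dy_pred = y_pred - y
    da2 = (W3.T @ dy_pred) * c2 * (1.0 - c2)
    da1 = (W2.T @ da2) * c1 * (1.0 - c1)
    grads = [
        da1 @ x.T, np.sum(da1, axis=1, keepdims=True),
        da2 @ c1.T, np.sum(da2, axis=1, keepdims=True),
        dy_pred @ c2.T, np.sum(dy_pred, axis=1, keepdims=True),
    ]
    return loss, grads


def max_gradient_error(params, x, y, eps=1e-5):
    """Largest absolute gap between analytic and central-difference gradients."""
    _, grads = loss_and_grads(params, x, y)
    worst = 0.0
    for p, g in zip(params, grads):
        for idx in np.ndindex(p.shape):
            old = p[idx]
            p[idx] = old + eps
            loss_plus, _ = loss_and_grads(params, x, y)
            p[idx] = old - eps
            loss_minus, _ = loss_and_grads(params, x, y)
            p[idx] = old  # restore the parameter before moving on
            numeric = (loss_plus - loss_minus) / (2 * eps)
            worst = max(worst, abs(numeric - g[idx]))
    return worst


rng = np.random.default_rng(0)
x = np.linspace(-np.pi, np.pi, 20).reshape(1, 20)
y = np.sin(x)
# Parameter order: W1, b1, W2, b2, W3, b3
shapes = [(3, 1), (3, 1), (2, 3), (2, 1), (1, 2), (1, 1)]
params = [rng.standard_normal(s) for s in shapes]
gradient_error = max_gradient_error(params, x, y)
print(f"max |analytic - numeric| = {gradient_error:.2e}")
```

If the printed gap is tiny compared with the gradient magnitudes, the analytic expressions for `dW1` through `db3` agree with the numerical derivative of the loss.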

Result
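To complement the plot with numbers, a fit can also be summarized by a few error measures. The sketch below is a minimal example, assuming `y` and `y_pred` are arrays of the same shape as in the script. The helper name `fit_report` is hypothetical, and the constant-zero prediction is only a stand-in so that the block runs on its own; it is not the output of the trained network.

```python
import numpy as np


def fit_report(y, y_pred):
    """Hypothetical helper: summarize how far y_pred is from y."""
    err = np.asarray(y_pred) - np.asarray(y)
    return {
        "half_sse": 0.5 * np.sum(err ** 2),  # the quantity the script prints as Loss
        "rmse": np.sqrt(np.mean(err ** 2)),   # root-mean-square error per point
        "max_abs": np.max(np.abs(err)),       # worst-case deviation
    }


# Illustration only: a constant-zero prediction stands in for the network output.
x = np.linspace(-np.pi, np.pi, 1000).reshape(1, 1000)
y = np.sin(x)
report = fit_report(y, np.zeros_like(y))
for name, value in report.items():
    print(f"{name}: {value:.4f}")
```

Calling `fit_report(y, y_pred)` after the training loop would report the same measures for the actual fit; because `half_sse` grows with the number of samples, `rmse` is the easier figure to compare across different sample counts.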
