These are some notes I took while learning the Ubuntu operating system.

(Ask Ubuntu & Fedora)

1-- Hard link : The ln command takes different parameters. A hard link is a second name for the same file data (the same inode), not a backup copy. If you edit the file through either name, the change is visible through the other name immediately. If you delete the original name, the data stays available through the other name; it is only freed when the last name is removed. A hard link can only point to a single file, not to a directory. ln A.txt B.txt

2-- Symbolic link : Unlike a hard link, a symbolic link is a small file that stores the path of its target, so it can also point to a directory. If the target is deleted, the link is left dangling. It is used more often than a hard link. ln -s A.txt B.txt
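The difference between the two kinds of link is easiest to see by trying it. The following is a minimal sketch that works in a throwaway directory; the file names hard.txt and soft.txt are made up for the illustration.

```shell
#!/bin/bash
# Work in a temporary directory so no real files are touched.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

echo "hello" > A.txt
ln A.txt hard.txt        # hard link: a second name for the same inode
ln -s A.txt soft.txt     # symbolic link: a small file that stores the path "A.txt"

# -ef is true when both names refer to the same device and inode.
if [ A.txt -ef hard.txt ]; then same_inode="yes"; else same_inode="no"; fi

echo "world" >> hard.txt # an edit through one name is visible through the other
rm A.txt                 # remove the original name

hard_lines=$(wc -l < hard.txt)   # the data is still reachable through hard.txt
# -e follows the link, so it fails once the target is gone.
if [ -e soft.txt ]; then soft_state="ok"; else soft_state="dangling"; fi

echo "same inode: $same_inode"
echo "lines readable through hard.txt: $hard_lines"
echo "soft.txt after deleting A.txt: $soft_state"
```

After A.txt is removed, hard.txt still holds both lines, while soft.txt points at a name that no longer exists.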

3-- cat : cat prints the content of text files (.txt, .py, .c and so on) in the terminal without opening them in an editor. Usage: cat filename.suffix. zcat does the same for gzip-compressed files: zcat filename.gz.

4-- du -sb; df -i : du -sb prints the total size of the current directory in bytes; df -i prints the inode usage of every mounted file system.

5-- ln : Already explained in points 1 and 2.

6-- fdisk : sudo fdisk -l /dev/sda prints the partition table of the device: its size, sectors, partitions and so on.

7-- df : df / shows the usage of the file system mounted at /. df without arguments lists all mounted file systems, and df -i shows their inode usage.

8-- Running sudo apt-get update failed with two errors: "Could not get lock /var/lib/dpkg/lock" and "Unable to lock the administration directory (/var/lib/dpkg/), is another process using it?".

These two errors tell us to find out whether another process is using dpkg. Type this command into the Terminal: sudo lsof /var/lib/dpkg/lock. It returns something like this:

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

unattend 9241 root 5uW REG 8,1 0 699848 /var/lib/dpkg/lock

So we need to kill that process: sudo kill -9 9241 (the format is sudo kill -9 <PID>). If the process is an automatic update job, which the truncated name "unattend" suggests here, waiting for it to finish is the safer choice.

After these steps apt-get works normally again.

9-- While I was working in the Ubuntu Terminal, it kept receiving Ctrl + C, which stopped the process running in the Terminal. Our Ubuntu is installed in VMware as a virtual machine, and the cause was a program running on the host system: YouDao Dictionary. Closing that program on the host solves the problem.

10-- Error: Unable to find remote helper for 'https': This error showed up when I was pushing C files to the remote repository. The answer I found says that /usr/lib/git-core is not in the system's PATH. So we add it to PATH to make the git helper commands available: PATH=$PATH:/usr/lib/git-core.

11-- When I tried to switch to root, it failed with an authentication failure. The reason is that no root password had been set before. Set one with this command: sudo passwd root. It first asks for the password of the current user and then for the new root password.

12-- Using git to manage my code.

These are the steps to upload your code to github.com:

Step1 : Use cd to go to the directory whose code you want to manage. Inside the directory, run the first git command: git init. This command turns the directory into a local repository. The later steps are based on this directory.

Step2 : Check the status of the directory with git status. It shows which files are new or changed. Then add the new files to the next commit: git add filename.

Step3 : After adding the new files, commit them to the local repository: git commit -m "message". Afterwards run git status again to make sure that no new files are left to commit.

Step4 : There are two cases. If the local repository is not yet connected to a remote repository on the github.com servers, run: git remote add origin https://github.com/<username>/<repository-name>.git. After this, the local repository is linked to the remote repository on github.com. Notice: the repository must already exist in your github.com account. If the local repository is already linked to a repository on github.com, skip this step.

Step5 : The last step is: git push -u origin master.
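The five steps can be tried without a GitHub account. The sketch below is only an illustration under assumptions: a bare repository in a temporary directory stands in for the github.com repository, and the file name, user name and e-mail address are made up.

```shell
#!/bin/bash
set -e
base=$(mktemp -d)
remote="$base/remote.git"     # stand-in for the repository on github.com
work="$base/project"

git init --quiet --bare "$remote"

mkdir "$work"
cd "$work"
git init --quiet                             # Step1: make the directory a local repository
git symbolic-ref HEAD refs/heads/master      # make sure the branch is called master
echo 'int main(void) { return 0; }' > main.c
git status --short                           # Step2: main.c is listed as untracked
git add main.c
git -c user.name="Example" -c user.email="example@example.com" \
    commit --quiet -m "Add main.c"           # Step3: commit to the local repository
git remote add origin "$remote"              # Step4: link the local and remote repositories
git push --quiet -u origin master            # Step5: upload the commit

pushed=$(git --git-dir="$remote" log --oneline master | wc -l)
echo "commits on the remote: $pushed"
```

With a real GitHub repository, only the value passed to git remote add origin changes.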

These are the steps to clone files from github.com:

Step1 : You don't need to create a new directory on your local computer. Just type this in your Terminal: git clone https://github.com/githubusername/local-repository-name.git

13-- mkfs : Short for "make file system"; it creates a file system on a device.

14-- badblocks : This command checks whether a storage device has bad sectors. It takes a long time.

15-- mount : The mount command is important. Before you can work with a storage device, it must be mounted. mount -l lists all mounted devices. mount /dev/sda1 /mnt mounts the device on a directory (the mount point can only be left out when /etc/fstab has an entry for the device); once it is mounted, you can use it. Before you eject the device, unmount it first: umount /dev/sda1. Then you can eject it. For example, to work with a flash drive:

Step1 : lsblk Lists the block devices connected to the system (df only lists file systems that are already mounted).

Step2 : mkdir /media/flash Creates a directory to use as the mount point for the flash drive.

Step3 : mount /dev/devicename /media/flash Mounts the device on that directory.

Step4 : Work in /media/flash to read and write the files on the flash drive.

16-- umount : umount /dev/sdb1

17-- rm -rf : rm -rf directory deletes a whole directory and everything in it, without asking for confirmation.

18-- cp -r : cp -r /original-directory /destination-directory copies a whole directory.

19-- dumpe2fs :

20-- e2label : e2label /dev/sdb1 MM1994UESTC changes the label of the file system on the device.

21-- hdparm : hdparm /dev/sda1 shows the parameters of a device connected to the operating system.

22-- The suffix of a file tells us how it was compressed:

*.Z compressed by compress

*.gz compressed by gzip

*.bz2 compressed by bzip2

*.tar not compressed, only packed by tar

*.tar.gz packed by tar and compressed by gzip

*.tar.bz2 packed by tar and compressed by bzip2

The Compress Command:

gzip :

bzip2 :

gunzip :

tar -jcvf filename.tar.bz2 filename (pack and compress with bzip2)

tar -jxvf filename.tar.bz2 -C directory (extract into directory)

tar -zcvf filename.tar.gz filename (pack and compress with gzip)

tar -zxvf filename.tar.gz -C directory (extract into directory)

The package command:

tar : tar -cf directoryname.tar original-filename

Compress: tar -zcvf target.tar.gz /source/*

Extract: tar -xzvf target.tar.gz -C /dir/
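A quick round trip shows how the pack and extract commands fit together. This is a small sketch in a temporary directory; the names source, restored and notes.txt are made up for the example.

```shell
#!/bin/bash
workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir source restored
echo "some notes" > source/notes.txt

tar -zcf notes.tar.gz source          # -c create, -z gzip, -f archive name
tar -ztf notes.tar.gz                 # -t list the archive without extracting it
tar -zxf notes.tar.gz -C restored     # -x extract, -C directory to extract into

restored_text=$(cat restored/source/notes.txt)
echo "restored: $restored_text"
```

The directory given to -C must already exist. Replacing -z with -j does the same with bzip2, provided bzip2 is installed.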

23-- mv : A command that renames a file: mv A.txt B.txt

It can also move a file to another place.

24-- alias : Gives a command another name. E.g. alias lm='ls -al' means that lm stands for the command ls -al.

25-- declare -i number=$RANDOM*10/32768; echo $number : RANDOM returns an integer between 0 and 32767, so this prints a random integer between 0 and 9.
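Why the result stays between 0 and 9: the largest value of RANDOM is 32767, and 32767*10/32768 is 9 in integer arithmetic. The sketch below checks this by drawing 1000 numbers; the counter name out_of_range is made up for the example.

```shell
#!/bin/bash
# The integer attribute (-i) makes bash evaluate the right-hand side
# of an assignment as an arithmetic expression.
declare -i number out_of_range=0

for ((i = 0; i < 1000; i++)); do
    number=$RANDOM*10/32768
    if ((number < 0 || number > 9)); then
        out_of_range+=1          # never happens if the reasoning above holds
    fi
done

echo "last draw: $number"
echo "draws outside 0..9: $out_of_range"
```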

26-- history :

27-- vim -r .filename.swp : Recovers the unsaved changes of a file from its swap file after vim was killed before the file could be saved.

28-- Installing MySQL on Ubuntu.

Step1 : sudo apt-get install mysql-server

Step2 : sudo apt-get install mysql-client

Step3 : sudo apt-get install libmysqlclient-dev

After these steps, check whether mysql-server is installed and running:

Command: sudo netstat -tap | grep mysql

If the output shows a mysql socket in the LISTEN state, mysql-server has been installed successfully.

29-- How to remove software that we no longer want.

Step1 : sudo apt-get remove mysql-server mysql-client mysql-common

Step2 : sudo apt-get autoremove

Step3 : sudo apt-get autoclean

If reinstalling the MySQL server runs into problems, repeat the three steps above and then run the following commands:

Step4 : sudo apt-get remove --purge mysql-\*

Step5 : sudo apt-get install --reinstall mysql-common (the most important command!)

Step6 : sudo apt-get install mysql-server mysql-client

We can also use this command: sudo dpkg --purge <package>

30-- How to stop/start mysql-server on Ubuntu?

sudo service mysql start (Notice: if the service may already be running, sudo service mysql restart is the better choice. To find out whether the mysql service is running, use: service mysql status)

sudo service mysql stop

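31-- Error: could not get lock /var/lib/dpkg/lock - open. A likely cause is that another program is running and holds the lock, so the resource is unavailable. The lock can also be left behind when a previous installation did not finish properly.

Solution: make sure that no other apt or dpkg process is still running, then enter the following commands

sudo rm /var/cache/apt/archives/lock

sudo rm /var/lib/dpkg/lock

After deleting the files above, the following problem may appear (I ran into it on Kylin): dpkg was interrupted, you must manually run sudo dpkg --configure -a to correct the problem. If running that command as suggested still does not solve it, this is the fix:

sudo rm /var/lib/dpkg/updates/*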

sudo apt-get update

sudo apt-get upgrade

32-- The shutdown command cannot be used (command not found):

solution1:

echo $PATH

PATH=$PATH:/sbin

solution2:

/sbin/shutdown

33-- yum Error: Cannot retrieve repository metadata (repomd.xml) for repository: xxxxx:

The problem: while using Fedora 14 I ran into the error above. The Fedora repositories could not be used, or their metadata could not be retrieved. My guess was that this Fedora release is too old and its original repositories are no longer available, so the repository configuration has to be updated. After searching for a while I found that the NetEase (163) mirror no longer carries this release, although many posts recommend adding it. As of 2017-11-03 the mirror directory only contains a README with the following content:

ATTENTION
======================================
The contents of this directory have been moved to our archives available at: http://archives.fedoraproject.org/pub/archive/fedora/
If you are having troubles finding something there please stop by #fedora-admin on irc.freenode.net

So what we need is http://archives.fedoraproject.org/pub/archive/fedora/. We add the following sources:

baseurl=http://archives.fedoraproject.org/pub/archive/fedora/linux/releases/14/Everything/x86_64/os/
baseurl=http://archives.fedoraproject.org/pub/archive/fedora/linux/releases/14/Everything/x86_64/debug/
baseurl=http://archives.fedoraproject.org/pub/archive/fedora/linux/releases/14/Everything/source/SRPMS/

The steps to update fedora.repo are as follows:

1. Edit fedora.repo (location: /etc/yum.repos.d/fedora.repo)

vi /etc/yum.repos.d/fedora.repo

After the change, fedora.repo looks like this:

[fedora]
name=Fedora $releasever - $basearch
failovermethod=priority
#baseurl=http://download.fedoraproject.org/pub/fedora/linux/releases/$releasever/Everything/$basearch/os/
baseurl=http://archives.fedoraproject.org/pub/archive/fedora/linux/releases/14/Everything/x86_64/os/
#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=fedora-$releasever&arch=$basearch
enabled=1
metadata_expire=7d
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-fedora-$basearch

[fedora-debuginfo]
name=Fedora $releasever - $basearch - Debug
failovermethod=priority
#baseurl=http://download.fedoraproject.org/pub/fedora/linux/releases/$releasever/Everything/$basearch/debug/
baseurl=http://archives.fedoraproject.org/pub/archive/fedora/linux/releases/14/Everything/x86_64/debug/
#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=fedora-debug-$releasever&arch=$basearch
enabled=0
metadata_expire=7d
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-fedora-$basearch

[fedora-source]
name=Fedora $releasever - Source
failovermethod=priority
#baseurl=http://download.fedoraproject.org/pub/fedora/linux/releases/$releasever/Everything/source/SRPMS/
baseurl=http://archives.fedoraproject.org/pub/archive/fedora/linux/releases/14/Everything/source/SRPMS/
#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=fedora-source-$releasever&arch=$basearch
enabled=0
metadata_expire=7d
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-fedora-$basearch

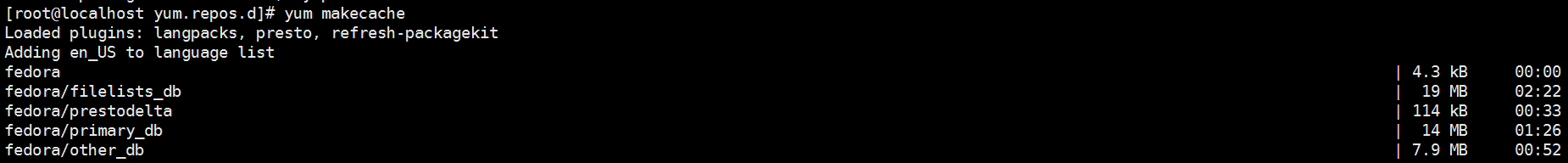

2. After the change, run the following commands:

yum clean all

yum makecache

OK, yum can be used now. The loading process of yum makecache looks like this:

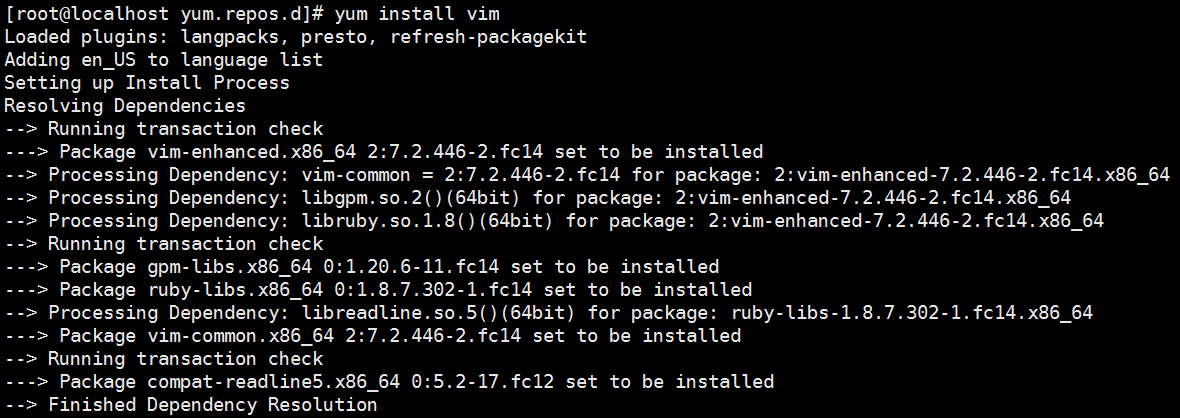

3. Test by installing vim:

yum install vim

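OK, done! The error message shown when something goes wrong is important: the way to solve a problem like this is to first work out what actually caused it, and then fix that.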

Official Fedora archive (mirror outside China): http://archives.fedoraproject.org/pub/archive/fedora/linux/releases/

NetEase mirror (mirror inside China; some old releases have been removed): http://mirrors.163.com/fedora/updates/

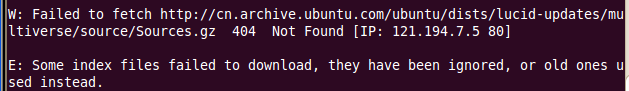

34-- Failed to fetch http://cn.archive.ubuntu.com/xxx/xxx/xxx/*.gz (the Ubuntu source no longer exists and has to be updated):

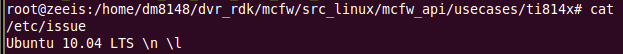

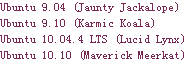

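To solve this, first determine which Ubuntu version we are running, with the following command:

cat /etc/issue

Once we know the version, we can find the matching source links in the archive for old Ubuntu releases. All old releases are available at:

http://old-releases.ubuntu.com/ Here we can first look up the code name of Ubuntu 10.04 in the releases directory.

As the figure shows, the code name of Ubuntu 10.04 is Lucid Lynx.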

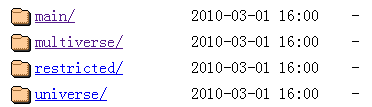

Using the code name, we find the related files here: http://old-releases.ubuntu.com/ubuntu/dists/lucid-xxx/

We edit the following file and replace its content:

sudo gedit /etc/apt/sources.list

Insert the following content (if your version is different, replace lucid with the code name of your release):

deb http://old-releases.ubuntu.com/ubuntu/ lucid main restricted universe multiverse
deb http://old-releases.ubuntu.com/ubuntu/ lucid-updates main restricted universe multiverse
deb http://old-releases.ubuntu.com/ubuntu/ lucid-security main restricted universe multiverse
deb http://old-releases.ubuntu.com/ubuntu/ lucid-proposed main restricted universe multiverse
deb http://old-releases.ubuntu.com/ubuntu/ lucid-backports main restricted universe multiverse
deb-src http://old-releases.ubuntu.com/ubuntu/ lucid main restricted universe multiverse
deb-src http://old-releases.ubuntu.com/ubuntu/ lucid-updates main restricted universe multiverse
deb-src http://old-releases.ubuntu.com/ubuntu/ lucid-security main restricted universe multiverse
deb-src http://old-releases.ubuntu.com/ubuntu/ lucid-proposed main restricted universe multiverse
deb-src http://old-releases.ubuntu.com/ubuntu/ lucid-backports main restricted universe multiverse

Save and exit, then test the updated sources. Remember to delete all source links that no longer work, or comment them out with #. (Here the Sources.gz of every directory has been added.)

The main, restricted, universe and multiverse directories all contain a Sources.gz file.

The corresponding Sources.gz file under the release directory:

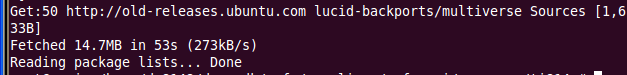

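After the change, we need to refresh the apt cache:

sudo apt-get update

The update process looks like this:

These generic old-release sources are not particularly slow, and they are comparatively stable, so I recommend them.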

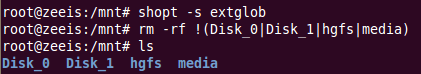

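35-- How to delete some files and keep some other files in a local folder?

As shown below, we need to delete everything except the hgfs, Disk_0, Disk_1 and media folders. Proceed as follows:

Run the following commands (make sure that extglob mode is turned on):

shopt -s extglob # turn on extglob mode

rm -fr !(hgfs|Disk_0|Disk_1|media)

This deletes everything except the entries listed inside !( ).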

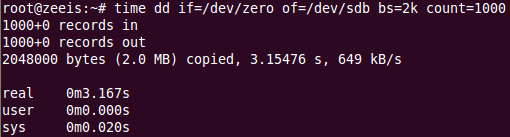
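In a script, or in a shell without extglob, the same result can be reached with find. This is an alternative to the extglob pattern above, not the method from the original note; the sketch runs in a temporary directory, and the entries old_logs, junk.tmp and notes.bak are made up for the example.

```shell
#!/bin/bash
workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir hgfs Disk_0 Disk_1 media old_logs
touch junk.tmp notes.bak

# Delete every top-level entry except the four directories to keep.
# -mindepth 1 skips "." itself, -maxdepth 1 stops find from descending.
find . -mindepth 1 -maxdepth 1 \
    ! -name hgfs ! -name Disk_0 ! -name Disk_1 ! -name media \
    -exec rm -rf {} +

remaining=$(ls | wc -l)
echo "entries left: $remaining"
ls
```

Running the find command without the -exec part first prints what would be deleted, which is a cheap safety check before using rm -rf.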

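36-- Using dd to test disk performance and to copy data to a target (note that of=/dev/sdb overwrites the beginning of that disk, so only run this on a disk without data you need):

time dd if=/dev/zero of=/dev/sdb bs=2k count=1000

Result:

Explanation of the parameters:

time: measures the time (real is the elapsed time, user is the time spent in user mode, sys is the time spent in kernel mode)

dd: copies data, reading from if (input file) and writing to of (output file)

if=/dev/zero: causes no disk reads, so it can be used to measure pure write speed (this is the input; change it to /dev/sdb to read from the disk and measure its read performance)

of=/dev/null: causes no disk writes, so it can be used to measure pure read speed (this is the output; change it to /dev/sdb to write to disk sdb and measure its write performance)

bs: the size of each read or write, i.e. the size of one block

count: the number of blocks; bs times count is the amount of data transferred. The larger the amount of data, the more accurate the result; run the test several times and take the average

Here we can see that the write speed of the sdb device is 649 kB/s.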

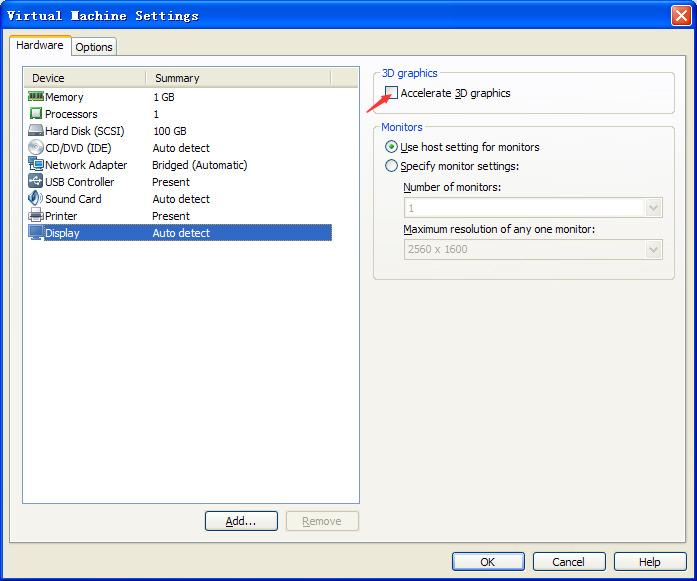
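To try the command without risking a disk, the output can go to an ordinary file instead of a device. The sketch below assumes GNU dd (conv=fsync makes dd flush the data to disk before it exits, so the page cache does not flatter the timing); the temporary file is made up for the example.

```shell
#!/bin/bash
target=$(mktemp)     # a scratch file instead of /dev/sdb

# Same block size and count as above: 2048 bytes * 1000 blocks.
time dd if=/dev/zero of="$target" bs=2k count=1000 conv=fsync

size_bytes=$(wc -c < "$target")
echo "bytes written: $size_bytes"
rm -f "$target"
```

bs times count gives 2048 * 1000 = 2,048,000 bytes, which is a small sample; a larger count gives a steadier number.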

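37-- After installing an Ubuntu 10.0 virtual machine in VMware 10, the desktop of the virtual machine was displayed incorrectly:

No window borders are shown, and the launcher on the left edge of Ubuntu cannot be used. A possible cause is that the 3D option is enabled in the virtual machine settings; untick the 3D option and start the virtual machine again to solve the problem.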

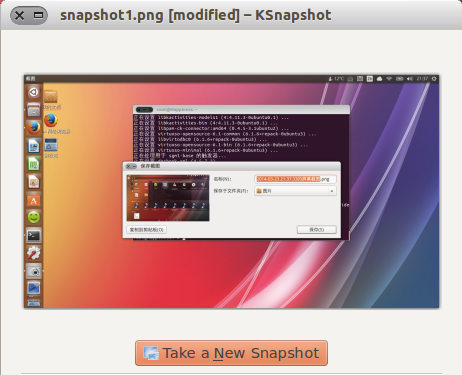

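38 -- Installing the screenshot tool ksnapshot on Ubuntu:

sudo apt-get install ksnapshot

After the installation it looks as shown below (type ksnapshot in the terminal, e.g. ubuntu-mm:~$ ksnapshot, to start the tool):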

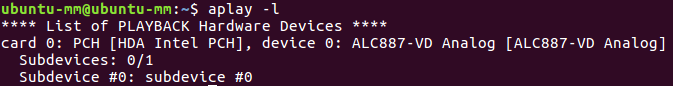

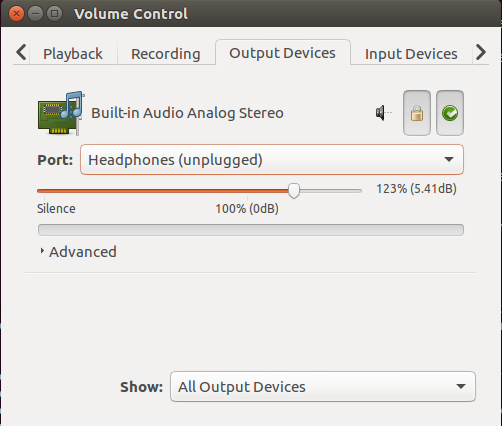

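39 -- No sound from the front headphone jack after Ubuntu starts:

In this case, first suspect the device selection: the default output of the sound card may be the rear speaker jack of the case, while we need the front headphone jack. First check that the sound card is detected correctly:

aplay -l # list the current playback hardware devices

If the sound card is listed, the hardware is fine, and we need to configure which device is selected (edit the file below; create it if it does not exist. Many files on an Ubuntu system are created by the user, this is normal):

sudo vim /etc/asound.conf # create the sound card selection file

Insert the following content:

defaults.pcm.card 0
defaults.pcm.device 0
defaults.ctl.card 0

Save and exit, then reboot and check whether the problem is solved. If not, continue below:

Install the sound output configuration tool pavucontrol (see http://www.cnblogs.com/kingstrong/p/5960466.html#3940836):

sudo apt-get install pavucontrol # install the configuration tool pavucontrol

Selecting Headphones here uses the front output jack of the computer, and Line Out uses the rear output jack. After switching, the sound card is configured.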

40 -- Something went wrong with git on Ubuntu

Pushing to Git returning Error Code 403 fatal: HTTP request failed

Error:

error: failed to push some refs to 'git+ssh://git@github.com/yufeiluo/newstart.git'
hint: Updates were rejected because the remote contains work that you do
hint: not have locally. This is usually caused by another repository pushing
hint: to the same ref. You may want to first integrate the remote changes
hint: (e.g., 'git pull ...') before pushing again.
hint: See the 'Note about fast-forwards' in 'git push --help' for details.

The message means that the remote branch has commits that the local branch lacks. The usual fix is to integrate them first with git pull origin master and then push again. If you are sure that the remote commits can be discarded, a forced push overwrites them:

git push -f origin master

This fixes the problem, but the remote commits that were missing locally are lost.

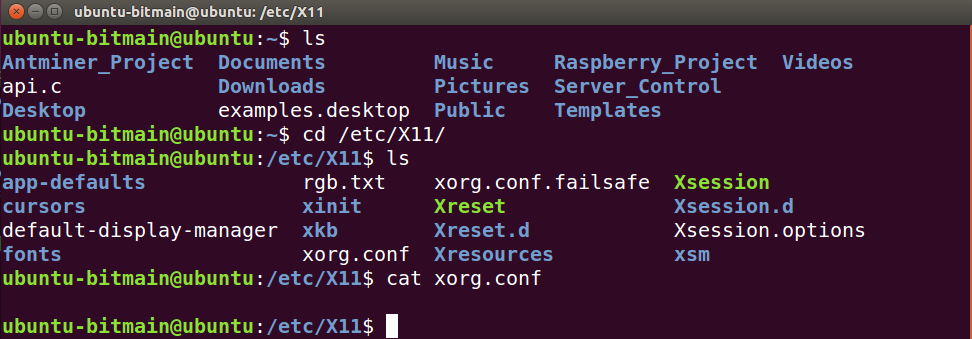

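41-- Ubuntu 16.04: the resolution cannot be changed after cp xorg.conf.failsafe xorg.conf

Ubuntu did not show the login screen, so the only way in was to hold Shift while rebooting to reach the GRUB menu, switch to Ubuntu recovery mode and do the following:

cd /etc/X11
sudo cp xorg.conf.failsafe xorg.conf
sudo reboot

After that, Ubuntu reboots and the login screen works again.

After logging in, the screen resolution could not be changed. The solution:

Step1: run the following commands in a terminal

sudo apt-get update
sudo apt-get upgrade

Step2: empty the content of the xorg.conf file:

See this post: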

https://blog.csdn.net/qq_24729895/article/details/81632209

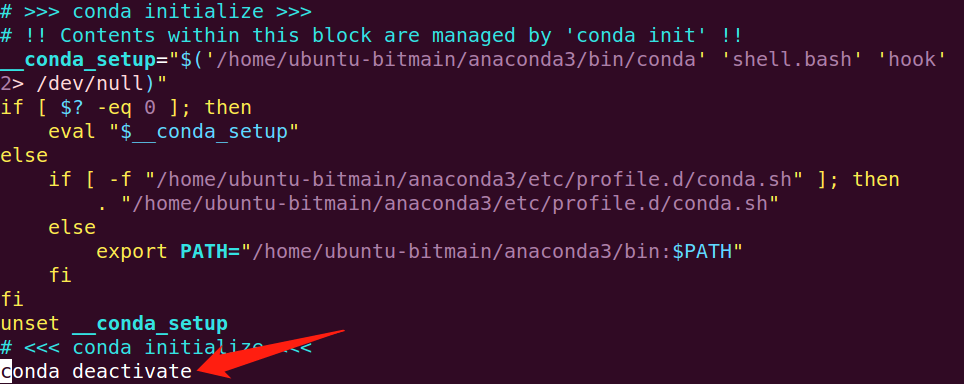

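42--- After installing Anaconda, (base) appears at the start of the Linux terminal prompt

After installing Anaconda, the Terminal prompt shows (base). The reason is that the following command is run every time a terminal is started:

conda activate base

To get rid of it, either run the following command in the Terminal:

conda deactivate

or add conda deactivate to the end of ~/.bashrc so that it runs for every new terminal (conda config --set auto_activate_base false has the same effect without editing ~/.bashrc):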

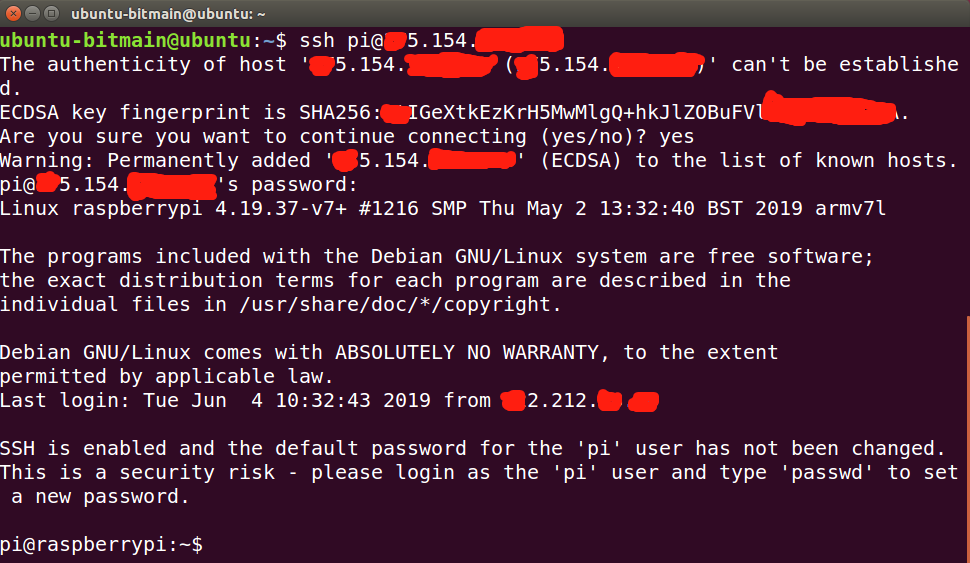

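43--- How to use ssh / scp to connect to a remote host and send data:

1. Connect to a remote host with SSH:

ssh UserName@IP_Address

As shown, the part before @ is the user name and the part after it is the IP address of the remote host.

As shown, the connection is established.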

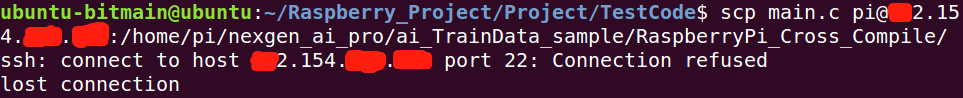

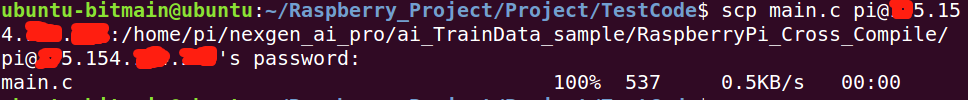

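2. Copy data to a remote host with SCP:

scp [-12346BCpqrv] [-c cipher] [-F ssh_config] [-i identity_file]
    [-l limit] [-o ssh_option] [-P port] [-S program]
    [[user@]host1:]file1 ... [[user@]host2:]file2

-1: force scp to use protocol SSH1
-2: force scp to use protocol SSH2
-4: force scp to use IPv4 addresses only
-6: force scp to use IPv6 addresses only
-B: batch mode (do not ask for passwords or passphrases during the transfer)
-C: enable compression (passes the -C flag to ssh to turn compression on)
-p: preserve the modification times, access times and permissions of the original file
-q: do not show the progress meter
-r: copy entire directories recursively
-v: verbose mode; scp and ssh(1) print debugging messages about their progress, which helps with connection, authentication and configuration problems
-c cipher: encrypt the data transfer with cipher; passed directly to ssh
-F ssh_config: use an alternative ssh configuration file; passed directly to ssh
-i identity_file: read the key used for the transfer from the given file; passed directly to ssh
-l limit: limit the bandwidth used, in Kbit/s
-o ssh_option: pass an option to ssh in the format used in ssh_config(5)
-P port: note the capital P; port is the port used for the transfer
-S program: the program to use for the encrypted connection; it must understand ssh(1) options

a) Copy a local file to the remote host with scp: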

出现如下所示的错误

ssh: connect to host 192.168.1.118 port 22: Connection refused

lost connection

这表示未能完全安装好openssh-server和ssh

sudo apt-get install openssh-server

这样就完成了从本地将数据上传到远程Host上面。

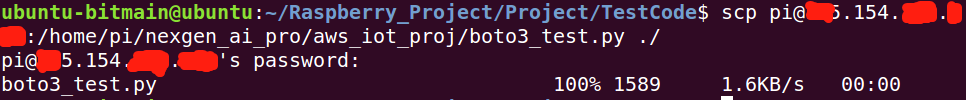

b)从远程Host复制文件到本地:

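44-- On the Raspberry Pi, the password is rejected after enabling services or features with sudo raspi-config:

After using sudo raspi-config, the Raspberry Pi had discarded the password that was configured before. So every time you finish changing settings, set the password again in raspi-config so that the new password takes effect.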

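45-- pip fails to install packages with the [TLSV1_ALERT_PROTOCOL_VERSION] error

The cause is that python.org no longer supports TLSv1.0 and TLSv1.1. Upgrading pip solves this, but the usual python -m pip install --upgrade pip fails with the same error. It is a chicken-and-egg problem: you need to upgrade pip because of the TLS problem, and the TLS problem prevents pip from downloading its latest version. The only way out is to upgrade pip manually.

1. macOS or Linux: run this command in a terminal:

curl https://bootstrap.pypa.io/get-pip.py | python

2. Windows: download get-pip.py from https://bootstrap.pypa.io/get-pip.py and run it with python: python get-pip.py.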

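46-- "Text file busy" when running a program on Linux (while a program is running, commands such as cp or mv on its executable fail with Text file busy):

Step1: find the PID of the running program with fuser, or look up the process ID with ps:

fuser <program file name>

Step2: stop the process with kill:

kill -9 PID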

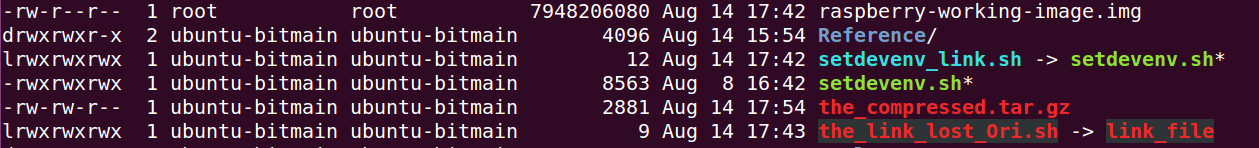

47-- Linux不同颜色文件代表的意义:

- 绿色文件: 可执行文件,可执行的程序

- 红色文件:压缩文件或者包文件(注意:当symbol link文件的源丢失之后,link文件为红色,不可用!)

- 蓝色文件:目录

- 白色文件:一般性文件,如文本文件,配置文件,源码文件等

- 浅蓝色文件:链接文件,主要是使用ln命令建立的文件

- 红色闪烁:表示链接的文件有问题

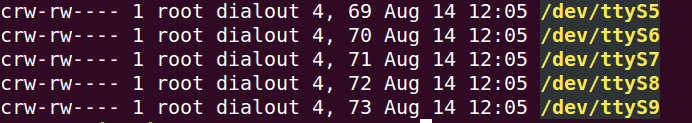

- 黄色:表示设备文件

- 灰色:表示其他文件

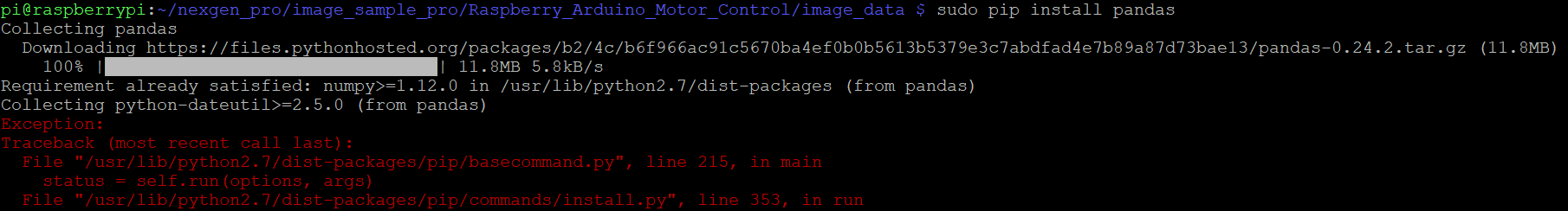

48 -- Problem installing pandas with pip:

As shown in the figure, installing the python-dateutil package failed. Installing libopenblas-dev solves the problem:

sudo apt-get install libopenblas-dev

sudo pip install pandas

49 -- CodeError Summary(代码错误状态总结):

Section1:Float Point Exception

After i succeefully compiling the code with cross-compile-tools(arm-linux-gnueabihf-gcc),There is no Error with code.But while i am running the code on the ARM platform,here get the Error:

Here the two conditions create the Code Error:

1. The denominator should not to be zero.(You must be careful that the denominator can not to be zero.before you using some code with denominator calculate,you should firstly initial the variable!!!)

1 int asic = 100; 2 dev->interval = 0; 3 [...Code initial the dev->interval not to be Zero...] 4 asic_pos = asic/dev->interval;

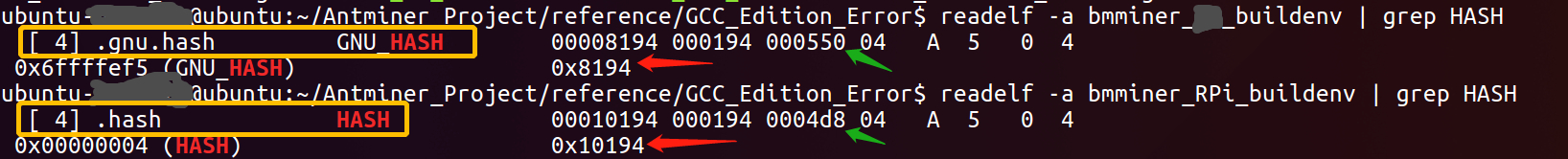

2. The version of you cross-compile tool is higher than the hardware's #(CC) env running the code compiled just now!

同一个程序在一台高版本Linux上运行时没有问题,而在另一台低版本机器上运行报Floating Point Exception时,那么这极有可能是由高版本gcc链接造成的。高版本的gcc在链接时采用了新的哈希技术来提高动态链接的速度,这在低版本中是不支持的。因此会发生这个错误。gcc就是一个编译器。编译出来的软件在低版本操作系统上有些技术不支持造成这个原因。

Here the test on HASH value:

We can see that the HASH value is diff.So we need to fix this bug--简单的说就是编译的时候加个参数, 把两中hash都编译进入,向下兼容。:

To see if this is conclusively the problem, build with -Wl,--hash-style=sysv or -Wl,--hash-style=both and see if the crash goes away.

Reference:

https://blog.csdn.net/zhaoyongyd/article/details/21013901

http://ju.outofmemory.cn/entry/313368

50 -- Ubuntu shows "System program problem detected" at every start

sudo gedit /etc/default/apport

Change enabled=1 to enabled=0, then save and exit.

reference:https://www.cnblogs.com/liubin0509/p/6178222.html

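51 -- A program cross-compiled on Ubuntu fails on the ARM platform with "-sh: ./main: No such file or directory":

This error is usually caused by differences between the link libraries on the host and on the target machine; see the links below. We can therefore link statically instead of dynamically, and the program then runs correctly.

Result of dynamic linking:

Result of static linking: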

So we use the -static compile option:

arm-linux-gcc -static main.c -o main

Fixing the cross-compile error: https://blog.csdn.net/qq_37860012/article/details/87278320

Difference between dynamic and static linking: https://blog.csdn.net/qq_37659294/article/details/99692418

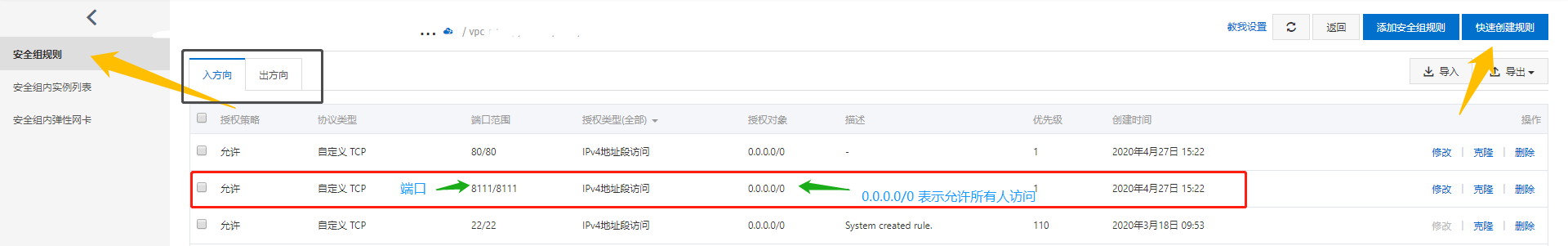

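52 -- A custom port on an Alibaba Cloud server cannot be reached, even after turning off the firewall with ufw:

Besides the firewall of the operating system on the ECS instance itself, the internal network gateway of Alibaba Cloud also applies firewall rules. The port has to be enabled there as shown below: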

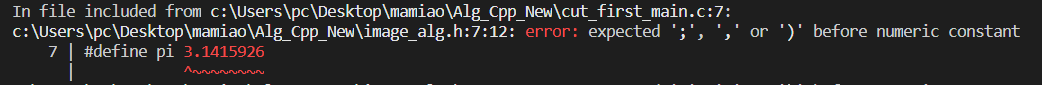

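53 -- Compiling with gcc from MinGW64 fails with "error: expected ';', ',' or ')' before numeric constant":

- "error: expected ';', ',' or ')' before numeric constant #define pi 3.1415926"

- "error: expected ';', ',' or ')' before numeric constant #define H 480"

As shown, the macro definitions of pi and H cause the error. The likely cause is that the same names are also used in the system headers, so the definitions in your code collide with them. There are two solutions:

- First, the name pi is already used in a system header, so defining it as a macro causes the problem. Renaming pi to pi_local, and likewise H to H_local, solves it.

- Second, put the system headers first and place your own macros and your own headers after the system #include lines. This also solves the problem.

In summary, solution 2 is usually the better one: include your own headers and define your own macros after the library headers. Otherwise your macro replaces names that the system headers use for their own macros or variables, and the compilation fails with the error above.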

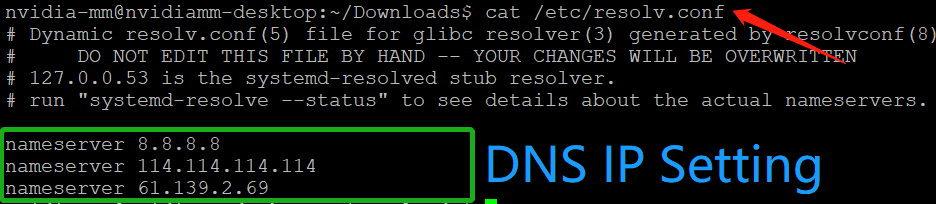

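54 -- DNS configuration problem on the Jetson Nano:

On a Jetson Nano board, wget could not reach the target domain. Pinging the Baidu domain name failed as well, but pinging Baidu's IP address still worked, so I suspected a DNS problem. The DNS configuration is changed as follows:

cat /etc/resolv.conf # show the current DNS configuration
sudo vim /etc/resolvconf/resolv.conf.d/base # add the DNS addresses (8.8.8.8/114.114.114.114)
sudo resolvconf -u # apply the change immediately

Note that the DNS addresses to add are 8.8.8.8 and 114.114.114.114. After adding them, ping www.baidu.com works, as shown below:

Result of ping www.baidu.com (host, dig or nslookup www.baidu.com can be used to resolve the IP address of the domain):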

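55 -- Resetting a forgotten GitLab administrator password:

# open the console
gitlab-rails console
# find the user
user = User.where(username: 'root').first
# change the password
user.password='newpassword'
# save
user.save!

In the commands above, newpassword is the new login password.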

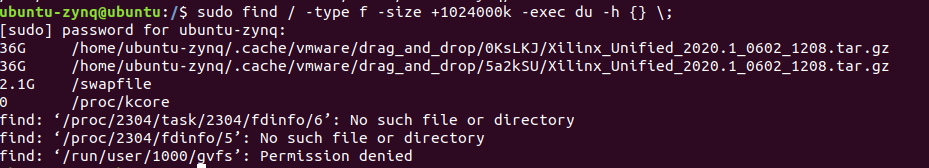

56 -- 查看Ubuntu等发行版系统中大于1G文件的指令:

sudo find / -type f -size +1024000k -exec du -h {} \;

通过上述指令便能够查看根目录下所有文件夹中的大于1G的文件内容,如下所示为我查看的结果:

我们可以看到,两个非常大的文件隐藏在.cache文件夹中,这是我在拖拽拷贝文件过程中的缓存文件,一开始没找到在哪里占用了大量的空间,现在即可删除。

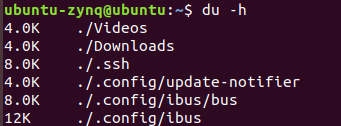

当然我们也可以使用‘du -h’命令来查看当前文件下的所有文件大小:

du -h ./

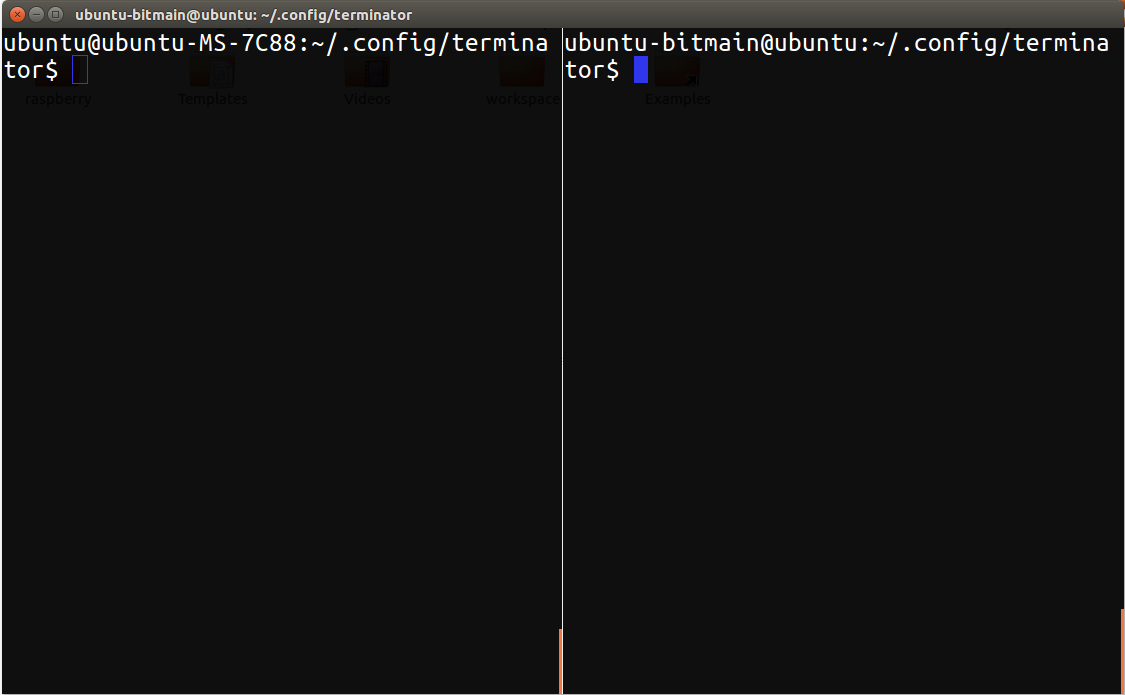
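The size filter is easy to test on a small scale before scanning the whole root directory. The sketch below uses a temporary directory and a 1 MiB threshold instead of 1G; the file names big.bin and small.txt are made up for the example.

```shell
#!/bin/bash
workdir=$(mktemp -d)

# One 2 MiB file and one tiny file to search through.
dd if=/dev/zero of="$workdir/big.bin" bs=1024 count=2048 2>/dev/null
echo "tiny" > "$workdir/small.txt"

# Same structure as the command above, with a smaller threshold:
# -size +1024k matches files larger than 1024 KiB.
large_files=$(find "$workdir" -type f -size +1024k -exec du -h {} \;)
echo "$large_files"
```

Only big.bin is reported. For the real search, replacing "$workdir" with / and the threshold with +1024000k gives the command from the note.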

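57 -- Installing and configuring Terminator:

1. Install Terminator:

sudo apt-get install terminator

2. Create the configuration directory and the configuration file:

mkdir -p ~/.config/terminator # create the directory
cd ~/.config/terminator # switch to the directory
vim config # create the config file and write the configuration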

Content of the config file:

[global_config]
  handle_size = 1
  inactive_color_offset = 1.0
  title_font = mry_KacstQurn Bold 11
  title_hide_sizetext = True
[keybindings]
[layouts]
  [[default]]
    [[[child1]]]
      parent = window0
      profile = default
      type = Terminal
    [[[window0]]]
      parent = ""
      type = Window
[plugins]
[profiles]
  [[default]]
    background_darkness = 0.94
    background_image = None
    background_type = transparent
    cursor_color = "#3036ec"
    custom_command = tmux
    font = Ubuntu Mono 20
    foreground_color = "#ffffff"
    login_shell = True
    show_titlebar = False
    use_system_font = False

Terminator then opens as shown below and supports split panes (Alt + Left/Right switches between the left and right panes).

Configuration reference: reference link

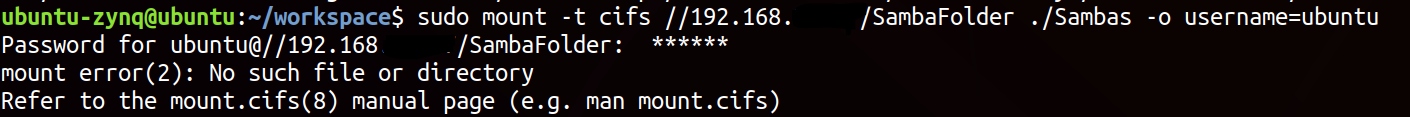

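58-- Mounting a Samba share on Ubuntu, and the errors that came up

First configure the Samba service on the server side (see my blog for details). Then mount the share as follows:

1. Ubuntu 18.04 does not ship the Samba mount helper, so we first install the package that supports mounting Samba shares:

sudo apt-get install cifs-utils

2. Use the following mount command (ShareFolder is the name of the share, not the path of the folder):

sudo mount -t cifs //192.168.x.x/ShareFolder ./Sambas -o username=ubuntu

Notice: '//192.168.x.x/ShareFolder' must be the share name configured on the Samba server, not the real path of the folder! Otherwise the mount fails with the following error:

1. Mount failure reference: https://blog.csdn.net/qq_21419995/article/details/80739052

2. Reference for mounting Samba on Ubuntu: cnblogs.com/keanuyaoo/p/3400203.html

3. Mounting a Samba share of a Synology system on Ubuntu fails with `mount error(95): Operation not supported`. The solution is as follows: [reference](https://blog.csdn.net/qq_26003101/article/details/112261336)

Add: `vers=2.0`

`sudo mount -t cifs //192.168.xx.xx/SambaFolder ./Samba -o username=username,password='passwd',vers=2.0`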

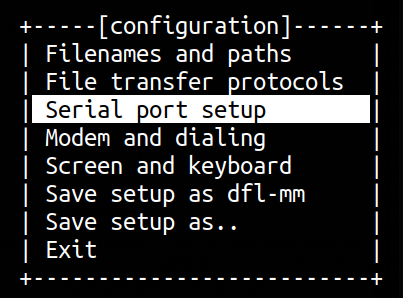

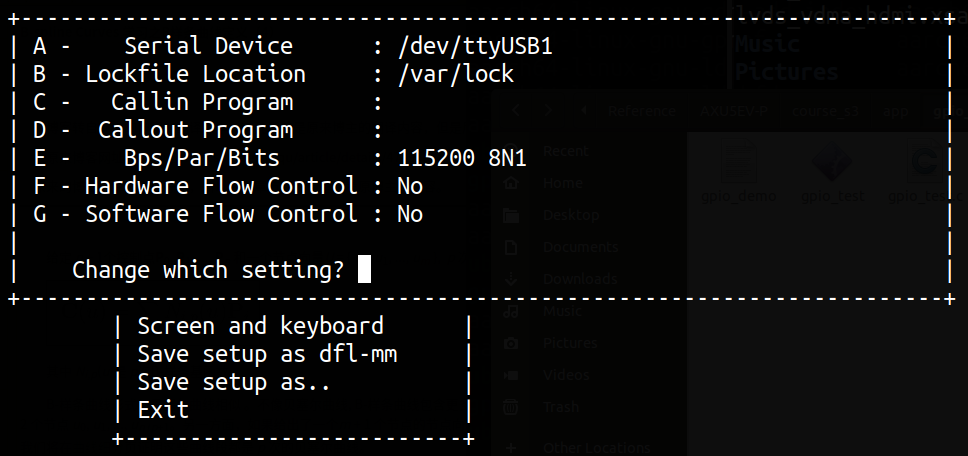

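59-- minicom does not print the serial console output of the Linux system on the development board

Hardware and software flow control have to be configured: press F and G respectively and set both to No.

Either no/no or no/yes works (I later read online that both are usually set to no). After that, commands can be typed just like in HyperTerminal!

Ctrl+A, Z ---> O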

Serial port setup ---> F/G

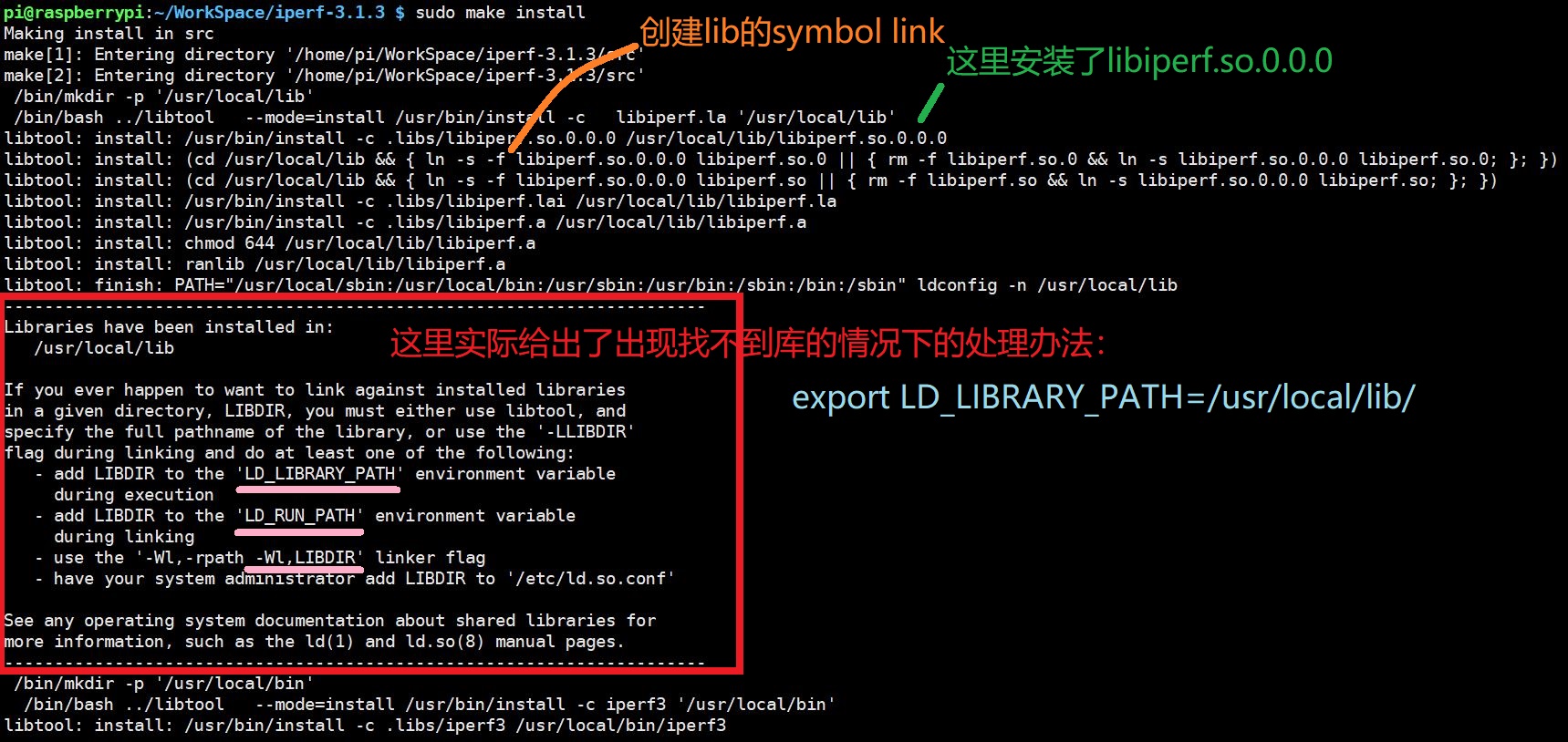

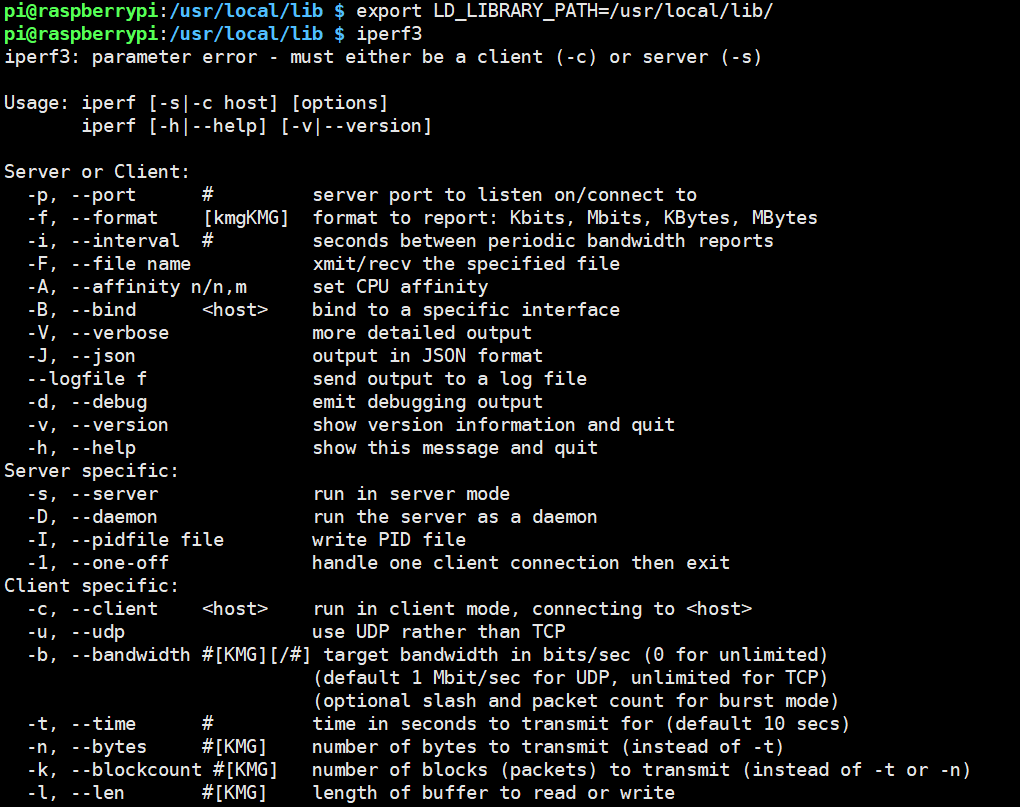

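60-- Compiling and linking succeed and produce an executable, but the shared library is not found at run time

While building the iperf network test tool, compiling and linking completed without problems. But after installing the libraries and executables, running the iperf3 executable failed with the following message:

It says that the shared library cannot be found. The build and install steps were:

./configure
make
make install

The install output is: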

pi@raspberrypi:~/WorkSpace/iperf-3.1.3 $ sudo make install
Making install in src
make[1]: Entering directory '/home/pi/WorkSpace/iperf-3.1.3/src'
make[2]: Entering directory '/home/pi/WorkSpace/iperf-3.1.3/src'
 /bin/mkdir -p '/usr/local/lib'
 /bin/bash ../libtool --mode=install /usr/bin/install -c libiperf.la '/usr/local/lib'
libtool: install: /usr/bin/install -c .libs/libiperf.so.0.0.0 /usr/local/lib/libiperf.so.0.0.0
libtool: install: (cd /usr/local/lib && { ln -s -f libiperf.so.0.0.0 libiperf.so.0 || { rm -f libiperf.so.0 && ln -s libiperf.so.0.0.0 libiperf.so.0; }; })
libtool: install: (cd /usr/local/lib && { ln -s -f libiperf.so.0.0.0 libiperf.so || { rm -f libiperf.so && ln -s libiperf.so.0.0.0 libiperf.so; }; })
libtool: install: /usr/bin/install -c .libs/libiperf.lai /usr/local/lib/libiperf.la
libtool: install: /usr/bin/install -c .libs/libiperf.a /usr/local/lib/libiperf.a
libtool: install: chmod 644 /usr/local/lib/libiperf.a
libtool: install: ranlib /usr/local/lib/libiperf.a
libtool: finish: PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/sbin" ldconfig -n /usr/local/lib
----------------------------------------------------------------------
Libraries have been installed in:
   /usr/local/lib

If you ever happen to want to link against installed libraries
in a given directory, LIBDIR, you must either use libtool, and
specify the full pathname of the library, or use the '-LLIBDIR'
flag during linking and do at least one of the following:
   - add LIBDIR to the 'LD_LIBRARY_PATH' environment variable
     during execution
   - add LIBDIR to the 'LD_RUN_PATH' environment variable
     during linking
   - use the '-Wl,-rpath -Wl,LIBDIR' linker flag
   - have your system administrator add LIBDIR to '/etc/ld.so.conf'

See any operating system documentation about shared libraries for
more information, such as the ld(1) and ld.so(8) manual pages.
----------------------------------------------------------------------
 /bin/mkdir -p '/usr/local/bin'
 /bin/bash ../libtool --mode=install /usr/bin/install -c iperf3 '/usr/local/bin'
libtool: install: /usr/bin/install -c .libs/iperf3 /usr/local/bin/iperf3
 /bin/mkdir -p '/usr/local/include'
 /usr/bin/install -c -m 644 iperf_api.h '/usr/local/include'
 /bin/mkdir -p '/usr/local/share/man/man1'
 /usr/bin/install -c -m 644 iperf3.1 '/usr/local/share/man/man1'
 /bin/mkdir -p '/usr/local/share/man/man3'
 /usr/bin/install -c -m 644 libiperf.3 '/usr/local/share/man/man3'
make[2]: Leaving directory '/home/pi/WorkSpace/iperf-3.1.3/src'
make[1]: Leaving directory '/home/pi/WorkSpace/iperf-3.1.3/src'
Making install in examples
make[1]: Entering directory '/home/pi/WorkSpace/iperf-3.1.3/examples'
make[2]: Entering directory '/home/pi/WorkSpace/iperf-3.1.3/examples'
make[2]: Nothing to be done for 'install-exec-am'.
make[2]: Nothing to be done for 'install-data-am'.
make[2]: Leaving directory '/home/pi/WorkSpace/iperf-3.1.3/examples'
make[1]: Leaving directory '/home/pi/WorkSpace/iperf-3.1.3/examples'
make[1]: Entering directory '/home/pi/WorkSpace/iperf-3.1.3'
make[2]: Entering directory '/home/pi/WorkSpace/iperf-3.1.3'
make[2]: Nothing to be done for 'install-exec-am'.
make[2]: Nothing to be done for 'install-data-am'.
make[2]: Leaving directory '/home/pi/WorkSpace/iperf-3.1.3'
make[1]: Leaving directory '/home/pi/WorkSpace/iperf-3.1.3'

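According to the hints in this output, setting the environment variable is enough:

export LD_LIBRARY_PATH=/usr/local/lib/

Other solutions:

1. Set the library search path before running the program:

export LD_LIBRARY_PATH=/usr/local/lib/

2. Check /etc/ld.so.conf and the .conf files in /etc/ld.so.conf.d/ to see whether they contain /usr/local/lib.

If they do, run sudo ldconfig to refresh the cache; if not, add the path and then refresh.

3. Some material found online:

First, how many ways are there to tell the Linux shared library loader ld.so where a compiled library such as libbase.so is located? There are five; each of them tells ld.so where to look for compiled C shared libraries:

1) The path given by DT_RPATH in the dynamic section of the ELF executable. It is set at build time by passing the linker option "-Wl,-rpath" to gcc, e.g.: gcc -Wl,-rpath,/home/arc/test,-rpath,/lib/,-rpath,/usr/lib/,-rpath,/usr/local/lib test.c

2) The search path given by the LD_LIBRARY_PATH environment variable

3) The library paths cached in /etc/ld.so.cache. Change them by editing the search paths in the configuration file /etc/ld.so.conf and then running ldconfig.

4) The default search path /lib

5) The default search path /usr/lib

In addition: in real embedded Linux systems, 1 and 2 are used most often. Some simpler embedded systems use paths 4 or 5 to organize their libraries. 3 is rarely used in embedded systems, because many of them do not support ld.so.cache at all.

In which order does ld.so search these five locations? In the order listed above, i.e. the priority is 1-->2-->3-->4-->5.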
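One detail about method 2: export LD_LIBRARY_PATH=/usr/local/lib/ replaces whatever the variable held before. A small helper can append the directory instead and skip it when it is already listed. This is only a sketch; the function name add_lib_dir and the starting value /opt/example/lib are made up for the illustration.

```shell
#!/bin/bash
# Append a directory to LD_LIBRARY_PATH unless it is already in the list.
add_lib_dir() {
    local dir=${1%/}                       # drop one trailing slash
    case ":${LD_LIBRARY_PATH:-}:" in
        *":$dir:"*) ;;                     # already present: nothing to do
        *) LD_LIBRARY_PATH="${LD_LIBRARY_PATH:+$LD_LIBRARY_PATH:}$dir" ;;
    esac
    export LD_LIBRARY_PATH
}

LD_LIBRARY_PATH=/opt/example/lib           # hypothetical value set earlier
add_lib_dir /usr/local/lib/
add_lib_dir /usr/local/lib                 # second call changes nothing
echo "$LD_LIBRARY_PATH"
```

Putting the function and the call into ~/.bashrc keeps the setting for new terminals; for a system-wide change, the ld.so.conf route from solution 2 is the better fit.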

61-- After installing Ubuntu 18.04, Software reports "Unable to download updates from extensions.gnome.org"

sudo apt-get install gnome-shell-extensions

62-- g++: internal compiler error: Killed (program cc1plus), a build error while compiling OMPL

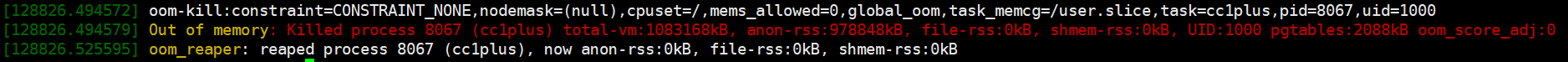

g++: internal compiler error: Killed (program cc1plus) Please submit a full bug report, with preprocessed source if appropriate. See <file:///usr/share/doc/gcc-7/README.Bugs> for instructions.

可以打印出内核相关信息:dmesg

结果如下所示:

[128826.494579] Out of memory: Killed process 8067 (cc1plus) total-vm:1083168kB, anon-rss:978848kB, file-rss:0kB, shmem-rss:0kB, UID:1000 pgtables:2088kB oom_score_adj:0 [128826.525595] oom_reaper: reaped process 8067 (cc1plus), now anon-rss:0kB, file-rss:0kB, shmem-rss:0kB

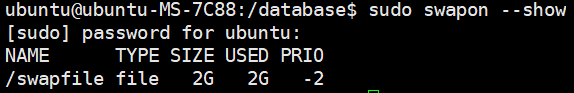

通过上述提示可知,在编译的过程中出现了内存溢出的情况,而操作系统为了确保整个系统的正常运行,操作系统将会将对所有进程程序的内存占用情况进行排序,排序完成后将会对内存占用最高的程序杀死killed从而释放内存,而此时这个被杀死的程序时cc1plus的话,那么gcc或者g++程序将会奔溃或者报错,然而这种穷狂并不是程序代码导致的编译器编译失败,而是OS操作系统杀死了c1plus进程导致的结果,处理方法如下(在此之前可以先查看系统是否已经存在swapfile):

如下命令中可以更具实际的需要情况修改参数内容,包括bs=以及count=,从而达到你想要的swap空间大小

1 sudo dd if=/dev/zero of=/swapfile bs=64M count=16 # create a file which will be used as a swap space. bs is the size of one block. count is num of blocks. it will get 1024K * 1M = 1G space 2 sudo chmod 600 /swapfile # Ensure that only the root user can read and write the swap file 3 sudo mkswap /swapfile # set up a Linux swap area on the file 4 sudo swapon /swapfile # activate the swap

接下来就可以正常编译代码了,但是一定要在编译完之后,恢复原来的编译内存交换空间的状态:

1 sudo swapoff /swapfile 2 sudo rm /swapfile

查看系统是否已经存在swapfile:

sudo swapon --show

如果没有上述的输出内容,则说明当前系统没有swap space可以使用,若已经有/swapfile则可以删除后新建以增加swap space大小

参考Stack Flow解释:https://stackoverflow.com/questions/30887143/make-j-8-g-internal-compiler-error-killed-program-cc1plus

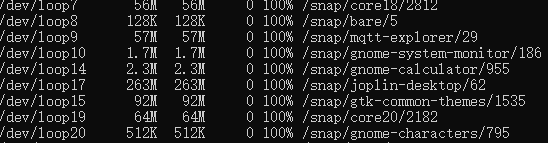

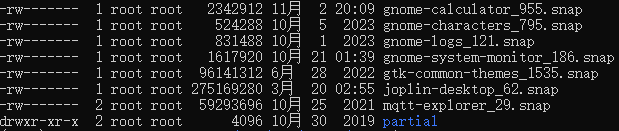
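The swap size is simply bs times count, so the count for a wanted size can be worked out before running dd. The helper below is a made-up convenience for that arithmetic (swap_block_count is not an existing tool); it only prints the dd command and does not create anything.

```shell
#!/bin/bash
# Number of blocks needed to reach a swap size, both given in MiB.
# The result is rounded up so the file is never smaller than requested.
swap_block_count() {
    local want_mib=$1 block_mib=$2
    echo $(( (want_mib + block_mib - 1) / block_mib ))
}

count=$(swap_block_count 1024 64)      # 1 GiB of swap in 64 MiB blocks
echo "sudo dd if=/dev/zero of=/swapfile bs=64M count=$count"
```

For 1 GiB with bs=64M this gives count=16, the values used in the commands above; for 4 GiB it gives count=64.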

63-- The Ubuntu disk is full: remove the old package revisions kept by Snap to free disk space

df -h # show disk usage

Go to the snap directory and check what takes up the space:

du -h /var/lib/snapd/snaps
cd /var/lib/snapd/snaps
ls -al
snap list --all

The system currently keeps three revisions of each snap; this can be reduced to two to save space:

sudo snap set system refresh.retain=2

The old revisions can be removed with a script, clean_snap.sh:

#!/bin/bash
# Removes old revisions of snaps
# CLOSE ALL SNAPS BEFORE RUNNING THIS
set -eu
LANG=C snap list --all | awk '/disabled/{print $1, $3}' |
    while read snapname revision; do
        snap remove "$snapname" --revision="$revision"
    done

https://blog.csdn.net/baidu_35692628/article/details/136519924
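Before letting the script remove anything, it helps to see what the awk filter selects. The sketch below runs the same awk program on made-up sample text shaped like the output of snap list --all (the snap names, versions and revisions are invented), so it works without snap installed.

```shell
#!/bin/bash
# Invented sample in the column layout of "snap list --all".
sample_output='Name     Version   Rev    Tracking       Publisher   Notes
core20   20230207  1828   latest/stable  canonical   base,disabled
core20   20230308  1852   latest/stable  canonical   base
firefox  110.0     2356   latest/stable  mozilla     disabled
firefox  111.0     2432   latest/stable  mozilla     -'

# Keep only lines marked "disabled" and print name ($1) and revision ($3).
to_remove=$(printf '%s\n' "$sample_output" | awk '/disabled/{print $1, $3}')
echo "$to_remove"
```

Each printed pair is what the while loop in clean_snap.sh passes to snap remove: the disabled, older revisions, while the active ones are left alone.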

To be continued~