Offline deployment of a k8s cluster

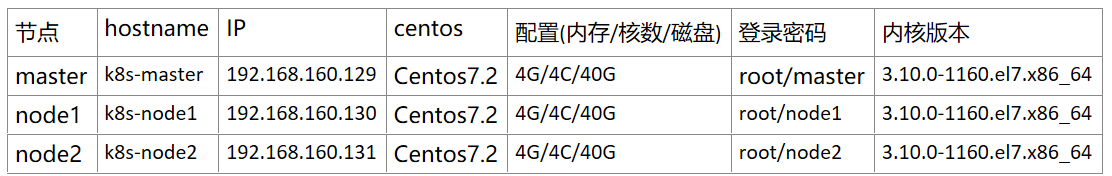

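0. Node description:

The cluster has three nodes (the same addresses are used in 1.3): k8s-master 192.168.160.129, k8s-node1 192.168.160.130, k8s-node2 192.168.160.131.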

1. Environment configuration

1.1 Disable the firewall and SELinux (swap is disabled in 1.4)

    setenforce 0
    sed -i 's/=enforcing/=disabled/g' /etc/selinux/config
    systemctl stop firewalld
    systemctl disable firewalld
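`setenforce 0` only changes the running state, while the `sed` edit is what persists across reboots. As a minimal sketch for confirming the persistent setting, the helper below (a hypothetical name, not part of the original steps) prints the mode recorded in the config file:

```shell
# Print the SELINUX= mode recorded in a SELinux config file.
# Defaults to /etc/selinux/config; the optional path argument only eases testing.
selinux_configured_mode() {
    # Keep the value of the last uncommented SELINUX= line.
    sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}" | tail -n 1
}

# Usage on a node, after the sed command above:
#   selinux_configured_mode   # should print "disabled"
#   getenforce                # runtime state; stays "Permissive" until the next reboot
```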

1.2 Set up passwordless SSH

Generate the key pair (on master):

    sed -i '35cStrictHostKeyChecking no' /etc/ssh/ssh_config
    ssh-keygen -t rsa -f /root/.ssh/id_rsa -P ""
    cp /root/.ssh/id_rsa.pub /root/.ssh/authorized_keys

Copy it to the other nodes:

    [root@k8s-master ~]# scp -r /root/.ssh/ root@192.168.160.130:/root
    [root@k8s-master ~]# scp -r /root/.ssh/ root@192.168.160.131:/root

Passwordless login test:

    [root@k8s-master ~]# ssh 192.168.160.131
    Last login: Fri May 21 10:04:36 2021 from 192.168.160.129
    [root@k8s-node2 ~]# ssh 192.168.160.130
    Last login: Fri May 21 09:12:10 2021 from 192.168.160.1
    [root@k8s-node1 ~]# ssh 192.168.160.129
    The authenticity of host '192.168.160.129 (192.168.160.129)' can't be established.
    ECDSA key fingerprint is SHA256:FSe5JBJyY0olAkh+sfW3uOj1fQ+6eCXR4F5meZLvrp4.
    ECDSA key fingerprint is MD5:50:44:e3:e2:35:5d:7f:68:9e:7e:63:b7:d4:e6:dd:6c.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added '192.168.160.129' (ECDSA) to the list of known hosts.
    Last login: Fri May 21 09:12:31 2021 from 192.168.160.1
    [root@k8s-master ~]#

1.3 Configure hostname resolution

    # Set the hostname (run on each node with its own name):
    hostnamectl set-hostname HOSTNAME
    # Configure hostname resolution:
    cat > /etc/hosts << QQQ
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.160.129 k8s-master
    192.168.160.130 k8s-node1
    192.168.160.131 k8s-node2
    QQQ
    # Copy to the other nodes
    [root@k8s-master ~]# scp /etc/hosts 192.168.160.130:/etc
    [root@k8s-master ~]# scp /etc/hosts 192.168.160.131:/etc
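The heredoc above overwrites `/etc/hosts` entirely. Where a node already carries extra entries, an append-only variant may be safer. The following is a sketch under that assumption; `add_host_entry` is a hypothetical helper, not something the original steps use:

```shell
# Append "IP NAME" to a hosts file unless NAME already appears in it.
add_host_entry() {
    hosts_file="$1"; ip="$2"; name="$3"
    # -w matches NAME as a whole word, so k8s-node1 does not match k8s-node10;
    # -F treats NAME as a fixed string rather than a regular expression.
    if ! grep -qwF -- "$name" "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Usage (as root), safe to repeat:
#   add_host_entry /etc/hosts 192.168.160.129 k8s-master
#   add_host_entry /etc/hosts 192.168.160.130 k8s-node1
#   add_host_entry /etc/hosts 192.168.160.131 k8s-node2
```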

1.4 Disable the swap partition

    [root@k8s-master ~]# swapoff -a && sysctl -w vm.swappiness=0
    vm.swappiness = 0
    [root@k8s-master ~]# ssh 192.168.160.130 "swapoff -a && sysctl -w vm.swappiness=0"
    vm.swappiness = 0
    [root@k8s-master ~]# ssh 192.168.160.131 "swapoff -a && sysctl -w vm.swappiness=0"
    vm.swappiness = 0
    [root@k8s-master ~]# sed -ri 's/.*swap.*/#&/' /etc/fstab
    [root@k8s-master ~]# cat /etc/fstab
    #
    # /etc/fstab
    # Created by anaconda on Thu May 20 17:07:46 2021
    #
    # Accessible filesystems, by reference, are maintained under '/dev/disk'
    # See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
    #
    /dev/mapper/centos-root / xfs defaults 0 0
    UUID=e22ac659-213c-476a-9ecb-6e6b9d5e7fba /boot xfs defaults 0 0
    #/dev/mapper/centos-swap swap swap defaults 0 0
    /dev/cdrom /iso iso9660 defaults 0 0
    /dev/cdrom /iso iso9660 defaults 0 0
    [root@k8s-master ~]#

`swapoff -a` only lasts until the next reboot; the `/etc/fstab` edit is what keeps swap off permanently, so apply the same `sed` command on the other nodes as well.

Verify that swap is disabled:

    [root@k8s-master ~]# free -h
                  total        used        free      shared  buff/cache   available
    Mem:           3.8G        264M        3.3G         11M        286M        3.4G
    Swap:            0B          0B          0B
    [root@k8s-master ~]# ssh 192.168.160.130 "free -h"
                  total        used        free      shared  buff/cache   available
    Mem:           3.8G        269M        3.4G         11M        138M        3.4G
    Swap:            0B          0B          0B
    [root@k8s-master ~]# ssh 192.168.160.131 "free -h"
                  total        used        free      shared  buff/cache   available
    Mem:           3.8G        265M        3.4G         11M        134M        3.4G
    Swap:            0B          0B          0B
    [root@k8s-master ~]#
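One caveat with `sed -ri 's/.*swap.*/#&/'` is that it prefixes every line containing "swap", including lines that are already commented, so a second run yields `##`. A minimal sketch of a repeatable variant follows; `comment_out_swap` is a hypothetical helper that prints the result instead of editing in place:

```shell
# Print an fstab-style file with active swap entries commented out.
# Lines that already start with '#' are left untouched, so the
# transformation can be applied repeatedly without stacking '#' characters.
comment_out_swap() {
    sed -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Usage (as root): review the output first, then replace the file.
#   comment_out_swap /etc/fstab > /tmp/fstab.new
#   diff /etc/fstab /tmp/fstab.new
#   cp /tmp/fstab.new /etc/fstab
```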

1.5 (Optional) Configure a yum repository (if your company has its own yum repository, use that instead)

    [root@k8s-master ~]# mkdir /etc/yum.repos.d/bak
    [root@k8s-master ~]# mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak
    [root@k8s-master ~]# cat>/etc/yum.repos.d/iso.repo <<QQQ
    > [iso]
    > name=iso
    > baseurl=file:///iso
    > enabled=1
    > gpgcheck=0
    > QQQ
    [root@k8s-master ~]# mkdir /iso
    [root@k8s-master ~]# mount /dev/cdrom /iso
    mount: /dev/sr0 is write-protected, mounting read-only
    [root@k8s-master ~]# echo "/dev/cdrom /iso iso9660 defaults 0 0">>/etc/fstab
    [root@k8s-master ~]# yum -y install vim net-tools unzip

Create `/iso` before mounting, and append the `/etc/fstab` entry only once; running the `echo` twice leaves a duplicate line in the file.

1.6 Install Docker (the required rpm packages differ depending on the kernel version)

Copy the offline package directory to the other nodes, then install Docker on every node:

    [root@k8s-master k8s]# scp -r /root/k8s 192.168.160.130:/root
    [root@k8s-master k8s]# scp -r /root/k8s 192.168.160.131:/root
    [root@k8s-master ~]# ls /root/k8s/docker/docker-rpm/
    containerd.io-1.4.4-3.1.el7.x86_64.rpm                 docker-scan-plugin-0.7.0-3.el7.x86_64.rpm
    container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm   fuse3-libs-3.6.1-4.el7.x86_64.rpm
    docker-ce-20.10.6-3.el7.x86_64.rpm                     fuse-overlayfs-0.7.2-6.el7_8.x86_64.rpm
    docker-ce-cli-20.10.6-3.el7.x86_64.rpm                 slirp4netns-0.4.3-4.el7_8.x86_64.rpm
    docker-ce-rootless-extras-20.10.6-3.el7.x86_64.rpm
    [root@k8s-master ~]# cd /root/k8s/docker/docker-rpm
    [root@k8s-master docker-rpm]# yum -y localinstall ./*
    [root@k8s-master docker-rpm]# cd ..
    [root@k8s-master2 docker]# ls
    docker-rpm  docker-speed.sh
    [root@k8s-master docker]# sh docker-speed.sh
    {
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.
    # Without external network access, /etc/docker/daemon.json can be ignored
    [root@k8s-master docker]# cat docker-speed.sh
    #!/bin/bash
    sudo mkdir -p /etc/docker
    sudo tee /etc/docker/daemon.json <<-'EOF'
    {
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF
    sudo systemctl daemon-reload
    sudo systemctl restart docker
    sudo systemctl enable docker
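The script sets Docker's cgroup driver to `systemd` through `daemon.json`. A quick way to confirm the setting took effect after the restart is to ask Docker itself. This is a small sketch; the function name is hypothetical, while `docker info --format` is the standard Docker CLI:

```shell
# Succeed only if Docker reports the systemd cgroup driver.
docker_uses_systemd_cgroups() {
    [ "$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)" = "systemd" ]
}

# Usage on each node after running docker-speed.sh:
#   docker_uses_systemd_cgroups && echo "cgroup driver OK" || echo "cgroup driver is not systemd"
```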

1.7 Make sure the kernel's built-in bridge netfilter feature is enabled (it is implemented on top of iptables)

    [root@k8s-master ~]# echo 1 >/proc/sys/net/bridge/bridge-nf-call-iptables
    [root@k8s-master ~]# echo 1 >/proc/sys/net/ipv4/ip_forward
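Values written to `/proc/sys` are lost on reboot, and `/proc/sys/net/bridge/` only exists while the `br_netfilter` kernel module is loaded. One possible way to persist both settings is a sysctl drop-in file, sketched below. The file name `k8s.conf` and the helper name are assumptions, not part of the original steps:

```shell
# Write the two kernel settings from this step to a sysctl drop-in file.
write_k8s_sysctl_conf() {
    cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Usage on each node (as root):
#   modprobe br_netfilter                        # provides /proc/sys/net/bridge/*
#   write_k8s_sysctl_conf /etc/sysctl.d/k8s.conf
#   sysctl --system                              # reload all sysctl configuration files
```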

2. Deploy the master node

2.1 Install kubectl, kubeadm, and kubelet, and enable kubelet at boot

    [root@k8s-master k8s-rpm]# ls
    conntrack-tools-1.4.4-7.el7.x86_64.rpm  kubectl-1.19.0-0.x86_64.rpm        libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm
    cri-tools-1.13.0-0.x86_64.rpm           kubelet-1.19.0-0.x86_64.rpm        libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm
    kubeadm-1.19.0-0.x86_64.rpm             kubernetes-cni-0.8.7-0.x86_64.rpm  libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm
    [root@k8s-master k8s-rpm]# yum localinstall -y ./*
    ...
    [root@k8s-master ~]# systemctl enable kubelet
    Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

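kubelet runs on every node in the cluster and is responsible for starting Pods and containers.

kubeadm is used to initialize the cluster.

kubectl is the Kubernetes command-line tool. With kubectl you can deploy and manage applications, inspect resources, and create, delete, and update components.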

2.2 Initialize the cluster

2.2.1 Load the images:

    [root@k8s-master k8s-images]# ll
    total 1003112
    -rw-r--r--. 1 root root  45365760 May 20 13:45 coredns-1.7.0.tar.gz
    -rw-r--r--. 1 root root 225547264 May 20 13:45 dashboard-v2.0.1.tar.gz
    -rw-r--r--. 1 root root 254629888 May 20 13:45 etcd-3.4.9-1.tar.gz
    -rw-r--r--. 1 root root  65271296 May 20 13:45 flannel-v0.13.1-rc2.tar.gz
    -rw-r--r--. 1 root root 120040960 May 20 13:45 kube-apiserver-v1.19.0.tar.gz
    -rw-r--r--. 1 root root 112045568 May 20 13:45 kube-controller-manager-v1.19.0.tar.gz
    -rw-r--r--. 1 root root 119695360 May 20 13:45 kube-proxy-v1.19.0.tar.gz
    -rw-r--r--. 1 root root  46919168 May 20 13:45 kube-scheduler-v1.19.0.tar.gz
    -rw-r--r--. 1 root root    692736 May 20 13:44 pause-3.2.tar.gz
    [root@k8s-master ~]# docker load -i /root/k8s/k8s-images/kube-apiserver-v1.19.0.tar.gz
    [root@k8s-master ~]# docker load -i /root/k8s/k8s-images/coredns-1.7.0.tar.gz
    [root@k8s-master ~]# docker load -i /root/k8s/k8s-images/dashboard-v2.0.1.tar.gz
    [root@k8s-master ~]# docker load -i /root/k8s/k8s-images/etcd-3.4.9-1.tar.gz
    [root@k8s-master ~]# docker load -i /root/k8s/k8s-images/flannel-v0.13.1-rc2.tar.gz
    [root@k8s-master ~]# docker load -i /root/k8s/k8s-images/kube-controller-manager-v1.19.0.tar.gz
    [root@k8s-master ~]# docker load -i /root/k8s/k8s-images/kube-proxy-v1.19.0.tar.gz
    [root@k8s-master ~]# docker load -i /root/k8s/k8s-images/kube-scheduler-v1.19.0.tar.gz
    [root@k8s-master ~]# docker load -i /root/k8s/k8s-images/pause-3.2.tar.gz
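The nine `docker load` commands above can be collapsed into a loop over the archive directory. A minimal sketch, assuming every `*.tar.gz` file in the directory is an image archive (`load_images` is a hypothetical helper name):

```shell
# Load every *.tar.gz image archive found in the given directory.
load_images() {
    for archive in "$1"/*.tar.gz; do
        # If the directory holds no archives the pattern stays unexpanded; skip it.
        [ -e "$archive" ] || continue
        docker load -i "$archive" || return 1
    done
}

# Usage:
#   load_images /root/k8s/k8s-images
```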

2.2.2 Retag the images and remove the old ones

# Use kubeadm config images list to see which image names and versions kubeadm expects

    [root@k8s-master ~]# kubeadm config images list
    I0521 13:38:41.768238 12611 version.go:252] remote version is much newer: v1.21.1; falling back to: stable-1.19
    W0521 13:38:45.604893 12611 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
    k8s.gcr.io/kube-apiserver:v1.19.11
    k8s.gcr.io/kube-controller-manager:v1.19.11
    k8s.gcr.io/kube-scheduler:v1.19.11
    k8s.gcr.io/kube-proxy:v1.19.11
    k8s.gcr.io/pause:3.2
    k8s.gcr.io/etcd:3.4.9-1
    k8s.gcr.io/coredns:1.7.0
    # Retag the loaded images to match the names printed by kubeadm config images list.
    # The pause and coredns tags must be 3.2 and 1.7.0 (no "v" prefix), exactly as listed above.
    [root@k8s-master ~]# docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.19.0 k8s.gcr.io/kube-apiserver:v1.19.11
    [root@k8s-master ~]# docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.19.0 k8s.gcr.io/kube-controller-manager:v1.19.11
    [root@k8s-master ~]# docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.19.0 k8s.gcr.io/kube-scheduler:v1.19.11
    [root@k8s-master ~]# docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.19.0 k8s.gcr.io/kube-proxy:v1.19.11
    [root@k8s-master ~]# docker tag registry.cn-hangzhou.aliyuncs.com/k8sos/flannel:v0.13.1-rc2 quay.io/coreos/flannel:v0.11.0-amd64
    [root@k8s-master ~]# docker tag registry.aliyuncs.com/google_containers/pause:3.2 k8s.gcr.io/pause:3.2
    [root@k8s-master ~]# docker tag registry.aliyuncs.com/google_containers/etcd:3.4.9-1 k8s.gcr.io/etcd:3.4.9-1
    [root@k8s-master ~]# docker tag registry.aliyuncs.com/google_containers/coredns:1.7.0 k8s.gcr.io/coredns:1.7.0
    [root@k8s-master ~]# docker tag kubernetesui/dashboard:v2.0.1 k8s.gcr.io/dashboard:v2.0.1
    [root@k8s-master ~]# docker images
    REPOSITORY                           TAG             IMAGE ID       CREATED         SIZE
    quay.io/coreos/flannel               v0.11.0-amd64   60e169ce803f   3 months ago    64.3MB
    k8s.gcr.io/kube-proxy                v1.19.11        bc9c328f379c   8 months ago    118MB
    k8s.gcr.io/kube-apiserver            v1.19.11        1b74e93ece2f   8 months ago    119MB
    k8s.gcr.io/kube-controller-manager   v1.19.11        09d665d529d0   8 months ago    111MB
    k8s.gcr.io/kube-scheduler            v1.19.11        cbdc8369d8b1   8 months ago    45.7MB
    k8s.gcr.io/etcd                      3.4.9-1         d4ca8726196c   10 months ago   253MB
    k8s.gcr.io/coredns                   1.7.0           bfe3a36ebd25   11 months ago   45.2MB
    k8s.gcr.io/dashboard                 v2.0.1          85d666cddd04   12 months ago   223MB
    k8s.gcr.io/pause                     3.2             80d28bedfe5d   15 months ago   683kB
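Because the target names must match the `kubeadm config images list` output character for character, it can help to derive the `docker tag` commands from that output rather than typing them. The sketch below assumes the loaded images live under a single source repository and that only the `kube-*` components were saved under a different version; `print_retag_commands` is a hypothetical helper, and it does not cover the flannel or dashboard images:

```shell
# Read `kubeadm config images list` output on stdin and print one
# `docker tag` command per image line.
#   $1  source repository prefix of the loaded images
#   $2  version tag under which the kube-* images were saved
print_retag_commands() {
    while IFS= read -r target; do
        case "$target" in
            k8s.gcr.io/*) ;;      # an image line
            *) continue ;;        # skip log and warning lines
        esac
        name_tag="${target#k8s.gcr.io/}"
        name="${name_tag%%:*}"
        tag="${name_tag#*:}"
        case "$name" in
            kube-*) src_tag="$2" ;;     # core components use the saved version
            *)      src_tag="$tag" ;;   # pause, etcd, coredns keep their own tag
        esac
        echo "docker tag $1/$name:$src_tag $target"
    done
}

# Usage: review the printed commands, then pipe them to sh to run them.
#   kubeadm config images list 2>/dev/null \
#     | print_retag_commands registry.aliyuncs.com/google_containers v1.19.0
```

An alternative that avoids labelling v1.19.0 images as v1.19.11 may be to pin the version with kubeadm's `--kubernetes-version v1.19.0` flag, so that kubeadm requests the v1.19.0 images directly.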

2.2.3 Initialize the cluster

    [root@k8s-master ~]# kubeadm init --apiserver-advertise-address 192.168.160.129 --pod-network-cidr=10.244.0.0/16
    ...
    [addons] Applied essential addon: CoreDNS
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.160.129:6443 --token 8w1usi.xrk1kgpghbn7vo66 \
        --discovery-token-ca-cert-hash sha256:8d6937dc0c3174bbc7ff95d5c1b3cc487027007cc782522e63dd3d2ac7b45787
    [root@k8s-master ~]# mkdir -p $HOME/.kube
    [root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    [root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
    [root@k8s-master ~]#

The `--pod-network-cidr=10.244.0.0/16` value matches the `Network` setting in the flannel manifest applied in the next step.

2.2.4 Configure the network

    [root@k8s-master k8s-conf]# kubectl apply -f kube-flannel.yml
    podsecuritypolicy.policy/psp.flannel.unprivileged created
    Warning: rbac.authorization.k8s.io/v1beta1 ClusterRole is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRole
    clusterrole.rbac.authorization.k8s.io/flannel created
    Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding
    clusterrolebinding.rbac.authorization.k8s.io/flannel created
    serviceaccount/flannel created
    configmap/kube-flannel-cfg created
    daemonset.apps/kube-flannel-ds-amd64 created
    daemonset.apps/kube-flannel-ds-arm64 created
    daemonset.apps/kube-flannel-ds-arm created
    daemonset.apps/kube-flannel-ds-ppc64le created
    daemonset.apps/kube-flannel-ds-s390x created
    [root@k8s-master k8s-conf]#

[root@k8s-master test]# cat k8s/k8s-conf/kube-flannel.yml --- apiVersion: policy/v1beta1 kind: PodSecurityPolicy metadata: name: psp.flannel.unprivileged annotations: seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default spec: privileged: false volumes: - configMap - secret - emptyDir - hostPath allowedHostPaths: - pathPrefix: "/etc/cni/net.d" - pathPrefix: "/etc/kube-flannel" - pathPrefix: "/run/flannel" readOnlyRootFilesystem: false # Users and groups runAsUser: rule: RunAsAny supplementalGroups: rule: RunAsAny fsGroup: rule: RunAsAny # Privilege Escalation allowPrivilegeEscalation: false defaultAllowPrivilegeEscalation: false # Capabilities allowedCapabilities: ['NET_ADMIN'] defaultAddCapabilities: [] requiredDropCapabilities: [] # Host namespaces hostPID: false hostIPC: false hostNetwork: true hostPorts: - min: 0 max: 65535 # SELinux seLinux: # SELinux is unused in CaaSP rule: 'RunAsAny' --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: flannel rules: - apiGroups: ['extensions'] resources: ['podsecuritypolicies'] verbs: ['use'] resourceNames: ['psp.flannel.unprivileged'] - apiGroups: - "" resources: - pods verbs: - get - apiGroups: - "" resources: - nodes verbs: - list - watch - apiGroups: - "" resources: - nodes/status verbs: - patch --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: flannel roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: flannel subjects: - kind: ServiceAccount name: flannel namespace: kube-system --- apiVersion: v1 kind: ServiceAccount metadata: name: flannel namespace: kube-system --- kind: ConfigMap apiVersion: v1 metadata: name: kube-flannel-cfg namespace: kube-system labels: tier: node app: flannel data: 
cni-conf.json: | { "name": "cbr0", "cniVersion": "0.3.1", "plugins": [ { "type": "flannel", "delegate": { "hairpinMode": true, "isDefaultGateway": true } }, { "type": "portmap", "capabilities": { "portMappings": true } } ] } net-conf.json: | { "Network": "10.244.0.0/16", "Backend": { "Type": "vxlan" } } --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-amd64 namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/os operator: In values: - linux - key: beta.kubernetes.io/arch operator: In values: - amd64 hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.11.0-amd64 command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.11.0-amd64 command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-arm64 namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel 
template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/os operator: In values: - linux - key: beta.kubernetes.io/arch operator: In values: - arm64 hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.11.0-arm64 command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.11.0-arm64 command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-arm namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/os operator: In values: - linux - key: beta.kubernetes.io/arch operator: In values: - arm hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.11.0-arm command: - cp args: - 
-f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.11.0-arm command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-ppc64le namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/os operator: In values: - linux - key: beta.kubernetes.io/arch operator: In values: - ppc64le hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.11.0-ppc64le command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.11.0-ppc64le command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] 
env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg --- apiVersion: apps/v1 kind: DaemonSet metadata: name: kube-flannel-ds-s390x namespace: kube-system labels: tier: node app: flannel spec: selector: matchLabels: app: flannel template: metadata: labels: tier: node app: flannel spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/os operator: In values: - linux - key: beta.kubernetes.io/arch operator: In values: - s390x hostNetwork: true tolerations: - operator: Exists effect: NoSchedule serviceAccountName: flannel initContainers: - name: install-cni image: quay.io/coreos/flannel:v0.11.0-s390x command: - cp args: - -f - /etc/kube-flannel/cni-conf.json - /etc/cni/net.d/10-flannel.conflist volumeMounts: - name: cni mountPath: /etc/cni/net.d - name: flannel-cfg mountPath: /etc/kube-flannel/ containers: - name: kube-flannel image: quay.io/coreos/flannel:v0.11.0-s390x command: - /opt/bin/flanneld args: - --ip-masq - --kube-subnet-mgr resources: requests: cpu: "100m" memory: "50Mi" limits: cpu: "100m" memory: "50Mi" securityContext: privileged: false capabilities: add: ["NET_ADMIN"] env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace volumeMounts: - name: run mountPath: /run/flannel - name: flannel-cfg mountPath: /etc/kube-flannel/ volumes: - name: run hostPath: path: /run/flannel - name: cni hostPath: path: /etc/cni/net.d - name: flannel-cfg configMap: name: kube-flannel-cfg [root@k8s-master test]#

2.2.5 Modify the controller-manager and scheduler manifests

    [root@k8s-master ~]# cd /etc/kubernetes/manifests/
    [root@k8s-master manifests]# ls
    etcd.yaml  kube-apiserver.yaml  kube-controller-manager.yaml  kube-scheduler.yaml
    # kube-controller-manager.yaml and kube-scheduler.yaml under /etc/kubernetes/manifests/ set the port to 0 by default (--port=0),
    # which makes kubectl get cs report both components as unhealthy. The fix is to comment out the --port line in each file.
    [root@k8s-master manifests]# cat kube-controller-manager.yaml|grep port
    # - --port=0
    port: 10257
    port: 1025
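The edit can also be scripted instead of done by hand. The sketch below is one way to do it, assuming the flag appears on its own line as `- --port=0`; `comment_out_port_zero` is a hypothetical helper that prints the modified manifest rather than changing the file in place:

```shell
# Print a static Pod manifest with the "- --port=0" argument commented out.
# Indentation is preserved so the YAML list structure stays valid.
comment_out_port_zero() {
    sed -E 's/^([[:space:]]*)(- --port=0)[[:space:]]*$/\1# \2/' "$1"
}

# Usage (as root), once per manifest; kubelet watches the manifests
# directory and recreates the static Pod after the file changes.
#   f=/etc/kubernetes/manifests/kube-scheduler.yaml
#   comment_out_port_zero "$f" > /tmp/kube-scheduler.yaml && cp /tmp/kube-scheduler.yaml "$f"
```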

2.2.6 Verify

    [root@k8s-master manifests]# kubectl get ns
    NAME              STATUS   AGE
    default           Active   40m
    kube-node-lease   Active   40m
    kube-public       Active   40m
    kube-system       Active   40m
    [root@k8s-master manifests]# kubectl get cs
    Warning: v1 ComponentStatus is deprecated in v1.19+
    NAME                 STATUS    MESSAGE             ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health":"true"}
    [root@k8s-master manifests]# kubectl get po -n kube-system
    NAME                                 READY   STATUS    RESTARTS   AGE
    coredns-f9fd979d6-cxhbf              1/1     Running   0          41m
    coredns-f9fd979d6-vcvrb              1/1     Running   0          41m
    etcd-k8s-master                      1/1     Running   0          41m
    kube-apiserver-k8s-master            1/1     Running   0          41m
    kube-controller-manager-k8s-master   1/1     Running   0          38m
    kube-flannel-ds-amd64-cchsr          1/1     Running   0          39m
    kube-proxy-xz7p5                     1/1     Running   0          41m
    kube-scheduler-k8s-master            1/1     Running   0          38m
    [root@k8s-master manifests]#

3. Join the worker nodes to the cluster

Each node needs Docker (1.6) and the kubeadm/kubelet rpm packages (2.1) installed before joining. Running `systemctl enable kubelet` on the node first avoids the Service-Kubelet warning shown below.

    [root@k8s-node1 ~]# cd k8s/k8s-images/
    [root@k8s-node1 k8s-images]# docker load -i flannel.tar.gz
    [root@k8s-node1 k8s-images]# docker load -i kube-proxy.tar.gz
    [root@k8s-node1 k8s-images]# docker load -i pause.tar.gz
    [root@k8s-node1 k8s-images]# docker images
    REPOSITORY               TAG             IMAGE ID       CREATED         SIZE
    quay.io/coreos/flannel   v0.11.0-amd64   60e169ce803f   3 months ago    64.3MB
    k8s.gcr.io/kube-proxy    v1.19.11        bc9c328f379c   8 months ago    118MB
    k8s.gcr.io/pause         3.2             80d28bedfe5d   15 months ago   683kB
    [root@k8s-node1 test]# kubeadm join 192.168.160.129:6443 --token 8w1usi.xrk1kgpghbn7vo66 \
    > --discovery-token-ca-cert-hash sha256:8d6937dc0c3174bbc7ff95d5c1b3cc487027007cc782522e63dd3d2ac7b45787
    [preflight] Running pre-flight checks
            [WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.6. Latest validated version: 19.03
            [WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'
    [preflight] Reading configuration from the cluster...
    [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Starting the kubelet
    [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

    This node has joined the cluster:
    * Certificate signing request was sent to apiserver and a response was received.
    * The Kubelet was informed of the new secure connection details.

    Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
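After the join, the output suggests running `kubectl get nodes` on the control plane. For scripted checks, the status column can be tested directly. A minimal sketch, assuming the default `kubectl get nodes --no-headers` column layout where the second field is STATUS (`all_nodes_ready` is a hypothetical helper):

```shell
# Read `kubectl get nodes --no-headers` output on stdin and fail if any
# node's STATUS column is not exactly "Ready".
all_nodes_ready() {
    awk 'BEGIN { bad = 0 } $2 != "Ready" { bad = 1 } END { exit bad }'
}

# Usage on the master; nodes usually stay NotReady until flannel is running on them:
#   kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"
```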

Notes:

The tags listed below differ from the v1.19.11 / 3.4.9-1 / v0.11.0 tags used in the steps above; use whichever versions `kubeadm config images list` reports for your installation.

Images required on the master node:

    k8s.gcr.io/kube-scheduler            v1.19.3
    k8s.gcr.io/kube-apiserver            v1.19.3
    k8s.gcr.io/kube-controller-manager   v1.19.3
    k8s.gcr.io/etcd                      3.4.13-0
    k8s.gcr.io/coredns                   1.7.0
    kubernetesui/dashboard               v2.0.1
    quay.io/coreos/flannel               v0.13.0
    k8s.gcr.io/kube-proxy                v1.19.3
    kubernetesui/metrics-scraper         v1.0.4
    k8s.gcr.io/pause                     3.2

Images required on the worker nodes:

    quay.io/coreos/flannel               v0.13.0
    k8s.gcr.io/kube-proxy                v1.19.3
    kubernetesui/metrics-scraper         v1.0.4
    k8s.gcr.io/pause                     3.2

Required images and installation packages:

    [root@k8s-master ~]# tree k8s
    k8s
    ├── dashboard
    │   ├── dashboard-v2.0.1.tar.gz
    │   ├── dashboard.yaml
    │   └── metrics-scraper-v1.0.4.tar.gz
    ├── docker
    │   ├── docker-rpm
    │   │   ├── containerd.io-1.4.4-3.1.el7.x86_64.rpm
    │   │   ├── container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm
    │   │   ├── docker-ce-20.10.6-3.el7.x86_64.rpm
    │   │   ├── docker-ce-cli-20.10.6-3.el7.x86_64.rpm
    │   │   ├── docker-ce-rootless-extras-20.10.6-3.el7.x86_64.rpm
    │   │   ├── docker-scan-plugin-0.7.0-3.el7.x86_64.rpm
    │   │   ├── fuse3-libs-3.6.1-4.el7.x86_64.rpm
    │   │   ├── fuse-overlayfs-0.7.2-6.el7_8.x86_64.rpm
    │   │   └── slirp4netns-0.4.3-4.el7_8.x86_64.rpm
    │   └── docker-speed.sh
    ├── k8s-conf
    │   └── kube-flannel.yml
    ├── k8s-images
    │   ├── coredns-1.7.0.tar.gz
    │   ├── etcd-3.4.9-1.tar.gz
    │   ├── flannel-v0.11.0-amd64.tar.gz
    │   ├── kube-apiserver-v1.19.11.tar.gz
    │   ├── kube-controller-manager-v1.19.11.tar.gz
    │   ├── kube-proxy-v1.19.11.tar.gz
    │   ├── kube-scheduler-v1.19.11.tar.gz
    │   └── pause-3.2.tar.gz
    └── k8s-rpm
        ├── conntrack-tools-1.4.4-7.el7.x86_64.rpm
        ├── cri-tools-1.13.0-0.x86_64.rpm
        ├── kubeadm-1.19.0-0.x86_64.rpm
        ├── kubectl-1.19.0-0.x86_64.rpm
        ├── kubelet-1.19.0-0.x86_64.rpm
        ├── kubernetes-cni-0.8.7-0.x86_64.rpm
        ├── libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm
        ├── libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm
        ├── libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm
        └── socat-1.7.3.2-2.el7.x86_64.rpm

    6 directories, 32 files

Link: https://pan.baidu.com/s/1_LgbKOc8VT6VFi4G1HVqtA

Extraction code: n4j2


Author: 无荨
