8-card LoRA fine-tuning of qwen-7b-chat on Ascend 910 with the MindFormers + MindSpore framework

Main reference: https://gitee.com/mindspore/mindformers/tree/r1.0/research/qwen

STEP 1: Environment setup

I used the official MindFormers Docker image for fine-tuning. Pull it with:

```bash
docker pull swr.cn-central-221.ovaijisuan.com/mindformers/mindformers1.0.2_mindspore2.2.13:20240416
```

A reference script for starting the container:

```bash
#!/bin/bash
# Start the MindFormers container with the 8 NPUs (davinci0-7) and the NPU
# management devices mapped in, and mount the model directory from the host.

CONTAINER_NAME=mindformers-r1.0
# Model directory on the host
CHECKPOINT_PATH=/var/images/llm_setup/model/qwen/Qwen-7B-Chat
# Where that directory appears inside the container
DOCKER_CHECKPOINT_PATH=/data/qwen/models/Qwen-7B-Chat
IMAGE_NAME=swr.cn-central-221.ovaijisuan.com/mindformers/mindformers1.0.2_mindspore2.2.13:20240416

docker run -it -u root \
  --device=/dev/davinci0 \
  --device=/dev/davinci1 \
  --device=/dev/davinci2 \
  --device=/dev/davinci3 \
  --device=/dev/davinci4 \
  --device=/dev/davinci5 \
  --device=/dev/davinci6 \
  --device=/dev/davinci7 \
  --device=/dev/davinci_manager \
  --device=/dev/devmm_svm \
  --device=/dev/hisi_hdc \
  -v /etc/localtime:/etc/localtime \
  -v /usr/local/Ascend/driver:/usr/local/Ascend/driver \
  -v /var/log/npu/:/usr/slog \
  -v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi \
  -v ${CHECKPOINT_PATH}:${DOCKER_CHECKPOINT_PATH} \
  --name ${CONTAINER_NAME} \
  ${IMAGE_NAME} \
  /bin/bash
```

Environment check

Run the following command to verify the installation:

```bash
python -c "import mindspore;mindspore.set_context(device_target='Ascend');mindspore.run_check()"
```

If the output looks like this, the environment is fine:

```
MindSpore version: <version>
The result of multiplication calculation is correct, MindSpore has been installed on platform [Ascend] successfully!
```

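Downloading the fine-tuning code

Most of the fine-tuning code comes from the official MindFormers repository. Inside the container, clone it and enter the directory:

```bash
git clone -b r1.0 https://gitee.com/mindspore/mindformers.git
cd mindformers
```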

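Generating the RANK_TABLE_FILE

Before fine-tuning, prepare the rank table file required for multi-card training. If you are working inside the container, exit it and generate the file on the host:

```bash
# If mindformers has not been cloned outside the container, download just the needed script with wget
wget https://gitee.com/mindspore/models/raw/master/utils/hccl_tools/hccl_tools.py
# Generate the rank_table_file for devices 0-7
python hccl_tools.py --device_num "[0,8)"
```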

Copy the generated hccl_8p_01234567_xx.xx.xx.xx.json file into the container, and you are ready for the fine-tuning steps below.
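Before copying the file in, it may be worth checking that it really lists all 8 devices. The sketch below is only an illustration and assumes the JSON layout that hccl_tools.py typically writes: a top-level `status` and a `server_list` whose entries hold a `device` list with string `device_id`, `device_ip` and `rank_id` fields. Compare against your own file first; the function names are hypothetical.

```python
import json
from pathlib import Path


def load_rank_table(path):
    """Read a rank table JSON file from disk into a dict."""
    return json.loads(Path(path).read_text())


def validate_rank_table(table, expected_devices=8):
    """Return a list of problems found in a rank table dict (empty list = looks OK).

    Assumed layout (hccl_tools.py style): {"status": ..., "server_list": [
    {"device": [{"device_id": "0", "device_ip": "...", "rank_id": "0"}, ...]}]}
    """
    problems = []
    if table.get("status") != "completed":
        problems.append(f"status is {table.get('status')!r}, expected 'completed'")
    # Flatten the devices of every server into one list
    devices = [dev for server in table.get("server_list", [])
               for dev in server.get("device", [])]
    if len(devices) != expected_devices:
        problems.append(f"found {len(devices)} devices, expected {expected_devices}")
    # rank_id values should be exactly 0..N-1 with no gaps or duplicates
    rank_ids = sorted(int(dev["rank_id"]) for dev in devices if "rank_id" in dev)
    if rank_ids != list(range(len(devices))):
        problems.append(f"rank_ids are {rank_ids}, expected 0..{len(devices) - 1}")
    missing_ip = [dev.get("device_id") for dev in devices if not dev.get("device_ip")]
    if missing_ip:
        problems.append(f"devices without device_ip: {missing_ip}")
    return problems
```

Usage: `print(validate_rank_table(load_rank_table("hccl_8p_01234567_xx.xx.xx.xx.json")))` with your actual file name; an empty list means none of these checks failed.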

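STEP 2: Download the model

With the MindFormers framework, the weights have to be converted before they can be used. In the image used here, the conversion runs into environment conflicts (the required packages cannot be installed), so I downloaded the already-converted weights and the vocabulary file directly from the official site:

```bash
# Weight checkpoint, about 29 GB
wget https://ascend-repo-modelzoo.obs.cn-east-2.myhuaweicloud.com/MindFormers/qwen/qwen_7b_base.ckpt
# Vocabulary file
wget https://ascend-repo-modelzoo.obs.cn-east-2.myhuaweicloud.com/MindFormers/qwen/qwen.tiktoken
```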
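The yaml notes in STEP 4 expect complete weights to be stored as `model_dir/rank_0/xxx.ckpt`, and a wrong layout is one cause of the IndexError in the Q&A. A minimal stdlib sketch for putting the downloaded file in place; `arrange_checkpoint` is a hypothetical helper, and `model_dir` stands for whatever directory you later pass as `--load_checkpoint`.

```python
import shutil
import tempfile
from pathlib import Path


def arrange_checkpoint(ckpt_file, model_dir, move=False):
    """Place one complete checkpoint at model_dir/rank_0/<file name> and return that path.

    Copies by default; move=True avoids keeping a second ~29 GB copy on disk.
    """
    src = Path(ckpt_file)
    if src.suffix != ".ckpt":
        raise ValueError(f"expected a .ckpt file, got {src.name}")
    dst_dir = Path(model_dir) / "rank_0"
    dst_dir.mkdir(parents=True, exist_ok=True)
    dst = dst_dir / src.name
    if move:
        shutil.move(str(src), str(dst))
    else:
        shutil.copy2(src, dst)
    return dst


if __name__ == "__main__":
    # Demo on a throwaway directory with a dummy file standing in for qwen_7b_base.ckpt
    with tempfile.TemporaryDirectory() as tmp:
        fake = Path(tmp) / "qwen_7b_base.ckpt"
        fake.write_bytes(b"dummy")
        print(arrange_checkpoint(fake, Path(tmp) / "model_dir"))
```

For the real file, something like `arrange_checkpoint("qwen_7b_base.ckpt", model_dir, move=True)` leaves the weights at `model_dir/rank_0/qwen_7b_base.ckpt`.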

STEP 3: Prepare the data

To fine-tune Qwen, first convert the data into the following JSON format (the file is a JSON array of such records):

```json
[
  {
    "id": "1",
    "conversations": [
      {
        "from": "user",
        "value": "Give three tips for staying healthy."
      },
      {
        "from": "assistant",
        "value": "1.Eat a balanced diet and make sure to include plenty of fruits and vegetables. \n2. Exercise regularly to keep your body active and strong. \n3. Get enough sleep and maintain a consistent sleep schedule."
      }
    ]
  }
]
```
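If the raw data is in the original Alpaca layout (`instruction` / `input` / `output` fields per record, which is an assumption here), a small script can produce the conversation format above. This is a hypothetical helper, not MindFormers tooling; in particular, joining `instruction` and `input` with a blank line is just one reasonable choice.

```python
import json


def alpaca_to_conversations(records):
    """Convert Alpaca-style records into the {"id", "conversations"} format above.

    Assumes each record has "instruction", an optional "input", and "output".
    """
    converted = []
    for idx, rec in enumerate(records, start=1):
        prompt = rec["instruction"]
        if rec.get("input"):
            # Illustrative choice: append the extra input after a blank line
            prompt = f"{prompt}\n\n{rec['input']}"
        converted.append({
            "id": str(idx),
            "conversations": [
                {"from": "user", "value": prompt},
                {"from": "assistant", "value": rec["output"]},
            ],
        })
    return converted


def convert_file(src_path, dst_path):
    """Read an Alpaca JSON array and write the converted JSON array."""
    with open(src_path, encoding="utf-8") as f:
        records = json.load(f)
    with open(dst_path, "w", encoding="utf-8") as f:
        json.dump(alpaca_to_conversations(records), f, ensure_ascii=False, indent=2)
```

Usage: `convert_file("/path/alpaca_data.json", "/path/alpaca-data-conversation.json")`, where `alpaca_data.json` is a placeholder name for the raw data.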

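Then convert it into MindRecord data for MindFormers with the following script:

```bash
# --input_glob:  source data (already converted to the format above)
# --model_file:  vocabulary file
# --seq_length:  must equal model_config.seq_length in the fine-tuning yaml
# --output_file: output path of the MindRecord data
python research/qwen/qwen_preprocess.py \
  --input_glob /path/alpaca-data-conversation.json \
  --model_file /path/qwen.tiktoken \
  --seq_length 2048 \
  --output_file /path/alpaca.mindrecord
```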

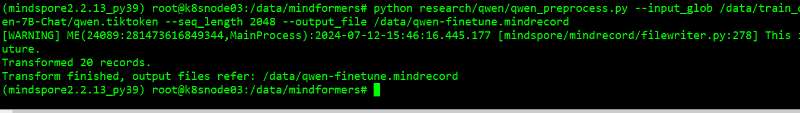

结果:

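STEP 4: Start fine-tuning

Note: before fine-tuning, complete the RANK_TABLE_FILE generation from STEP 1 and make sure hccl_8p_01234567_xx.xx.xx.xx.json is present inside the container.

Modify the yaml file as described in the points below, then run the following command to start fine-tuning: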

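```bash
# Run from the directory that contains the mindformers clone
cd mindformers/research
# Arguments after the quoted command: rank table file, device range, number of devices
bash run_singlenode.sh "python qwen/run_qwen.py \
  --config qwen/run_qwen_7b_lora.yaml \
  --load_checkpoint /data/qwen/models/Qwen-7B-Chat \
  --use_parallel True \
  --run_mode finetune \
  --auto_trans_ckpt True \
  --train_data /path/alpaca.mindrecord" \
  /data/hccl_8p_01234567_10.17.2.76.json [0,8] 8
```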

Points to note:

- `qwen/run_qwen_7b_lora.yaml` is the parameter file to configure. Make sure the following entries are set correctly:

```yaml
# Excerpts only: keep each key at its existing position in the file
load_checkpoint: 'model_dir'  # complete weights, stored as model_dir/rank_0/xxx.ckpt

model_config:
  seq_length: 2048  # must match the seq_length of the dataset

train_dataset: &train_dataset
  data_loader:
    type: MindDataset
    dataset_dir: "/path/alpaca.mindrecord"  # path to the training dataset
    shuffle: True

pet_config:
  pet_type: lora
  lora_rank: 64
  lora_alpha: 16
  lora_dropout: 0.05
  target_modules: '.*wq|.*wk|.*wv|.*wo|.*w1|.*w2|.*w3'
  freeze_exclude: ["*wte*", "*lm_head*"]  # delete this entry when fine-tuning from the chat weights
```
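To get a sense of what `lora_rank: 64` with this `target_modules` pattern adds, the arithmetic below counts the adapter weights: an adapted linear layer of shape d_in × d_out gets two low-rank matrices with rank·(d_in + d_out) parameters in total. The Qwen-7B dimensions used (hidden size 4096, 11008 for w1/w2/w3, 32 layers) are assumptions; check them against the model config in your yaml. The count covers LoRA adapters only; anything kept trainable by `freeze_exclude` comes on top of it.

```python
def lora_params(d_in, d_out, rank):
    """Extra parameters LoRA adds to one d_in x d_out linear layer:
    A is d_in x rank and B is rank x d_out."""
    return rank * (d_in + d_out)


# Assumed Qwen-7B shapes (check against your model config)
HIDDEN = 4096   # hidden size
FFN = 11008     # output width of w1 / w3, input width of w2
LAYERS = 32     # number of decoder layers

RANK, ALPHA = 64, 16  # lora_rank / lora_alpha from the yaml above

# (d_in, d_out) of each module matched by target_modules, per decoder layer
per_layer = {
    "wq": (HIDDEN, HIDDEN), "wk": (HIDDEN, HIDDEN),
    "wv": (HIDDEN, HIDDEN), "wo": (HIDDEN, HIDDEN),
    "w1": (HIDDEN, FFN), "w3": (HIDDEN, FFN),
    "w2": (FFN, HIDDEN),
}

total = LAYERS * sum(lora_params(d_in, d_out, RANK) for d_in, d_out in per_layer.values())
scaling = ALPHA / RANK  # standard LoRA scales the low-rank update by alpha / rank

print(f"LoRA parameters: {total:,}")  # 159,907,840
print(f"update scaling:  {scaling}")  # 0.25
```

Under these assumptions the adapters hold roughly 160M parameters, about 2% of a 7B model, and with alpha 16 and rank 64 the standard LoRA formulation scales the update by 0.25.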

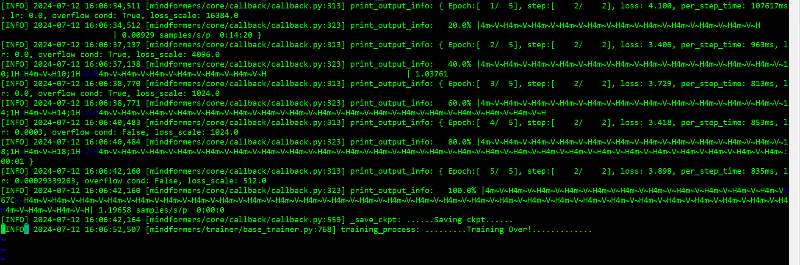

微调成功:

Q&A

- Error: ValueError, x.shape and y.shape need to broadcast. Full error output:

```
...
[INFO] 2024-07-16 13:52:49,028 [mindformers/trainer/base_trainer.py:682] training_process: .........Build Running Wrapper From Config For Train..........
[INFO] 2024-07-16 13:52:49,028 [mindformers/trainer/base_trainer.py:500] create_model_wrapper: .........Build Model Wrapper for Train From Config..........
[INFO] 2024-07-16 13:52:49,040 [mindformers/trainer/base_trainer.py:689] training_process: .........Build Callbacks For Train..........
[INFO] 2024-07-16 13:52:49,042 [mindformers/core/callback/callback.py:530] __init__: Integrated_save is changed to False when using auto_parallel.
[INFO] 2024-07-16 13:52:49,043 [mindformers/trainer/base_trainer.py:724] training_process: .........Starting Init Train Model..........
[INFO] 2024-07-16 13:52:49,043 [mindformers/trainer/utils.py:321] transform_and_load_checkpoint: .........Building model.........
[ERROR] 2024-07-16 14:16:46,150 [mindformers/tools/cloud_adapter/cloud_monitor.py:43] wrapper: Traceback (most recent call last):
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/tools/cloud_adapter/cloud_monitor.py", line 34, in wrapper
    result = run_func(*args, **kwargs)
  File "/data/mindformers/research/qwen/run_qwen.py", line 137, in main
    trainer.finetune(finetune_checkpoint=ckpt, auto_trans_ckpt=auto_trans_ckpt)
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindspore/_checkparam.py", line 1313, in wrapper
    return func(*args, **kwargs)
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/trainer/trainer.py", line 485, in finetune
    self.trainer.train(
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/trainer/causal_language_modeling/causal_language_modeling.py", line 97, in train
    self.training_process(
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/trainer/base_trainer.py", line 739, in training_process
    transform_and_load_checkpoint(config, model, network, dataset)
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/trainer/utils.py", line 322, in transform_and_load_checkpoint
    build_model(config, model, dataset, do_eval=do_eval, do_predict=do_predict)
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/trainer/utils.py", line 446, in build_model
    model.build(train_dataset=dataset, epoch=config.runner_config.epochs,
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindspore/train/model.py", line 1274, in build
    self._init(train_dataset, valid_dataset, sink_size, epoch)
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindspore/train/model.py", line 529, in _init
    train_network.compile(*inputs)
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindspore/nn/cell.py", line 997, in compile
    _cell_graph_executor.compile(self, phase=self.phase,
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindspore/common/api.py", line 1547, in compile
    result = self._graph_executor.compile(obj, args, kwargs, phase, self._use_vm_mode())
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindspore/ops/primitive.py", line 647, in __infer__
    out[track] = fn(*(x[track] for x in args))
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindspore/ops/operations/math_ops.py", line 80, in infer_shape
    return get_broadcast_shape(x_shape, y_shape, self.name)
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindspore/ops/_utils/utils.py", line 70, in get_broadcast_shape
    raise ValueError(f"For '{prim_name}', {arg_name1}.shape and {arg_name2}.shape need to "
ValueError: For 'Mul', x.shape and y.shape need to broadcast. The value of x.shape[-1] or y.shape[-1] must be 1 or -1 when they are not the same, but got x.shape = [8, 1, 1024] and y.shape = [1, 2048, 2048].
```

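Solution: make sure `model_config.seq_length` in the fine-tuning yaml matches the `seq_length` used in STEP 3 when converting the data to MindRecord. The error above came from setting one of them to 1024 and the other to 2048.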

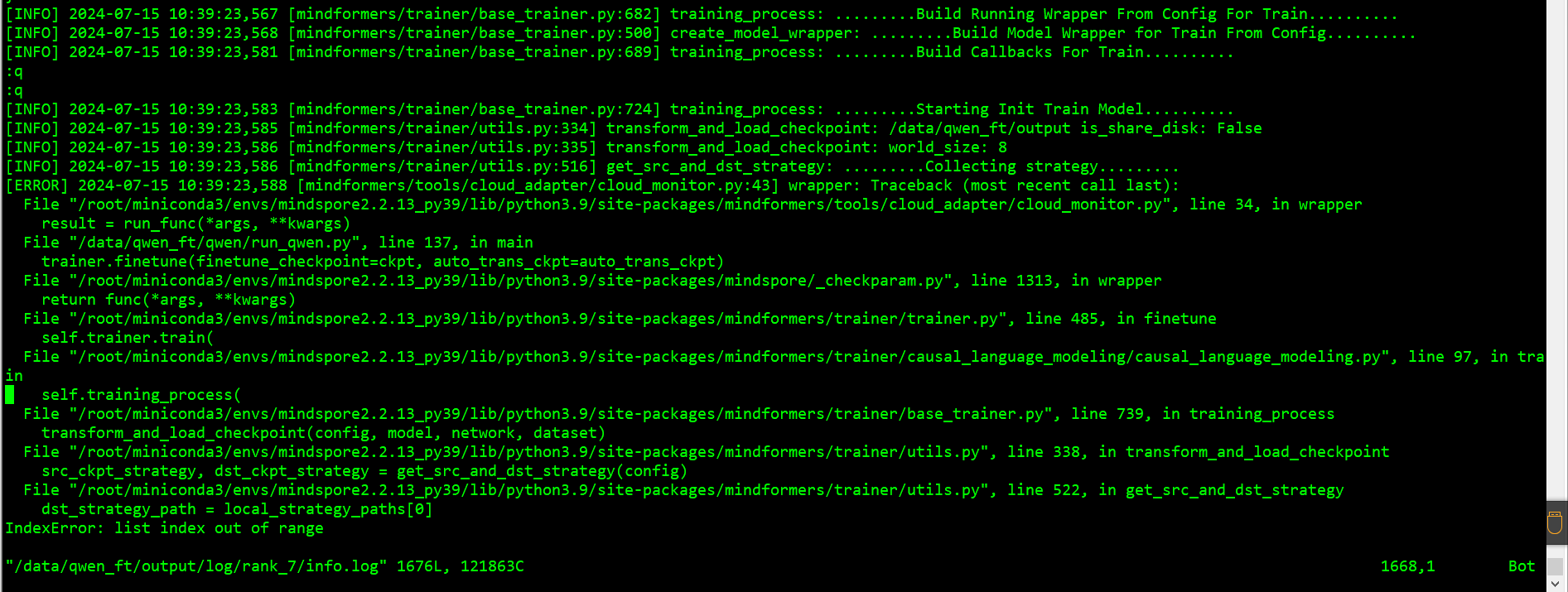
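The error message itself states the rule: dimensions are compared from the right, and where they differ one of them must be 1 (or -1 for a dynamic dimension). The sketch below reimplements that NumPy-style broadcasting check in plain Python (ignoring -1) to show why a last dimension of 1024 cannot meet 2048, while matching lengths broadcast fine. It is an illustration of the rule, not MindSpore's implementation.

```python
def broadcast_shape(x, y):
    """Return the broadcast shape of two shapes, or raise ValueError.

    Dimensions are aligned from the right; missing leading dims count as 1.
    Each aligned pair must be equal or contain a 1. Dynamic (-1) dims are not handled.
    """
    result = []
    for i in range(1, max(len(x), len(y)) + 1):
        a = x[-i] if i <= len(x) else 1
        b = y[-i] if i <= len(y) else 1
        if a != b and a != 1 and b != 1:
            raise ValueError(f"dim -{i}: {a} vs {b} cannot broadcast")
        result.append(b if a == 1 else a)
    return list(reversed(result))


# Consistent seq_length: the shapes broadcast
print(broadcast_shape([8, 1, 2048], [1, 2048, 2048]))  # [8, 2048, 2048]

# Shapes from the error above: 1024 meets 2048 in the last dimension
try:
    broadcast_shape([8, 1, 1024], [1, 2048, 2048])
except ValueError as err:
    print(err)  # dim -1: 1024 vs 2048 cannot broadcast
```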

- `dst_strategy_path = local_strategy_paths[0]` raises IndexError: list index out of range

```
...
[INFO] 2024-07-16 10:52:20,510 [mindformers/trainer/base_trainer.py:682] training_process: .........Build Running Wrapper From Config For Train..........
[INFO] 2024-07-16 10:52:20,510 [mindformers/trainer/base_trainer.py:500] create_model_wrapper: .........Build Model Wrapper for Train From Config..........
[INFO] 2024-07-16 10:52:20,523 [mindformers/trainer/base_trainer.py:689] training_process: .........Build Callbacks For Train..........
[INFO] 2024-07-16 10:52:20,525 [mindformers/trainer/base_trainer.py:724] training_process: .........Starting Init Train Model..........
[INFO] 2024-07-16 10:52:20,527 [mindformers/trainer/utils.py:334] transform_and_load_checkpoint: /data/qwen_ft/output is_share_disk: False
[INFO] 2024-07-16 10:52:20,527 [mindformers/trainer/utils.py:335] transform_and_load_checkpoint: world_size: 8
[INFO] 2024-07-16 10:52:20,528 [mindformers/trainer/utils.py:516] get_src_and_dst_strategy: .........Collecting strategy.........
[ERROR] 2024-07-16 10:52:20,530 [mindformers/tools/cloud_adapter/cloud_monitor.py:43] wrapper: Traceback (most recent call last):
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/tools/cloud_adapter/cloud_monitor.py", line 34, in wrapper
    result = run_func(*args, **kwargs)
  File "/data/qwen_ft/qwen/run_qwen.py", line 137, in main
    trainer.finetune(finetune_checkpoint=ckpt, auto_trans_ckpt=auto_trans_ckpt)
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindspore/_checkparam.py", line 1313, in wrapper
    return func(*args, **kwargs)
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/trainer/trainer.py", line 485, in finetune
    self.trainer.train(
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/trainer/causal_language_modeling/causal_language_modeling.py", line 97, in train
    self.training_process(
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/trainer/base_trainer.py", line 739, in training_process
    transform_and_load_checkpoint(config, model, network, dataset)
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/trainer/utils.py", line 338, in transform_and_load_checkpoint
    src_ckpt_strategy, dst_ckpt_strategy = get_src_and_dst_strategy(config)
  File "/root/miniconda3/envs/mindspore2.2.13_py39/lib/python3.9/site-packages/mindformers/trainer/utils.py", line 522, in get_src_and_dst_strategy
    dst_strategy_path = local_strategy_paths[0]
IndexError: list index out of range
```

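This is how the error arises: when we use the complete weights (qwen_7b_base.ckpt downloaded in STEP 2) and auto_trans_ckpt=True is set for fine-tuning, the script automatically starts weight conversion, splitting the complete weights into distributed weights for 8-card training and generating the 8-card strategy files. If the weight file has not been placed at the expected location, or the file itself is corrupted, the program cannot split the weights and generate the strategy files as expected. The directory searched for local_strategy_paths then contains no files in the expected format, or is empty, and this error is raised. Possible causes and fixes: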

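- Check that the weights are stored as `model_dir/rank_0/xxx.ckpt`; an incorrect location can prevent the strategy files from being generated.
- Check whether the weights are corrupted; if so, download them again as in STEP 2.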
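Both checks can be partly scripted before launching. A minimal stdlib sketch that inspects the directory you pass as `--load_checkpoint`; `check_checkpoint_dir` is a hypothetical helper, and its `min_bytes` threshold is an arbitrary assumption for catching truncated downloads (the complete qwen_7b_base.ckpt is about 29 GB per STEP 2). A file size check cannot prove a file is intact.

```python
import tempfile
from pathlib import Path


def check_checkpoint_dir(model_dir, min_bytes=1 << 30):
    """List problems with a complete-weights directory; an empty list means it looks OK.

    Checks the model_dir/rank_0/xxx.ckpt layout and flags suspiciously small files.
    min_bytes is a hypothetical threshold; passing it does not rule out corruption.
    """
    root = Path(model_dir)
    if not root.is_dir():
        return [f"{root} is not a directory"]
    rank0 = root / "rank_0"
    if not rank0.is_dir():
        # A common mistake: the .ckpt sits directly in model_dir without rank_0/
        stray = sorted(p.name for p in root.glob("*.ckpt"))
        hint = f"; found {stray} directly under {root}, move them into rank_0/" if stray else ""
        return [f"missing {rank0}{hint}"]
    ckpts = sorted(rank0.glob("*.ckpt"))
    if not ckpts:
        return [f"no .ckpt file in {rank0}"]
    problems = []
    for ckpt in ckpts:
        size = ckpt.stat().st_size
        if size < min_bytes:
            problems.append(f"{ckpt} is only {size} bytes; consider re-downloading it")
    return problems


if __name__ == "__main__":
    # Demo on a throwaway directory: a loose .ckpt without the rank_0 level
    with tempfile.TemporaryDirectory() as tmp:
        (Path(tmp) / "qwen_7b_base.ckpt").write_bytes(b"dummy")
        print(check_checkpoint_dir(tmp))
```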

This article is from cnblogs (博客园). Author: 落魄统计佬. When reposting, please credit the original link: https://www.cnblogs.com/tungsten106/p/18395937
