Environment Series: Setting Up a CM + CDH 6.3.2 Environment (the Most Complete Guide Online)

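0 Background

0.1 Key Events

- Outside China:

In 2010, the President's Council of Advisors on Science and Technology (PCAST) and its information technology advisors submitted the report *Designing a Digital Future* to President Obama and Congress. The report stated that "how to collect, preserve, manage, analyze, and share exponentially growing data is a challenge we face."

In March 2012, Obama signed off on and announced the "Big Data Research and Development Initiative."

In July 2012, the United Nations released the white paper *Big Data for Development: Challenges & Opportunities*, and big data research worldwide entered an unprecedented boom.

In May 2013, the McKinsey Global Institute published the report *Disruptive Technologies: Advances That Will Transform Life, Business, and the Global Economy*. Big data was not on its list; the explanation given was that big data had already become the foundation of the other technologies.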

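- In China:

On April 14, 2013, China Central Television (CCTV) invited Viktor Mayer-Schönberger and 阿比 to appear on its program *Dialogue*.

In 2012, the China Computer Federation (CCF) released the *2013 White Paper on China's Big Data Technology and Industry Development*.

In September 2015, the State Council issued the *Action Outline for Promoting the Development of Big Data*.

On March 17, 2016, the outline of China's 13th Five-Year Plan was released, explicitly addressing the development of big data.

A major event: in July 2012, Alibaba's management created the post of Chief Data Officer (CDO), filled by 陆兆禧 (Lu Zhaoxi), and launched the 聚石塔 (Jushita) data-sharing platform, which provides data cloud services to merchants and e-commerce service providers on Taobao and Tmall.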

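0.2 History of Big Data

Google's "troika" of papers (GFS, MapReduce, and Bigtable) laid the foundation for big data.

In 2003, Google published the Google File System (GFS) paper. GFS is a scalable distributed file system for large, distributed, data-intensive applications. It runs on inexpensive commodity hardware and provides fault tolerance. At its core, files are split into many chunks that are stored redundantly across a cluster of commodity machines.

The MapReduce paper followed in 2004. It describes a distributed computing model for big data: split a job into tasks, process them in parallel on many modest compute nodes, and then merge the results.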
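To make the map, shuffle, and reduce phases concrete, here is a minimal single-process Python sketch of the classic word-count job. It only simulates the phases in one process to show the data flow; it is not Hadoop's API, and all function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(line: str) -> List[Tuple[str, int]]:
    """Map: emit (word, 1) for every word in one input line."""
    return [(word, 1) for word in line.lower().split()]


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key, as the framework would."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: merge all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    # On a real cluster, each input split would be mapped on a different node.
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle_phase(mapped)
    # Likewise, different keys could be reduced on different nodes.
    return dict(reduce_phase(k, v) for k, v in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data big cluster", "data node"]))
```

The point of the split is that map and reduce calls are independent of each other, so the framework can spread them across many weak machines; only the shuffle needs to move data between nodes.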

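The Bigtable paper, published in 2006, inspired countless NoSQL databases, such as Cassandra and HBase.

From 2009 onward, as the foundational technologies matured, academia and industry turned to applied research. In 2013, big data technology began spreading into business, science and technology, healthcare, government, education, the economy, transportation, logistics, and every other area of society, which is why 2013 is also called "Year One of Big Data."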

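0.3 History of Big Data Platforms

In 2009, new Apache Hadoop subprojects were established. In March 2011, Hadoop was called "the Swiss Army knife of the 21st century." Version 1.0.0 was released on December 27, 2011, and 2.2.0 (GA) on October 15, 2013, which pushed big data technology rapidly into enterprises.

- There are two installation approaches: native packages (tarball installation) and cluster-management tools (HDP, CDH, CDP).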

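- Native packages

  - Cons: you must install every component yourself, integrate the components with each other, and adapt the monitoring platform on your own, so the operations cost is high.

  - Pros: you can choose any version of each component, which is a clear advantage for secondary development and compatibility tuning.

- Cluster-management tools

  - Cons: not friendly to customizing component versions; secondary development and compatibility tuning are very inconvenient.

  - Pros: no need to manage integration between components, a good monitoring platform is included, and the operations cost is lower.

- Comparison of cluster-management tools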

| Item / Product | HDP | CDH | CDP |
|---|---|---|---|
| Company | Hortonworks | Cloudera | Cloudera |
| Full name | Hortonworks Data Platform | Cloudera's Distribution Including Apache Hadoop | Cloudera Data Platform |
| Cluster manager | Ambari | Cloudera Manager | Cloudera Manager |
| First released | 2011 | 2008 | After Cloudera and Hortonworks merged in 2018 |
| Pricing | Free | Community edition free; enterprise edition paid (US$4,000 per node) | US$10,000 per node; memory and disks purchased separately |

1 Environment Preparation

1.1 Prepare Three Virtual Machines

Master (32 GB RAM + 100 GB disk + 4 CPUs with 2 cores each)

2 slaves (12 GB RAM + 100 GB disk + 4 CPUs with 1 core each)

- Reference:

https://www.cnblogs.com/ttzzyy/p/12566281.html

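1. The machines only need non-conflicting IP addresses, connectivity with each other, and normal network access. If you are short on resources, allocate what you need yourself or use cloud servers.

2. Disable the firewall; on cloud servers, also open the required IPs and ports.

3. Change each machine's hostname, configure /etc/hosts, and set up passwordless SSH.

4. Synchronize the clocks.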
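Step 3 needs the same hostname-to-IP mapping in /etc/hosts on all three machines. The Python sketch below renders those lines and rejects malformed or duplicate IPs, which also covers the "non-conflicting IPs" requirement of step 1. The master hostname cdh01.cm and the two slave IPs are assumptions for illustration (only 192.168.37.10, cdh02.cm, and cdh03.cm appear elsewhere in this guide); replace them with your own.

```python
import ipaddress
from typing import Dict, List


def render_hosts(nodes: Dict[str, str]) -> List[str]:
    """Return "<ip> <hostname>" lines for /etc/hosts from a {hostname: ip} mapping.

    Raises ValueError for a malformed IP or for two hosts sharing one IP,
    since every node needs its own non-conflicting address.
    """
    owner_of_ip: Dict[str, str] = {}
    lines: List[str] = []
    for hostname, ip in nodes.items():
        ipaddress.ip_address(ip)  # raises ValueError if ip is not a valid address
        if ip in owner_of_ip:
            raise ValueError(f"IP {ip} is used by both {owner_of_ip[ip]} and {hostname}")
        owner_of_ip[ip] = hostname
        lines.append(f"{ip} {hostname}")
    return lines


if __name__ == "__main__":
    # Hypothetical layout: cdh01.cm and the two slave IPs are assumptions.
    cluster = {
        "cdh01.cm": "192.168.37.10",
        "cdh02.cm": "192.168.37.11",
        "cdh03.cm": "192.168.37.12",
    }
    print("\n".join(render_hosts(cluster)))
```

Append the printed lines to /etc/hosts on every node, and set each machine's own name with hostnamectl set-hostname.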

Note: if you are not confident, take VM snapshots where possible so that recovery is easy.

1.2 Install Common Packages via yum, Including gcc and g++ (Optional)

yum -y install chkconfig python bind-utils psmisc libxslt zlib sqlite cyrus-sasl-plain cyrus-sasl-gssapi fuse fuse-libs redhat-lsb postgresql* portmap mod_ssl openssl openssl-devel python-psycopg2 MySQL-python python-devel telnet pcre-devel gcc gcc-c++

1.3 Configure a Local yum Repository

Note: this step only needs to be run on the master node.

1.3.1 Install httpd via yum

#Install httpd

yum -y install httpd

#Check httpd status

systemctl status httpd.service

#Start httpd

service httpd start

#Start httpd automatically at boot

chkconfig httpd on

1.3.2 Install yum-utils and createrepo

#Install yum-utils and createrepo

yum -y install yum-utils createrepo

#Go to the httpd web root, which serves the repository

cd /var/www/html/

#Create the cm directory

mkdir cm

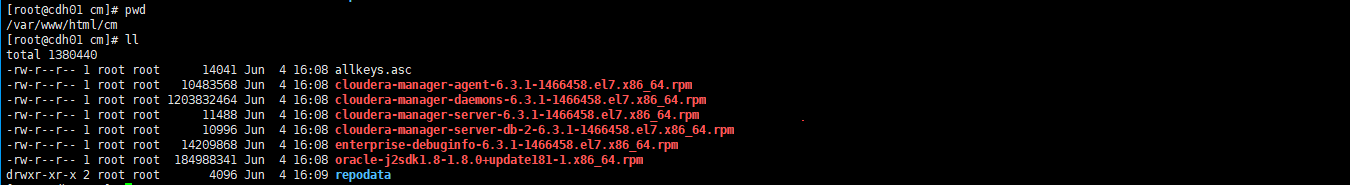

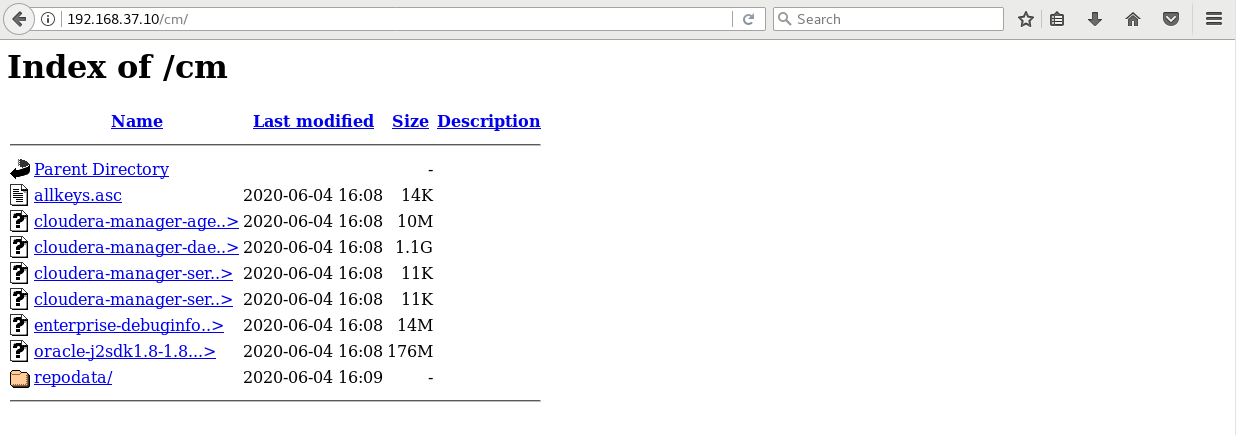

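1.3.3 Add the Downloaded Packages to the Local yum Repository

Download the required files as described in "2.1 Download CM"; they go into the local yum repository, which serves them to the other nodes.

After the files are in place, run createrepo to build the repository metadata; it creates the repodata directory: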

createrepo /var/www/html/cm/

1.3.4 Create the Local .repo File

vim /etc/yum.repos.d/cloudera-manager.repo

---->Add the following content (change the IP to your local yum host's IP; it must be an IP address, not a hostname)

[cloudera-manager]

name=Cloudera Manager, Version yum

baseurl=http://192.168.37.10/cm

gpgcheck=0

enabled=1

----<
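Because baseurl must use an IP address rather than a hostname, it can help to generate the file instead of typing it. This Python sketch (the function name is hypothetical) renders the same cloudera-manager.repo content shown above and refuses a baseurl host that is not an IP address.

```python
import ipaddress


def render_cm_repo(repo_host_ip: str, path: str = "cm") -> str:
    """Render /etc/yum.repos.d/cloudera-manager.repo for the local httpd repository."""
    # ip_address() raises ValueError for hostnames such as "cdh01.cm".
    ipaddress.ip_address(repo_host_ip)
    return (
        "[cloudera-manager]\n"
        "name=Cloudera Manager, Version yum\n"
        f"baseurl=http://{repo_host_ip}/{path}\n"
        "gpgcheck=0\n"
        "enabled=1\n"
    )


if __name__ == "__main__":
    print(render_cm_repo("192.168.37.10"), end="")
```

Redirecting the output to /etc/yum.repos.d/cloudera-manager.repo produces the same file as the manual edit.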

- Refresh the yum cache

yum clean all

yum makecache

- Verify

yum list | grep cloudera-manager

1.3.5 Copy the .repo File to the Slave Nodes

scp -r /etc/yum.repos.d/cloudera-manager.repo root@cdh02.cm:/etc/yum.repos.d/

scp -r /etc/yum.repos.d/cloudera-manager.repo root@cdh03.cm:/etc/yum.repos.d/

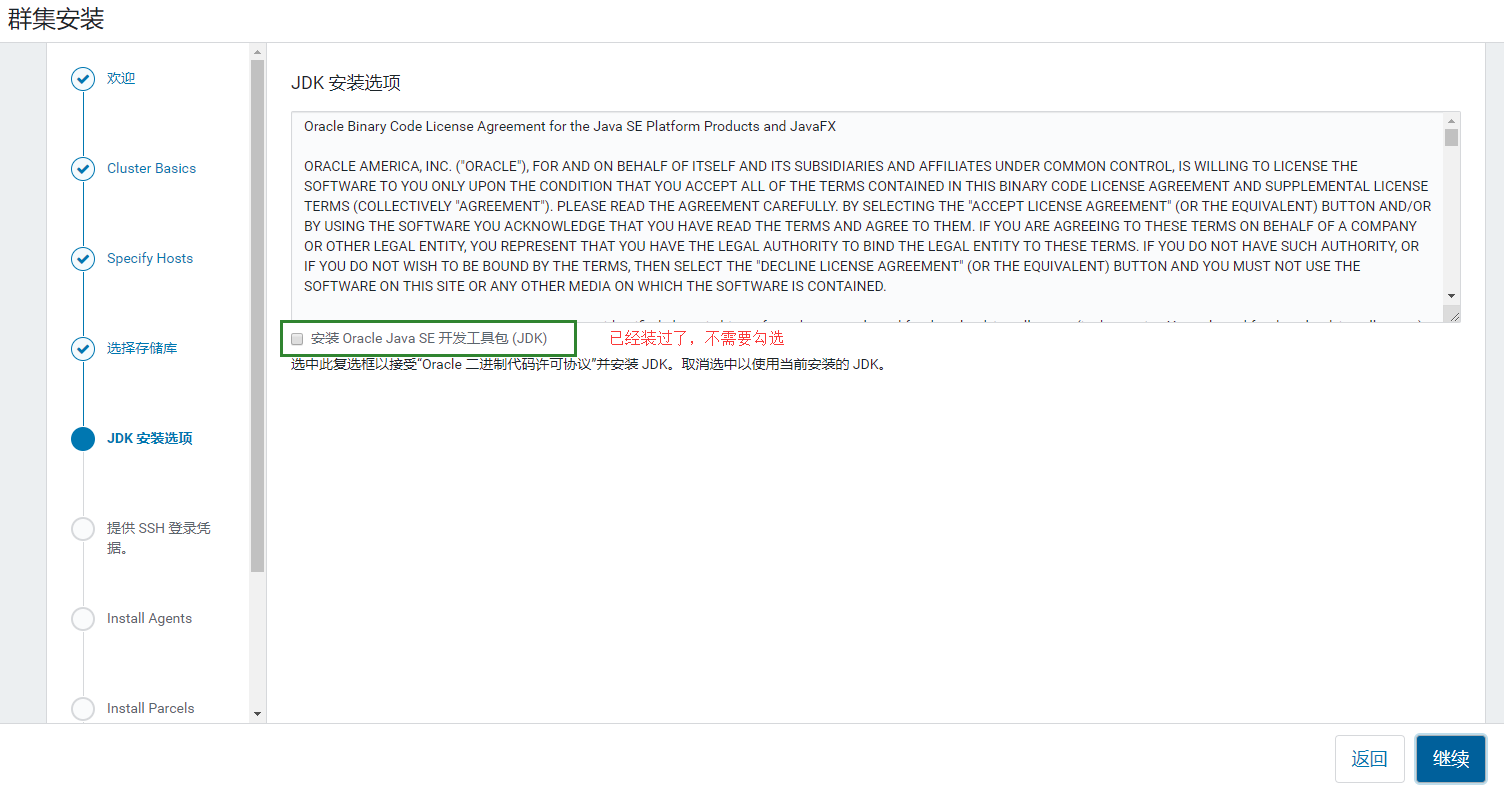

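1.4 Install the JDK (All Nodes)

#List installed Java packages

rpm -qa | grep java

#Remove a package (repeat for every OpenJDK package listed)

rpm -e --nodeps xxx

- Upload oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm to every node and install it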

rpm -ivh oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm

- Edit the configuration file

vim /etc/profile

--->Append

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

---<

- Reload the profile

source /etc/profile

- Verify

java -version

javac -version

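1.5 Install MySQL

MySQL stores Cloudera Manager's data, so installing it on the master node is enough.

- First, upload mysql-connector-java-5.1.47.jar to /usr/share/java on every server (create the directory if it does not exist) and rename it to mysql-connector-java.jar; otherwise errors will occur later.

- Extract mysql-5.7.11-linux-glibc2.5-x86_64.tar.gz into /usr/local, rename the extracted directory to mysql, and then run the following commands:

#Create the archive (binlog), data, temp, and relay-log directories referenced in /etc/my.cnf

mkdir /usr/local/mysql/arch /usr/local/mysql/data /usr/local/mysql/tmp /usr/local/mysql/relay_log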

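#Create the mysql user and give it ownership of the installation

useradd mysql

chown -R mysql:mysql /usr/local/mysql

#Install Perl packages via yum

yum -y install perl perl-devel

- Edit /etc/my.cnf (vi /etc/my.cnf)

Delete the existing settings and add the following: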

[client]

port=3306

socket=/usr/local/mysql/data/mysql.sock

default-character-set=utf8mb4

[mysqld]

port=3306

socket=/usr/local/mysql/data/mysql.sock

skip-slave-start

skip-external-locking

key_buffer_size=256M

sort_buffer_size=2M

read_buffer_size=2M

read_rnd_buffer_size=4M

query_cache_size=32M

max_allowed_packet=16M

myisam_sort_buffer_size=128M

tmp_table_size=32M

table_open_cache=512

thread_cache_size=8

wait_timeout=86400

interactive_timeout=86400

max_connections=600

# Try number of CPU's*2 for thread_concurrency

#thread_concurrency=32

#isolation level and default engine

default-storage-engine=INNODB

transaction-isolation=READ-COMMITTED

server-id=1739

basedir=/usr/local/mysql

datadir=/usr/local/mysql/data

pid-file=/usr/local/mysql/data/hostname.pid

#open performance schema

log-warnings

sysdate-is-now

binlog_format=ROW

log_bin_trust_function_creators=1

log-error=/usr/local/mysql/data/hostname.err

log-bin=/usr/local/mysql/arch/mysql-bin

expire_logs_days=7

relay-log=/usr/local/mysql/relay_log/relay-log

relay-log-index=/usr/local/mysql/relay_log/relay-log.index

relay_log_info_file=/usr/local/mysql/relay_log/relay-log.info

log_slave_updates=1

gtid_mode=OFF

enforce_gtid_consistency=OFF

# slave

slave-parallel-type=LOGICAL_CLOCK

slave-parallel-workers=4

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log_recovery=ON

#other logs

#general_log=1

#general_log_file=/usr/local/mysql/data/general_log.err

#slow_query_log=1

#slow_query_log_file=/usr/local/mysql/data/slow_log.err

#for replication slave

sync_binlog=500

#for innodb options

innodb_data_home_dir=/usr/local/mysql/data/

innodb_data_file_path=ibdata1:1G;ibdata2:1G:autoextend

innodb_log_group_home_dir=/usr/local/mysql/arch

innodb_log_files_in_group=4

innodb_log_file_size=1G

innodb_log_buffer_size=200M

#Adjust the buffer pool size to your production needs

innodb_buffer_pool_size=2G

#innodb_additional_mem_pool_size=50M #deprecated in 5.6

tmpdir=/usr/local/mysql/tmp

innodb_lock_wait_timeout=1000

#innodb_thread_concurrency=0

innodb_flush_log_at_trx_commit=2

innodb_locks_unsafe_for_binlog=1

#innodb io features: add for mysql5.5.8

performance_schema

innodb_read_io_threads=4

innodb-write-io-threads=4

innodb-io-capacity=200

#purge threads change default(0) to 1 for purge

innodb_purge_threads=1

innodb_use_native_aio=on

#one tablespace file per table; table names stored in lowercase and compared case-insensitively

innodb_file_per_table=1

lower_case_table_names=1

[mysqldump]

quick

max_allowed_packet=128M

[mysql]

no-auto-rehash

default-character-set=utf8mb4

[mysqlhotcopy]

interactive-timeout

[myisamchk]

key_buffer_size=256M

sort_buffer_size=256M

read_buffer=2M

write_buffer=2M

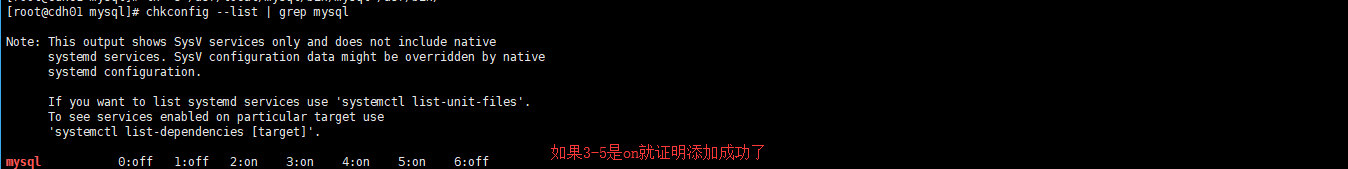

- Register the service and enable it at boot

cd /usr/local/mysql

#Copy the service script into init.d under the name mysql

cp support-files/mysql.server /etc/rc.d/init.d/mysql

#Make it executable

chmod +x /etc/rc.d/init.d/mysql

#Remove any previously registered service

chkconfig --del mysql

#Add the service

chkconfig --add mysql

chkconfig --level 345 mysql on

#Create a symlink so the mysql client is on the PATH

ln -s /usr/local/mysql/bin/mysql /usr/bin/

#Check the service

chkconfig --list | grep mysql

- Initialize the MySQL data directory

#Run the following command and wait for it to finish

/usr/local/mysql/bin/mysqld \

--defaults-file=/etc/my.cnf \

--user=mysql \

--basedir=/usr/local/mysql/ \

--datadir=/usr/local/mysql/data/ \

--initialize

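If you initialize with --initialize-insecure instead, a root@localhost account with an empty password is created; otherwise root@localhost gets a randomly generated password, which is written to the log-error file.

(In 5.6 the password was stored in ~/.mysql_secret, which is easy to miss if you are not familiar with it.)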

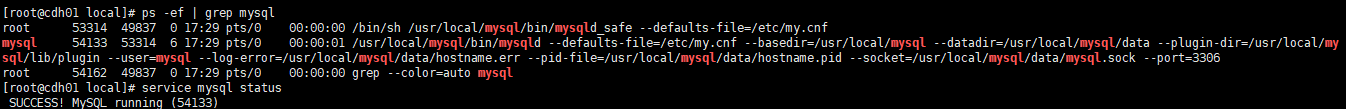

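- Start MySQL

#Press Enter after the command below returns

/usr/local/mysql/bin/mysqld_safe --defaults-file=/etc/my.cnf &

#If the command above did not start MySQL, start it with the following (stop it with service mysql stop)

#service mysql start

#Check MySQL status

service mysql status

- Find the temporary password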

cat /usr/local/mysql/data/hostname.err |grep password
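If the error log is long, the grep above can print several lines. MySQL 5.7 records the generated password on a line containing "A temporary password is generated for root@localhost: <password>"; the Python sketch below extracts the most recent one. The log path matches the log-error setting in /etc/my.cnf above, and the function name is hypothetical.

```python
import os
import re
from typing import Optional

# Message written by mysqld --initialize in MySQL 5.7.
_TEMP_PW = re.compile(r"A temporary password is generated for root@localhost: (\S+)")


def find_temp_password(log_text: str) -> Optional[str]:
    """Return the most recent temporary root password found in the log, or None."""
    matches = _TEMP_PW.findall(log_text)
    return matches[-1] if matches else None


if __name__ == "__main__":
    log_path = "/usr/local/mysql/data/hostname.err"
    if os.path.exists(log_path):
        with open(log_path, encoding="utf-8", errors="replace") as f:
            print(find_temp_password(f.read()))
    else:
        print(f"{log_path} not found; run this on the MySQL host")
```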

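- Log in and change the root password

mysql -u root -p

#Enter the password from the log

#Set the password to root

set password for 'root'@'localhost'=password('root');

#Allow remote access

grant all privileges on *.* to 'root'@'%' identified by 'root' with grant option;

use mysql

#Remove the non-% root accounts only (keep system accounts such as mysql.sys)

delete from user where user='root' and host!='%';

#Reload privileges

flush privileges;

#Exit

quit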

- 登录创建cm需要的库的用户

| 服务名 | 数据库名 | 用户名 |

|---|---|---|

| Cloudera Manager Server | scm | scm |

| Activity Monitor | amon | amon |

| Reports Manager | rman | rman |

| Hue | hue | hue |

| Hive Metastore Server | metastore | hive |

| Sentry Server | sentry | sentry |

| Cloudera Navigator Audit Server | nav | nav |

| Cloudera Navigator Metadata Server | navms | navms |

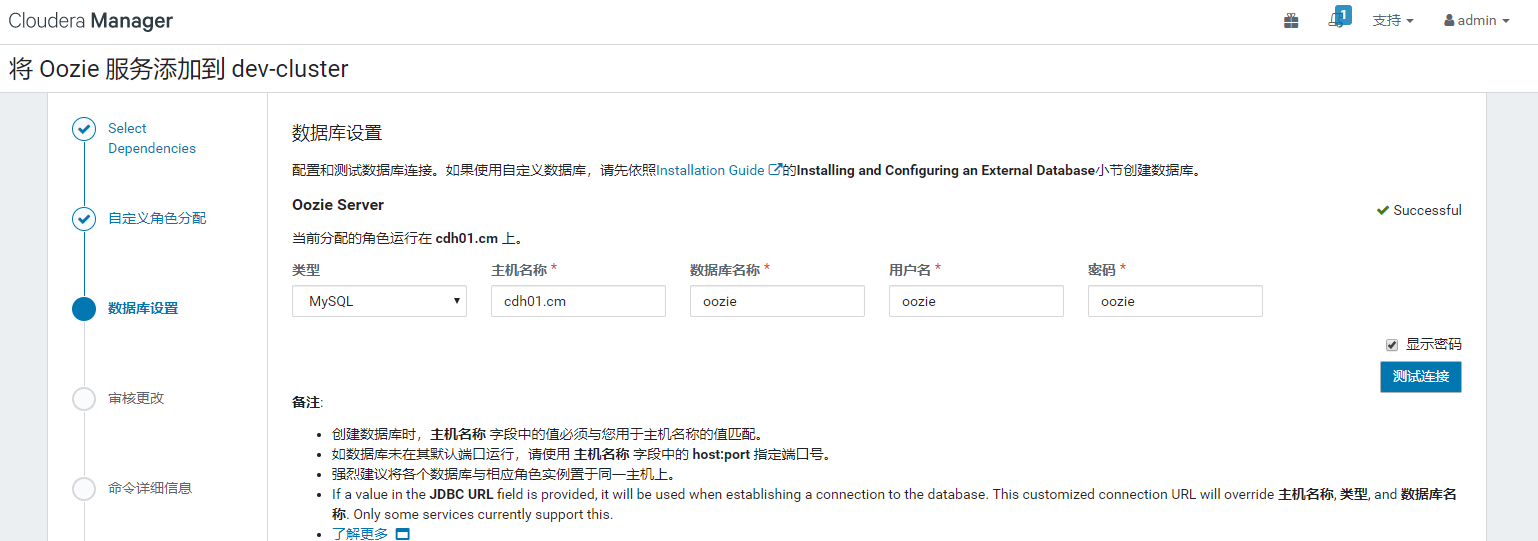

| Oozie | oozie | oozie |

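#Log in to MySQL (enter the new password)

mysql -u root -p

#Skip the scm database and its grant for now; they are created automatically later when the database is specified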

#CREATE DATABASE scm DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE amon DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE rman DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE metastore DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE hue DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE oozie DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

#GRANT ALL ON scm.* TO 'scm'@'%' IDENTIFIED BY 'scm';

GRANT ALL ON amon.* TO 'amon'@'%' IDENTIFIED BY 'amon';

GRANT ALL ON rman.* TO 'rman'@'%' IDENTIFIED BY 'rman';

GRANT ALL ON metastore.* TO 'hive'@'%' IDENTIFIED BY 'hive';

GRANT ALL ON hue.* TO 'hue'@'%' IDENTIFIED BY 'hue';

GRANT ALL ON oozie.* TO 'oozie'@'%' IDENTIFIED BY 'oozie';

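#####Note: also grant access for this host's hostname, otherwise the web UI configuration easily fails; replace <local-hostname> with this machine's hostname

GRANT ALL ON amon.* TO 'amon'@'<local-hostname>' IDENTIFIED BY 'amon';

#Reload privileges

FLUSH PRIVILEGES;

#Check that the grants are correct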

show grants for 'amon'@'%';

show grants for 'rman'@'%';

show grants for 'hive'@'%';

show grants for 'hue'@'%';

show grants for 'oozie'@'%';

#Exit

quit

#Restart the service

service mysql restart
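The per-service statements above follow a single pattern, so they can be generated from the service table instead of typed by hand. The Python sketch below prints CREATE DATABASE and GRANT statements for a {database: user} mapping. As in this guide, each password equals the user name, which is only acceptable on a test cluster. The function and constant names are hypothetical, and scm is left out because scm_prepare_database.sh handles it in section 2.6.

```python
from typing import Dict, List

# Databases created manually in this guide, mapped to their users.
SERVICE_DBS: Dict[str, str] = {
    "amon": "amon",
    "rman": "rman",
    "metastore": "hive",
    "hue": "hue",
    "oozie": "oozie",
}


def render_cm_sql(dbs: Dict[str, str]) -> List[str]:
    """Build MySQL 5.7 statements: one database plus one user grant per service."""
    statements = [
        f"CREATE DATABASE {db} DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;"
        for db in dbs
    ]
    statements += [
        # Password equals the user name, matching the commands above (test use only).
        f"GRANT ALL ON {db}.* TO '{user}'@'%' IDENTIFIED BY '{user}';"
        for db, user in dbs.items()
    ]
    statements.append("FLUSH PRIVILEGES;")
    return statements


if __name__ == "__main__":
    print("\n".join(render_cm_sql(SERVICE_DBS)))
```

Save the output to a file and feed it to mysql -u root -p to run all statements at once.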

二 CM+CDH安装

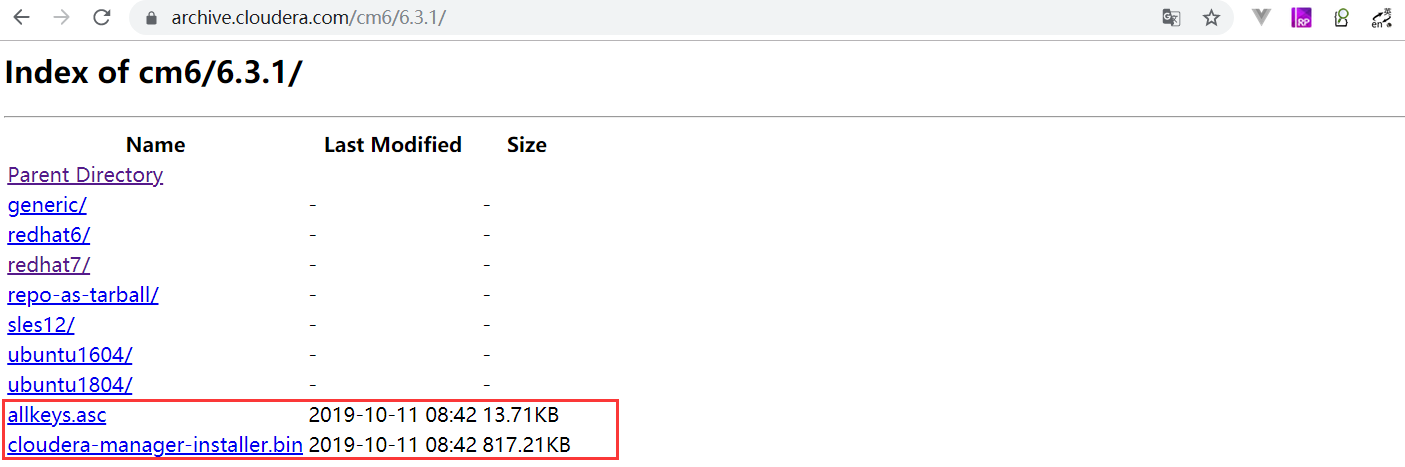

2.1 CM下载

红框中都需要下载

2.2 Install the daemons, agent, and server via yum

Master node

yum list | grep cloudera-manager

yum -y install cloudera-manager-daemons cloudera-manager-agent cloudera-manager-server

Slave nodes

yum list | grep cloudera-manager

yum -y install cloudera-manager-daemons cloudera-manager-agent

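Note: if the slave nodes cannot find the packages, the firewall may be the cause; make sure it is disabled.

2.3 Upload cloudera-manager-installer.bin to the master node and make it executable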

mkdir /usr/software

cd /usr/software

chmod +x cloudera-manager-installer.bin

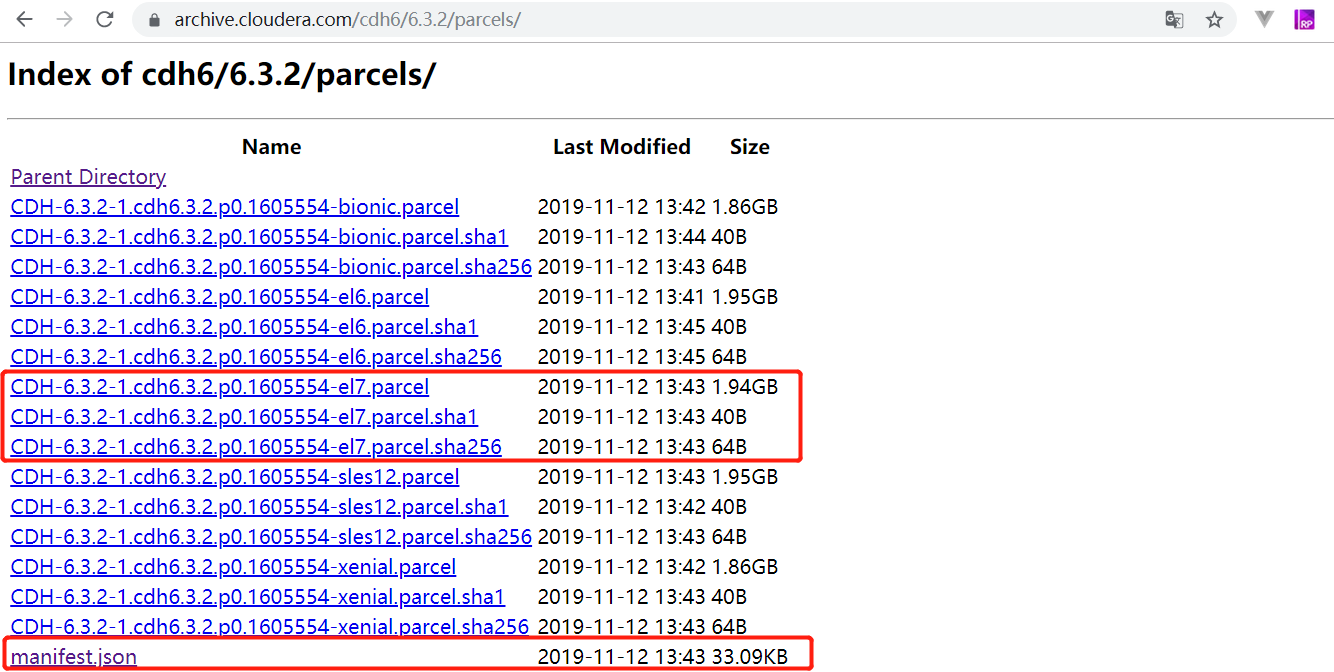

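2.4 Download CDH

Download URL: https://archive.cloudera.com/cdh6/6.3.2/parcels/

Download everything marked with the red boxes.

2.5 Configure the Local Parcel Repository

- Upload the downloaded CDH files to the following directory (master node)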

cd /opt/cloudera/parcel-repo/

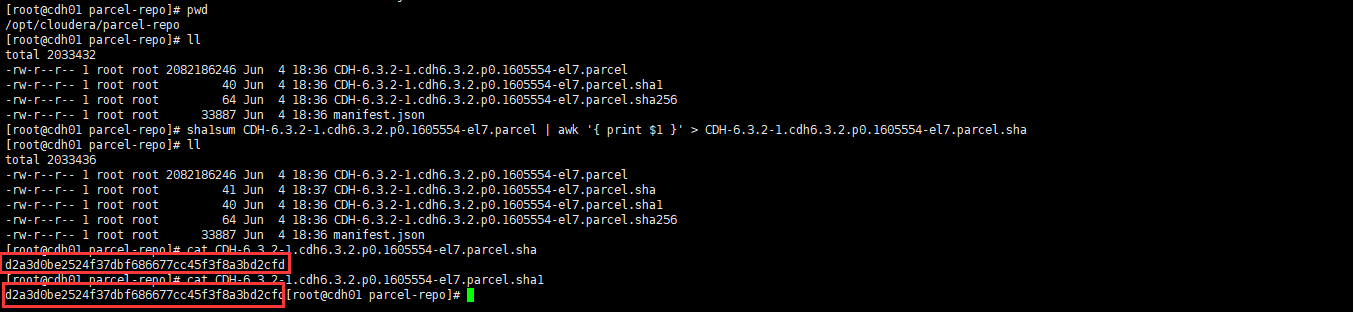

- Create the .sha checksum file that Cloudera Manager uses to validate the parcel

sha1sum CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel | awk '{ print $1 }' > CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel.sha

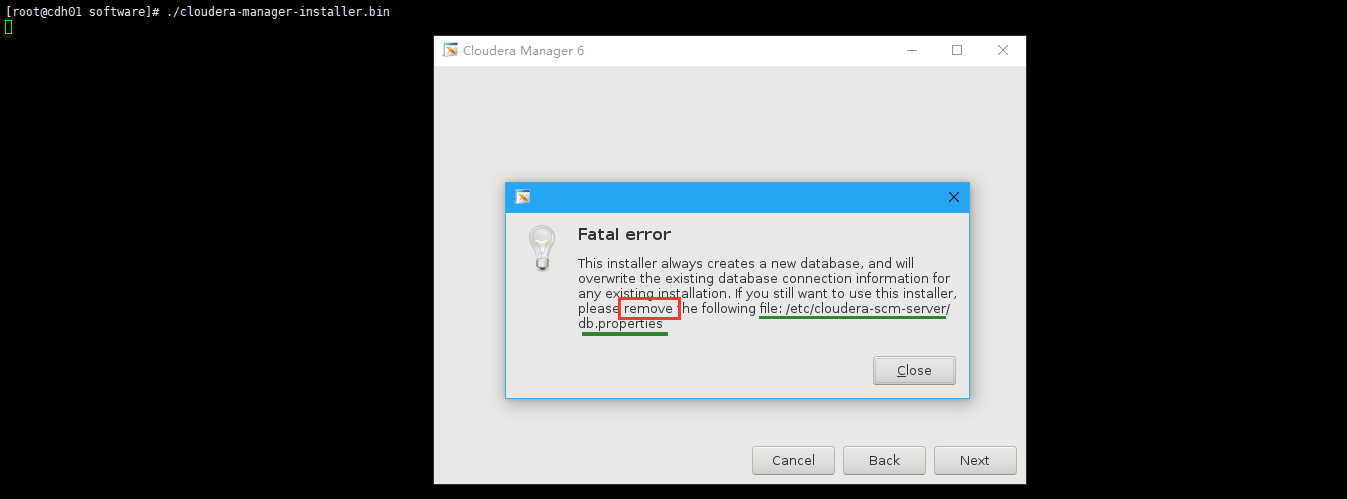

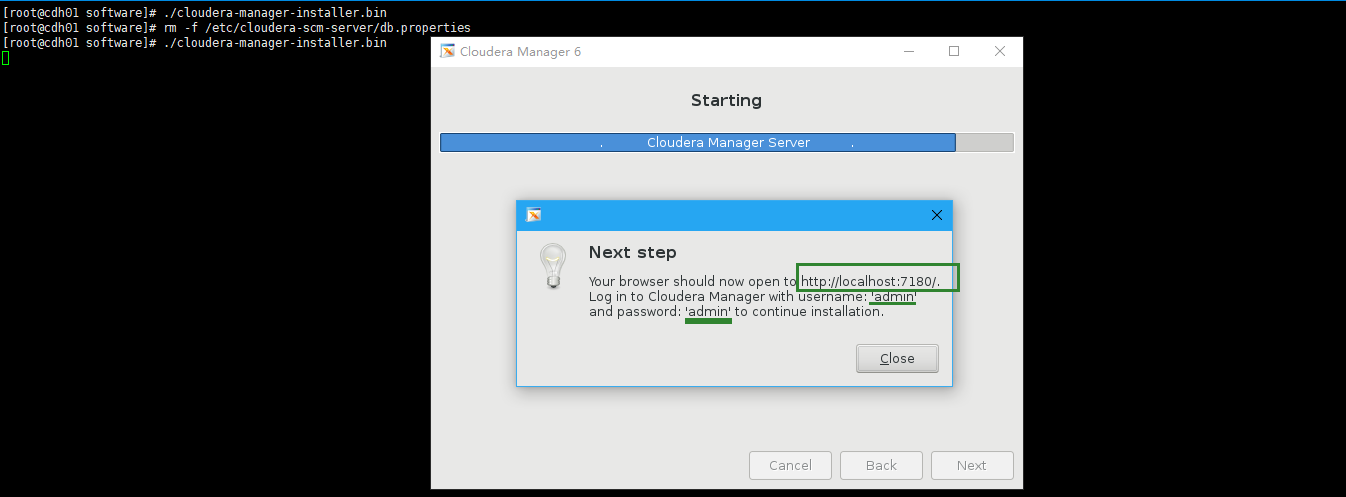
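Note that the sha1sum command above only records whatever hash the local file happens to have; on its own it does not prove the download is complete. To actually verify it, compare the computed SHA-1 with the checksum published next to the parcel on the download page before writing the .sha file. A Python sketch follows; the expected checksum must come from the download site, and the function names are hypothetical.

```python
import hashlib
from typing import Optional


def sha1_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so a multi-GB parcel never has to fit in memory."""
    digest = hashlib.sha1()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_and_write_sha(parcel_path: str, expected: Optional[str] = None) -> str:
    """Compute the parcel's SHA-1, compare it with `expected` if given, then write <parcel>.sha."""
    actual = sha1_of(parcel_path)
    if expected is not None and actual != expected.strip().lower():
        raise ValueError(f"checksum mismatch: expected {expected}, got {actual}")
    # Same content as the awk pipeline above: the bare hex digest plus a newline.
    with open(parcel_path + ".sha", "w", encoding="ascii") as f:
        f.write(actual + "\n")
    return actual
```

For example, call verify_and_write_sha("/opt/cloudera/parcel-repo/CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel", expected="<SHA-1 from the download page>"); a mismatch raises an error instead of silently writing a wrong .sha file.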

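2.6 Run the Installer

cd /usr/software

./cloudera-manager-installer.bin

#Delete the existing database configuration file so the installer can proceed

rm -f /etc/cloudera-scm-server/db.properties

#Run the installer again (answer yes at every prompt)

./cloudera-manager-installer.bin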

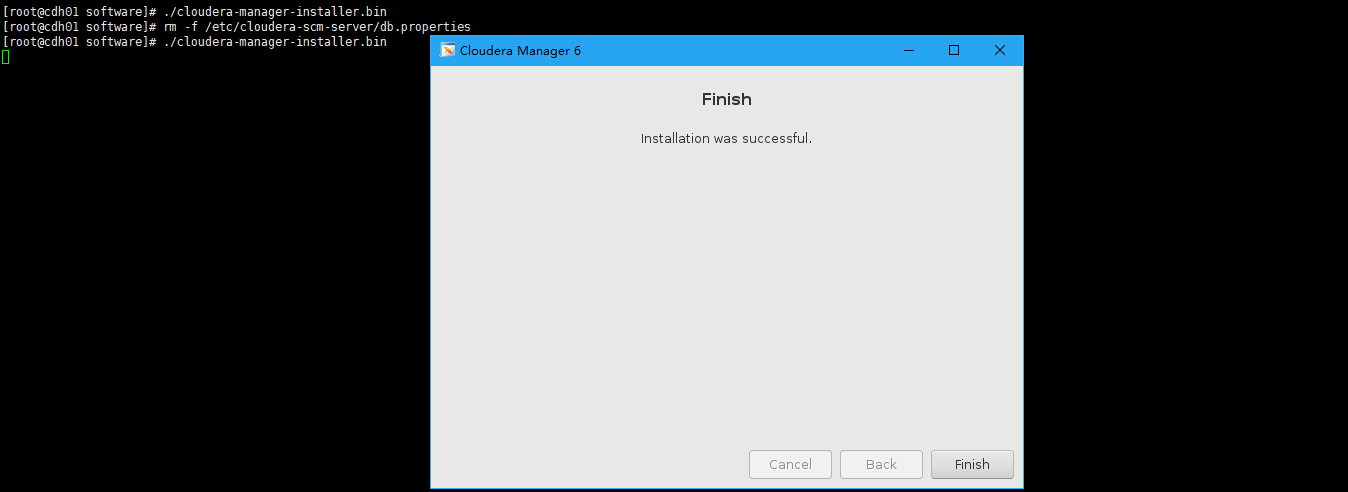

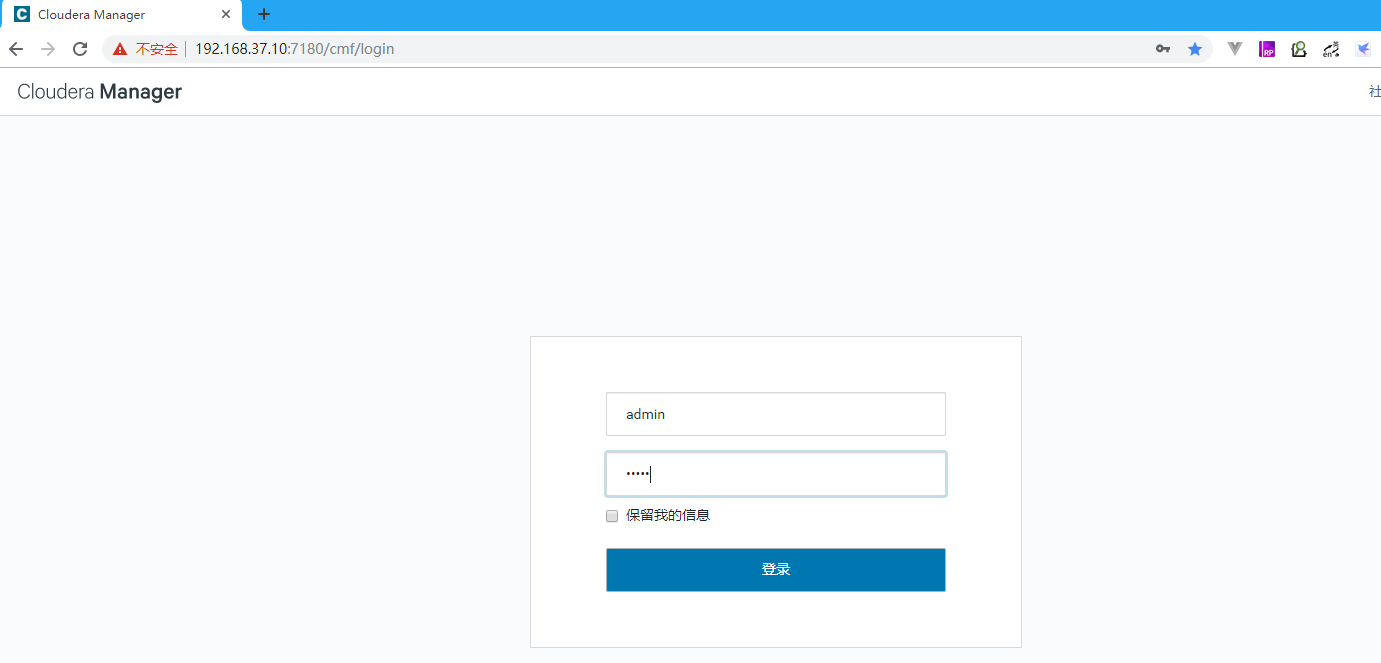

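The installation is now complete, and you could log in to the web UI on port 7180 of the host IP (http://<master-IP>:7180) with username/password admin/admin. Don't log in and configure anything yet, though: first switch the database connection to MySQL and restart cloudera-scm-server, as follows:

- 1) Run scm_prepare_database.sh

#Set up the Cloudera Manager database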

/opt/cloudera/cm/schema/scm_prepare_database.sh mysql -uroot -p'root' scm scm scm

#Log in to MySQL (with the password root set above)

mysql -uroot -proot

GRANT ALL ON scm.* TO 'scm'@'%' IDENTIFIED BY 'scm';

FLUSH PRIVILEGES;

show grants for 'scm'@'%';

quit

- 2) Stop the Cloudera Manager services

service cloudera-scm-server stop

service cloudera-scm-server-db stop

- 3) Delete the configuration of the embedded default PostgreSQL database

rm -f /etc/cloudera-scm-server/db.mgmt.properties

- 4) Start the Cloudera Manager service

service cloudera-scm-server start

- If there are problems, check the log

vim /var/log/cloudera-scm-server/cloudera-scm-server.log

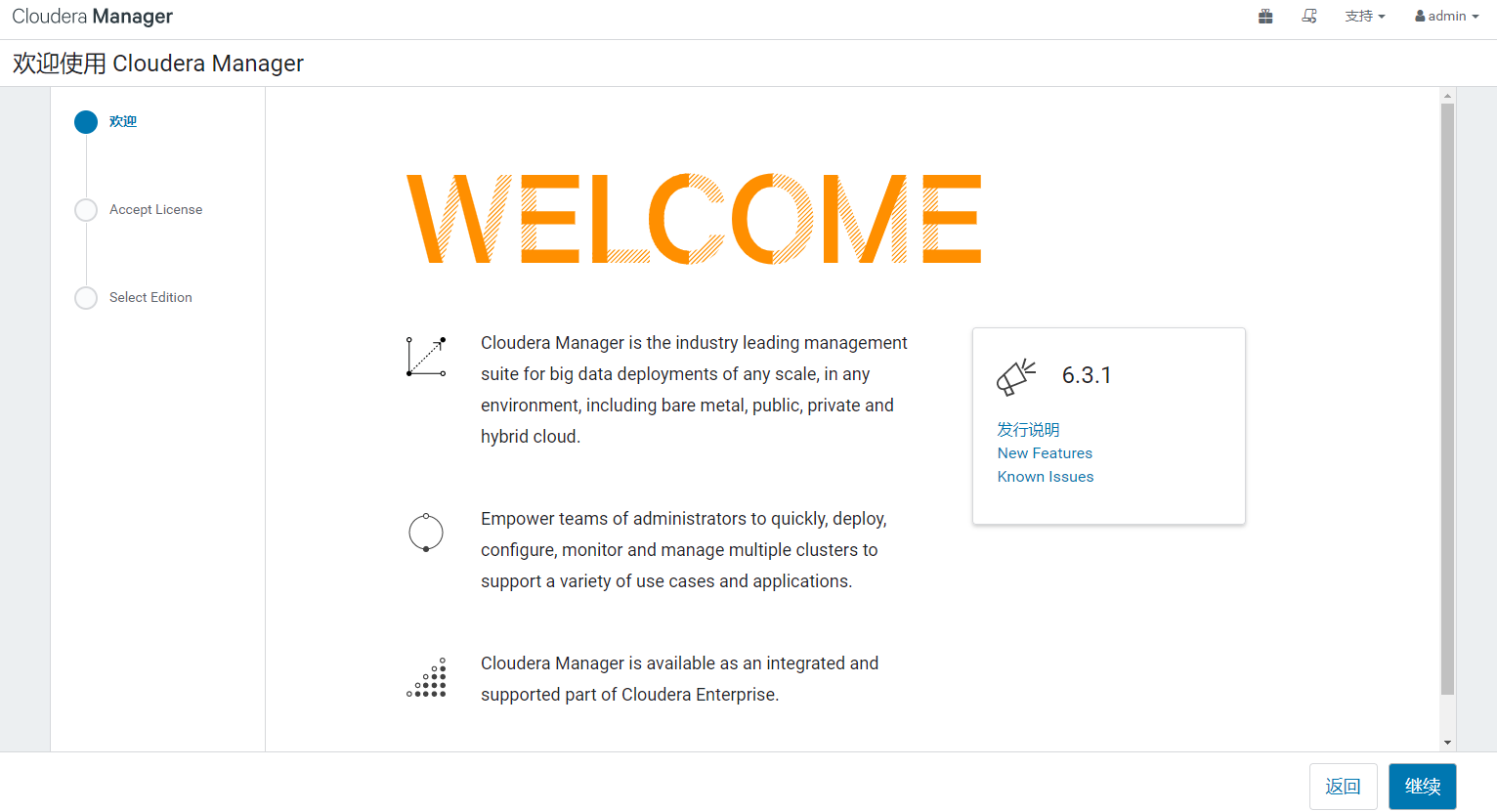

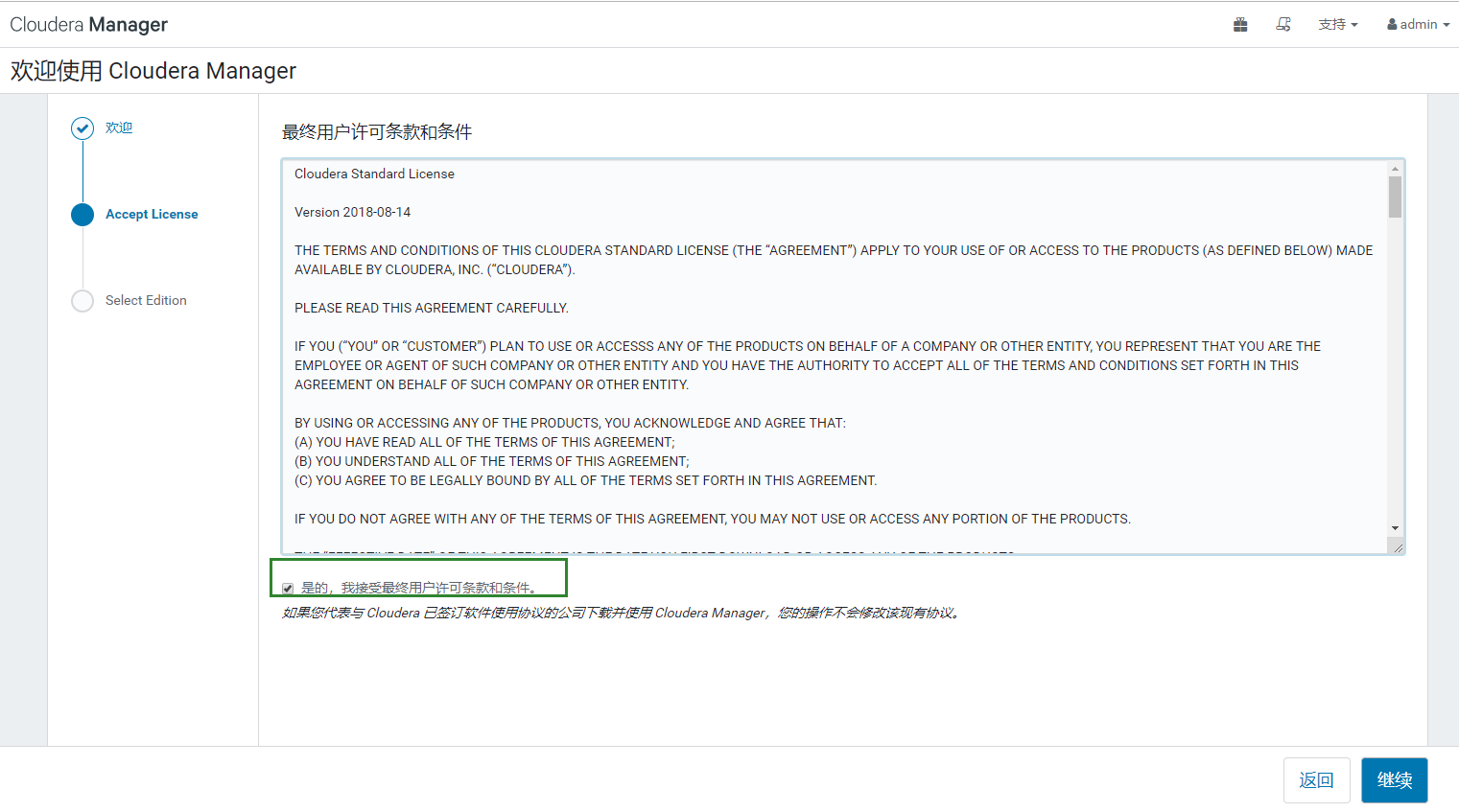

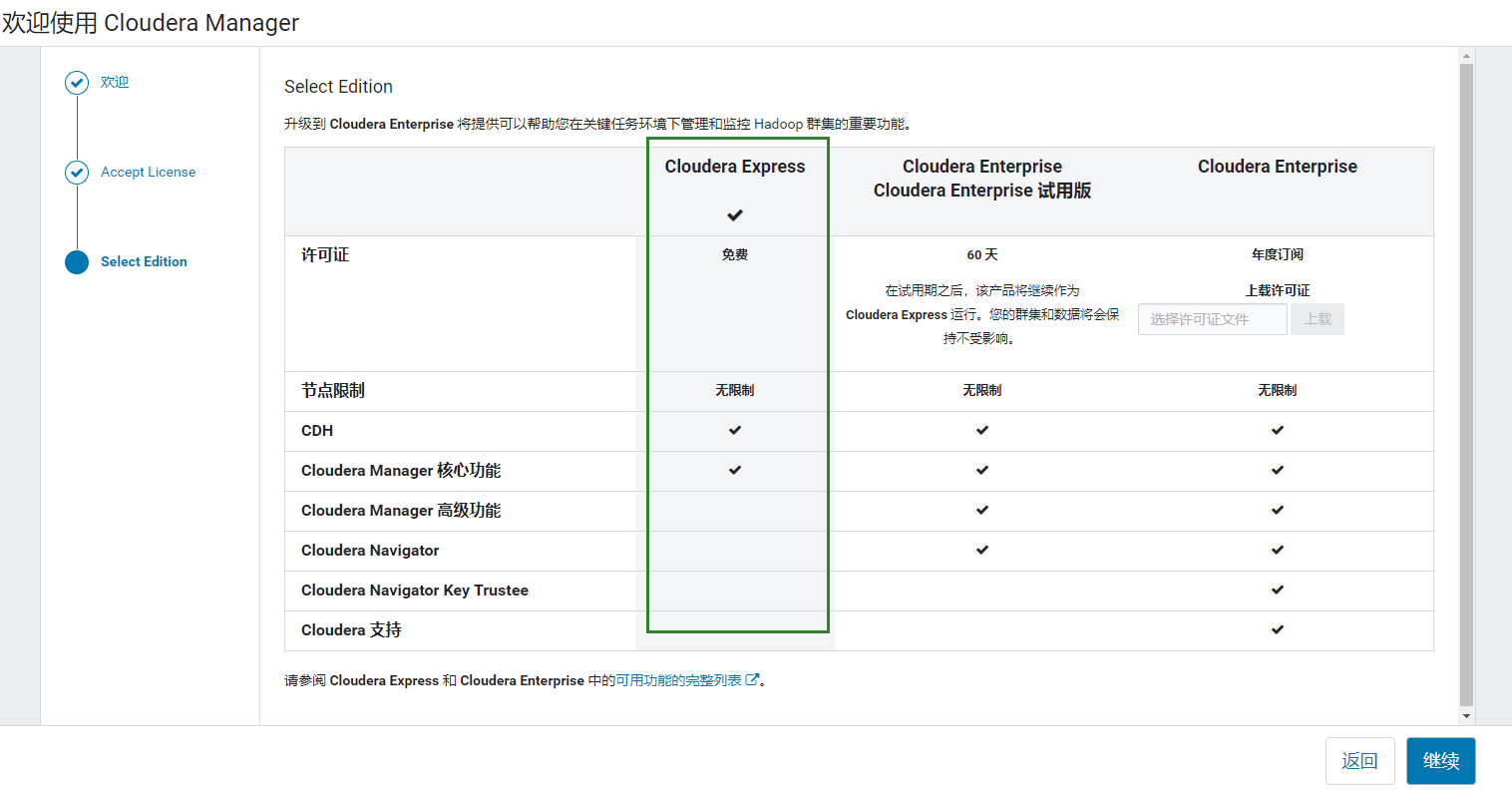

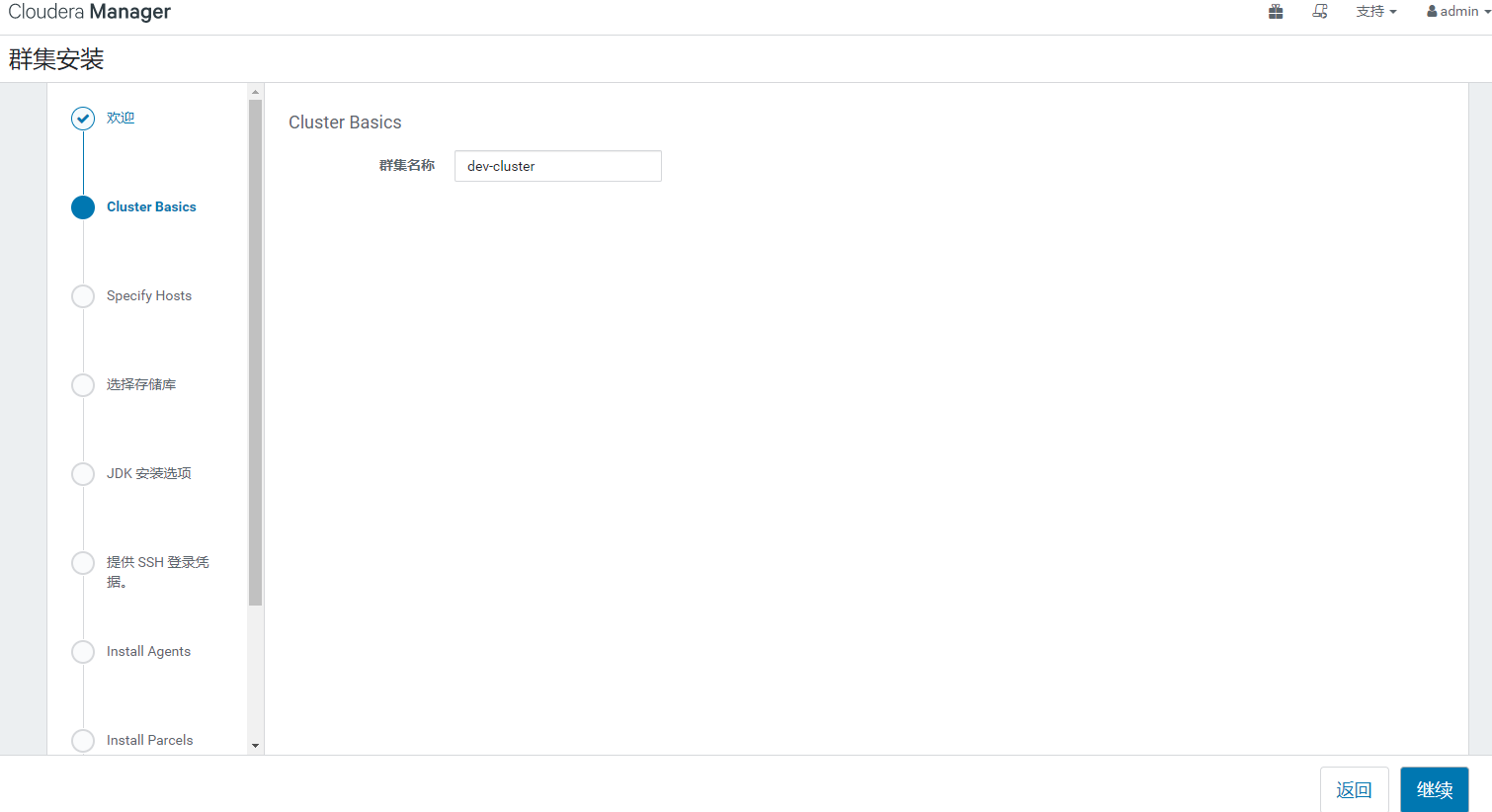

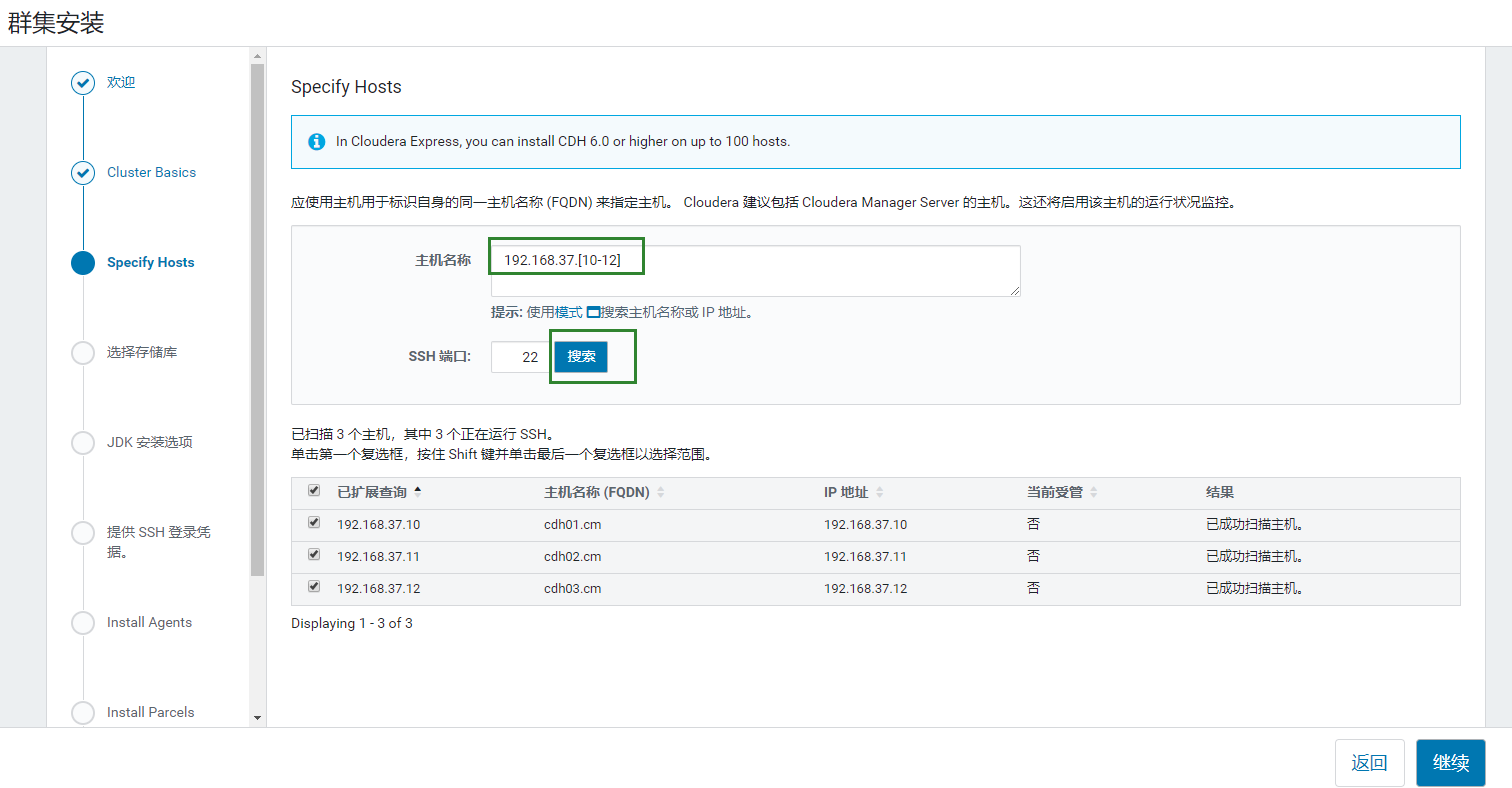

2.7 Install CDH through CM (username: admin, password: admin)

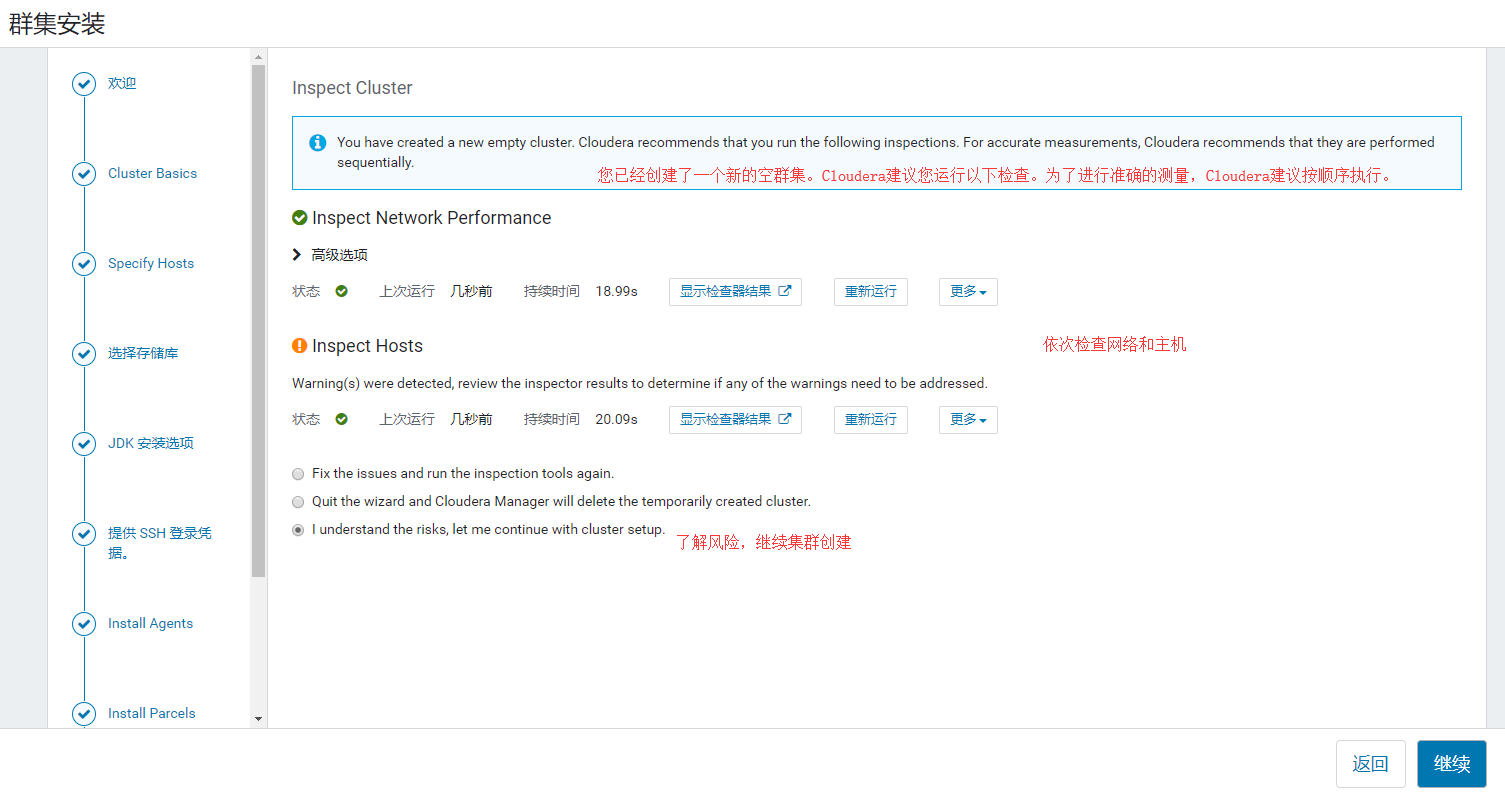

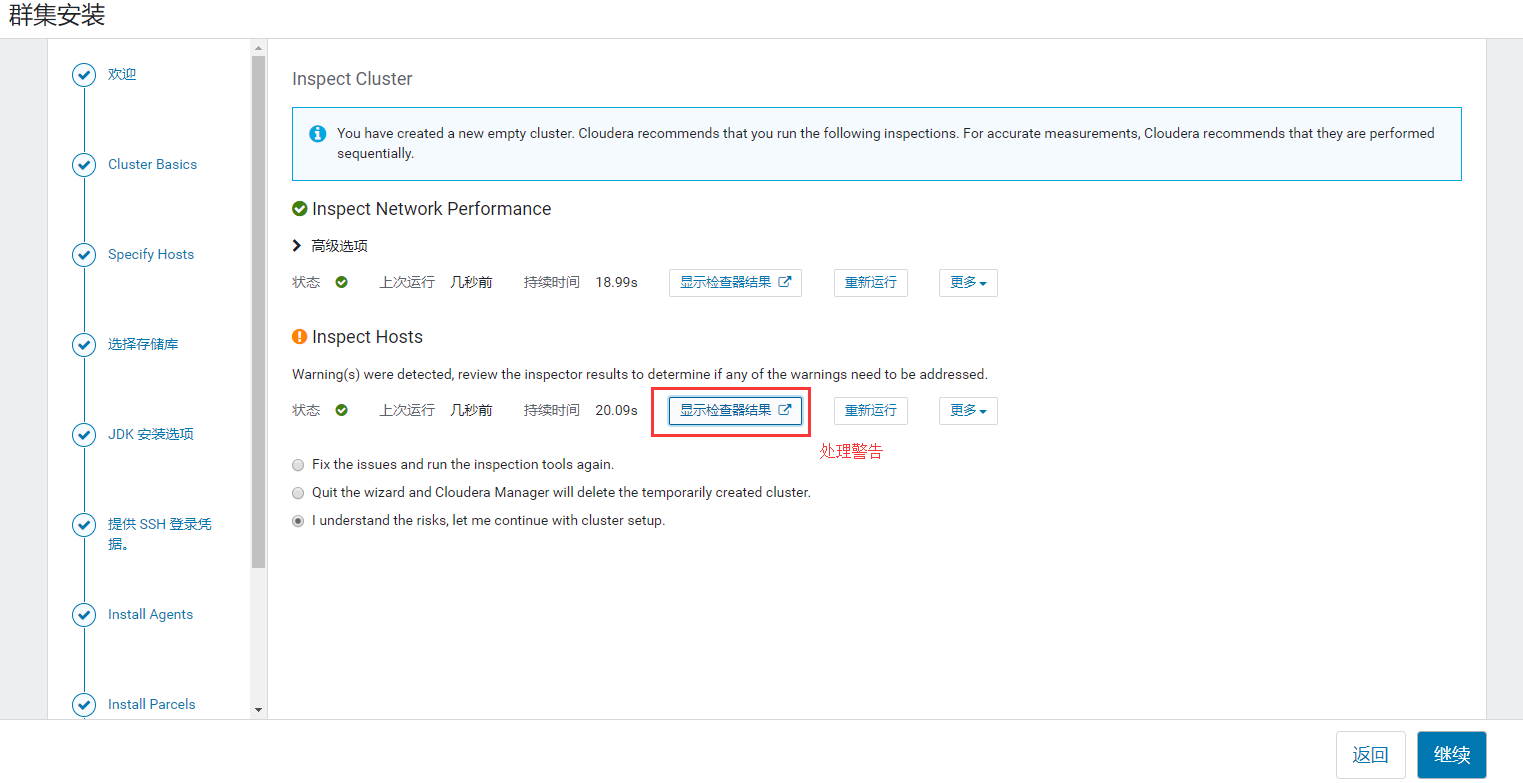

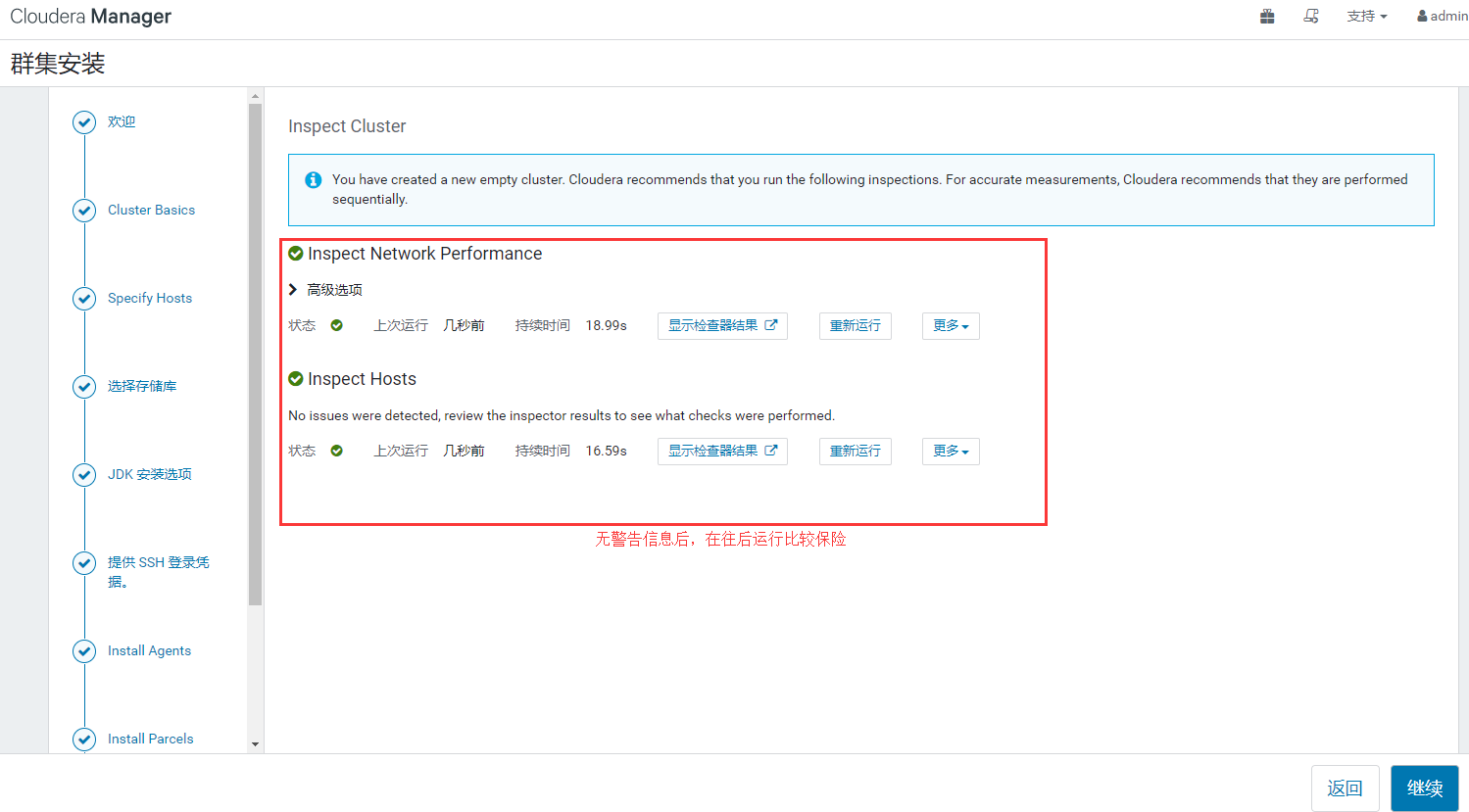

Fix the related warnings (on every machine):

- Issue 1: vm.swappiness

#Temporary (until the next reboot)

sysctl vm.swappiness=10

#Permanent

echo 'vm.swappiness=10'>> /etc/sysctl.conf

- Issue 2: transparent huge pages

echo never > /sys/kernel/mm/transparent_hugepage/defrag

echo never > /sys/kernel/mm/transparent_hugepage/enabled

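#Add the same commands to the startup script so they survive reboots (on CentOS 7, /etc/rc.d/rc.local must also be executable)

chmod +x /etc/rc.d/rc.local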

vim /etc/rc.local

--->Append

echo never > /sys/kernel/mm/transparent_hugepage/defrag

echo never > /sys/kernel/mm/transparent_hugepage/enabled

---<
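Before rerunning the inspection, you can confirm the values on each host. The kernel marks the active transparent-huge-page mode with brackets (for example `always madvise [never]`), so the Python sketch below parses that format and reads vm.swappiness from /proc. The threshold of 10 mirrors the value set above; the function names are hypothetical, and the paths assume a CentOS 7 style kernel.

```python
from pathlib import Path
from typing import Dict, Optional

THP_DIR = Path("/sys/kernel/mm/transparent_hugepage")
SWAPPINESS = Path("/proc/sys/vm/swappiness")


def active_thp_mode(text: str) -> Optional[str]:
    """Return the bracketed (active) option, e.g. 'never' from 'always madvise [never]'."""
    for token in text.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    return None


def check_host(swappiness_max: int = 10) -> Dict[str, bool]:
    """Report whether this host meets the two settings fixed above (Linux only)."""
    swappiness = int(SWAPPINESS.read_text().strip())
    report = {"swappiness_ok": swappiness <= swappiness_max}
    for name in ("enabled", "defrag"):
        mode = active_thp_mode((THP_DIR / name).read_text())
        report[f"thp_{name}_ok"] = mode == "never"
    return report


if __name__ == "__main__":
    if SWAPPINESS.exists() and THP_DIR.exists():
        print(check_host())
```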

Rerun the inspection

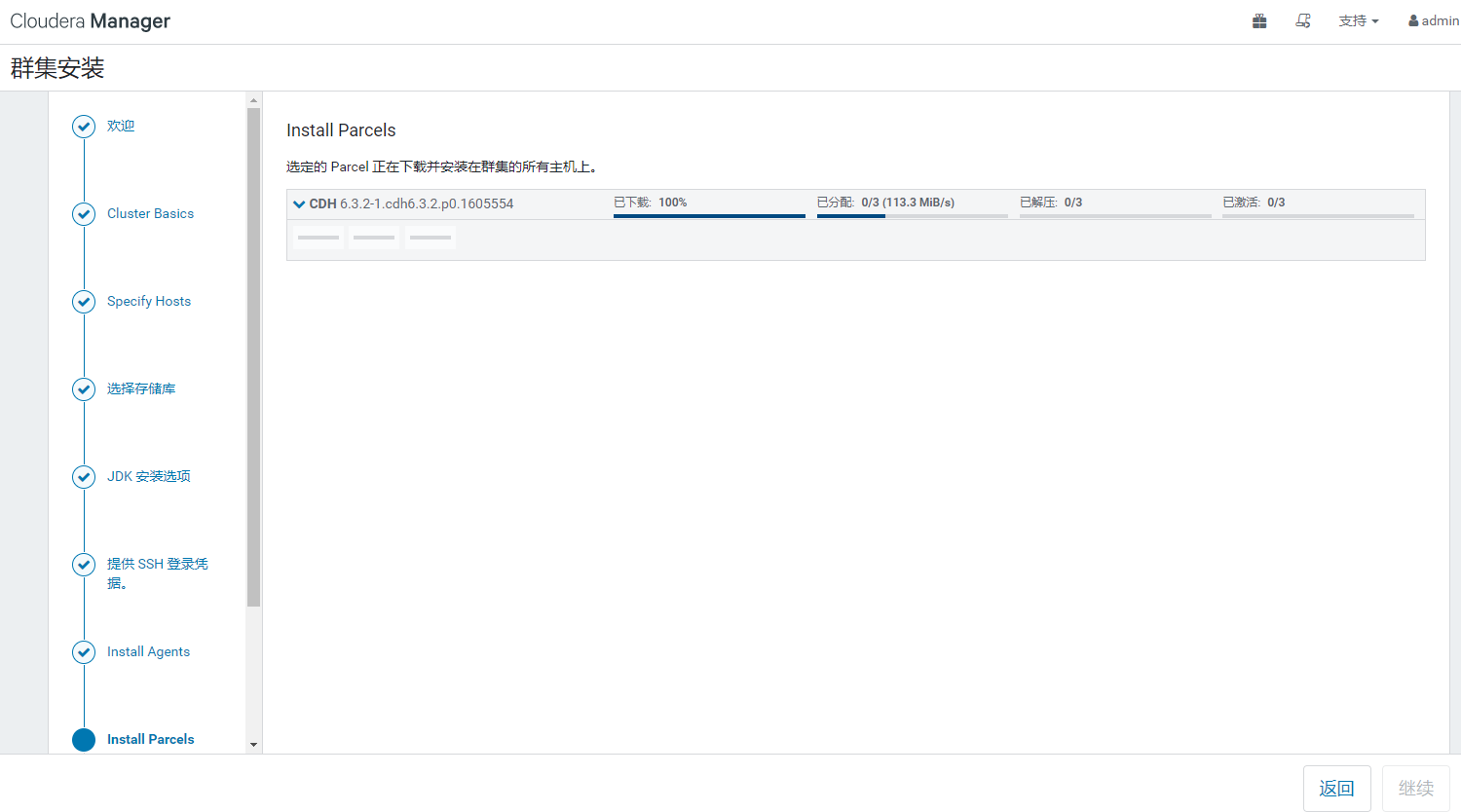

2.8 First Component Installation

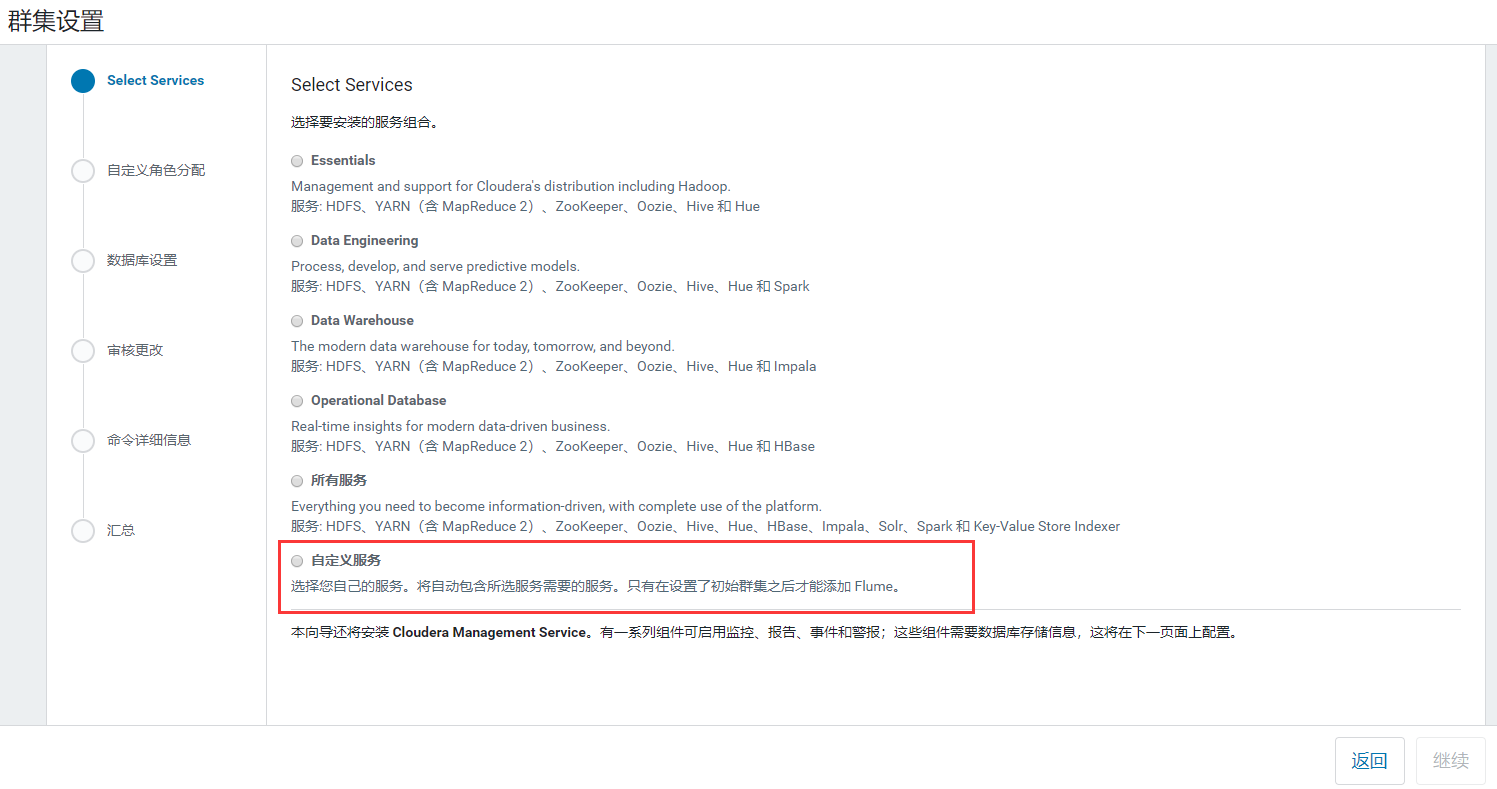

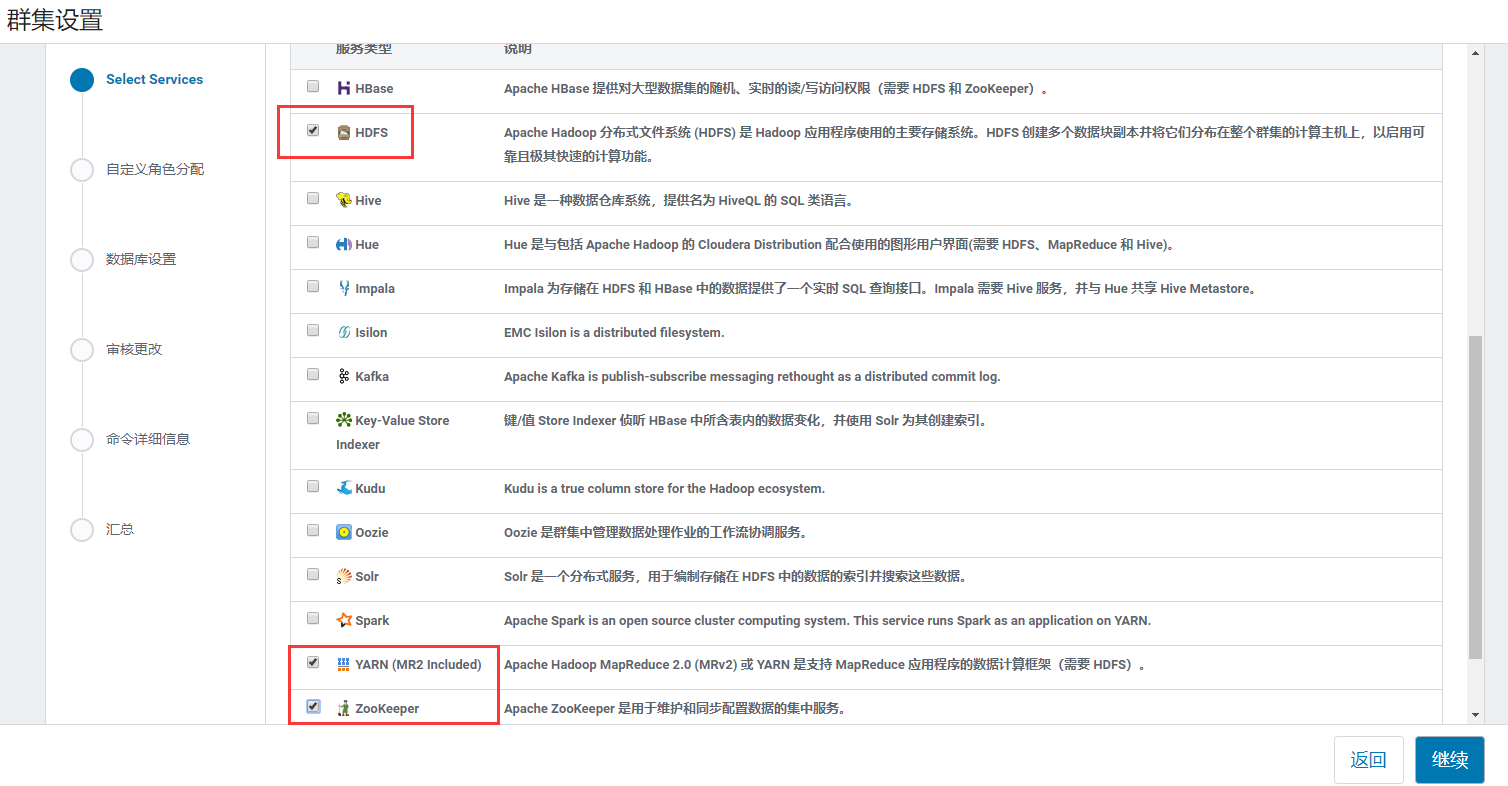

Select the components. To keep things simple, safe, and effective, choose HDFS, YARN, and ZooKeeper.

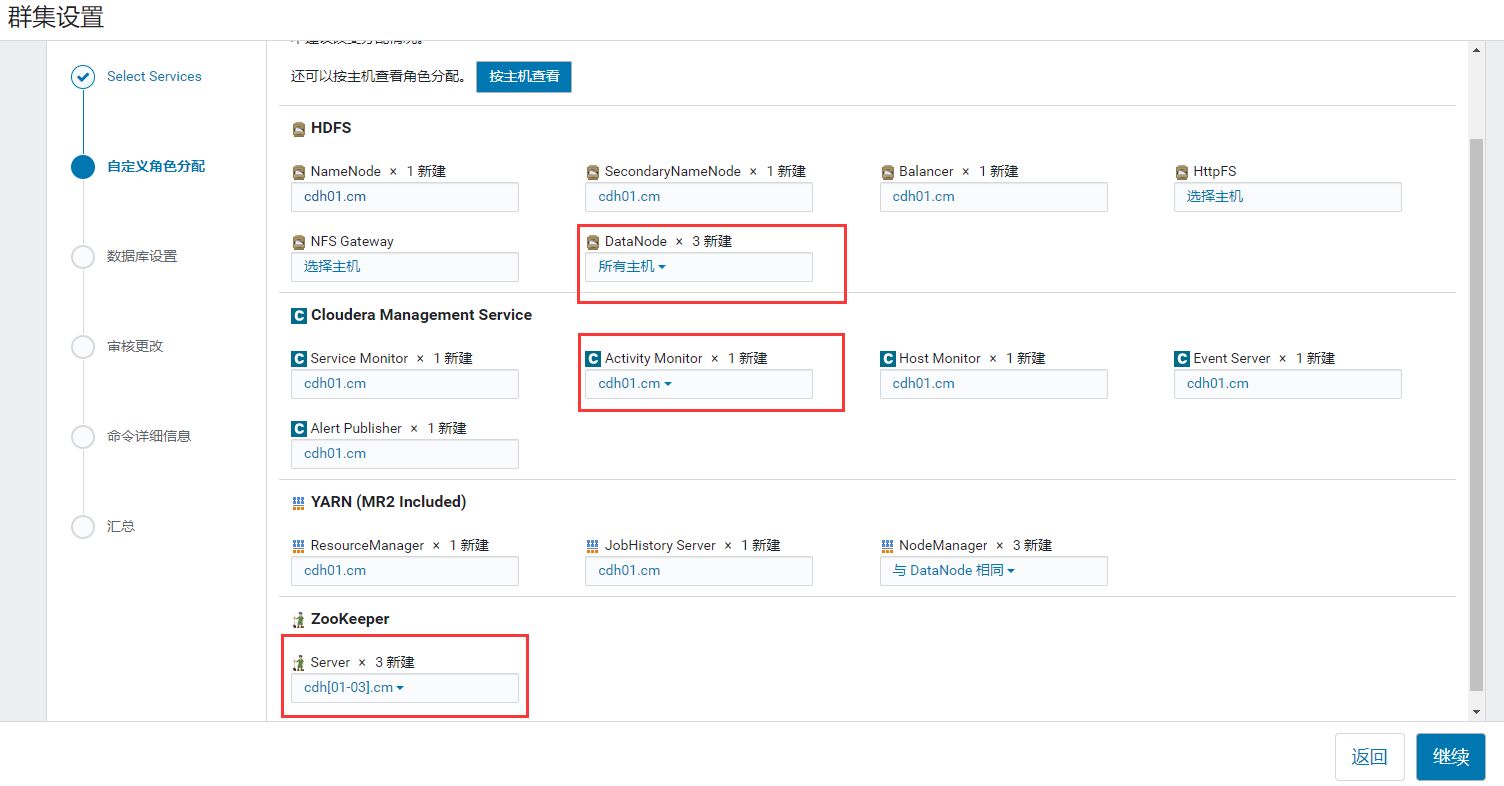

Here you can place the SecondaryNameNode and the NameNode on different machines, which helps preserve data correctness as far as possible if the NameNode fails.

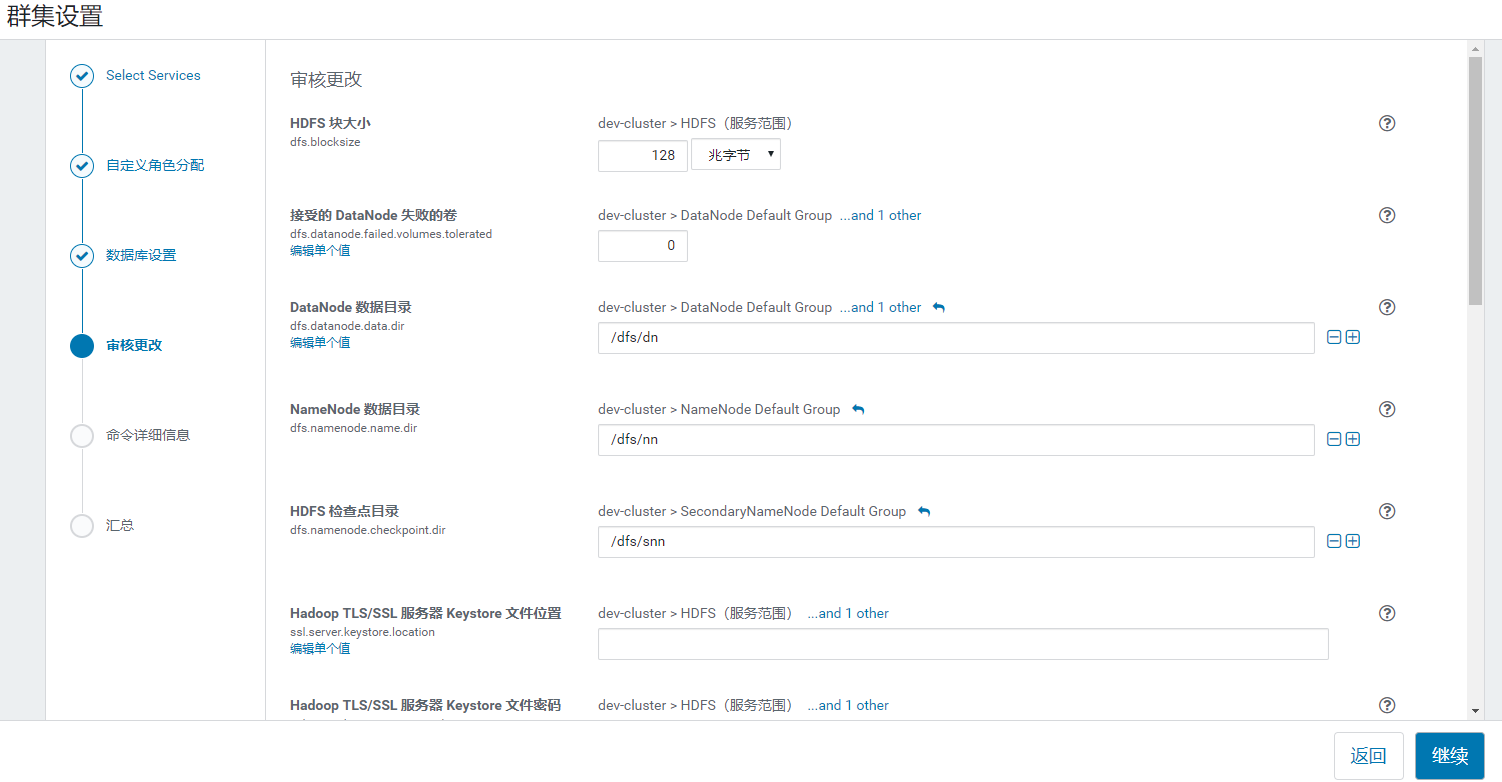

The figure below shows the basic configuration; leave it as is and click Next.

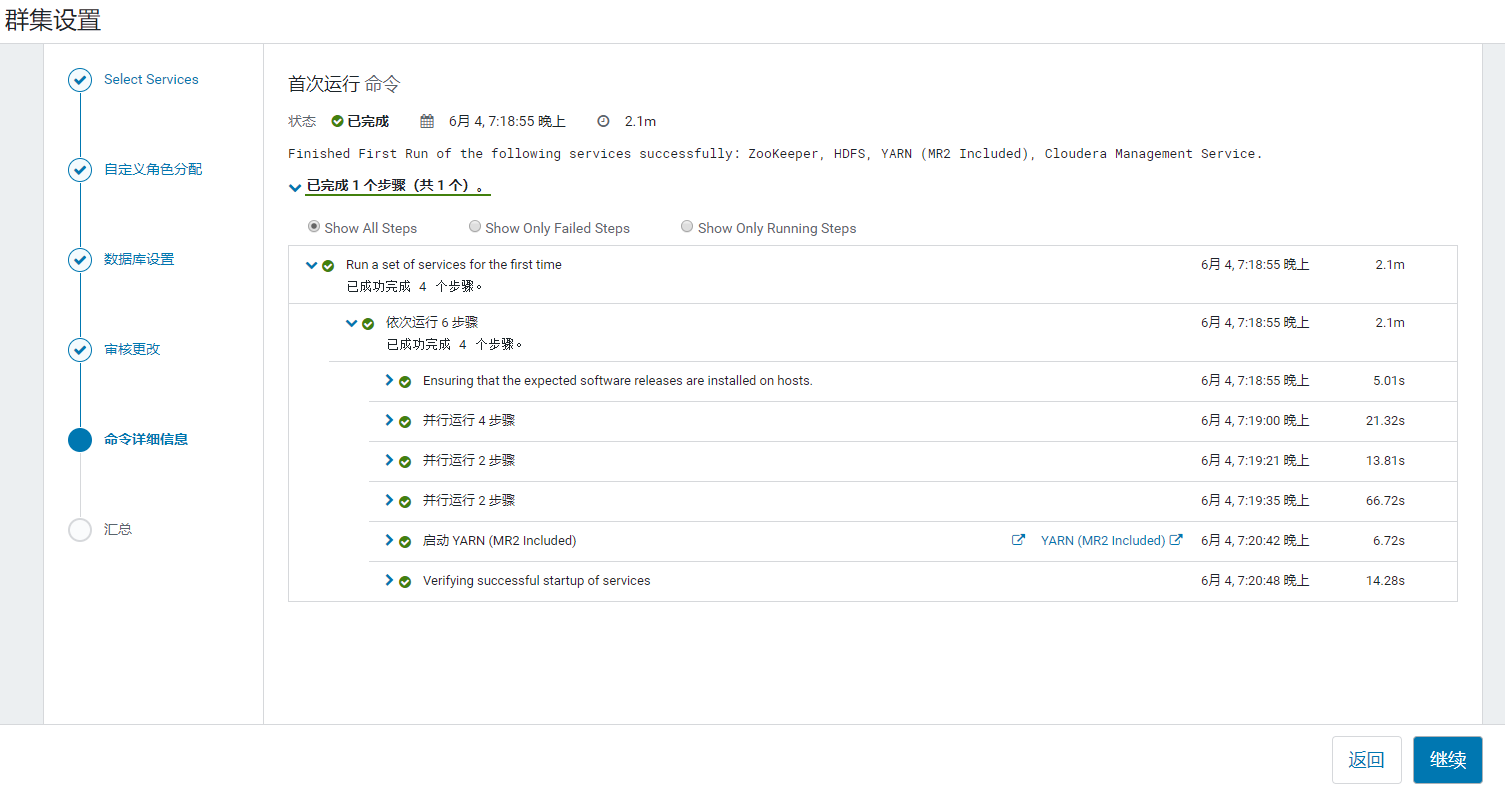

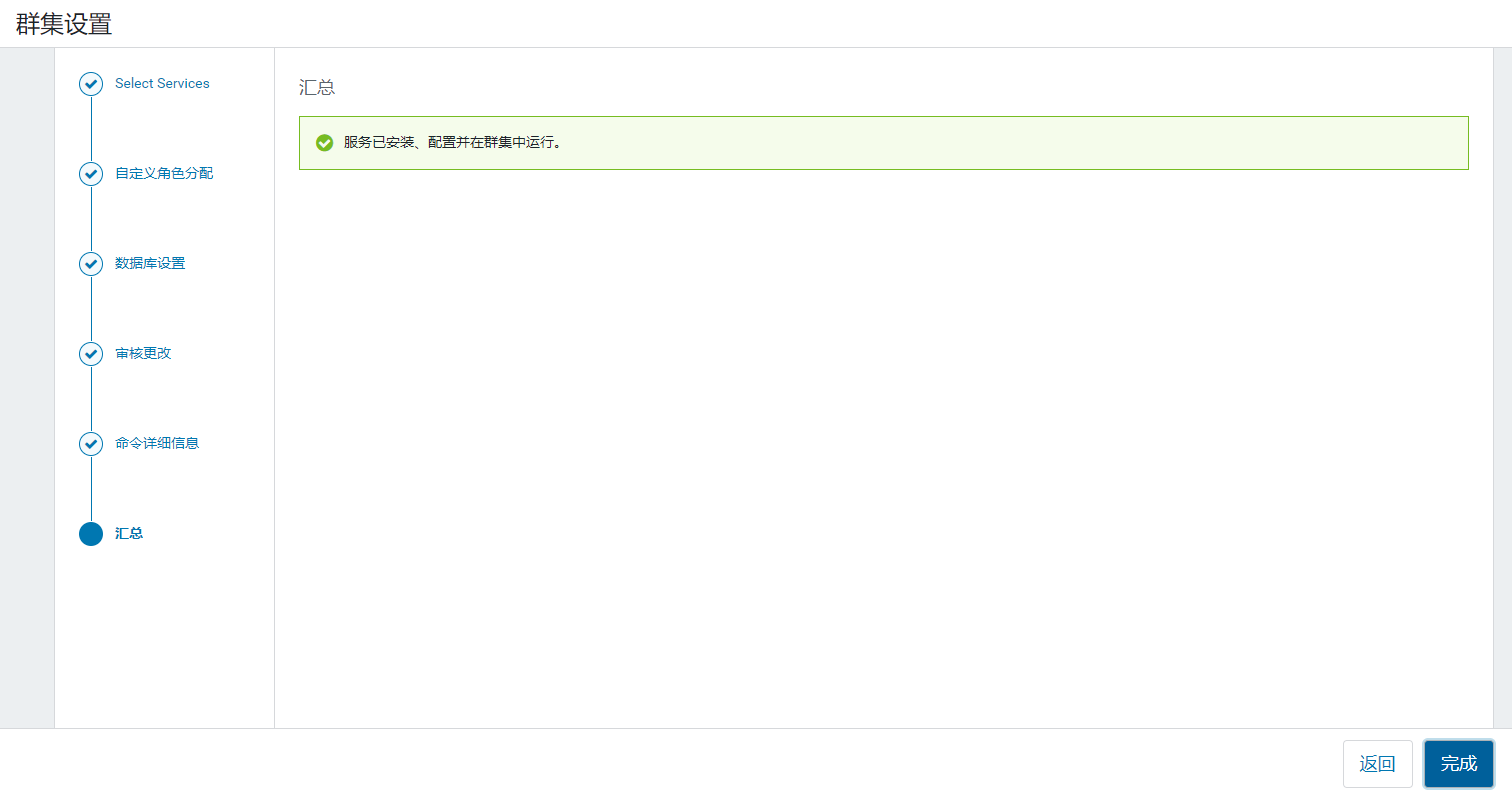

Installation complete.

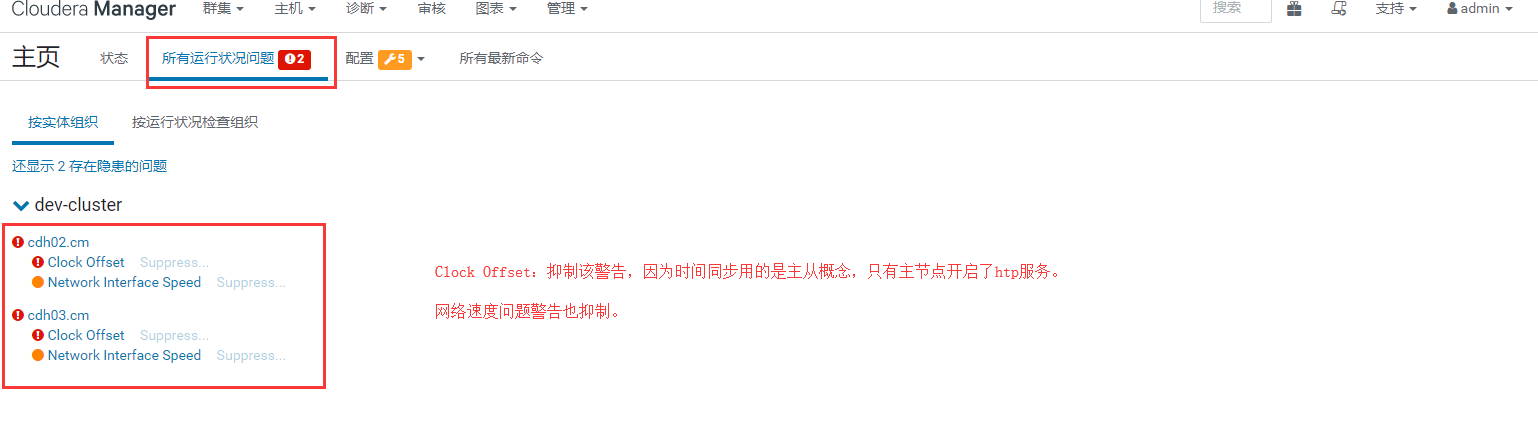

2.9 Troubleshooting

2.9.1 Clock Offset and Network Interface Speed

2.9.2 HDFS Canary

Suppress it.

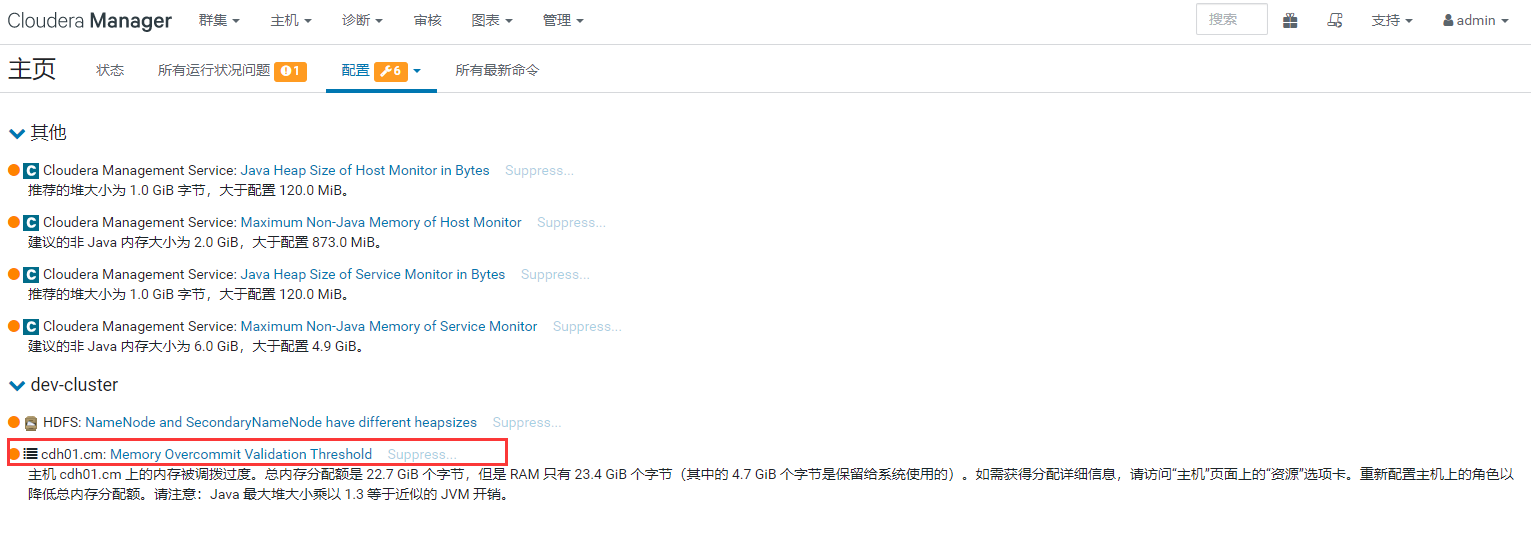

2.9.3 Java Heap Size of NameNode in Bytes

Heap size: the default of 4 GB is fine.

Similar warnings include:

Java Heap Size of Host Monitor in Bytes

Maximum Non-Java Memory of Host Monitor

Java Heap Size of Service Monitor in Bytes

Maximum Non-Java Memory of Service Monitor

2.9.4 Memory Overcommit Validation Threshold

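Memory is overcommitted: after all services are installed, the total allocated memory is 22.7 GB, while the total physical memory is 23.4 GB.

The overcommit ratio is 22.7 / 23.4 ≈ 0.97, which exceeds the default threshold of 0.8; raising the threshold to 1.0 clears the warning.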
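The check is simple arithmetic. A minimal Python sketch, assuming (as described above) that the warning fires when allocated memory divided by total memory exceeds the threshold; the function names are hypothetical.

```python
def overcommit_ratio(allocated_gb: float, total_gb: float) -> float:
    """Fraction of physical memory that the configured roles may use."""
    if total_gb <= 0:
        raise ValueError("total memory must be positive")
    return allocated_gb / total_gb


def exceeds_threshold(allocated_gb: float, total_gb: float, threshold: float = 0.8) -> bool:
    """True when the ratio is above the validation threshold (default 0.8)."""
    return overcommit_ratio(allocated_gb, total_gb) > threshold


if __name__ == "__main__":
    ratio = overcommit_ratio(22.7, 23.4)
    print(f"ratio={ratio:.2f}")
    print("warn at 0.8:", exceeds_threshold(22.7, 23.4))
    print("warn at 1.0:", exceeds_threshold(22.7, 23.4, threshold=1.0))
```

Raising the threshold only silences the warning; if the roles really use all of their allocated memory at once, the host can still start swapping.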

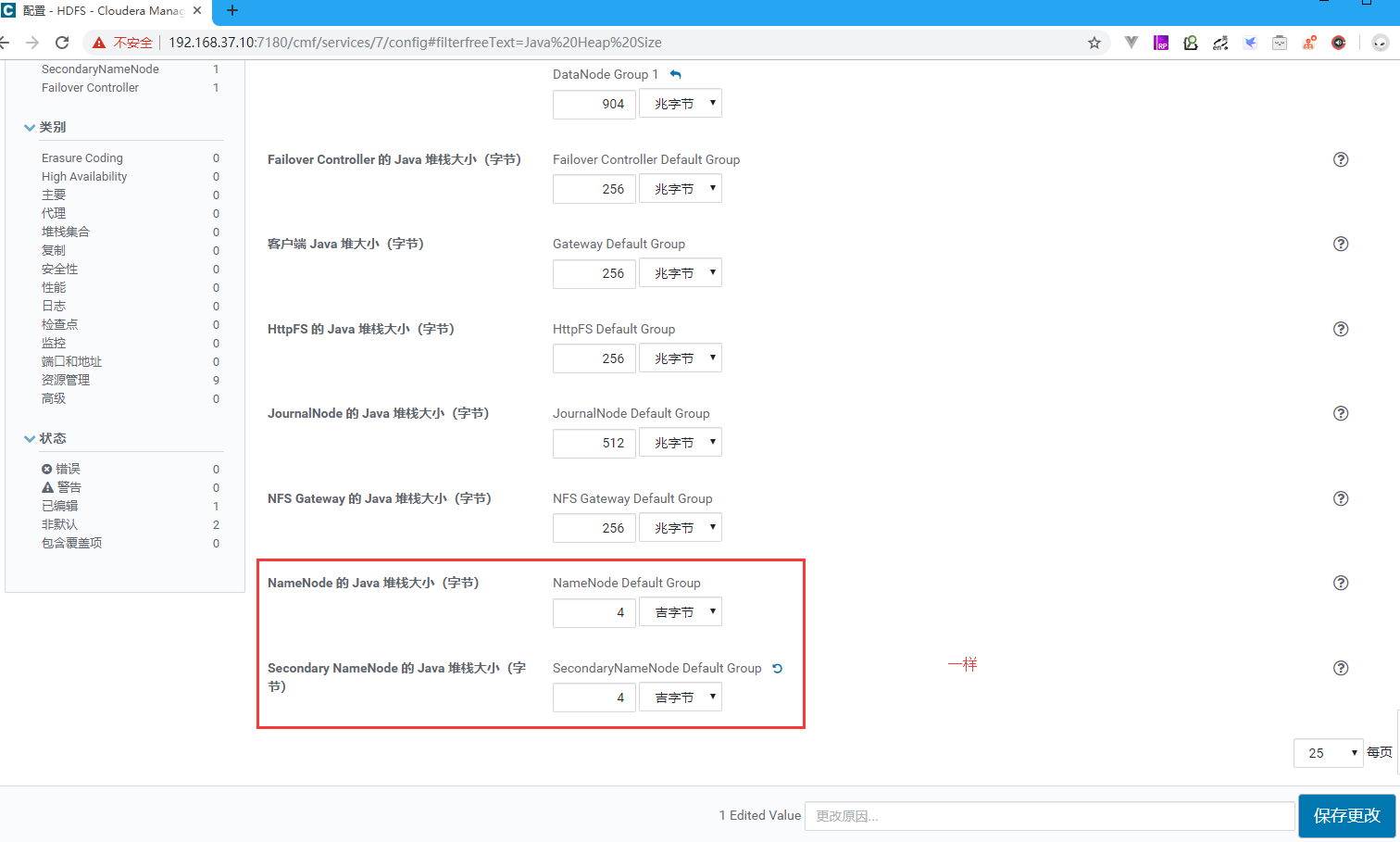

2.9.5 NameNode and SecondaryNameNode have different heapsizes

The NameNode and SecondaryNameNode have different heap sizes.

- Search for Java Heap Size and set the same value for both

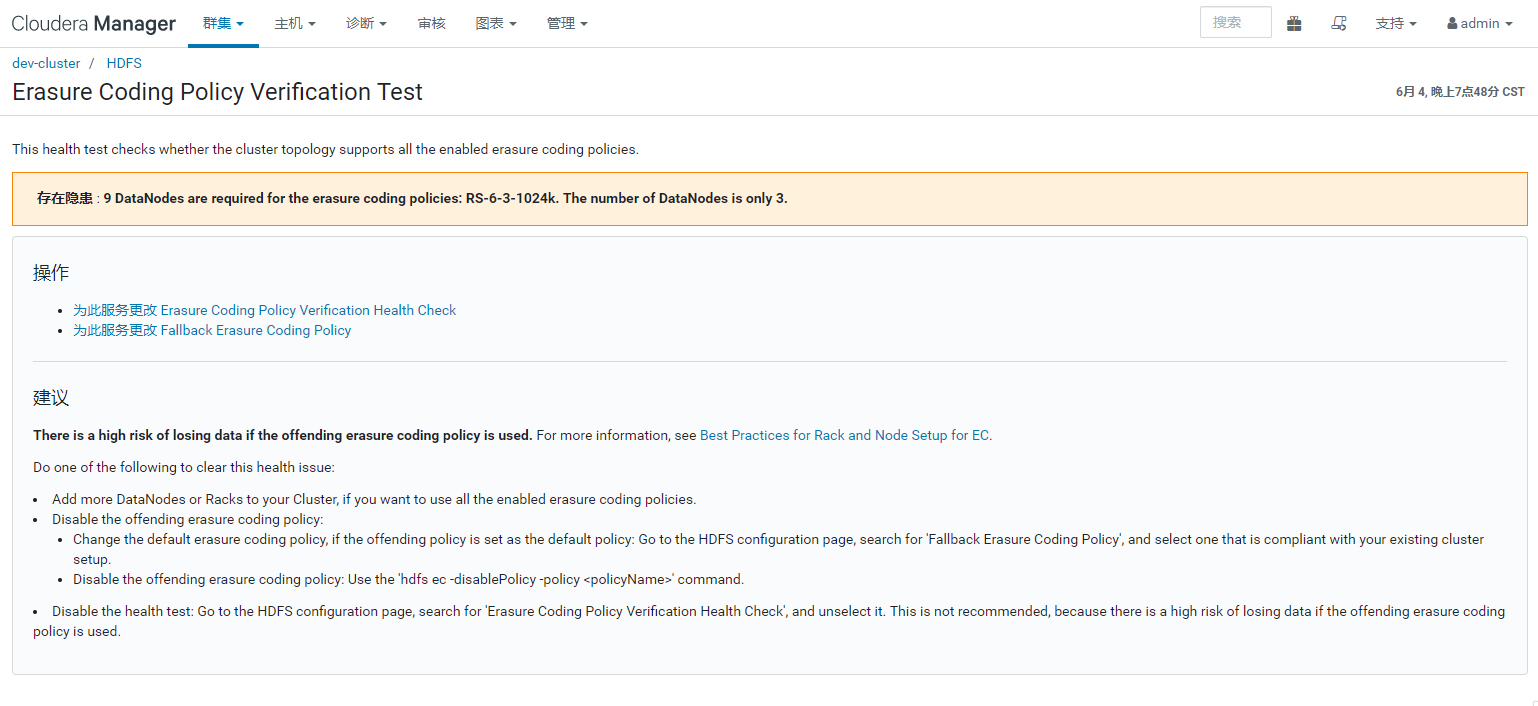

2.9.6 Erasure Coding Policy Verification Test

Erasure Coding Policy Verification Test: suppress it.

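2.9.7 Filesystem Checkpoint

Bad: The filesystem checkpoint is 20 hour(s), 40 minute(s) old. This is 2,068.25% of the configured checkpoint period of 1 hour(s). Critical threshold: 400.00%. 255 transactions have occurred since the last filesystem checkpoint. This is 0.03% of the configured checkpoint transaction target of 1,000,000.

Solution:

1. The NameNode's clusterID differs from the SecondaryNameNode's. Compare the clusterID in /dfs/nn/current/VERSION with the one in /dfs/snn/current/VERSION; if they differ, make them identical and restart the nodes, which should fix the problem.

2. If the problem persists, check the SecondaryNameNode log. If it reports

ERROR: Exception in doCheckpoint java.io.IOException: Inconsistent checkpoint field

delete everything under /dfs/snn/current/ and restart the SecondaryNameNode.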
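For the comparison in step 1, the VERSION files are plain key=value properties with a `#` timestamp comment at the top. The Python sketch below parses them and compares clusterID. The function names are hypothetical; if the two roles run on different hosts (as suggested in 2.8), copy one VERSION file to the other host first.

```python
from pathlib import Path
from typing import Dict


def parse_version(text: str) -> Dict[str, str]:
    """Parse a Hadoop storage VERSION file (key=value lines, '#' comments)."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def cluster_ids_match(nn_version: str, snn_version: str) -> bool:
    """Compare clusterID between NameNode and SecondaryNameNode VERSION contents."""
    nn_id = parse_version(nn_version).get("clusterID")
    snn_id = parse_version(snn_version).get("clusterID")
    return nn_id is not None and nn_id == snn_id


if __name__ == "__main__":
    nn = Path("/dfs/nn/current/VERSION")
    snn = Path("/dfs/snn/current/VERSION")
    if nn.exists() and snn.exists():
        print("clusterID match:", cluster_ids_match(nn.read_text(), snn.read_text()))
```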

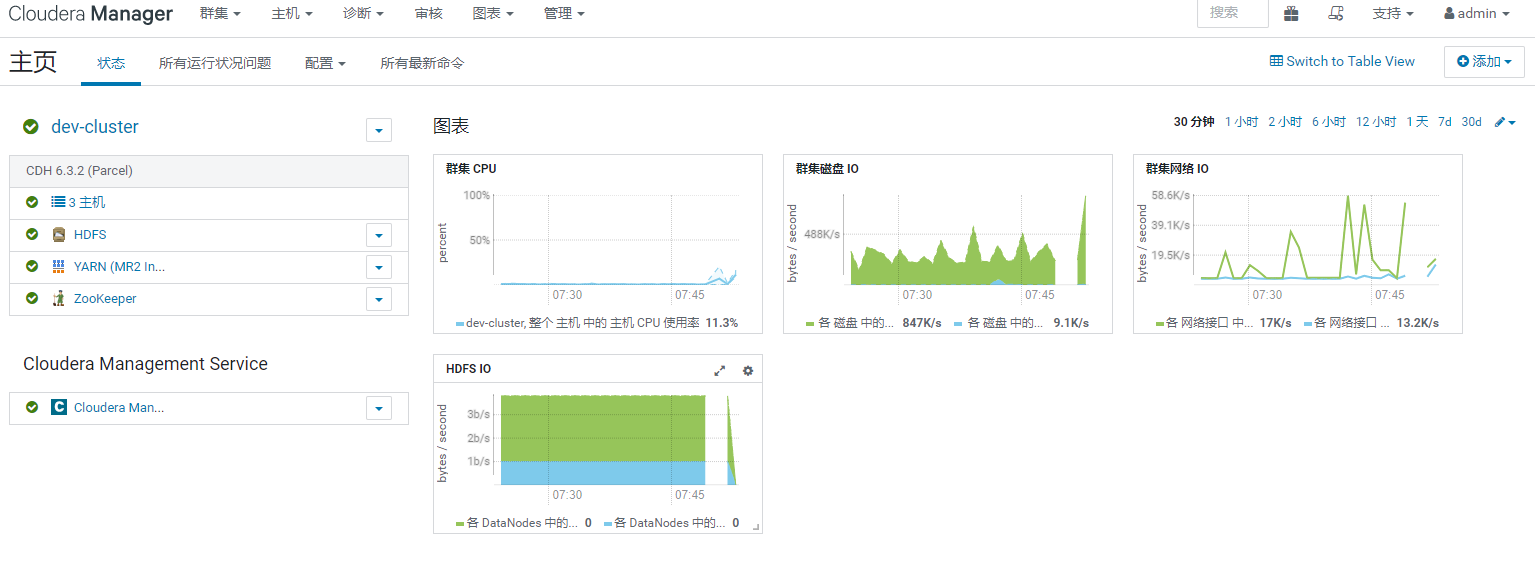

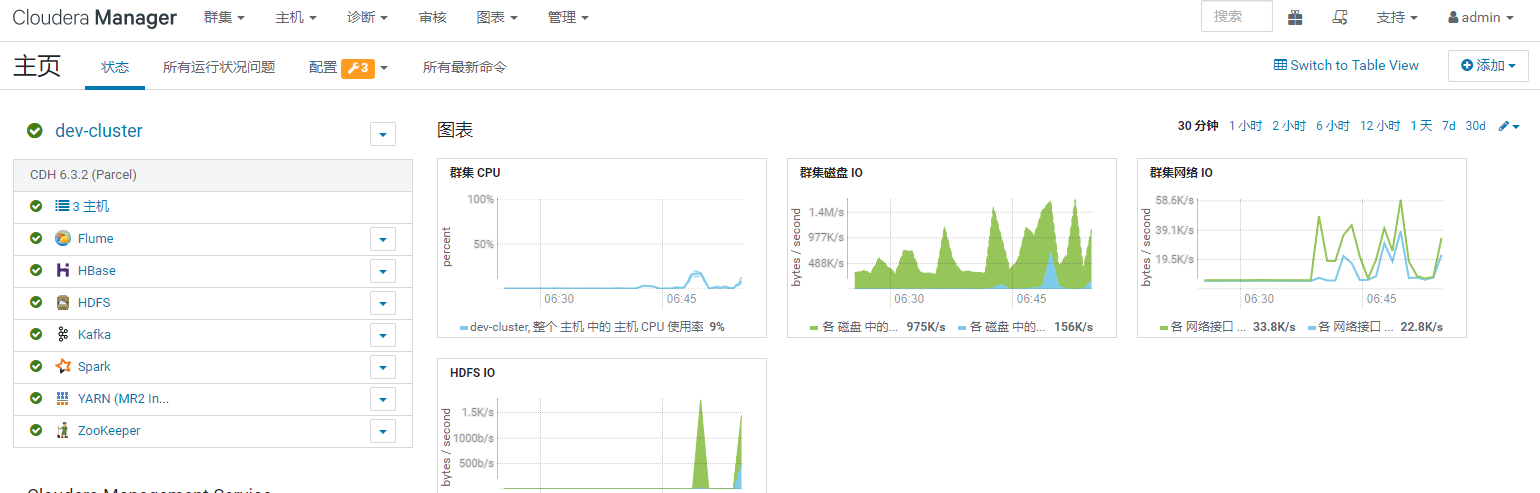

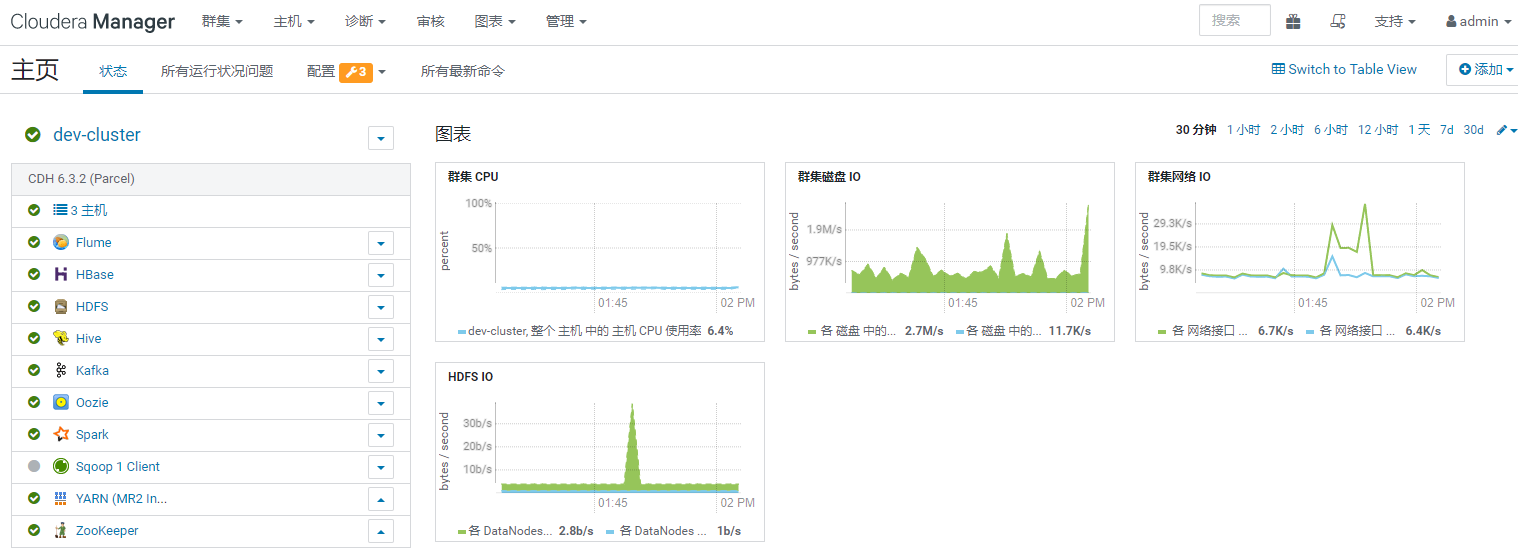

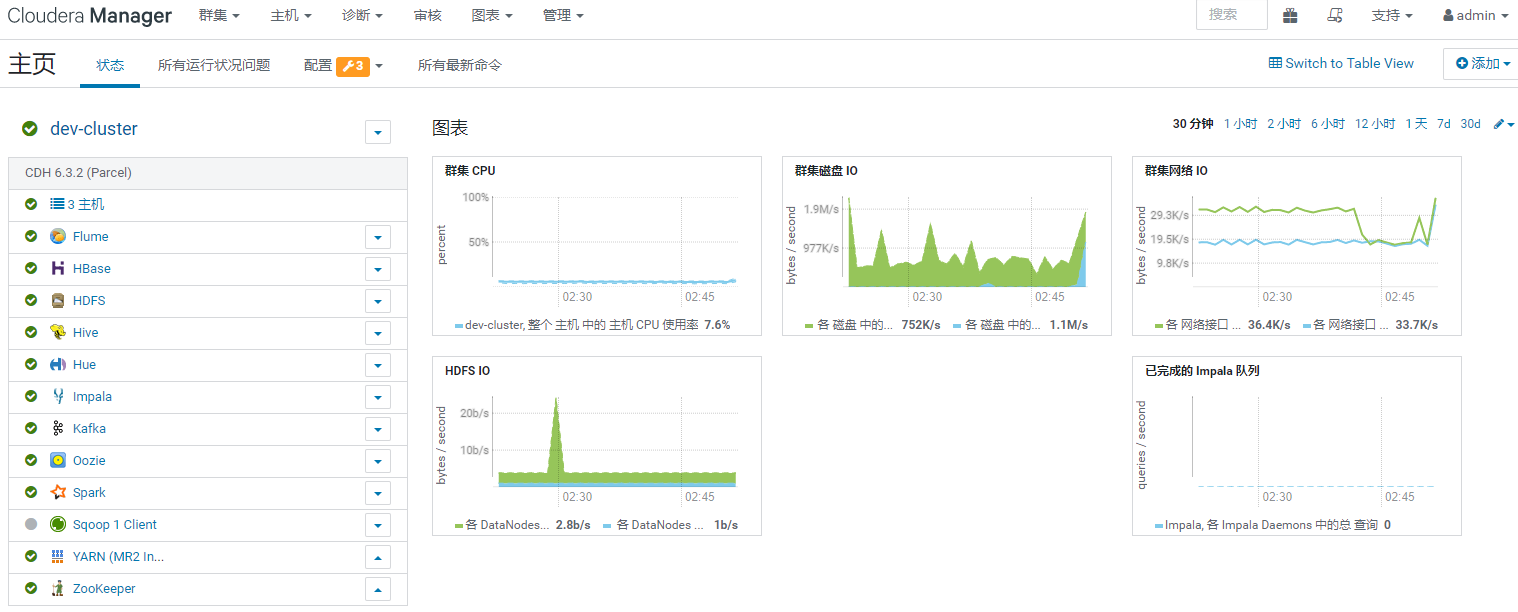

2.10 Healthy State

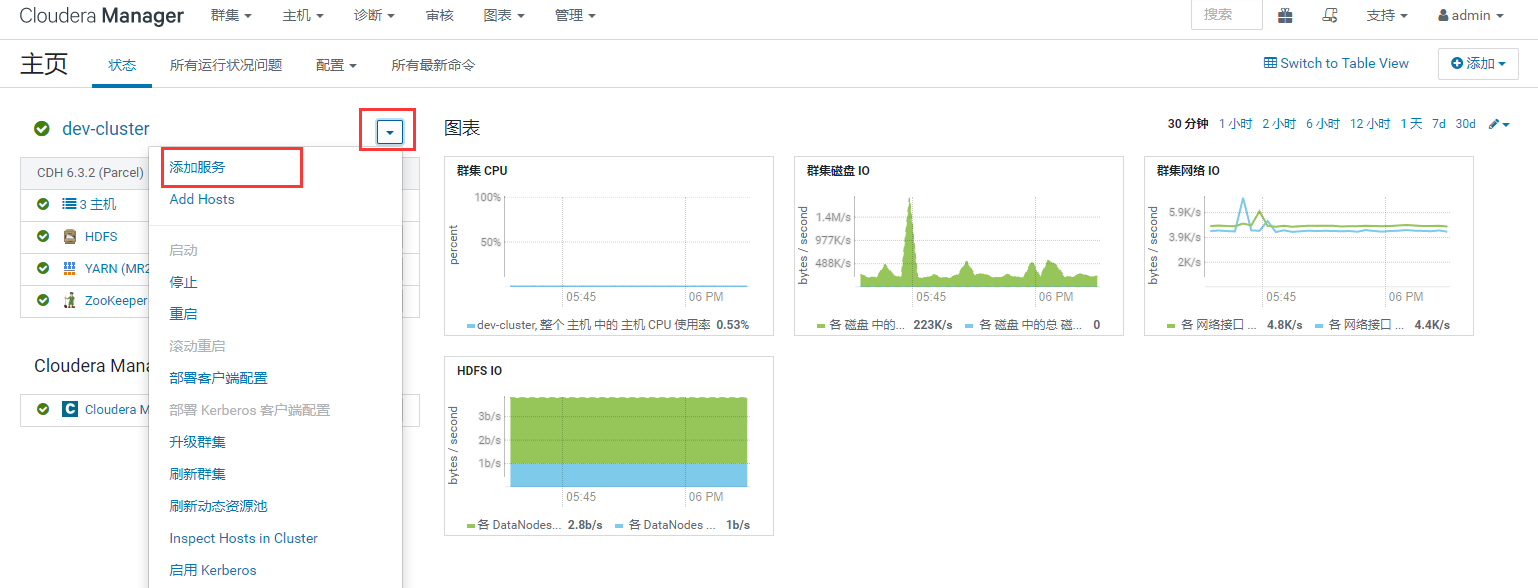

You can use Add Service to add the components you need.

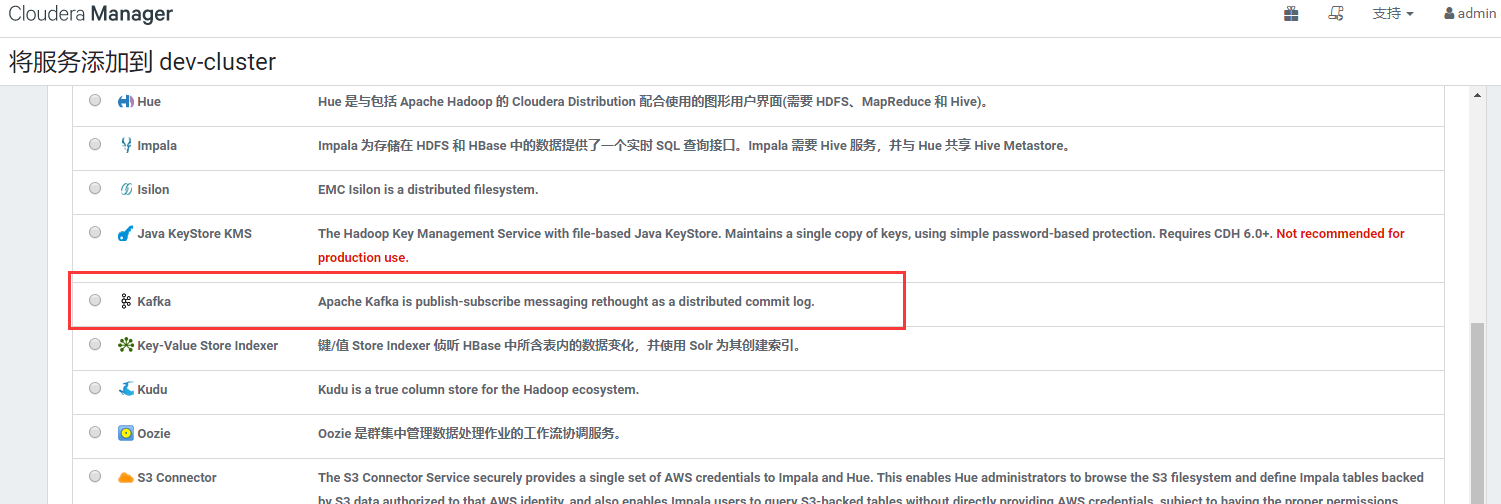

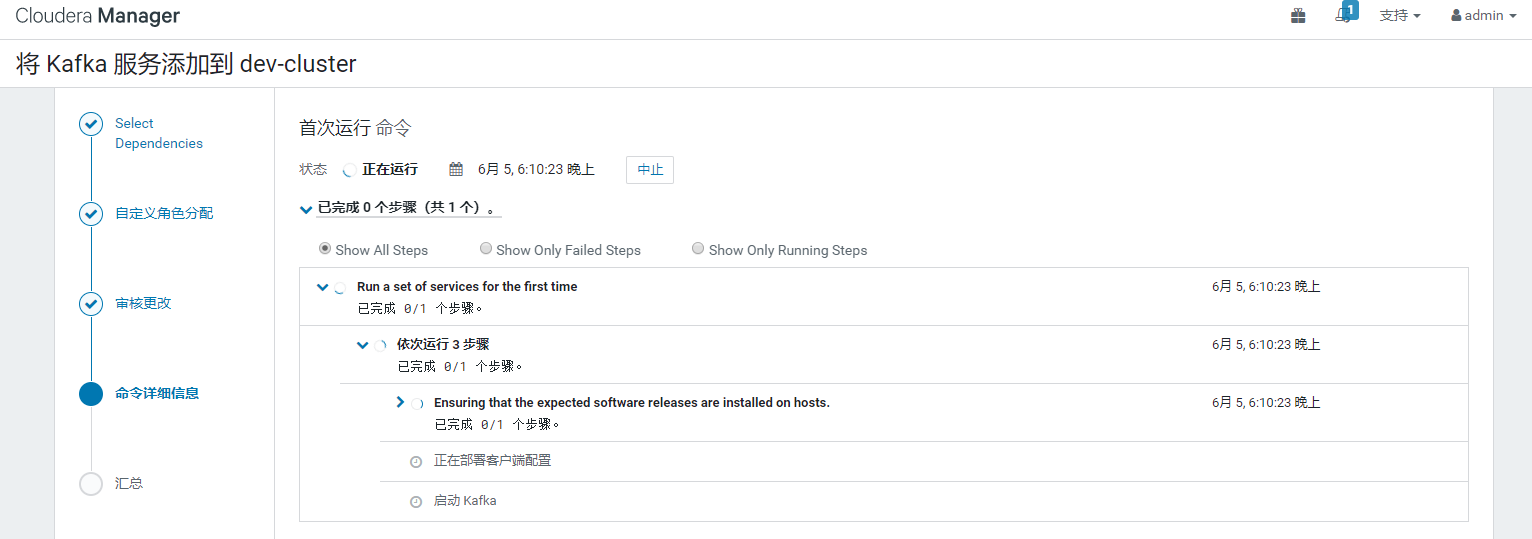

3 Install Kafka

Use the basic configuration.

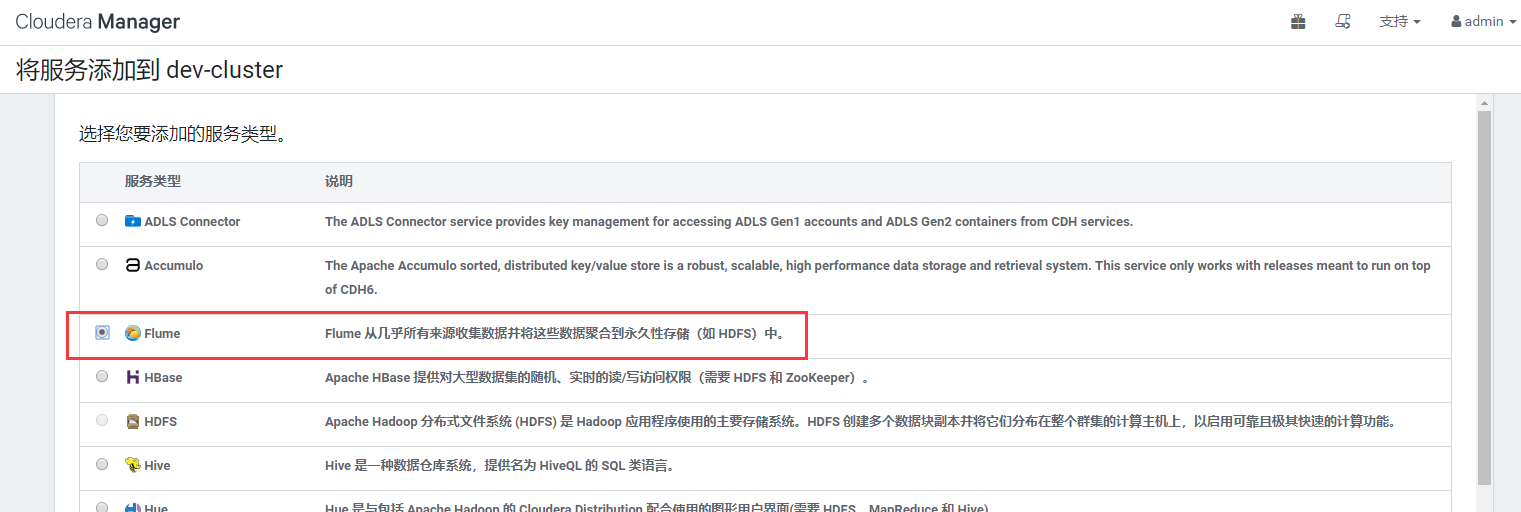

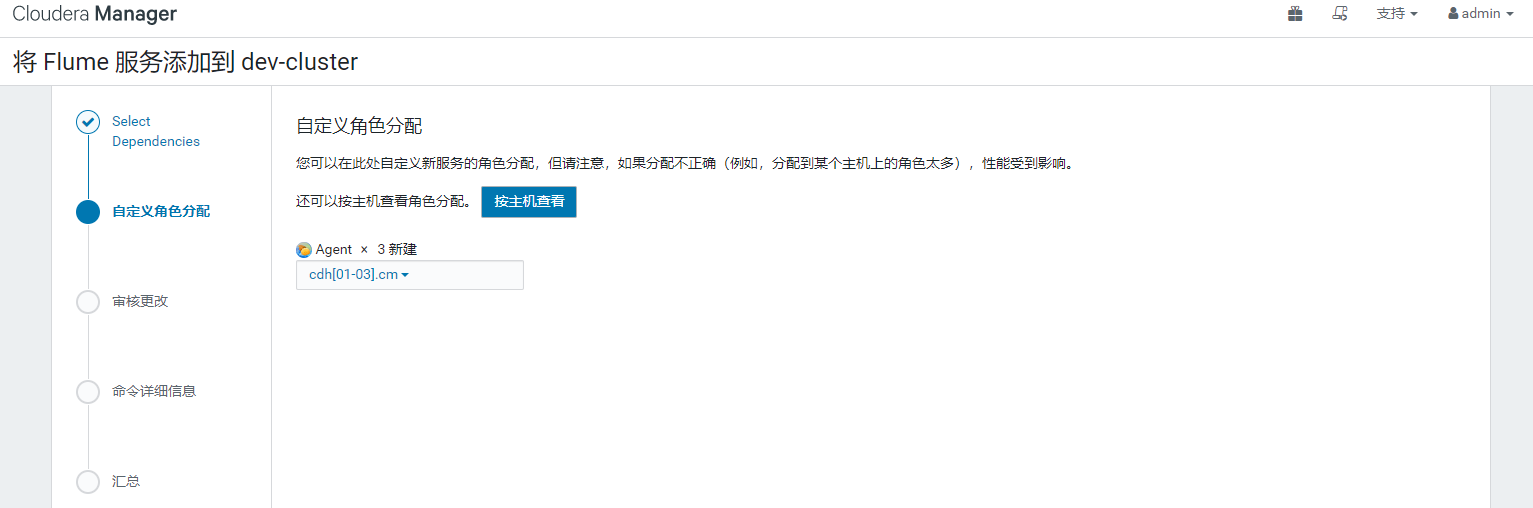

4 Install Flume

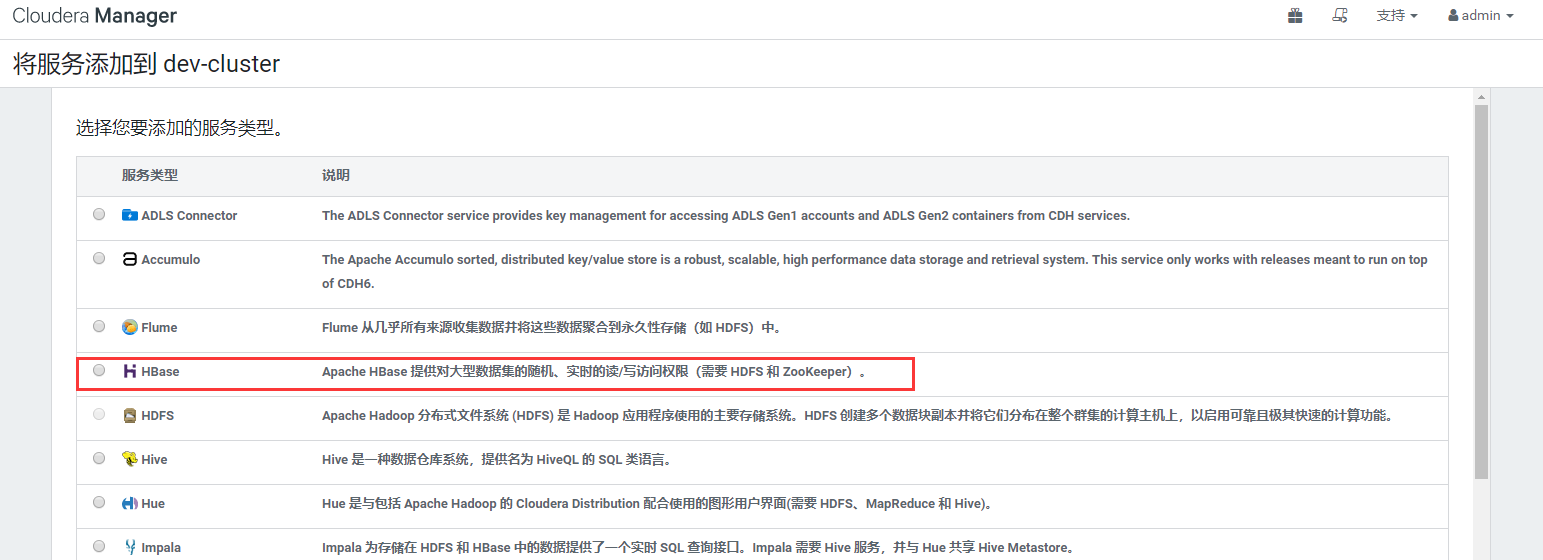

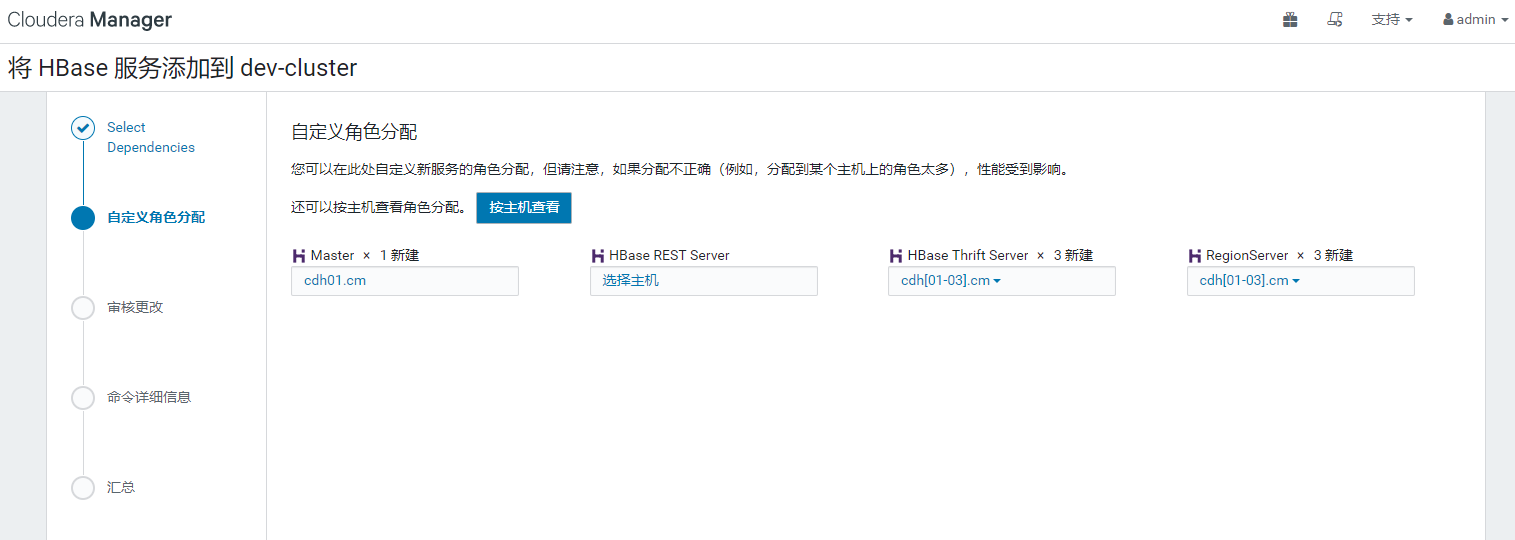

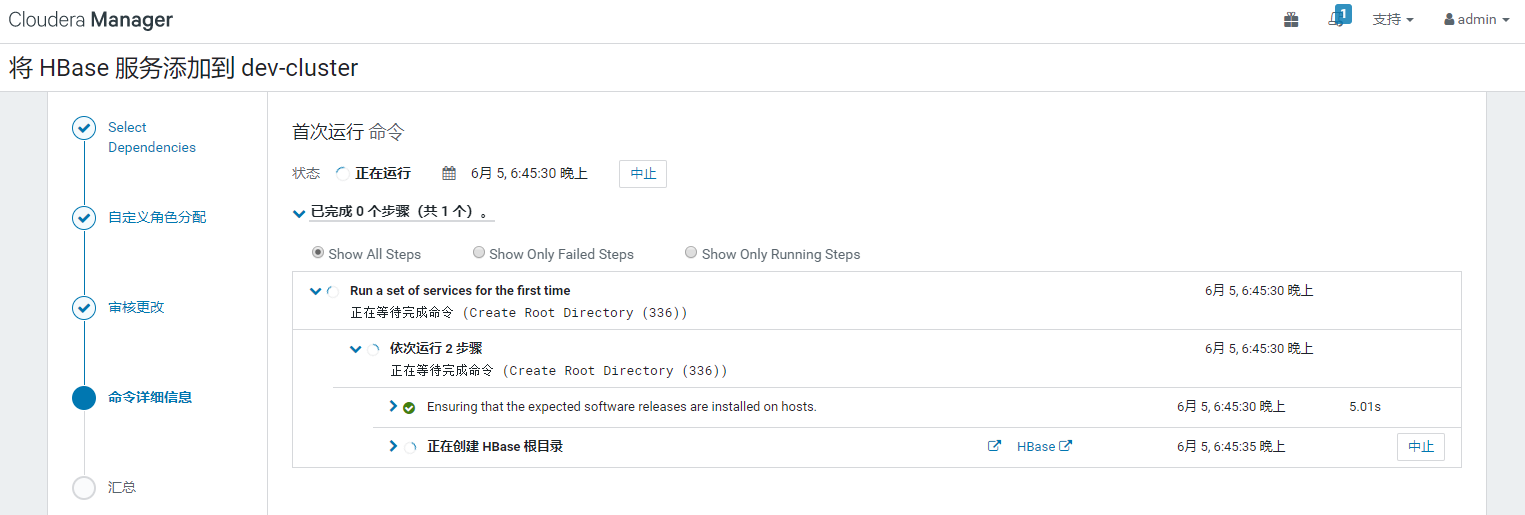

5 Install HBase

Use the basic configuration.

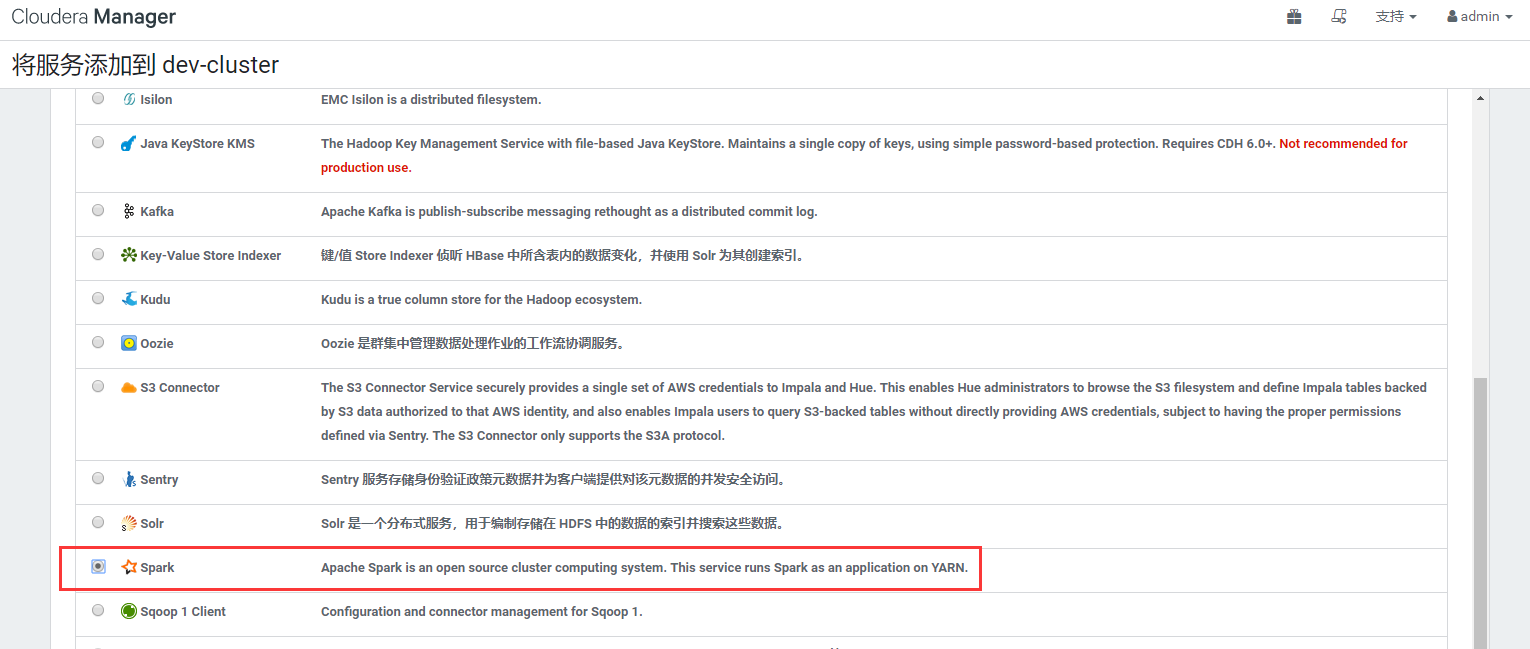

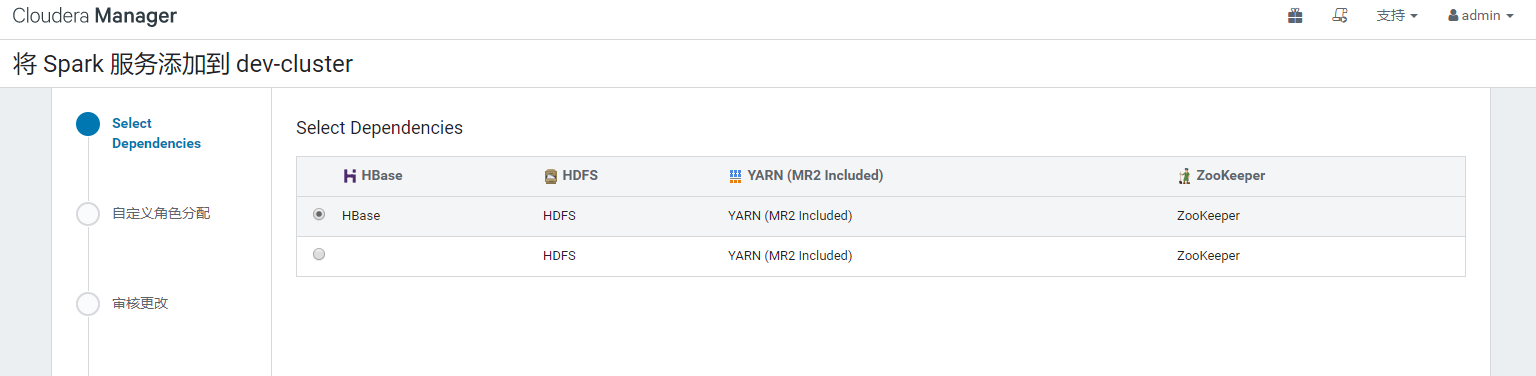

6 Install Spark

Use the default configuration.

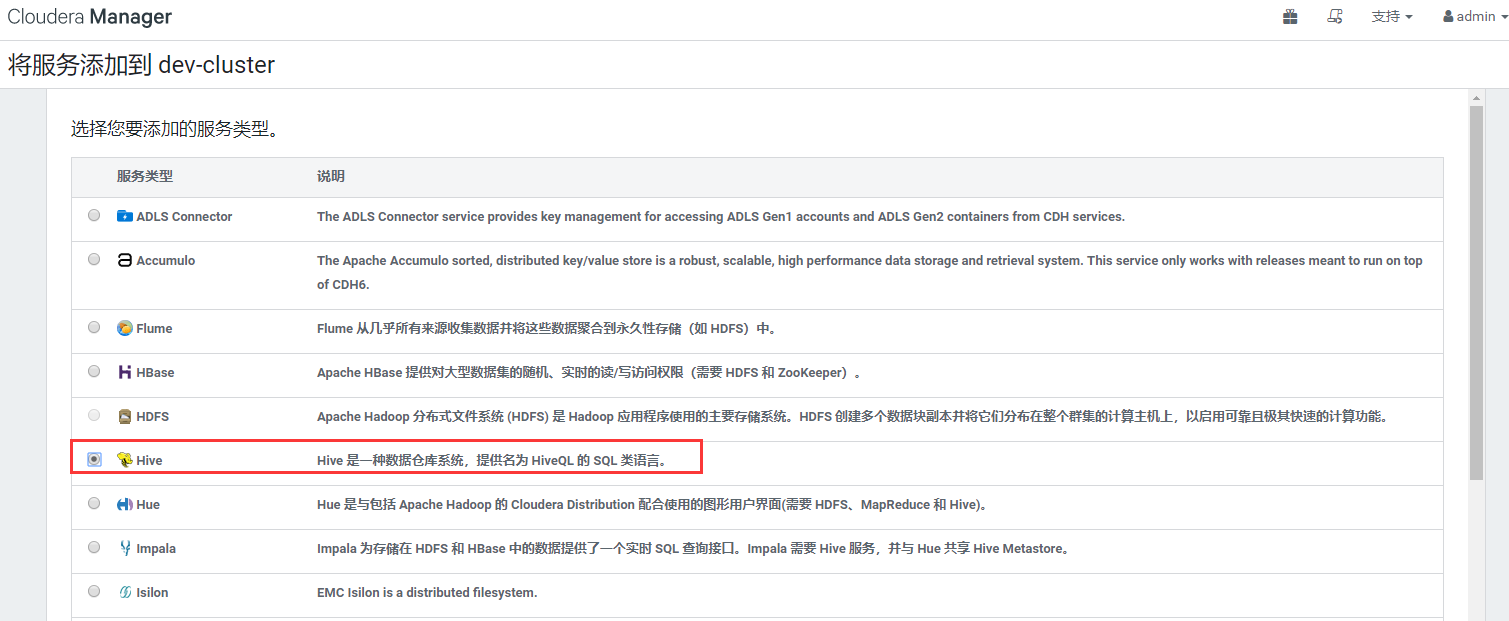

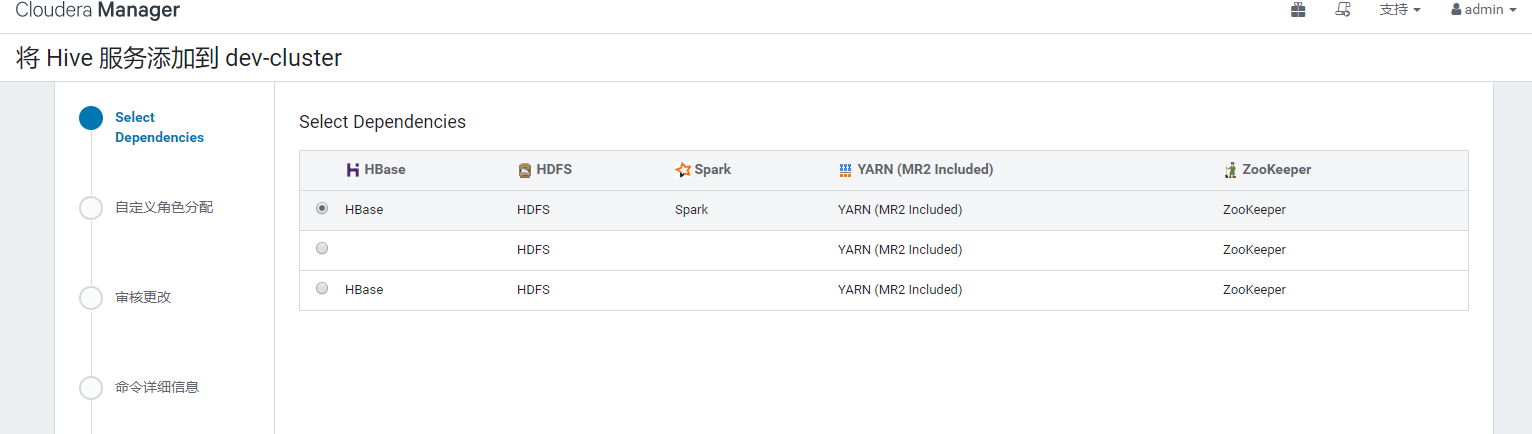

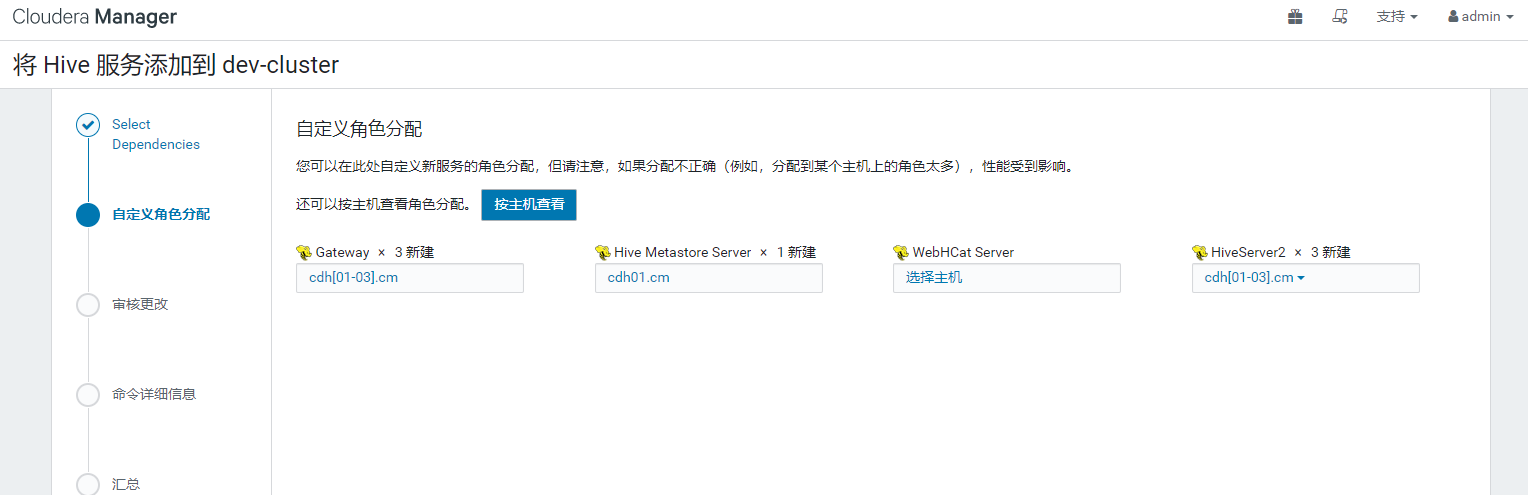

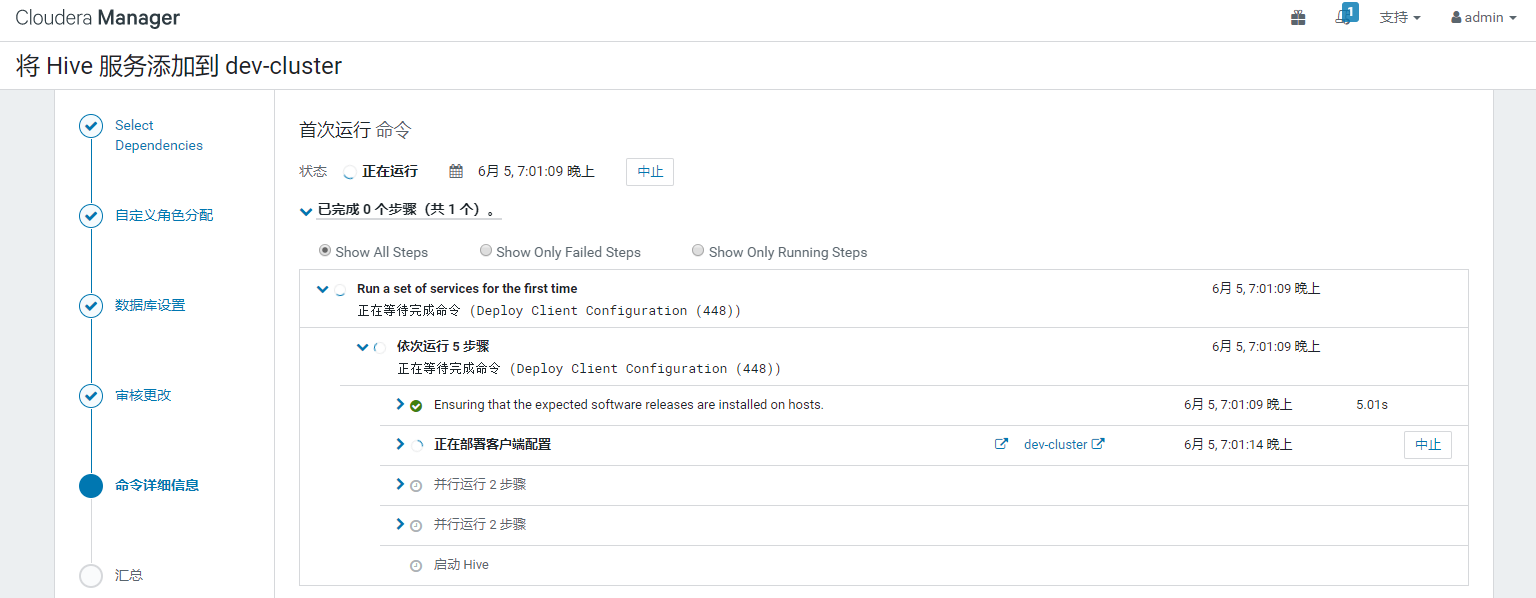

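7 Install Hive

Use the basic configuration.

- Fix garbled Chinese text (comments stored in the metastore)

#Log in to MySQL (enter the new password)

mysql -u root -p

#Switch to the Hive metastore database

use metastore

#Convert the comment and parameter columns to utf8 so Chinese text is stored correctly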

alter table COLUMNS_V2 modify column COMMENT varchar(256) character set utf8;

alter table TABLE_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8;

alter table PARTITION_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8;

alter table PARTITION_KEYS modify column PKEY_COMMENT varchar(4000) character set utf8;

alter table INDEX_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8;

#Reload privileges (not strictly required after ALTER TABLE)

flush privileges;

#Exit

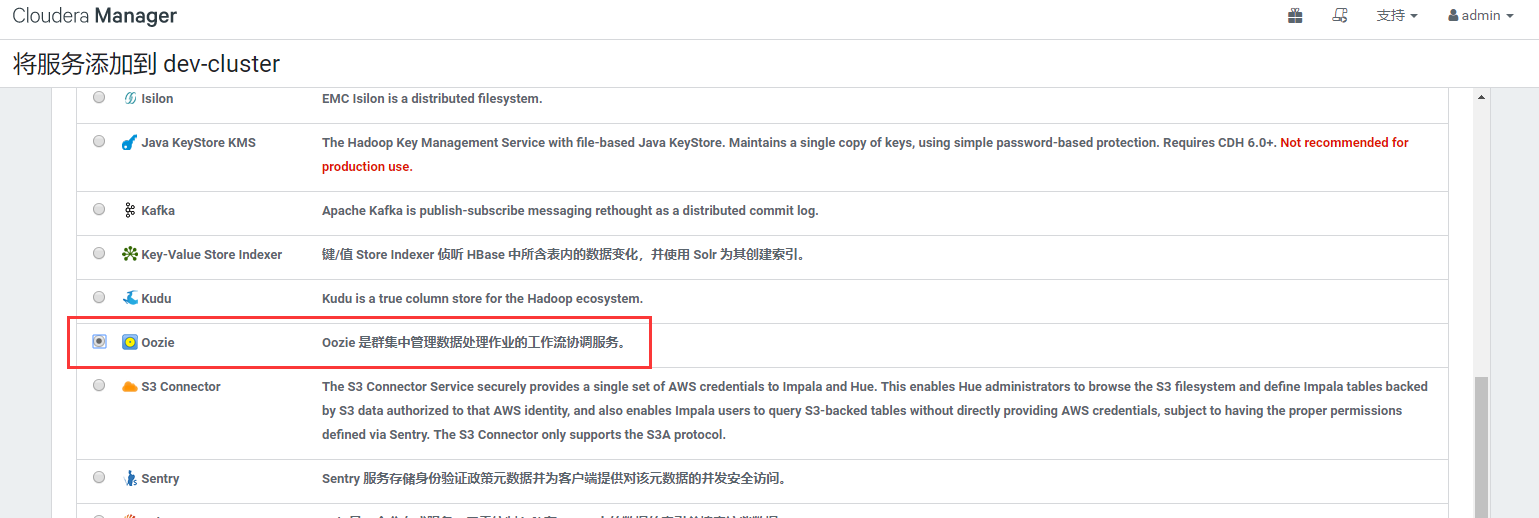

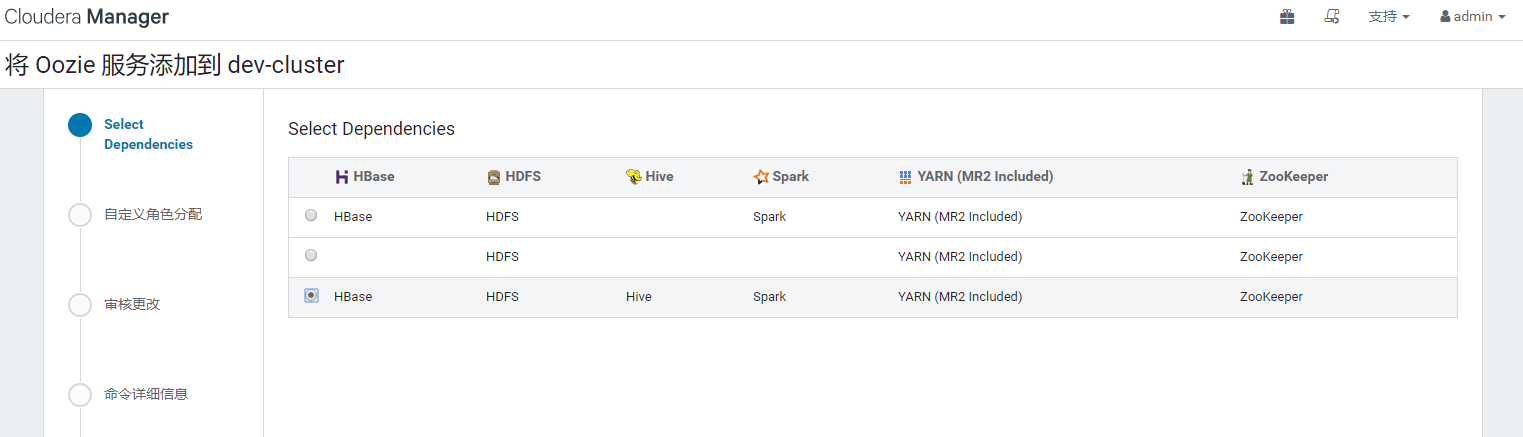

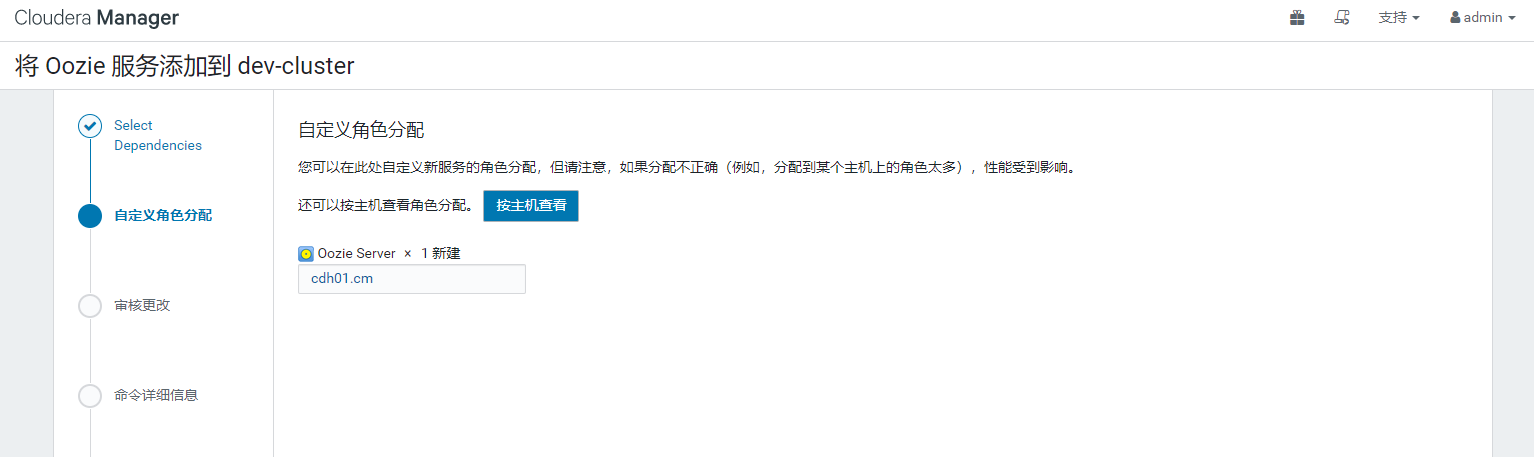

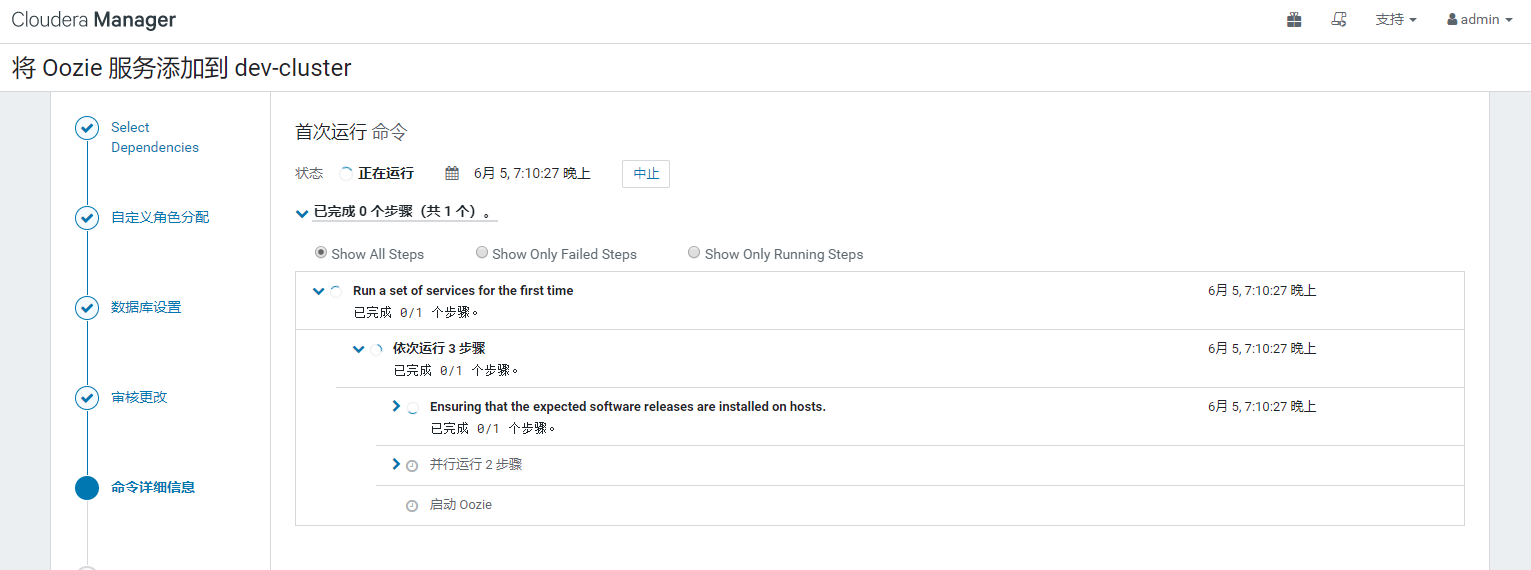

8 Install Oozie

Use the default configuration.

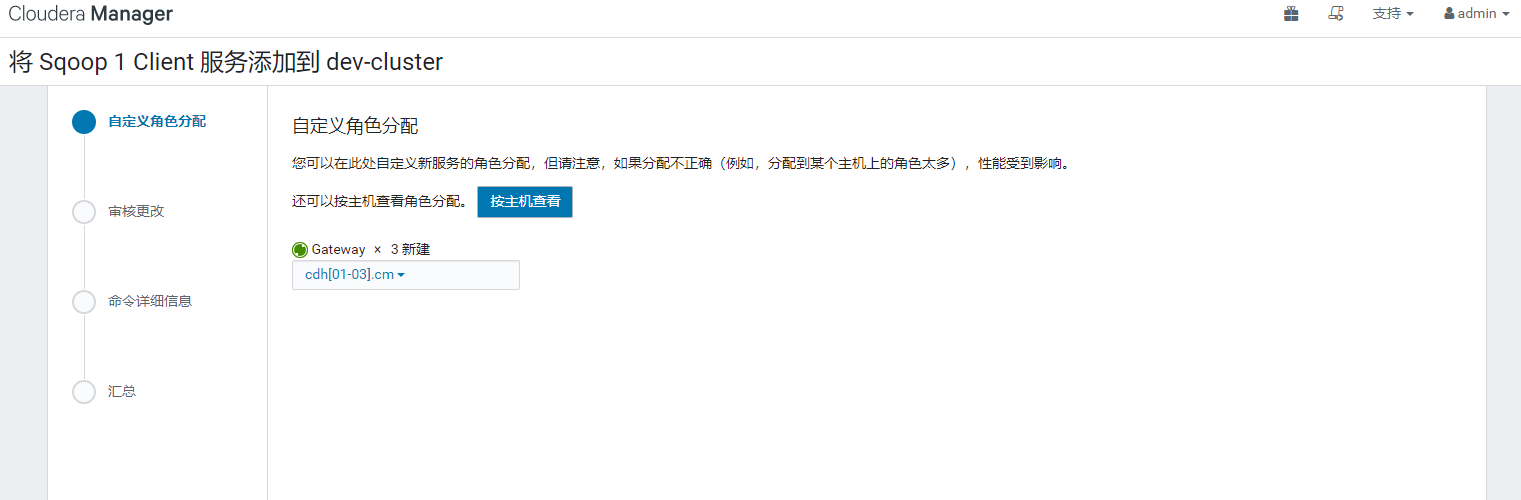

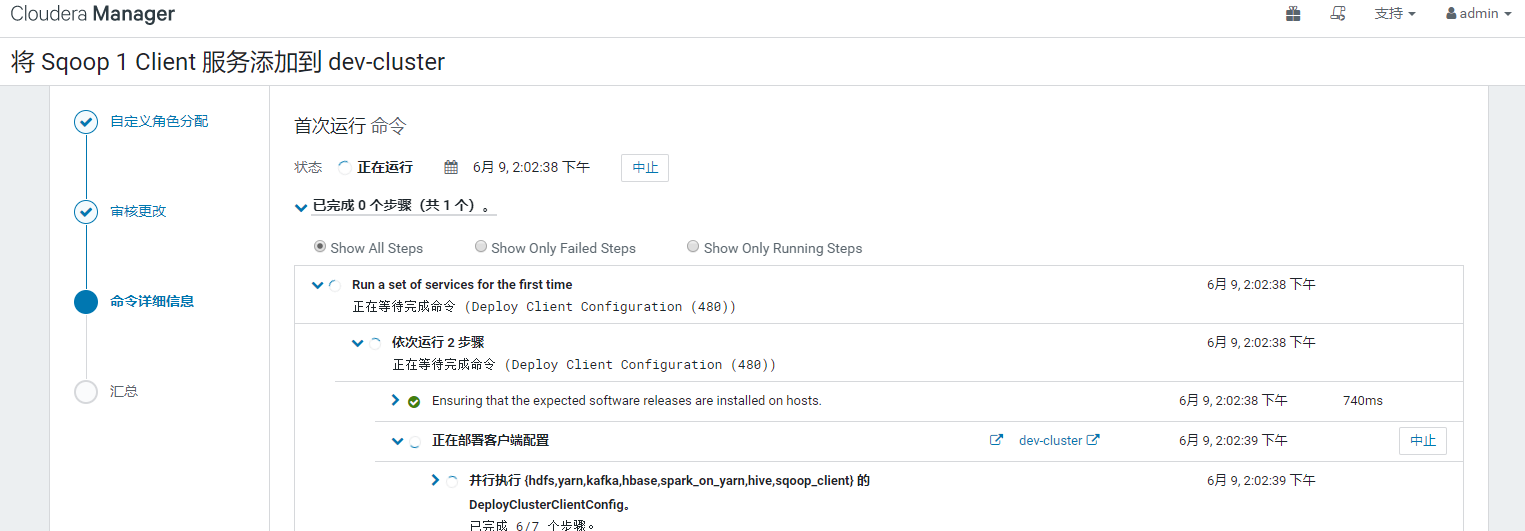

9 Install Sqoop

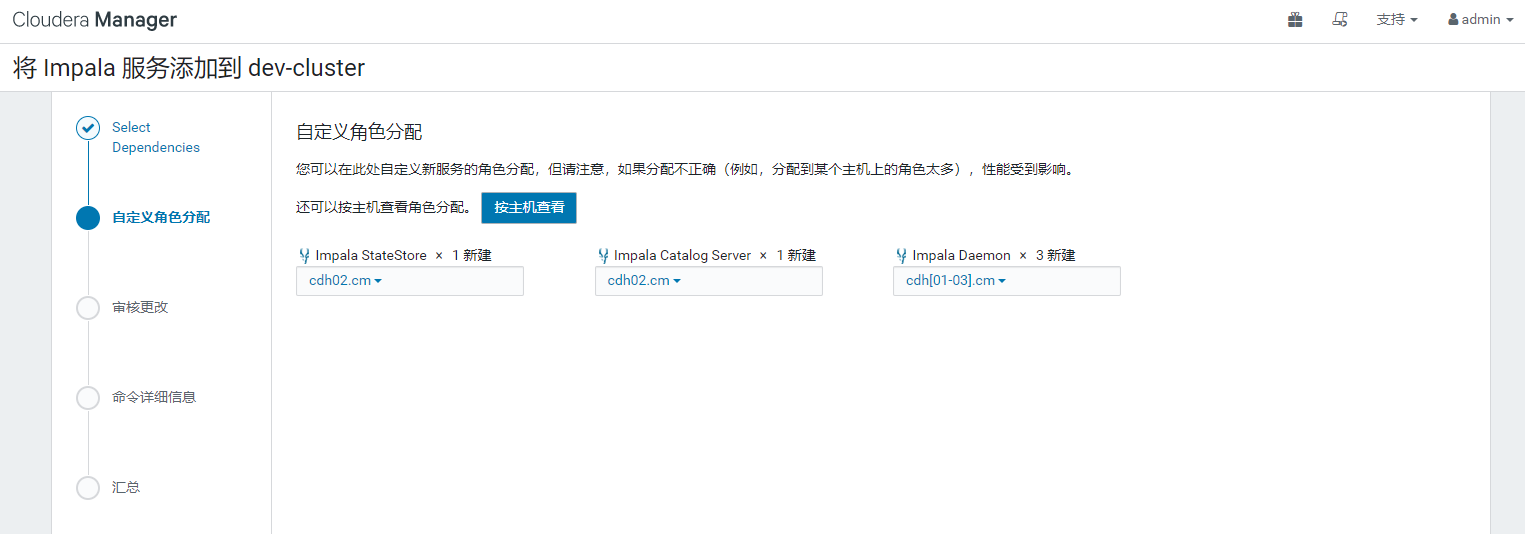

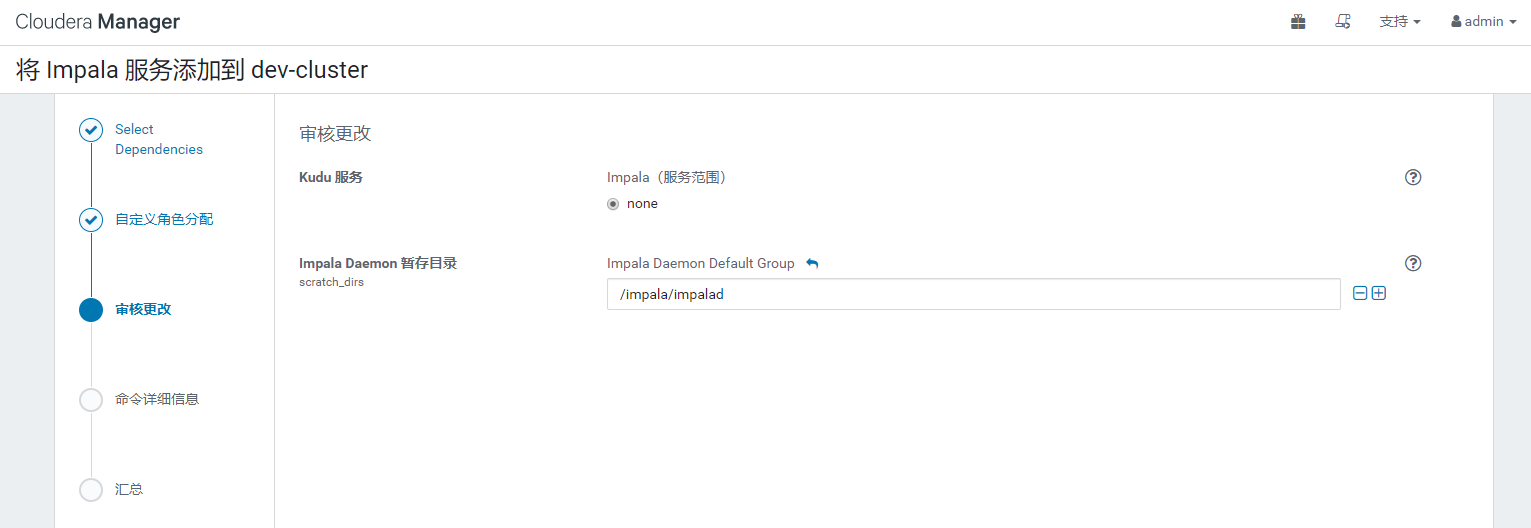

10 Install Impala

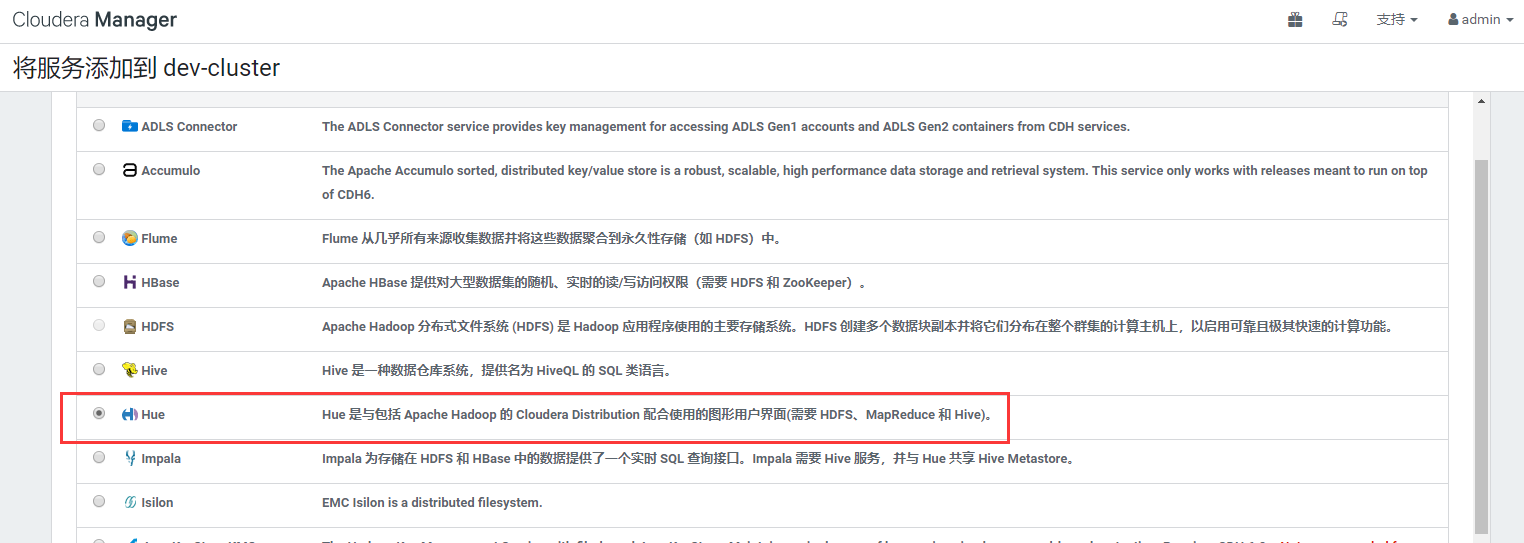

11 Install Hue

- Common Hue connector configuration

https://docs.gethue.com/administrator/configuration/connectors/

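Hue connects to any database or warehouse through native or SqlAlchemy connectors. Once HUE-8758 is complete, connections can be configured from the UI; until then, they have to be added to Hue's hue_safety_valve.ini file.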
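As an illustration of what goes into hue_safety_valve.ini (in Cloudera Manager, Hue's safety valve field for hue_safety_valve.ini), the Hue connector documentation places interpreters under [notebook] and [[interpreters]], with the SqlAlchemy connection passed as a JSON options string. The Python sketch below renders such a block for a MySQL interpreter. The interpreter key, host, credentials, and database are hypothetical placeholders, and the exact keys should be checked against the Hue documentation linked above.

```python
import json


def hue_sqlalchemy_interpreter(key: str, name: str, url: str) -> str:
    """Render a [notebook] interpreter block for hue_safety_valve.ini."""
    options = json.dumps({"url": url})  # e.g. {"url": "mysql://user:pw@host:3306/db"}
    return (
        "[notebook]\n"
        "[[interpreters]]\n"
        f"[[[{key}]]]\n"
        f"name={name}\n"
        "interface=sqlalchemy\n"
        f"options='{options}'\n"
    )


if __name__ == "__main__":
    # Hypothetical connection details: replace user, password, host, and database.
    print(hue_sqlalchemy_interpreter("mysql", "MySQL", "mysql://hue:hue@192.168.37.10:3306/hue"), end="")
```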

12 Referencing CDH 6.3.2 Artifacts in Maven

- pom.xml

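<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <!-- Hypothetical coordinates for your own project -->
  <groupId>com.example</groupId>
  <artifactId>cdh-demo</artifactId>
  <version>1.0-SNAPSHOT</version>
  <repositories>
    <repository>
      <id>cloudera</id>
      <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
    </repository>
  </repositories>
  <dependencies>
    <!-- Example: the Hadoop client built for CDH 6.3.2 (coordinates from the table below) -->
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>3.0.0-cdh6.3.2</version>
    </dependency>
  </dependencies>
</project>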

| Project | groupId | artifactId | version |

|---|---|---|---|

| Apache Avro | org.apache.avro | avro | 1.8.2-cdh6.3.2 |

| org.apache.avro | avro-compiler | 1.8.2-cdh6.3.2 | |

| org.apache.avro | avro-guava-dependencies | 1.8.2-cdh6.3.2 | |

| org.apache.avro | avro-ipc | 1.8.2-cdh6.3.2 | |

| org.apache.avro | avro-mapred | 1.8.2-cdh6.3.2 | |

| org.apache.avro | avro-maven-plugin | 1.8.2-cdh6.3.2 | |

| org.apache.avro | avro-protobuf | 1.8.2-cdh6.3.2 | |

| org.apache.avro | avro-service-archetype | 1.8.2-cdh6.3.2 | |

| org.apache.avro | avro-thrift | 1.8.2-cdh6.3.2 | |

| org.apache.avro | avro-tools | 1.8.2-cdh6.3.2 | |

| org.apache.avro | trevni-avro | 1.8.2-cdh6.3.2 | |

| org.apache.avro | trevni-core | 1.8.2-cdh6.3.2 | |

| Apache Crunch | org.apache.crunch | crunch-archetype | 0.11.0-cdh6.3.2 |

| org.apache.crunch | crunch-contrib | 0.11.0-cdh6.3.2 | |

| org.apache.crunch | crunch-core | 0.11.0-cdh6.3.2 | |

| org.apache.crunch | crunch-examples | 0.11.0-cdh6.3.2 | |

| org.apache.crunch | crunch-hbase | 0.11.0-cdh6.3.2 | |

| org.apache.crunch | crunch-hive | 0.11.0-cdh6.3.2 | |

| org.apache.crunch | crunch-scrunch | 0.11.0-cdh6.3.2 | |

| org.apache.crunch | crunch-spark | 0.11.0-cdh6.3.2 | |

| org.apache.crunch | crunch-test | 0.11.0-cdh6.3.2 | |

| Apache Flume 1.x | org.apache.flume | flume-ng-auth | 1.9.0-cdh6.3.2 |

| org.apache.flume | flume-ng-configuration | 1.9.0-cdh6.3.2 | |

| org.apache.flume | flume-ng-core | 1.9.0-cdh6.3.2 | |

| org.apache.flume | flume-ng-embedded-agent | 1.9.0-cdh6.3.2 | |

| org.apache.flume | flume-ng-node | 1.9.0-cdh6.3.2 | |

| org.apache.flume | flume-ng-sdk | 1.9.0-cdh6.3.2 | |

| org.apache.flume | flume-ng-tests | 1.9.0-cdh6.3.2 | |

| org.apache.flume | flume-tools | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-channels | flume-file-channel | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-channels | flume-jdbc-channel | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-channels | flume-kafka-channel | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-channels | flume-spillable-memory-channel | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-clients | flume-ng-log4jappender | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-configfilters | flume-ng-config-filter-api | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-configfilters | flume-ng-environment-variable-config-filter | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-configfilters | flume-ng-external-process-config-filter | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-configfilters | flume-ng-hadoop-credential-store-config-filter | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-legacy-sources | flume-avro-source | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-legacy-sources | flume-thrift-source | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sinks | flume-dataset-sink | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sinks | flume-hdfs-sink | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sinks | flume-hive-sink | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sinks | flume-http-sink | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sinks | flume-irc-sink | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sinks | flume-ng-hbase2-sink | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sinks | flume-ng-kafka-sink | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sinks | flume-ng-morphline-solr-sink | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sources | flume-jms-source | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sources | flume-kafka-source | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sources | flume-scribe-source | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sources | flume-taildir-source | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-ng-sources | flume-twitter-source | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-shared | flume-shared-kafka | 1.9.0-cdh6.3.2 | |

| org.apache.flume.flume-shared | flume-shared-kafka-test | 1.9.0-cdh6.3.2 | |

| GCS Connector | com.google.cloud.bigdataoss | gcs-connector | hadoop3-1.9.10-cdh6.3.2 |

| com.google.cloud.bigdataoss | gcsio | 1.9.10-cdh6.3.2 | |

| com.google.cloud.bigdataoss | util | 1.9.10-cdh6.3.2 | |

| com.google.cloud.bigdataoss | util-hadoop | hadoop3-1.9.10-cdh6.3.2 | |

| Apache Hadoop | org.apache.hadoop | hadoop-aliyun | 3.0.0-cdh6.3.2 |

| org.apache.hadoop | hadoop-annotations | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-archive-logs | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-archives | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-assemblies | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-auth | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-aws | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-azure | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-azure-datalake | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-build-tools | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-client | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-client-api | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-client-integration-tests | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-client-minicluster | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-client-runtime | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-cloud-storage | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-common | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-datajoin | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-distcp | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-extras | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-gridmix | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-hdfs | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-hdfs-client | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-hdfs-httpfs | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-hdfs-native-client | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-hdfs-nfs | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-kafka | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-kms | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-mapreduce-client-app | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-mapreduce-client-common | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-mapreduce-client-core | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-mapreduce-client-hs | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-mapreduce-client-hs-plugins | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-mapreduce-client-jobclient | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-mapreduce-client-nativetask | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-mapreduce-client-shuffle | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-mapreduce-client-uploader | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-mapreduce-examples | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-maven-plugins | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-minicluster | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-minikdc | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-nfs | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-openstack | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-resourceestimator | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-rumen | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-sls | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-streaming | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-api | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-applications-distributedshell | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-applications-unmanaged-am-launcher | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-client | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-common | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-registry | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-applicationhistoryservice | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-common | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-nodemanager | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-resourcemanager | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-router | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-sharedcachemanager | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-tests | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-timeline-pluginstorage | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-timelineservice | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-timelineservice-hbase | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-timelineservice-hbase-tests | 3.0.0-cdh6.3.2 | |

| org.apache.hadoop | hadoop-yarn-server-web-proxy | 3.0.0-cdh6.3.2 | |

| Apache HBase | org.apache.hbase | hbase-annotations | 2.1.0-cdh6.3.2 |

| org.apache.hbase | hbase-checkstyle | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-client | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-client-project | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-common | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-endpoint | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-examples | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-external-blockcache | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-hadoop-compat | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-hadoop2-compat | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-http | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-it | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-mapreduce | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-metrics | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-metrics-api | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-procedure | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-protocol | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-protocol-shaded | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-replication | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-resource-bundle | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-rest | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-rsgroup | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-server | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-shaded-client | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-shaded-client-byo-hadoop | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-shaded-client-project | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-shaded-mapreduce | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-shell | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-spark | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-spark-it | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-testing-util | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-thrift | 2.1.0-cdh6.3.2 | |

| org.apache.hbase | hbase-zookeeper | 2.1.0-cdh6.3.2 | |

| HBase Indexer | com.ngdata | hbase-indexer-all | 1.5-cdh6.3.2 |

| com.ngdata | hbase-indexer-cli | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-indexer-common | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-indexer-demo | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-indexer-dist | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-indexer-engine | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-indexer-model | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-indexer-morphlines | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-indexer-mr | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-indexer-server | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-sep-api | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-sep-demo | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-sep-impl | 1.5-cdh6.3.2 | |

| com.ngdata | hbase-sep-tools | 1.5-cdh6.3.2 | |

| Apache Hive | org.apache.hive | hive-accumulo-handler | |

| org.apache.hive | hive-ant | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-beeline | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-classification | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-cli | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-common | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-contrib | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-exec | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-hbase-handler | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-hplsql | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-jdbc | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-kryo-registrator | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-llap-client | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-llap-common | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-llap-ext-client | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-llap-server | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-llap-tez | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-metastore | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-orc | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-serde | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-service | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-service-rpc | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-shims | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-spark-client | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-storage-api | 2.1.1-cdh6.3.2 | |

| org.apache.hive | hive-testutils | 2.1.1-cdh6.3.2 | |

| org.apache.hive.hcatalog | hive-hcatalog-core | 2.1.1-cdh6.3.2 | |

| org.apache.hive.hcatalog | hive-hcatalog-pig-adapter | 2.1.1-cdh6.3.2 | |

| org.apache.hive.hcatalog | hive-hcatalog-server-extensions | 2.1.1-cdh6.3.2 | |

| org.apache.hive.hcatalog | hive-hcatalog-streaming | 2.1.1-cdh6.3.2 | |

| org.apache.hive.hcatalog | hive-webhcat | 2.1.1-cdh6.3.2 | |

| org.apache.hive.hcatalog | hive-webhcat-java-client | 2.1.1-cdh6.3.2 | |

| org.apache.hive.shims | hive-shims-0.23 | 2.1.1-cdh6.3.2 | |

| org.apache.hive.shims | hive-shims-common | 2.1.1-cdh6.3.2 | |

| org.apache.hive.shims | hive-shims-scheduler | 2.1.1-cdh6.3.2 | |

| Apache Kafka | org.apache.kafka | connect-api | 2.2.1-cdh6.3.2 |

| org.apache.kafka | connect-basic-auth-extension | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | connect-file | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | connect-json | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | connect-runtime | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | connect-transforms | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | kafka-clients | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | kafka-examples | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | kafka-log4j-appender | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | kafka-streams | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | kafka-streams-examples | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | kafka-streams-scala_2.11 | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | kafka-streams-scala_2.12 | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | kafka-streams-test-utils | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | kafka-tools | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | kafka_2.11 | 2.2.1-cdh6.3.2 | |

| org.apache.kafka | kafka_2.12 | 2.2.1-cdh6.3.2 | |

| Kite SDK | org.kitesdk | kite-data-core | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-data-crunch | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-data-hbase | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-data-hive | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-data-mapreduce | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-data-oozie | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-data-s3 | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-data-spark | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-hadoop-compatibility | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-maven-plugin | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-minicluster | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-avro | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-core | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-hadoop-core | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-hadoop-parquet-avro | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-hadoop-rcfile | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-hadoop-sequencefile | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-json | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-maxmind | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-metrics-scalable | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-metrics-servlets | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-protobuf | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-saxon | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-solr-cell | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-solr-core | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-tika-core | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-tika-decompress | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-twitter | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-morphlines-useragent | 1.0.0-cdh6.3.2 |

| | org.kitesdk | kite-tools | 1.0.0-cdh6.3.2 |

| Apache Kudu | org.apache.kudu | kudu-backup-tools | 1.10.0-cdh6.3.2 |

| | org.apache.kudu | kudu-backup2_2.11 | 1.10.0-cdh6.3.2 |

| | org.apache.kudu | kudu-client | 1.10.0-cdh6.3.2 |

| | org.apache.kudu | kudu-client-tools | 1.10.0-cdh6.3.2 |

| | org.apache.kudu | kudu-flume-sink | 1.10.0-cdh6.3.2 |

| | org.apache.kudu | kudu-hive | 1.10.0-cdh6.3.2 |

| | org.apache.kudu | kudu-mapreduce | 1.10.0-cdh6.3.2 |

| | org.apache.kudu | kudu-spark2-tools_2.11 | 1.10.0-cdh6.3.2 |

| | org.apache.kudu | kudu-spark2_2.11 | 1.10.0-cdh6.3.2 |

| | org.apache.kudu | kudu-test-utils | 1.10.0-cdh6.3.2 |

| Apache Oozie | org.apache.oozie | oozie-client | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-core | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-examples | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-fluent-job-api | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-fluent-job-client | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-server | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-sharelib-distcp | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-sharelib-git | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-sharelib-hcatalog | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-sharelib-hive | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-sharelib-hive2 | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-sharelib-oozie | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-sharelib-pig | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-sharelib-spark | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-sharelib-sqoop | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-sharelib-streaming | 5.1.0-cdh6.3.2 |

| | org.apache.oozie | oozie-tools | 5.1.0-cdh6.3.2 |

| | org.apache.oozie.test | oozie-mini | 5.1.0-cdh6.3.2 |

| Apache Pig | org.apache.pig | pig | 0.17.0-cdh6.3.2 |

| | org.apache.pig | piggybank | 0.17.0-cdh6.3.2 |

| | org.apache.pig | pigsmoke | 0.17.0-cdh6.3.2 |

| | org.apache.pig | pigunit | 0.17.0-cdh6.3.2 |

| Cloudera Search | com.cloudera.search | search-crunch | 1.0.0-cdh6.3.2 |

| | com.cloudera.search | search-mr | 1.0.0-cdh6.3.2 |

| Apache Sentry | com.cloudera.cdh | solr-upgrade | 1.0.0-cdh6.3.2 |

| | org.apache.sentry | sentry-binding-hbase-indexer | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-binding-hive | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-binding-hive-common | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-binding-hive-conf | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-binding-hive-follower | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-binding-kafka | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-binding-solr | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-core-common | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-core-model-db | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-core-model-indexer | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-core-model-kafka | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-core-model-solr | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-dist | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-hdfs-common | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-hdfs-dist | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-hdfs-namenode-plugin | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-hdfs-service | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-policy-common | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-policy-engine | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-provider-cache | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-provider-common | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-provider-db | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-provider-file | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-service-api | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-service-client | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-service-providers | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-service-server | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-service-web | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-shaded | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-shaded-miscellaneous | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-spi | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-tests-hive | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-tests-kafka | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-tests-solr | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | sentry-tools | 2.1.0-cdh6.3.2 |

| | org.apache.sentry | solr-sentry-handlers | 2.1.0-cdh6.3.2 |

| Apache Solr | org.apache.lucene | lucene-analyzers-common | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-analyzers-icu | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-analyzers-kuromoji | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-analyzers-morfologik | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-analyzers-nori | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-analyzers-opennlp | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-analyzers-phonetic | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-analyzers-smartcn | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-analyzers-stempel | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-analyzers-uima | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-backward-codecs | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-benchmark | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-classification | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-codecs | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-core | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-demo | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-expressions | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-facet | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-grouping | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-highlighter | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-join | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-memory | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-misc | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-queries | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-queryparser | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-replicator | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-sandbox | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-spatial | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-spatial-extras | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-spatial3d | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-suggest | 7.4.0-cdh6.3.2 |

| | org.apache.lucene | lucene-test-framework | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-analysis-extras | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-analytics | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-cell | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-clustering | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-core | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-dataimporthandler | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-dataimporthandler-extras | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-jetty-customizations | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-langid | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-ltr | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-prometheus-exporter | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-security-util | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-sentry-audit-logging | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-solrj | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-test-framework | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-uima | 7.4.0-cdh6.3.2 |

| | org.apache.solr | solr-velocity | 7.4.0-cdh6.3.2 |

| Apache Spark | org.apache.spark | spark-avro_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-catalyst_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-core_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-graphx_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-hadoop-cloud_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-hive_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-kubernetes_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-kvstore_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-launcher_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-mllib-local_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-mllib_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-network-common_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-network-shuffle_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-network-yarn_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-repl_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-sketch_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-sql-kafka-0-10_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-sql_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-streaming-flume-assembly_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-streaming-flume-sink_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-streaming-flume_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-streaming-kafka-0-10-assembly_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-streaming-kafka-0-10_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-streaming_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-tags_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-unsafe_2.11 | 2.4.0-cdh6.3.2 |

| | org.apache.spark | spark-yarn_2.11 | 2.4.0-cdh6.3.2 |

| Apache Sqoop | org.apache.sqoop | sqoop | 1.4.7-cdh6.3.2 |

| Apache ZooKeeper | org.apache.zookeeper | zookeeper | 3.4.5-cdh6.3.2 |
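The coordinates in the table above can be declared directly in a Maven build so that application code compiles against the same component versions the CDH 6.3.2 cluster runs. The fragment below is a minimal sketch, assuming a Maven project; the repository URL is the one given in Cloudera's CDH 6 documentation (verify that it is still reachable for your account before relying on it), and the repository id `cloudera` is an arbitrary name chosen for illustration. The `_2.11` / `_2.12` suffix in artifact ids such as `spark-core_2.11` is the Scala binary version the artifact was built against, so all Scala-dependent artifacts in one project should use the same suffix.

```xml
<!-- Fragment for the <project> element of a pom.xml (sketch, not a complete POM) -->
<repositories>
  <repository>
    <!-- "cloudera" is a hypothetical id; the URL is assumed from Cloudera's CDH 6 docs -->
    <id>cloudera</id>
    <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
  </repository>
</repositories>

<dependencies>
  <!-- Spark 2.4.0 as shipped in CDH 6.3.2, built for Scala 2.11 -->
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_2.11</artifactId>
    <version>2.4.0-cdh6.3.2</version>
    <!-- the cluster provides Spark jars at runtime, so do not bundle them -->
    <scope>provided</scope>
  </dependency>
  <!-- Kafka Streams client library from the same CDH release -->
  <dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-streams</artifactId>
    <version>2.2.1-cdh6.3.2</version>
  </dependency>
</dependencies>
```

The `provided` scope on Spark keeps the application jar small and avoids version clashes with the jars already deployed by Cloudera Manager; whether a client library such as Kafka Streams should be bundled instead depends on how the job is deployed.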

