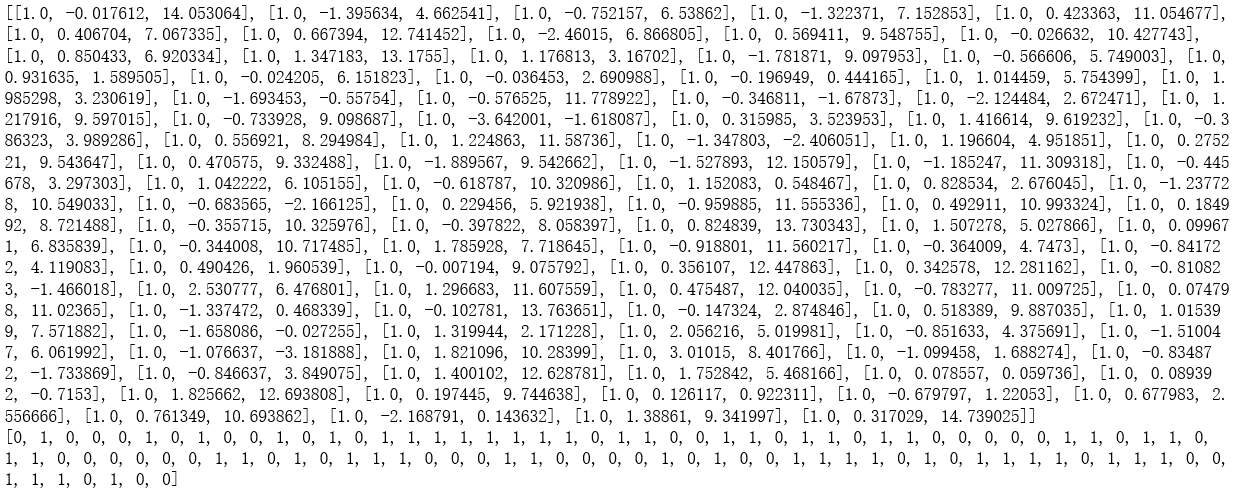

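    import numpy as np

    def loadDataSet():
        """Read testSet.txt into a feature list (with a leading bias term) and a label list."""
        dataMat = []
        labelMat = []
        # Adjust this path to wherever testSet.txt lives on your machine.
        with open('D:\\LearningResource\\machinelearninginaction\\Ch05\\testSet.txt') as fr:
            for line in fr:
                lineArr = line.strip().split()
                # Prepend 1.0 so that weights[0] acts as the intercept.
                dataMat.append([1.0, float(lineArr[0]), float(lineArr[1])])
                labelMat.append(int(lineArr[2]))
        return dataMat, labelMat

    dataMat, labelMat = loadDataSet()
    print(dataMat)
    print(labelMat)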
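The hand-written parsing in `loadDataSet` can also be expressed with `np.loadtxt`. The sketch below is only an illustration: the helper name `load_with_numpy` and the three sample rows are made up, and it assumes each row holds two whitespace-separated features followed by an integer label.

```python
import io
import numpy as np

def load_with_numpy(source):
    """Return (features with a leading bias column, integer labels)."""
    raw = np.loadtxt(source, ndmin=2)                      # shape (m, 3)
    features = np.column_stack([np.ones(len(raw)), raw[:, :2]])
    labels = raw[:, 2].astype(int)
    return features, labels

# Made-up rows in the assumed "x1 x2 label" layout, not taken from testSet.txt.
sample = io.StringIO("0.5 1.5 0\n-1.0 2.0 1\n2.0 -0.5 1\n")
X, y = load_with_numpy(sample)
print(X.shape, y.tolist())
```

`np.loadtxt` also accepts a file path, so the same helper could be pointed at the real data file. Unlike `loadDataSet`, it returns NumPy arrays rather than Python lists.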

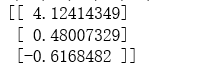

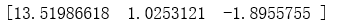

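    def sigmoid(z):
        """Logistic function; works element-wise on arrays and matrices."""
        return 1.0 / (1 + np.exp(-z))

    def gradAscent(dataMatIn, classLabels):
        """Batch gradient ascent on the logistic-regression log-likelihood."""
        # np.asmatrix replaces np.mat, which was removed in NumPy 2.0.
        dataMatrix = np.asmatrix(dataMatIn)                # m x n
        labelMat = np.asmatrix(classLabels).transpose()    # m x 1
        m, n = np.shape(dataMatrix)
        alpha = 0.001      # step size
        maxCycles = 500    # number of passes over the full data set
        weights = np.ones((n, 1))
        for k in range(maxCycles):
            h = sigmoid(dataMatrix * weights)    # predicted probabilities, m x 1
            error = labelMat - h
            weights = weights + alpha * dataMatrix.transpose() * error
        return weights

    weights = gradAscent(dataMat, labelMat)
    print(weights)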
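In matrix form, each pass of the `gradAscent` loop applies the update below. The symbols are shorthand introduced here for illustration: $X$ stands for `dataMatrix`, $y$ for `labelMat`, $w$ for `weights`, $\alpha$ for `alpha`, and $\sigma$ for `sigmoid`.

$$
h = \sigma(Xw), \qquad w \leftarrow w + \alpha\, X^{\top}(y - h)
$$

The term $X^{\top}(y - h)$ is the gradient of the log-likelihood $\ell(w) = \sum_i \left[ y_i \log h_i + (1 - y_i)\log(1 - h_i) \right]$, which is why the weights move in the $+$ direction (ascent) rather than the $-$ direction.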

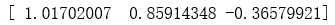

    def stocGradAscent0(dataMatrix, classLabels):
        """Stochastic gradient ascent: a single pass, one sample per update, fixed step size."""
        m, n = np.shape(dataMatrix)
        alpha = 0.01
        weights = np.ones(n)
        for i in range(m):
            h = sigmoid(sum(np.array(dataMatrix[i]) * weights))
            error = classLabels[i] - h
            weights = weights + alpha * error * np.array(dataMatrix[i])
        return weights

    weights = stocGradAscent0(dataMat, labelMat)
    print(weights)

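    def stocGradAscent1(dataMatrix, classLabels, numIter=150):
        """Stochastic gradient ascent with a decaying step size and random sample order."""
        m, n = np.shape(dataMatrix)
        weights = np.ones(n)
        for j in range(numIter):
            dataIndex = list(range(m))    # samples not yet visited in this pass
            for i in range(m):
                # Step size shrinks as training proceeds but never reaches zero.
                alpha = 4 / (1.0 + j + i) + 0.0001
                randIndex = int(np.random.uniform(0, len(dataIndex)))
                # Look up the actual row through dataIndex; using randIndex directly
                # would sample with replacement and favour low-numbered rows.
                sampleIndex = dataIndex[randIndex]
                h = sigmoid(sum(np.array(dataMatrix[sampleIndex]) * weights))
                error = classLabels[sampleIndex] - h
                weights = weights + alpha * error * np.array(dataMatrix[sampleIndex])
                del dataIndex[randIndex]
        return weights

    weights = stocGradAscent1(dataMat, labelMat)
    print(weights)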
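The inner loop of `stocGradAscent1` is meant to draw each training row once per pass, in random order. A minimal sketch of the same idea using a shuffled index array is shown below. The function name `stoc_grad_ascent_shuffled`, the `seed` parameter, and the toy data are assumptions made for illustration, not part of the original code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def stoc_grad_ascent_shuffled(X, y, num_iter=150, seed=0):
    """Stochastic gradient ascent that visits every row once per pass, in random order."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    m, n = X.shape
    rng = np.random.default_rng(seed)
    weights = np.ones(n)
    for j in range(num_iter):
        # permutation(m) yields each index 0..m-1 exactly once.
        for i, idx in enumerate(rng.permutation(m)):
            alpha = 4 / (1.0 + j + i) + 0.0001   # decaying step, as in stocGradAscent1
            error = y[idx] - sigmoid(X[idx] @ weights)
            weights = weights + alpha * error * X[idx]
    return weights

# Made-up, linearly separable toy data: a bias column plus one feature.
X_toy = [[1.0, -2.0], [1.0, -1.0], [1.0, 1.0], [1.0, 2.0]]
y_toy = [0, 0, 1, 1]
w = stoc_grad_ascent_shuffled(X_toy, y_toy)
print(w)
```

Shuffling once per pass avoids the bookkeeping of deleting entries from an index list, and passing a fixed `seed` makes runs repeatable.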

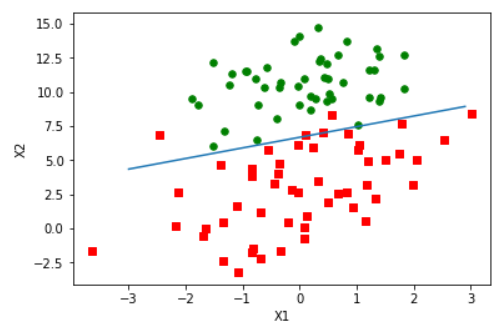

    import matplotlib.pyplot as plt

    def plotBestFit():
        """Scatter both classes and draw the decision boundary found by gradAscent."""
        dataMat, labelMat = loadDataSet()
        weights = gradAscent(dataMat, labelMat)
        # Flatten the n x 1 weight matrix so that w[i] is a plain scalar.
        w = np.asarray(weights).ravel()
        dataArr = np.array(dataMat)
        n = np.shape(dataArr)[0]
        xcord1 = []
        ycord1 = []
        xcord2 = []
        ycord2 = []
        for i in range(n):
            if int(labelMat[i]) == 1:
                xcord1.append(dataArr[i, 1])
                ycord1.append(dataArr[i, 2])
            else:
                xcord2.append(dataArr[i, 1])
                ycord2.append(dataArr[i, 2])
        fig = plt.figure()
        ax = fig.add_subplot(111)
        ax.scatter(xcord1, ycord1, s=30, c='red', marker='s')
        ax.scatter(xcord2, ycord2, s=30, c='green')
        x = np.arange(-3.0, 3.0, 0.1)
        # Decision boundary: w0 + w1*x1 + w2*x2 = 0, solved for x2.
        y = (-w[0] - w[1] * x) / w[2]
        ax.plot(x, y)
        plt.xlabel('X1')
        plt.ylabel('X2')
        plt.show()

    plotBestFit()

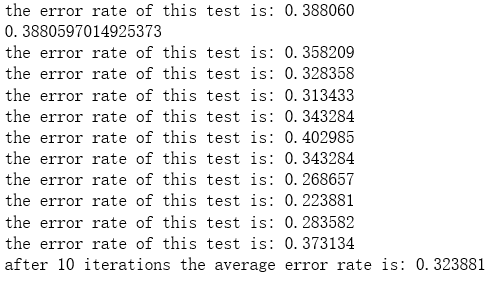

    def classifyVector(z, weights):
        """Return 1.0 if the predicted probability exceeds 0.5, otherwise 0.0."""
        prob = sigmoid(sum(z * weights))
        return 1.0 if prob > 0.5 else 0.0

    def colicTest():
        """Train on the horse colic training file and return the error rate on the test file."""
        trainingSet = []
        trainingLabels = []
        # Each row has 21 tab-separated features followed by the class label.
        with open('D:\\LearningResource\\machinelearninginaction\\Ch05\\horseColicTraining.txt') as frTrain:
            for line in frTrain:
                currLine = line.strip().split('\t')
                lineArr = [float(currLine[i]) for i in range(21)]
                trainingSet.append(lineArr)
                trainingLabels.append(float(currLine[21]))
        trainWeights = stocGradAscent1(np.array(trainingSet), trainingLabels, 1000)
        errorCount = 0
        numTestVec = 0.0
        with open('D:\\LearningResource\\machinelearninginaction\\Ch05\\horseColicTest.txt') as frTest:
            for line in frTest:
                numTestVec += 1.0
                currLine = line.strip().split('\t')
                lineArr = [float(currLine[i]) for i in range(21)]
                # float() first, so labels written as "1" or "1.000000" both parse.
                if int(classifyVector(np.array(lineArr), trainWeights)) != int(float(currLine[21])):
                    errorCount += 1
        errorRate = float(errorCount) / numTestVec
        print("the error rate of this test is: %f" % errorRate)
        return errorRate

    errorRate = colicTest()
    print(errorRate)

    def multiTest():
        """Run colicTest several times and report the average error rate."""
        numTests = 10
        errorSum = 0.0
        for k in range(numTests):
            errorSum += colicTest()
        print("after %d iterations the average error rate is: %f" % (numTests, errorSum / float(numTests)))

    multiTest()
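`sigmoid` evaluates `np.exp(-z)`, which overflows to `inf` and emits a NumPy `RuntimeWarning` once `-z` exceeds roughly 709. The returned value is still 0.0, but unscaled features may trigger the warning repeatedly during training. One way to avoid it, sketched here under the hypothetical name `stable_sigmoid`, is to choose the algebraically equivalent form that only ever exponentiates a non-positive number.

```python
import warnings
import numpy as np

def stable_sigmoid(z):
    """Logistic function that never calls exp() on a positive argument."""
    z = np.atleast_1d(np.asarray(z, dtype=float))
    out = np.empty_like(z)
    pos = z >= 0
    # For z >= 0: 1 / (1 + exp(-z)), where exp(-z) <= 1.
    out[pos] = 1.0 / (1.0 + np.exp(-z[pos]))
    # For z < 0: exp(z) / (1 + exp(z)), where exp(z) < 1.
    exp_z = np.exp(z[~pos])
    out[~pos] = exp_z / (1.0 + exp_z)
    return out

with warnings.catch_warnings():
    warnings.simplefilter("error")   # any RuntimeWarning would raise here
    probs = stable_sigmoid([-1000.0, 0.0, 1000.0])
print(probs)
```

Note that this sketch always returns a 1-D array, even for a scalar input, so it is not a drop-in replacement wherever a plain float is expected.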