ceph

*/5 * * * * /usr/sbin/ntpdate time.aliyun.com > /dev/null 2>&1 && hwclock -w > /dev/null 2>&1

apt install -y apt-transport-https ca-certificates curl software-properties-common

Import the release key

wget -q -O- 'https://download.ceph.com/keys/release.asc' | sudo apt-key add -

Add the repository (on every host)

echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic main" >> /etc/apt/sources.list

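Create the deployment user on the Ceph admin node:

cephadmin  # regular (non-root) user used to deploy and manage the Ceph cluster

ceph  # user created automatically when Ceph is installed; it runs ceph-mon, ceph-mgr and the other daemons

cephadm  # a deployment tool available since Ceph v15

su - cephadmin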

ssh-keygen

ssh-copy-id cephadmin@10.4.7.133

ssh-copy-id cephadmin@10.4.7.134

ssh-copy-id cephadmin@10.4.7.135

ssh-copy-id cephadmin@10.4.7.136

ssh-copy-id cephadmin@10.4.7.137

ssh-copy-id cephadmin@10.4.7.138

ssh-copy-id cephadmin@10.4.7.139

ssh-copy-id cephadmin@10.4.7.140

ssh-copy-id cephadmin@10.4.7.141

ssh-copy-id cephadmin@10.4.7.142
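The ten ssh-copy-id calls above differ only in the last octet, so they can be generated by a loop. This is a minimal sketch, assuming the hosts are exactly 10.4.7.133 through 10.4.7.142 as listed. The function name `copy_key_cmds` is made up for this example, and it only prints the commands so they can be reviewed before being run.

```shell
#!/bin/sh
# Print one ssh-copy-id command per cluster host (10.4.7.133-142).
# copy_key_cmds is a hypothetical helper name, not part of any Ceph tool.
copy_key_cmds() {
    i=133
    while [ "$i" -le 142 ]; do
        echo "ssh-copy-id cephadmin@10.4.7.$i"
        i=$((i + 1))
    done
}

copy_key_cmds            # review the output first
# copy_key_cmds | sh     # then uncomment to actually run the commands
```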

sudo vim /etc/hosts

10.4.7.133 ceph-deploy.example.local ceph-deploy

10.4.7.134 ceph-mon1.example.local ceph-mon1

10.4.7.135 ceph-mon2.example.local ceph-mon2

10.4.7.136 ceph-mon3.example.local ceph-mon3

10.4.7.137 ceph-mgr1.example.local ceph-mgr1

10.4.7.138 ceph-mgr2.example.local ceph-mgr2

10.4.7.139 ceph-node1.example.local ceph-node1

10.4.7.140 ceph-node2.example.local ceph-node2

10.4.7.141 ceph-node3.example.local ceph-node3

10.4.7.142 ceph-node4.example.local ceph-node4
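The same entries are needed in /etc/hosts on every node, so appending them idempotently avoids duplicate lines when the step is repeated. The following is a sketch under assumptions: `add_host` is a hypothetical helper, and the target file is a parameter so the logic can be tried on a scratch file before touching /etc/hosts.

```shell
#!/bin/sh
# add_host FILE IP FQDN SHORTNAME
# Append "IP FQDN SHORTNAME" to FILE unless that exact line is already there.
add_host() {
    line="$2 $3 $4"
    grep -qxF "$line" "$1" 2>/dev/null || echo "$line" >> "$1"
}

hosts_file=$(mktemp)     # scratch file; point this at /etc/hosts (as root) for real use
add_host "$hosts_file" 10.4.7.133 ceph-deploy.example.local ceph-deploy
add_host "$hosts_file" 10.4.7.134 ceph-mon1.example.local ceph-mon1
add_host "$hosts_file" 10.4.7.134 ceph-mon1.example.local ceph-mon1   # no-op: line already present
cat "$hosts_file"
```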

Workaround for installing pip2

https://blog.csdn.net/m0_68744965/article/details/128391261

add-apt-repository universe

apt update

sudo apt install python2

root@ceph-deploy:/etc/apt# curl https://bootstrap.pypa.io/pip/2.7/get-pip.py --output get-pip.py

With the repository enabled, run the script with Python 2 under sudo to install pip for Python 2:

root@ceph-deploy:/etc/apt# sudo python2 get-pip.py

root@ceph-deploy:/etc/apt# pip2 install ceph-deploy

root@ceph-deploy:/etc/apt# ceph-deploy --version

2.0.1

# su - cephadmin

$ mkdir ceph-cluster

$ cd ceph-cluster/

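$ ceph-deploy -h   # check that ceph-deploy runs

Cluster initialization:

fsid: the cluster ID. It can be specified explicitly; if omitted, one is generated automatically.

Networks

[--cluster-network CLUSTER_NETWORK] internal network, used only for heartbeats and data replication inside the Ceph cluster

[--public-network PUBLIC_NETWORK]

The mons are the first daemons started in the cluster.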

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy new --cluster-network 192.168.1.0/24 --public-network 10.4.7.0/24 ceph-mon1.example.local ceph-mon2.example.local ceph-mon3.example.local

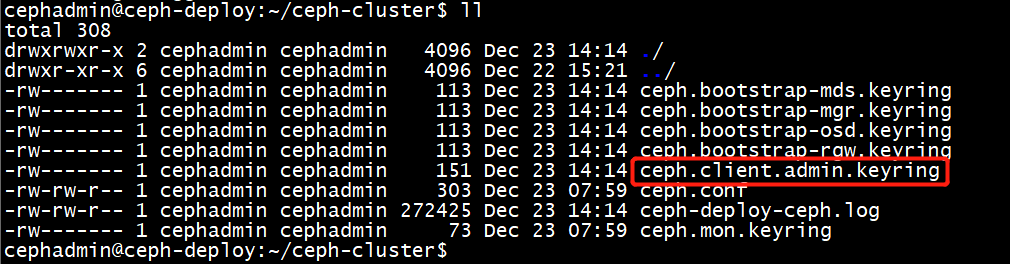

ceph-deploy admin pushes the configuration file and the authentication (keyring) file; the arguments are the hosts to push to.

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy admin ceph-node1 ceph-node2 ceph-node3 ceph-node4

Push to the local host as well

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy admin ceph-deploy

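The keyring file's permissions are too restrictive for cephadmin.

Grant the user read and write access. Run this on whichever node you will run ceph commands from.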

cephadmin@ceph-deploy:~$ sudo setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

The cephadmin account can now run ceph commands.

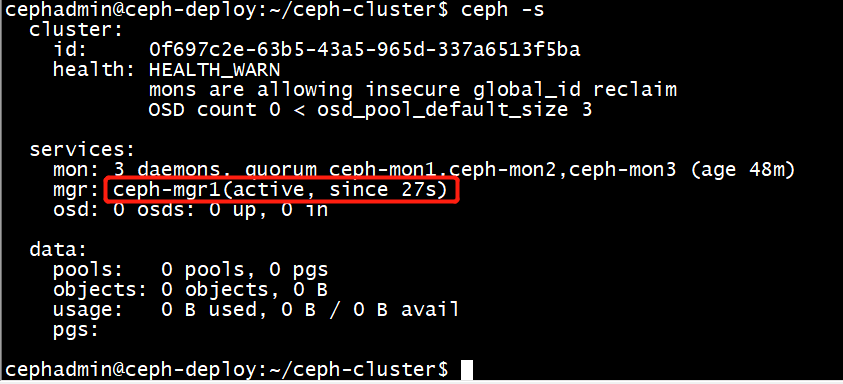

cephadmin@ceph-deploy:~/ceph-cluster$ ceph -s   # show cluster status

root@ceph-node1:~# setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

root@ceph-node2:~# setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

root@ceph-node3:~# setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

root@ceph-node4:~# setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

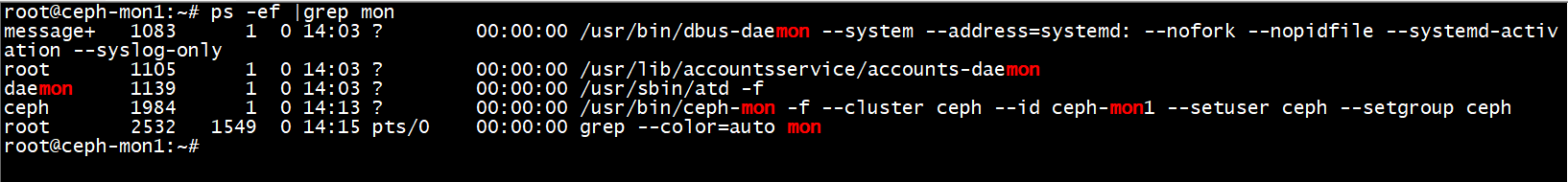

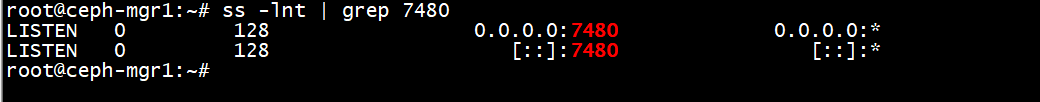

The mgr process is now running.

Runtime configuration (also persists across restarts):

cephadmin@ceph-deploy:~/ceph-cluster$ ceph config set mon auth_allow_insecure_global_id_reclaim false

Check the version

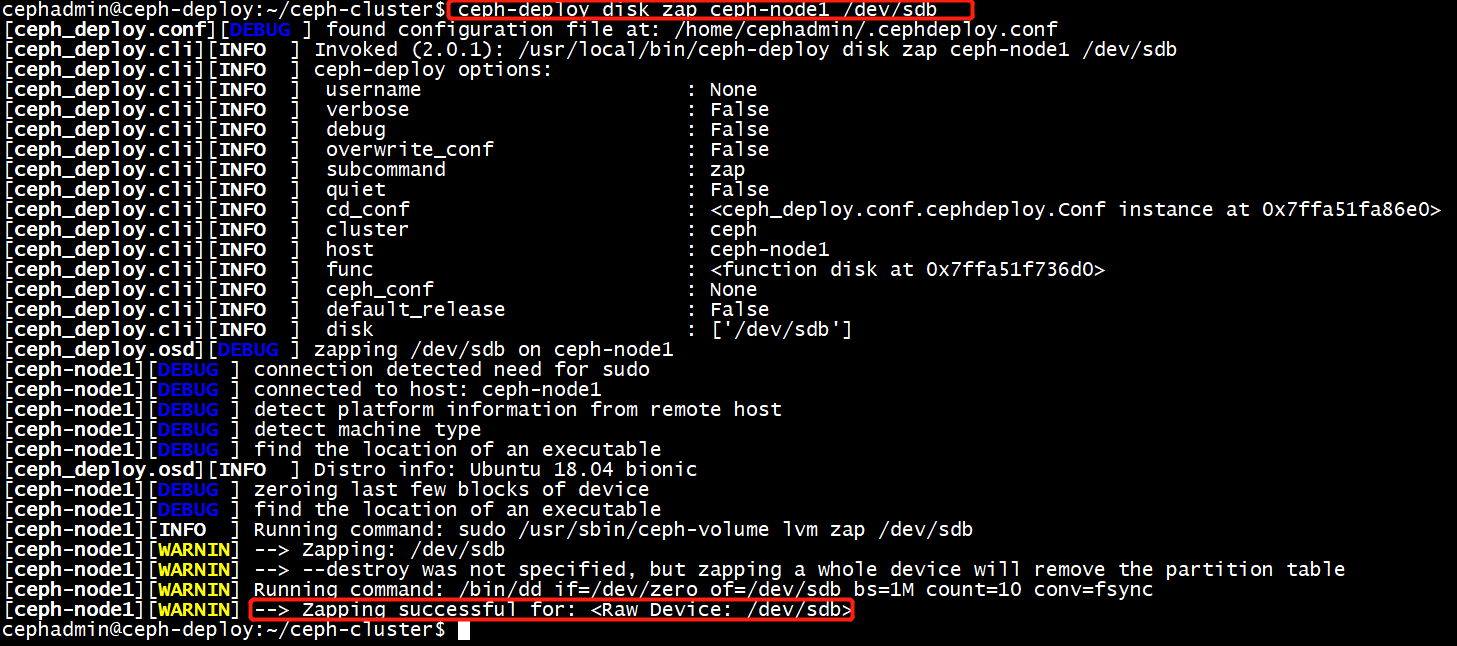

List the disks

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node1

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node2

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node3

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node4

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk zap ceph-node2 /dev/sdb

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk zap ceph-node3 /dev/sdb

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk zap ceph-node4 /dev/sdb

For better performance, the disks can be replaced with SSD or NVMe devices.

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy osd --help

Note the mapping between OSD IDs and disks:

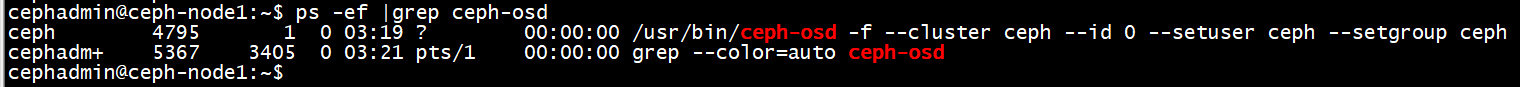

OSD ID 0: ceph-node1:/dev/sdb

ceph-deploy osd create ceph-node2 --data /dev/sdb

ceph-deploy osd create ceph-node3 --data /dev/sdb

ceph-deploy osd create ceph-node4 --data /dev/sdb
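The per-node zap and create commands follow one pattern, so they can be generated for any list of nodes. This is a sketch, assuming every node contributes the same device (/dev/sdb, as in the commands above). `osd_cmds` is an illustrative name, and the function prints the commands instead of running them because zapping destroys the data on the disk.

```shell
#!/bin/sh
# osd_cmds DEVICE NODE...
# Print the ceph-deploy "disk zap" + "osd create" pair for DEVICE on each NODE.
osd_cmds() {
    dev=$1
    shift
    for node in "$@"; do
        echo "ceph-deploy disk zap $node $dev"
        echo "ceph-deploy osd create $node --data $dev"
    done
}

osd_cmds /dev/sdb ceph-node2 ceph-node3 ceph-node4
```

Run the printed lines from the ceph-cluster directory as cephadmin once they have been checked.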

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd out --help

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool create mypool 32 32

pool 'mypool' created

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool ls

device_health_metrics

mypool

cephadmin@ceph-deploy:~/ceph-cluster$ ceph pg ls-by-pool mypool | awk '{print $1,$2,$15}'

Each PG maps to three OSDs.

List the objects in the pool

cephadmin@ceph-deploy:~/ceph-cluster$ rados ls --pool=mypool

msg1

Show where msg1 in mypool is placed

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd map mypool msg1

osdmap e27 pool 'mypool' (2) object 'msg1' -> pg 2.c833d430 (2.10) -> up ([3,2,0], p3) acting ([3,2,0], p3)
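The fields of that output line can be pulled apart with sed when the placement has to be checked in a script. A small sketch that works on the sample line above: `(2.10)` is the PG ID (pool 2), the bracketed list after `acting` is the set of OSDs holding the object, and `p3` marks osd.3 as the primary. The variable names are chosen for this example only.

```shell
#!/bin/sh
# Sample output of "ceph osd map mypool msg1", copied from above.
line="osdmap e27 pool 'mypool' (2) object 'msg1' -> pg 2.c833d430 (2.10) -> up ([3,2,0], p3) acting ([3,2,0], p3)"

# PG ID: the value in parentheses right after "pg <hash>".
pgid=$(printf '%s\n' "$line" | sed -n 's/.*-> pg [^ ]* (\([^)]*\)).*/\1/p')
# Acting set: the comma-separated OSD IDs inside the brackets after "acting".
acting=$(printf '%s\n' "$line" | sed -n 's/.*acting (\[\([^]]*\)], p[0-9]*).*/\1/p')
# Primary OSD: the number after "p" in the acting tuple.
primary=$(printf '%s\n' "$line" | sed -n 's/.*acting (\[[^]]*], p\([0-9]*\)).*/\1/p')

echo "pg=$pgid acting=$acting primary=osd.$primary"
```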

Download the object to a local file

cephadmin@ceph-deploy:~/ceph-cluster$ sudo rados get msg1 --pool=mypool /opt/my.txt

Delete the object

cephadmin@ceph-deploy:~/ceph-cluster$ sudo rados rm msg1 --pool=mypool

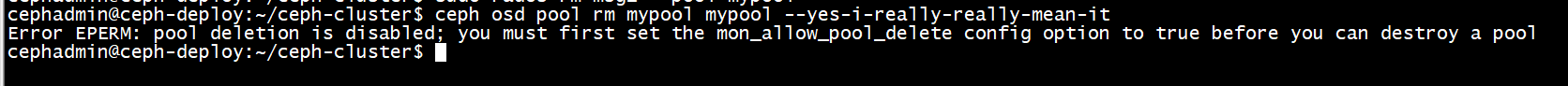

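By default the mons forbid deleting a pool,

so a direct delete fails.

cephadmin@ceph-deploy:~/ceph-cluster$ ceph tell mon.* injectargs --mon-allow-pool-delete=true   # allow deletion

Delete the pool:

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool rm mypool mypool --yes-i-really-really-mean-it

Turn the permission back off afterwards:

cephadmin@ceph-deploy:~/ceph-cluster$ ceph tell mon.* injectargs --mon-allow-pool-delete=false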

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy mgr create ceph-mgr2

Check the status

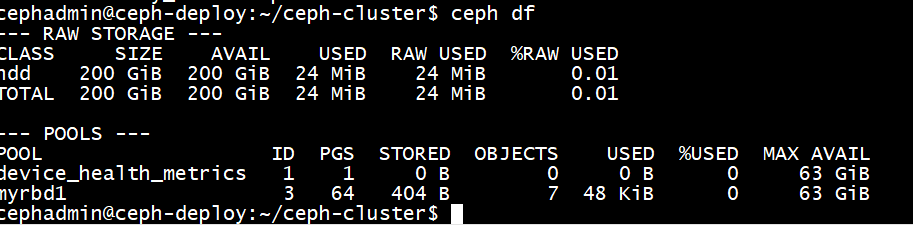

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool create myrbd1 64 64   # 64 PGs, 64 PGPs
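The PG counts used in these notes (32 and 64) are powers of two, which is what Ceph recommends for pg_num. A small sketch of a pre-flight check that could run before `ceph osd pool create`; `is_pow2` is a hypothetical helper written for this example, not a Ceph command.

```shell
#!/bin/sh
# is_pow2 N: succeed (exit status 0) if N is a positive power of two.
is_pow2() {
    n=$1
    [ "$n" -ge 1 ] || return 1
    # Strip factors of two; a power of two ends at exactly 1.
    while [ $((n % 2)) -eq 0 ]; do
        n=$((n / 2))
    done
    [ "$n" -eq 1 ]
}

for pg in 32 64 100; do
    if is_pow2 "$pg"; then
        echo "$pg: ok"
    else
        echo "$pg: not a power of two"
    fi
done
```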

Enable the rbd application on the myrbd1 pool

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool application enable myrbd1 rbd

Initialize the pool

cephadmin@ceph-deploy:~/ceph-cluster$ rbd pool init -p myrbd1

A client does not mount the pool itself; it mounts an image that lives inside the pool.

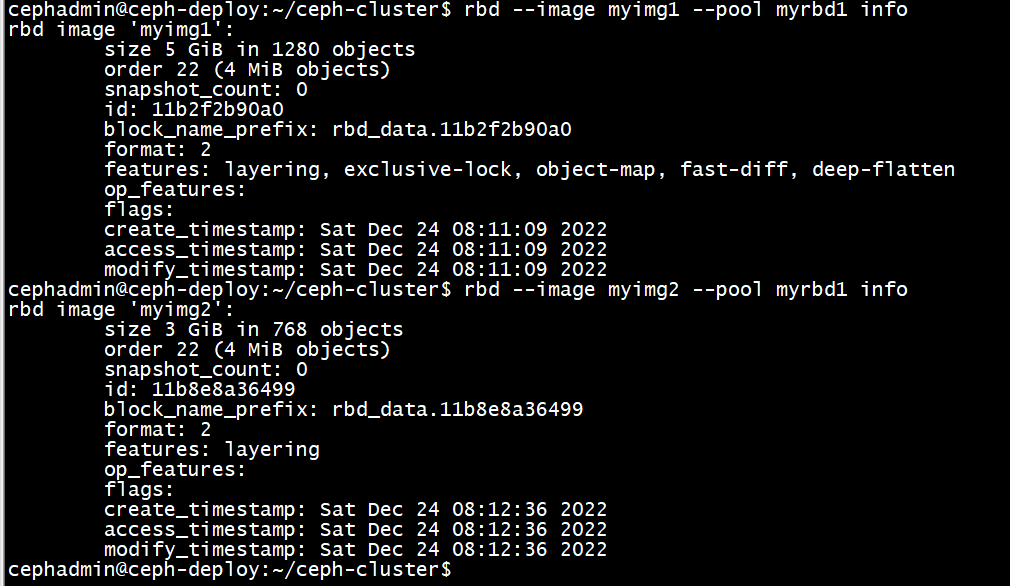

Create images; the size and the pool must be specified

cephadmin@ceph-deploy:~/ceph-cluster$ rbd create myimg1 --size 5G --pool myrbd1

cephadmin@ceph-deploy:~/ceph-cluster$ rbd create myimg2 --size 3G --pool myrbd1 --image-format 2 --image-feature layering

cephadmin@ceph-deploy:~/ceph-cluster$ rbd ls --pool myrbd1

myimg1

myimg2

Mounting Ceph block storage on CentOS

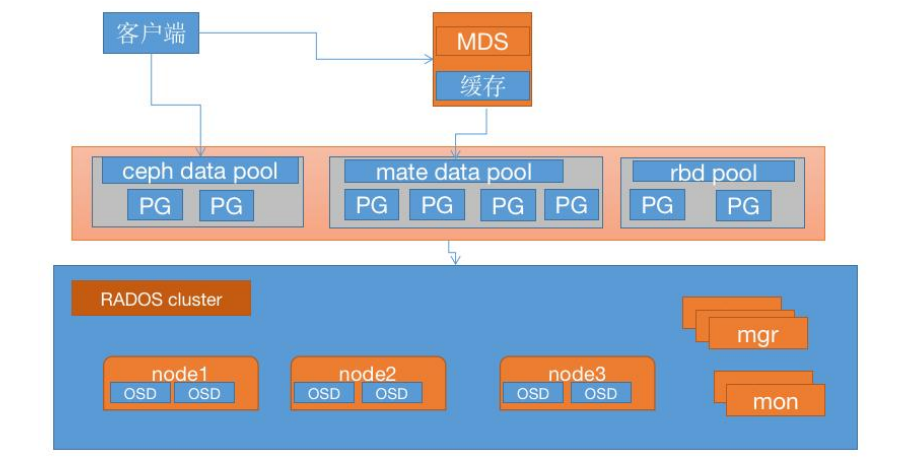

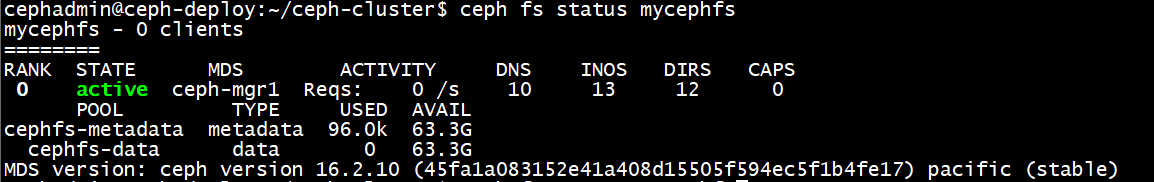

cephadmin@ceph-deploy:~/ceph-cluster$ ceph mds stat

1 up:standby

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool create cephfs-metadata 32 32

pool 'cephfs-metadata' created

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool create cephfs-data 64 64

pool 'cephfs-data' created

cephadmin@ceph-deploy:~/ceph-cluster$ ceph fs new mycephfs cephfs-metadata cephfs-data

new fs with metadata pool 8 and data pool 9

cephadmin@ceph-deploy:~/ceph-cluster$ ceph fs ls

name: mycephfs, metadata pool: cephfs-metadata, data pools: [cephfs-data ]

cephadmin@ceph-deploy:~/ceph-cluster$ ceph mds stat

mycephfs:1 {0=ceph-mgr1=up:active}

root@ceph-client:~# mount -t ceph 10.4.7.134:6789:/ /data -o name=admin,secret=AQCwt6VjoyRqChAAvoUnFFi4oGQ8y6aIZU9FpQ==

The IP here is a mon address. You can list all three mons or just one.
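Listing all three mons lets the client still mount when one of them is down. A sketch of how the source string could be built, assuming the mons are 10.4.7.134 to 10.4.7.136 (ceph-mon1 to ceph-mon3 in the hosts table) on port 6789 as in the command above. `cephfs_source` is an illustrative helper. The `secretfile=` option of mount.ceph reads the key from a file instead of exposing it on the command line; the path /etc/ceph/admin.secret is an assumption.

```shell
#!/bin/sh
# cephfs_source PORT MON_IP...
# Join monitor addresses into the "ip:port,ip:port,...:/" form used by mount -t ceph.
cephfs_source() {
    port=$1
    shift
    src=""
    for ip in "$@"; do
        src="${src:+$src,}$ip:$port"
    done
    echo "$src:/"
}

src=$(cephfs_source 6789 10.4.7.134 10.4.7.135 10.4.7.136)
# Printed rather than executed; /etc/ceph/admin.secret is a hypothetical file holding only the key.
echo "mount -t ceph $src /data -o name=admin,secretfile=/etc/ceph/admin.secret"
```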

CephFS serves as shared storage: clients that need it mount it this way.
