Four Kinds of Flink Sinks

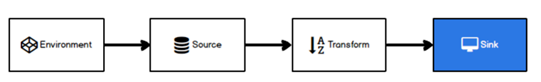

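"Sink" literally means to sink down. In Flink, a Sink can be understood as storing data; more broadly, it is the output operation that sends processed data to a designated storage system.

The print method we have been using all along is in fact a kind of Sink.

Flink ships with some built-in Sinks; any other Sink has to be defined by the user.

The Flink version used for these tests is 1.12.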

KafkaSink

1) Add the Kafka dependency

2) Start the Kafka cluster

Link to a script that starts the whole Kafka cluster:

https://www.cnblogs.com/traveller-hzq/p/14487977.html

3) Example code for sinking to Kafka

4) Enter data with the command nc -lk 9999

5) Start a consumer on Linux and check whether the data arrives

bin/kafka-console-consumer.sh --bootstrap-server hadoop102:9092 --topic topic_sensor

RedisSink

1) Add the Redis connector dependency

2) Start the Redis server

./redis-server /etc/redis/6379.conf

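3) Example code for sinking to Redis

Check in Redis whether the data arrived

Note:

Five records were sent, but Redis holds only 2. The reason is that the hash fields repeat, so each later write overwrites the earlier value stored under the same field.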
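The overwrite behaviour in the note can be reproduced without Redis. The following is a minimal plain-Java model of hash-write semantics, not the Flink Redis connector; the key name `sensor`, the sensor ids, and the five readings are hypothetical values chosen only for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Plain-Java model of Redis hash writes; no Redis or Flink is involved.
class RedisHashOverwriteSketch {
    // Outer map: Redis key -> hash. Inner map: field -> value.
    private final Map<String, Map<String, String>> store = new LinkedHashMap<>();

    // Behaves like HSET: writing an existing field replaces its value.
    void hset(String key, String field, String value) {
        store.computeIfAbsent(key, k -> new LinkedHashMap<>()).put(field, value);
    }

    // Behaves like HLEN: number of fields in the hash, 0 if the key is absent.
    int hlen(String key) {
        Map<String, String> hash = store.get(key);
        return hash == null ? 0 : hash.size();
    }

    // Behaves like HGET: value of a field, or null if absent.
    String hget(String key, String field) {
        Map<String, String> hash = store.get(key);
        return hash == null ? null : hash.get(field);
    }

    // Hypothetical input: five readings from two sensors,
    // with the sensor id used as the hash field.
    static RedisHashOverwriteSketch demo() {
        RedisHashOverwriteSketch redis = new RedisHashOverwriteSketch();
        String[][] readings = {
            {"sensor_1", "10"}, {"sensor_1", "20"}, {"sensor_2", "30"},
            {"sensor_1", "40"}, {"sensor_2", "50"}
        };
        for (String[] r : readings) {
            redis.hset("sensor", r[0], r[1]);
        }
        return redis;
    }

    public static void main(String[] args) {
        RedisHashOverwriteSketch redis = demo();
        System.out.println(redis.hlen("sensor"));             // 2 fields survive
        System.out.println(redis.hget("sensor", "sensor_1")); // last value written: 40
    }
}
```

If every record needs to be kept, one option is to make the field unique per record, for example by combining the id with a timestamp; whether that suits the job depends on how the data is read back.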

ElasticsearchSink

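1) Add the ES dependency

2) Start the ES cluster

3) Example code for sinking to ES

Check in Elasticsearch whether the data arrived

Add the log4j2 dependency:

For an unbounded stream, the bulk buffer needs to be configured, so that records are flushed to ES instead of waiting in the buffer for a flush threshold to be reached.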
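Why an unbounded stream needs this setting can be seen from a small model of a bulk buffer. The sketch below is plain Java and does not call the Elasticsearch connector; the class name and the `maxActions` parameter are assumptions made for illustration. In Flink 1.12 the corresponding knobs live on `ElasticsearchSink.Builder` (`setBulkFlushMaxActions`, `setBulkFlushMaxSizeMb`, `setBulkFlushInterval`).

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of a bulk buffer that flushes once it holds maxActions records.
class BulkBufferSketch {
    private final int maxActions;
    private final List<String> pending = new ArrayList<>(); // buffered, not yet visible
    private final List<String> written = new ArrayList<>(); // stands in for the ES index

    BulkBufferSketch(int maxActions) {
        if (maxActions < 1) {
            throw new IllegalArgumentException("maxActions must be >= 1");
        }
        this.maxActions = maxActions;
    }

    // Buffer one record and flush when the threshold is reached.
    void add(String doc) {
        pending.add(doc);
        if (pending.size() >= maxActions) {
            flush();
        }
    }

    // Move everything buffered so far to the "index".
    // A bounded job triggers this when the sink closes; an unbounded job never closes.
    void flush() {
        written.addAll(pending);
        pending.clear();
    }

    int writtenCount() { return written.size(); }

    int pendingCount() { return pending.size(); }

    public static void main(String[] args) {
        BulkBufferSketch buffer = new BulkBufferSketch(3);
        for (int i = 0; i < 4; i++) {
            buffer.add("doc-" + i);
        }
        // The fourth record stays buffered until the threshold is hit again.
        System.out.println(buffer.writtenCount() + " written, "
                + buffer.pendingCount() + " pending");
    }
}
```

With a threshold of 1 every record is written as soon as it arrives, which is convenient when testing with nc; a larger threshold trades latency for fewer bulk requests.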

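Custom Sink

What if Flink does not provide a ready-made connector and we want to store the data in our own storage system?

Let's write a custom Sink to MySQL.

1) Create the database and table in MySQL

2) Add the MySQL driver dependency

3) Example code for a custom Sink that writes to MySQL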
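A custom Sink in Flink is usually written by extending `RichSinkFunction`, which has `open`, `invoke` and `close` methods: the connection is created once in `open`, each record is written in `invoke`, and the connection is released in `close`. The sketch below models only that lifecycle in plain Java; the `RichSink` interface, the in-memory "table" and the record values are hypothetical stand-ins, and no MySQL or Flink API is called.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class SinkLifecycleSketch {
    // Hypothetical stand-in for the lifecycle of a Flink rich sink function.
    interface RichSink<T> {
        void open();          // acquire resources once, e.g. a JDBC connection
        void invoke(T value); // called once per record
        void close();         // release resources once
    }

    // In-memory stand-in for a MySQL table.
    static class InMemoryTableSink implements RichSink<String> {
        final List<String> rows = new ArrayList<>();
        int connectionsOpened = 0;
        boolean connected = false;

        @Override
        public void open() {
            connectionsOpened++;
            connected = true;
        }

        @Override
        public void invoke(String value) {
            if (!connected) {
                throw new IllegalStateException("invoke called before open");
            }
            rows.add(value); // a real sink would execute an INSERT here
        }

        @Override
        public void close() {
            connected = false;
        }
    }

    // Drives a sink the way a runtime would: open, invoke per record, close.
    static <T> void run(Iterable<T> records, RichSink<T> sink) {
        sink.open();
        try {
            for (T record : records) {
                sink.invoke(record);
            }
        } finally {
            sink.close();
        }
    }

    public static void main(String[] args) {
        InMemoryTableSink sink = new InMemoryTableSink();
        run(Arrays.asList("r1", "r2", "r3"), sink);
        System.out.println(sink.rows.size() + " rows, "
                + sink.connectionsOpened + " connection opened");
    }
}
```

Opening the connection in `open` rather than in `invoke` avoids creating one connection per record.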

Use the nc command to enter data for testing

Author: Later

Article link: https://www.cnblogs.com/traveller-hzq/p/14488022.html

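Copyright notice: unless otherwise stated, all articles on this blog are licensed under the BY-NC-SA license. Please credit the source when reposting!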
