Hudi Study Notes: Syncing to the Hive Metastore

1. Using Flink SQL

With Flink SQL, syncing a Hudi table to the Hive metastore only requires adding the hive_sync options to the WITH clause of the CREATE TABLE statement, for example:

'hive_sync.enable' = 'true',
'hive_sync.mode' = 'hms',
'hive_sync.metastore.uris' = 'thrift://xxx:9083',
'hive_sync.table' = 'hudi_xxxx_table',
'hive_sync.db' = 'default',
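For context, a complete table definition might look like the sketch below. It only writes a Flink SQL file, which could then be submitted with the SQL client. The column names, the HDFS path, the MERGE_ON_READ table type, and the file name hudi_hive_sync.sql are illustrative assumptions and not part of the original setup; only the hive_sync options come from the fragment above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical output file name; override with SQL_FILE=... if needed.
SQL_FILE="${SQL_FILE:-hudi_hive_sync.sql}"

# Quoted heredoc delimiter so the shell does not expand anything inside the SQL.
cat > "$SQL_FILE" <<'EOF'
-- Columns, path and table type are placeholders for illustration only.
CREATE TABLE hudi_xxxx_table (
  id BIGINT,
  name STRING,
  ts TIMESTAMP(3),
  dt STRING,
  PRIMARY KEY (id) NOT ENFORCED
) PARTITIONED BY (dt) WITH (
  'connector' = 'hudi',
  'path' = 'hdfs:///tmp/hudi/hudi_xxxx_table',
  'table.type' = 'MERGE_ON_READ',
  'hive_sync.enable' = 'true',
  'hive_sync.mode' = 'hms',
  'hive_sync.metastore.uris' = 'thrift://xxx:9083',
  'hive_sync.table' = 'hudi_xxxx_table',
  'hive_sync.db' = 'default'
);
EOF

echo "wrote $SQL_FILE"

# To submit it (assuming FLINK_HOME points at the Flink installation):
#   "$FLINK_HOME/bin/sql-client.sh" -f "$SQL_FILE"
```

Keeping the DDL in a file makes it easy to resubmit the same definition after swapping the bundle jar.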

If the tables cannot be created properly, or only the ro table gets created, an error like the following is reported:

org.apache.hudi.exception.HoodieException: Got runtime exception when hive syncing hudi_xxxx_table
    at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:145) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.sink.StreamWriteOperatorCoordinator.doSyncHive(StreamWriteOperatorCoordinator.java:335) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.sink.utils.NonThrownExecutor.lambda$wrapAction$0(NonThrownExecutor.java:130) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_372]
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_372]
    at java.lang.Thread.run(Thread.java:750) [?:1.8.0_372]
Caused by: org.apache.hudi.hive.HoodieHiveSyncException: Failed to sync partitions for table hudi_xxxx_table_ro
    at org.apache.hudi.hive.HiveSyncTool.syncPartitions(HiveSyncTool.java:341) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:232) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.hive.HiveSyncTool.doSync(HiveSyncTool.java:158) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:142) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    ... 5 more
Caused by: org.apache.hudi.hive.HoodieHiveSyncException: Failed to get all partitions for table default.hudi_xxxx_table_ro
    at org.apache.hudi.hive.HoodieHiveSyncClient.getAllPartitions(HoodieHiveSyncClient.java:180) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.hive.HiveSyncTool.syncPartitions(HiveSyncTool.java:317) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:232) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.hive.HiveSyncTool.doSync(HiveSyncTool.java:158) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:142) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    ... 5 more
Caused by: org.apache.hadoop.hive.metastore.api.NoSuchObjectException: @hive#default.hudi_xxxx_table_ro table not found
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$get_partitions_result$get_partitions_resultStandardScheme.read(ThriftHiveMetastore.java) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$get_partitions_result$get_partitions_resultStandardScheme.read(ThriftHiveMetastore.java) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$get_partitions_result.read(ThriftHiveMetastore.java) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:86) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_get_partitions(ThriftHiveMetastore.java:2958) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.get_partitions(ThriftHiveMetastore.java:2943) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.listPartitions(HiveMetaStoreClient.java:1368) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.listPartitions(HiveMetaStoreClient.java:1362) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at sun.reflect.GeneratedMethodAccessor125.invoke(Unknown Source) ~[?:?]
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_372]
    at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_372]
    at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:212) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at com.sun.proxy.$Proxy92.listPartitions(Unknown Source) ~[?:?]
    at sun.reflect.GeneratedMethodAccessor125.invoke(Unknown Source) ~[?:?]
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_372]
    at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_372]
    at org.apache.hadoop.hive.metastore.HiveMetaStoreClient$SynchronizedHandler.invoke(HiveMetaStoreClient.java:2773) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at com.sun.proxy.$Proxy92.listPartitions(Unknown Source) ~[?:?]
    at org.apache.hudi.hive.HoodieHiveSyncClient.getAllPartitions(HoodieHiveSyncClient.java:175) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.hive.HiveSyncTool.syncPartitions(HiveSyncTool.java:317) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:232) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.hive.HiveSyncTool.doSync(HiveSyncTool.java:158) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    at org.apache.hudi.hive.HiveSyncTool.syncHoodieTable(HiveSyncTool.java:142) ~[blob_p-8126a52f21d07b448344e4277f6fb0837c921987-71810e5577eef736251ddb420010bd50:?]
    ... 5 more

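The cause is that the hudi-flink-bundle jar in use is not the right one. A working jar has to be built by compiling and packaging the Hudi project yourself; see: Flink SQL操作Hudi并同步Hive使用总结 (a summary of using Flink SQL with Hudi and syncing to Hive)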

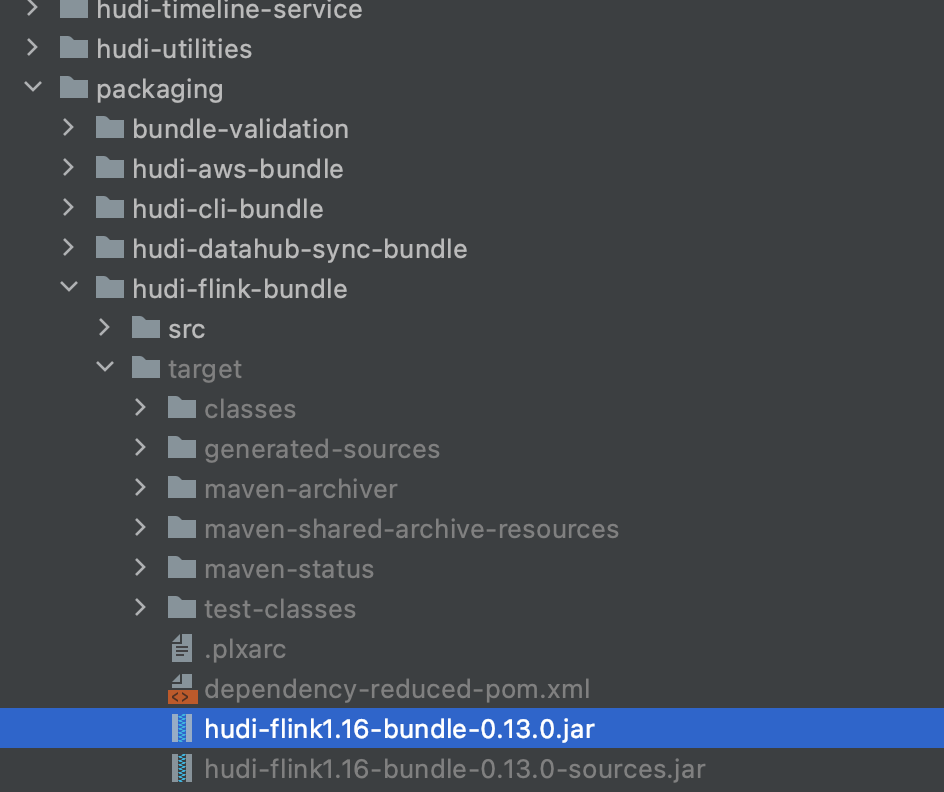

Steps to build the hudi-flink-bundle jar yourself:

1. git clone the Hudi project and switch to the branch of the Hudi version in use, for example 0.13.0

git clone git@github.com:apache/hudi.git
cd hudi
git checkout release-0.13.0

2. Build the hudi-flink-bundle jar. Here the Hive metastore is Hive 2 and the Flink version is 1.16.0

mvn clean package -DskipTests -Drat.skip=true -Pflink-bundle-shade-hive2 -Dflink1.16 -Dscala-2.12 -Dspark3

3. Copy the built jar into the /usr/lib/flink/lib directory on the cluster
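The copy step could be scripted roughly as follows. This is a minimal sketch: the function name install_hudi_bundle is hypothetical, and it assumes the build output lands under packaging/hudi-flink-bundle/target with a file name matching hudi-flink*-bundle-*.jar, which should be checked against the actual build.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Copy the built bundle jar into a Flink lib directory.
# Usage: install_hudi_bundle <hudi_source_dir> <flink_lib_dir>
install_hudi_bundle() {
  local hudi_dir="$1" flink_lib="$2"
  # Assumed location of the build output inside the Hudi checkout.
  local target_dir="$hudi_dir/packaging/hudi-flink-bundle/target"
  local jar

  if [ ! -d "$target_dir" ]; then
    echo "build output directory not found: $target_dir" >&2
    return 1
  fi

  # Pick the first matching bundle jar in sorted order, skipping any sources jar.
  jar="$(find "$target_dir" -maxdepth 1 -type f \
           -name 'hudi-flink*-bundle-*.jar' ! -name '*-sources.jar' | sort | head -n 1)"
  if [ -z "$jar" ]; then
    echo "no bundle jar found under $target_dir" >&2
    return 1
  fi

  cp "$jar" "$flink_lib/"
  echo "installed $(basename "$jar") into $flink_lib"
}

# Example call, matching the directory used in this post:
#   install_hudi_bundle ./hudi /usr/lib/flink/lib
```

After the jar is replaced, the Flink cluster or session generally needs a restart before the new bundle is picked up.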

Reference: https://hudi.apache.org/cn/docs/syncing_metastore/

This article is published only on cnblogs (博客园) and tonglin0325's blog. Author: tonglin0325. When reposting, please cite the original link: https://www.cnblogs.com/tonglin0325/p/5323201.html