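Spark Study Notes: Reading and Writing ScyllaDB

Scylla is compatible with the Cassandra API, so Spark can read from and write to it using the same methods it uses for Cassandra.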

1. Check which Cassandra version ScyllaDB corresponds to

    cqlsh:my_db> SHOW VERSION
    [cqlsh 5.0.1 | Cassandra 3.0.8 | CQL spec 3.3.1 | Native protocol v4]

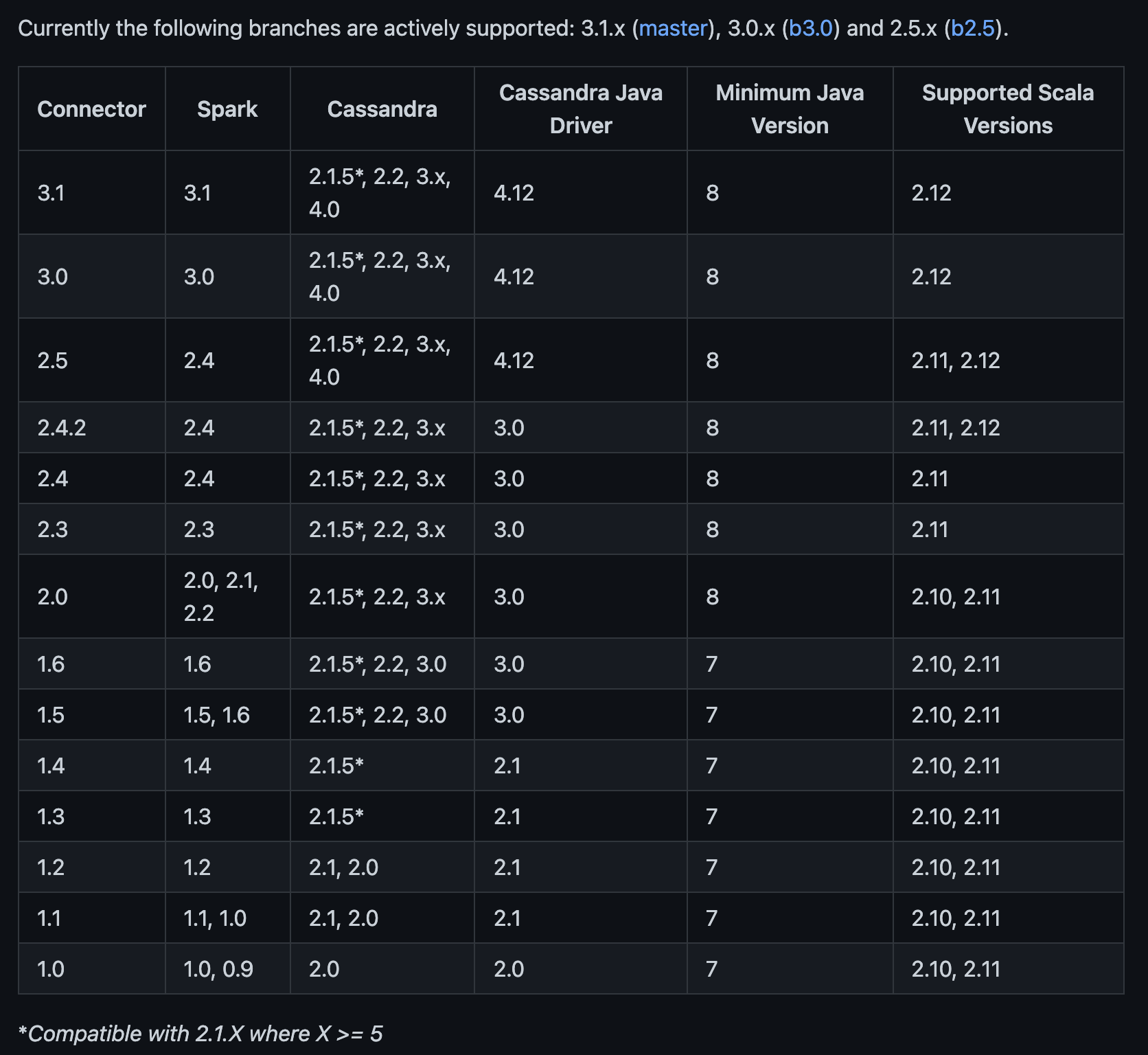

2. Check which connector version matches your Spark and Cassandra versions

Reference: https://github.com/datastax/spark-cassandra-connector
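Once a compatible version has been picked from the table in that repository, the connector is added as a normal dependency. The fragment below is a minimal sketch that assumes Spark 3.1.x on Scala 2.12. The version numbers are illustrative assumptions, not values from this post, so check them against the compatibility table before use.

```scala
// build.sbt (sketch): all version numbers here are assumptions for illustration.
scalaVersion := "2.12.15"

libraryDependencies ++= Seq(
  // Spark itself is usually provided by the cluster at runtime.
  "org.apache.spark"   %% "spark-sql"                 % "3.1.2" % "provided",
  // The connector line should match the Spark line (3.1 with 3.1 here).
  "com.datastax.spark" %% "spark-cassandra-connector" % "3.1.0"
)
```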

3. Writing to ScyllaDB

Writing to ScyllaDB with the Dataset API

    ds2.write
      .mode("append")
      .format("org.apache.spark.sql.cassandra")
      .options(Map("table" -> "my_tb", "keyspace" -> "my_db", "output.consistency.level" -> "ALL", "ttl" -> "8640000"))
      .save()

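Writing to ScyllaDB with the RDD API

    import com.datastax.oss.driver.api.core.ConsistencyLevel
    import com.datastax.spark.connector._
    // WriteConf and TTLOption live in the connector's writer package
    import com.datastax.spark.connector.writer.{TTLOption, WriteConf}

    ds.rdd.saveToCassandra("my_db", "my_tb", writeConf = WriteConf(ttl = TTLOption.constant(8640000), consistencyLevel = ConsistencyLevel.ALL))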

Note that the number and order of the fields must match the ScyllaDB table. You can select the fields explicitly like this:

    import org.apache.spark.sql.functions.col

    // Columns to write, in the order expected by the table
    val columns = Seq[String]("a", "b", "c")
    val colNames = columns.map(name => col(name))
    val colRefs = columns.map(name => toNamedColumnRef(name))
    val df2 = df.select(colNames: _*)
    df2.rdd
      .saveToCassandra(ks, table, SomeColumns(colRefs: _*), writeConf = WriteConf(ttl = TTLOption.constant(8640000), consistencyLevel = ConsistencyLevel.ALL))

That said, the official documentation recommends the DataFrame API over the RDD API:

If you have the option we recommend using DataFrames instead of RDDs

https://github.com/datastax/spark-cassandra-connector/blob/master/doc/4_mapper.md

4. Reading from ScyllaDB

    val df = spark
      .read
      .format("org.apache.spark.sql.cassandra")
      .options(Map("table" -> "words", "keyspace" -> "test"))
      .load()
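The read above assumes the `spark` session already knows where the cluster is. A minimal sketch of that setup follows, using the connector's `spark.cassandra.connection.host` and `spark.cassandra.connection.port` settings. The host address, the app name, and the `ReadScyllaSketch` object are hypothetical and only serve the illustration.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object ReadScyllaSketch {
  // Hypothetical contact point; replace with a reachable ScyllaDB node.
  val scyllaHost = "127.0.0.1"

  // Same keyspace/table names as the read example in this post.
  val readOptions: Map[String, String] =
    Map("keyspace" -> "test", "table" -> "words")

  // Builds a session that points the connector at the ScyllaDB node.
  // The master is expected to come from spark-submit.
  def buildSession(): SparkSession =
    SparkSession.builder()
      .appName("read-scylla-sketch")
      .config("spark.cassandra.connection.host", scyllaHost)
      .config("spark.cassandra.connection.port", "9042") // default CQL port
      .getOrCreate()

  // Loads the table as a DataFrame through the Cassandra data source.
  def readWords(spark: SparkSession): DataFrame =
    spark.read
      .format("org.apache.spark.sql.cassandra")
      .options(readOptions)
      .load()
}
```

In a job submitted with `spark-submit`, this would be used as `ReadScyllaSketch.readWords(ReadScyllaSketch.buildSession()).show()`.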

References: Create/insert data into Azure Cosmos DB Cassandra API from Spark

Cassandra Optimizations for Apache Spark

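5. Cassandra connector parameters

For example, to have Spark update only some of the columns of a Scylla table, set spark.cassandra.output.ignoreNulls to true. Null values are then written as unset, so the corresponding columns keep their existing values instead of being overwritten.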
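A partial update with this setting might look like the sketch below. It assumes the `my_db.my_tb` table used earlier in this post and sets the parameter once on the session. The `PartialUpdateSketch` object and its method names are hypothetical, and the behaviour should be verified against your connector version.

```scala
import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

object PartialUpdateSketch {
  // Target table, reusing the names from the write examples in this post.
  val target: Map[String, String] =
    Map("keyspace" -> "my_db", "table" -> "my_tb")

  // Session-wide setting: nulls in written rows are treated as unset.
  def buildSession(): SparkSession =
    SparkSession.builder()
      .appName("partial-update-sketch")
      .config("spark.cassandra.output.ignoreNulls", "true")
      .getOrCreate()

  // `updates` should carry the primary key columns plus the columns to change;
  // any other column left null is not overwritten in the table.
  def writePartial(updates: DataFrame): Unit =
    updates.write
      .mode(SaveMode.Append)
      .format("org.apache.spark.sql.cassandra")
      .options(target)
      .save()
}
```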

Connector parameters: https://github.com/datastax/spark-cassandra-connector/blob/master/doc/reference.md

Tuning reference: Spark + Cassandra, All You Need to Know: Tips and Optimizations

This article is published only on cnblogs and on tonglin0325's blog. Author: tonglin0325. If you repost it, please cite the original link: https://www.cnblogs.com/tonglin0325/p/15531196.html
