Configuring Hive with MySQL on Hadoop

1. First, download Hive

Download page: choose the package with bin in its name. Otherwise you will have to compile Hive yourself later.

Extract the archive and move it to /usr/local/hive.

Go into the hive directory, then into conf:

cp hive-env.sh.template hive-env.sh

cp hive-default.xml.template hive-site.xml

cp hive-log4j2.properties.template hive-log4j2.properties

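cp hive-exec-log4j.properties.template hive-exec-log4j.properties

(On Hive 2.x, which ships the hive-log4j2 template used above, this template is named hive-exec-log4j2.properties.template. In that case copy it to hive-exec-log4j2.properties instead.)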

Configure hive/conf/hive-env.sh. Uncomment the following three entries and fill in the paths:

HADOOP_HOME=/usr/local/hadoop

export HIVE_CONF_DIR=/usr/local/hive/conf

export HIVE_AUX_JARS_PATH=/usr/local/hive

Configure hive/conf/hive-site.xml:

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!--<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>hive.metastore.local</name>

<value>true</value>

</property> -->

<!-- If MySQL runs on a remote machine, replace localhost with its IP address or hostname -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost/hive?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>datanucleus.readOnlyDatastore</name>

<value>false</value>

</property>

<property>

<name>datanucleus.fixedDatastore</name>

<value>false</value>

</property>

<property>

<name>datanucleus.autoCreateSchema</name>

<value>true</value>

</property>

<property>

<name>datanucleus.autoCreateTables</name>

<value>true</value>

</property>

<property>

<name>datanucleus.autoCreateColumns</name>

<value>true</value>

</property>

</configuration>
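A typo in hive-site.xml usually only shows up when Hive fails to start, so it can help to check the file beforehand. The following is a minimal Python sketch of such a check. The helpers read_hive_site, missing_metastore_keys and jdbc_host are hypothetical and not part of Hive. The sketch lists the properties in the file and reports which of the four javax.jdo.option.* connection settings are missing or empty. It also shows which MySQL host the ConnectionURL points to, which is useful when the metastore database is remote. Properties inside <!-- --> comments, such as the commented-out block at the top of the configuration, are skipped by the XML parser.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# JDBC settings that the MySQL-backed metastore in this guide depends on.
REQUIRED = (
    "javax.jdo.option.ConnectionURL",
    "javax.jdo.option.ConnectionDriverName",
    "javax.jdo.option.ConnectionUserName",
    "javax.jdo.option.ConnectionPassword",
)


def read_hive_site(xml_text):
    """Return {name: value} for every <property> in a hive-site.xml document."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            props[name] = (prop.findtext("value") or "").strip()
    return props


def missing_metastore_keys(props):
    """List the required JDBC properties that are absent or empty."""
    return [key for key in REQUIRED if not props.get(key)]


def jdbc_host(url):
    """Extract the host from a jdbc:mysql://host[:port]/db?... URL."""
    return urlparse(url[len("jdbc:"):]).hostname


if __name__ == "__main__":
    # Trimmed-down sample; pass the real file's text to check your own setup.
    sample = """<configuration>
      <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:mysql://localhost/hive?createDatabaseIfNotExist=true</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionUserName</name>
        <value>root</value>
      </property>
    </configuration>"""
    props = read_hive_site(sample)
    print(jdbc_host(props["javax.jdo.option.ConnectionURL"]))  # localhost
    print(missing_metastore_keys(props))
```

With the sample above, the sketch reports the driver name and password as missing. To check the real file, pass it the file's contents, for example read_hive_site(open("/usr/local/hive/conf/hive-site.xml").read()).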

Configure hive/bin/hive-config.sh by appending the following lines at the end:

export JAVA_HOME=/usr/local/java

export HIVE_HOME=/usr/local/hive

export HADOOP_HOME=/usr/local/hadoop
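Both hive-env.sh and hive-config.sh are ordinary shell scripts, so a line that still starts with # has no effect. As a quick sanity check, here is a small Python sketch that lists the VAR=value assignments that are actually active. The helper active_vars is hypothetical. It is deliberately simplified: it does not expand variables such as ${bin} or strip quotes.

```python
import re

# Matches an active assignment such as "HADOOP_HOME=/usr/local/hadoop"
# or "export HIVE_HOME=/usr/local/hive".
ASSIGN = re.compile(r"^\s*(?:export\s+)?([A-Za-z_][A-Za-z0-9_]*)=(.*)$")


def active_vars(script_text):
    """Return {name: value} for uncommented VAR=value lines in a shell script."""
    found = {}
    for line in script_text.splitlines():
        if line.lstrip().startswith("#"):
            continue  # still commented out, so the shell ignores it
        match = ASSIGN.match(line)
        if match:
            found[match.group(1)] = match.group(2).strip()
    return found


if __name__ == "__main__":
    # Hypothetical hive-env.sh in which one of the three entries was left commented.
    sample = (
        "HADOOP_HOME=/usr/local/hadoop\n"
        "# export HIVE_CONF_DIR=\n"
        "export HIVE_AUX_JARS_PATH=/usr/local/hive\n"
    )
    wanted = ("HADOOP_HOME", "HIVE_CONF_DIR", "HIVE_AUX_JARS_PATH")
    found = active_vars(sample)
    print([name for name in wanted if name not in found])  # ['HIVE_CONF_DIR']
```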

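Note: for Hive to use MySQL, you must copy the MySQL JDBC driver jar into hive/lib. The MySQL driver (Connector/J) can be downloaded from https://www.mysql.com/products/connector/. With Connector/J 8.0 the driver class is com.mysql.cj.jdbc.Driver. The com.mysql.jdbc.Driver name configured above still loads but is deprecated and logs a warning.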
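If the jar is missing, the metastore typically fails at startup with an error saying the driver class cannot be found. A minimal Python sketch to confirm the jar is in place before starting Hive is shown below. find_mysql_driver is a hypothetical helper. It assumes the jar kept its usual mysql-connector name prefix (for example mysql-connector-java-*.jar) and that Hive lives in /usr/local/hive as in this guide.

```python
import glob
import os


def find_mysql_driver(hive_lib="/usr/local/hive/lib"):
    """Return the paths of MySQL Connector/J jars found in hive_lib.

    Assumes the jar name starts with "mysql-connector", as Connector/J
    jars normally do; a renamed jar would not be detected.
    """
    pattern = os.path.join(hive_lib, "mysql-connector*.jar")
    return sorted(glob.glob(pattern))


if __name__ == "__main__":
    jars = find_mysql_driver()
    if jars:
        print("MySQL JDBC driver found:", ", ".join(jars))
    else:
        print("No MySQL JDBC driver in /usr/local/hive/lib; copy the Connector/J jar there.")
```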

Start the MySQL service:

service mysqld start

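Log in with mysql -uroot to check that it works. If it does, change the root password:

mysql> use mysql;

mysql> update user set password=PASSWORD("test") where user='root';

mysql> flush privileges;

mysql> exit;

The new password must match javax.jdo.option.ConnectionPassword in hive-site.xml (root in the configuration above). Otherwise Hive cannot connect to the metastore database. This UPDATE statement only works on MySQL 5.6 and earlier. MySQL 5.7.6 removed the password column from mysql.user, so on newer versions use ALTER USER 'root'@'localhost' IDENTIFIED BY 'test'; instead.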

With the Hadoop services already running, start Hive.

Start the Hive metastore service in the background:

hive --service metastore &

Start HiveServer2 in the background:

hive --service hiveserver2 &
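Both services run in the background, so their startup errors are easy to miss. A minimal Python sketch like the following can check whether they are accepting connections. It assumes the default ports: 9083 for the metastore and 10000 for HiveServer2. These numbers do not appear in the configuration above, so adjust them if hive.metastore.port or hive.server2.thrift.port was changed. HiveServer2 can take a while after launch before it opens its port.

```python
import socket

# Assumed default ports; change these if hive-site.xml overrides them.
SERVICES = {"metastore": 9083, "hiveserver2": 10000}


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


if __name__ == "__main__":
    for name, port in SERVICES.items():
        state = "listening" if port_open("localhost", port) else "not reachable"
        print(f"{name} (port {port}): {state}")
```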

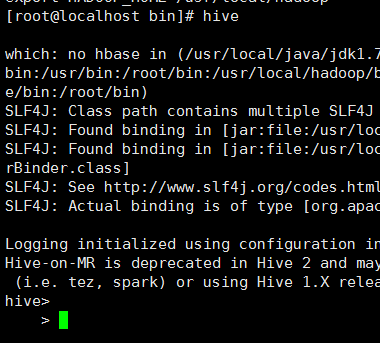

Then start the Hive client:

hive

If you get a hive> prompt, Hive has started successfully.

First, create a table whose fields are separated by a tab character ('\t'):

hive> CREATE EXTERNAL TABLE MYTEST(num INT, name STRING)

> ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

> ;

Load the data:

hive> load data local inpath '/tmp/hive.txt' overwrite into table MYTEST;

Copying data from file:/tmp/hive.txt

Copying file: file:/tmp/hive.txt

Loading data to table default.mytest

Deleted hdfs://localhost:9000/user/hive/warehouse/mytest

OK

Time taken: 0.402 seconds

Query the data:

hive> SELECT * FROM MYTEST;

OK

NULL NULL

22 world

33 hive

Time taken: 0.089 seconds

hive>

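Finally, look at the /tmp/hive.txt file:

sina@ubuntu:~/hive/conf$ cat /tmp/hive.txt

11,hello

22 world

33 hive

sina@ubuntu:~/hive/conf$

The first line separates its fields with a comma instead of a tab, so Hive cannot split it. The whole string 11,hello is read as num, which is not a valid INT and becomes NULL. name gets no value, so it is NULL as well. The other two lines are tab-separated and load correctly.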
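The NULL NULL row can be reproduced with a simplified Python model of how a delimited text table with num INT, name STRING reads one line. The model splits the line on the field delimiter. Any field that is missing or is not a valid integer becomes NULL (None here). parse_row is a hypothetical helper, and Python's int() is more lenient than Hive (it accepts surrounding spaces, for example). Treat this as an illustration, not an exact reimplementation of Hive's SerDe.

```python
def parse_row(line, delimiter="\t"):
    """Simplified model of reading one line into MYTEST(num INT, name STRING)."""
    fields = line.rstrip("\n").split(delimiter)
    try:
        num = int(fields[0])
    except ValueError:
        num = None  # not a valid INT -> NULL
    name = fields[1] if len(fields) > 1 else None  # missing field -> NULL
    return num, name


if __name__ == "__main__":
    for line in ["11,hello", "22\tworld", "33\thive"]:
        print(parse_row(line))
    # (None, None)
    # (22, 'world')
    # (33, 'hive')
```

Passing delimiter="," instead would parse the first line correctly and break the other two, which is why every line of the file has to use the same delimiter as the table definition.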