Setting up a PySpark development environment in PyCharm on Windows

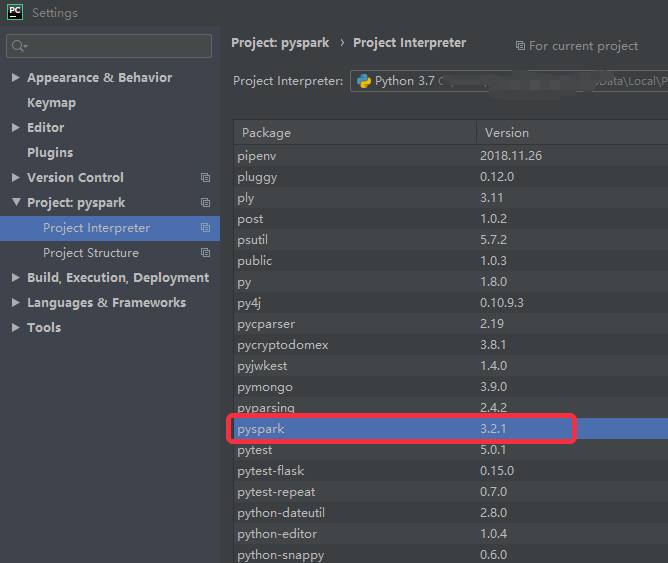

1. Install pyspark

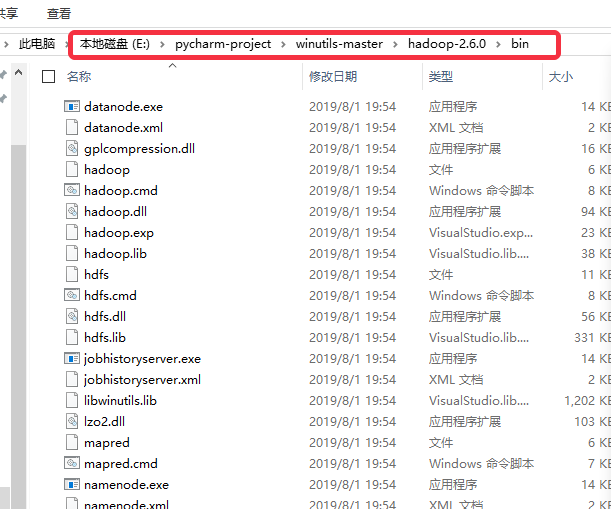
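
To confirm that the package landed in the interpreter PyCharm is actually using, a quick check along these lines can be run in the project's Python console. This is only a minimal sketch: the helper name `is_installed` is hypothetical and it relies on nothing beyond the standard library.

```python
import importlib.util


def is_installed(package_name):
    """Return True if the current interpreter can locate the named top-level package."""
    return importlib.util.find_spec(package_name) is not None


if __name__ == "__main__":
    # False here usually means pyspark was installed into a different interpreter
    print("pyspark installed:", is_installed("pyspark"))
```

If this prints `False`, it is worth checking which interpreter is selected for the project before reinstalling.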

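2. Download the winutils build that matches the Hadoop version you want, to fix the "missing WINUTILS.EXE" problem

Download: https://github.com/steveloughran/winutils

For example, this is where I saved it locally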
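
Since the fix depends on `winutils.exe` sitting in the `bin` subfolder of the Hadoop folder, a small check like the one below may help catch a wrong path early. It is a sketch under that assumption; the function name `find_winutils` is hypothetical and not part of any library.

```python
import os


def find_winutils(hadoop_home):
    """Return the path to bin/winutils.exe under hadoop_home, or None if it is missing."""
    candidate = os.path.join(hadoop_home, "bin", "winutils.exe")
    return candidate if os.path.isfile(candidate) else None


if __name__ == "__main__":
    # Folder taken from the example in this post; replace it with your own location
    print(find_winutils("E:\\pycharm-project\\winutils-master\\hadoop-2.6.0"))
```

A result of `None` suggests the folder passed in is one level too high or too low, or that a different Hadoop version folder was downloaded.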

3. Set the environment variables inside the PySpark program

    import os
    import sys

    from pyspark import SparkContext

    # Set environment variables before creating the SparkContext
    if sys.platform == "linux":
        os.environ["PYSPARK_PYTHON"] = "/home/xxxxx/anaconda3/bin/python3.7"
        os.environ["HADOOP_HOME"] = "/usr/bin/hadoop"
    else:
        # Windows: HADOOP_HOME is the folder whose bin\ subfolder holds winutils.exe
        os.environ["PYSPARK_PYTHON"] = "C:\\Users\\xxxxxx\\AppData\\Local\\Programs\\Python\\Python37\\python.exe"
        os.environ["HADOOP_HOME"] = "E:\\pycharm-project\\winutils-master\\hadoop-2.6.0"

    logFile = "test.txt"  # input text file, relative to the working directory

    sc = SparkContext("local", "my first app")
    sc.setLogLevel("ERROR")

    logData = sc.textFile(logFile).cache()

    # Count the lines that contain each substring
    numAs = logData.filter(lambda s: 'projtrtr' in s).count()
    numBs = logData.filter(lambda s: 'd' in s).count()

    temp = logData.first()
    print(temp)
    print("Lines with projtrtr: %i, lines with d: %i" % (numAs, numBs))

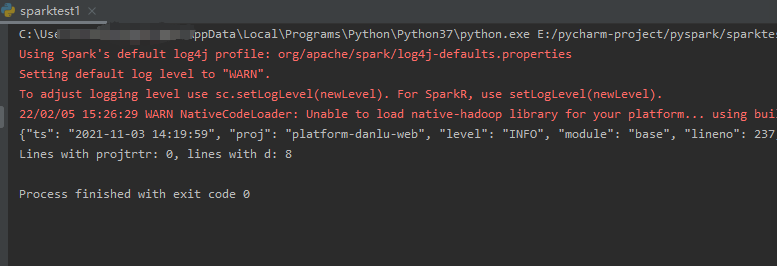
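
As an alternative to hard-coding the interpreter path, `PYSPARK_PYTHON` could default to whichever interpreter is running the script, which is normally the one selected in PyCharm. The sketch below is not from the original setup; the helper name `default_pyspark_python` and its `env` parameter are hypothetical and exist only for illustration.

```python
import os
import sys


def default_pyspark_python(env=None):
    """Set PYSPARK_PYTHON to the running interpreter unless it is already set."""
    env = os.environ if env is None else env
    # setdefault keeps any value the user has already configured
    env.setdefault("PYSPARK_PYTHON", sys.executable)
    return env["PYSPARK_PYTHON"]


if __name__ == "__main__":
    print(default_pyspark_python())
```

Calling it before creating the `SparkContext` would replace the two `PYSPARK_PYTHON` assignments, while `HADOOP_HOME` still has to be set explicitly.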

4. At this point, the setup for writing PySpark programs in PyCharm is complete

Output of the demo above:

Reference: https://cwiki.apache.org/confluence/display/HADOOP2/WindowsProblems
