Detailed single-person human skeleton extraction with MediaPipe

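MediaPipe is an open-source framework from Google Research for building applications around multimedia machine learning models. Within Google, a number of major products, including Google Lens, ARCore, and Google Home, have integrated MediaPipe deeply. This post covers human skeleton extraction with MediaPipe.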

1. Installing MediaPipe

Install it with pip:

pip install mediapipe
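To confirm that the install worked, a quick check along these lines can help. This is a minimal sketch using only the standard library. The helper name `is_installed` is made up for illustration, and it assumes that the mediapipe pip package brings in OpenCV (module `cv2`) as a dependency, which is worth verifying in your own environment.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if the module can be located, without importing it."""
    return importlib.util.find_spec(module_name) is not None


# The demo in this post imports both of these modules.
for name in ("mediapipe", "cv2"):
    status = "found" if is_installed(name) else "missing"
    print(f"{name}: {status}")
```

If `cv2` is reported missing, installing `opencv-python` separately should resolve it.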

Official site: https://developers.google.cn/mediapipe

If you need MediaPipe features other than human skeleton extraction, the demos on the official site are a good starting point.

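2. Writing the demo

The code below is a lightly modified version of the official demo. It extracts the skeleton from a video file; the input can be switched to a webcam or a still photo as needed.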
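Switching the input mostly comes down to what is passed to `cv2.VideoCapture`: an integer device index opens a camera, while a string opens a file. The sketch below shows one way to make that choice from a single string. `parse_source` is a hypothetical helper, not part of OpenCV or MediaPipe.

```python
from typing import Union


def parse_source(source: str) -> Union[int, str]:
    """Map a user-supplied string to an argument for cv2.VideoCapture.

    A string of decimal digits such as "0" is treated as a camera index;
    anything else is treated as a path to a video file.
    """
    text = source.strip()
    return int(text) if text.isdecimal() else text


print(parse_source("0"))            # camera index
print(parse_source("1_demo2.mp4"))  # video file path
```

In the demo that follows, `cap = cv2.VideoCapture(parse_source("0"))` would read from the default camera. For a single photo, the official demo instead loads the file with `cv2.imread` and creates `Holistic` with `static_image_mode=True`, so that each image is processed independently rather than tracked across frames.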

    import time

    import cv2
    import mediapipe as mp

    mp_drawing = mp.solutions.drawing_utils
    mp_drawing_styles = mp.solutions.drawing_styles
    mp_holistic = mp.solutions.holistic

    cap = cv2.VideoCapture('1_demo2.mp4')  # replace with the path to your video

    # FPS is re-estimated about once per second from the frames seen in that interval.
    fps_start_time = time.time()
    frame_count = 0
    fps = 0

    with mp_holistic.Holistic(
            min_detection_confidence=0.5,
            min_tracking_confidence=0.5) as holistic:
        while cap.isOpened():
            success, image = cap.read()
            if not success:
                print("Video has ended.")
                break

            # Mark the frame read-only while it is processed, and convert
            # from BGR (OpenCV) to RGB (MediaPipe).
            image.flags.writeable = False
            image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
            results = holistic.process(image)

            # Convert back to BGR for drawing and display.
            image.flags.writeable = True
            image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

            # Face contours
            mp_drawing.draw_landmarks(
                image,
                results.face_landmarks,
                mp_holistic.FACEMESH_CONTOURS,
                landmark_drawing_spec=None,
                connection_drawing_spec=mp_drawing_styles
                .get_default_face_mesh_contours_style())

            # Body pose
            mp_drawing.draw_landmarks(
                image,
                results.pose_landmarks,
                mp_holistic.POSE_CONNECTIONS,
                landmark_drawing_spec=mp_drawing_styles
                .get_default_pose_landmarks_style())

            # Left and right hands
            mp_drawing.draw_landmarks(image, results.left_hand_landmarks, mp_holistic.HAND_CONNECTIONS)
            mp_drawing.draw_landmarks(image, results.right_hand_landmarks, mp_holistic.HAND_CONNECTIONS)

            # Update the FPS estimate: frames counted divided by elapsed time.
            frame_count += 1
            time_diff = time.time() - fps_start_time
            if time_diff >= 1:
                fps = int(frame_count / time_diff)
                frame_count = 0
                fps_start_time = time.time()

            # Mirror the frame first, then draw the text so it stays readable.
            # Drop the flip if a mirrored view is not wanted for recorded video.
            image = cv2.flip(image, 1)
            cv2.putText(image, f"FPS: {fps}", (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2)
            cv2.imshow('MediaPipe Holistic', image)

            if cv2.waitKey(5) & 0xFF == 27:  # Esc quits
                break

    cap.release()
    cv2.destroyAllWindows()
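A frame-rate readout like the one in the demo is usually computed by counting frames over a window and dividing by the elapsed time. The sketch below isolates that logic in a small class so it can be checked without a video. `FpsCounter` and its one-second window are illustrative choices, not part of MediaPipe or OpenCV.

```python
import time
from typing import Optional


class FpsCounter:
    """Estimate frames per second over consecutive fixed-length windows."""

    def __init__(self, window_seconds: float = 1.0, start: Optional[float] = None):
        self.window_seconds = window_seconds
        self.window_start = time.monotonic() if start is None else start
        self.frames = 0
        self.fps = 0.0

    def tick(self, now: Optional[float] = None) -> float:
        """Register one processed frame and return the latest estimate."""
        now = time.monotonic() if now is None else now
        self.frames += 1
        elapsed = now - self.window_start
        if elapsed >= self.window_seconds:
            # Close the window: frames seen divided by the time they took.
            self.fps = self.frames / elapsed
            self.frames = 0
            self.window_start = now
        return self.fps


# Simulate 30 frames arriving evenly over one second.
counter = FpsCounter(start=0.0)
for i in range(1, 31):
    fps = counter.tick(now=i / 30)
print(round(fps))  # prints 30
```

In a capture loop, `counter.tick()` would be called once per frame with no arguments, and its return value passed to `cv2.putText`.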

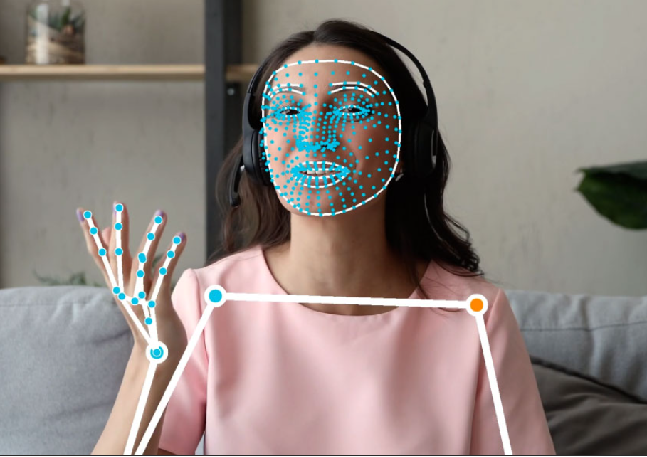

运行结果如下:
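Beyond the drawn overlay, the detected points themselves can be read from the result object. In MediaPipe's Python solutions, each entry of `results.pose_landmarks.landmark` carries `x` and `y` normalized to the image width and height, plus a `visibility` score. The sketch below converts such landmarks to pixel coordinates. The function `to_pixels`, the 0.5 threshold, and the stand-in `Landmark` tuple are assumptions for illustration, chosen so the example runs without a video.

```python
from typing import Iterable, List, NamedTuple, Optional, Tuple


class Landmark(NamedTuple):
    """Stand-in with the three fields read below."""
    x: float
    y: float
    visibility: float


def to_pixels(landmarks: Iterable, width: int, height: int,
              min_visibility: float = 0.5) -> List[Optional[Tuple[int, int]]]:
    """Convert normalized landmarks to (column, row) pixel positions.

    Points below the visibility threshold or outside the frame become None,
    so positions in the returned list still match the landmark indices.
    """
    points: List[Optional[Tuple[int, int]]] = []
    for lm in landmarks:
        inside = 0.0 <= lm.x <= 1.0 and 0.0 <= lm.y <= 1.0
        if lm.visibility < min_visibility or not inside:
            points.append(None)
            continue
        # Clamp so that x == 1.0 or y == 1.0 still maps to a valid pixel.
        col = min(int(lm.x * width), width - 1)
        row = min(int(lm.y * height), height - 1)
        points.append((col, row))
    return points


sample = [Landmark(0.5, 0.25, 0.9), Landmark(0.1, 0.1, 0.2), Landmark(1.2, 0.5, 0.9)]
print(to_pixels(sample, width=640, height=480))  # [(320, 120), None, None]
```

With real results, the call would look like `to_pixels(results.pose_landmarks.landmark, image.shape[1], image.shape[0])`, guarded by a check that `results.pose_landmarks` is not `None`, since no landmarks are returned when nobody is detected.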

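3. Summary

Among the several human skeleton extraction approaches compared, this one is the most accurate and captures the most detail (face, body pose, and both hands). Its limitation is that it handles only one person per frame, so it is less broadly applicable than multi-person approaches.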

