Downloading files in chunks with requests

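The idea is to split a download or upload task (a single file or an archive) into several parts and transfer each part in its own thread. If the network fails, the transfer can resume with the parts that are not finished yet instead of starting over from the beginning. This saves time, and because the parts are transferred in parallel it can also speed up the transfer.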

I. Splitting the video

1. Size of each chunk:

```python
size = 1024 * 100  # 100 KB per chunk
```

2. Get the size of the video:

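When a request is made with stream=True, requests does not download the body right away; only the response headers are fetched. Downloading the body is deferred until you access Response.content (or iterate over it with iter_content).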

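```python
import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:67.0) Gecko/20100101 Firefox/67.0'
}
# url: address of the video to download
resp = requests.get(url, headers=headers, stream=True)  # only the headers are read here
content_length = resp.headers['content-length']          # file size in bytes, as a string
resp.close()  # the body is never read, so release the connection
```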
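The code above assumes the server always sends Content-Length and supports Range. The helper below is a minimal sketch that checks both before splitting the file. The name `inspect_headers` is hypothetical. It accepts any header mapping, so `resp.headers` from requests would work. Only an explicit `Accept-Ranges: bytes` is treated as support. A missing header is inconclusive, because some servers honour Range without advertising it.

```python
from typing import Mapping, Optional, Tuple


def inspect_headers(headers: Mapping[str, str]) -> Tuple[Optional[int], bool]:
    """Return (content_length, advertises_byte_ranges) from response headers."""
    # HTTP header names are case-insensitive, so normalise them first.
    lowered = {k.lower(): v for k, v in headers.items()}
    raw_length = (lowered.get('content-length') or '').strip()
    # Content-Length can be absent, e.g. with chunked transfer encoding.
    length = int(raw_length) if raw_length.isdigit() else None
    # 'Accept-Ranges: bytes' advertises Range support; 'none' or no header does not.
    ranges = lowered.get('accept-ranges', '').strip().lower() == 'bytes'
    return length, ranges
```

If `length` is None, the file cannot be split by size, and a single plain download is the safer fallback.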

3. Split the video:

Set the Range field in the request headers.

Number of chunks:

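```python
# Any remainder goes into the last chunk; max(1, ...) keeps at least one chunk
# when the file is smaller than `size` (plain // would give 0 and download nothing).
count = max(1, int(content_length) // size)
```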

Set the Range header:

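Range tells the server which part of the file the client wants to download. The format is 'Range': 'bytes=start-end', where start is the first byte and end is the last byte to send. Both are zero-based and inclusive, so bytes=0-99 asks for 100 bytes. A server that honours the header replies with 206 Partial Content.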

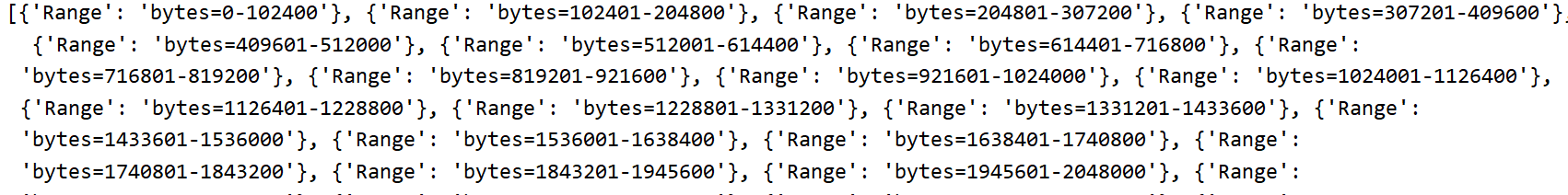
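To make the inclusive, zero-based semantics concrete, here is a minimal sketch of how a server interprets a single `bytes=` range against a file of known size. It covers the closed form, the open-ended form `bytes=S-`, and the suffix form `bytes=-N`. The function `resolve_range` is hypothetical and handles only one range, not comma-separated lists. It also shows why an end value past the file (such as `end = content_length`) still works: the server clamps it to the last byte.

```python
from typing import Tuple


def resolve_range(spec: str, total: int) -> Tuple[int, int]:
    """Resolve one 'bytes=...' Range value against a file of `total` bytes.

    Returns the inclusive, zero-based (start, end) a server would send back.
    """
    unit, _, rng = spec.partition('=')
    if unit.strip() != 'bytes' or ',' in rng:
        raise ValueError('only a single bytes range is handled in this sketch')
    first, _, last = rng.strip().partition('-')
    if first == '':
        # Suffix form 'bytes=-N': the last N bytes of the file.
        n = int(last)
        if n == 0:
            raise ValueError('range not satisfiable')
        return max(total - n, 0), total - 1
    start = int(first)
    # Open-ended 'bytes=S-' runs to the end; an end past the file is clamped.
    end = total - 1 if last == '' else min(int(last), total - 1)
    if start > end:
        raise ValueError('range not satisfiable')
    return start, end
```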

Code:

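```python
range_list = []
for i in range(count):
    start = i * size  # start position
    if i == count - 1:
        end = int(content_length) - 1  # last chunk: up to the final byte of the file
    else:
        end = start + size  # end position (inclusive)
    if i > 0:
        start += 1  # byte `start` already belongs to the previous chunk
    headers_range = {'Range': f'bytes={start}-{end}'}
    range_list.append(headers_range)
```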
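The loop above makes the first chunk one byte larger than the others and puts the remainder into the last chunk. A common alternative, sketched below under the same inclusive-range semantics, uses ceiling division so that every chunk except possibly the last is exactly `size` bytes. The name `split_ranges` is hypothetical.

```python
from typing import List, Tuple


def split_ranges(total: int, size: int) -> List[Tuple[int, int]]:
    """Split `total` bytes into inclusive (start, end) ranges of at most `size` bytes."""
    if total <= 0 or size <= 0:
        raise ValueError('total and size must be positive')
    count = -(-total // size)  # ceiling division: a short last chunk holds the remainder
    return [(i * size, min((i + 1) * size, total) - 1) for i in range(count)]


# Build the same kind of header dicts as range_list above.
range_headers = [{'Range': f'bytes={s}-{e}'} for s, e in split_ranges(141062, 1024 * 100)]
```

Each chunk's byte count is simply `end - start + 1`, which is handy later for checking whether a saved chunk is complete.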

II. Requesting the video

1. Set the request headers

```python
for i, headers_range in enumerate(range_list):
    headers_range.update(headers)  # add the User-Agent to this chunk's Range header
    resp = requests.get(url, headers=headers_range)
```
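A server that ignores Range answers with 200 and the whole file, and saving that as one chunk silently corrupts the merged video. Below is a minimal sketch of a check that could run right after each request. The name `check_partial` is hypothetical. With requests, it would be called as `check_partial(resp.status_code, resp.headers.get('Content-Range'), start, end)`, assuming the loop keeps `start` and `end` for each chunk. A requested end past the file is accepted because servers clamp it to the last byte.

```python
import re
from typing import Optional

_CONTENT_RANGE = re.compile(r'bytes (\d+)-(\d+)/(\d+|\*)')


def check_partial(status_code: int, content_range: Optional[str], start: int, end: int) -> None:
    """Raise ValueError unless the response is the requested slice start..end."""
    if status_code != 206:
        # 200 means the server ignored Range and is sending the whole file.
        raise ValueError(f'expected 206 Partial Content, got {status_code}')
    m = _CONTENT_RANGE.fullmatch((content_range or '').strip())
    if m is None:
        raise ValueError(f'unexpected Content-Range: {content_range!r}')
    got_start, got_end, total = m.groups()
    # A requested end beyond the file is clamped by the server to total - 1.
    expected_end = end if total == '*' else min(end, int(total) - 1)
    if (int(got_start), int(got_end)) != (start, expected_end):
        raise ValueError(f'asked for {start}-{end}, got {got_start}-{got_end}')
```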

2. Save the video

```python
# Inside the loop above: save chunk i to a file named after its index.
with open(f'{i}', 'wb') as f:
    f.write(resp.content)
```
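Saving each chunk to its own file is what makes the later merge step necessary. A different technique, not the one this article uses, is to create the output file at its full size once and write each chunk at its byte offset. The sketch below shows that idea. The helper names are hypothetical. One trade-off is that the file's size no longer tells you which chunks are finished, so resuming would need a separate record of completed ranges.

```python
import os


def preallocate(path: str, total: int) -> None:
    """Create (or reset) the output file at its final size."""
    with open(path, 'wb') as f:
        f.truncate(total)  # extends the file; new bytes are zero-filled on most systems


def write_chunk_at(path: str, start: int, data: bytes) -> None:
    """Write one downloaded chunk at its byte offset in the pre-sized file."""
    # 'r+b' opens the existing file for writing without truncating it.
    with open(path, 'r+b') as f:
        f.seek(start)
        f.write(data)
```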

III. Resuming interrupted downloads

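Make sure the folder that holds the chunks contains no other files, because the merge step counts every file in it as a chunk.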

1. Get the names of the files in the folder where the chunks are saved:

```python
import os

f_list = os.listdir(path)  # path: folder where the chunks are saved
```

2. Before requesting a chunk, check whether it is already in the folder, and download it only if it is not:

```python
if f'{i}' not in f_list:
    pass  # download chunk i here
```
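Checking only whether a file exists has a weak spot. If the program is killed while a chunk is being written, a truncated file with the right name is left behind and is skipped on the next run. Below is a minimal sketch of two guards. It treats a chunk as done only when its size equals `end - start + 1` for its Range, and it writes through a temporary name that is renamed at the end. The function names and the `.part` suffix are hypothetical. A merge step that counts every file in the folder would also need to skip leftover `.part` files.

```python
import os


def chunk_is_complete(path: str, expected_size: int) -> bool:
    """A chunk counts as done only if it exists and has the expected byte count."""
    return os.path.isfile(path) and os.path.getsize(path) == expected_size


def save_chunk_atomically(path: str, data: bytes) -> None:
    """Write to a temporary name, then rename, so an interrupted write never
    leaves a truncated file under the final chunk name."""
    tmp = path + '.part'
    with open(tmp, 'wb') as f:
        f.write(data)
    os.replace(tmp, path)  # on POSIX, atomic when both names are on the same filesystem
```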

IV. Merging the video

Go through the folder where the chunks are saved and write them, in order, into a single file.

```python
import os


def file_merge(path, path_name):
    """
    :param path: folder where the chunks are saved
    :param path_name: output path, including file name and extension
    """
    ts_list = os.listdir(path)
    with open(path_name, 'wb') as fw:
        for i in range(len(ts_list)):
            # path of chunk i
            path_name_i = os.path.join(path, f'{i}')
            with open(path_name_i, 'rb') as fr:
                buff = fr.read()
                fw.write(buff)
            # delete the chunk once it has been merged
            os.remove(path_name_i)
    print('Merge complete:', path)
```
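`file_merge` relies on the folder holding nothing but files named 0, 1, 2, and so on, and it reads each chunk fully into memory. Below is a minimal sketch of a more defensive variant under the same naming scheme. It skips any name that is not purely numeric (so an output file in the same folder is ignored), fails on a gap in the numbering, and streams each chunk with `shutil.copyfileobj`. The name `merge_chunks` is hypothetical. Unlike the original, it does not delete the chunks, so a failed merge can be retried.

```python
import os
import shutil


def merge_chunks(chunk_dir: str, output_path: str) -> int:
    """Concatenate chunks named 0, 1, 2, ... in numeric order; return bytes written."""
    # Only purely numeric names are chunks; anything else (e.g. merge.mp4) is skipped.
    names = sorted((n for n in os.listdir(chunk_dir) if n.isdecimal()), key=int)
    # Fail early on a gap in the numbering instead of producing a broken file.
    if names != [str(i) for i in range(len(names))]:
        raise FileNotFoundError(f'missing chunk(s) in {chunk_dir}')
    written = 0
    with open(output_path, 'wb') as out:
        for name in names:
            chunk_path = os.path.join(chunk_dir, name)
            with open(chunk_path, 'rb') as src:
                shutil.copyfileobj(src, out)  # copies in blocks, not the whole chunk at once
            written += os.path.getsize(chunk_path)
    return written
```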

V. Complete code:

1. requests version, multiprocessing, no progress bar

```python
import os
import time
from multiprocessing.pool import Pool

import requests


def get_range(url):
    """Compute the Range headers used to split the file."""
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36'
    }
    size = 1024 * 1000  # download the file in chunks of 1000 KB
    res = requests.get(url, headers=headers, stream=True)
    content_length = int(res.headers['Content-Length'])  # e.g. 141062
    res.close()  # only the headers were needed
    count = max(1, content_length // size)  # at least one chunk; the remainder goes into the last
    headers_list = []
    for i in range(count):
        start = i * size
        if i == count - 1:
            end = content_length - 1  # last byte of the file
        else:
            end = start + size
        if i > 0:
            start += 1  # byte `start` already belongs to the previous chunk
        rang = {'Range': f'bytes={start}-{end}'}
        rang.update(headers)
        headers_list.append(rang)
    return headers_list


def down_file(url, headers, i, path):
    """
    :param url: video URL
    :param headers: request headers, including Range
    :param i: file name for this chunk
    :param path: folder to save into
    """
    content = requests.get(url, headers=headers).content
    with open(f'{path}/{i}', 'wb') as f:
        f.write(content)


def file_merge(path, path_name):
    """
    :param path: folder where the chunks are saved
    :param path_name: output path, including file name and extension
    """
    ts_list = os.listdir(path)
    with open(path_name, mode='wb') as fw:  # 'wb' so a rerun does not append a second copy
        for i in range(len(ts_list)):
            path_name_i = os.path.join(path, f'{i}')  # path of chunk i
            with open(path_name_i, mode='rb') as fr:
                fw.write(fr.read())
            os.remove(path_name_i)  # delete the chunk once it has been merged
    print('Merge complete:', path)


if __name__ == '__main__':
    start_time = time.time()
    url = 'https://pic.ibaotu.com/00/51/34/88a888piCbRB.mp4'
    header_list = get_range(url)
    path = './test'
    if not os.path.exists(path):
        os.mkdir(path)
    pool = Pool(8)  # process pool
    for i, headers in enumerate(header_list):
        ts_list = os.listdir(path)
        if f'{i}' not in ts_list:  # skip chunks that are already downloaded
            pool.apply_async(down_file, args=(url, headers, i, path))
    pool.close()
    pool.join()
    end_time = time.time()
    print(f'Download finished in {end_time - start_time:.2f} s')
    file_merge('./test', './test/merge.mp4')
```
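The introduction speaks of one thread per part, while this version uses a process pool. Downloading is I/O-bound, so threads are usually enough and avoid process start-up and pickling. `Pool.apply_async` also drops a worker's exception unless `.get()` is called on its result. Below is a minimal sketch of the thread-based alternative with `concurrent.futures`, where `pool.map` returns results in input order and re-raises worker errors. The callable `fetch(start, end)` is a hypothetical stand-in for one ranged request. In the test it simply slices local bytes, so no network is needed.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def download_parallel(fetch: Callable[[int, int], bytes],
                      ranges: List[Tuple[int, int]],
                      workers: int = 8) -> bytes:
    """Fetch every inclusive (start, end) range on a thread pool and join them in order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order even if requests finish out of order,
        # and re-raises any exception a worker hit.
        parts = list(pool.map(lambda r: fetch(*r), ranges))
    return b''.join(parts)

# A real fetch (hypothetical, needs requests and network) might look like:
# def fetch(start, end):
#     r = requests.get(url, headers={**headers, 'Range': f'bytes={start}-{end}'}, timeout=30)
#     r.raise_for_status()
#     return r.content
```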

2. asyncio version, asynchronous, with a progress bar

```python
import asyncio
import os
import time

from aiohttp import ClientSession
from tqdm import tqdm

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                  '(KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36'
}
size = 1024 * 1000  # length of each chunk in bytes


def get_range(content_length):
    """
    :param content_length: video length in bytes
    :return: list of Range request headers
    """
    content_length = int(content_length)
    count = max(1, content_length // size)  # number of chunks
    range_list = []
    for i in range(count):
        start = i * size
        if i == count - 1:
            end = content_length - 1
        else:
            end = start + size
        if i > 0:
            start += 1
        range_list.append({'Range': f'bytes={start}-{end}'})
    return range_list


async def async_main(video_url, section_path):
    """
    Split the video (build the Range headers) and download every chunk.
    :param video_url: video URL
    :param section_path: folder to save into
    """
    async with ClientSession() as session:
        async with session.get(video_url, headers=headers) as resp:
            content_length = int(resp.headers['Content-Length'])  # video length
        range_list = get_range(content_length)
        sem = asyncio.Semaphore(80)  # limit the number of concurrent requests
        if not os.path.exists(section_path):
            os.mkdir(section_path)
        # progress bar
        with tqdm(total=content_length, unit='B', ascii=True, unit_scale=True) as bar:
            down_list = os.listdir(section_path)
            tasks = []
            for i, headers_range in enumerate(range_list):
                # listdir returns bare names, so compare against f'{i}', not the full path
                if f'{i}' not in down_list:
                    headers_range.update(headers)
                    tasks.append(down_f(session, video_url, headers_range, i, section_path, sem, bar))
                else:
                    bar.update(os.path.getsize(f'{section_path}/{i}'))
            await asyncio.gather(*tasks)


async def down_f(session, video_url, headers_range, i, section_path, sem, bar):
    """Download one chunk."""
    async with sem:  # limit the number of concurrent requests
        async with session.get(video_url, headers=headers_range) as resp:
            chunks = b''
            async for chunk in resp.content.iter_chunked(1024):
                chunks += chunk
        with open(f'{section_path}/{i}', 'wb') as f:
            f.write(chunks)
        bar.update(len(chunks))  # update the progress bar by the bytes actually received


def main(video_url, section_path):
    asyncio.run(async_main(video_url, section_path))


def file_merge(path, path_name):
    """
    :param path: folder where the chunks are saved
    :param path_name: output path, including file name and extension
    """
    ts_list = os.listdir(path)
    with open(path_name, mode='wb') as fw:
        for i in range(len(ts_list)):
            path_name_i = os.path.join(path, f'{i}')  # path of chunk i
            with open(path_name_i, mode='rb') as fr:
                fw.write(fr.read())
            os.remove(path_name_i)  # delete the chunk once it has been merged
    print('Merge complete:', path)


if __name__ == '__main__':
    start_time = time.time()
    url = 'https://pic.ibaotu.com/00/51/34/88a888piCbRB.mp4'
    path = './test2'
    main(url, path)
    end_time = time.time()
    print(f'Download finished in {end_time - start_time:.2f} s')
    # file_merge('./test2', './test2/merge.mp4')
```
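The key mechanism in the asyncio version is `asyncio.Semaphore`: every chunk coroutine is created up front, but only `limit` of them can be inside `async with sem` at once. Below is a minimal, network-free sketch that checks this. `asyncio.sleep` stands in for the HTTP request and the payload is sliced from local bytes. The function `fetch_all` is hypothetical. `asyncio.run` is the current way to start the event loop, replacing `get_event_loop()` plus `run_until_complete()`.

```python
import asyncio
from typing import List, Tuple


async def fetch_all(ranges: List[Tuple[int, int]], data: bytes, limit: int) -> Tuple[bytes, int]:
    """Simulate ranged downloads under a concurrency cap; return (payload, peak concurrency)."""
    sem = asyncio.Semaphore(limit)
    active = 0
    peak = 0

    async def fetch(start: int, end: int) -> bytes:
        nonlocal active, peak
        async with sem:  # at most `limit` fetches run at the same time
            active += 1
            peak = max(peak, active)
            await asyncio.sleep(0.01)  # stands in for network I/O
            active -= 1
            return data[start:end + 1]

    # gather() returns results in the order the coroutines were passed in.
    parts = await asyncio.gather(*(fetch(s, e) for s, e in ranges))
    return b''.join(parts), peak
```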

3. Download a file and show a progress bar

```python
#!/usr/bin/python3
# -*- coding: utf-8 -*-
import os
from urllib.request import urlopen

import requests
from tqdm import tqdm


def download_from_url(url, dst):
    """
    @param: url to download file
    @param: dst place to put the file
    :return: bool
    """
    # Get the size of the remote file
    try:
        with urlopen(url) as r:
            file_size = int(r.info().get('Content-Length', -1))
    except Exception as e:
        print(e)
        print('Error: request to url %s failed' % url)
        return False
    if file_size < 0:
        print('Server did not report Content-Length; cannot resume')
        return False
    # If the local file exists, resume from its current size; otherwise start at 0
    first_byte = os.path.getsize(dst) if os.path.exists(dst) else 0
    # Local size already matches: the file is complete
    if first_byte >= file_size:
        print('File already exists, no download needed')
        return True
    header = {'Range': 'bytes=%s-%s' % (first_byte, file_size - 1)}
    req = requests.get(url, headers=header, stream=True)
    if req.status_code not in (200, 206):
        print('Unexpected status code:', req.status_code)
        return False
    # 206: the server honoured Range, so append; 200: it sent the whole file, so start over
    mode = 'ab' if req.status_code == 206 else 'wb'
    if mode == 'wb':
        first_byte = 0
    with tqdm(total=file_size, initial=first_byte, unit='B', unit_scale=True,
              desc=url.split('/')[-1]) as pbar:
        try:
            with open(dst, mode) as f:
                for chunk in req.iter_content(chunk_size=1024):
                    if chunk:
                        f.write(chunk)
                        pbar.update(len(chunk))  # the final chunk can be shorter than 1024
        except Exception as e:
            print(e)
            return False
    return True


if __name__ == '__main__':
    url = 'https://dl.360safe.com/360/inst.exe'
    download_from_url(url, 'inst.exe')
```
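The single-file resume logic above comes down to two decisions: which Range to ask for, given how many bytes are already on disk, and whether the reply can be appended. Below is a minimal sketch of those decisions as pure functions. The names are hypothetical. It uses the open-ended form `bytes=N-`, which asks for everything from byte N to the end and avoids computing the last byte index.

```python
from typing import Optional


def resume_header(local_size: int, remote_size: int) -> Optional[dict]:
    """Return the Range header needed to resume, or None if the file is already complete."""
    if remote_size <= 0:
        raise ValueError('remote size unknown; cannot resume safely')
    if local_size >= remote_size:
        return None
    return {'Range': f'bytes={local_size}-'}  # open-ended: from local_size to the end


def should_append(status_code: int, local_size: int) -> bool:
    """Append only if the server honoured Range (206). A 200 carries the full body,
    so existing local bytes must be overwritten rather than appended to."""
    return local_size == 0 or status_code == 206
```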

Original article: https://blog.csdn.net/m0_46652894/article/details/106155852
