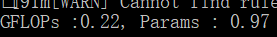

Computing a model's Params and GFLOPs

```python
# models/nin.py: NIN-style model (imported by the profiling script as `from models import nin`)
import torch
import torch.nn as nn
import torch.nn.functional as F


class FP_Conv2d(nn.Module):
    """Full-precision conv block: [ReLU] -> [Dropout] -> Conv2d -> BatchNorm2d -> ReLU."""

    def __init__(self, input_channels, output_channels, kernel_size=-1, stride=-1, padding=-1,
                 dropout=0, groups=1, channel_shuffle=0, shuffle_groups=1, last=0, first=0):
        super(FP_Conv2d, self).__init__()
        self.dropout_ratio = dropout
        self.last = last
        self.first_flag = first
        if dropout != 0:
            self.dropout = nn.Dropout(dropout)
        self.conv = nn.Conv2d(input_channels, output_channels, kernel_size=kernel_size,
                              stride=stride, padding=padding, groups=groups)
        self.bn = nn.BatchNorm2d(output_channels)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        if self.first_flag:  # the first block applies ReLU to its input (the stem's BN output)
            x = self.relu(x)
        if self.dropout_ratio != 0:
            x = self.dropout(x)
        x = self.conv(x)
        x = self.bn(x)
        x = self.relu(x)
        return x


class Net(nn.Module):
    def __init__(self, cfg=None):
        super(Net, self).__init__()
        if cfg is None:
            # output channels of each conv layer
            cfg = [192, 160, 96, 192, 192, 192, 192, 192]
        self.tnn_bin = nn.Sequential(
            # zero padding by default; 3 = input channels (RGB), cfg[0] = output channels
            nn.Conv2d(3, cfg[0], kernel_size=5, stride=1, padding=2),
            nn.BatchNorm2d(cfg[0]),
            FP_Conv2d(cfg[0], cfg[1], kernel_size=1, stride=1, padding=0, first=1),
            FP_Conv2d(cfg[1], cfg[2], kernel_size=1, stride=1, padding=0),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1),  # 32x32 -> 16x16 for a 32x32 input

            FP_Conv2d(cfg[2], cfg[3], kernel_size=5, stride=1, padding=2),
            FP_Conv2d(cfg[3], cfg[4], kernel_size=1, stride=1, padding=0),
            FP_Conv2d(cfg[4], cfg[5], kernel_size=1, stride=1, padding=0),
            nn.AvgPool2d(kernel_size=3, stride=2, padding=1),  # 16x16 -> 8x8

            FP_Conv2d(cfg[5], cfg[6], kernel_size=3, stride=1, padding=1),
            FP_Conv2d(cfg[6], cfg[7], kernel_size=1, stride=1, padding=0),
            nn.Conv2d(cfg[7], 10, kernel_size=1, stride=1, padding=0),
            nn.BatchNorm2d(10),
            nn.ReLU(inplace=True),
            nn.AvgPool2d(kernel_size=8, stride=1, padding=0),  # global average pooling: 8x8 -> 1x1
        )

    def forward(self, x):
        x = self.tnn_bin(x)
        # x = self.dorefa(x)
        x = x.view(x.size(0), 10)  # (N, 10, 1, 1) -> (N, 10) class scores
        return x
```
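To see where the Params number comes from, the parameter count can also be worked out by hand. The following is a minimal pure-Python sketch (no PyTorch needed) that mirrors the default `Net` layer by layer; `conv_params`, `bn_params` and `nin_params` are hypothetical helper names introduced here. It assumes every `nn.Conv2d` keeps its default bias and that `BatchNorm2d` contributes only its learnable weight and bias (its running statistics are buffers, not parameters).

```python
# Hypothetical helper: parameters of nn.Conv2d(c_in, c_out, k, groups=groups, bias=bias)
def conv_params(c_in, c_out, k, groups=1, bias=True):
    # weight tensor: c_out x (c_in / groups) x k x k, plus one bias per output channel
    return c_out * (c_in // groups) * k * k + (c_out if bias else 0)


# Hypothetical helper: learnable parameters of nn.BatchNorm2d(c)
def bn_params(c):
    # per-channel weight (gamma) and bias (beta); running_mean/running_var are buffers
    return 2 * c


# Hypothetical helper mirroring Net.tnn_bin; pooling and ReLU layers have no parameters
def nin_params(cfg=(192, 160, 96, 192, 192, 192, 192, 192), num_classes=10):
    # (c_in, c_out, kernel_size) for each conv, in order
    convs = [
        (3, cfg[0], 5),
        (cfg[0], cfg[1], 1),
        (cfg[1], cfg[2], 1),
        (cfg[2], cfg[3], 5),
        (cfg[3], cfg[4], 1),
        (cfg[4], cfg[5], 1),
        (cfg[5], cfg[6], 3),
        (cfg[6], cfg[7], 1),
        (cfg[7], num_classes, 1),
    ]
    # every conv in Net is followed by a BatchNorm2d over its output channels
    return sum(conv_params(ci, co, k) + bn_params(co) for ci, co, k in convs)


total = nin_params()
print(f"Params: {total} ({total / 1e6:.2f} M)")  # prints: Params: 969822 (0.97 M)
```

For `nin.Net()` with the default `cfg`, this should equal `sum(p.numel() for p in model.parameters())`. The 5x5 conv in the second stage alone accounts for roughly half of the total.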

This requires thop: `pip install thop`

If that fails, install it directly from GitHub: `pip install --upgrade git+https://github.com/Lyken17/pytorch-OpCounter.git`
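Whichever install route is used, a quick way to confirm it worked is to check that Python can locate the `thop` module. This is a minimal sketch using only the standard library; `thop_available` is a hypothetical helper name.

```python
import importlib.util


def thop_available():
    # True if the `thop` package (pytorch-OpCounter) is importable in this environment
    return importlib.util.find_spec("thop") is not None


print("thop installed:", thop_available())
```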

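```python
# PyTorch: compute a model's Params and GFLOPs with thop
import torch
from thop import profile
from torchvision.models import resnet18  # unused here; e.g. profile resnet18() instead of NIN

from models import nin

model = nin.Net()
# checkpoint = torch.load('models_save/nin.pth', map_location='cpu')
checkpoint = torch.load('models_save/nin_preprune.pth', map_location='cpu')
model.load_state_dict(checkpoint['state_dict'])
model.eval()  # avoid updating BatchNorm running statistics during the profiling pass

# Dummy input of shape (batch_size, channels, height, width), batch_size=1
dummy_input = torch.randn(1, 3, 32, 32)

# thop counts multiply-accumulate operations (MACs); many papers report this number
# directly as "FLOPs", others use FLOPs ~= 2 * MACs
macs, params = profile(model, inputs=(dummy_input,))
print("GMACs: {:.2f}, GFLOPs (2 * MACs): {:.2f}, Params: {:.2f} M".format(
    macs / 1e9, 2 * macs / 1e9, params / 1e6))
```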
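thop's `profile` counts multiply-accumulate operations (MACs) rather than FLOPs, and papers differ on whether they report that number directly as "FLOPs" or use FLOPs ≈ 2 × MACs. As a rough cross-check, here is a minimal sketch that counts only the convolution MACs of the default `Net` for a 1×3×32×32 input; `conv_macs` and `nin_conv_macs` are hypothetical helpers. It ignores BatchNorm, ReLU, pooling and bias additions, some of which thop also counts (depending on its version), so thop's total will likely come out somewhat higher.

```python
# Hypothetical helper: multiply-accumulates of one Conv2d layer (bias add ignored)
def conv_macs(c_in, c_out, k, h_out, w_out, groups=1):
    # each output element needs (c_in / groups) * k * k multiply-adds
    return c_out * h_out * w_out * (c_in // groups) * k * k


# Hypothetical helper mirroring Net.tnn_bin for a 32x32 input
def nin_conv_macs(cfg=(192, 160, 96, 192, 192, 192, 192, 192), num_classes=10):
    # (c_in, c_out, kernel_size, output side length); the convs preserve spatial size,
    # while each pooling layer (k=3, s=2, p=1) halves it: 32 -> 16 -> 8
    convs = [
        (3, cfg[0], 5, 32), (cfg[0], cfg[1], 1, 32), (cfg[1], cfg[2], 1, 32),
        (cfg[2], cfg[3], 5, 16), (cfg[3], cfg[4], 1, 16), (cfg[4], cfg[5], 1, 16),
        (cfg[5], cfg[6], 3, 8), (cfg[6], cfg[7], 1, 8), (cfg[7], num_classes, 1, 8),
    ]
    return sum(conv_macs(ci, co, k, s, s) for ci, co, k, s in convs)


macs = nin_conv_macs()
flops = 2 * macs  # one multiply-add = 2 floating-point operations
print(f"Conv MACs: {macs / 1e9:.3f} G, approx. FLOPs: {flops / 1e9:.3f} G")
# prints: Conv MACs: 0.222 G, approx. FLOPs: 0.445 G
```

The 5x5 conv at 16x16 resolution dominates here, contributing about 0.118 G of the 0.222 G conv MACs.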

浙公网安备 33010602011771号
