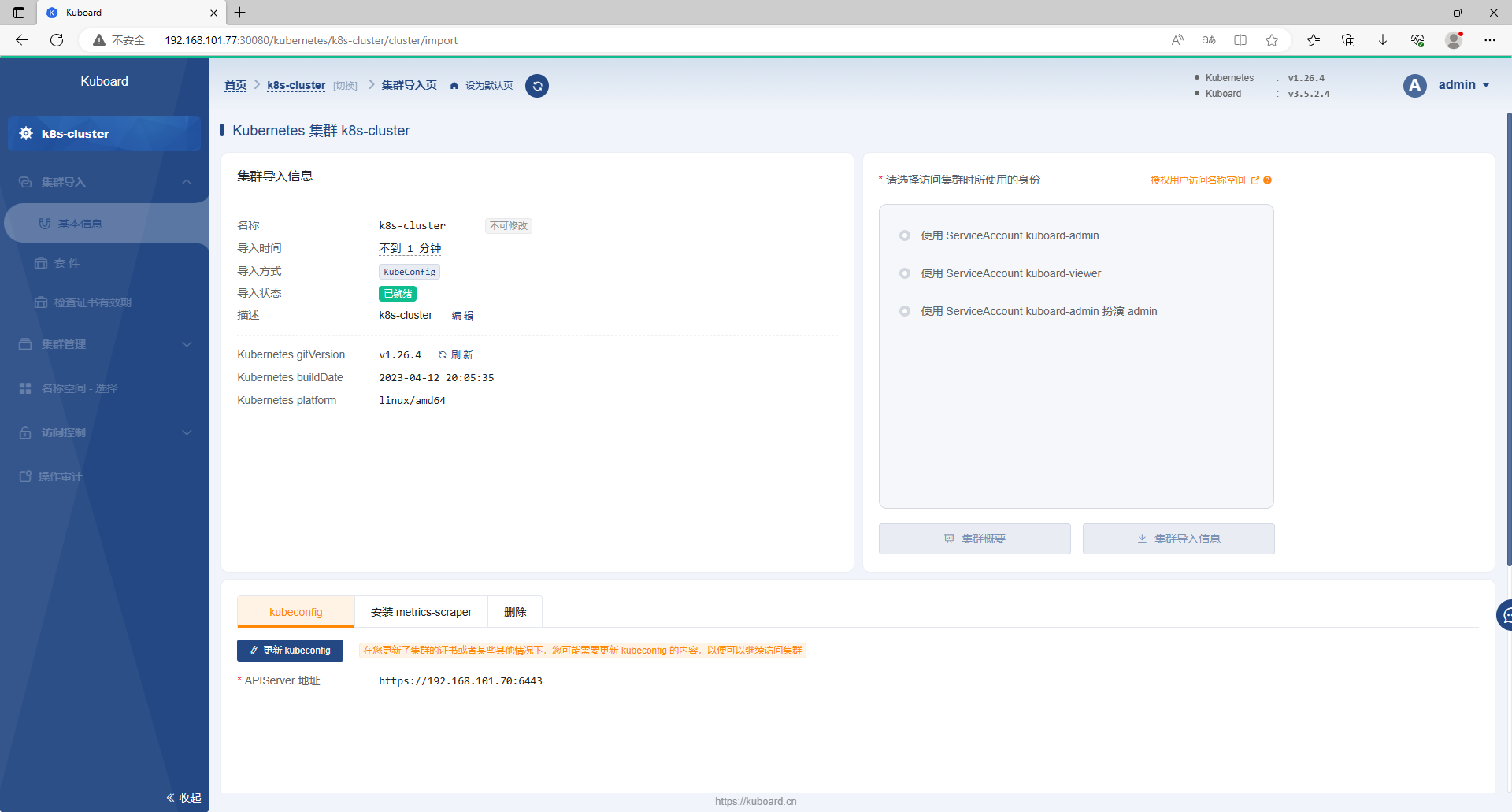

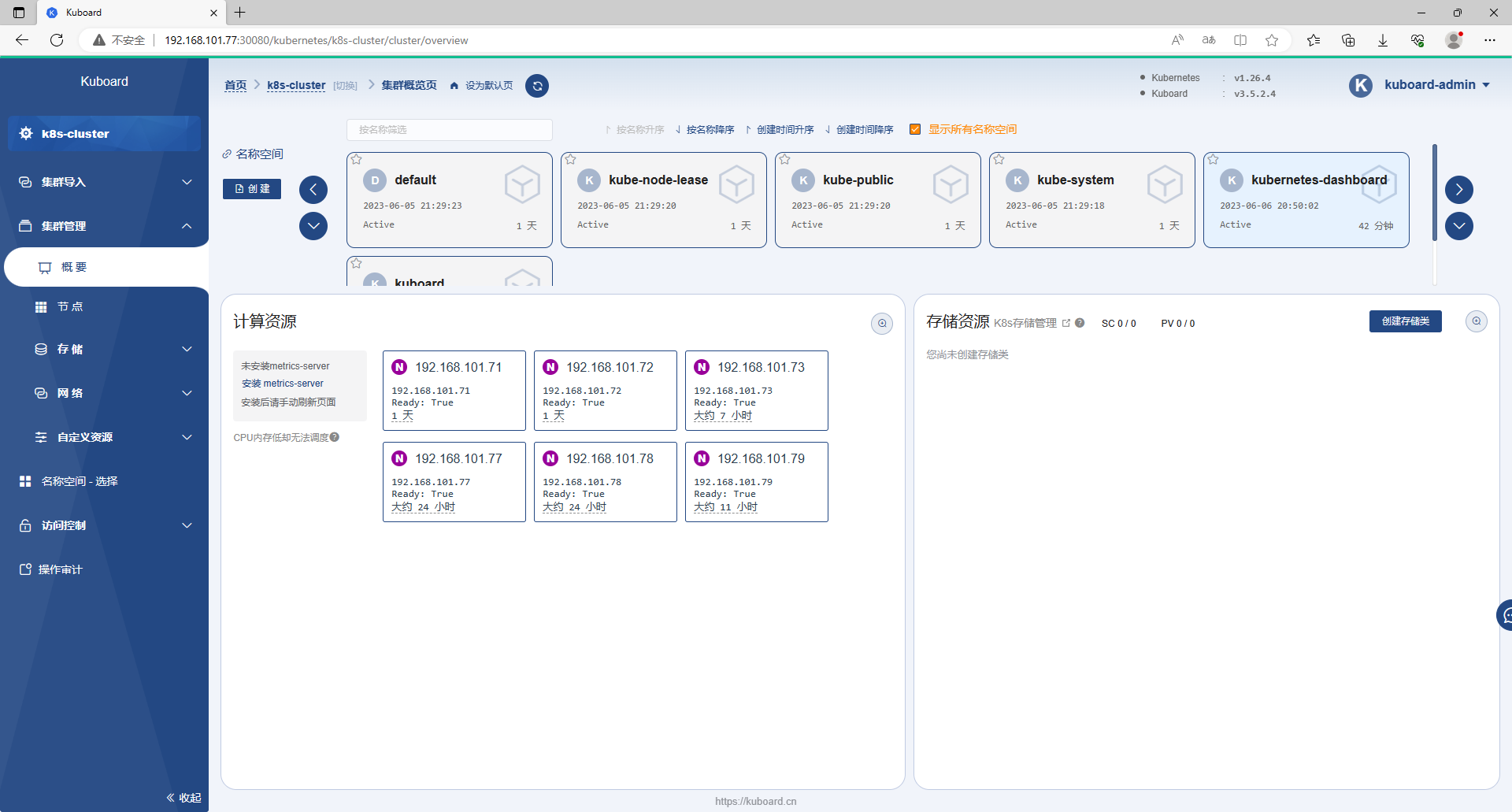

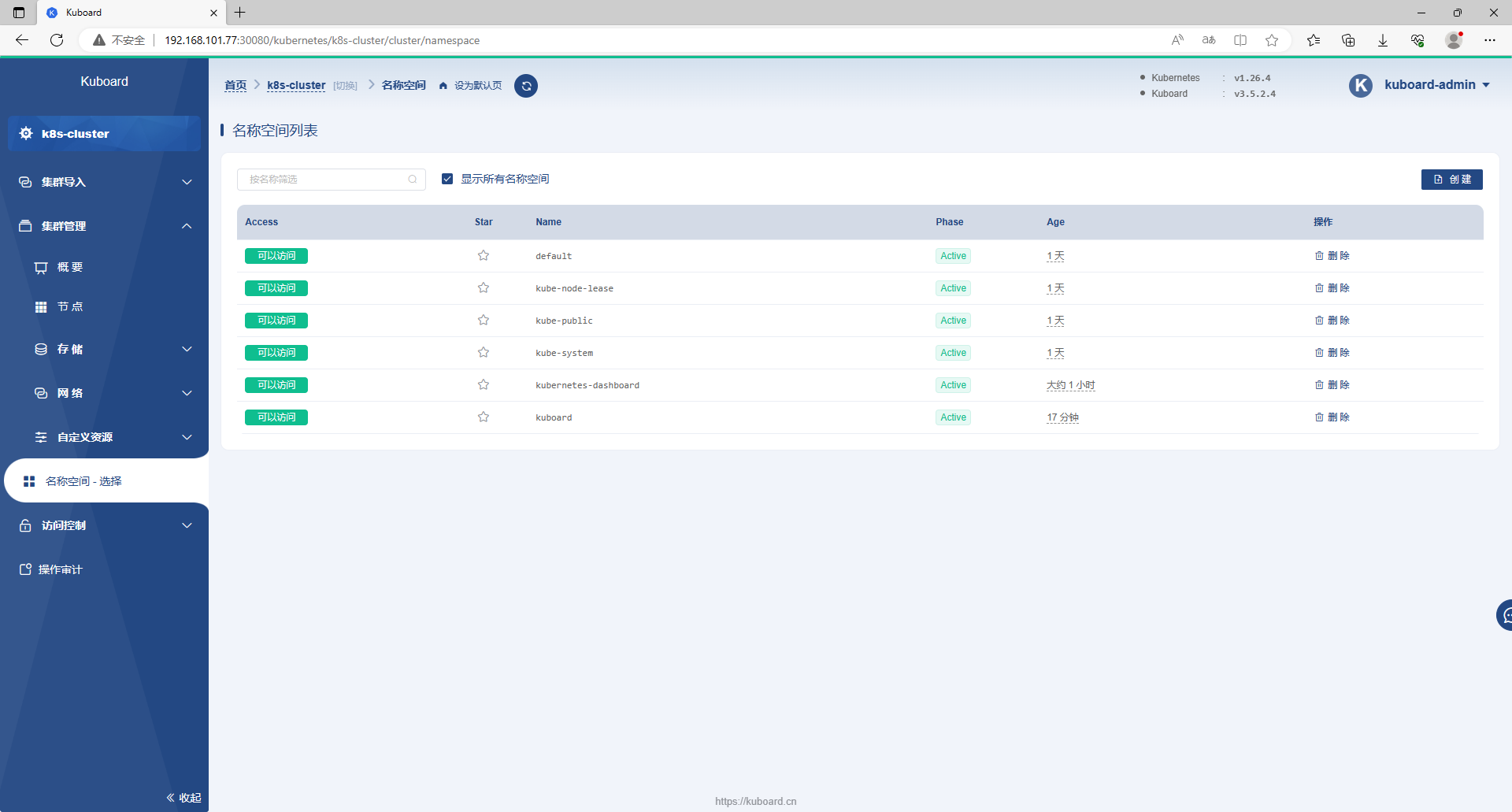

Deploying a highly available k8s cluster on Ubuntu 20.04 LTS with kubeasz

k8s cluster environment setup

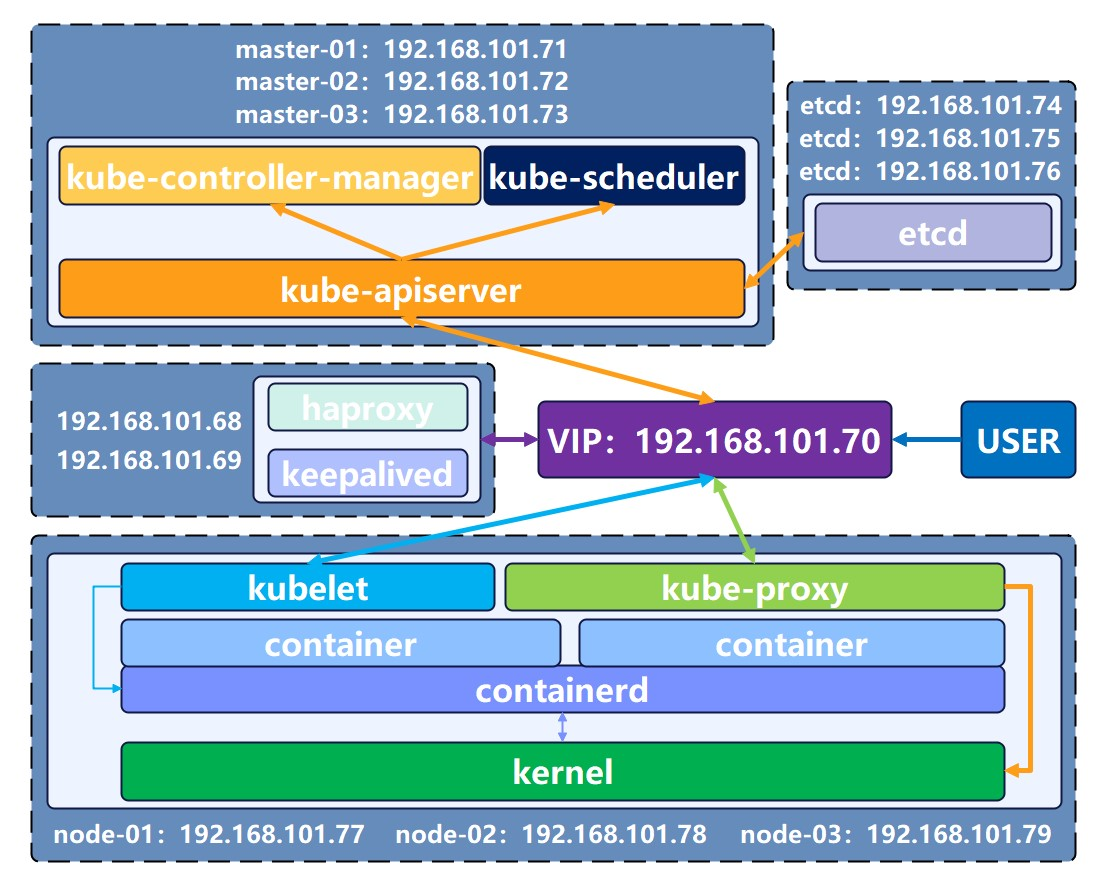

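A k8s cluster environment mainly consists of a highly available deployment of the Kubernetes control-plane services (kube-apiserver, kube-controller-manager, kube-scheduler), plus the client services (kubelet, kube-proxy) deployed on the worker nodes.

Kubernetes design architecture: https://www.kubernetes.org.cn/kubernetes设计架构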

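1、k8s high-availability cluster planning

1.1、Server resources

If this is a company-hosted IDC environment, deploy harbor and the worker nodes directly on physical machines where possible; the master nodes, etcd, and the load balancers can be virtual machines.

This installation uses the following servers: 1 harbor server (a highly available setup can use 2 with harbor image replication), 2 haproxy+keepalived servers, 3 master nodes (at least 2 for high availability), 3 worker nodes (2 to N for high availability), and 3 etcd servers (at least 3 for high availability, so that a leader can still be elected when one member fails).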

| Role | Server IP | Hostname | VIP address | Notes |
|---|---|---|---|---|
| harbor | 192.168.101.67 | harbor-67 | / | Runs ansible and kubeasz to deploy k8s |
| haproxy-01 | 192.168.101.68 | k8s-haproxy-01-68 | 192.168.101.70 | Proxies the master API |
| haproxy-02 | 192.168.101.69 | k8s-haproxy-02-69 | 192.168.101.70 | Proxies the master API |
| k8s-master-01 | 192.168.101.71 | k8s-master-01-71 | / | / |
| k8s-master-02 | 192.168.101.72 | k8s-master-02-72 | / | / |
| k8s-master-03 | 192.168.101.73 | k8s-master-03-73 | / | Node added last |
| k8s-etcd-01 | 192.168.101.74 | k8s-etcd-01-74 | / | / |
| k8s-etcd-02 | 192.168.101.75 | k8s-etcd-02-75 | / | / |
| k8s-etcd-03 | 192.168.101.76 | k8s-etcd-03-76 | / | / |
| k8s-node-01 | 192.168.101.77 | k8s-node-01-77 | / | / |
| k8s-node-02 | 192.168.101.78 | k8s-node-02-78 | / | / |
| k8s-node-03 | 192.168.101.79 | k8s-node-03-79 | / | Node added last |
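The etcd sizing in this plan follows from majority-quorum arithmetic: a cluster of n members needs n/2+1 (integer division) members to agree, so it tolerates (n-1)/2 failures. The following is a minimal sketch of that arithmetic; the function names `etcd_quorum` and `etcd_fault_tolerance` are made up for illustration and are not part of etcd or kubeasz.

```shell
#!/bin/bash
# Majority quorum for a cluster of N members (integer division).
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that may fail while a quorum still exists.
etcd_fault_tolerance() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 4 5; do
  echo "members=${n} quorum=$(etcd_quorum "${n}") tolerated_failures=$(etcd_fault_tolerance "${n}")"
done
```

Two members tolerate no failure at all, and four tolerate no more than three do, which is why odd sizes such as 3 or 5 are the usual choice.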

1.2、Software list

| Name | Version | Notes |
|---|---|---|
| Ubuntu | 20.04.6 LTS | Download: https://mirrors.aliyun.com/ubuntu-releases/focal/ubuntu-20.04.6-live-server-amd64.iso |
| kubeasz | 3.6.1 | Download: https://github.com/easzlab/kubeasz/releases/download/3.6.1/ezdown |
| calico | v3.26.0 | / |
| harbor | v2.8.1 | Download: https://github.com/goharbor/harbor/releases/download/v2.8.1/harbor-offline-installer-v2.8.1.tgz |
| containerd | v1.6.20 | Download: https://github.com/containerd/containerd/releases/download/v1.6.20/cri-containerd-cni-1.6.20-linux-amd64.tar.gz |
| nerdctl | v1.3.1 | Download: https://github.com/containerd/nerdctl/releases/download/v1.3.1/nerdctl-1.3.1-linux-amd64.tar.gz |

2、Base environment preparation

2.1、System configuration

Install ansible on server 192.168.101.67 and use it for the initial configuration.

2.1.1、Install ansible

root@localhost:~# apt update

root@localhost:~# apt install -y ansible

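2.1.2、Configure the ansible hosts file

#Uncomment host_key_checking and set it to False, so that connecting to a new host that is not yet in the known_hosts file does not hang.

root@localhost:~# sed -i "s/#host_key_checking.*/host_key_checking = False/g" /etc/ansible/ansible.cfg

#Configure the host addresses and hostnames to connect to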

root@localhost:~# vim /etc/ansible/hosts

[harbor]

192.168.101.67 hostname=harbor

[haproxy+keepalived]

192.168.101.68 hostname=haproxy-01

192.168.101.69 hostname=haproxy-02

[k8s-master]

192.168.101.71 hostname=k8s-master-01

192.168.101.72 hostname=k8s-master-02

192.168.101.73 hostname=k8s-master-03

[k8s-etcd]

192.168.101.74 hostname=k8s-etcd-01

192.168.101.75 hostname=k8s-etcd-02

192.168.101.76 hostname=k8s-etcd-03

[k8s-node]

192.168.101.77 hostname=k8s-node-01

192.168.101.78 hostname=k8s-node-02

192.168.101.79 hostname=k8s-node-03
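Before a playbook consumes this inventory, it can help to list what it actually defines. The sketch below parses an INI-style inventory with awk and prints one `ip hostname group` line per host; the function name `inventory_hosts` is hypothetical, and it only understands the simple `ip hostname=...` layout used here.

```shell
#!/bin/bash
# Print "ip hostname group" for every host line of a simple INI-style inventory.
inventory_hosts() {
  awk '
    /^[ \t]*(#|$)/ { next }                                   # skip comments and blank lines
    /^\[.*\]$/ { group = substr($0, 2, length($0) - 2); next } # remember the current [group]
    {
      name = "-"                                               # placeholder when hostname= is missing
      for (i = 2; i <= NF; i++)
        if ($i ~ /^hostname=/) name = substr($i, 10)
      print $1, name, group
    }
  ' "$1"
}

# Example (not executed here): inventory_hosts /etc/ansible/hosts
```

Piping the result through `awk '{print $2}' | sort | uniq -d` is one way to spot a hostname that was assigned twice.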

2.1.3、Configure SSH key-based login

#Install sshpass

root@localhost:~# apt install -y sshpass

#Generate the public/private key pair

root@localhost:~# ssh-keygen -t rsa-sha2-512 -b 4096

Generating public/private rsa-sha2-512 key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa

Your public key has been saved in /root/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:/gyJwZJgJHmFt9cf++c7ndzUmtLd+afE5zOl6JIQPCA root@k8s-harbor-02-67

The key's randomart image is:

+---[RSA 4096]----+

|...o. |

|.oo E . |

| .o. o + |

| . ..o. = . |

| o.o S+ o .|

| . +..o . +|

| . +. o o+BB|

| +o +.BO*|

| oo.+++B|

+----[SHA256]-----+

#Write a script that sets up passwordless login in bulk and creates the python3 symlink

root@localhost:~# vim ssh-copy-id.sh

#!/bin/bash
#Target host list
IP="
192.168.101.67
192.168.101.68
192.168.101.69
192.168.101.71
192.168.101.72
192.168.101.73
192.168.101.74
192.168.101.75
192.168.101.76
192.168.101.77
192.168.101.78
192.168.101.79
"
REMOTE_PORT="22"
REMOTE_USER="root"
REMOTE_PASS="123456"
for REMOTE_HOST in ${IP}; do
  #Add the remote host's public host key to known_hosts
  ssh-keyscan -p "${REMOTE_PORT}" "${REMOTE_HOST}" >> ~/.ssh/known_hosts
  #Install the public key with sshpass, then create the python3 symlink
  sshpass -p "${REMOTE_PASS}" ssh-copy-id -p "${REMOTE_PORT}" "${REMOTE_USER}@${REMOTE_HOST}"
  ssh -p "${REMOTE_PORT}" "${REMOTE_USER}@${REMOTE_HOST}" ln -sv /usr/bin/python3 /usr/bin/python
  echo "${REMOTE_HOST} key-based login configured!"
done

#Run the script

root@localhost:~# bash ssh-copy-id.sh

# 192.168.101.67:22 SSH-2.0-OpenSSH_8.2p1 Ubuntu-4ubuntu0.7
# 192.168.101.67:22 SSH-2.0-OpenSSH_8.2p1 Ubuntu-4ubuntu0.7
# 192.168.101.67:22 SSH-2.0-OpenSSH_8.2p1 Ubuntu-4ubuntu0.7
# 192.168.101.67:22 SSH-2.0-OpenSSH_8.2p1 Ubuntu-4ubuntu0.7
# 192.168.101.67:22 SSH-2.0-OpenSSH_8.2p1 Ubuntu-4ubuntu0.7
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.101.67'"
and check to make sure that only the key(s) you wanted were added.

'/usr/bin/python' -> '/usr/bin/python3'
192.168.101.67 key-based login configured!

#Output for the remaining hosts omitted…
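Once the keys are distributed, a quick way to confirm that every host really accepts key-based login is to connect with `BatchMode=yes`, which makes ssh fail instead of prompting for a password. This is a minimal sketch; `check_key_login` and the `SSH_BIN` override are names introduced here for illustration (the override only exists so the loop can be dry-run without touching the network).

```shell
#!/bin/bash
# SSH_BIN is a hypothetical override; it defaults to the real ssh client.
SSH_BIN="${SSH_BIN:-ssh}"

# Try a non-interactive login on each host and report the result per host.
# Returns 1 if any host rejected key-based login.
check_key_login() {
  local failed=0 host
  for host in "$@"; do
    if "${SSH_BIN}" -o BatchMode=yes -o ConnectTimeout=5 "root@${host}" true 2>/dev/null; then
      echo "${host} OK"
    else
      echo "${host} FAILED"
      failed=1
    fi
  done
  return "${failed}"
}

# Example (not executed here):
#   check_key_login 192.168.101.67 192.168.101.68 192.168.101.69
```

Any host reported as FAILED would still need its key installed before ansible can manage it.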

2.1.4、Use ansible-playbook to initialize the hostname, kernel parameters, and kernel modules loaded at boot

root@localhost:~# vim init.yaml

- hosts: all
  remote_user: root
  tasks:
    # Set the hostname; the hostname variable is the one defined in /etc/ansible/hosts
    - name: 设置 hostname
      shell: hostnamectl set-hostname {{ hostname | quote }}

    # Write the kernel parameters to /etc/sysctl.conf
    - name: 配置系统内核参数 /etc/sysctl.conf
      shell:
        cmd: |
          cat > /etc/sysctl.conf << EOF
          net.ipv4.ip_forward=1
          vm.max_map_count=262144
          kernel.pid_max=4194303
          fs.file-max=1000000
          net.ipv4.tcp_max_tw_buckets=6000
          net.netfilter.nf_conntrack_max=2097152
          net.bridge.bridge-nf-call-ip6tables=1
          net.bridge.bridge-nf-call-iptables=1
          vm.swappiness=0
          EOF

    # List the kernel modules to load at boot in /etc/modules-load.d/modules.conf
    - name: 配置开机模块加载 /etc/modules-load.d/modules.conf
      shell:
        cmd: |
          cat > /etc/modules-load.d/modules.conf << EOF
          ip_vs
          ip_vs_lc
          ip_vs_lblc
          ip_vs_lblcr
          ip_vs_rr
          ip_vs_wrr
          ip_vs_sh
          ip_vs_dh
          ip_vs_fo
          ip_vs_nq
          ip_vs_sed
          ip_vs_ftp
          ip_tables
          ip_set
          ipt_set
          ipt_rpfilter
          ipt_REJECT
          ipip
          xt_set
          br_netfilter
          nf_conntrack
          overlay
          EOF

    # Write the resource limits to /etc/security/limits.conf
    - name: 配置 /etc/security/limits.conf
      shell:
        cmd: |
          cat > /etc/security/limits.conf << EOF
          * soft core unlimited
          * hard core unlimited
          * soft nproc 1000000
          * hard nproc 1000000
          * soft nofile 1000000
          * hard nofile 1000000
          * soft memlock 32000
          * hard memlock 32000
          * soft msgqueue 8192000
          * hard msgqueue 8192000
          root soft core unlimited
          root hard core unlimited
          root soft nproc 1000000
          root hard nproc 1000000
          root soft nofile 1000000
          root hard nofile 1000000
          root soft memlock 32000
          root hard memlock 32000
          root soft msgqueue 8192000
          root hard msgqueue 8192000
          EOF

#Run ansible-playbook

root@localhost:~# ansible-playbook init.yaml

PLAY [all] *********************************************************************************************************************************************************************************************

TASK [Gathering Facts] *********************************************************************************************************************************************************************************

ok: [192.168.101.71]

ok: [192.168.101.68]

ok: [192.168.101.69]

ok: [192.168.101.72]

ok: [192.168.101.67]

ok: [192.168.101.76]

ok: [192.168.101.73]

ok: [192.168.101.77]

ok: [192.168.101.74]

ok: [192.168.101.75]

ok: [192.168.101.79]

ok: [192.168.101.78]

TASK [设置 hostname] *************************************************************************************************************************************************************************************

changed: [192.168.101.71]

changed: [192.168.101.72]

changed: [192.168.101.69]

changed: [192.168.101.68]

changed: [192.168.101.67]

changed: [192.168.101.73]

changed: [192.168.101.74]

changed: [192.168.101.75]

changed: [192.168.101.76]

changed: [192.168.101.77]

changed: [192.168.101.78]

changed: [192.168.101.79]

TASK [配置系统内核参数 /etc/sysctl.conf] ***********************************************************************************************************************************************************************

changed: [192.168.101.68]

changed: [192.168.101.69]

changed: [192.168.101.67]

changed: [192.168.101.71]

changed: [192.168.101.72]

changed: [192.168.101.73]

changed: [192.168.101.74]

changed: [192.168.101.75]

changed: [192.168.101.76]

changed: [192.168.101.77]

changed: [192.168.101.78]

changed: [192.168.101.79]

TASK [配置开机模块加载 /etc/modules-load.d/modules.conf] *******************************************************************************************************************************************************

changed: [192.168.101.67]

changed: [192.168.101.68]

changed: [192.168.101.69]

changed: [192.168.101.71]

changed: [192.168.101.72]

changed: [192.168.101.73]

changed: [192.168.101.74]

changed: [192.168.101.75]

changed: [192.168.101.76]

changed: [192.168.101.77]

changed: [192.168.101.79]

changed: [192.168.101.78]

TASK [配置 /etc/security/limits.conf] ********************************************************************************************************************************************************************

changed: [192.168.101.68]

changed: [192.168.101.67]

changed: [192.168.101.71]

changed: [192.168.101.69]

changed: [192.168.101.72]

changed: [192.168.101.73]

changed: [192.168.101.74]

changed: [192.168.101.76]

changed: [192.168.101.75]

changed: [192.168.101.77]

changed: [192.168.101.78]

changed: [192.168.101.79]

PLAY RECAP *********************************************************************************************************************************************************************************************

192.168.101.67 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.68 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.69 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.71 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.72 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.73 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.74 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.75 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.76 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.77 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.78 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.79 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

2.1.5、After rebooting the servers, verify the loaded kernel modules and the kernel parameters

root@harbor:~# lsmod | grep -E 'br_netfilter|nf_conntrack'

br_netfilter 28672 0

bridge 176128 1 br_netfilter

nf_conntrack 139264 2 nf_nat,ip_vs

nf_defrag_ipv6 24576 2 nf_conntrack,ip_vs

nf_defrag_ipv4 16384 1 nf_conntrack

libcrc32c 16384 5 nf_conntrack,nf_nat,btrfs,raid456,ip_vs

root@harbor:~# sysctl -a | grep -E 'bridge-nf-call-iptables|nf_conntrack_max'

net.bridge.bridge-nf-call-iptables = 1

net.netfilter.nf_conntrack_max = 2097152

net.nf_conntrack_max = 2097152

root@harbor:~# ulimit -a

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 15243

max locked memory (kbytes, -l) 32000

max memory size (kbytes, -m) unlimited

open files (-n) 1000000

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 8192000

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 1000000

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

root@harbor:~#
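The spot checks above can be turned into a full comparison of the intended settings against the live values. The sketch below compares two files: the expected `key=value` list (for example /etc/sysctl.conf) and a dump of the running values (for example the output of `sysctl -a`, which uses `key = value`). The function names `normalize_sysctl` and `sysctl_mismatches` are hypothetical helpers, not part of any tool used in this article.

```shell
#!/bin/bash
# Normalize "key = value" / "key=value" lines to "key=value",
# dropping comments, surrounding whitespace, and blank lines.
normalize_sysctl() {
  sed -e 's/#.*//' \
      -e 's/[[:space:]]*=[[:space:]]*/=/' \
      -e 's/^[[:space:]]*//' \
      -e 's/[[:space:]]*$//' \
      -e '/^$/d' "$1"
}

# Print every expected setting that is missing or different in the actual dump.
# Returns 1 if at least one mismatch was found.
sysctl_mismatches() {
  local expected_file="$1" actual_file="$2" line status=0
  while IFS= read -r line; do
    [ -z "${line}" ] && continue
    if ! normalize_sysctl "${actual_file}" | grep -Fxq -- "${line}"; then
      echo "MISMATCH ${line}"
      status=1
    fi
  done <<EOF
$(normalize_sysctl "${expected_file}")
EOF
  return "${status}"
}

# Example (not executed here):
#   sysctl -a 2>/dev/null > /tmp/sysctl.actual
#   sysctl_mismatches /etc/sysctl.conf /tmp/sysctl.actual
```

An empty result means every setting written by the playbook is active on that host.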

2.2、Install and configure keepalived

2.2.1、Install keepalived on the haproxy-01 and haproxy-02 servers

apt install -y keepalived

2.2.2、Configure keepalived on haproxy-01

root@haproxy-01:~# vim /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 1

priority 100

advert_int 3

unicast_src_ip 192.168.101.68

unicast_peer {

192.168.101.69

}

authentication {

auth_type PASS

auth_pass youjump123456

}

virtual_ipaddress {

192.168.101.70 dev ens33 label ens33:1

}

}

2.2.3、Configure keepalived on haproxy-02

root@haproxy-02:~# vim /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 1

priority 80

advert_int 3

unicast_src_ip 192.168.101.69

unicast_peer {

192.168.101.68

}

authentication {

auth_type PASS

auth_pass youjump123456

}

virtual_ipaddress {

192.168.101.70 dev ens33 label ens33:1

}

}

2.2.4、Start keepalived on haproxy-01 and enable it at boot

root@haproxy-01:~# systemctl start keepalived && systemctl enable keepalived

Synchronizing state of keepalived.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable keepalived

root@haproxy-01:~#

2.2.5、Start keepalived on haproxy-02 and enable it at boot

root@haproxy-02:~# systemctl start keepalived && systemctl enable keepalived

Synchronizing state of keepalived.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable keepalived

root@haproxy-02:~#

2.2.6、Check the IP addresses on haproxy-01

root@haproxy-01:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:43:6e:5e brd ff:ff:ff:ff:ff:ff

inet 192.168.101.68/24 brd 192.168.101.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.101.70/32 scope global ens33:1

valid_lft forever preferred_lft forever

inet6 240e:399:49a:eee0:20c:29ff:fe43:6e5e/64 scope global dynamic mngtmpaddr noprefixroute

valid_lft 6917sec preferred_lft 3317sec

inet6 fe80::20c:29ff:fe43:6e5e/64 scope link

valid_lft forever preferred_lft forever

3: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

root@haproxy-01:~#

2.2.7、Stop the keepalived service on haproxy-01, then check the IP addresses on haproxy-02

root@haproxy-02:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:16:2b:4b brd ff:ff:ff:ff:ff:ff

inet 192.168.101.69/24 brd 192.168.101.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.101.70/32 scope global ens33:1

valid_lft forever preferred_lft forever

inet6 240e:399:49a:eee0:20c:29ff:fe16:2b4b/64 scope global dynamic mngtmpaddr noprefixroute

valid_lft 6844sec preferred_lft 3244sec

inet6 fe80::20c:29ff:fe16:2b4b/64 scope link

valid_lft forever preferred_lft forever

3: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

root@haproxy-02:~#
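The failover check above (reading `ip a` by eye) can also be scripted. The following sketch reads the one-line-per-address output of `ip -o -4 addr show` on stdin and succeeds only if the given address is assigned; `has_vip` is a hypothetical helper name.

```shell
#!/bin/bash
# has_vip VIP: succeed if VIP appears as an IPv4 address in the
# `ip -o -4 addr show` output supplied on stdin.
has_vip() {
  awk -v vip="$1" '
    $3 == "inet" { split($4, parts, "/"); if (parts[1] == vip) found = 1 }
    END { exit(found ? 0 : 1) }
  '
}

# Example on a live host (not executed here):
#   ip -o -4 addr show | has_vip 192.168.101.70 && echo "this node holds the VIP"
```

Running it on both haproxy servers before and after stopping keepalived on haproxy-01 should show the VIP moving from one to the other.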

2.3、Install and configure haproxy

Run the following steps on both the haproxy-01 and haproxy-02 servers.

2.3.1、Install haproxy

apt install -y haproxy

2.3.2、Edit /etc/haproxy/haproxy.cfg and add the proxy for the k8s API port 6443 and the haproxy web admin page

vim /etc/haproxy/haproxy.cfg

listen admin_stats

stats enable

bind *:8080

mode http

option httplog

log global

maxconn 10

stats refresh 30s

stats uri /admin

stats realm haproxy

stats auth admin:admin123456

stats hide-version

stats admin if TRUE

listen k8s_api_nodes_6443

bind 192.168.101.70:6443

mode tcp

#balance leastconn

server 192.168.101.71 192.168.101.71:6443 check inter 2000 fall 3 rise 5

server 192.168.101.72 192.168.101.72:6443 check inter 2000 fall 3 rise 5

server 192.168.101.73 192.168.101.73:6443 check inter 2000 fall 3 rise 5
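The `check inter 2000 fall 3 rise 5` options on each server line determine how quickly a master is taken out of and put back into rotation: checks run every `inter` milliseconds, a server is marked down after `fall` consecutive failures and up again after `rise` consecutive successes. A rough sketch of the resulting timing follows; `check_window_ms` is a hypothetical helper, and the figures are approximations that ignore check timeouts.

```shell
#!/bin/bash
# Approximate time (ms) for COUNT consecutive checks spaced INTER ms apart.
check_window_ms() {
  local inter_ms="$1" count="$2"
  echo $(( inter_ms * count ))
}

echo "marked DOWN after about $(check_window_ms 2000 3) ms"
echo "marked UP after about $(check_window_ms 2000 5) ms"
```

With the values above, a failed kube-apiserver stops receiving traffic after roughly 6 seconds and a recovered one is trusted again after roughly 10 seconds.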

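2.3.3、Set the sysctl.conf parameter net.ipv4.ip_nonlocal_bind = 1. Otherwise, on the server that does not currently hold the VIP, haproxy cannot bind 192.168.101.70:6443 and fails to start. The message 'option httplog' not usable with proxy 'k8s_api_nodes_6443' (needs 'mode http'). Falling back to 'option tcplog'. that appears in the same log is only a warning about the TCP-mode proxy, not the cause of the failure.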

echo 'net.ipv4.ip_nonlocal_bind = 1' >> /etc/sysctl.conf && sysctl -p

2.3.4、Start haproxy and enable it at boot

systemctl start haproxy && systemctl enable haproxy

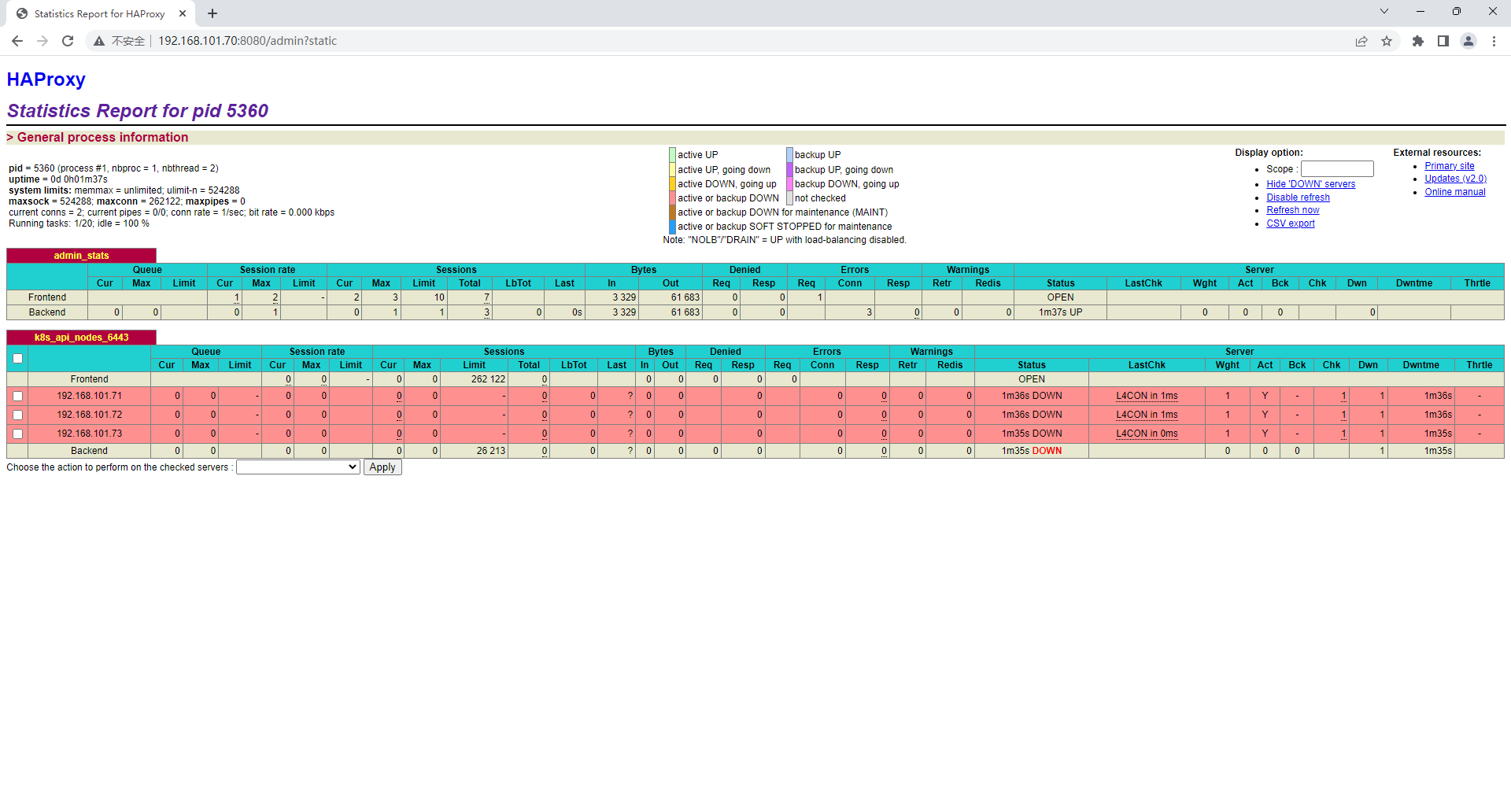

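2.3.5、Test access to the haproxy admin page through the VIP: http://192.168.101.70:8080/admin?static

2.4、Install docker and harbor on the harbor server

2.4.1、Download the docker and docker-compose files and the install script

These are files I prepared myself; you can also download them from the official sites.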

root@harbor:~# git clone https://gitee.com/dyogame/install-docker

Cloning into 'install-docker'...

remote: Enumerating objects: 9, done.

remote: Counting objects: 100% (9/9), done.

remote: Compressing objects: 100% (9/9), done.

remote: Total 9 (delta 1), reused 0 (delta 0), pack-reused 0

Unpacking objects: 100% (9/9), 76.91 MiB | 3.73 MiB/s, done.

root@harbor:~/install-docker# tree

.

├── daemon.json

├── docker-20.10.19.tgz

├── docker-completions

├── docker-compose-completions

├── docker-compose-linux-x86_64

├── docker-install-offline.sh

└── docker.service

0 directories, 7 files

root@harbor:~/install-docker#

2.4.2、Run the script to install docker and docker-compose

#View the install script

root@harbor:~/install-docker# cat docker-install-offline.sh

#!/bin/bash

#输出带颜色的文本

function print(){

#字体颜色 字体背景颜色 30:黑 31:红 32:绿 33:黄 34:蓝色 35:紫色 36:深绿 37:白色

echo -e "\033[1;${1};40m${2}\033[0m"

}

print 34 "解压docker二进制文件压缩包..."

tar xf docker-20.10.19.tgz

print 34 "添加docker二进制文件可执行权限..."

chmod +x docker/*

print 34 "拷贝docker二进制文件到/usr/bin/目录..."

cp docker/* /usr/bin/

print 34 "查看docker版本..."

docker version

print 34 "拷贝daemon.json docker配置文件到/etc/docker/目录..."

mkdir -p /etc/docker/

cp daemon.json /etc/docker/

print 34 "添加docker-compose-linux-x86_64可执行权限..."

chmod +x docker-compose-linux-x86_64

print 34 "拷贝docker-compose-linux-x86_64到/usr/bin/docker-compose..."

cp docker-compose-linux-x86_64 /usr/bin/docker-compose

print 34 "查看docker版本..."

docker-compose version

print 34 "拷贝docker.service到/lib/systemd/system/目录..."

cp docker.service /lib/systemd/system/

systemctl daemon-reload

print 34 "启动docker.service..."

systemctl start docker.service

print 34 "开启docker.service开机启动..."

systemctl enable docker.service

print 34 "添加docker命令补全..."

cp docker-completions /usr/share/bash-completion/completions/docker

source /usr/share/bash-completion/completions/docker

#下载地址:https://github.com/docker/compose/blob/master/contrib/completion/bash/docker-compose?raw=true

print 34 "添加docker-compose命令补全..."

cp docker-compose-completions /usr/share/bash-completion/completions/docker-compose

source /usr/share/bash-completion/completions/docker-compose

#Edit the docker configuration file

root@harbor:~/install-docker# vim daemon.json

{

"registry-mirrors": ["https://docker.mirrors.ustc.edu.cn",

"https://server.ttfddy.com:11443",

"https://hub-mirror.c.163.com",

"https://registry.docker-cn.com"],

"insecure-registries": ["server.ttfddy.com:10080"],

"data-root": "/home/docker",

"log-driver": "json-file",

"log-opts": {"max-size":"500m", "max-file":"3"},

"bip": "192.168.200.1/21"

}
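The `bip` value sets the address of the docker0 bridge and, through its prefix length, the subnet containers are allocated from. To check that this range does not collide with the 192.168.101.x host network, the range can be computed from the CIDR. This is a minimal sketch using shell integer arithmetic; the helper names `ip_to_int`, `int_to_ip`, and `cidr_range` are made up for illustration.

```shell
#!/bin/bash
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Convert an integer back to dotted-quad notation.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# cidr_range IP PREFIX: print the first and last address of the subnet.
cidr_range() {
  local ip_int mask net bcast
  ip_int=$(ip_to_int "$1")
  mask=$(( (0xFFFFFFFF << (32 - $2)) & 0xFFFFFFFF ))
  net=$(( ip_int & mask ))
  bcast=$(( net | (~mask & 0xFFFFFFFF) ))
  echo "$(int_to_ip "${net}") $(int_to_ip "${bcast}")"
}

cidr_range 192.168.200.1 21
```

For 192.168.200.1/21 the subnet spans 192.168.200.0 through 192.168.207.255, which stays clear of the 192.168.101.0/24 addresses used by the servers.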

root@harbor:~/install-docker# bash docker-install-offline.sh

解压docker二进制文件压缩包...

添加docker二进制文件可执行权限...

拷贝docker二进制文件到/usr/bin/目录...

查看docker版本...

Client:

Version: 20.10.19

API version: 1.41

Go version: go1.18.7

Git commit: d85ef84

Built: Thu Oct 13 16:43:07 2022

OS/Arch: linux/amd64

Context: default

Experimental: true

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

拷贝daemon.json docker配置文件到/etc/docker/目录...

添加docker-compose-linux-x86_64可执行权限...

拷贝docker-compose-linux-x86_64到/usr/bin/docker-compose...

查看docker版本...

Docker Compose version v2.16.0

拷贝docker.service到/lib/systemd/system/目录...

启动docker.service...

开启docker.service开机启动...

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /lib/systemd/system/docker.service.

添加docker命令补全...

添加docker-compose命令补全...

root@harbor:~/install-docker#

2.4.3、Download and extract the harbor offline installer, then change the default configuration

root@harbor:/home# wget https://github.com/goharbor/harbor/releases/download/v2.8.1/harbor-offline-installer-v2.8.1.tgz

root@harbor:/home# tar xf harbor-offline-installer-v2.8.1.tgz

root@harbor:/home# cd harbor

#Copy the template to create the harbor configuration file

root@harbor:/home/harbor# cp harbor.yml.tmpl harbor.yml

#Edit harbor.yml

root@harbor:/home/harbor# vim harbor.yml

hostname: server.ttfddy.com #change to your own domain name or IP address
http:
  port: 10080 #HTTP listening port
https:
  port: 11443 #HTTPS listening port
  certificate: /home/harbor/certs/10063909_server.ttfddy.com.pem #certificate
  private_key: /home/harbor/certs/10063909_server.ttfddy.com.key #certificate private key
harbor_admin_password: Mimahenfuza! #admin login password
database:
  password: Mimahenfuza! #database password
  max_idle_conns: 100
  max_open_conns: 900
  conn_max_lifetime: 5m
  conn_max_idle_time: 0
data_volume: /home/harbor/data #harbor data directory

2.4.4、Run the installation

#Upload the certificate files to the server first, then run the install script

root@harbor:/home/harbor# ./install.sh --with-trivy --with-notary

[Step 0]: checking if docker is installed ...

Note: docker version: 20.10.19

[Step 1]: checking docker-compose is installed ...

Note: docker-compose version: 2.16.0

[Step 2]: loading Harbor images ...

abbd0b6fec72: Loading layer [==================================================>] 38.42MB/38.42MB

3d77f5033ecf: Loading layer [==================================================>] 5.771MB/5.771MB

4792c676ca3f: Loading layer [==================================================>] 4.096kB/4.096kB

4697aa95a6d3: Loading layer [==================================================>] 3.072kB/3.072kB

5e5b05ba0ba9: Loading layer [==================================================>] 17.57MB/17.57MB

34050640e209: Loading layer [==================================================>] 18.36MB/18.36MB

Loaded image: goharbor/registry-photon:v2.8.1

833ca653dc2d: Loading layer [==================================================>] 5.766MB/5.766MB

8146a802a6a3: Loading layer [==================================================>] 9.138MB/9.138MB

06f77b4e0e3b: Loading layer [==================================================>] 15.88MB/15.88MB

5c300800e0ff: Loading layer [==================================================>] 29.29MB/29.29MB

0a781e308e52: Loading layer [==================================================>] 22.02kB/22.02kB

bbca9c1f60e2: Loading layer [==================================================>] 15.88MB/15.88MB

Loaded image: goharbor/notary-server-photon:v2.8.1

e0cd0f3e8809: Loading layer [==================================================>] 8.914MB/8.914MB

d9af03cee462: Loading layer [==================================================>] 3.584kB/3.584kB

c0d671cb9454: Loading layer [==================================================>] 2.56kB/2.56kB

822099b2e3b2: Loading layer [==================================================>] 59.3MB/59.3MB

cf108c795455: Loading layer [==================================================>] 5.632kB/5.632kB

62a83d952ff2: Loading layer [==================================================>] 116.7kB/116.7kB

7bf175a36152: Loading layer [==================================================>] 44.03kB/44.03kB

4441f0d8f678: Loading layer [==================================================>] 60.25MB/60.25MB

23a6833cf837: Loading layer [==================================================>] 2.56kB/2.56kB

Loaded image: goharbor/harbor-core:v2.8.1

801a800b67d2: Loading layer [==================================================>] 8.913MB/8.913MB

d9a79d19a56c: Loading layer [==================================================>] 3.584kB/3.584kB

bfd5a24a5aff: Loading layer [==================================================>] 2.56kB/2.56kB

ab9e0495e5a4: Loading layer [==================================================>] 47.58MB/47.58MB

e698269817a3: Loading layer [==================================================>] 48.37MB/48.37MB

Loaded image: goharbor/harbor-jobservice:v2.8.1

03a2aada91d2: Loading layer [==================================================>] 8.914MB/8.914MB

7e9b36779e7c: Loading layer [==================================================>] 26.03MB/26.03MB

311b7e1783ab: Loading layer [==================================================>] 4.608kB/4.608kB

234f7d5646b6: Loading layer [==================================================>] 26.82MB/26.82MB

Loaded image: goharbor/harbor-exporter:v2.8.1

aeae73443c89: Loading layer [==================================================>] 6.303MB/6.303MB

180e7894c5f7: Loading layer [==================================================>] 4.096kB/4.096kB

c39eb239dac1: Loading layer [==================================================>] 3.072kB/3.072kB

33e480bb5daf: Loading layer [==================================================>] 190.7MB/190.7MB

5e7725650689: Loading layer [==================================================>] 14.02MB/14.02MB

082254237183: Loading layer [==================================================>] 205.5MB/205.5MB

Loaded image: goharbor/trivy-adapter-photon:v2.8.1

5ab51100a273: Loading layer [==================================================>] 44.12MB/44.12MB

e6b073edfdd1: Loading layer [==================================================>] 54.88MB/54.88MB

532a7d3d4545: Loading layer [==================================================>] 19.32MB/19.32MB

ca4477105cc0: Loading layer [==================================================>] 65.54kB/65.54kB

8063f11be909: Loading layer [==================================================>] 2.56kB/2.56kB

055b69c82eb8: Loading layer [==================================================>] 1.536kB/1.536kB

0cf7b58aed05: Loading layer [==================================================>] 12.29kB/12.29kB

0b1aec3e2e23: Loading layer [==================================================>] 2.621MB/2.621MB

c021a11238ab: Loading layer [==================================================>] 416.8kB/416.8kB

Loaded image: goharbor/prepare:v2.8.1

88930aa10578: Loading layer [==================================================>] 92.02MB/92.02MB

b50482ac5278: Loading layer [==================================================>] 3.072kB/3.072kB

cc01dc4dc405: Loading layer [==================================================>] 59.9kB/59.9kB

6c216ddc567c: Loading layer [==================================================>] 61.95kB/61.95kB

Loaded image: goharbor/redis-photon:v2.8.1

24ce6353ec70: Loading layer [==================================================>] 91.19MB/91.19MB

Loaded image: goharbor/nginx-photon:v2.8.1

1154fefbe123: Loading layer [==================================================>] 5.766MB/5.766MB

dc7cea2e4d6a: Loading layer [==================================================>] 9.138MB/9.138MB

134fc04ba5fc: Loading layer [==================================================>] 14.47MB/14.47MB

b6d242f92cde: Loading layer [==================================================>] 29.29MB/29.29MB

7d1cd5e4d8b9: Loading layer [==================================================>] 22.02kB/22.02kB

c177281aafad: Loading layer [==================================================>] 14.47MB/14.47MB

Loaded image: goharbor/notary-signer-photon:v2.8.1

f9beb14877a0: Loading layer [==================================================>] 91.19MB/91.19MB

fa05fcc84f95: Loading layer [==================================================>] 6.1MB/6.1MB

00b601283185: Loading layer [==================================================>] 1.233MB/1.233MB

Loaded image: goharbor/harbor-portal:v2.8.1

d60dd10d32bf: Loading layer [==================================================>] 99.1MB/99.1MB

fac8521dab17: Loading layer [==================================================>] 3.584kB/3.584kB

06df5ec7c26d: Loading layer [==================================================>] 3.072kB/3.072kB

7f8d4e9790a8: Loading layer [==================================================>] 2.56kB/2.56kB

b2f8961fe3c4: Loading layer [==================================================>] 3.072kB/3.072kB

511d29b75635: Loading layer [==================================================>] 3.584kB/3.584kB

348781020cf5: Loading layer [==================================================>] 20.48kB/20.48kB

Loaded image: goharbor/harbor-log:v2.8.1

d4149c9852da: Loading layer [==================================================>] 122.8MB/122.8MB

de435a1f145d: Loading layer [==================================================>] 17.79MB/17.79MB

e9fe18913a7e: Loading layer [==================================================>] 5.12kB/5.12kB

6fa165de532f: Loading layer [==================================================>] 6.144kB/6.144kB

d689ec984d7c: Loading layer [==================================================>] 3.072kB/3.072kB

20988d451ae8: Loading layer [==================================================>] 2.048kB/2.048kB

e734d72353b0: Loading layer [==================================================>] 2.56kB/2.56kB

179522f5118f: Loading layer [==================================================>] 2.56kB/2.56kB

340dc870b7d7: Loading layer [==================================================>] 2.56kB/2.56kB

44fe41ce86f3: Loading layer [==================================================>] 9.728kB/9.728kB

Loaded image: goharbor/harbor-db:v2.8.1

077d904710b1: Loading layer [==================================================>] 5.771MB/5.771MB

21f859b048bf: Loading layer [==================================================>] 4.096kB/4.096kB

2ec18f1f3eca: Loading layer [==================================================>] 17.57MB/17.57MB

24375769c4d2: Loading layer [==================================================>] 3.072kB/3.072kB

dbb394a12a4d: Loading layer [==================================================>] 31.12MB/31.12MB

4e18eca456a3: Loading layer [==================================================>] 49.48MB/49.48MB

Loaded image: goharbor/harbor-registryctl:v2.8.1

[Step 3]: preparing environment ...

[Step 4]: preparing harbor configs ...

prepare base dir is set to /home/harbor

Generated configuration file: /config/portal/nginx.conf

Generated configuration file: /config/log/logrotate.conf

Generated configuration file: /config/log/rsyslog_docker.conf

Generated configuration file: /config/nginx/nginx.conf

Generated configuration file: /config/core/env

Generated configuration file: /config/core/app.conf

Generated configuration file: /config/registry/config.yml

Generated configuration file: /config/registryctl/env

Generated configuration file: /config/registryctl/config.yml

Generated configuration file: /config/db/env

Generated configuration file: /config/jobservice/env

Generated configuration file: /config/jobservice/config.yml

Generated and saved secret to file: /data/secret/keys/secretkey

Successfully called func: create_root_cert

Successfully called func: create_root_cert

Successfully called func: create_cert

Copying certs for notary signer

Copying nginx configuration file for notary

Generated configuration file: /config/nginx/conf.d/notary.upstream.conf

Generated configuration file: /config/nginx/conf.d/notary.server.conf

Generated configuration file: /config/notary/server-config.postgres.json

Generated configuration file: /config/notary/server_env

Generated and saved secret to file: /data/secret/keys/defaultalias

Generated configuration file: /config/notary/signer_env

Generated configuration file: /config/notary/signer-config.postgres.json

Generated configuration file: /config/trivy-adapter/env

Generated configuration file: /compose_location/docker-compose.yml

Clean up the input dir

Note: stopping existing Harbor instance ...

[Step 5]: starting Harbor ...

➜

Notary will be deprecated as of Harbor v2.6.0 and start to be removed in v2.8.0 or later.

You can use cosign for signature instead since Harbor v2.5.0.

Please see discussion here for more details. https://github.com/goharbor/harbor/discussions/16612

[+] Running 15/15

⠿ Network harbor_notary-sig Created 0.2s

⠿ Network harbor_harbor Created 0.2s

⠿ Network harbor_harbor-notary Created 0.2s

⠿ Container harbor-log Started 1.4s

⠿ Container registryctl Started 2.6s

⠿ Container harbor-db Started 3.1s

⠿ Container harbor-portal Started 2.6s

⠿ Container redis Started 2.8s

⠿ Container registry Started 2.9s

⠿ Container notary-signer Started 4.3s

⠿ Container trivy-adapter Started 3.7s

⠿ Container harbor-core Started 4.6s

⠿ Container notary-server Started 5.7s

⠿ Container nginx Started 6.0s

⠿ Container harbor-jobservice Started 5.9s

✔ ----Harbor has been installed and started successfully.----

root@harbor:/home/harbor#

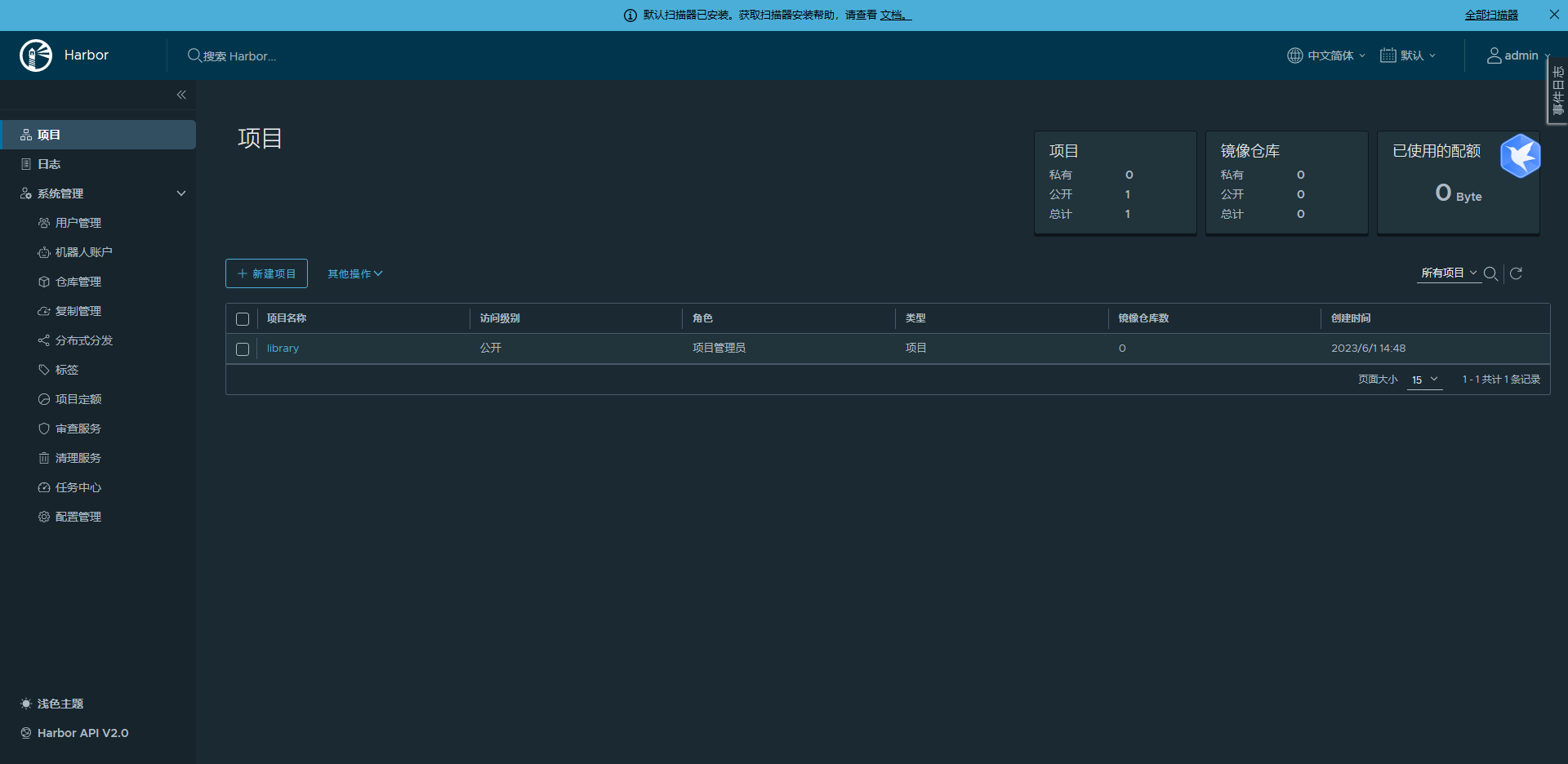

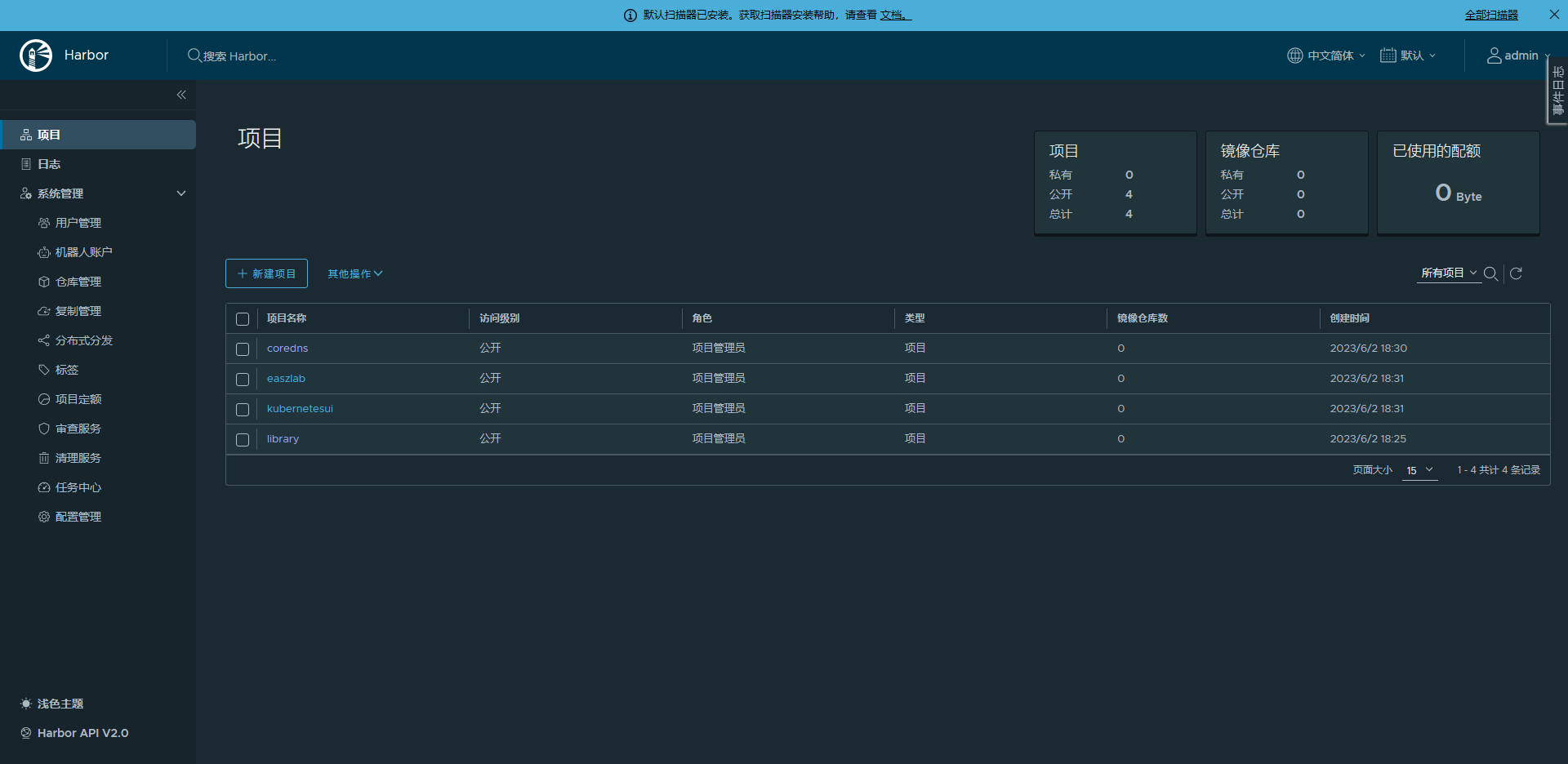

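2.4.5、Access and log in to harbor from a browser

Create the coredns, easzlab, kubernetesui, and calico projects; they are needed later.

2.4.6、Log in to harbor and test pushing and pulling images

#Log in to harbor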

root@harbor:/home/harbor# docker login https://server.ttfddy.com:11443

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

#Pull the alpine image

root@harbor:/home/harbor# docker pull alpine

Using default tag: latest

latest: Pulling from library/alpine

8a49fdb3b6a5: Pull complete

Digest: sha256:02bb6f428431fbc2809c5d1b41eab5a68350194fb508869a33cb1af4444c9b11

Status: Downloaded newer image for alpine:latest

docker.io/library/alpine:latest

#Retag the image for harbor

root@harbor:/home/harbor# docker tag alpine:latest server.ttfddy.com:11443/library/alpine:latest

#Push the image to harbor

root@harbor:/home/harbor# docker push server.ttfddy.com:11443/library/alpine:latest

The push refers to repository [server.ttfddy.com:11443/library/alpine]

bb01bd7e32b5: Pushed

latest: digest: sha256:c0669ef34cdc14332c0f1ab0c2c01acb91d96014b172f1a76f3a39e63d1f0bda size: 528

#Delete the local images

root@harbor:/home/harbor# docker rmi -f alpine:latest server.ttfddy.com:11443/library/alpine:latest

Untagged: alpine:latest

Untagged: alpine@sha256:02bb6f428431fbc2809c5d1b41eab5a68350194fb508869a33cb1af4444c9b11

Untagged: server.ttfddy.com:11443/library/alpine:latest

Untagged: server.ttfddy.com:11443/library/alpine@sha256:c0669ef34cdc14332c0f1ab0c2c01acb91d96014b172f1a76f3a39e63d1f0bda

Deleted: sha256:5e2b554c1c45d22c9d1aa836828828e320a26011b76c08631ac896cbc3625e3e

Deleted: sha256:bb01bd7e32b58b6694c8c3622c230171f1cec24001a82068a8d30d338f420d6c

#Pull the image back from harbor

root@harbor:/home/harbor# docker pull server.ttfddy.com:11443/library/alpine:latest

latest: Pulling from library/alpine

8a49fdb3b6a5: Pull complete

Digest: sha256:c0669ef34cdc14332c0f1ab0c2c01acb91d96014b172f1a76f3a39e63d1f0bda

Status: Downloaded newer image for server.ttfddy.com:11443/library/alpine:latest

server.ttfddy.com:11443/library/alpine:latest

root@harbor:/home/harbor#
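The tag-and-push sequence above follows one naming rule: registry address, then harbor project, then the image name and tag. Below is a minimal sketch of that rule as a reusable helper. The function names `harbor_ref` and `mirror_to_harbor` are hypothetical and not part of harbor or docker; the registry address is the one used in this walkthrough.

```shell
#!/bin/bash
# harbor_ref REGISTRY PROJECT IMAGE[:TAG]
# Print the reference an image gets inside a harbor project.
# Any upstream registry/namespace prefix is dropped; only name:tag is kept.
harbor_ref() {
  local registry=$1 project=$2 image=$3
  printf '%s/%s/%s\n' "$registry" "$project" "${image##*/}"
}

# mirror_to_harbor REGISTRY PROJECT IMAGE[:TAG]
# Pull an image, retag it for harbor, and push it.
# Assumes `docker login` against REGISTRY has already succeeded.
mirror_to_harbor() {
  local target
  target=$(harbor_ref "$1" "$2" "$3")
  docker pull "$3" && docker tag "$3" "$target" && docker push "$target"
}
```

For example, `mirror_to_harbor server.ttfddy.com:11443 library alpine:latest` would repeat the pull, tag, and push steps shown above, provided the `library` project exists.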

2.5、Install containerd and nerdctl on the k8s master, etcd, and node servers

2.5.1、Prepare the binary packages and the install script

#Download cri-containerd-cni-1.6.20-linux-amd64.tar.gz; this package bundles cri, containerd, and cni

root@harbor:~# wget https://github.com/containerd/containerd/releases/download/v1.6.20/cri-containerd-cni-1.6.20-linux-amd64.tar.gz

#Download nerdctl

root@harbor:~# wget https://github.com/containerd/nerdctl/releases/download/v1.3.1/nerdctl-1.3.1-linux-amd64.tar.gz

#Edit the install script

root@harbor:~# vim install.sh

#!/bin/bash

#Package name

package_name=cri-containerd-cni-1.6.20-linux-amd64.tar.gz

#Directory the package is extracted into

package_dir=./${package_name%%'.tar.gz'}

echo "Creating package directory: ${package_dir}"

#Create the extraction directory

mkdir -p ${package_dir}

echo "Extracting ${package_name} to ${package_dir}"

#Extract the package

tar xf ${package_name} -C ${package_dir}

echo "Installing cri, containerd, cni, and runc"

#Copy the files to their target directories

cp -r ${package_dir}/usr/local/bin /usr/local/

cp -r ${package_dir}/usr/local/sbin /usr/local/

cp -r ${package_dir}/opt/* /opt

cp -r ${package_dir}/etc/cni /etc/

cp -r ${package_dir}/etc/crictl.yaml /etc/

cp -r ${package_dir}/etc/systemd/system/containerd.service /lib/systemd/system/

echo "Initializing the containerd configuration"

#Create the containerd configuration directory

mkdir -p /etc/containerd

#Generate the default containerd configuration file

containerd config default > /etc/containerd/config.toml

#Get the line number of the registry mirrors section

num=`sed -n '/\[plugins."io.containerd.grpc.v1.cri".registry.mirrors\]/=' /etc/containerd/config.toml`

#Change the pause image address

sed -i "s#registry.k8s.io/pause:3.6#registry.aliyuncs.com/google_containers/pause:3.7#g" /etc/containerd/config.toml

#Add the registry mirror addresses

sed -i "${num} a\\ [plugins.\"io.containerd.grpc.v1.cri\".registry.mirrors.\"docker.io\"]" /etc/containerd/config.toml

let num=${num}+1

sed -i "${num} a\\ endpoint = [\"https://9916w1ow.mirror.aliyuncs.com\",\"https://server.ttfddy.com:11443\"]" /etc/containerd/config.toml

echo "Starting containerd"

#Start containerd and enable it at boot

systemctl daemon-reload

systemctl start containerd.service

systemctl enable containerd.service

echo "Installing nerdctl"

package_name=nerdctl-1.3.1-linux-amd64.tar.gz

package_dir=./${package_name%%'.tar.gz'}

echo "Creating package directory: ${package_dir}"

#Create the extraction directory

mkdir -p ${package_dir}

echo "Extracting ${package_name} to ${package_dir}"

#Extract the package

tar xf ${package_name} -C ${package_dir}

echo "Installing nerdctl"

#Copy the binary to its target directory

cp -r ${package_dir}/nerdctl /usr/local/bin/

echo "Initializing the nerdctl configuration"

mkdir -p /etc/nerdctl

cat > /etc/nerdctl/nerdctl.toml <<EOF

namespace = "k8s.io"

debug = false

debug_full = false

insecure_registry = true

EOF

echo "Installation complete"
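The script locates the `registry.mirrors` table by line number and appends the `docker.io` entry after it, so running it twice on the same config.toml would add the entry twice. The sketch below shows one way to make that step idempotent. It assumes GNU sed (as shipped with Ubuntu) and the containerd 1.6 config layout; `add_docker_mirror` is a hypothetical helper name, not part of containerd.

```shell
#!/bin/bash
# add_docker_mirror CONFIG ENDPOINTS
# Idempotently add a docker.io mirror entry below the registry.mirrors table.
# ENDPOINTS is the TOML list body, e.g. '"https://a","https://b"'.
add_docker_mirror() {
  local cfg=$1 endpoints=$2
  local header='[plugins."io.containerd.grpc.v1.cri".registry.mirrors]'
  local entry='[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]'

  # Nothing to do if the docker.io entry is already present.
  if grep -qF "$entry" "$cfg"; then
    return 0
  fi

  # Line number of the mirrors table header (first match only).
  local num
  num=$(grep -nF "$header" "$cfg" | head -n1 | cut -d: -f1)
  if [ -z "$num" ]; then
    echo "mirrors section not found in $cfg" >&2
    return 1
  fi

  # Both lines are appended right after the header; the endpoint line goes in
  # first so that the entry line ends up between the header and the endpoint.
  sed -i "${num}a\\          endpoint = [${endpoints}]" "$cfg"
  sed -i "${num}a\\        ${entry}" "$cfg"
}
```

A call such as `add_docker_mirror /etc/containerd/config.toml '"https://server.ttfddy.com:11443"'` could stand in for the three `sed`/`let` lines in install.sh; containerd still has to be restarted afterwards for the change to take effect.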

2.5.2、Prepare the ansible-playbook script

root@harbor:~# vim install-containerd.yaml

- hosts: k8s-master,k8s-etcd,k8s-node

  remote_user: root

  tasks:

    # Copy the nerdctl package to /tmp on every target host

    - name: "拷贝 nerdctl-1.3.1-linux-amd64.tar.gz 文件"

      copy:

        src: ./nerdctl-1.3.1-linux-amd64.tar.gz

        dest: /tmp/

        owner: root

        group: root

        mode: 0644

    # Copy the cri-containerd-cni package to /tmp

    - name: "拷贝 cri-containerd-cni-1.6.20-linux-amd64.tar.gz 文件"

      copy:

        src: ./cri-containerd-cni-1.6.20-linux-amd64.tar.gz

        dest: /tmp/

        owner: root

        group: root

        mode: 0644

    # Copy the install script to /tmp

    - name: "拷贝 install.sh 文件"

      copy:

        src: ./install.sh

        dest: /tmp/

        owner: root

        group: root

        mode: 0744

    # Run the install script from /tmp, where the packages were copied

    - name: "执行安装脚本"

      shell: cd /tmp/ && bash install.sh

2.5.3、Run the ansible-playbook script

root@harbor:~# ansible-playbook install-containerd.yaml

PLAY [k8s-master,k8s-etcd,k8s-node] ********************************************************************************************************************************************************************

TASK [Gathering Facts] *********************************************************************************************************************************************************************************

ok: [192.168.101.71]

ok: [192.168.101.72]

ok: [192.168.101.77]

ok: [192.168.101.76]

ok: [192.168.101.73]

ok: [192.168.101.74]

ok: [192.168.101.75]

ok: [192.168.101.78]

ok: [192.168.101.79]

TASK [拷贝 nerdctl-1.3.1-linux-amd64.tar.gz 文件] **********************************************************************************************************************************************************

changed: [192.168.101.71]

changed: [192.168.101.72]

changed: [192.168.101.75]

changed: [192.168.101.74]

changed: [192.168.101.73]

changed: [192.168.101.76]

changed: [192.168.101.78]

changed: [192.168.101.77]

changed: [192.168.101.79]

TASK [拷贝 cri-containerd-cni-1.6.20-linux-amd64.tar.gz 文件] **********************************************************************************************************************************************

changed: [192.168.101.72]

changed: [192.168.101.74]

changed: [192.168.101.73]

changed: [192.168.101.71]

changed: [192.168.101.75]

changed: [192.168.101.77]

changed: [192.168.101.79]

changed: [192.168.101.78]

changed: [192.168.101.76]

TASK [拷贝 install.sh 文件] ********************************************************************************************************************************************************************************

changed: [192.168.101.71]

changed: [192.168.101.73]

changed: [192.168.101.72]

changed: [192.168.101.74]

changed: [192.168.101.75]

changed: [192.168.101.76]

changed: [192.168.101.78]

changed: [192.168.101.79]

changed: [192.168.101.77]

TASK [执行安装脚本] ******************************************************************************************************************************************************************************************

changed: [192.168.101.72]

changed: [192.168.101.73]

changed: [192.168.101.75]

changed: [192.168.101.74]

changed: [192.168.101.71]

changed: [192.168.101.78]

changed: [192.168.101.76]

changed: [192.168.101.77]

changed: [192.168.101.79]

PLAY RECAP *********************************************************************************************************************************************************************************************

192.168.101.71 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.72 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.73 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.74 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.75 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.76 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.77 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.78 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.101.79 : ok=5 changed=4 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

root@harbor:~#
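With nine hosts the PLAY RECAP is still easy to read by eye, but the check can also be scripted. The sketch below assumes the playbook output was saved to a file or is piped in; `failed_hosts` is a hypothetical helper name. It prints every host whose recap line reports a non-zero `unreachable` or `failed` count.

```shell
#!/bin/bash
# failed_hosts: read ansible-playbook output on stdin and print each host
# whose PLAY RECAP line has unreachable > 0 or failed > 0.
failed_hosts() {
  awk '
    /^PLAY RECAP/ { in_recap = 1; next }
    in_recap && /unreachable=[0-9]+/ {
      bad = 0
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^(unreachable|failed)=/) {
          split($i, kv, "=")
          if (kv[2] + 0 > 0) bad = 1
        }
      }
      if (bad) print $1
    }
  '
}
```

One possible use is `ansible-playbook install-containerd.yaml | tee run.log` followed by `failed_hosts < run.log`; empty output means every host finished without failures.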

2.5.4、Check the containerd and nerdctl versions

root@harbor:~# ansible k8s-master,k8s-etcd,k8s-node -m shell -a "containerd -v && nerdctl version"

192.168.101.71 | CHANGED | rc=0 >>

containerd github.com/containerd/containerd v1.6.20 2806fc1057397dbaeefbea0e4e17bddfbd388f38

Client:

Version: v1.3.1

OS/Arch: linux/amd64

Git commit: b224b280ff3086516763c7335fc0e0997aca617a

buildctl:

Version:

Server:

containerd:

Version: v1.6.20

GitCommit: 2806fc1057397dbaeefbea0e4e17bddfbd388f38

runc:

Version: 1.1.5

GitCommit: v1.1.5-0-gf19387a6

time="2023-06-01T08:09:07Z" level=warning msg="unable to determine buildctl version: exec: \"buildctl\": executable file not found in $PATH"

#Some lines omitted……

root@harbor:~#
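The version line printed by `containerd -v` has a fixed shape (program name, module path, version, commit), so the check can be automated per host. This is a minimal sketch; `containerd_version` and `check_version` are hypothetical helper names.

```shell
#!/bin/bash
# containerd_version: extract the version field from `containerd -v` output,
# e.g. "containerd github.com/containerd/containerd v1.6.20 <commit>".
containerd_version() {
  awk '$1 == "containerd" { print $3; exit }'
}

# check_version EXPECTED: succeed only if stdin reports exactly EXPECTED.
check_version() {
  local expected=$1 actual
  actual=$(containerd_version)
  if [ "$actual" = "$expected" ]; then
    echo "ok: containerd ${actual}"
  else
    echo "mismatch: expected ${expected}, got ${actual:-nothing}" >&2
    return 1
  fi
}
```

On a target host this could be run as `containerd -v | check_version v1.6.20`.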

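3、Deploy the k8s cluster with kubeasz

kubeasz aims to be a tool for quickly deploying highly available k8s clusters, and also strives to be a reference for k8s practice and usage. It deploys from binaries and automates the work with ansible-playbook. It provides a one-click install script, and each component can also be installed step by step by following the installation guide.

kubeasz assembles a complete cluster from individual components, which gives it very flexible configuration: almost any parameter of any component can be set. It also ships a well-tested set of defaults for cluster creation, and can even automate the BGP Route Reflector network mode suited to large clusters.

The deployment node used here is the harbor server 192.168.101.67 (docker already installed). Its main roles are:

- Download installation resources from the internet

- Retag some images and push them to the company's internal image registry (optional)

- Initialize the master nodes

- Initialize the worker nodes

- Ongoing cluster maintenance

  - Add and remove master nodes

  - Add and remove worker nodes

  - Back up and restore etcd data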

3.1、Download the kubeasz project and components

#Download ezdown

root@harbor:/home# export release=3.5.3

root@harbor:/home# wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

root@harbor:/home# chmod +x ezdown

#Component versions can be customized by editing the ezdown file

root@harbor:/home/# vim ezdown

#!/bin/bash

#--------------------------------------------------

# This script is used for:

# 1. to download the scripts/binaries/images needed for installing a k8s cluster with kubeasz

# 2. to run kubeasz in a container (recommended)

# @author: gjmzj

# @usage: ./ezdown

# @repo: https://github.com/easzlab/kubeasz

#--------------------------------------------------

set -o nounset

set -o errexit

set -o pipefail

#set -o xtrace

# default settings, can be overridden by cmd line options, see usage

DOCKER_VER=20.10.22

KUBEASZ_VER=3.5.3

K8S_BIN_VER=v1.26.4

EXT_BIN_VER=1.7.1

SYS_PKG_VER=0.5.2

HARBOR_VER=v2.6.3

REGISTRY_MIRROR=CN

# images downloaded by default(with '-D')

calicoVer=v3.24.5

dnsNodeCacheVer=1.22.13

corednsVer=1.9.3

dashboardVer=v2.7.0

dashboardMetricsScraperVer=v1.0.8

metricsVer=v0.5.2

pauseVer=3.9

#Remainder omitted……

#Change the default registry address

root@harbor:/home# sed -i "s/easzlab.io.local:5000/server.ttfddy.com:11443/g" ezdown

root@harbor:/home# sed -i "s/easzlab.io.local/server.ttfddy.com/g" ezdown
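The order of the two substitutions matters: the `host:port` form is rewritten first, so the bare-hostname rule that follows cannot leave the old `:5000` port attached to the new hostname. The sketch below wraps both rules in a filter that can be tried on sample text before touching ezdown. `rewrite_registry` is a hypothetical name, and the dots are escaped here so they match only literal dots.

```shell
#!/bin/bash
# rewrite_registry [HOST] [PORT]
# Filter stdin, replacing kubeasz's default local registry with a private one.
# Rule 1 handles "easzlab.io.local:5000"; rule 2 handles the bare hostname.
rewrite_registry() {
  local new_host=${1:-server.ttfddy.com} new_port=${2:-11443}
  sed -e "s/easzlab\.io\.local:5000/${new_host}:${new_port}/g" \
      -e "s/easzlab\.io\.local/${new_host}/g"
}
```

Running `rewrite_registry < ezdown | diff ezdown -` would preview the changes without modifying the file.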

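#Running ./ezdown -D first checks whether docker is installed; if it is not, the script downloads and installs it. It then downloads the base container images and related files (default download directory: /etc/kubeasz/). Once the base images are downloaded, they are retagged and pushed to the harbor registry configured above.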

root@harbor:/home/# ./ezdown -D

#After the download finishes, check the downloaded files and images

root@harbor:/home# ll /etc/kubeasz/

total 140

drwxrwxr-x 12 root root 4096 Jun 1 09:20 ./

drwxr-xr-x 101 root root 4096 Jun 1 09:20 ../

-rw-rw-r-- 1 root root 20304 May 28 04:32 ansible.cfg

drwxr-xr-x 4 root root 4096 Jun 1 09:20 bin/

drwxrwxr-x 8 root root 4096 May 28 04:38 docs/

drwxr-xr-x 3 root root 4096 Jun 1 09:29 down/

drwxrwxr-x 2 root root 4096 May 28 04:38 example/

-rwxrwxr-x 1 root root 26507 May 28 04:32 ezctl*

-rwxrwxr-x 1 root root 32185 May 28 04:32 ezdown*

drwxrwxr-x 4 root root 4096 May 28 04:38 .github/

-rw-rw-r-- 1 root root 301 May 28 04:32 .gitignore

drwxrwxr-x 10 root root 4096 May 28 04:38 manifests/

drwxrwxr-x 2 root root 4096 May 28 04:38 pics/

drwxrwxr-x 2 root root 4096 May 28 04:38 playbooks/

-rw-rw-r-- 1 root root 5997 May 28 04:32 README.md

drwxrwxr-x 22 root root 4096 May 28 04:38 roles/

drwxrwxr-x 2 root root 4096 May 28 04:38 tools/

root@harbor:/home# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry 2 65f3b3441f04 3 weeks ago 24MB

easzlab/kubeasz 3.5.3 de7dadc6de4a 4 weeks ago 157MB

easzlab/kubeasz-k8s-bin v1.26.4 6d2b67b39575 6 weeks ago 1.17GB

easzlab/kubeasz-ext-bin 1.7.1 5c1895de99b2 2 months ago 606MB

calico/kube-controllers v3.24.5 38b76de417d5 6 months ago 71.4MB

server.ttfddy.com:11443/calico/kube-controllers v3.24.5 38b76de417d5 6 months ago 71.4MB

calico/cni v3.24.5 628dd7088041 6 months ago 198MB

server.ttfddy.com:11443/calico/cni v3.24.5 628dd7088041 6 months ago 198MB

server.ttfddy.com:11443/calico/node v3.24.5 54637cb36d4a 6 months ago 226MB

calico/node v3.24.5 54637cb36d4a 6 months ago 226MB

server.ttfddy.com:11443/easzlab/pause 3.9 78d53e70b442 7 months ago 744kB

easzlab/pause 3.9 78d53e70b442 7 months ago 744kB

easzlab/k8s-dns-node-cache 1.22.13 7b3b529c5a5a 8 months ago 64.3MB

server.ttfddy.com:11443/easzlab/k8s-dns-node-cache 1.22.13 7b3b529c5a5a 8 months ago 64.3MB

kubernetesui/dashboard v2.7.0 07655ddf2eeb 8 months ago 246MB

server.ttfddy.com:11443/kubernetesui/dashboard v2.7.0 07655ddf2eeb 8 months ago 246MB

server.ttfddy.com:11443/kubernetesui/metrics-scraper v1.0.8 115053965e86 12 months ago 43.8MB

kubernetesui/metrics-scraper v1.0.8 115053965e86 12 months ago 43.8MB

coredns/coredns 1.9.3 5185b96f0bec 12 months ago 48.8MB

server.ttfddy.com:11443/coredns/coredns 1.9.3 5185b96f0bec 12 months ago 48.8MB

easzlab/metrics-server v0.5.2 f965999d664b 18 months ago 64.3MB

server.ttfddy.com:11443/easzlab/metrics-server v0.5.2 f965999d664b 18 months ago 64.3MB

root@harbor:/home#

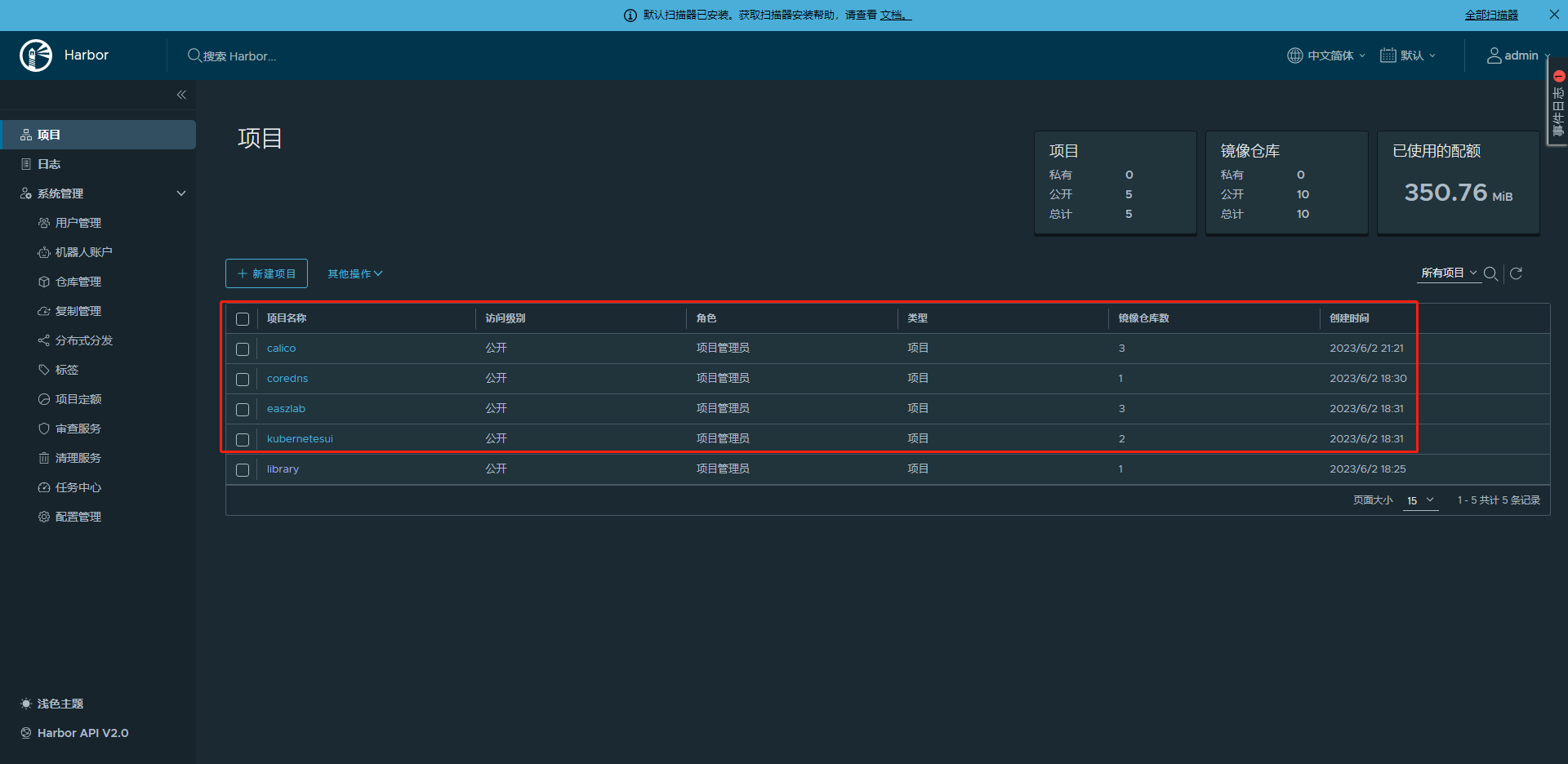

Images in the harbor registry

3.2、Create the cluster configuration files with ezctl

root@harbor:/home# cd /etc/kubeasz/

root@harbor:/etc/kubeasz# ./ezctl new k8s-cluster

2023-06-02 03:15:50 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-cluster

2023-06-02 03:15:51 DEBUG set versions

2023-06-02 03:15:51 DEBUG cluster k8s-cluster: files successfully created.

2023-06-02 03:15:51 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-cluster/hosts'

2023-06-02 03:15:51 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-cluster/config.yml'

root@harbor:/etc/kubeasz#

3.3、Configure the cluster hosts file: specify the etcd, master, and worker nodes, the VIP, the container runtime, the network plugin type, the service IP and pod IP ranges, and other settings.

root@harbor:/etc/kubeasz# vim /etc/kubeasz/clusters/k8s-cluster/hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)

[etcd]

192.168.101.74

192.168.101.75

192.168.101.76

# master node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

[kube_master]

192.168.101.71 k8s_nodename='192.168.101.71'

192.168.101.72 k8s_nodename='192.168.101.72'

# work node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

[kube_node]

192.168.101.77 k8s_nodename='192.168.101.77'

192.168.101.78 k8s_nodename='192.168.101.78'

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

#192.168.1.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside

[ex_lb]

#192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

#192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

[chrony]

#192.168.1.1

[all:vars]

# --------- Main Variables ---------------

# Secure port for apiservers

SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd

# if k8s version >= 1.24, docker is not supported

CONTAINER_RUNTIME="containerd"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.100.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.200.0.0/16"

# NodePort Range

NODE_PORT_RANGE="30000-62767"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="cluster.local"

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/opt/kube/bin"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz"

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s-cluster"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"

# Default 'k8s_nodename' is empty

k8s_nodename=''
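The CAUTION comments in the hosts file constrain `k8s_nodename`, and the kubeasz config.yml comments give the regex used for validation. A name can be checked against that regex before it goes into the inventory. This is a minimal sketch in bash; `valid_nodename` is a hypothetical helper, and the `^`/`$` anchors are added here so the whole name has to match.

```shell
#!/bin/bash
# valid_nodename NAME: succeed if NAME satisfies the k8s_nodename rule:
# lower-case alphanumerics, '-' or '.', starting and ending with an alphanumeric.
valid_nodename() {
  local re='^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'
  [[ $1 =~ $re ]]
}

# Report on a few candidate names.
for name in 192.168.101.71 k8s-master-01 K8S_Master -node; do
  if valid_nodename "$name"; then
    echo "valid:   ${name}"
  else
    echo "invalid: ${name}"
  fi
done
```

IP addresses such as the ones used in this inventory pass the check, while names with upper-case letters, underscores, or a leading hyphen do not.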

3.4、Configure the cluster config.yml file

root@harbor:/etc/kubeasz# vim /etc/kubeasz/clusters/k8s-cluster/config.yml

root@harbor:/etc/kubeasz# cat /etc/kubeasz/clusters/k8s-cluster/config.yml

############################

# prepare

############################

# Optional: install system packages offline (offline|online)

INSTALL_SOURCE: "online"

# Optional: apply OS security hardening, see github.com/dev-sec/ansible-collection-hardening

OS_HARDEN: false

############################

# role:deploy

############################

# default: ca will expire in 100 years

# default: certs issued by the ca will expire in 50 years

CA_EXPIRY: "876000h"

CERT_EXPIRY: "876000h"

# force to recreate CA and other certs, not suggested to set 'true'

CHANGE_CA: false

# kubeconfig parameters

CLUSTER_NAME: "cluster1"

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

# k8s version

K8S_VER: "1.26.4"

# set unique 'k8s_nodename' for each node, if not set(default:'') ip add will be used

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character (e.g. 'example.com'),

# regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'

K8S_NODENAME: "{%- if k8s_nodename != '' -%} \

{{ k8s_nodename|replace('_', '-')|lower }} \

{%- else -%} \

{{ inventory_hostname }} \

{%- endif -%}"

############################

# role:etcd

############################

# Using a separate WAL directory avoids disk I/O contention and improves performance

ETCD_DATA_DIR: "/var/lib/etcd"

ETCD_WAL_DIR: ""

############################

# role:runtime [containerd,docker]

############################

# ------------------------------------------- containerd

# [.] Enable registry mirrors

ENABLE_MIRROR_REGISTRY: true

# [containerd] Base (pause) container image

SANDBOX_IMAGE: "server.ttfddy.com:11443/easzlab/pause:3.9"

# [containerd] Persistent container storage directory

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker

# [docker] Container storage directory

DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker] Enable the RESTful API

ENABLE_REMOTE_API: false

# [docker] Trusted HTTP registries

INSECURE_REG: '["http://easzlab.io.local:5000"]'

############################

# role:kube-master

############################

# Certificate hosts for the k8s master nodes; multiple IPs and domain names can be added (for example a public IP and domain)

MASTER_CERT_HOSTS:

- "192.168.101.70"

- "server.ttfddy.top"

#- "www.test.com"

# Pod subnet mask length per node (determines the maximum number of pod IPs each node can allocate)

# If flannel runs with --kube-subnet-mgr, it reads this setting to assign a pod subnet to each node

# https://github.com/coreos/flannel/issues/847

NODE_CIDR_LEN: 24

############################

# role:kube-node

############################

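# Kubelet root directory

KUBELET_ROOT_DIR: "/var/lib/kubelet"

# Maximum number of pods per node

MAX_PODS: 110

# Resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)

# See templates/kubelet-config.yaml.j2 for the values

KUBE_RESERVED_ENABLED: "no"

# Upstream k8s advises against enabling system-reserved casually, unless long-term monitoring has shown you the system's resource usage;

# the reservation also needs to grow as the system's uptime increases; see templates/kubelet-config.yaml.j2 for the values

# The system reservation assumes a 4c/8g VM with a minimal set of system services; increase it on high-performance physical machines

# Also, apiserver and other components briefly use more resources during cluster installation, so reserve at least 1g of memory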

SYS_RESERVED_ENABLED: "no"

############################

# role:network [flannel,calico,cilium,kube-ovn,kube-router]

############################

# ------------------------------------------- flannel

# [flannel] Set the flannel backend: "host-gw", "vxlan", etc.

FLANNEL_BACKEND: "vxlan"

DIRECT_ROUTING: false

# [flannel]

flannel_ver: "v0.19.2"

# ------------------------------------------- calico

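# [calico] IPIP tunnel mode, one of [Always, CrossSubnet, Never]. Across subnets use Always or CrossSubnet (on public clouds Always is the simplest choice; otherwise the cloud's network configuration has to be changed, see each provider's documentation)

# CrossSubnet is a hybrid of tunneling and BGP routing that can improve network performance; within a single subnet, Never is sufficient.

CALICO_IPV4POOL_IPIP: "Always"

# [calico] Host IP used by calico-node; BGP peers connect through this address. It can be set manually or auto-detected

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] Set the calico network backend: bird, vxlan, none

CALICO_NETWORKING_BACKEND: "bird"

# [calico] Whether calico uses route reflectors

# Recommended when the cluster has more than 50 nodes

CALICO_RR_ENABLED: false

# CALICO_RR_NODES sets the route reflector nodes; if unset, the cluster master nodes are used

# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]

CALICO_RR_NODES: []

# [calico] Supported calico versions: ["3.19", "3.23"]

calico_ver: "v3.24.5"

# [calico] calico major version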

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# ------------------------------------------- cilium

# [cilium] Image version

cilium_ver: "1.12.4"

cilium_connectivity_check: true

cilium_hubble_enabled: false

cilium_hubble_ui_enabled: false

# ------------------------------------------- kube-ovn

# [kube-ovn] Node for the OVN DB and OVN Control Plane; defaults to the first master node

OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn] Offline image tarball

kube_ovn_ver: "v1.5.3"

# ------------------------------------------- kube-router

# [kube-router] Public clouds impose restrictions and usually need ipinip always on; in your own environment this can be set to "subnet"

OVERLAY_TYPE: "full"

# [kube-router] NetworkPolicy support switch

FIREWALL_ENABLE: true

# [kube-router] kube-router image version

kube_router_ver: "v0.3.1"

busybox_ver: "1.28.4"

############################

# role:cluster-addon

############################

# Install coredns automatically

dns_install: "yes"

corednsVer: "1.9.3"

ENABLE_LOCAL_DNS_CACHE: false

dnsNodeCacheVer: "1.22.13"

# Address of the local dns cache

LOCAL_DNS_CACHE: "169.254.20.10"

# Install metrics server automatically

metricsserver_install: "yes"

metricsVer: "v0.5.2"

# Install dashboard automatically

dashboard_install: "yes"

dashboardVer: "v2.7.0"

dashboardMetricsScraperVer: "v1.0.8"

# Install prometheus automatically

prom_install: "no"

prom_namespace: "monitor"

prom_chart_ver: "39.11.0"

# Install nfs-provisioner automatically

nfs_provisioner_install: "no"

nfs_provisioner_namespace: "kube-system"

nfs_provisioner_ver: "v4.0.2"

nfs_storage_class: "managed-nfs-storage"

nfs_server: "192.168.1.10"

nfs_path: "/data/nfs"

# Install network-check automatically

network_check_enabled: false

network_check_schedule: "*/5 * * * *"

############################

# role:harbor

############################

# harbor version, full version number

HARBOR_VER: "v2.6.3"

HARBOR_DOMAIN: "harbor.easzlab.io.local"

HARBOR_PATH: /var/data

HARBOR_TLS_PORT: 8443

HARBOR_REGISTRY: "{{ HARBOR_DOMAIN }}:{{ HARBOR_TLS_PORT }}"

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'

HARBOR_SELF_SIGNED_CERT: true

# install extra component

HARBOR_WITH_NOTARY: false

HARBOR_WITH_TRIVY: false

HARBOR_WITH_CHARTMUSEUM: true
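The interaction between `CLUSTER_CIDR`, `NODE_CIDR_LEN`, and `MAX_PODS` comes down to prefix-length arithmetic, worked through below with the values from this configuration. This is only a sketch of the arithmetic: depending on the network plugin's own IPAM (Calico manages its own address blocks), the actual per-node allocation can differ.

```shell
#!/bin/bash
# Values taken from the hosts and config.yml files of this walkthrough.
cluster_prefix=16    # CLUSTER_CIDR="10.200.0.0/16"
node_prefix=24       # NODE_CIDR_LEN: 24
max_pods=110         # MAX_PODS: 110

# Number of per-node subnets that fit into the cluster CIDR.
node_subnets=$(( 1 << (node_prefix - cluster_prefix) ))
# Addresses in each per-node subnet (network and broadcast addresses included).
addrs_per_node=$(( 1 << (32 - node_prefix) ))

echo "per-node subnets: ${node_subnets}"
echo "addresses per node subnet: ${addrs_per_node}"
if [ "$max_pods" -le "$addrs_per_node" ]; then
  echo "MAX_PODS=${max_pods} fits inside a /${node_prefix}"
else
  echo "MAX_PODS=${max_pods} exceeds a /${node_prefix}" >&2
fi
```

With a /16 cluster range and /24 node subnets there is room for 256 node subnets of 256 addresses each, so the 110-pod limit per node fits comfortably.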

3.5、Initialize the environment

#Help for the ezctl command

root@harbor:/etc/kubeasz# ./ezctl --help

Usage: ezctl COMMAND [args]

-------------------------------------------------------------------------------------

Cluster setups:

list to list all of the managed clusters

checkout <cluster> to switch default kubeconfig of the cluster

new <cluster> to start a new k8s deploy with name 'cluster'

setup <cluster> <step> to setup a cluster, also supporting a step-by-step way

start <cluster> to start all of the k8s services stopped by 'ezctl stop'

stop <cluster> to stop all of the k8s services temporarily

upgrade <cluster> to upgrade the k8s cluster

destroy <cluster> to destroy the k8s cluster

backup <cluster> to backup the cluster state (etcd snapshot)

restore <cluster> to restore the cluster state from backups

start-aio to quickly setup an all-in-one cluster with default settings

Cluster ops:

add-etcd <cluster> <ip> to add a etcd-node to the etcd cluster

add-master <cluster> <ip> to add a master node to the k8s cluster

add-node <cluster> <ip> to add a work node to the k8s cluster

del-etcd <cluster> <ip> to delete a etcd-node from the etcd cluster

del-master <cluster> <ip> to delete a master node from the k8s cluster

del-node <cluster> <ip> to delete a work node from the k8s cluster

Extra operation:

kca-renew <cluster> to force renew CA certs and all the other certs (with caution)

kcfg-adm <cluster> <args> to manage client kubeconfig of the k8s cluster

Use "ezctl help <command>" for more information about a given command.

#Help for the ezctl setup command

root@harbor:/etc/kubeasz# ./ezctl setup help

Usage: ezctl setup <cluster> <step>

available steps:

01 prepare to prepare CA/certs & kubeconfig & other system settings

02 etcd to setup the etcd cluster

03 container-runtime to setup the container runtime(docker or containerd)

04 kube-master to setup the master nodes

05 kube-node to setup the worker nodes

06 network to setup the network plugin

07 cluster-addon to setup other useful plugins

90 all to run 01~07 all at once

10 ex-lb to install external loadbalance for accessing k8s from outside

11 harbor to install a new harbor server or to integrate with an existed one

examples: ./ezctl setup test-k8s 01 (or ./ezctl setup test-k8s prepare)

./ezctl setup test-k8s 02 (or ./ezctl setup test-k8s etcd)

./ezctl setup test-k8s all

./ezctl setup test-k8s 04 -t restart_master

root@harbor:/etc/kubeasz#

#ansible-playbook files used by kubeasz

root@harbor:/etc/kubeasz# ll playbooks/

total 100

drwxrwxr-x 2 root root 4096 May 28 04:38 ./

drwxrwxr-x 13 root root 4096 Jun 2 03:15 ../

-rw-rw-r-- 1 root root 443 May 28 04:32 01.prepare.yml

-rw-rw-r-- 1 root root 58 May 28 04:32 02.etcd.yml

-rw-rw-r-- 1 root root 209 May 28 04:32 03.runtime.yml

-rw-rw-r-- 1 root root 104 May 28 04:32 04.kube-master.yml

-rw-rw-r-- 1 root root 218 May 28 04:32 05.kube-node.yml

-rw-rw-r-- 1 root root 408 May 28 04:32 06.network.yml

-rw-rw-r-- 1 root root 72 May 28 04:32 07.cluster-addon.yml

-rw-rw-r-- 1 root root 34 May 28 04:32 10.ex-lb.yml

-rw-rw-r-- 1 root root 1157 May 28 04:32 11.harbor.yml

-rw-rw-r-- 1 root root 1567 May 28 04:32 21.addetcd.yml

-rw-rw-r-- 1 root root 667 May 28 04:32 22.addnode.yml

-rw-rw-r-- 1 root root 682 May 28 04:32 23.addmaster.yml

-rw-rw-r-- 1 root root 3280 May 28 04:32 31.deletcd.yml

-rw-rw-r-- 1 root root 2180 May 28 04:32 32.delnode.yml

-rw-rw-r-- 1 root root 2233 May 28 04:32 33.delmaster.yml

-rw-rw-r-- 1 root root 1509 May 28 04:32 90.setup.yml

-rw-rw-r-- 1 root root 1051 May 28 04:32 91.start.yml

-rw-rw-r-- 1 root root 931 May 28 04:32 92.stop.yml

-rw-rw-r-- 1 root root 1042 May 28 04:32 93.upgrade.yml

-rw-rw-r-- 1 root root 1779 May 28 04:32 94.backup.yml

-rw-rw-r-- 1 root root 999 May 28 04:32 95.restore.yml

-rw-rw-r-- 1 root root 1416 May 28 04:32 96.update-certs.yml

-rw-rw-r-- 1 root root 337 May 28 04:32 99.clean.yml

root@harbor:/etc/kubeasz#

root@harbor:/etc/kubeasz# ./ezctl setup k8s-cluster 01 #Prepare the CA and initialize the base environment
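This walkthrough runs the setup steps one at a time and verifies the result after each. When the steps are chained, it helps to stop at the first failure so a later step never runs on a half-configured cluster. This is a minimal sketch; `run_steps` and the `EZCTL` override variable are hypothetical and not part of kubeasz.

```shell
#!/bin/bash
# run_steps CLUSTER STEP...
# Run `ezctl setup CLUSTER STEP` for each step in order; stop at the first failure.
# EZCTL may point at a different binary; it defaults to ./ezctl.
run_steps() {
  local cluster=$1 step
  shift
  for step in "$@"; do
    echo "==> ezctl setup ${cluster} ${step}"
    if ! "${EZCTL:-./ezctl}" setup "$cluster" "$step"; then
      echo "step ${step} failed, stopping" >&2
      return 1
    fi
  done
}
```

From /etc/kubeasz, `run_steps k8s-cluster 01 02` would cover the preparation and etcd steps. Step 03 (container-runtime) is left out of this example on the assumption that containerd was already installed by the playbook in section 2.5.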

3.6、Deploy the etcd cluster

root@harbor:/etc/kubeasz# ./ezctl setup k8s-cluster 02 #Deploy the etcd cluster

3.7、Verify the etcd service status on each etcd server

root@k8s-etcd-01:~# export NODE_IPS="192.168.101.74 192.168.101.75 192.168.101.76"

root@k8s-etcd-01:~# for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/local/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done

https://192.168.101.74:2379 is healthy: successfully committed proposal: took = 41.036731ms

https://192.168.101.75:2379 is healthy: successfully committed proposal: took = 46.839739ms

https://192.168.101.76:2379 is healthy: successfully committed proposal: took = 48.358475ms

#Note: the output above means the etcd cluster is running normally; any other result indicates a problem.
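etcd stays writable only while a majority of members is healthy, which is why three members tolerate one failure. The sketch below counts healthy endpoints in the output of the loop above and compares the count with the quorum size. The function names are hypothetical; the health output is read from stdin.

```shell
#!/bin/bash
# healthy_count: count "is healthy" lines in `etcdctl endpoint health` output.
healthy_count() {
  grep -c 'is healthy' || true
}

# quorum N: smallest majority of an N-member etcd cluster.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# has_quorum MEMBERS: read health output on stdin; succeed if a majority is healthy.
has_quorum() {
  local members=$1 healthy
  healthy=$(healthy_count)
  echo "healthy ${healthy}/${members}, quorum $(quorum "$members")"
  [ "$healthy" -ge "$(quorum "$members")" ]
}
```

Piping the health-check loop into `has_quorum 3` gives a single pass/fail result for the three-member cluster used here.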

3.8、Deploy the kubernetes master nodes

root@harbor:/etc/kubeasz# vim roles/kube-master/tasks/main.yml #Optional custom configuration

root@harbor:/etc/kubeasz# ./ezctl setup k8s-cluster 04

#Verify the master nodes

root@harbor:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.101.71 Ready,SchedulingDisabled master 2m5s v1.26.4

192.168.101.72 Ready,SchedulingDisabled master 2m4s v1.26.4

root@harbor:/etc/kubeasz#

3.9、Deploy the kubernetes worker nodes

root@harbor:/etc/kubeasz# vim roles/kube-node/tasks/main.yml #Optional custom configuration

root@harbor:/etc/kubeasz# ./ezctl setup k8s-cluster 05

#Verify the worker nodes

root@harbor:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.101.71 Ready,SchedulingDisabled master 6m21s v1.27.2

192.168.101.72 Ready,SchedulingDisabled master 6m21s v1.27.2

192.168.101.77 Ready node 52s v1.27.2

192.168.101.78 Ready node 52s v1.27.2

root@harbor:/etc/kubeasz#
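Reading the STATUS column by eye works for a handful of nodes. For a scripted check, the output of `kubectl get node --no-headers` can be filtered instead. The sketch below runs on a canned sample so it needs no cluster; the helper name `not_ready_nodes` and the `NotReady` row are made up for illustration.

```shell
#!/bin/sh
# Read `kubectl get node --no-headers` output on stdin and print the name of
# every node whose STATUS column does not start with "Ready".
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Canned sample; the NotReady row is invented to show a failing node.
sample='192.168.101.71 Ready,SchedulingDisabled master 6m21s v1.27.2
192.168.101.77 Ready node 52s v1.27.2
192.168.101.78 NotReady node 52s v1.27.2'
printf '%s\n' "${sample}" | not_ready_nodes

# Against a live cluster:
#   kubectl get node --no-headers | not_ready_nodes
```

An empty result means every node reports Ready. `kubectl wait --for=condition=Ready node --all --timeout=120s` is an alternative that blocks until the nodes are ready or the timeout expires.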

3.10、Deploy the calico network service

#Upload the calico images to harbor

root@harbor:/home/harbor# docker images |grep calico

calico/kube-controllers v3.24.6 baf4466ddf40 2 weeks ago 77.5MB

easzlab.io.local:5000/calico/kube-controllers v3.24.6 baf4466ddf40 2 weeks ago 77.5MB

calico/cni v3.24.6 ca9fea5e07cb 2 weeks ago 212MB

easzlab.io.local:5000/calico/cni v3.24.6 ca9fea5e07cb 2 weeks ago 212MB

easzlab.io.local:5000/calico/node v3.24.6 3953a481aa9d 2 weeks ago 245MB

calico/node v3.24.6 3953a481aa9d 2 weeks ago 245MB

root@harbor:/home/harbor# docker tag calico/cni:v3.24.6 server.ttfddy.com:11443/calico/cni:v3.24.6

root@harbor:/home/harbor# docker tag calico/node:v3.24.6 server.ttfddy.com:11443/calico/node:v3.24.6

root@harbor:/home/harbor# docker tag calico/kube-controllers:v3.24.6 server.ttfddy.com:11443/calico/kube-controllers:v3.24.6

root@harbor:/home/harbor# docker push server.ttfddy.com:11443/calico/cni:v3.24.6

The push refers to repository [server.ttfddy.com:11443/calico/cni]

5f70bf18a086: Pushed

b389d3258e98: Pushed

7d40c15cfd5f: Pushed

a453462bed94: Pushed

cd688847c455: Pushed

27c790b8960b: Pushed

55f42738c8f8: Pushed

07c0e03e87fc: Pushed

824597fb3463: Pushed

fdb0cd1665af: Pushed

0616c2c5b195: Pushed

140e74389dc1: Pushed

v3.24.6: digest: sha256:64ab9209ea185075284e351641579e872ab1be0cf138b38498de2c0bfdb9c148 size: 2823

root@harbor:/home/harbor# docker push server.ttfddy.com:11443/calico/node:v3.24.6

The push refers to repository [server.ttfddy.com:11443/calico/node]

b7fdcf9445c4: Pushed

f3e30e2af6fd: Pushed

v3.24.6: digest: sha256:6c3f839eb73a5798516cceff77577b73649b90a35d97d063056d11cb23012a49 size: 737

root@harbor:/home/harbor# docker push server.ttfddy.com:11443/calico/kube-controllers:v3.24.6

The push refers to repository [server.ttfddy.com:11443/calico/kube-controllers]

647bba5c6667: Pushed

52c4ff8ddede: Pushed

46b843b0be2f: Pushed

5e55970e7692: Pushed

495b022439d9: Pushed

4e8dfff27edf: Pushed

467ece65ac83: Pushed

477262ca4b6b: Pushed

44eec868c2e0: Pushed

3356e33b915c: Pushed

48d8058bfaec: Pushed

498031f2a874: Pushed

95b7dba08a03: Pushed

69b1067cc55d: Pushed

v3.24.6: digest: sha256:d6c9b2023db4488eb8bcc16214089302047bcceb43ad33f2f63f04878d98fd52 size: 3240

root@harbor:/home/harbor#
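The three `docker tag` and three `docker push` commands above follow one pattern, so they can be generated from a list of images. The sketch below only prints the commands (a dry run); the helper name `retag_cmds` is hypothetical, and the registry address and image names are the ones used above.

```shell
#!/bin/sh
# Print the docker tag/push commands needed to copy images into a private
# registry. Nothing is executed here; pipe the output to `sh` to run it.
# $1 = target registry, remaining arguments = image references (name:tag).
retag_cmds() {
  registry="$1"; shift
  for image in "$@"; do
    echo "docker tag ${image} ${registry}/${image}"
    echo "docker push ${registry}/${image}"
  done
}

retag_cmds server.ttfddy.com:11443 \
  calico/cni:v3.24.6 calico/node:v3.24.6 calico/kube-controllers:v3.24.6
```

Printing first and executing second makes it easy to review the target names before anything is pushed.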

#Change the calico image addresses in the template to the harbor address

root@harbor:/etc/kubeasz# sed -i "s/image: easzlab.io.local:5000/image: server.ttfddy.com:11443/g" roles/calico/templates/calico-v3.24.yaml.j2

root@harbor:/etc/kubeasz# cat roles/calico/templates/calico-v3.24.yaml.j2 | grep image

image: server.ttfddy.com:11443/calico/cni:{{ calico_ver }}

imagePullPolicy: IfNotPresent

image: server.ttfddy.com:11443/calico/node:{{ calico_ver }}

imagePullPolicy: IfNotPresent

image: server.ttfddy.com:11443/calico/node:{{ calico_ver }}

imagePullPolicy: IfNotPresent

image: server.ttfddy.com:11443/calico/kube-controllers:{{ calico_ver }}

imagePullPolicy: IfNotPresent

root@harbor:/etc/kubeasz#
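In the `sed` expression above the dots in `easzlab.io.local` are unescaped, so each one matches any character. That is harmless for this template, but a stricter variant is easy to sketch. The helper name `rewrite_registry` is hypothetical, and it assumes the new registry string contains no `|`, `&` or backslash.

```shell
#!/bin/sh
# Rewrite the registry prefix of "image:" lines read from stdin.
# $1 = old registry, $2 = new registry (plain strings, not regexes).
rewrite_registry() {
  # Escape the dots so that they match literally in the sed pattern.
  old_re=$(printf '%s' "$1" | sed 's/\./\\./g')
  sed "s|image: ${old_re}/|image: $2/|"
}

# Sample line in the same shape as the calico template.
printf '%s\n' 'image: easzlab.io.local:5000/calico/cni:{{ calico_ver }}' |
  rewrite_registry easzlab.io.local:5000 server.ttfddy.com:11443

# Applied to the real template it might look like this (writes a new file):
#   rewrite_registry easzlab.io.local:5000 server.ttfddy.com:11443 \
#     < roles/calico/templates/calico-v3.24.yaml.j2 > /tmp/calico.yaml.j2
```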

#Deploy calico

root@harbor:/etc/kubeasz# ./ezctl setup k8s-cluster 06

#Verify calico on the k8s-master-01 server

root@k8s-master-01:~# calicoctl node status

Calico process is running.

IPv4 BGP status

+----------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+----------------+-------------------+-------+----------+-------------+

| 192.168.101.77 | node-to-node mesh | up | 07:36:07 | Established |

| 192.168.101.72 | node-to-node mesh | up | 07:36:11 | Established |

| 192.168.101.78 | node-to-node mesh | up | 07:36:20 | Established |

+----------------+-------------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.

root@k8s-master-01:~#
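A healthy mesh shows `Established` in the INFO column for every peer. The sketch below extracts the peers that are not established from the table printed by `calicoctl node status`. It runs on a canned sample; the helper name `bgp_peers_down` is hypothetical, and the row in the `start` state is made up for illustration.

```shell
#!/bin/sh
# Read `calicoctl node status` output on stdin and print the address of every
# BGP peer whose INFO column is not "Established".
bgp_peers_down() {
  awk -F'|' '$2 ~ /[0-9]+\.[0-9]+/ {
    peer = $2; info = $6
    gsub(/ /, "", peer); gsub(/ /, "", info)
    if (info != "Established") print peer
  }'
}

# Canned sample; the second peer row is invented to show a failing session.
sample='+----------------+-------------------+-------+----------+-------------+
| PEER ADDRESS   |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+----------------+-------------------+-------+----------+-------------+
| 192.168.101.77 | node-to-node mesh | up    | 07:36:07 | Established |
| 192.168.101.78 | node-to-node mesh | start | 07:36:20 | Connect     |
+----------------+-------------------+-------+----------+-------------+'
printf '%s\n' "${sample}" | bgp_peers_down

# On a cluster node:
#   calicoctl node status | bgp_peers_down
```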

3.11、Verify pod communication

#On the harbor server

root@harbor:/etc/kubeasz# scp /root/.kube/config 192.168.101.71:/root/.kube/

config 100% 6198 1.6MB/s 00:00

root@harbor:/etc/kubeasz#

#On the k8s-master-01 server

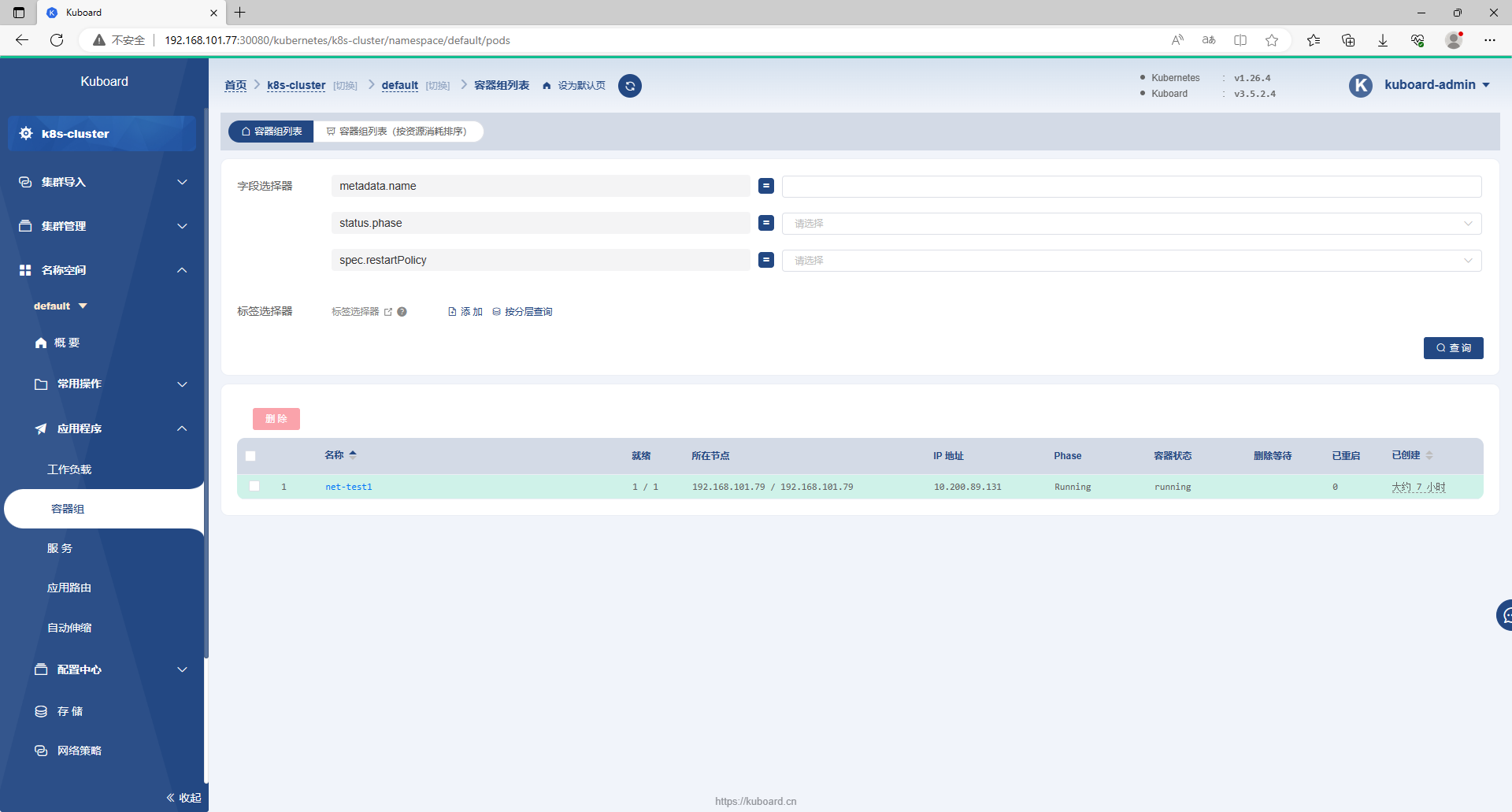

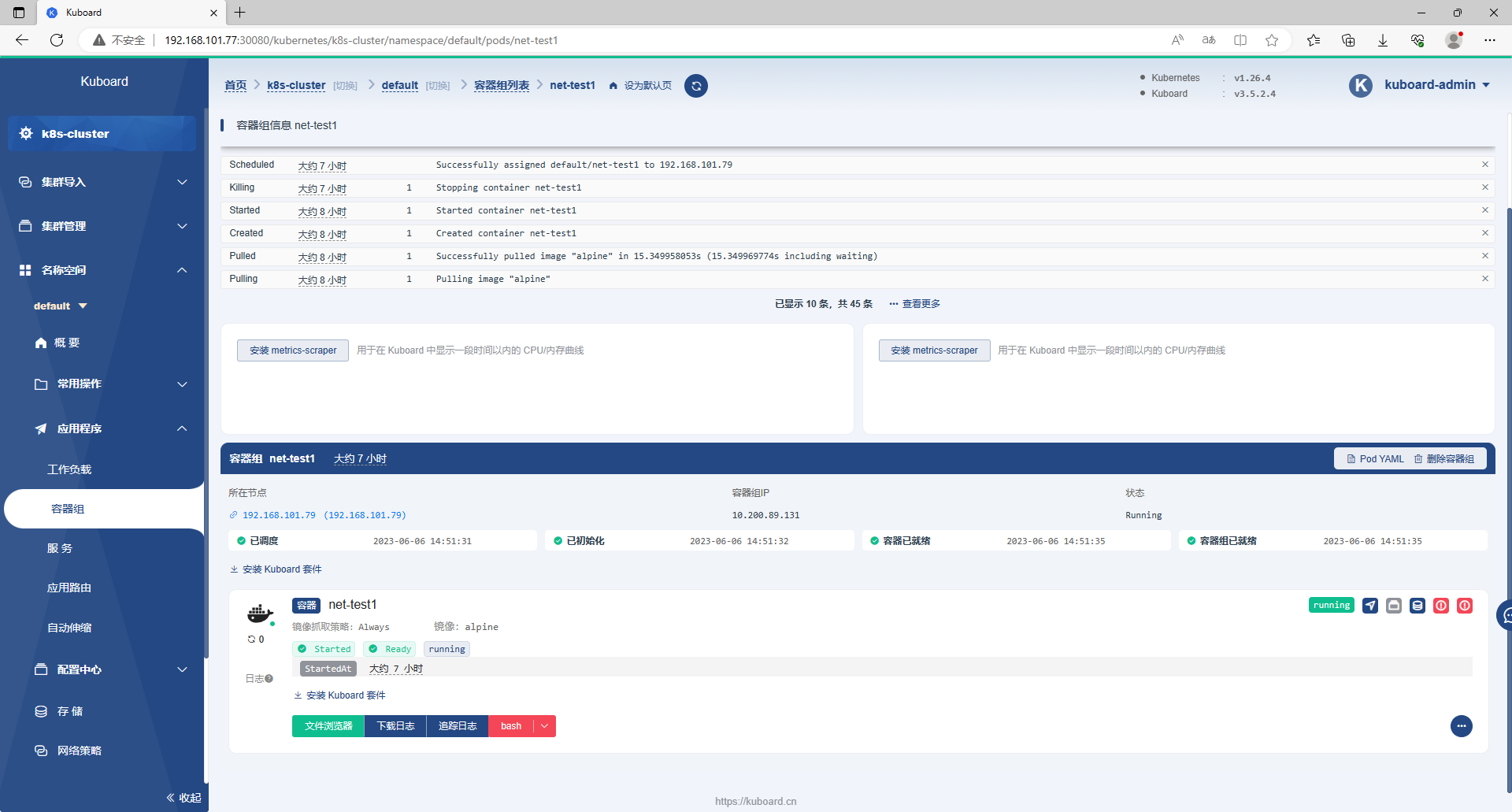

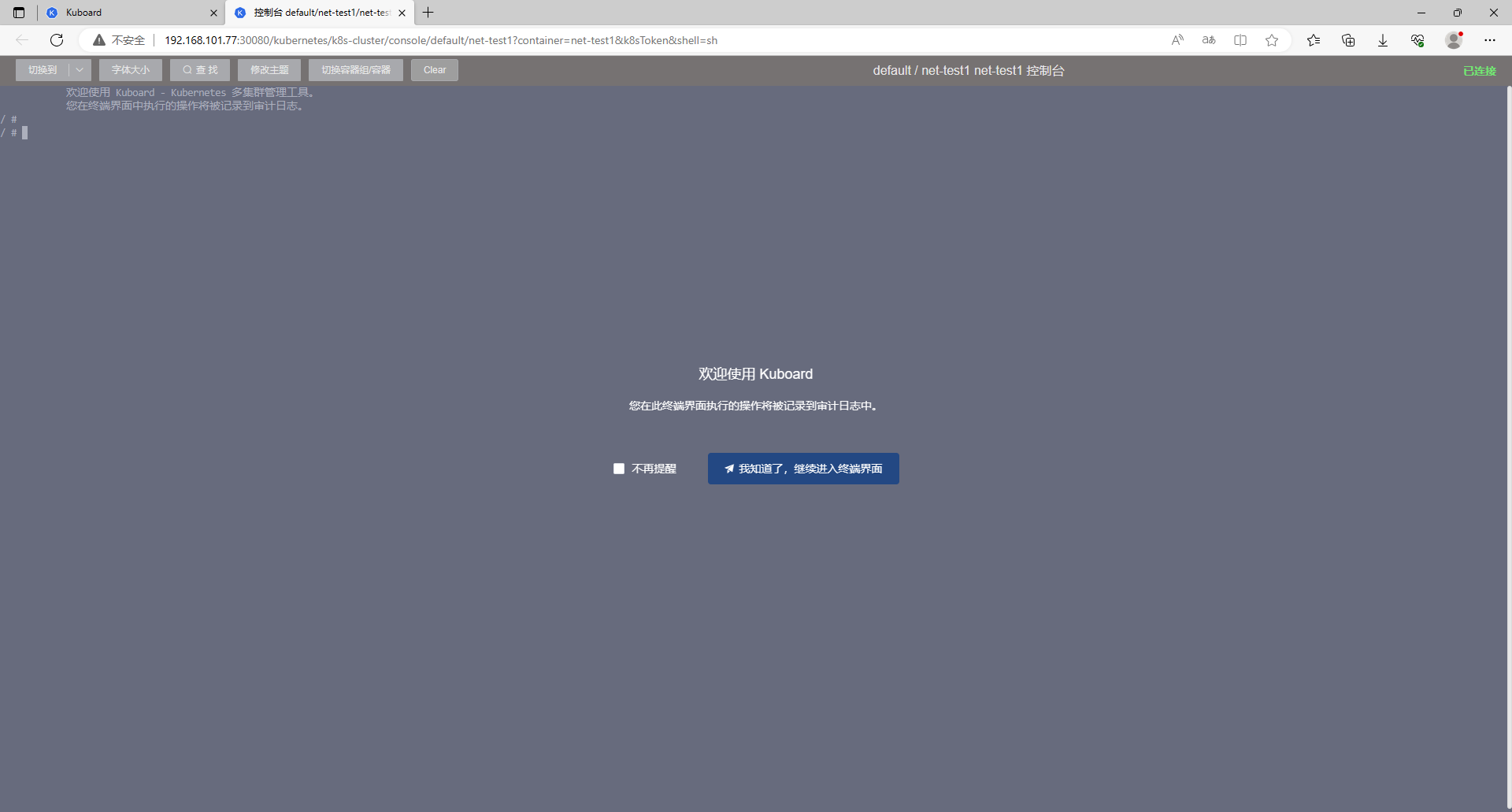

root@k8s-master-01:~# kubectl run net-test1 --image=alpine sleep 36000

pod/net-test1 created

root@k8s-master-01:~# kubectl run net-test2 --image=alpine sleep 36000

pod/net-test2 created

root@k8s-master-01:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1 1/1 Running 0 31s 10.200.44.193 192.168.101.78 <none> <none>

net-test2 1/1 Running 0 16s 10.200.154.193 192.168.101.77 <none> <none>

root@k8s-master-01:~# kubectl exec -it net-test1 -- sh

/ # ping 10.200.154.193

PING 10.200.154.193 (10.200.154.193): 56 data bytes

64 bytes from 10.200.154.193: seq=0 ttl=62 time=2.005 ms

64 bytes from 10.200.154.193: seq=1 ttl=62 time=1.437 ms

^C

--- 10.200.154.193 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 1.437/1.721/2.005 ms

/ # ping 223.5.5.5

PING 223.5.5.5 (223.5.5.5): 56 data bytes

64 bytes from 223.5.5.5: seq=0 ttl=116 time=5.726 ms

64 bytes from 223.5.5.5: seq=1 ttl=116 time=5.381 ms

^C

--- 223.5.5.5 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 5.381/5.553/5.726 ms

/ #
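The interactive session above can also be turned into a non-interactive check that reads the packet-loss figure from the ping summary. The sketch below parses canned ping output so it runs without a cluster; the helper name `ping_loss` is hypothetical, and the commented commands assume the `net-test1` and `net-test2` pods created above.

```shell
#!/bin/sh
# Read ping output on stdin and print the packet-loss percentage
# taken from the summary line.
ping_loss() {
  sed -n 's/.* \([0-9][0-9]*\)% packet loss.*/\1/p'
}

# Canned sample copied from the session above.
sample='--- 10.200.154.193 ping statistics ---
2 packets transmitted, 2 packets received, 0% packet loss
round-trip min/avg/max = 1.437/1.721/2.005 ms'
printf '%s\n' "${sample}" | ping_loss

# Against a live cluster:
#   target_ip=$(kubectl get pod net-test2 -o jsonpath='{.status.podIP}')
#   kubectl exec net-test1 -- ping -c 2 "${target_ip}" | ping_loss
```

A result of `0` means no packets were lost between the two pods, which here run on different nodes.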

3.12、Add a master node

#Add the master node

root@harbor:/etc/kubeasz# ./ezctl add-master k8s-cluster 192.168.101.73

#Check the master nodes

root@harbor:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.101.71 Ready,SchedulingDisabled master 17h v1.26.4

192.168.101.72 Ready,SchedulingDisabled master 17h v1.26.4

192.168.101.73 Ready,SchedulingDisabled master 3m18s v1.26.4

192.168.101.77 Ready node 17h v1.26.4

192.168.101.78 Ready node 17h v1.26.4

root@harbor:/etc/kubeasz#

3.13、Add a node

#Add the node

root@harbor:/etc/kubeasz# ./ezctl add-node k8s-cluster 192.168.101.79

#Check the nodes

root@harbor:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.101.71 Ready,SchedulingDisabled master 16h v1.26.4

192.168.101.72 Ready,SchedulingDisabled master 16h v1.26.4

192.168.101.73 Ready,SchedulingDisabled master 3m18s v1.26.4

192.168.101.77 Ready node 16h v1.26.4

192.168.101.78 Ready node 16h v1.26.4

192.168.101.79 Ready node 6m33s v1.26.4

root@harbor:/etc/kubeasz#
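After adding nodes, a quick count per role confirms that the cluster has the planned three masters and three nodes. The sketch below counts the ROLES column of `kubectl get node --no-headers` output. It runs on a sample copied from the listing above, and the helper name `count_by_role` is hypothetical.

```shell
#!/bin/sh
# Read `kubectl get node --no-headers` output on stdin and print how many
# nodes carry each value of the ROLES column, sorted by role name.
count_by_role() {
  awk '{ n[$3]++ } END { for (r in n) print r, n[r] }' | sort
}

# Sample rows copied from the node listing above.
sample='192.168.101.71 Ready,SchedulingDisabled master 16h v1.26.4
192.168.101.72 Ready,SchedulingDisabled master 16h v1.26.4
192.168.101.73 Ready,SchedulingDisabled master 3m18s v1.26.4
192.168.101.77 Ready node 16h v1.26.4
192.168.101.78 Ready node 16h v1.26.4
192.168.101.79 Ready node 6m33s v1.26.4'
printf '%s\n' "${sample}" | count_by_role

# Against a live cluster:
#   kubectl get node --no-headers | count_by_role
```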

3.14、Deploy CoreDNS

Official links

- https://github.com/coredns/coredns

- https://coredns.io/

- https://github.com/coredns/deployment/tree/master/kubernetes

#Edit the configuration file

root@harbor:/etc/kubeasz# vim coredns-v1.9.4.yaml

# __MACHINE_GENERATED_WARNING__

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
---

apiVersion: rbac.authorization.k8s.io/v1