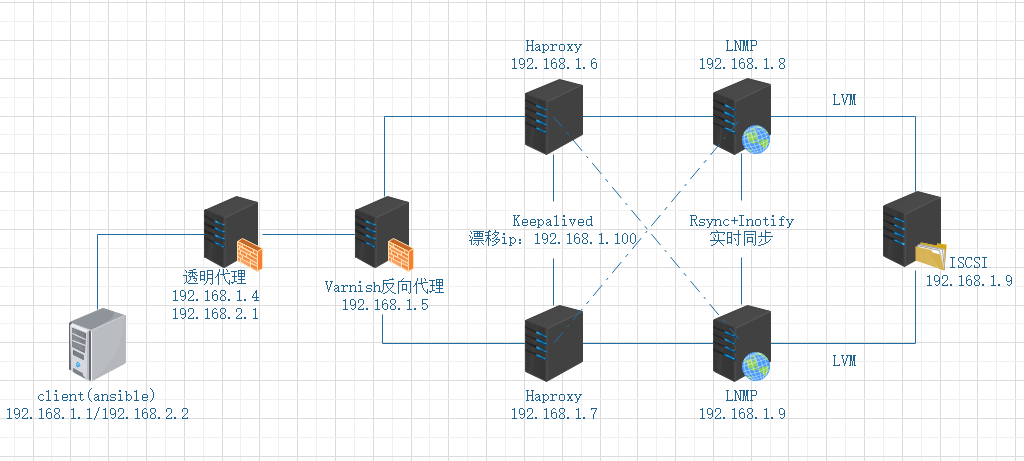

Building an Architecture That Simulates a Real Company Environment


Requirement: the company's overall architecture must be highly available.

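Lab environment:

client (Ansible): 192.168.1.1 (later changed to 192.168.2.2), gateway 192.168.2.1

Transparent proxy (Squid): 192.168.1.4 (second NIC: 192.168.2.1)

Varnish reverse proxy: 192.168.1.5, gateway 192.168.1.4

Load balancer + Keepalived: 192.168.1.6/192.168.1.7 (floating IP: 192.168.1.100)

LNMP: 192.168.1.8/192.168.1.9

iSCSI shared storage: 192.168.1.10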

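Objective

The client reaches Varnish through the transparent proxy. The page it finally receives is served by Varnish, which proxies the Keepalived floating IP; behind that IP, HAProxy load-balances the LNMP servers, and the page data lives on network storage shared over iSCSI.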

Steps

Step 1: Build LNMP with Ansible

Use Ansible (192.168.1.1) to build LNMP on 192.168.1.8/1.9.

First, set up Ansible on 192.168.1.1.

Ansible is installed from EPEL (epel-release: Extra Packages for Enterprise Linux).

# Build the yum metadata cache to speed up later installs

[root@ansible ~]# yum makecache fast

[root@ansible ~]# yum -y install epel-release

[root@ansible ~]# yum -y install ansible

Set up passwordless SSH login to the LNMP hosts:

# Generate a key pair

[root@ansible ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa): Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:ywq6L0QW8JyULoJGrV3K6f7ddhXt9BhsOyVuM04QXtA root@ansible

The key's randomart image is:

+---[RSA 2048]----+

|..o. .o |

| =oo . . E |

|o.B.+ . +. |

|+o+= o.=o.|

|o+. S ++*.|

| .. . . .X..|

| .. . o .+ + |

| .o ..... . . |

| o+o...... |

+----[SHA256]-----+

# Copy the public key to the hosts that will run LNMP (1.8/1.9)

[root@ansible ~]# ssh-copy-id root@192.168.1.8

[root@ansible ~]# ssh-copy-id root@192.168.1.9

Edit the Ansible inventory and add the two managed hosts that will run LNMP:

[root@ansible ~]# vim /etc/ansible/hosts

Add:

[LNMP]

192.168.1.8

192.168.1.9

Check connectivity to the managed hosts:

[root@ansible ~]# ansible LNMP -m ping

192.168.1.8 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.1.9 | SUCCESS => {

"changed": false,

"ping": "pong"

}

To make Nginx easier to manage later, write an init script for it on the Ansible host in advance:

[root@ansible ~]# vim nginx

#!/bin/bash

#chkconfig:- 99 20

#description:Nginx Service Control Script

PROG="/usr/local/nginx/sbin/nginx"

PIDF="/usr/local/nginx/logs/nginx.pid"

case "$1" in

start)

$PROG

;;

stop)

kill -s QUIT $(cat $PIDF)

;;

restart)

$0 stop

$0 start

;;

reload)

kill -s HUP $(cat $PIDF)

;;

*)

echo "Usage: $0 {start|stop|restart|reload}"

exit 1

esac

exit 0

To check PHP once the installation finishes, write a PHP test page on the Ansible host in advance:

[root@ansible ~]# vim index.php.j2

<?php

$link=mysql_connect('192.168.1.8','root','123.com');

if($link) echo "conn success 1.8";

mysql_close($link);

?>

Copy all the source packages needed for LNMP to /root on the Ansible host, then write the playbook:

[root@ansible ~]# vim lnmp.yml

- hosts: LNMP
  remote_user: root
  tasks:
    - name: unpack nginx source
      unarchive: src=/root/nginx-1.11.1.tar.gz dest=/usr/src
    - name: install nginx build dependencies
      yum: name=pcre-devel,openssl-devel,gcc,gcc-c++,zlib-devel
    - name: build and install nginx
      shell: ./configure --prefix=/usr/local/nginx --user=nginx --group=nginx --with-http_stub_status_module --with-pcre && make && make install
      args:
        chdir: /usr/src/nginx-1.11.1
    - name: link the nginx binary into PATH
      file: src=/usr/local/nginx/sbin/nginx dest=/usr/local/sbin/nginx state=link
    - name: create nginx user
      user: name=nginx createhome=no shell=/sbin/nologin state=present
    - name: install nginx init script
      copy: src=/root/nginx dest=/etc/init.d/nginx
    - name: make nginx init script executable
      file: path=/etc/init.d/nginx mode=0755
    - name: register nginx as a system service
      shell: chkconfig --add nginx
    - name: enable nginx at boot
      shell: systemctl enable nginx
    - name: start nginx
      service: name=nginx state=started
    - name: install ncurses-devel
      yum: name=ncurses-devel
    - name: unpack cmake source
      unarchive: src=/root/cmake-2.8.7.tar.gz dest=/usr/src/
    - name: build and install cmake
      shell: ./configure && gmake && gmake install
      args:
        chdir: /usr/src/cmake-2.8.7
    - name: unpack mysql source
      unarchive: src=/root/mysql-5.5.22.tar.gz dest=/usr/src/
    - name: build and install mysql
      shell: cmake -DCMAKE_INSTALL_PREFIX=/usr/local/mysql -DSYSCONFDIR=/etc -DDEFAULT_CHARSET=utf8 -DDEFAULT_COLLATION=utf8_general_ci -DWITH_EXTRA_CHARSETS=all && make && make install
      args:
        chdir: /usr/src/mysql-5.5.22
    - name: link libmysqlclient
      file: src=/usr/local/mysql/lib/libmysqlclient.so.18 dest=/usr/lib/libmysqlclient.so.18 state=link
    - name: link mysql client
      file: src=/usr/local/mysql/bin/mysql dest=/usr/bin/mysql state=link
    - name: link mysqldump
      file: src=/usr/local/mysql/bin/mysqldump dest=/usr/bin/mysqldump state=link
    - name: create mysql user
      user: name=mysql createhome=no shell=/sbin/nologin state=present
    - name: install mysql config file
      copy: src=/usr/src/mysql-5.5.22/support-files/my-medium.cnf dest=/etc/my.cnf remote_src=yes
    - name: install mysqld init script
      copy: src=/usr/src/mysql-5.5.22/support-files/mysql.server dest=/etc/init.d/mysqld remote_src=yes
    - name: make mysqld init script executable
      file: path=/etc/init.d/mysqld mode=0755
    - name: register mysqld as a system service
      shell: chkconfig --add mysqld
    - name: initialize the database
      shell: /usr/local/mysql/scripts/mysql_install_db --user=mysql --group=mysql --basedir=/usr/local/mysql/ --datadir=/usr/local/mysql/data
    - name: give the mysql user ownership of the install directory
      file: path=/usr/local/mysql owner=mysql group=mysql recurse=yes
    - name: start mysqld
      service: name=mysqld state=started
    - name: add the mysql bin directory to PATH for future logins
      shell: echo 'export PATH=$PATH:/usr/local/mysql/bin' >> /etc/profile
    - name: install PHP build dependencies
      yum: name=gd,libxml2-devel,libjpeg-devel,libpng-devel
    - name: unpack php source
      unarchive: src=/root/php-5.3.28.tar.gz dest=/usr/src
    - name: build and install php
      shell: ./configure --prefix=/usr/local/php --with-gd --with-zlib --with-mysql=mysqlnd --with-pdo-mysql=mysqlnd --with-mysqli=mysqlnd --with-config-file-path=/usr/local/php --enable-fpm --enable-mbstring --with-jpeg-dir=/usr/lib && make && make install
      args:
        chdir: /usr/src/php-5.3.28
    - name: install php.ini
      copy: src=/usr/src/php-5.3.28/php.ini-development dest=/usr/local/php/php.ini remote_src=yes
    # replace only swaps the matched text, so match the whole line with .*
    - name: set default_charset to utf-8
      replace: path=/usr/local/php/php.ini regexp='^;?default_charset.*' replace='default_charset = "utf-8"'
    - name: enable short_open_tag
      replace: path=/usr/local/php/php.ini regexp='^short_open_tag.*' replace='short_open_tag = On'
    - name: unpack ZendGuardLoader (optimizer)
      unarchive: src=/root/ZendGuardLoader-php-5.3-linux-glibc23-x86_64.tar.gz dest=/usr/src
    - name: install ZendGuardLoader.so
      copy: src=/usr/src/ZendGuardLoader-php-5.3-linux-glibc23-x86_64/php-5.3.x/ZendGuardLoader.so dest=/usr/local/php/lib/php/ remote_src=yes
    - name: enable ZendGuardLoader in php.ini
      shell: sed -i '$azend_extension=/usr/local/php/lib/php/ZendGuardLoader.so\nzend_loader.enable=1' /usr/local/php/php.ini
    - name: install php-fpm init script
      copy: src=/usr/src/php-5.3.28/sapi/fpm/init.d.php-fpm dest=/etc/init.d/php-fpm remote_src=yes
    - name: make php-fpm init script executable
      file: path=/etc/init.d/php-fpm mode=0755
    - name: register php-fpm as a system service
      shell: chkconfig --add php-fpm
    - name: install php-fpm.conf
      copy: src=/usr/local/php/etc/php-fpm.conf.default dest=/usr/local/php/etc/php-fpm.conf remote_src=yes
    - name: set pm.max_children
      replace: path=/usr/local/php/etc/php-fpm.conf regexp='^pm.max_children = .*' replace='pm.max_children = 50'
    - name: set pm.start_servers
      replace: path=/usr/local/php/etc/php-fpm.conf regexp='^pm.start_servers = .*' replace='pm.start_servers = 20'
    - name: set pm.min_spare_servers
      replace: path=/usr/local/php/etc/php-fpm.conf regexp='^pm.min_spare_servers = .*' replace='pm.min_spare_servers = 5'
    - name: set pm.max_spare_servers
      replace: path=/usr/local/php/etc/php-fpm.conf regexp='^pm.max_spare_servers = .*' replace='pm.max_spare_servers = 35'
    - name: enable the php-fpm pid file
      replace: path=/usr/local/php/etc/php-fpm.conf regexp='^;pid = run/php-fpm.pid' replace='pid = run/php-fpm.pid'
    - name: run php-fpm workers as the nginx user
      replace: path=/usr/local/php/etc/php-fpm.conf regexp='^user = nobody' replace='user = nginx'
    - name: run php-fpm workers as the nginx group
      replace: path=/usr/local/php/etc/php-fpm.conf regexp='^group = nobody' replace='group = nginx'
    - name: start php-fpm
      service: name=php-fpm state=started
    # fastcgi.conf already sets SCRIPT_FILENAME to $document_root$fastcgi_script_name
    - name: add a PHP location block after server_name
      shell: sed -i '/server_name  *localhost;/a \ location ~ \.php$ {\n root html;\n fastcgi_pass 127.0.0.1:9000;\n fastcgi_index index.php;\n include fastcgi.conf;\n}' /usr/local/nginx/conf/nginx.conf
    - name: add index.php to the index list
      replace: path=/usr/local/nginx/conf/nginx.conf regexp='index  *index.html index.htm;' replace='index index.html index.htm index.php;'
    - name: restart nginx
      service: name=nginx state=restarted
    - name: create the PHP test page
      file: path=/usr/local/nginx/html/index.php state=touch
    - name: deploy the PHP test page
      template: src=/root/index.php.j2 dest=/usr/local/nginx/html/index.php

Run the playbook:

[root@ansible ~]# ansible-playbook lnmp.yml

The index.php written above only tests the database on 1.8, so log in to 1.9 and edit the page there:

vim /usr/local/nginx/html/index.php

Change the IP address in it to 192.168.1.9.

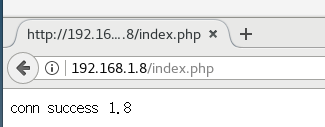
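Instead of editing the file by hand, the change can be scripted with sed. A minimal sketch, assuming the page looks exactly like index.php.j2 above (the MySQL host IP and the `conn success 1.8` message are the only places that mention 1.8); `retarget_php_page` is a hypothetical helper name:

```shell
#!/bin/bash
# Hypothetical helper: make the copied test page check another host.
# $1 = page, $2 = old last octet (8), $3 = new last octet (9).
# Assumes the layout of index.php.j2: only the IP inside mysql_connect()
# and the "conn success 1.x" message contain the host number.
retarget_php_page() {
    local file=$1 old=$2 new=$3
    sed -i \
        -e "s/192\.168\.1\.${old}'/192.168.1.${new}'/" \
        -e "s/conn success 1\.${old}\"/conn success 1.${new}\"/" \
        "$file"
}
# Usage on lnmp9:
#   retarget_php_page /usr/local/nginx/html/index.php 8 9
```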

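Next, verify that PHP can connect to the database.

MySQL must first allow root to log in from the 192.168.1.x network. Log in to MySQL on both hosts and run:

mysql> grant all on *.* to 'root'@'192.168.1.%' identified by '123.com';

From any server, open 192.168.1.8/index.php and 192.168.1.9/index.php; each should print its "conn success" message.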
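The same check can be run from the shell for both hosts at once. A sketch, assuming curl is available on the machine running it; `verify_php_db` and the overridable `FETCH` variable are hypothetical names (the override only exists so the loop can be tested without a network):

```shell
#!/bin/bash
# Request index.php on each LNMP host and report whether the page
# printed its "conn success" message. FETCH names the function used to
# download a page; by default it is curl with a 5-second timeout.
FETCH=${FETCH:-fetch_with_curl}

fetch_with_curl() {
    curl -s --max-time 5 "http://$1/index.php"
}

verify_php_db() {
    local host failed=0
    for host in "$@"; do
        if "$FETCH" "$host" | grep -q "conn success"; then
            echo "$host OK"
        else
            echo "$host FAILED"
            failed=1
        fi
    done
    return "$failed"
}
# Usage: verify_php_db 192.168.1.8 192.168.1.9
```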

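Step 2: Rsync + Inotify on the LNMP hosts

Keep the web roots of the two LNMP hosts (192.168.1.8/1.9) synchronized in real time.

For convenience, set up passwordless SSH in both directions: 1.8 watches its own web root and pushes changes to 1.9, and 1.9 does the same toward 1.8, so whichever host's web root changes, the other one receives the update. Note that the sync script below talks to the rsync daemon (host::module syntax, TCP port 873), which does not use SSH; the keys are only used for the scp copies.

Passwordless login from 1.8 to 1.9: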

[root@lnmp8 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa): Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:cw6hVt9sz6kINyNzPAHvMmYeuth6jvj91UW8/PUBBJs root@localhost.localdomain

The key's randomart image is:

+---[RSA 2048]----+

| ... |

| +. |

| + E .o |

| o = o o.. |

| o S = + +..|

| . B + + o+|

| X @ . + o|

| . =.= @ = . |

| ..=+*oo . . |

+----[SHA256]-----+

[root@lnmp8 ~]# ssh-copy-id root@192.168.1.9

Passwordless login from 1.9 to 1.8:

[root@lnmp9 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:Py9O91cHHaZpgaTJbOBaNC28KIsOEvDT3CopAqforsw root@lnmp9

The key's randomart image is:

+---[RSA 2048]----+

| .+. ... |

|. oo=.o. . o |

|.. o ..oo* =..|

|o +.o.+.. +. .|

|o+.ooo S . . |

|B.o.. . o|

|*. . + . o|

|oo ..+ . .|

|+E .... .. |

+----[SHA256]-----+

[root@lnmp9 ~]# ssh-copy-id root@192.168.1.8

On both hosts, upload the inotify-tools source tarball and install it:

tar zxf inotify-tools-3.14.tar.gz -C /usr/src

cd /usr/src/inotify-tools-3.14/

./configure --prefix=/usr/local/inotify && make && make install

ln -s /usr/local/inotify/bin/* /usr/local/bin/

Configure the rsync daemon on both hosts:

[root@lnmp8 ~]# vim /etc/rsyncd.conf

uid = root

gid = root

use chroot = no

max connections = 0

pid file = /var/run/rsyncd.pid

transfer logging = yes

log file = /var/lib/rsyncd.log

timeout = 900

ignore nonreadable = yes

dont compress = *.gz *.tgz *.zip *.z *.Z *.rpm *.deb *.bz2

port = 873

[html]

path = /usr/local/nginx/html

comment = nginx html

writeable = yes

read only = no
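rsyncd.conf parameters must be written as `name = value`; a line without the `=` (for example `port 873`) is not a valid parameter line. A small check can catch this before restarting the daemon. A sketch using POSIX awk; `lint_rsyncd_conf` is a hypothetical helper:

```shell
#!/bin/bash
# Report rsyncd.conf lines that are not blank, not comments, not a
# [module] header and not "name = value". Exit status 1 if any found.
lint_rsyncd_conf() {
    awk '
        /^[[:space:]]*$/                  { next }   # blank line
        /^[[:space:]]*[#;]/               { next }   # comment
        /^[[:space:]]*\[.*][[:space:]]*$/ { next }   # [module] header
        index($0, "=") == 0 { printf "line %d: %s\n", NR, $0; bad = 1 }
        END { exit bad }
    ' "${1:-/etc/rsyncd.conf}"
}
# Usage: lint_rsyncd_conf /etc/rsyncd.conf && echo "looks OK"
```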

Copy the same file to 1.9 with scp:

[root@lnmp8 ~]# scp /etc/rsyncd.conf root@192.168.1.9:/etc

Restart the rsync daemon (on both hosts):

systemctl restart rsyncd

netstat -anput | grep rsync

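Write a script and run it in the background, so that each LNMP host watches its own web root and pushes every change to the other: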

[root@lnmp8 ~]# vim nginx_rsync.sh

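#!/bin/bash
# Watch the local web root and push every change to the peer's rsync module.
path=/usr/local/nginx/html/
client=192.168.1.9
/usr/local/bin/inotifywait -mrq --format '%w%f' -e create,delete,close_write "$path" | while read -r file
do
    if [ -f "$file" ]
    then
        # push a single file, keeping its path relative to the web root
        cd "$path" && rsync -azR "./${file#"$path"}" root@$client::html
    else
        # deleted files and directories: mirror the whole web root
        cd "$path" && rsync -az --delete ./ root@$client::html
    fi
done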
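The detail worth checking in this loop is how a changed file's path maps onto the `html` module. Pushing an absolute path such as `/usr/local/nginx/html/sub/a.html` straight to `host::html` drops the file at the module root, because rsync keeps only the last path component. Running rsync from the web root with `-R` (relative) and a path relative to the root keeps the subdirectory. A sketch that only prints the command for one event instead of running it; `rsync_cmd_for` is a hypothetical helper:

```shell
#!/bin/bash
# Print (not run) the rsync command for one inotify event.
# $1 = web root, $2 = peer IP, $3 = changed path from inotifywait.
# Existing files are pushed with -R relative to the root, so
# sub/a.html lands in html/sub/a.html on the peer, not html/a.html.
# Anything else (deleted files, directories) triggers a full mirror.
rsync_cmd_for() {
    local root=${1%/} client=$2 file=$3
    if [ -f "$file" ]; then
        printf 'cd %s && rsync -azR %s root@%s::html\n' \
            "$root" "./${file#"$root"/}" "$client"
    else
        printf 'cd %s && rsync -az --delete ./ root@%s::html\n' \
            "$root" "$client"
    fi
}
```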

Copy the script to 1.9 with scp as well:

[root@lnmp8 ~]# scp nginx_rsync.sh root@192.168.1.9:/root

On 1.9, change the peer IP in the script to 192.168.1.8:

[root@lnmp9 ~]# vim nginx_rsync.sh

client=192.168.1.8

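On both hosts, make the script executable and run it in the background (nohup keeps it running after you log out):

chmod +x nginx_rsync.sh

nohup ./nginx_rsync.sh &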

To verify, go into the watched directory on LNMP 1.8 and create a directory named 1:

[root@lnmp8 ~]# cd /usr/local/nginx/html/

[root@lnmp8 html]# mkdir 1

List the watched directory on LNMP 1.9, then delete that directory on 1.9:

[root@lnmp9 ~]# ls /usr/local/nginx/html/

1 50x.html index.html index.php

[root@lnmp9 ~]# rm -rf /usr/local/nginx/html/1

Look at the directory on 1.8 again: directory 1 is gone, so real-time synchronization works in both directions.

[root@lnmp8 ~]# ls /usr/local/nginx/html/

50x.html index.html index.php

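Step 3: iSCSI shared storage

Use the iSCSI server (192.168.1.10) to provide remote storage for the two LNMP hosts (192.168.1.8/1.9).

Add a new disk to the iSCSI server and reboot it; this disk will be shared.

First check whether targetcli is installed on the iSCSI server; if not, run yum -y install targetcli.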

[root@iscsi ~]# rpm -qa | grep target

selinux-policy-targeted-3.13.1-192.el7.noarch

targetcli-2.1.fb46-1.el7.noarch

On the new disk /dev/sdb, create two LVM partitions, one for each LNMP host's web root:

[root@iscsi ~]# fdisk /dev/sdb

# Two partitions of type 8e (Linux LVM), 10 GB each

Device Boot Start End Blocks Id System

/dev/sdb1 2048 20973567 10485760 8e Linux LVM

/dev/sdb2 20973568 41943039 10484736 8e Linux LVM

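Then turn each partition into a physical volume, create one volume group per partition (16 MB extents), and create a 5 GB logical volume in each: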

[root@iscsi ~]# pvcreate /dev/sdb{1,2}

Physical volume "/dev/sdb1" successfully created.

Physical volume "/dev/sdb2" successfully created.

[root@iscsi ~]# vgcreate lnmp8 /dev/sdb1 -s 16M

Volume group "lnmp8" successfully created

[root@iscsi ~]# vgcreate lnmp9 /dev/sdb2 -s 16M

Volume group "lnmp9" successfully created

[root@iscsi ~]# lvcreate -L 5G -n lnmp lnmp8

Logical volume "lnmp" created.

[root@iscsi ~]# lvcreate -L 5G -n lnmp lnmp9

Logical volume "lnmp" created.
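The sizes above can be cross-checked with shell arithmetic: fdisk's Start/End sectors (512 bytes each, End inclusive) give each partition's size, and a 5 GiB logical volume with 16 MiB extents (`-s 16M`) needs 5 × 1024 / 16 = 320 extents. A small sketch; both helper names are hypothetical:

```shell
#!/bin/bash
# Partition size in MiB from fdisk's Start and End sectors
# (512-byte sectors, End is inclusive).
sectors_to_mib() {
    echo $(( ($2 - $1 + 1) * 512 / 1024 / 1024 ))
}

# Physical extents needed for an LV of $1 MiB with $2 MiB extents,
# rounded up to a whole extent.
extents_for() {
    echo $(( ($1 + $2 - 1) / $2 ))
}
# Usage: sectors_to_mib 2048 20973567; extents_for 5120 16
```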

Start the target service:

[root@iscsi ~]# systemctl start target

[root@iscsi ~]# systemctl enable target

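Use targetcli to export the logical volumes as block backstores, starting with the logical volume in volume group lnmp8:

[root@iscsi ~]# targetcli

# Use the LV /dev/mapper/lnmp8-lnmp as the shared block device for 1.8 and name it lnmp8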

/> backstores/block create lnmp8 /dev/mapper/lnmp8-lnmp

Created block storage object lnmp8 using /dev/mapper/lnmp8-lnmp.

# Create an iSCSI target on this server named iqn.2020-02.com.server.www:lnmp8

/> iscsi/ create iqn.2020-02.com.server.www:lnmp8

Created target iqn.2020-02.com.server.www:lnmp8.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

# ACL: only an initiator named iqn.2020-02.com.lnmp.www:lnmp8 (LNMP 1.8) may use this target's storage

/> iscsi/iqn.2020-02.com.server.www:lnmp8/tpg1/acls create iqn.2020-02.com.lnmp.www:lnmp8

Created Node ACL for iqn.2020-02.com.lnmp.www:lnmp8

# Map the backstore to a LUN of the target, so an initiator that logs in to the target gets the device

/> iscsi/iqn.2020-02.com.server.www:lnmp8/tpg1/luns create /backstores/block/lnmp8

Created LUN 0.

Created LUN 0->0 mapping in node ACL iqn.2020-02.com.lnmp.www:lnmp8

# Add a portal (listening IP and port) on this server

/> iscsi/iqn.2020-02.com.server.www:lnmp8/tpg1/portals/ create 192.168.1.8 3260

Using default IP port 3260

Could not create NetworkPortal in configFS

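# This fails: the default portal 0.0.0.0:3260 already listens on port 3260 on every address, and 192.168.1.8 is the LNMP client's address, not this server's. Delete the default portal, then create the portal on the server's own IP, 192.168.1.10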

/> cd /iscsi/iqn.2020-02.com.server.www:lnmp8/tpg1/portals/

/iscsi/iqn.20.../tpg1/portals> ls

o- portals ..................................................................... [Portals: 1]

o- 0.0.0.0:3260 ...................................................................... [OK]

/iscsi/iqn.20.../tpg1/portals> delete 0.0.0.0 3260

Deleted network portal 0.0.0.0:3260

/iscsi/iqn.20.../tpg1/portals> cd /

/> iscsi/iqn.2020-02.com.server.www:lnmp8/tpg1/portals/ create 192.168.1.10 3260

Using default IP port 3260

Created network portal 192.168.1.10:3260.

# Created successfully

Without leaving targetcli, create the shared device for LNMP 1.9:

# Use the LV /dev/mapper/lnmp9-lnmp as the shared block device for 1.9 and name it lnmp9

/> backstores/block/ create lnmp9 /dev/mapper/lnmp9-lnmp

Created block storage object lnmp9 using /dev/mapper/lnmp9-lnmp.

# Create an iSCSI target on this server named iqn.2020-02.com.server.www:lnmp9

/> iscsi/ create iqn.2020-02.com.server.www:lnmp9

Created target iqn.2020-02.com.server.www:lnmp9.

Created TPG 1.

Default portal not created, TPGs within a target cannot share ip:port.

# ACL: only an initiator named iqn.2020-02.com.lnmp.www:lnmp9 (LNMP 1.9) may use this target's storage

/> iscsi/iqn.2020-02.com.server.www:lnmp9/tpg1/acls create iqn.2020-02.com.lnmp.www:lnmp9

Created Node ACL for iqn.2020-02.com.lnmp.www:lnmp9

# Map the backstore to a LUN of the target, so an initiator that logs in to the target gets the device

/> iscsi/iqn.2020-02.com.server.www:lnmp9/tpg1/luns create /backstores/block/lnmp9

Created LUN 0.

Created LUN 0->0 mapping in node ACL iqn.2020-02.com.lnmp.www:lnmp9

# Add a portal (listening IP and port) on this server

/> iscsi/iqn.2020-02.com.server.www:lnmp9/tpg1/portals create 192.168.1.10 3260

Using default IP port 3260

Created network portal 192.168.1.10:3260.

# Save the configuration

/> saveconfig

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

/> exit # leave targetcli; the configuration is also saved automatically on exit

Global pref auto_save_on_exit=true

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json
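Because the two targets differ only in the host number, the interactive session can also be written as one-shot commands. A sketch that only prints them for review, assuming targetcli accepts a path and a command as arguments (for example `targetcli /iscsi create ...`) and that turning off the `auto_add_default_portal` preference seen in the output above avoids the 0.0.0.0:3260 conflict; `targetcli_cmds` is a hypothetical helper:

```shell
#!/bin/bash
# Print (not run) the targetcli commands for one LNMP host.
# $1 = host number (8 or 9), $2 = portal IP of this iSCSI server.
targetcli_cmds() {
    local n=$1 ip=$2
    local tgt=iqn.2020-02.com.server.www:lnmp$n
    local init=iqn.2020-02.com.lnmp.www:lnmp$n
    echo "targetcli /backstores/block create lnmp$n /dev/mapper/lnmp$n-lnmp"
    echo "targetcli /iscsi create $tgt"
    echo "targetcli /iscsi/$tgt/tpg1/acls create $init"
    echo "targetcli /iscsi/$tgt/tpg1/luns create /backstores/block/lnmp$n"
    echo "targetcli /iscsi/$tgt/tpg1/portals create $ip 3260"
}
# Usage (review the output first, then run it as root on 192.168.1.10):
#   targetcli set global auto_add_default_portal=false
#   { targetcli_cmds 8 192.168.1.10; targetcli_cmds 9 192.168.1.10; } | bash
#   targetcli saveconfig
```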

Configure both LNMP hosts as iSCSI initiators (clients)

LNMP 1.8

Check whether the iSCSI initiator tools are installed; if not, run yum -y install iscsi-initiator-utils.

[root@lnmp8 ~]# rpm -qa | grep iscsi

libiscsi-1.9.0-7.el7.x86_64

iscsi-initiator-utils-6.2.0.874-7.el7.x86_64

iscsi-initiator-utils-iscsiuio-6.2.0.874-7.el7.x86_64

libvirt-daemon-driver-storage-iscsi-3.9.0-14.el7.x86_64

Set the initiator name to the name used in the ACL on the iSCSI server:

[root@lnmp8 ~]# vim /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2020-02.com.lnmp.www:lnmp8

Restart the iSCSI service so that it picks up the new initiator name:

[root@lnmp8 ~]# systemctl restart iscsid

[root@lnmp8 ~]# systemctl enable iscsid

Created symlink from /etc/systemd/system/multi-user.target.wants/iscsid.service to /usr/lib/systemd/system/iscsid.service.

Discover the targets offered by the iSCSI server (192.168.1.10):

[root@lnmp8 ~]# iscsiadm -m discovery -p 192.168.1.10:3260 -t sendtargets

192.168.1.10:3260,1 iqn.2020-02.com.server.www:lnmp9

192.168.1.10:3260,1 iqn.2020-02.com.server.www:lnmp8

Log in to target lnmp8 to attach its storage:

[root@lnmp8 ~]# iscsiadm -m node -T iqn.2020-02.com.server.www:lnmp8 -l

Logging in to [iface: default, target: iqn.2020-02.com.server.www:lnmp8, portal: 192.168.1.10,3260] (multiple)

Login to [iface: default, target: iqn.2020-02.com.server.www:lnmp8, portal: 192.168.1.10,3260] successful.

Verify that the new disk shows up:

[root@lnmp8 ~]# fdisk -l

Disk /dev/sdb: 5368 MB, 5368709120 bytes, 10485760 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Create /dev/sdb1 spanning the whole disk, then format it:

[root@lnmp8 ~]# fdisk /dev/sdb # create the partition

[root@lnmp8 ~]# partprobe /dev/sdb # re-read the partition table

[root@lnmp8 ~]# mkfs.xfs /dev/sdb1 # format it as xfs
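The interactive fdisk step can also be fed from a pipe. A sketch, assuming the util-linux fdisk shipped with CentOS 7 and an empty disk, so the answers are: new partition, primary, number 1, default first sector, default last sector (whole disk), write. `fdisk_whole_disk_input` is a hypothetical helper; double-check the device name before piping, since this rewrites the partition table:

```shell
#!/bin/bash
# Keystrokes for fdisk: n (new), p (primary), 1 (partition number),
# Enter (default first sector), Enter (default last sector), w (write).
fdisk_whole_disk_input() {
    printf 'n\np\n1\n\n\nw\n'
}
# Usage on the LNMP host:
#   fdisk_whole_disk_input | fdisk /dev/sdb && partprobe /dev/sdb
```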

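Mount the network disk on the web root automatically at boot (_netdev delays the mount until the network is up). Mounting over /usr/local/nginx/html hides the files already there (index.html, index.php, 50x.html), so copy anything you still need onto the new filesystem, including the /index.html page that the HAProxy health check in step 4 requests: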

[root@lnmp8 ~]# vim /etc/fstab

/dev/sdb1 /usr/local/nginx/html xfs defaults,_netdev 0 0

[root@lnmp8 ~]# mount -a

[root@lnmp8 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/sdb1 xfs 5.0G 33M 5.0G 1% /usr/local/nginx/html

On LNMP 1.9, repeat the steps done on 1.8:

# Set the initiator name to match the target's ACL

[root@lnmp9 ~]# vim /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2020-02.com.lnmp.www:lnmp9

# Restart the iSCSI service

[root@lnmp9 ~]# systemctl restart iscsid

[root@lnmp9 ~]# systemctl enable iscsid

Created symlink from /etc/systemd/system/multi-user.target.wants/iscsid.service to /usr/lib/systemd/system/iscsid.service.

# Discover the targets offered by the iSCSI server

[root@lnmp9 ~]# iscsiadm -m discovery -p 192.168.1.10:3260 -t sendtargets

192.168.1.10:3260,1 iqn.2020-02.com.server.www:lnmp9

192.168.1.10:3260,1 iqn.2020-02.com.server.www:lnmp8

# Log in to target lnmp9 to attach its storage

[root@lnmp9 ~]# iscsiadm -m node -T iqn.2020-02.com.server.www:lnmp9 -l

Logging in to [iface: default, target: iqn.2020-02.com.server.www:lnmp9, portal: 192.168.1.10,3260] (multiple)

Login to [iface: default, target: iqn.2020-02.com.server.www:lnmp9, portal: 192.168.1.10,3260] successful.

# Create /dev/sdb1 spanning the whole disk

[root@lnmp9 ~]# fdisk /dev/sdb

[root@lnmp9 ~]# partprobe /dev/sdb

[root@lnmp9 ~]# mkfs.xfs /dev/sdb1

# Mount the network disk on the web root automatically at boot

[root@lnmp9 ~]# vim /etc/fstab

/dev/sdb1 /usr/local/nginx/html xfs defaults,_netdev 0 0

[root@lnmp9 ~]# mount -a

[root@lnmp9 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/sdb1 xfs 5.0G 33M 5.0G 1% /usr/local/nginx/html

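Step 4: Load-balance LNMP with HAProxy

Install HAProxy on both load balancers (192.168.1.6/1.7); upload the source tarball to each:

# Install build dependencies

yum -y install pcre-devel bzip2-devel

# Unpack the source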

tar zxf haproxy-1.4.24.tar.gz -C /usr/src

cd /usr/src/haproxy-1.4.24/

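uname -r # check the kernel version

3.10.0-862.el7.x86_64

# Build and install (HAProxy 1.4 has no linux310 target; linux2628 is the target for kernels 2.6.28 and later, including 3.10)

make TARGET=linux2628 PREFIX=/usr/local/haproxy

make install PREFIX=/usr/local/haproxy

# Put the binaries on PATH

ln -s /usr/local/haproxy/sbin/* /usr/sbin/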

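Create the configuration file and init script:

mkdir /etc/haproxy # create the config directory

cp /usr/src/haproxy-1.4.24/examples/haproxy.cfg /etc/haproxy/ # copy the sample config from the source tree

# Copy the sample init script from the source tree

cp /usr/src/haproxy-1.4.24/examples/haproxy.init /etc/init.d/haproxy

# Make the init script executable

chmod +x /etc/init.d/haproxy

Edit the configuration file:

vim /etc/haproxy/haproxy.cfg

# In the global section, comment out this line

#chroot /usr/share/haproxy # chroot directory; the source install does not create it

# In the defaults section, comment out this line

#redispatch # if the server bound to a client's cookie fails, the client is sent straight to another web server, which disturbs the round-robin test; keep it enabled in production

Delete this line and everything below it, then write your own section instead:

listen appli1-rewrite 0.0.0.0:10001

Append at the end: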

listen lnmps 0.0.0.0:80

balance roundrobin # round-robin scheduling

option httpchk GET /index.html # health check: request /index.html

server lnmp8 192.168.1.8:80 check inter 2000 rise 3 fall 3

server lnmp9 192.168.1.9:80 check inter 2000 rise 3 fall 3

Register HAProxy as a system service for easier management:

chkconfig --add haproxy

chkconfig haproxy on

systemctl start haproxy

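Verify. Because the LNMP web roots were remounted when the iSCSI storage was set up, the sync script has to be started again anyway. Conveniently, keep it stopped while testing HAProxy, so that each LNMP host can serve its own test page (1.8 and 1.9). From the client (192.168.1.1), request the load balancer (192.168.1.6); alternating responses like the following mean round-robin works: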

[root@ansible ~]# curl 192.168.1.6

1.9

[root@ansible ~]# curl 192.168.1.6

1.8
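With more than two requests, counting the responses makes the round-robin pattern easier to see. A sketch, assuming curl on the client; `tally_responses` and the overridable `FETCH` variable are hypothetical names (the override exists only so the function can be tested offline):

```shell
#!/bin/bash
# Request the load balancer N times and print each distinct response
# body with the number of times it was returned, sorted by body.
FETCH=${FETCH:-curl_quiet}
curl_quiet() { curl -s --max-time 5 "http://$1/"; }

tally_responses() {
    local url=$1 n=${2:-10} i
    for ((i = 0; i < n; i++)); do
        "$FETCH" "$url"
        echo                        # guarantee a newline after each body
    done | awk 'NF { count[$0]++ } END { for (b in count) print b, count[b] }' | sort
}
# Usage: tally_responses 192.168.1.6 10
# With both backends healthy, "1.8" and "1.9" should get about equal counts.
```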

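Once this works, remember to start the real-time sync script again.

One HAProxy load balancer is now done. This environment uses Keepalived to run the load balancers as a hot-standby pair, so build the second HAProxy (192.168.1.7) with exactly the same steps.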

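Step 5: Keepalived + HAProxy

First, on both LNMP hosts, adjust the ARP settings and add a virtual IP on the loopback interface (the same address Keepalived will use as its floating IP). This ARP/loopback-VIP arrangement is what LVS-DR requires; since HAProxy opens its own connections to the real IPs 192.168.1.8/1.9, the backends do not strictly need it.

Adjust ARP behavior: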

[root@lnmp8 ~]# vim /etc/sysctl.conf

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.default.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

net.ipv4.conf.default.arp_announce = 2

[root@lnmp8 ~]# sysctl -p
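To confirm the settings took effect after sysctl -p, the live values can be read back from /proc/sys. arp_ignore=1 makes the host answer ARP only for addresses configured on the interface the request arrived on, so the VIP on lo is never answered for; arp_announce=2 makes it always use the best local address as the ARP source. A sketch; `check_arp_sysctls` is a hypothetical name, and its optional root-prefix argument exists only so it can be tested against a fake directory tree:

```shell
#!/bin/bash
# Verify arp_ignore=1 and arp_announce=2 for lo, all and default.
# $1 (optional) = root prefix, normally empty (i.e. the real /proc).
check_arp_sysctls() {
    local root=${1:-} dev rc=0 v
    for dev in lo all default; do
        v=$(cat "$root/proc/sys/net/ipv4/conf/$dev/arp_ignore")
        [ "$v" = 1 ] || { echo "$dev arp_ignore=$v (want 1)"; rc=1; }
        v=$(cat "$root/proc/sys/net/ipv4/conf/$dev/arp_announce")
        [ "$v" = 2 ] || { echo "$dev arp_announce=$v (want 2)"; rc=1; }
    done
    return "$rc"
}
# Usage on lnmp8/lnmp9: check_arp_sysctls && echo "ARP settings OK"
```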

# Copy it to LNMP 1.9 and apply it there as well

[root@lnmp8 ~]# scp /etc/sysctl.conf root@192.168.1.9:/etc

[root@lnmp9 ~]# sysctl -p

Configure the loopback virtual IP:

[root@lnmp8 ~]# cp /etc/sysconfig/network-scripts/ifcfg-lo /etc/sysconfig/network-scripts/ifcfg-lo:0

[root@lnmp8 ~]# vim /etc/sysconfig/network-scripts/ifcfg-lo:0

Change these settings:

DEVICE=lo:0

IPADDR=192.168.1.100

NETMASK=255.255.255.255

NAME=lnmp8

[root@lnmp8 ~]# systemctl restart network

# Copy it to LNMP 1.9

[root@lnmp8 ~]# scp /etc/sysconfig/network-scripts/ifcfg-lo:0 root@192.168.1.9:/etc/sysconfig/network-scripts/ifcfg-lo:0

On LNMP 1.9, change NAME in the loopback config:

vim /etc/sysconfig/network-scripts/ifcfg-lo:0

NAME=lnmp9

systemctl restart network

On both LNMP hosts, add a host route for the loopback VIP (temporary):

route add -host 192.168.1.100 dev lo:0

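Persistent route:

vim /etc/rc.local

Add the line below. On CentOS 7, /etc/rc.d/rc.local only runs at boot if it is executable, so also run chmod +x /etc/rc.d/rc.local: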

route add -host 192.168.1.100 dev lo:0

Install Keepalived

Install Keepalived on each HAProxy host (192.168.1.6/1.7).

192.168.1.6

# Install build dependencies

[root@haproxy6 ~]# yum -y install popt-devel kernel-devel openssl-devel

# Unpack the Keepalived source

[root@haproxy6 ~]# tar zxf keepalived-1.2.13.tar.gz -C /usr/src

[root@haproxy6 ~]# cd /usr/src/keepalived-1.2.13/

[root@haproxy6 keepalived-1.2.13]# ./configure --prefix=/ --with-kernel-dir=/usr/src/kernel

[root@haproxy6 keepalived-1.2.13]# make && make install

Edit the configuration file:

[root@haproxy6 ~]# vim /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state MASTER # BACKUP on the second node

interface ens33 # NIC in use on this host

virtual_router_id 51

priority 100 # lower on the second node

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.100 # floating IP

}

}

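Install Keepalived on 192.168.1.7 with the same steps as above.

Then copy the configuration file to 1.7 with scp:

[root@haproxy6 ~]# scp /etc/keepalived/keepalived.conf root@192.168.1.7:/etc/keepalived/

On 1.7, set state to BACKUP and give it a lower priority than the master (90 is only an example):

[root@haproxy7 ~]# vim /etc/keepalived/keepalived.conf

state BACKUP

priority 90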

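Start Keepalived on both HAProxy hosts:

service keepalived start # only this form works for this source install

Verify

First, from the client (192.168.1.1), request the floating IP (192.168.1.100). The LNMP test page has been changed to read "Keepalived":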

[root@ansible ~]# curl 192.168.1.100

Keepalived

Stop both HAProxy and Keepalived on one of the load balancers, then request the floating IP again:

[root@ansible ~]# curl 192.168.1.100

Keepalived

Keepalived + HAProxy is working.
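In the test above, Keepalived was stopped together with HAProxy. With the configuration shown, the floating IP only moves when keepalived itself stops or the host goes down; if only HAProxy crashes, the VIP stays on a node that cannot serve traffic. A common remedy is a keepalived `vrrp_script` that checks HAProxy. A sketch of such a check, assuming HAProxy's PID is written to /var/run/haproxy.pid (adjust to whatever your init script uses); the script path and `pid_alive` are hypothetical names, and with no `weight` set a failing check should put the instance into FAULT state, which is worth confirming against your keepalived version's documentation:

```shell
#!/bin/bash
# Hypothetical /etc/keepalived/check_haproxy.sh. Succeeds if the process
# whose PID is on the first line of the pidfile is still alive.
# Referenced from keepalived.conf roughly like this:
#   vrrp_script chk_haproxy {
#       script "/etc/keepalived/check_haproxy.sh"
#       interval 2
#   }
#   and inside vrrp_instance VI_1:  track_script { chk_haproxy }
pid_alive() {
    local pidfile=${1:-/var/run/haproxy.pid} pid
    [ -r "$pidfile" ] || return 1
    pid=$(head -n 1 "$pidfile")
    [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null
}
# As the real check script, end the file with:
#   pid_alive /var/run/haproxy.pid
```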

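Step 6: Varnish reverse proxy

Varnish (192.168.1.5) reverse-proxies the LNMP pages; its backend is simply the Keepalived floating IP.

Deploy Varnish

# Install build dependencies

[root@varnish ~]# yum -y install automake autoconf libtool pkgconfig graphviz ncurses-devel pcre-devel

# The following packages have to be uploaded and installed separately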

[root@varnish ~]# rpm -ivh jemalloc-devel-5.2.0-1.1.x86_64.rpm --nodeps

[root@varnish ~]# rpm -ivh libedit-3.0-12.20121213cvs.el7.x86_64.rpm

[root@varnish ~]# rpm -ivh libedit-devel-3.0-12.20121213cvs.el7.x86_64.rpm

[root@varnish ~]# rpm -ivh python-docutils-0.11-0.3.20130715svn7687.el7.noarch.rpm --nodeps

[root@varnish ~]# rpm -ivh python-Sphinx-1.6.5-3.10.1.noarch.rpm --nodeps

# Unpack the Varnish source

[root@varnish ~]# tar zxf varnish-4.1.11.tgz -C /usr/src

[root@varnish ~]# cd /usr/src/varnish-4.1.11/

# Build and install

[root@varnish varnish-4.1.11]# ./configure --prefix=/usr/local/varnish && make && make install

# Put the binaries on PATH

[root@varnish ~]# ln -s /usr/local/varnish/bin/* /usr/local/bin

[root@varnish ~]# ln -s /usr/local/varnish/sbin/* /usr/local/sbin

Edit the configuration file to set up the reverse proxy:

# Copy the sample VCL from the install tree

[root@varnish ~]# cp /usr/local/varnish/share/doc/varnish/example.vcl /usr/local/varnish/default.vcl

[root@varnish ~]# vim /usr/local/varnish/default.vcl

backend default {

.host = "192.168.1.100"; # Keepalived floating IP

.port = "80";

}

Start the service:

[root@varnish ~]# varnishd -f /usr/local/varnish/default.vcl

[root@varnish ~]# netstat -anput | grep varnish # check that varnishd is listening

Verify

From the client (192.168.1.1), request Varnish (192.168.1.5). The LNMP test page has been changed to read varnish1.5:

[root@ansible ~]# curl 192.168.1.5

varnish1.5

The Varnish reverse proxy is working.
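To tell whether a response came from Varnish's cache or from the backend, the response headers are enough: Varnish sets `X-Varnish` to the request's transaction ID, and on a cache hit it also appends the ID of the request that stored the object, so a hit carries two numbers. A sketch that classifies headers read from stdin (for example from `curl -sI http://192.168.1.5/`); `varnish_cache_status` is a hypothetical helper:

```shell
#!/bin/bash
# Read HTTP response headers on stdin and print HIT, MISS or UNKNOWN
# depending on how many transaction IDs the X-Varnish header holds.
varnish_cache_status() {
    awk 'BEGIN { status = "UNKNOWN" }
         tolower($1) == "x-varnish:" {
             sub(/\r$/, "")              # drop the CR of HTTP line endings
             status = (NF >= 3) ? "HIT" : "MISS"
         }
         END { print status }'
}
# Usage from the client: request the same page twice; if the page is
# cacheable, the second call should print HIT.
#   curl -sI http://192.168.1.5/ | varnish_cache_status
```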

Step 7: Squid transparent proxy for the client

Deploy the Squid transparent proxy on 192.168.1.4 (upload the source tarball first):

# Unpack the Squid source

[root@squid ~]# tar zxf squid-3.5.7.tar.gz -C /usr/src

[root@squid ~]# cd /usr/src/squid-3.5.7/

# Build and install

[root@squid squid-3.5.7]# ./configure --prefix=/usr/local/squid \

--sysconfdir=/etc/ \

--enable-arp-acl \

--enable-linux-netfilter \

--enable-linux-tproxy \

--enable-async-io=100 \

--enable-err-language="Simplify-Chinese" \

--enable-underscore \

--enable-poll \

--enable-gnuregex

[root@squid squid-3.5.7]# make && make install # this takes a while, go scroll TikTok for a bit

# Put the binaries on PATH

[root@squid ~]# ln -s /usr/local/squid/sbin/* /usr/local/sbin/

[root@squid ~]# ln -s /usr/local/squid/bin/* /usr/local/bin/

[root@squid ~]# useradd -M -s /sbin/nologin squid

[root@squid ~]# chown -R squid:squid /usr/local/squid/var/

[root@squid ~]# chmod -R 757 /usr/local/squid/var/

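As a transparent proxy, Squid needs two NICs: one acts as the gateway for the client, the other as the gateway for the Varnish reverse proxy (192.168.1.5). The client's address therefore changes: first add a second NIC to Squid (192.168.2.1), then move the client's NIC to 192.168.2.2.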

192.168.1.4

[root@squid ~]# cp /etc/sysconfig/network-scripts/ifcfg-ens33 /etc/sysconfig/network-scripts/ifcfg-ens37

Change:

NAME=ens37

DEVICE=ens37

IPADDR=192.168.2.1

Remove:

UUID (the copied value belongs to ens33)

[root@squid ~]# systemctl restart network

192.168.1.1

[root@ansible ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33

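Change IPADDR to 192.168.2.2 and GATEWAY to 192.168.2.1, then restart the network.

Varnish (192.168.1.5) must now also use 192.168.1.4 (Squid) as its gateway:

[root@varnish ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33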

GATEWAY=192.168.1.4

[root@varnish ~]# systemctl restart network

Because the Squid server now connects two subnets, enable IP forwarding:

[root@squid ~]# vim /etc/sysctl.conf

Add:

net.ipv4.ip_forward = 1

[root@squid ~]# sysctl -p

Edit the Squid configuration:

[root@squid ~]# vim /etc/squid.conf

Change http_access deny all to:

http_access allow all

Add:

http_port 192.168.2.1:3128 transparent # Squid's address on the client-facing network (the client's gateway); newer Squid releases call this option intercept

Start Squid:

[root@squid ~]# squid

Verify the transparent proxy

First add a NAT rule that redirects the client's HTTP traffic to Squid:

[root@squid ~]# iptables -t nat -A PREROUTING -p tcp --dport 80 -s 192.168.2.0/24 -i ens37 -j REDIRECT --to-ports 3128

# the source network and input interface are the ones directly connected to the client
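To confirm the redirect rule is in place, search the output of `iptables -t nat -S PREROUTING`, which prints rules in the same form used to add them (iptables may add `-m tcp` and similar details). A sketch; `has_squid_redirect` is a hypothetical helper, with the interface and port taken from the rule above:

```shell
#!/bin/bash
# Succeed if the iptables -S output on stdin contains a PREROUTING rule
# on interface $1 (default ens37) that REDIRECTs to port $2 (default 3128).
has_squid_redirect() {
    local iface=${1:-ens37} port=${2:-3128}
    grep -- "-A PREROUTING" \
        | grep -- "-i $iface " \
        | grep -q -- "-j REDIRECT --to-ports $port"
}
# Usage on the Squid host:
#   iptables -t nat -S PREROUTING | has_squid_redirect ens37 3128 && echo "rule present"
```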

From the client (192.168.2.2), request Varnish (192.168.1.5). The LNMP test page now reads squid1.4:

[root@ansible ~]# curl 192.168.1.5

squid1.4

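As shown in the figure, watching Varnish's live log on 192.168.1.5 shows that the forwarded request originated from 192.168.2.2.

The architecture is now complete.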

浙公网安备 33010602011771号
