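Dissecting the Unreal Rendering System (06) - UE5 Special, Part 2 (Lumen and Others)

6.5 Lumen

6.5.1 Lumen Technical Features

Section 6.2.2.2 (Lumen dynamic global illumination) briefly introduced Lumen's features: indirect lighting, sky light, emissive lighting, soft and hard shadows, and reflections. This section covers its technical features in more detail.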

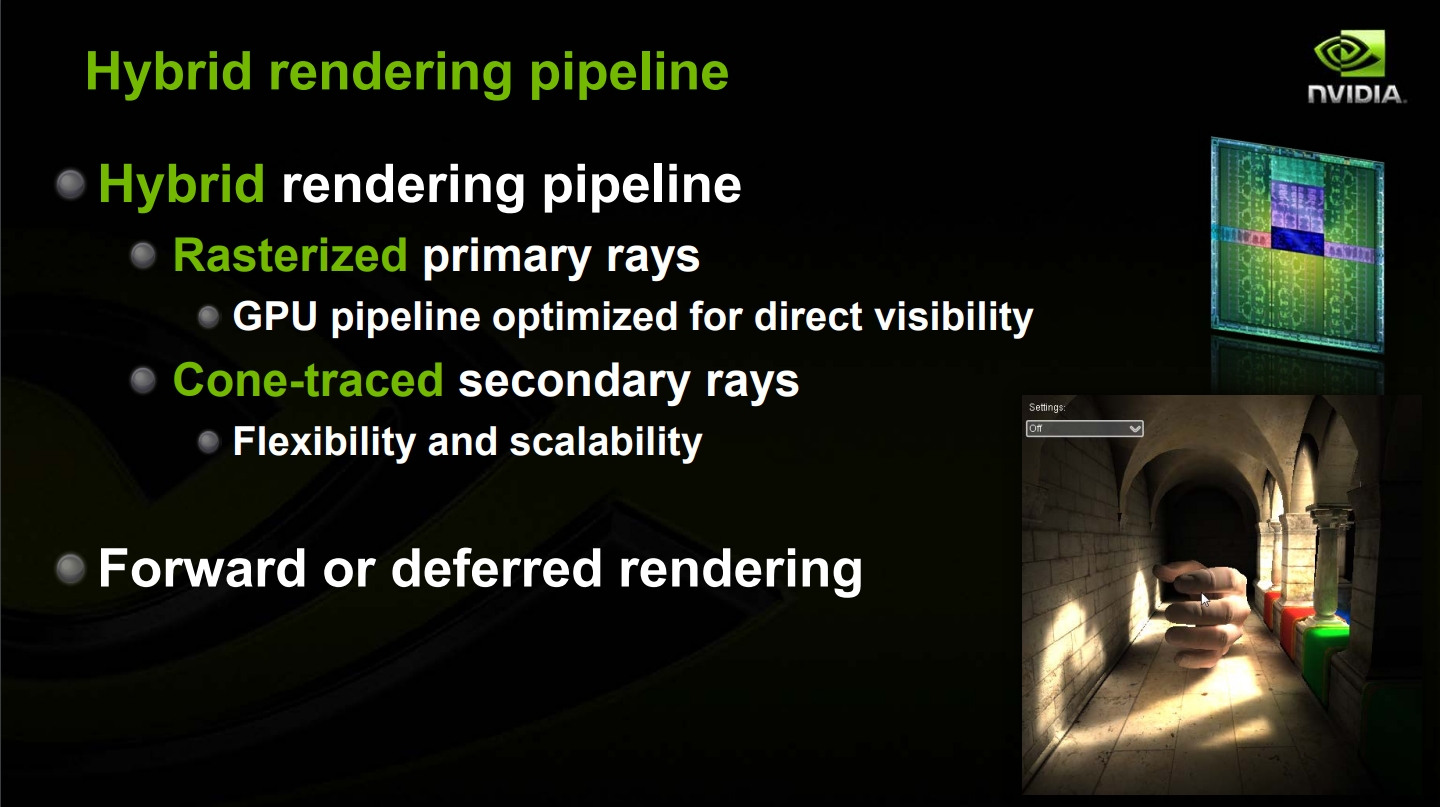

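First, it should be made clear that Lumen combines several techniques rather than relying on a single one. For example, Lumen uses software ray tracing against signed distance fields (SDF) by default, but when hardware ray tracing is enabled it can achieve higher quality on supported graphics cards.

The main techniques involved in Lumen are listed below.

6.5.1.1 Surface Cache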

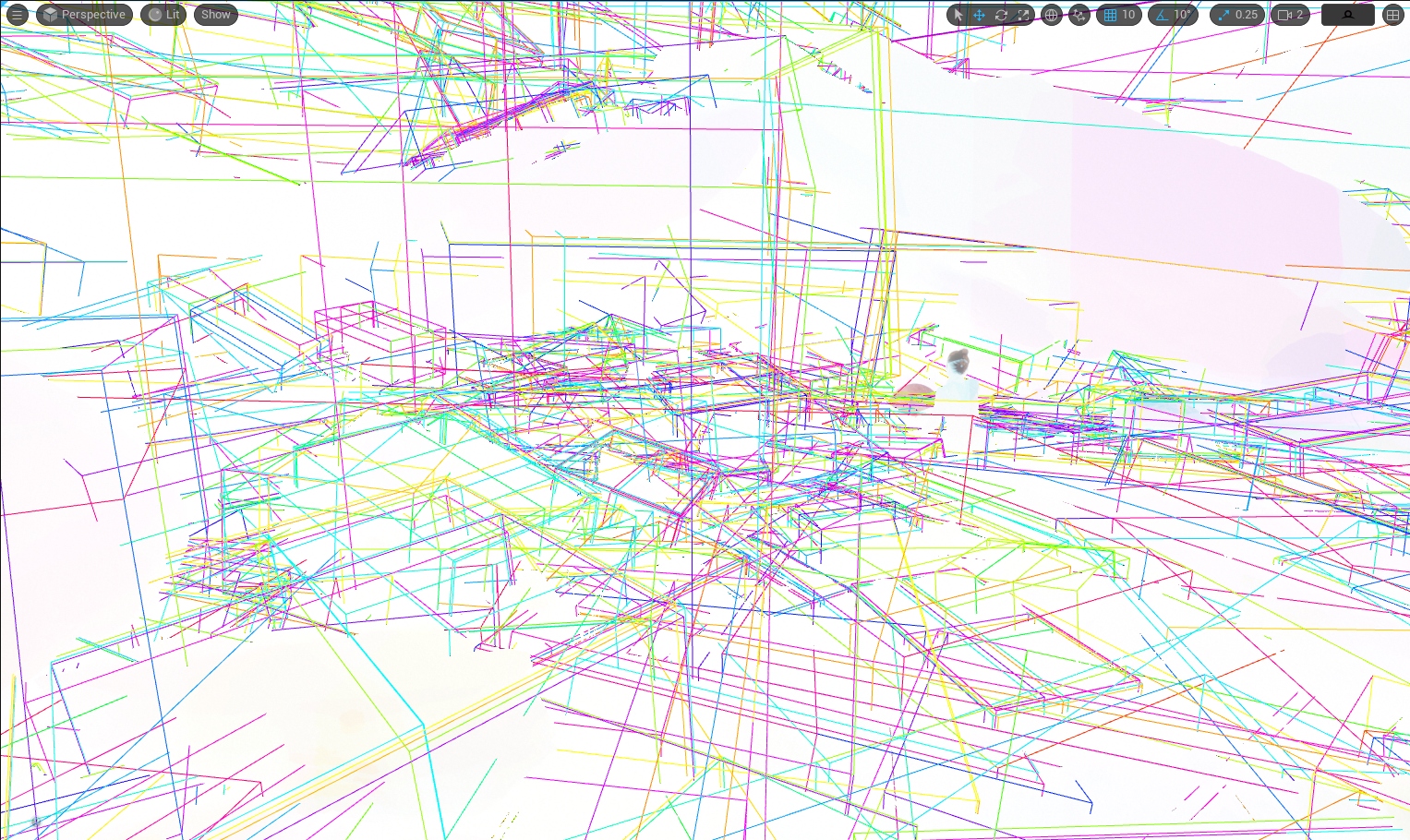

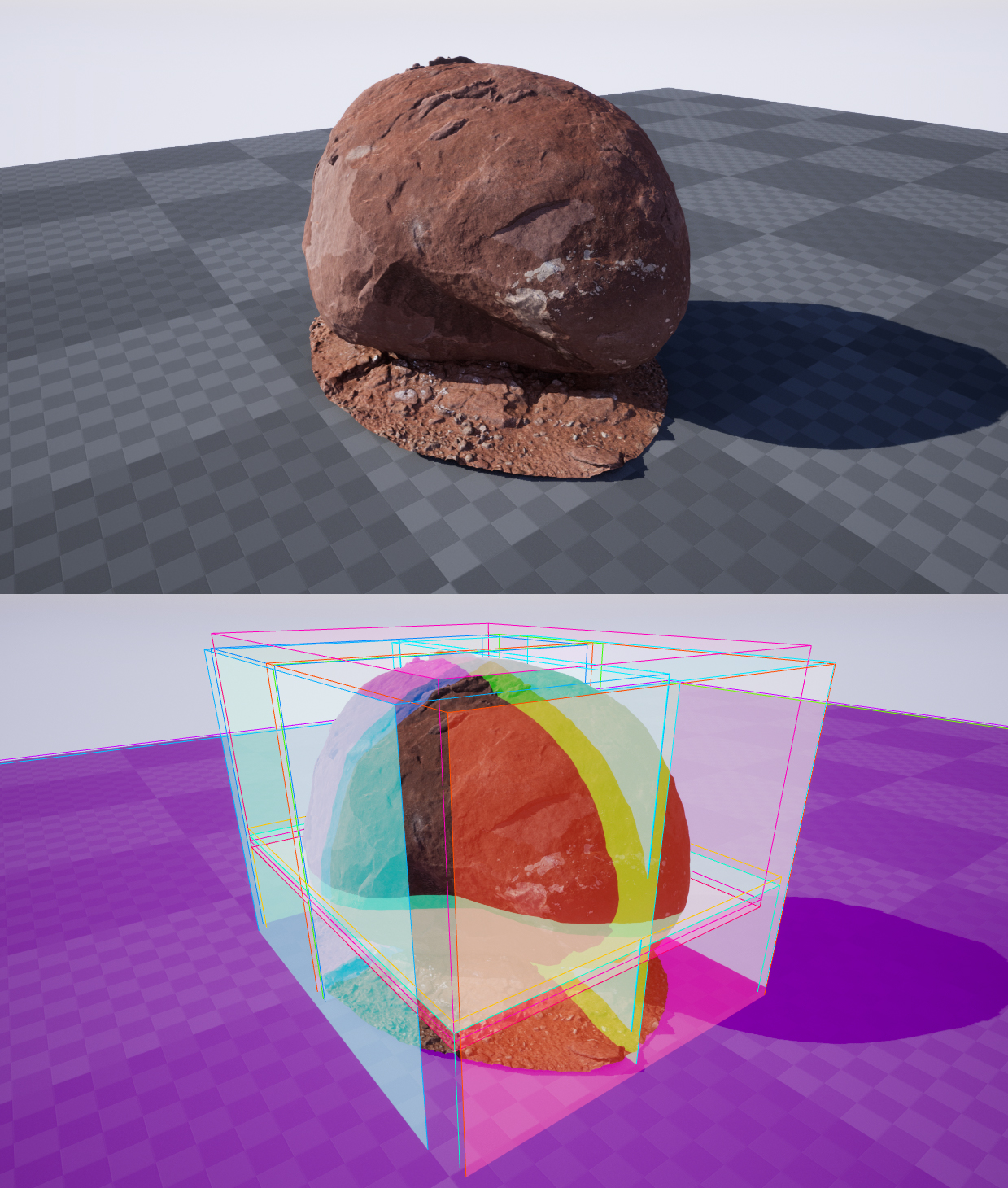

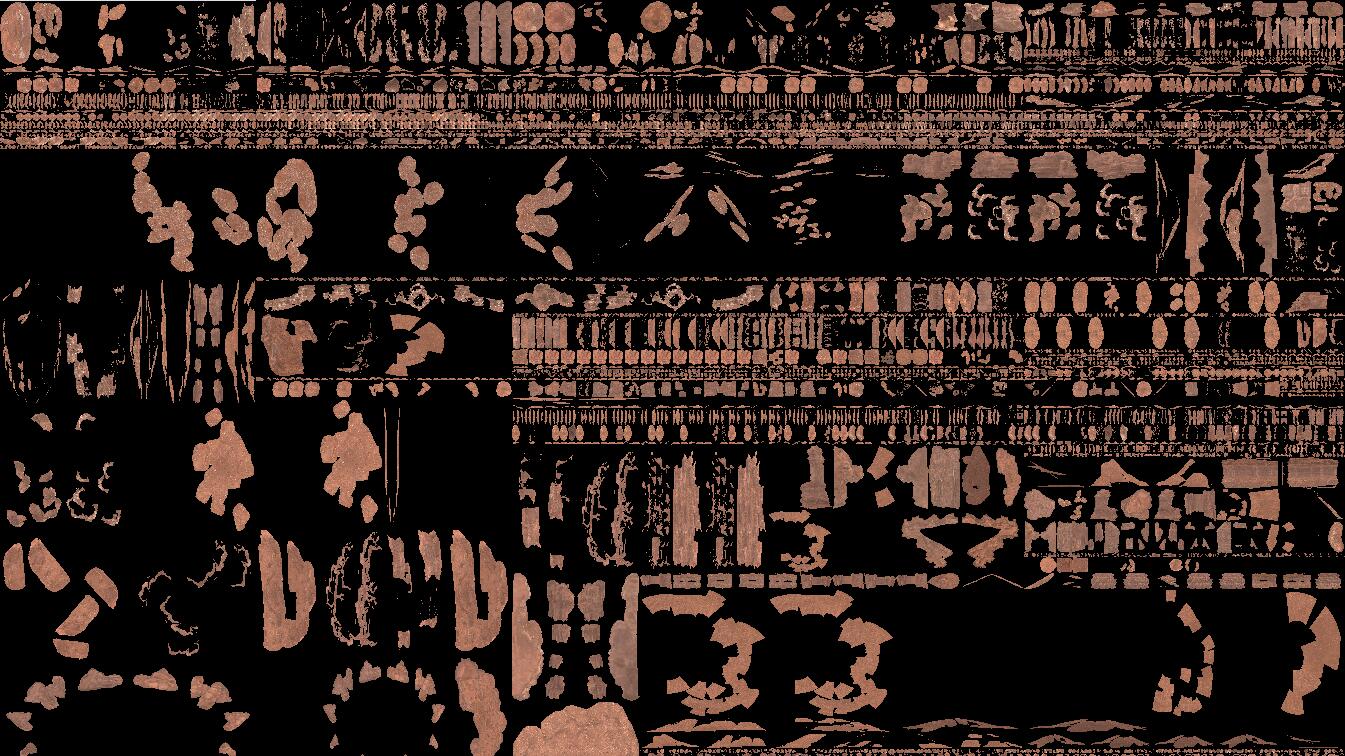

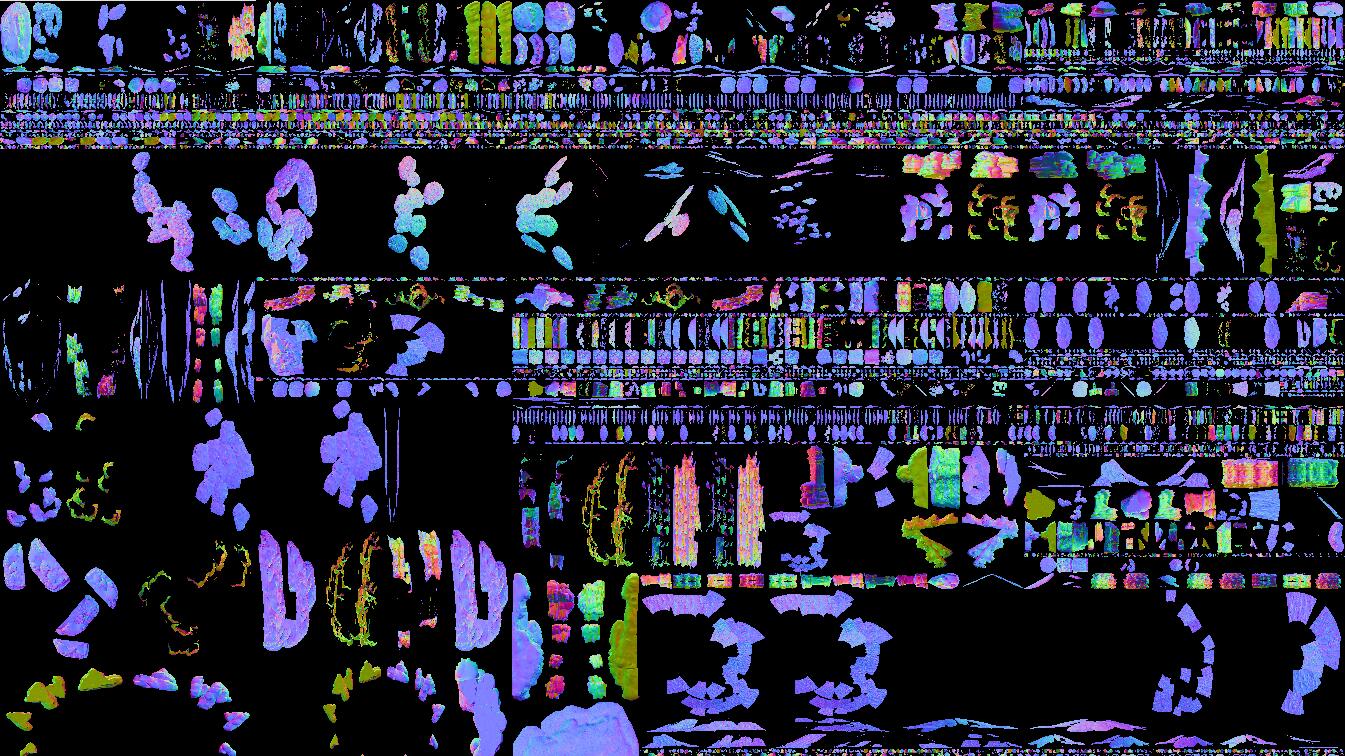

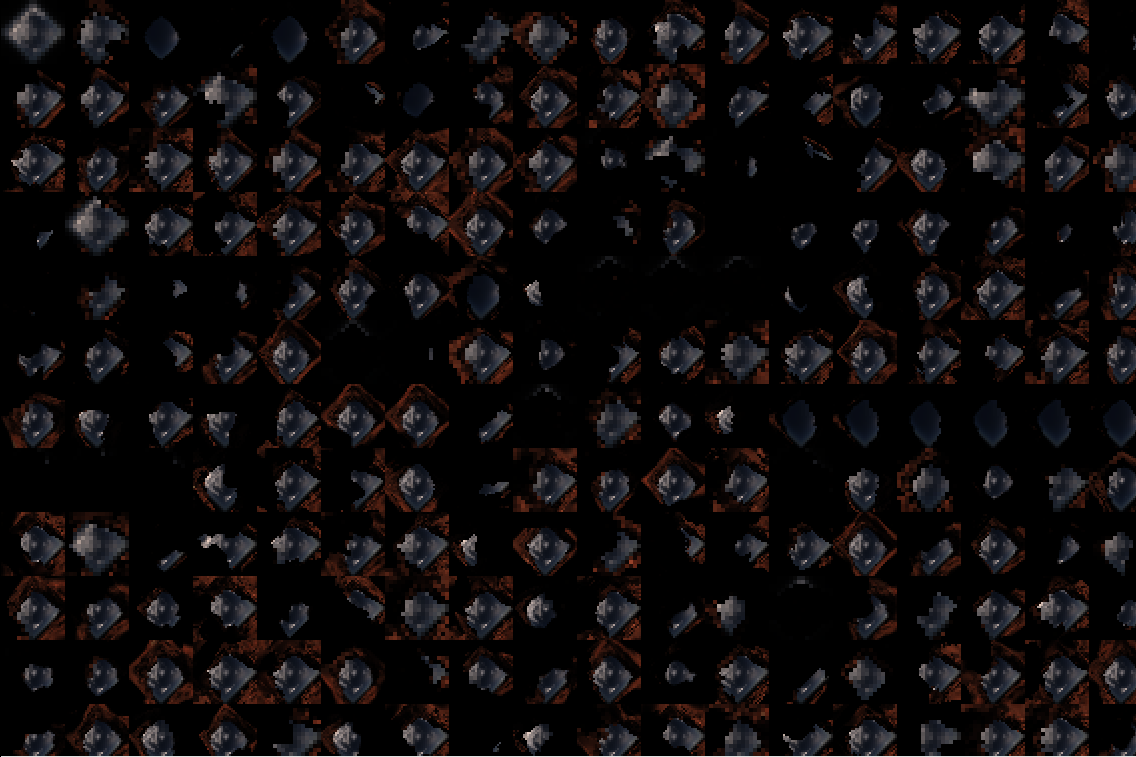

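Lumen generates an automatic parameterization of nearby scene surfaces, called the Surface Cache, which is used to quickly look up lighting at ray hit points. Lumen captures the material properties of each mesh from multiple angles. These capture positions are called Cards and are generated offline per mesh. The console command r.Lumen.Visualize.CardPlacement 1 visualizes the Lumen Cards: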

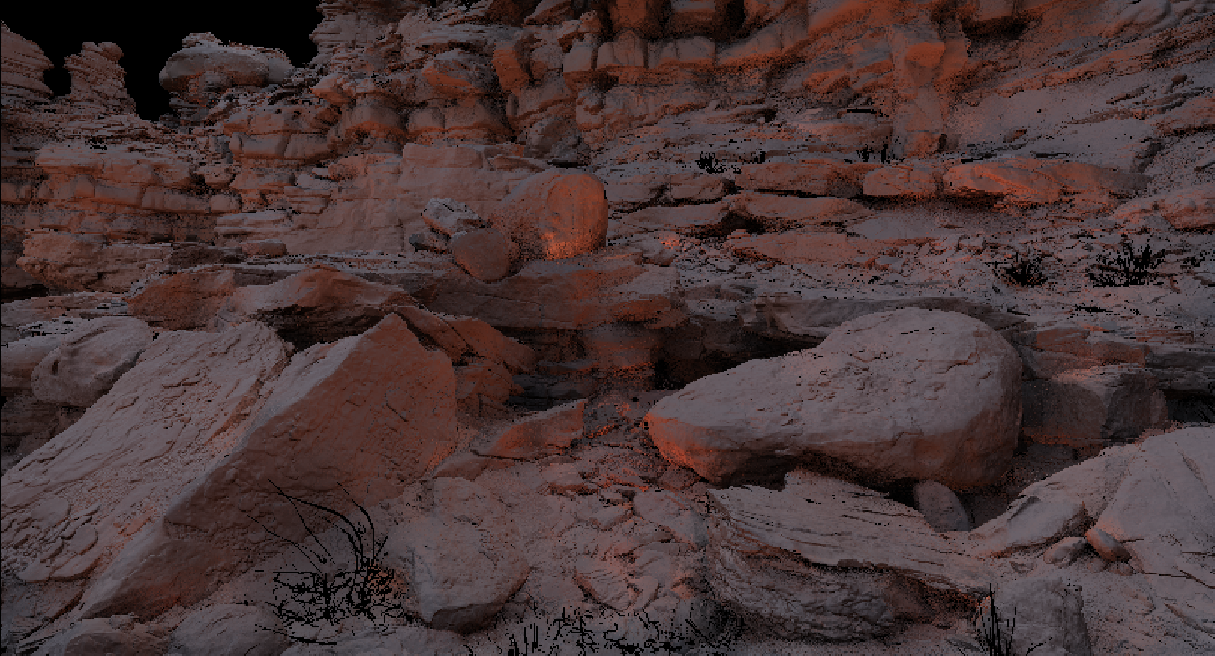

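Top: normal rendering; bottom: Lumen Card visualization.

Nanite accelerates the mesh captures used to keep the Surface Cache in sync with the triangle scene. High-polygon meshes in particular need Nanite for efficient capture.

Once the Surface Cache is populated with material properties, Lumen computes direct and indirect lighting for these surface positions. These updates are amortized over multiple frames, which provides efficient support for many dynamic lights and multi-bounce global illumination.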
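To make "amortized over multiple frames" concrete, here is a minimal sketch of a budgeted round-robin update. It is not Lumen's scheduler (the engine's real update logic is not shown in this article); CardLighting and AmortizedUpdater are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the lighting state of one Surface Cache card.
struct CardLighting {
    int lastUpdatedFrame = -1;  // -1 means "never updated"
};

// Refreshes at most `budget` cards per frame and resumes where the previous
// frame stopped, so every card is refreshed once every
// ceil(cards.size() / budget) frames.
class AmortizedUpdater {
public:
    explicit AmortizedUpdater(std::size_t budget) : budget_(budget) {
        assert(budget > 0);
    }

    // Returns the number of cards refreshed this frame.
    std::size_t Tick(std::vector<CardLighting>& cards, int frame) {
        if (cards.empty()) return 0;
        const std::size_t count = budget_ < cards.size() ? budget_ : cards.size();
        for (std::size_t i = 0; i < count; ++i) {
            cards[cursor_].lastUpdatedFrame = frame;  // "relight" this card
            cursor_ = (cursor_ + 1) % cards.size();
        }
        return count;
    }

private:
    std::size_t budget_;
    std::size_t cursor_ = 0;
};
```

With 10 cards and a budget of 4, each frame costs a fixed 4 updates and every card is refreshed within 3 frames; the per-frame cost no longer depends on the total number of cards.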

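Only meshes with simple interiors are supported. Walls, floors, and ceilings should each be separate meshes rather than being merged into one large mesh.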

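6.5.1.2 Screen Tracing

Lumen traces rays against the screen first (called screen tracing or screen-space tracing) and falls back to a more reliable method if no hit is found or the ray passes behind a surface.

The downside of screen tracing is that it greatly limits the art-direction controls that apply only to indirect lighting, such as lights with Indirect Lighting Scale or materials with Emissive Boost.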

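Lumen's ray tracing uses screen traces first and only then falls back to the other, more expensive tracing options. If screen traces are disabled for GI and reflections, only the Lumen Scene is seen. Screen traces support any geometry type and help cover up mismatches between the Lumen Scene and the triangle scene.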

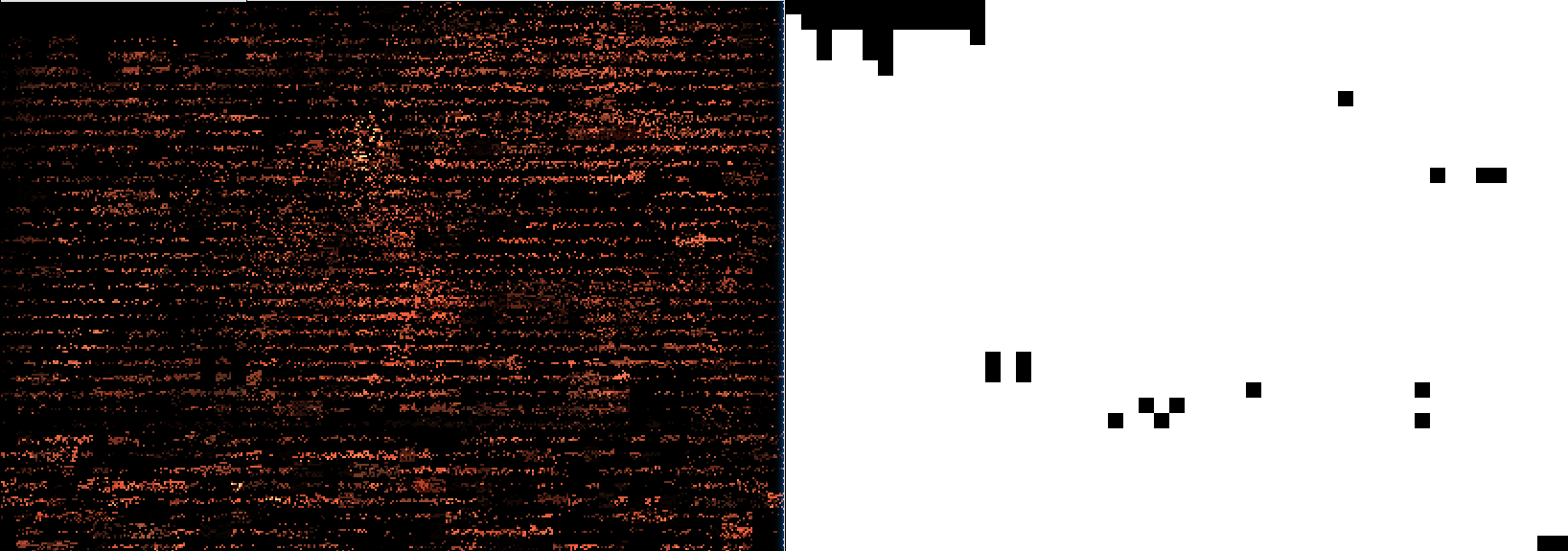

使用r.Lumen.ScreenProbeGather.ScreenTraces 0|1开启或关闭屏幕追踪,以查看场景的对比效果:

上:开启了Lumen屏幕追踪的效果;下:关闭Lumen屏幕追踪的效果。可知在反射上差别最明显,其次是部分间接光。

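6.5.1.3 Lumen Ray Tracing

Lumen supports two ray tracing modes:

1. Software ray tracing. Runs on the widest range of hardware and platforms.

2. Hardware ray tracing. Requires graphics card and operating system support.

- Software ray tracing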

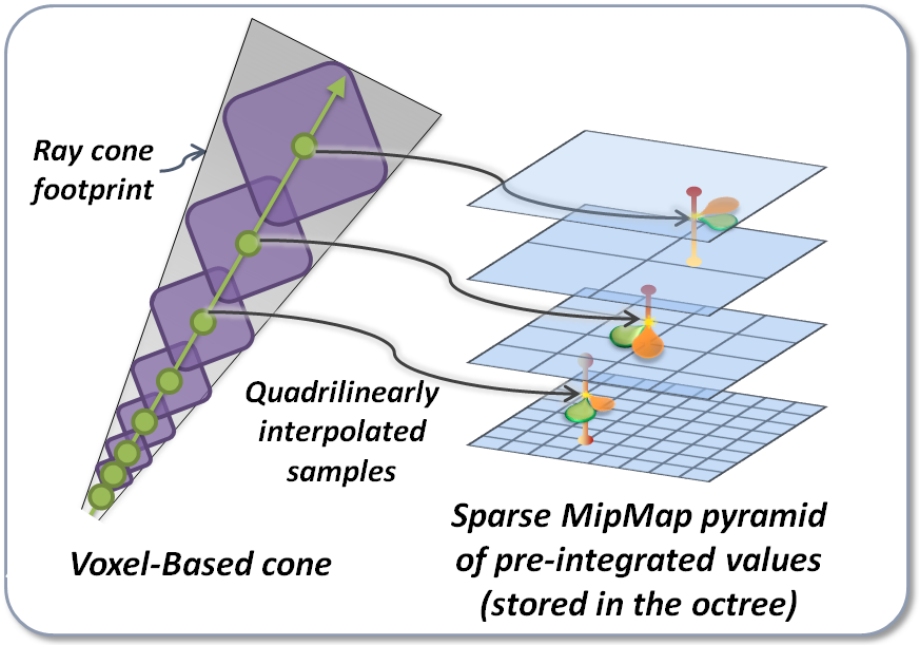

By default, Lumen uses software ray tracing that relies on signed distance fields, which means it can run on hardware that supports SM5.

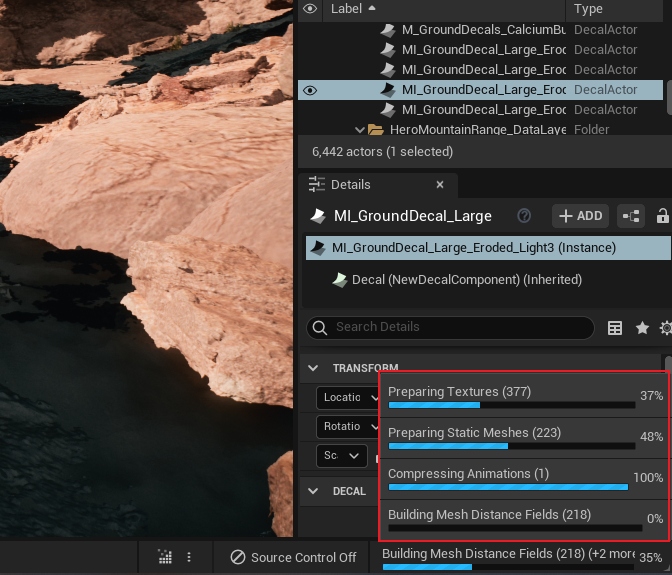

Generate Mesh Distance Fields must be enabled in the project settings; it is enabled by default in UE5.

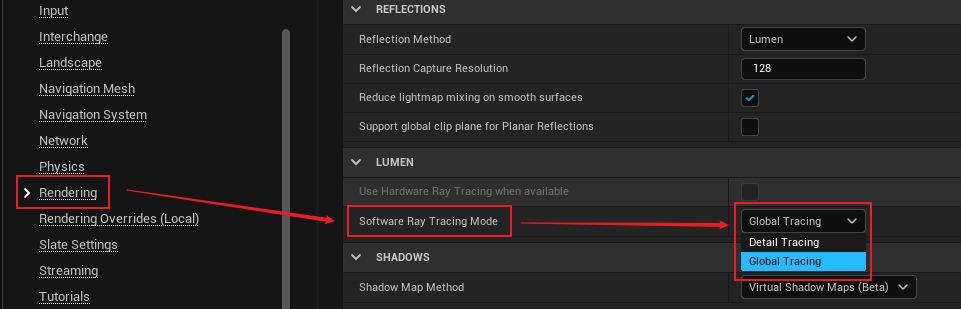

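The renderer merges the mesh distance fields into a Global Distance Field to accelerate tracing. By default, Lumen traces against each mesh's own distance field for accuracy over the first two meters of a ray and uses the merged Global Distance Field for the rest of the ray. If a project needs precise control over Lumen's software ray tracing, the Software Ray Tracing Mode can be chosen in the project settings:

Detail Tracing is the default method. It uses the individual mesh distance fields for high-quality GI (only for the first two meters; the Global Distance Field is used beyond that). Global Tracing uses only the Global Distance Field for fast tracing, at some cost in image quality.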
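The switch between the two distance fields can be illustrated with a small CPU-side sphere-tracing sketch. It is not Lumen's shader code: the names TraceRay, MakeSphere, and detailRange are assumptions made for this example, and the real implementation runs on the GPU with culling and other optimizations not shown here.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

struct Vec3 { float x, y, z; };

inline Vec3 Add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 Scale(Vec3 v, float s) { return {v.x * s, v.y * s, v.z * s}; }
inline float Length(Vec3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

// A distance field maps a point to its signed distance from the nearest surface.
using DistanceField = std::function<float(Vec3)>;

struct TraceResult {
    bool hit;
    float distance;        // distance along the ray at the hit, or where tracing stopped
    bool usedDetailField;  // true if the hit was found while sampling the detail field
};

// Sphere tracing: advance along the ray by the distance the field reports.
// Within `detailRange` (the "first two meters" in the text) the detailed
// per-mesh field is sampled; beyond it the coarser global field is used.
TraceResult TraceRay(Vec3 origin, Vec3 dir,
                     const DistanceField& detail, const DistanceField& global,
                     float detailRange, float maxDistance) {
    assert(std::fabs(Length(dir) - 1.0f) < 1e-3f);  // direction must be normalized
    const float hitEpsilon = 0.01f;
    float t = 0.0f;
    for (int step = 0; step < 256 && t < maxDistance; ++step) {
        const bool useDetail = t < detailRange;
        const float d = (useDetail ? detail : global)(Add(origin, Scale(dir, t)));
        if (d < hitEpsilon) return {true, t, useDetail};
        t += d;
    }
    return {false, t, false};
}

// Example field: a sphere with the given center and radius.
DistanceField MakeSphere(Vec3 center, float radius) {
    return [=](Vec3 p) { return Length(Add(p, Scale(center, -1.0f))) - radius; };
}
```

Passing the same field for both arguments with detailRange set to 0 corresponds to the Global Tracing mode in this sketch: every sample comes from the global field.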

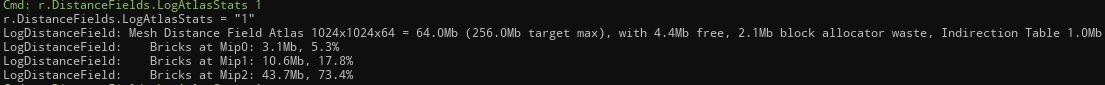

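Mesh distance fields are streamed in and out dynamically as the camera moves through the world. They are packed into an atlas, whose statistics can be printed with the console command r.DistanceFields.LogAtlasStats 1: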

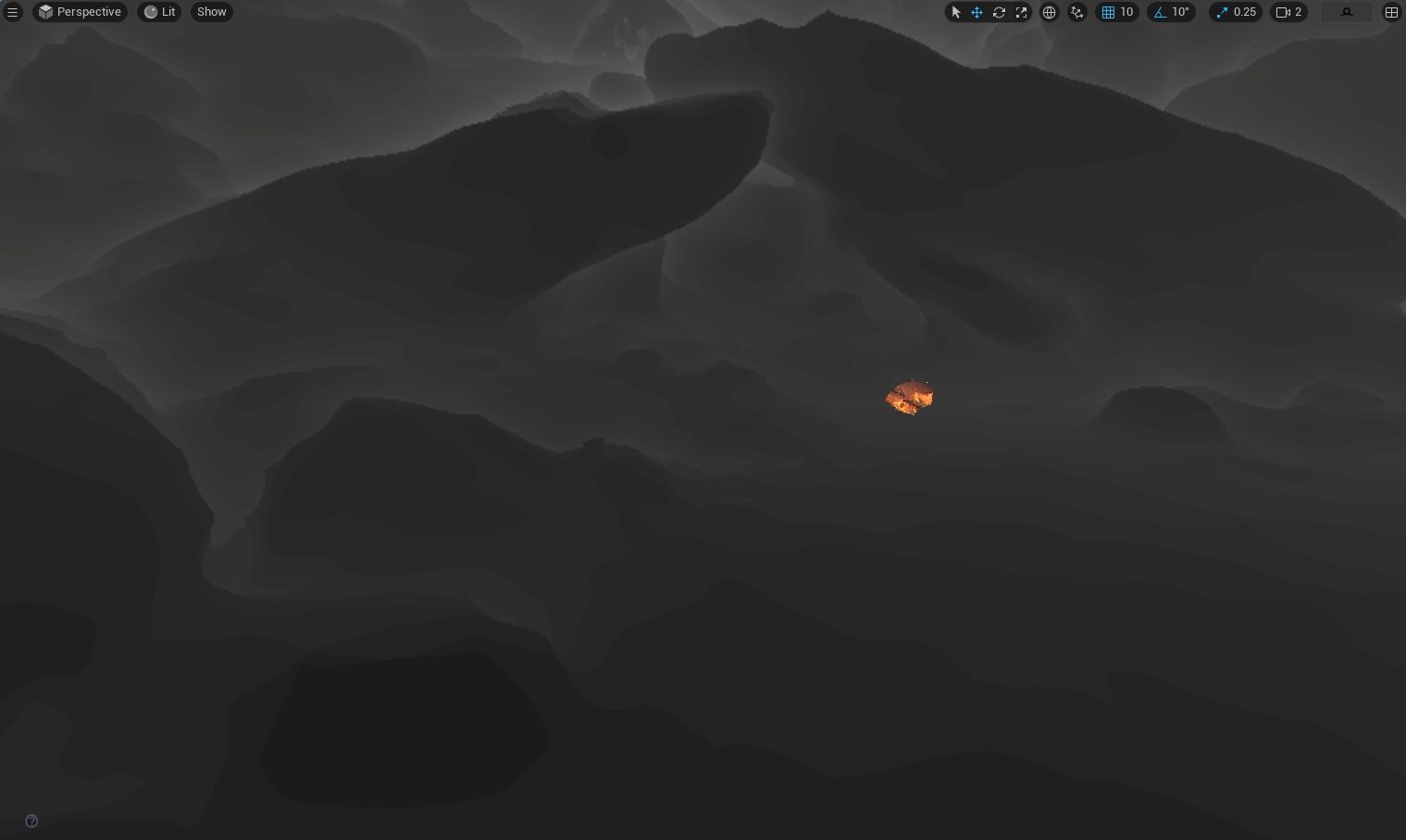

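Because the quality of Lumen's software ray tracing depends heavily on mesh distance fields, paying attention to their quality can improve Lumen's GI. The figure below shows the menu for displaying the mesh distance fields and the Global Distance Field:

The next two figures visualize the mesh distance fields and the Global Distance Field, respectively:

However, software ray tracing has a number of limitations, mainly: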

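- Geometry limitations:

  - The Lumen Scene only supports static meshes, instanced static meshes, and Hierarchical Instanced Static Meshes.

  - Landscape geometry is not supported, so it contributes no indirect bounce lighting. Support is planned for the future.

- Material limitations:

  - World Position Offset (WPO) is not supported.

  - Translucent materials are not supported, and Masked materials are treated as opaque.

  - Distance field data is built from the material properties of the static mesh asset, not from component overrides, so changing materials at runtime does not affect Lumen's GI.

- Workflow limitations:

  - Software ray tracing requires levels to be built from modular pieces. Walls, floors, and ceilings should be separate meshes. Larger meshes (such as mountains) are poorly represented and may cause self-occlusion artifacts.

  - Walls should be thicker than 10 cm to avoid light leaking.

  - Distance field resolution depends on the static mesh import settings; if compression is too aggressive, high-quality distance field data cannot be produced.

  - Distance fields cannot represent very thin objects.

That concludes Lumen's software ray tracing; hardware ray tracing is covered next.

- Hardware ray tracing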

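Hardware ray tracing supports a wider range of geometry types than software ray tracing; in particular, it supports tracing against skinned meshes. It also achieves higher image quality: it intersects the actual triangles and has the option of evaluating lighting at the ray hit point instead of reading the lower-quality Surface Cache.

However, hardware ray tracing has a high scene setup cost and currently does not scale to scenes with more than 100,000 instances. Dynamically deforming meshes (such as skinned meshes) also incur a large cost for updating the ray tracing acceleration structures every frame, proportional to the number of skinned triangles.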

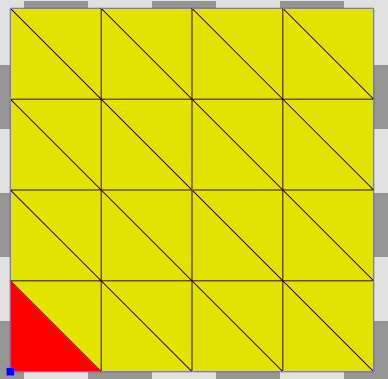

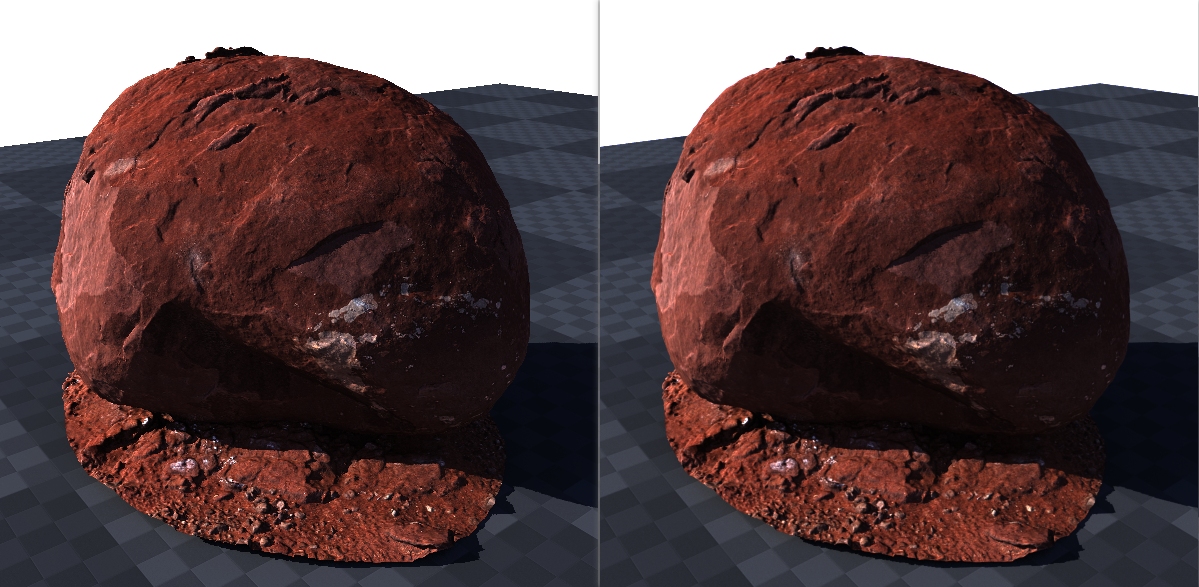

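For static meshes that use Nanite, hardware ray tracing can, for efficiency, only operate on the Proxy Mesh generated from Nanite's Proxy Triangle Percent setting in the Static Mesh Editor. These Proxy Meshes can be visualized by toggling the console command r.Nanite 0|1:

Top: full-detail triangle mesh; bottom: the corresponding Nanite proxy mesh.

Screen traces are used to cover the mismatch between the full-detail triangle mesh rendered by Nanite and the proxy mesh that Lumen traces rays against. In some cases, however, the mismatch is too large to hide. In the two images above, a Proxy Triangle Percent value that is too small causes the self-shadowing artifacts.

Lumen uses hardware ray tracing only when all of the following conditions are met:

- Use Hardware Ray Tracing when available and Support Hardware Ray Tracing are enabled in the project settings.

- The project runs on a supported operating system, RHI, and graphics card. Currently only the following platforms support hardware ray tracing:

  - Windows 10 with DirectX 12.

  - PlayStation 5.

  - Xbox Series S / X.

- The graphics card must be an NVIDIA RTX 2000 series or newer, or an AMD RX 6000 series or newer.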

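6.5.1.4 Other Notes on Lumen

The Lumen Scene operates on the world around the camera rather than on the whole world, which enables large worlds and streaming. Lumen relies on Nanite's LOD and multi-view rasterization to capture the scene quickly and maintain the Surface Cache, with all operations throttled to prevent hitches. Lumen does not require Nanite to work, but scene capture becomes very slow in scenes that do not use Nanite, especially when assets lack well-configured LODs.

Lumen's Surface Cache covers positions up to 200 meters from the camera. Beyond that range, only screen traces are active for global illumination.

In addition, Lumen has other limitations:

- Lumen global illumination cannot be used together with lightmaps. In the future, Lumen's reflections should be extended to work with lightmap global illumination, which would further improve rendering quality.

- Foliage is not yet well supported because it relies heavily on downsampled rendering and temporal filtering.

- Lumen's Final Gather can add noticeable noise around moving objects; it is still under active development.

- Translucent materials do not yet support Lumen reflections.

- Translucent materials do not get high-quality dynamic GI.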

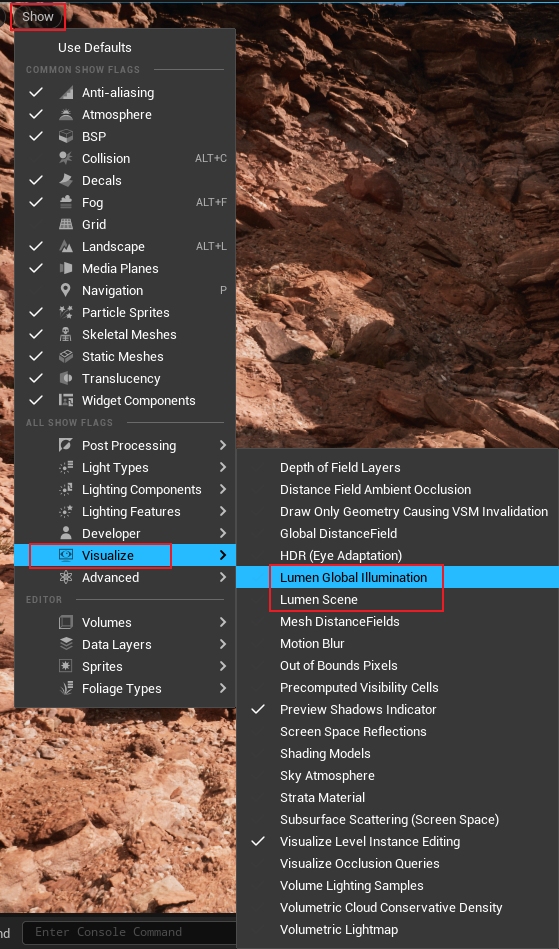

以下是Lumen相关的调试或可视化信息:

上:正常画面;中:Lumen Scene可视化;下:Lumen GI可视化。

当然,除了以上出现的几个可视化选项,实际上Lumen还有很多其它可视化控制命令:

r.Lumen.RadianceCache.Visualize

r.Lumen.RadianceCache.VisualizeClipmapIndex

r.Lumen.RadianceCache.VisualizeProbeRadius

r.Lumen.RadianceCache.VisualizeRadiusScale

r.Lumen.ScreenProbeGather.VisualizeTraces

r.Lumen.ScreenProbeGather.VisualizeTracesFreeze

r.Lumen.Visualize.CardInterpolateInfluenceRadius

r.Lumen.Visualize.CardPlacement

r.Lumen.Visualize.CardPlacementDistance

r.Lumen.Visualize.CardPlacementIndex

r.Lumen.Visualize.CardPlacementOrientation

r.Lumen.Visualize.ClipmapIndex

r.Lumen.Visualize.ConeAngle

r.Lumen.Visualize.ConeStepFactor

r.Lumen.Visualize.GridPixelSize

r.Lumen.Visualize.HardwareRayTracing

r.Lumen.Visualize.HardwareRayTracing.DeferredMaterial

r.Lumen.Visualize.HardwareRayTracing.DeferredMaterial.TileDimension

r.Lumen.Visualize.HardwareRayTracing.LightingMode

r.Lumen.Visualize.HardwareRayTracing.MaxTranslucentSkipCount

r.Lumen.Visualize.MaxMeshSDFTraceDistance

r.Lumen.Visualize.MaxTraceDistance

r.Lumen.Visualize.MinTraceDistance

r.Lumen.Visualize.Stats

r.Lumen.Visualize.TraceMeshSDFs

r.Lumen.Visualize.TraceRadianceCache

r.Lumen.Visualize.VoxelFaceIndex

r.Lumen.Visualize.Voxels

r.Lumen.Visualize.VoxelStepFactor

ShowFlag.LumenGlobalIllumination

ShowFlag.LumenReflections

ShowFlag.VisualizeLumenIndirectDiffuse

ShowFlag.VisualizeLumenScene

There are also many control commands; some of them are listed below:

r.Lumen.DiffuseIndirect.Allow

r.Lumen.DiffuseIndirect.CardInterpolateInfluenceRadius

r.Lumen.DiffuseIndirect.CardTraceEndDistanceFromCamera

r.Lumen.DirectLighting

r.Lumen.DirectLighting.BatchSize

r.Lumen.DirectLighting.CardUpdateFrequencyScale

r.Lumen.HardwareRayTracing

r.Lumen.HardwareRayTracing.PullbackBias

r.Lumen.IrradianceFieldGather

r.Lumen.IrradianceFieldGather.ClipmapDistributionBase

r.Lumen.IrradianceFieldGather.ClipmapWorldExtent

r.Lumen.MaxConeSteps

r.Lumen.MaxTraceDistance

r.Lumen.ProbeHierarchy

r.Lumen.ProbeHierarchy.AdditionalSpecularRayThreshold

r.Lumen.ProbeHierarchy.AntiTileAliasing

r.Lumen.RadianceCache.DownsampleDistanceFromCamera

r.Lumen.RadianceCache.ForceFullUpdate

r.Lumen.RadianceCache.NumFramesToKeepCachedProbes

r.Lumen.Radiosity

r.Lumen.Radiosity.CardUpdateFrequencyScale

r.Lumen.Radiosity.ComputeScatter

r.Lumen.Radiosity.ConeAngleScale

r.Lumen.Reflections.Allow

r.Lumen.Reflections.DownsampleFactor

r.Lumen.Reflections.GGXSamplingBias

r.Lumen.Reflections.HardwareRayTracing

r.Lumen.Reflections.HardwareRayTracing.DeferredMaterial

r.Lumen.Reflections.HierarchicalScreenTraces.UncertainTraceRelativeDepthThreshold

r.Lumen.Reflections.MaxRayIntensity

r.Lumen.Reflections.MaxRoughnessToTrace

r.Lumen.Reflections.RoughnessFadeLength

r.Lumen.Reflections.ScreenSpaceReconstruction

r.Lumen.Reflections.ScreenTraces

r.Lumen.Reflections.Temporal

r.Lumen.Reflections.Temporal.DistanceThreshold

r.Lumen.Reflections.Temporal.HistoryWeight

r.Lumen.Reflections.TraceMeshSDFs

r.Lumen.ScreenProbeGather

r.Lumen.ScreenProbeGather.AdaptiveProbeAllocationFraction

r.Lumen.ScreenProbeGather.AdaptiveProbeMinDownsampleFactor

r.Lumen.ScreenProbeGather.DiffuseIntegralMethod

r.Lumen.ScreenProbeGather.DownsampleFactor

r.Lumen.ScreenProbeGather.FixedJitterIndex

r.Lumen.ScreenProbeGather.FullResolutionJitterWidth

r.Lumen.ScreenProbeGather.GatherNumMips

r.Lumen.ScreenProbeGather.GatherOctahedronResolutionScale

r.Lumen.ScreenProbeGather.HardwareRayTracing

r.Lumen.ScreenProbeGather.ImportanceSample.ProbeRadianceHistory

r.Lumen.ScreenProbeGather.MaxRayIntensity

r.Lumen.ScreenProbeGather.OctahedralSolidAngleTextureSize

r.Lumen.ScreenProbeGather.RadianceCache

r.Lumen.ScreenProbeGather.RadianceCache.ClipmapDistributionBase

r.Lumen.ScreenProbeGather.ReferenceMode

r.Lumen.ScreenProbeGather.ScreenSpaceBentNormal

r.Lumen.ScreenProbeGather.ScreenTraces

r.Lumen.ScreenProbeGather.ScreenTraces.HZBTraversal

r.Lumen.ScreenProbeGather.SpatialFilterHalfKernelSize

r.Lumen.ScreenProbeGather.SpatialFilterMaxRadianceHitAngle

r.Lumen.ScreenProbeGather.Temporal

r.Lumen.ScreenProbeGather.Temporal.ClearHistoryEveryFrame

r.Lumen.ScreenProbeGather.TraceMeshSDFs

r.Lumen.ScreenProbeGather.TracingOctahedronResolution

r.Lumen.TraceMeshSDFs

r.Lumen.TraceMeshSDFs.Allow

r.Lumen.TranslucencyVolume.ConeAngleScale

r.Lumen.TranslucencyVolume.Enable

r.Lumen.TranslucencyVolume.EndDistanceFromCamera

r.LumenParallelBeginUpdate

r.LumenScene.CardAtlasAllocatorBinSize

r.LumenScene.CardAtlasSize

r.LumenScene.CardCameraDistanceTexelDensityScale

r.LumenScene.CardCaptureMargin

r.LumenScene.ClipmapResolution

r.LumenScene.ClipmapWorldExtent

r.LumenScene.ClipmapZResolutionDivisor

r.LumenScene.DiffuseReflectivityOverride

r.LumenScene.DistantScene

r.LumenScene.DistantScene.CardResolution

r.LumenScene.FastCameraMode

r.LumenScene.GlobalDFClipmapExtent

r.LumenScene.GlobalDFResolution

r.LumenScene.HeightfieldSlopeThreshold

r.LumenScene.MaxInstanceAddsPerFrame

r.LumenScene.MeshCardsCullFaces

r.LumenScene.MeshCardsMaxLOD

r.LumenScene.NaniteMultiViewCapture

r.LumenScene.NumClipmapLevels

r.LumenScene.PrimitivesPerPacket

r.LumenScene.RecaptureEveryFrame

r.LumenScene.Reset

r.LumenScene.UploadCardBufferEveryFrame

r.LumenScene.VoxelLightingAverageObjectsPerVisBufferTile

r.SSGI.AllowStandaloneLumenProbeHierarchy

r.Water.SingleLayer.LumenReflections

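Lumen has more than a hundred console commands, which shows how complex Lumen rendering is.

6.5.2 Lumen Rendering Basics

This section describes the basic concepts and types related to Lumen.

6.5.2.1 FLumenCard

FLumenCard is the Card mentioned in the previous section and is the basic building block of FLumenMeshCards.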

// Engine\Source\Runtime\Renderer\Private\Lumen\LumenSceneData.h

// Lumen card type.

class FLumenCard

{

public:

FLumenCard();

~FLumenCard();

// World-space bounding box.

FBox WorldBounds;

// Rotation (the card's axes expressed in world space).

FVector LocalToWorldRotationX;

FVector LocalToWorldRotationY;

FVector LocalToWorldRotationZ;

// Position.

FVector Origin;

// Local-space extent.

FVector LocalExtent;

// Whether the card is visible.

bool bVisible = false;

// Whether the card belongs to the distant scene.

bool bDistantScene = false;

// Atlas allocation information.

bool bAllocated = false;

FIntPoint DesiredResolution;

FIntRect AtlasAllocation;

// Orientation.

int32 Orientation = -1;

// Index in the visible card index buffer.

int32 IndexInVisibleCardIndexBuffer = -1;

// Index within the card list of the owning FLumenMeshCards.

int32 IndexInMeshCards = -1;

// Index of the owning FLumenMeshCards.

int32 MeshCardsIndex = -1;

// Resolution scale.

float ResolutionScale = 1.0f;

// Initialization.

void Initialize(float InResolutionScale, const FMatrix& LocalToWorld, const FLumenCardBuildData& CardBuildData, int32 InIndexInMeshCards, int32 InMeshCardsIndex);

// Set the transform data.

void SetTransform(const FMatrix& LocalToWorld, FVector CardLocalCenter, FVector CardLocalExtent, int32 InOrientation);

void SetTransform(const FMatrix& LocalToWorld, const FVector& LocalOrigin, const FVector& CardToLocalRotationX, const FVector& CardToLocalRotationY, const FVector& CardToLocalRotationZ, const FVector& InLocalExtent);

// Remove from the atlas (scene).

void RemoveFromAtlas(FLumenSceneData& LumenSceneData);

int32 GetNumTexels() const

{

return AtlasAllocation.Area();

}

inline FVector TransformWorldPositionToCardLocal(FVector WorldPosition) const

{

FVector Offset = WorldPosition - Origin;

return FVector(Offset | LocalToWorldRotationX, Offset | LocalToWorldRotationY, Offset | LocalToWorldRotationZ);

}

inline FVector TransformCardLocalPositionToWorld(FVector CardPosition) const

{

return Origin + CardPosition.X * LocalToWorldRotationX + CardPosition.Y * LocalToWorldRotationY + CardPosition.Z * LocalToWorldRotationZ;

}

};
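The two inline transforms at the end of FLumenCard are inverses of each other as long as the three rotation axes are orthonormal (in FVector, operator| is the dot product). The following standalone sketch reproduces the same math with a minimal vector type; Vec3 and CardFrame are hypothetical names for this example, not engine types.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator*(float s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
inline float Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Mirrors Origin and LocalToWorldRotationX/Y/Z of FLumenCard.
struct CardFrame {
    Vec3 origin;
    Vec3 axisX, axisY, axisZ;  // assumed orthonormal, expressed in world space

    // World -> card local: project the offset from the origin onto each axis.
    Vec3 WorldToLocal(Vec3 worldPosition) const {
        const Vec3 offset = worldPosition - origin;
        return {Dot(offset, axisX), Dot(offset, axisY), Dot(offset, axisZ)};
    }

    // Card local -> world: recombine the axes weighted by the local coordinates.
    Vec3 LocalToWorld(Vec3 cardPosition) const {
        return origin + cardPosition.x * axisX + cardPosition.y * axisY + cardPosition.z * axisZ;
    }
};

inline bool NearlyEqual(Vec3 a, Vec3 b) {
    return std::fabs(a.x - b.x) < 1e-4f && std::fabs(a.y - b.y) < 1e-4f && std::fabs(a.z - b.z) < 1e-4f;
}
```

For a card rotated 90 degrees about Z with its origin at (10, 20, 30), the world point (10, 25, 30) maps to the local point (5, 0, 0), and transforming that local point back returns the original world point.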

6.5.2.2 FLumenMeshCards

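FLumenMeshCards is the basic element for computing the Surface Cache and the basic unit of the Lumen Scene. It stores FLumenCard information for up to six faces (orientations), and each orientation can hold 0 to N FLumenCards (given by NumCardsPerOrientation).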

// Engine\Source\Runtime\Renderer\Private\Lumen\LumenMeshCards.h

class FLumenMeshCards

{

public:

// Initialization.

void Initialize(

const FMatrix& InLocalToWorld,

const FBox& InBounds,

uint32 InFirstCardIndex,

uint32 InNumCards,

uint32 InNumCardsPerOrientation[6],

uint32 InCardOffsetPerOrientation[6])

{

Bounds = InBounds;

SetTransform(InLocalToWorld);

FirstCardIndex = InFirstCardIndex;

NumCards = InNumCards;

for (uint32 OrientationIndex = 0; OrientationIndex < 6; ++OrientationIndex)

{

NumCardsPerOrientation[OrientationIndex] = InNumCardsPerOrientation[OrientationIndex];

CardOffsetPerOrientation[OrientationIndex] = InCardOffsetPerOrientation[OrientationIndex];

}

}

// Set the transform matrix.

void SetTransform(const FMatrix& InLocalToWorld)

{

LocalToWorld = InLocalToWorld;

}

// Local-to-world matrix.

FMatrix LocalToWorld;

// Local bounding box.

FBox Bounds;

// Index of the first FLumenCard.

uint32 FirstCardIndex = 0;

// Number of FLumenCards.

uint32 NumCards = 0;

// Number of FLumenCards for each of the 6 orientations.

uint32 NumCardsPerOrientation[6];

// FLumenCard offset for each of the 6 orientations.

uint32 CardOffsetPerOrientation[6];

};
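The listing does not show how FirstCardIndex, NumCardsPerOrientation, and CardOffsetPerOrientation are combined. A plausible reading, stated here as an assumption, is that the cards of one mesh are stored contiguously and grouped by orientation, with each offset relative to FirstCardIndex. The sketch below builds such a layout with a prefix sum; MeshCardsLayout, BuildLayout, and GlobalCardIndex are hypothetical names, not engine functions.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical mirror of the index fields in FLumenMeshCards.
struct MeshCardsLayout {
    uint32_t firstCardIndex = 0;
    uint32_t numCards = 0;
    std::array<uint32_t, 6> numCardsPerOrientation{};
    std::array<uint32_t, 6> cardOffsetPerOrientation{};
};

// Counts the cards per orientation, then turns the counts into offsets with a
// prefix sum (assumption: offsets are relative to firstCardIndex).
MeshCardsLayout BuildLayout(uint32_t firstCardIndex, const std::vector<int>& cardOrientations) {
    MeshCardsLayout layout;
    layout.firstCardIndex = firstCardIndex;
    layout.numCards = static_cast<uint32_t>(cardOrientations.size());
    for (int orientation : cardOrientations) {
        assert(orientation >= 0 && orientation < 6);
        ++layout.numCardsPerOrientation[orientation];
    }
    uint32_t offset = 0;
    for (int orientation = 0; orientation < 6; ++orientation) {
        layout.cardOffsetPerOrientation[orientation] = offset;
        offset += layout.numCardsPerOrientation[orientation];
    }
    return layout;
}

// Global index of the i-th card that faces `orientation`.
uint32_t GlobalCardIndex(const MeshCardsLayout& layout, int orientation, uint32_t i) {
    assert(orientation >= 0 && orientation < 6);
    assert(i < layout.numCardsPerOrientation[orientation]);
    return layout.firstCardIndex + layout.cardOffsetPerOrientation[orientation] + i;
}
```

Under this layout, finding all cards that face a given direction is a contiguous range lookup, which is convenient when a ray hit only needs the cards whose orientation matches the surface normal.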

6.5.2.3 FLumenSceneData

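FLumenSceneData is the scene representation that Lumen uses for global illumination. It does not use Nanite's high-detail meshes but a coarse scene built from FLumenCard and FLumenMeshCards. Its definition and related types are as follows: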

// Engine\Source\Runtime\Renderer\Private\Lumen\LumenSceneData.h

// Lumen primitive instance.

class FLumenPrimitiveInstance

{

public:

FBox WorldSpaceBoundingBox;

// FLumenMeshCards index.

int32 MeshCardsIndex;

bool bValidMeshCards;

};

// Lumen primitive.

class FLumenPrimitive

{

public:

// World-space bounding box.

FBox WorldSpaceBoundingBox;

// Maximum extent of the FLumenMeshCards belonging to this primitive, used for early culling.

float MaxCardExtent;

// List of primitive instances.

TArray<FLumenPrimitiveInstance, TInlineAllocator<1>> Instances;

// The corresponding primitive info in the real scene.

FPrimitiveSceneInfo* Primitive = nullptr;

// Whether the instances are merged.

bool bMergedInstances = false;

// Card resolution scale.

float CardResolutionScale = 1.0f;

// Number of FLumenMeshCards.

int32 NumMeshCards = 0;

// Mapping into LumenDFInstanceToDFObjectIndex.

uint32 LumenDFInstanceOffset = UINT32_MAX;

int32 LumenNumDFInstances = 0;

// Get the FLumenMeshCards index.

int32 GetMeshCardsIndex(int32 InstanceIndex) const

{

if (bMergedInstances)

{

return Instances[0].MeshCardsIndex;

}

if (InstanceIndex < Instances.Num())

{

return Instances[InstanceIndex].MeshCardsIndex;

}

return -1;

}

};

// Lumen scene data.

class FLumenSceneData

{

public:

int32 Generation;

// Buffers for uploading to the GPU.

FScatterUploadBuffer CardUploadBuffer;

FScatterUploadBuffer UploadMeshCardsBuffer;

FScatterUploadBuffer ByteBufferUploadBuffer;

FScatterUploadBuffer UploadPrimitiveBuffer;

FUniqueIndexList CardIndicesToUpdateInBuffer;

FRWBufferStructured CardBuffer;

TArray<FBox> PrimitiveModifiedBounds;

// All Lumen primitives in the Lumen scene.

TArray<FLumenPrimitive> LumenPrimitives;

// FLumenMeshCards data.

FUniqueIndexList MeshCardsIndicesToUpdateInBuffer;

TSparseSpanArray<FLumenMeshCards> MeshCards;

TSparseSpanArray<FLumenCard> Cards;

TArray<int32, TInlineAllocator<8>> DistantCardIndices;

FRWBufferStructured MeshCardsBuffer;

FRWByteAddressBuffer DFObjectToMeshCardsIndexBuffer;

// Mapping from primitives to LumenDFInstance.

FUniqueIndexList PrimitivesToUpdate;

FRWByteAddressBuffer PrimitiveToDFLumenInstanceOffsetBuffer;

uint32 PrimitiveToLumenDFInstanceOffsetBufferSize = 0;

// Mapping from LumenDFInstance to DFObjectIndex.

FUniqueIndexList DFObjectIndicesToUpdateInBuffer;

FUniqueIndexList LumenDFInstancesToUpdate;

TSparseSpanArray<int32> LumenDFInstanceToDFObjectIndex;

FRWByteAddressBuffer LumenDFInstanceToDFObjectIndexBuffer;

uint32 LumenDFInstanceToDFObjectIndexBufferSize = 0;

// List of visible FLumenCard indices.

TArray<int32> VisibleCardsIndices;

TRefCountPtr<FRDGPooledBuffer> VisibleCardsIndexBuffer;

// --- Data captured from the triangle scene ---

TRefCountPtr<IPooledRenderTarget> AlbedoAtlas;

TRefCountPtr<IPooledRenderTarget> NormalAtlas;

TRefCountPtr<IPooledRenderTarget> EmissiveAtlas;

// --- Generated data ---

TRefCountPtr<IPooledRenderTarget> DepthAtlas;

TRefCountPtr<IPooledRenderTarget> FinalLightingAtlas;

TRefCountPtr<IPooledRenderTarget> IrradianceAtlas;

TRefCountPtr<IPooledRenderTarget> IndirectIrradianceAtlas;

TRefCountPtr<IPooledRenderTarget> RadiosityAtlas;

TRefCountPtr<IPooledRenderTarget> OpacityAtlas;

// Other data.

bool bFinalLightingAtlasContentsValid;

FIntPoint MaxAtlasSize;

FBinnedTextureLayout AtlasAllocator;

int32 NumCardTexels = 0;

int32 NumMeshCardsToAddToSurfaceCache = 0;

// Pending primitive add/update/remove data.

bool bTrackAllPrimitives;

TSet<FPrimitiveSceneInfo*> PendingAddOperations;

TSet<FPrimitiveSceneInfo*> PendingUpdateOperations;

TArray<FLumenPrimitiveRemoveInfo> PendingRemoveOperations;

FLumenSceneData(EShaderPlatform ShaderPlatform, EWorldType::Type WorldType);

~FLumenSceneData();

// Primitive add/update/remove operations.

void AddPrimitiveToUpdate(int32 PrimitiveIndex);

void AddPrimitive(FPrimitiveSceneInfo* InPrimitive);

void UpdatePrimitive(FPrimitiveSceneInfo* InPrimitive);

void RemovePrimitive(FPrimitiveSceneInfo* InPrimitive, int32 PrimitiveIndex);

// Add/remove visible cards and FLumenMeshCards.

void AddCardToVisibleCardList(int32 CardIndex);

void RemoveCardFromVisibleCardList(int32 CardIndex);

void AddMeshCards(int32 LumenPrimitiveIndex, int32 LumenInstanceIndex);

void UpdateMeshCards(const FMatrix& LocalToWorld, int32 MeshCardsIndex, const FMeshCardsBuildData& MeshCardsBuildData);

void RemoveMeshCards(FLumenPrimitive& LumenPrimitive, FLumenPrimitiveInstance& LumenPrimitiveInstance);

bool HasPendingOperations() const

{

return PendingAddOperations.Num() > 0 || PendingUpdateOperations.Num() > 0 || PendingRemoveOperations.Num() > 0;

}

void UpdatePrimitiveToDistanceFieldInstanceMapping(FScene& Scene, FRHICommandListImmediate& RHICmdList);

private:

// Add FLumenMeshCards from build data.

int32 AddMeshCardsFromBuildData(const FMatrix& LocalToWorld, const FMeshCardsBuildData& MeshCardsBuildData, float ResolutionScale);

};

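This shows that FLumenSceneData stores the FLumenMeshCards together with the FLumenPrimitive and FLumenPrimitiveInstance types built on top of them. Each FLumenPrimitive refers to several FLumenMeshCards through its instances and also stores an FPrimitiveSceneInfo pointer that identifies which FPrimitiveSceneInfo in the real scene it coarsely represents.

6.5.3 Lumen Data Building

Before actual rendering, Lumen performs a lot of data building, including generating the Mesh Distance Fields, the Global Distance Field, and the MeshCards.

The first time a Lumen project is launched, a lot of data is built, including the mesh distance fields.

6.5.3.1 CardRepresentation

To build the mesh card representation, UE5 split out a MeshCardRepresentation module, whose core concepts and types are as follows: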

// Engine\Source\Runtime\Engine\Public\MeshCardRepresentation.h

// FLumenCard build data.

class FLumenCardBuildData

{

public:

// Center and extent.

FVector Center;

FVector Extent;

// Orientation order: -X, +X, -Y, +Y, -Z, +Z

int32 Orientation;

int32 LODLevel;

// Rotate the extent according to the orientation.

static FVector TransformFaceExtent(FVector Extent, int32 Orientation)

{

if (Orientation / 2 == 2) // Orientation: -Z, +Z

{

return FVector(Extent.Y, Extent.X, Extent.Z);

}

else if (Orientation / 2 == 1) // Orientation: -Y, +Y

{

return FVector(Extent.Z, Extent.X, Extent.Y);

}

else // (Orientation / 2 == 0), orientation: -X, +X

{

return FVector(Extent.Y, Extent.Z, Extent.X);

}

}

};

// FLumenMeshCards build data.

class FMeshCardsBuildData

{

public:

FBox Bounds;

int32 MaxLODLevel;

// List of FLumenCard build data.

TArray<FLumenCardBuildData> CardBuildData;

(......)

};

// Unique id for each card representation data instance.

class FCardRepresentationDataId

{

public:

uint32 Value = 0;

bool IsValid() const

{

return Value != 0;

}

bool operator==(FCardRepresentationDataId B) const

{

return Value == B.Value;

}

friend uint32 GetTypeHash(FCardRepresentationDataId DataId)

{

return GetTypeHash(DataId.Value);

}

};

// Payload and output data of the mesh card representation build.

class FCardRepresentationData : public FDeferredCleanupInterface

{

public:

// Mesh cards build data and id.

FMeshCardsBuildData MeshCardsBuildData;

FCardRepresentationDataId CardRepresentationDataId;

(......)

#if WITH_EDITORONLY_DATA

// Cache the card representation data.

void CacheDerivedData(const FString& InDDCKey, const ITargetPlatform* TargetPlatform, UStaticMesh* Mesh, UStaticMesh* GenerateSource, bool bGenerateDistanceFieldAsIfTwoSided, FSourceMeshDataForDerivedDataTask* OptionalSourceMeshData);

#endif

};

// Build task worker.

class FAsyncCardRepresentationTaskWorker : public FNonAbandonableTask

{

public:

(.....)

void DoWork();

private:

FAsyncCardRepresentationTask& Task;

};

// Data carrier for a build task.

class FAsyncCardRepresentationTask

{

public:

bool bSuccess = false;

#if WITH_EDITOR

TArray<FSignedDistanceFieldBuildMaterialData> MaterialBlendModes;

#endif

FSourceMeshDataForDerivedDataTask SourceMeshData;

bool bGenerateDistanceFieldAsIfTwoSided = false;

UStaticMesh* StaticMesh = nullptr;

UStaticMesh* GenerateSource = nullptr;

FString DDCKey;

FCardRepresentationData* GeneratedCardRepresentation;

TUniquePtr<FAsyncTask<FAsyncCardRepresentationTaskWorker>> AsyncTask = nullptr;

};

// Manages the asynchronous building of mesh card representations.

class FCardRepresentationAsyncQueue : public FGCObject

{

public:

// Add a new build task.

ENGINE_API void AddTask(FAsyncCardRepresentationTask* Task);

// Process asynchronous tasks.

ENGINE_API void ProcessAsyncTasks(bool bLimitExecutionTime = false);

// Cancel builds.

ENGINE_API void CancelBuild(UStaticMesh* StaticMesh);

ENGINE_API void CancelAllOutstandingBuilds();

// Block until builds complete.

ENGINE_API void BlockUntilBuildComplete(UStaticMesh* StaticMesh, bool bWarnIfBlocked);

ENGINE_API void BlockUntilAllBuildsComplete();

(......)

};

// Global build queue.

extern ENGINE_API FCardRepresentationAsyncQueue* GCardRepresentationAsyncQueue;

extern ENGINE_API FString BuildCardRepresentationDerivedDataKey(const FString& InMeshKey);

extern ENGINE_API void BeginCacheMeshCardRepresentation(const ITargetPlatform* TargetPlatform, UStaticMesh* StaticMeshAsset, class FStaticMeshRenderData& RenderData, const FString& DistanceFieldKey, FSourceMeshDataForDerivedDataTask* OptionalSourceMeshData);
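The orientation encoding used by FLumenCardBuildData packs an axis and a sign into one integer: Orientation / 2 selects the axis (0 = X, 1 = Y, 2 = Z) and, following the order in the listing's comment, odd values are the positive direction. The standalone sketch below repeats the swizzle from TransformFaceExtent with a minimal vector type to show that the extent along the card's facing axis always ends up in the Z component. Vec3, AxisOf, and IsPositive are hypothetical helpers for this example.

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };

// Orientation order from the listing: 0:-X, 1:+X, 2:-Y, 3:+Y, 4:-Z, 5:+Z.
inline int AxisOf(int orientation) {
    assert(orientation >= 0 && orientation < 6);
    return orientation / 2;  // 0 = X, 1 = Y, 2 = Z
}

inline bool IsPositive(int orientation) {
    assert(orientation >= 0 && orientation < 6);
    return orientation % 2 == 1;
}

// Same component swizzle as FLumenCardBuildData::TransformFaceExtent.
inline Vec3 TransformFaceExtent(Vec3 extent, int orientation) {
    switch (AxisOf(orientation)) {
        case 2:  return {extent.y, extent.x, extent.z};  // facing -Z / +Z
        case 1:  return {extent.z, extent.x, extent.y};  // facing -Y / +Y
        default: return {extent.y, extent.z, extent.x};  // facing -X / +X
    }
}
```

For an extent of (1, 2, 3), a card facing +X (orientation 1) yields (2, 3, 1): the X extent, which is the card's depth, moves into Z, and the remaining two components span the card's face.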

6.5.3.2 GCardRepresentationAsyncQueue

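To build the data Lumen needs, UE5 declares two global queue variables: GCardRepresentationAsyncQueue and GDistanceFieldAsyncQueue. The former builds Lumen Card data and the latter builds distance field data. They are created and updated as follows: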

// Engine\Source\Runtime\Launch\Private\LaunchEngineLoop.cpp

int32 FEngineLoop::PreInitPreStartupScreen(const TCHAR* CmdLine)

{

(......)

if (!FPlatformProperties::RequiresCookedData())

{

(......)

// Create the global async queues.

GDistanceFieldAsyncQueue = new FDistanceFieldAsyncQueue();

GCardRepresentationAsyncQueue = new FCardRepresentationAsyncQueue();

(......)

}

(......)

}

void FEngineLoop::Tick()

{

(......)

// Update the global async queues every frame.

if (GDistanceFieldAsyncQueue)

{

QUICK_SCOPE_CYCLE_COUNTER(STAT_FEngineLoop_Tick_GDistanceFieldAsyncQueue);

GDistanceFieldAsyncQueue->ProcessAsyncTasks();

}

if (GCardRepresentationAsyncQueue)

{

QUICK_SCOPE_CYCLE_COUNTER(STAT_FEngineLoop_Tick_GCardRepresentationAsyncQueue);

GCardRepresentationAsyncQueue->ProcessAsyncTasks();

}

(......)

}

Because GDistanceFieldAsyncQueue already existed in UE4, this section skips it and focuses on GCardRepresentationAsyncQueue.
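The "enqueue once, poll every frame" pattern behind ProcessAsyncTasks can be sketched in a few lines. This is a simplified illustration, not the engine's implementation: BuildTask and AsyncBuildQueue are hypothetical types, and the worker thread that would set the done flag is left out so the sketch stays deterministic.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <functional>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical task record. A worker thread would fill `result` and then set `done`.
struct BuildTask {
    std::atomic<bool> done{false};
    int result = 0;
};

class AsyncBuildQueue {
public:
    explicit AsyncBuildQueue(std::function<void(const BuildTask&)> onComplete)
        : onComplete_(std::move(onComplete)) {}

    void AddTask(std::shared_ptr<BuildTask> task) {
        assert(task != nullptr);
        pending_.push_back(std::move(task));
    }

    // Called once per frame from the main loop. Finished tasks are finalized on
    // the calling thread; unfinished tasks stay queued and are never waited on.
    void ProcessAsyncTasks() {
        std::size_t kept = 0;
        for (std::size_t i = 0; i < pending_.size(); ++i) {
            if (pending_[i]->done.load(std::memory_order_acquire)) {
                onComplete_(*pending_[i]);
            } else {
                if (kept != i) pending_[kept] = std::move(pending_[i]);
                ++kept;
            }
        }
        pending_.resize(kept);
    }

    std::size_t NumPending() const { return pending_.size(); }

private:
    std::function<void(const BuildTask&)> onComplete_;
    std::vector<std::shared_ptr<BuildTask>> pending_;
};
```

Polling from the main loop keeps the expensive build off the game thread while still letting the results be applied at a well-defined point in the frame.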

As for when a CardRepresentation is added to the global build queue GCardRepresentationAsyncQueue, the answer can be found in MeshCardRepresentation.cpp:

FCardRepresentationAsyncQueue* GCardRepresentationAsyncQueue = NULL;

// Begin caching the mesh card representation.

void BeginCacheMeshCardRepresentation(const ITargetPlatform* TargetPlatform, UStaticMesh* StaticMeshAsset, FStaticMeshRenderData& RenderData, const FString& DistanceFieldKey, FSourceMeshDataForDerivedDataTask* OptionalSourceMeshData)

{

static const auto CVarCards = IConsoleManager::Get().FindTConsoleVariableDataInt(TEXT("r.MeshCardRepresentation"));

if (CVarCards->GetValueOnAnyThread() != 0)

{

FString Key = BuildCardRepresentationDerivedDataKey(DistanceFieldKey);

if (RenderData.LODResources.IsValidIndex(0))

{

// Create the FCardRepresentationData instance.

if (!RenderData.LODResources[0].CardRepresentationData)

{

RenderData.LODResources[0].CardRepresentationData = new FCardRepresentationData();

}

const FMeshBuildSettings& BuildSettings = StaticMeshAsset->GetSourceModel(0).BuildSettings;

UStaticMesh* MeshToGenerateFrom = StaticMeshAsset;

// Cache the FCardRepresentationData.

RenderData.LODResources[0].CardRepresentationData->CacheDerivedData(Key, TargetPlatform, StaticMeshAsset, MeshToGenerateFrom, BuildSettings.bGenerateDistanceFieldAsIfTwoSided, OptionalSourceMeshData);

}

}

}

// Cache the FCardRepresentationData.

void FCardRepresentationData::CacheDerivedData(const FString& InDDCKey, const ITargetPlatform* TargetPlatform, UStaticMesh* Mesh, UStaticMesh* GenerateSource, bool bGenerateDistanceFieldAsIfTwoSided, FSourceMeshDataForDerivedDataTask* OptionalSourceMeshData)

{

TArray<uint8> DerivedData;

(......)

{

COOK_STAT(Timer.TrackCyclesOnly());

// Create a new FAsyncCardRepresentationTask build task.

FAsyncCardRepresentationTask* NewTask = new FAsyncCardRepresentationTask;

NewTask->DDCKey = InDDCKey;

check(Mesh && GenerateSource);

NewTask->StaticMesh = Mesh;

NewTask->GenerateSource = GenerateSource;

NewTask->GeneratedCardRepresentation = new FCardRepresentationData();

NewTask->bGenerateDistanceFieldAsIfTwoSided = bGenerateDistanceFieldAsIfTwoSided;

// Collect the material blend modes.

for (int32 MaterialIndex = 0; MaterialIndex < Mesh->GetStaticMaterials().Num(); MaterialIndex++)

{

FSignedDistanceFieldBuildMaterialData MaterialData;

// Default material blend mode

MaterialData.BlendMode = BLEND_Opaque;

MaterialData.bTwoSided = false;

if (Mesh->GetStaticMaterials()[MaterialIndex].MaterialInterface)

{

MaterialData.BlendMode = Mesh->GetStaticMaterials()[MaterialIndex].MaterialInterface->GetBlendMode();

MaterialData.bTwoSided = Mesh->GetStaticMaterials()[MaterialIndex].MaterialInterface->IsTwoSided();

}

NewTask->MaterialBlendModes.Add(MaterialData);

}

// Nanite overrides the source static mesh with a coarse representation. The original data must be loaded before building the mesh SDF.

if (OptionalSourceMeshData)

{

NewTask->SourceMeshData = *OptionalSourceMeshData;

}

// For Nanite-enabled meshes, build the source vertex positions and triangle indices.

else if (Mesh->NaniteSettings.bEnabled)

{

IMeshBuilderModule& MeshBuilderModule = IMeshBuilderModule::GetForPlatform(TargetPlatform);

if (!MeshBuilderModule.BuildMeshVertexPositions(Mesh, NewTask->SourceMeshData.TriangleIndices, NewTask->SourceMeshData.VertexPositions))

{

UE_LOG(LogStaticMesh, Error, TEXT("Failed to build static mesh. See previous line(s) for details."));

}

}

// Add to the global queue GCardRepresentationAsyncQueue.

GCardRepresentationAsyncQueue->AddTask(NewTask);

}

}

6.5.3.3 GenerateCardRepresentationData

Following the call stack of FCardRepresentationAsyncQueue, it is easy to see that it eventually enters FMeshUtilities::GenerateCardRepresentationData, which performs the actual mesh card building:

// Engine\Source\Developer\MeshUtilities\Private\MeshCardRepresentationUtilities.cpp

bool FMeshUtilities::GenerateCardRepresentationData(

FString MeshName,

const FSourceMeshDataForDerivedDataTask& SourceMeshData,

const FStaticMeshLODResources& LODModel,

class FQueuedThreadPool& ThreadPool,

const TArray<FSignedDistanceFieldBuildMaterialData>& MaterialBlendModes,

const FBoxSphereBounds& Bounds,

const FDistanceFieldVolumeData* DistanceFieldVolumeData,

bool bGenerateAsIfTwoSided,

FCardRepresentationData& OutData)

{

// Build the Embree scene.

FEmbreeScene EmbreeScene;

MeshRepresentation::SetupEmbreeScene(MeshName,

SourceMeshData,

LODModel,

MaterialBlendModes,

bGenerateAsIfTwoSided,

EmbreeScene);

if (!EmbreeScene.EmbreeScene)

{

return false;

}

// Set up the build context.

FGenerateCardMeshContext Context(MeshName, EmbreeScene.EmbreeScene, EmbreeScene.EmbreeDevice, OutData);

// Build the mesh cards.

BuildMeshCards(DistanceFieldVolumeData ? DistanceFieldVolumeData->LocalSpaceMeshBounds : Bounds.GetBox(), Context, OutData);

MeshRepresentation::DeleteEmbreeScene(EmbreeScene);

(......)

return true;

}

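This shows that the mesh card build uses the third-party Embree library.

About Embree

Embree is an open-source library developed and maintained by Intel. It is a collection of high-performance ray tracing kernels that helps developers improve the performance of photorealistic rendering applications. Its features include advanced hair geometry, motion blur, dynamic scenes, and multi-level instancing.

Embree's implementation has the following characteristics:

- Its kernels are optimized for the latest Intel processors with support for SSE, AVX, AVX2, and AVX-512 instructions.

- It supports runtime code selection to choose the traversal and build algorithms that best match the CPU's instruction set.

- It supports applications written with the Intel SPMD Program Compiler (ISPC) and also provides an ISPC interface to the core ray tracing algorithms.

- It contains algorithms optimized for incoherent workloads (such as Monte Carlo ray tracing) and for coherent workloads (such as primary visibility and hard shadow rays).

In short, Embree is a highly optimized, CPU-based ray tracing accelerator that does not use GPU hardware acceleration. Because of this, the build time of Lumen's mesh cards depends mainly on CPU performance.

The core build logic is in BuildMeshCards: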

void BuildMeshCards(const FBox& MeshBounds, const FGenerateCardMeshContext& Context, FCardRepresentationData& OutData)

{

static const auto CVarMeshCardRepresentationMinSurface = IConsoleManager::Get().FindTConsoleVariableDataFloat(TEXT("r.MeshCardRepresentation.MinSurface"));

const float MinSurfaceThreshold = CVarMeshCardRepresentationMinSurface->GetValueOnAnyThread();

// Make sure the generated card bounds are not empty.

const FVector MeshCardsBoundsCenter = MeshBounds.GetCenter();

const FVector MeshCardsBoundsExtent = FVector::Max(MeshBounds.GetExtent() + 1.0f, FVector(5.0f));

const FBox MeshCardsBounds(MeshCardsBoundsCenter - MeshCardsBoundsExtent, MeshCardsBoundsCenter + MeshCardsBoundsExtent);

// Initialize part of the output data.

OutData.MeshCardsBuildData.Bounds = MeshCardsBounds;

OutData.MeshCardsBuildData.MaxLODLevel = 1;

OutData.MeshCardsBuildData.CardBuildData.Reset();

// Set up the sampling and voxel data.

const float SamplesPerWorldUnit = 1.0f / 10.0f;

const int32 MinSamplesPerAxis = 4;

const int32 MaxSamplesPerAxis = 64;

FIntVector VolumeSizeInVoxels;

VolumeSizeInVoxels.X = FMath::Clamp<int32>(MeshCardsBounds.GetSize().X * SamplesPerWorldUnit, MinSamplesPerAxis, MaxSamplesPerAxis);

VolumeSizeInVoxels.Y = FMath::Clamp<int32>(MeshCardsBounds.GetSize().Y * SamplesPerWorldUnit, MinSamplesPerAxis, MaxSamplesPerAxis);

VolumeSizeInVoxels.Z = FMath::Clamp<int32>(MeshCardsBounds.GetSize().Z * SamplesPerWorldUnit, MinSamplesPerAxis, MaxSamplesPerAxis);

// Size of a single voxel.

const FVector VoxelExtent = MeshCardsBounds.GetSize() / FVector(VolumeSizeInVoxels);

// Generate stratified random ray directions over the hemisphere.

TArray<FVector4> RayDirectionsOverHemisphere;

{

FRandomStream RandomStream(0);

MeshUtilities::GenerateStratifiedUniformHemisphereSamples(64, RandomStream, RayDirectionsOverHemisphere);

}

// Iterate over the 6 orientations and generate card data for each.

for (int32 Orientation = 0; Orientation < 6; ++Orientation)

{

// Initialize the heightfield and ray data.

FIntPoint HeighfieldSize(0, 0);

FVector RayDirection(0.0f, 0.0f, 0.0f);

FVector RayOriginFrame = MeshCardsBounds.Min;

FVector HeighfieldStepX(0.0f, 0.0f, 0.0f);

FVector HeighfieldStepY(0.0f, 0.0f, 0.0f);

float MaxRayT = 0.0f;

int32 MeshSliceNum = 0;

// Adjust the heightfield and ray data according to the orientation axis.

switch (Orientation / 2)

{

case 0: // Orientation: -X, +X

MaxRayT = MeshCardsBounds.GetSize().X + 0.1f;

MeshSliceNum = VolumeSizeInVoxels.X;

HeighfieldSize.X = VolumeSizeInVoxels.Y;

HeighfieldSize.Y = VolumeSizeInVoxels.Z;

HeighfieldStepX = FVector(0.0f, MeshCardsBounds.GetSize().Y / HeighfieldSize.X, 0.0f);

HeighfieldStepY = FVector(0.0f, 0.0f, MeshCardsBounds.GetSize().Z / HeighfieldSize.Y);

break;

case 1: // Orientation: -Y, +Y

MaxRayT = MeshCardsBounds.GetSize().Y + 0.1f;

MeshSliceNum = VolumeSizeInVoxels.Y;

HeighfieldSize.X = VolumeSizeInVoxels.X;

HeighfieldSize.Y = VolumeSizeInVoxels.Z;

HeighfieldStepX = FVector(MeshCardsBounds.GetSize().X / HeighfieldSize.X, 0.0f, 0.0f);

HeighfieldStepY = FVector(0.0f, 0.0f, MeshCardsBounds.GetSize().Z / HeighfieldSize.Y);

break;

case 2: // Orientation: -Z, +Z

MaxRayT = MeshCardsBounds.GetSize().Z + 0.1f;

MeshSliceNum = VolumeSizeInVoxels.Z;

HeighfieldSize.X = VolumeSizeInVoxels.X;

HeighfieldSize.Y = VolumeSizeInVoxels.Y;

HeighfieldStepX = FVector(MeshCardsBounds.GetSize().X / HeighfieldSize.X, 0.0f, 0.0f);

HeighfieldStepY = FVector(0.0f, MeshCardsBounds.GetSize().Y / HeighfieldSize.Y, 0.0f);

break;

}

// Adjust the ray direction according to the orientation.

switch (Orientation)

{

case 0:

RayDirection.X = +1.0f;

break;

case 1:

RayDirection.X = -1.0f;

RayOriginFrame.X = MeshCardsBounds.Max.X;

break;

case 2:

RayDirection.Y = +1.0f;

break;

case 3:

RayDirection.Y = -1.0f;

RayOriginFrame.Y = MeshCardsBounds.Max.Y;

break;

case 4:

RayDirection.Z = +1.0f;

break;

case 5:

RayDirection.Z = -1.0f;

RayOriginFrame.Z = MeshCardsBounds.Max.Z;

break;

default:

check(false);

};

TArray<TArray<FSurfacePoint, TInlineAllocator<16>>> HeightfieldLayers;

HeightfieldLayers.SetNum(HeighfieldSize.X * HeighfieldSize.Y);

// Fill in the surface point data.

{

TRACE_CPUPROFILER_EVENT_SCOPE(FillSurfacePoints);

TArray<float> Heightfield;

Heightfield.SetNum(HeighfieldSize.X * HeighfieldSize.Y);

for (int32 HeighfieldY = 0; HeighfieldY < HeighfieldSize.Y; ++HeighfieldY)

{

for (int32 HeighfieldX = 0; HeighfieldX < HeighfieldSize.X; ++HeighfieldX)

{

Heightfield[HeighfieldX + HeighfieldY * HeighfieldSize.X] = -1.0f;

}

}

for (int32 HeighfieldY = 0; HeighfieldY < HeighfieldSize.Y; ++HeighfieldY)

{

for (int32 HeighfieldX = 0; HeighfieldX < HeighfieldSize.X; ++HeighfieldX)

{

FVector RayOrigin = RayOriginFrame;

RayOrigin += (HeighfieldX + 0.5f) * HeighfieldStepX;

RayOrigin += (HeighfieldY + 0.5f) * HeighfieldStepY;

float StepTMin = 0.0f;

for (int32 StepIndex = 0; StepIndex < 64; ++StepIndex)

{

FEmbreeRay EmbreeRay;

EmbreeRay.ray.org_x = RayOrigin.X;

EmbreeRay.ray.org_y = RayOrigin.Y;

EmbreeRay.ray.org_z = RayOrigin.Z;

EmbreeRay.ray.dir_x = RayDirection.X;

EmbreeRay.ray.dir_y = RayDirection.Y;

EmbreeRay.ray.dir_z = RayDirection.Z;

EmbreeRay.ray.tnear = StepTMin;

EmbreeRay.ray.tfar = FLT_MAX;

FEmbreeIntersectionContext EmbreeContext;

rtcInitIntersectContext(&EmbreeContext);

rtcIntersect1(Context.FullMeshEmbreeScene, &EmbreeContext, &EmbreeRay);

if (EmbreeRay.hit.geomID != RTC_INVALID_GEOMETRY_ID && EmbreeRay.hit.primID != RTC_INVALID_GEOMETRY_ID)

{

const FVector SurfacePoint = RayOrigin + RayDirection * EmbreeRay.ray.tfar;

const FVector SurfaceNormal = EmbreeRay.GetHitNormal();

const float NdotD = FVector::DotProduct(RayDirection, SurfaceNormal);

const bool bPassCullTest = EmbreeContext.IsHitTwoSided() || NdotD <= 0.0f;

const bool bPassProjectionAngleTest = FMath::Abs(NdotD) >= FMath::Cos(75.0f * (PI / 180.0f));

const float MinDistanceBetweenPoints = (MaxRayT / MeshSliceNum);

const bool bPassDistanceToAnotherSurfaceTest = EmbreeRay.ray.tnear <= 0.0f || (EmbreeRay.ray.tfar - EmbreeRay.ray.tnear > MinDistanceBetweenPoints);

if (bPassCullTest && bPassProjectionAngleTest && bPassDistanceToAnotherSurfaceTest)

{

const bool bIsInsideMesh = IsSurfacePointInsideMesh(Context.FullMeshEmbreeScene, SurfacePoint, SurfaceNormal, RayDirectionsOverHemisphere);

if (!bIsInsideMesh)

{

HeightfieldLayers[HeighfieldX + HeighfieldY * HeighfieldSize.X].Add(

{ EmbreeRay.ray.tnear, EmbreeRay.ray.tfar }

);

}

}

StepTMin = EmbreeRay.ray.tfar + 0.01f;

}

else

{

break;

}

}

}

}

}

const int32 MinCardHits = FMath::Floor(HeighfieldSize.X * HeighfieldSize.Y * MinSurfaceThreshold);

TArray<FPlacedCard, TInlineAllocator<16>> PlacedCards;

int32 PlacedCardsHits = 0;

// Place one default card.

{

FPlacedCard PlacedCard;

PlacedCard.SliceMin = 0;

PlacedCard.SliceMax = MeshSliceNum;

PlacedCards.Add(PlacedCard);

PlacedCardsHits = UpdatePlacedCards(PlacedCards, RayOriginFrame, RayDirection, HeighfieldStepX, HeighfieldStepY, HeighfieldSize, MeshSliceNum, MaxRayT, MinCardHits, VoxelExtent, HeightfieldLayers);

if (PlacedCardsHits < MinCardHits)

{

PlacedCards.Reset();

}

}

SerializePlacedCards(PlacedCards, /*LOD level*/ 0, Orientation, MinCardHits, MeshCardsBounds, OutData);

// Try to place more cards by splitting the existing ones.

for (uint32 CardPlacementIteration = 0; CardPlacementIteration < 4; ++CardPlacementIteration)

{

TArray<FPlacedCard, TInlineAllocator<16>> BestPlacedCards;

int32 BestPlacedCardHits = PlacedCardsHits;

for (int32 PlacedCardIndex = 0; PlacedCardIndex < PlacedCards.Num(); ++PlacedCardIndex)

{

const FPlacedCard& PlacedCard = PlacedCards[PlacedCardIndex];

for (int32 SliceIndex = PlacedCard.SliceMin + 2; SliceIndex < PlacedCard.SliceMax; ++SliceIndex)

{

TArray<FPlacedCard, TInlineAllocator<16>> TempPlacedCards(PlacedCards);

FPlacedCard NewPlacedCard;

NewPlacedCard.SliceMin = SliceIndex;

NewPlacedCard.SliceMax = PlacedCard.SliceMax;

TempPlacedCards[PlacedCardIndex].SliceMax = SliceIndex - 1;

TempPlacedCards.Insert(NewPlacedCard, PlacedCardIndex + 1);

const int32 NumHits = UpdatePlacedCards(TempPlacedCards, RayOriginFrame, RayDirection, HeighfieldStepX, HeighfieldStepY, HeighfieldSize, MeshSliceNum, MaxRayT, MinCardHits, VoxelExtent, HeightfieldLayers);

if (NumHits > BestPlacedCardHits)

{

BestPlacedCards = TempPlacedCards;

BestPlacedCardHits = NumHits;

}

}

}

if (BestPlacedCardHits >= PlacedCardsHits + MinCardHits)

{

PlacedCards = BestPlacedCards;

PlacedCardsHits = BestPlacedCardHits;

}

}

SerializePlacedCards(PlacedCards, /*LOD level*/ 1, Orientation, MinCardHits, MeshCardsBounds, OutData);

} // for (int32 Orientation = 0; Orientation < 6; ++Orientation)

}

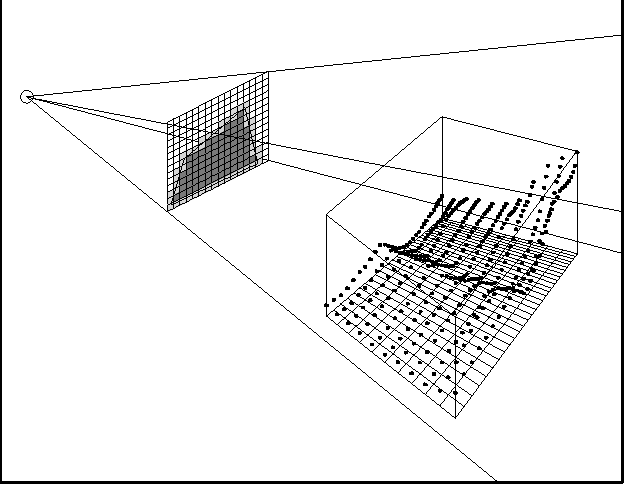

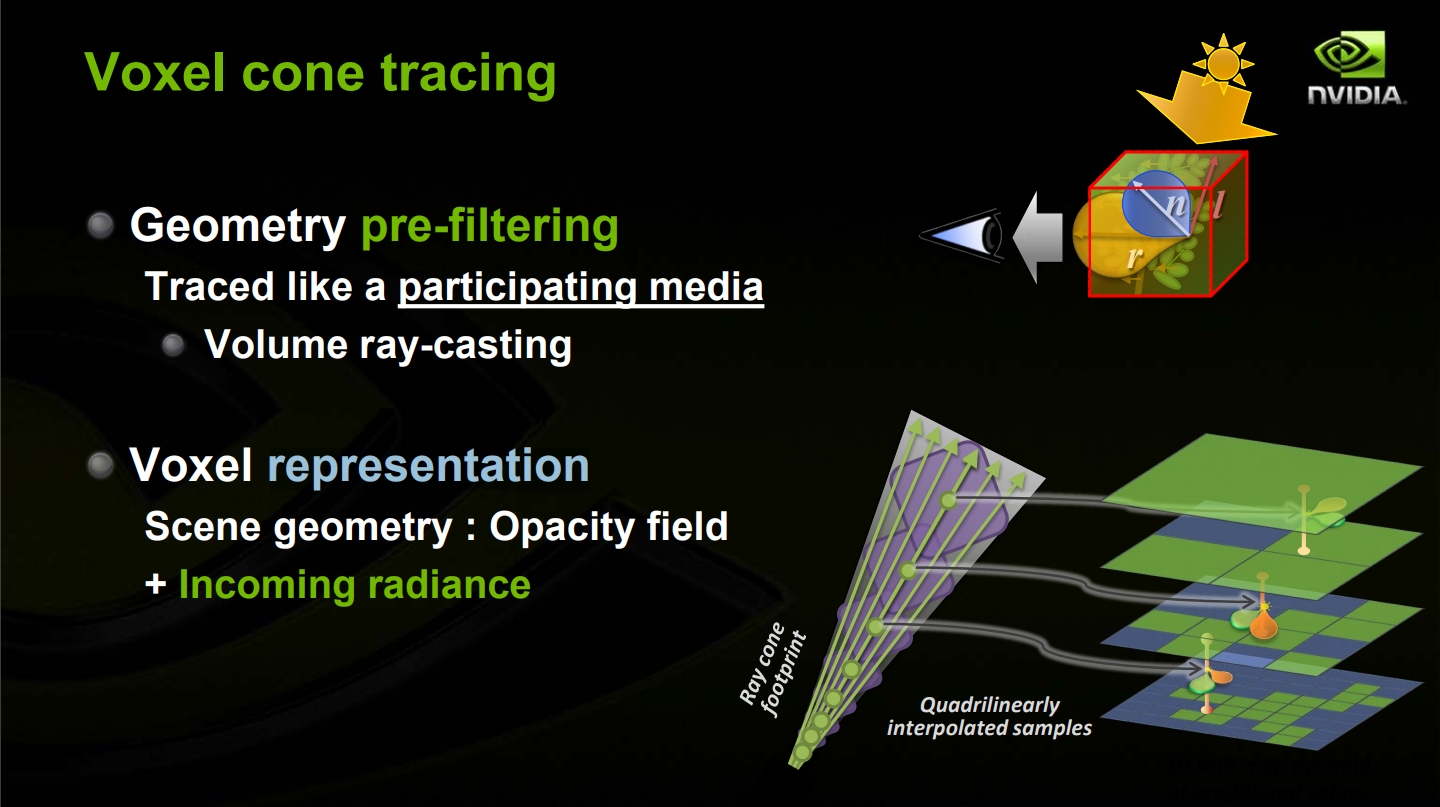

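The code above shows that card data is built with the help of height field ray tracing, a technique that has existed for many years. In the listing, the rays are cast with Embree (rtcIntersect1) along each of the six axis-aligned orientations, and every heightfield texel may record several surface layers. The core idea of height field ray tracing is to discretize the mesh into equally sized 3D voxels, then, at the chosen resolution, cast one ray from the camera position through each pixel and test it for intersection against the voxels, which renders the silhouette of the height field. That silhouette is the boundary that divides the screen into the region covered by the height field and the region above it.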

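The silhouette obtained this way is visibly aliased. The paper Ray Tracing Height Fields describes several ways to reconstruct the surface and reduce the aliasing: height field planes, linear approximation planes, triangles, and bilinear surfaces.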
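The per-texel filtering in the listing above can be summarized with a small standalone sketch. It is not engine code: `Hit`, `PassesSurfaceTests` and `CollectLayers` are hypothetical names, and the hits are assumed to be supplied already sorted along the ray instead of coming from Embree. It only mirrors the three tests (back-face cull, 75 degree projection angle, minimum spacing from the previous hit) and the way the ray restarts just behind each hit.

```cpp
#include <cmath>
#include <vector>

// Hypothetical stand-in for one Embree hit along a capture ray.
struct Hit {
    float t;        // distance along the ray (tfar in the listing)
    float nDotD;    // dot(ray direction, surface normal)
    bool twoSided;  // whether the hit material is two-sided
};

// Mirrors bPassCullTest and bPassProjectionAngleTest from the listing.
bool PassesSurfaceTests(const Hit& hit) {
    const float kPi = 3.14159265358979f;
    const bool passCull = hit.twoSided || hit.nDotD <= 0.0f;
    const bool passAngle = std::fabs(hit.nDotD) >= std::cos(75.0f * kPi / 180.0f);
    return passCull && passAngle;
}

// Walks the hits front to back, like the StepIndex loop that restarts the ray
// at tfar + 0.01, and keeps the distances of the accepted surface layers.
std::vector<float> CollectLayers(const std::vector<Hit>& sortedHits, float minSpacing) {
    std::vector<float> layers;
    float stepTMin = 0.0f;  // tnear of the current step
    for (const Hit& hit : sortedHits) {
        const bool farEnough = stepTMin <= 0.0f || (hit.t - stepTMin > minSpacing);
        if (PassesSurfaceTests(hit) && farEnough) {
            layers.push_back(hit.t);
        }
        stepTMin = hit.t + 0.01f;  // advanced after every hit, accepted or not
    }
    return layers;
}
```

With a spacing of 0.5, a front-facing hit at 1.0 is kept, a second hit at 1.05 is dropped as too close, and back-facing or grazing hits are dropped by the first two tests. The inside-mesh test (IsSurfacePointInsideMesh) is deliberately left out of this sketch.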

After the build described above, mesh card data like the following is produced:

Top: the regular mesh; bottom: visualization of the mesh card data.

Mesh card data has LODs, and the LOD level is chosen according to the camera distance.
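In the build listing, SerializePlacedCards is called twice per orientation: once with LOD level 0 for the single default card, and once with LOD level 1 after the splitting loop. The following toy sketch illustrates that greedy splitting loop under simplifying assumptions: `Card`, `Cell`, `CountHits` and `PlaceCards` are hypothetical names, each heightfield cell is reduced to a list of slice indices, and a card is assumed to capture at most one layer per cell. The real UpdatePlacedCards does more work than this.

```cpp
#include <vector>

struct Card { int sliceMin; int sliceMax; };

// Slice indices of the surface layers found in one heightfield cell.
using Cell = std::vector<int>;

// A card captures a cell if at least one layer falls inside its slice range.
int CountHits(const std::vector<Card>& cards, const std::vector<Cell>& cells) {
    int hits = 0;
    for (const Card& card : cards) {
        for (const Cell& cell : cells) {
            for (int slice : cell) {
                if (slice >= card.sliceMin && slice <= card.sliceMax) { ++hits; break; }
            }
        }
    }
    return hits;
}

// Start with one card spanning all slices, then repeatedly try every split
// position and keep the best split only if it gains at least minCardHits.
std::vector<Card> PlaceCards(const std::vector<Cell>& cells, int sliceCount,
                             int minCardHits, int iterations) {
    std::vector<Card> cards;
    cards.push_back(Card{0, sliceCount});
    int hits = CountHits(cards, cells);
    for (int it = 0; it < iterations; ++it) {
        std::vector<Card> best;
        int bestHits = hits;
        for (int i = 0; i < static_cast<int>(cards.size()); ++i) {
            for (int s = cards[i].sliceMin + 2; s < cards[i].sliceMax; ++s) {
                std::vector<Card> trial = cards;
                trial[i].sliceMax = s - 1;
                trial.insert(trial.begin() + i + 1, Card{s, cards[i].sliceMax});
                const int n = CountHits(trial, cells);
                if (n > bestHits) { best = trial; bestHits = n; }
            }
        }
        // Extra guard (not in the listing): never accept an empty candidate.
        if (!best.empty() && bestHits >= hits + minCardHits) {
            cards = best;
            hits = bestHits;
        }
    }
    return cards;
}
```

For a mesh whose cells all contain two layers, for example a front and a back wall, a single card captures only the first layer, while one split lets a second card capture the layer behind it.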

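In addition, the mesh distance field data built by UE5 has been improved: sparse storage raises its precision (left in the figure below), which is clearly better than UE4 (right in the figure below).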

6.5.4 Lumen Rendering Flow

The main Lumen rendering flow still lives in FDeferredShadingSceneRenderer::Render:

void FDeferredShadingSceneRenderer::Render(FRDGBuilder& GraphBuilder)

{

(......)

bool bAnyLumenEnabled = false;

if (!IsSimpleForwardShadingEnabled(ShaderPlatform))

{

(......)

// Check whether any view has Lumen enabled.

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

bAnyLumenEnabled = bAnyLumenEnabled

|| GetViewPipelineState(View).DiffuseIndirectMethod == EDiffuseIndirectMethod::Lumen

|| GetViewPipelineState(View).ReflectionsMethod == EReflectionsMethod::Lumen;

}

(......)

}

(......)

// PrePass.

RenderPrePass(...);

(......)

// Update the Lumen scene.

UpdateLumenScene(GraphBuilder);

// If occlusion culling runs before the base pass, render the Lumen scene lighting before RenderBasePass.

// bOcclusionBeforeBasePass defaults to false.

if (bOcclusionBeforeBasePass)

{

{

LLM_SCOPE_BYTAG(Lumen);

RenderLumenSceneLighting(GraphBuilder, Views[0]);

}

ComputeVolumetricFog(GraphBuilder);

}

(......)

// BasePass.

RenderBasePass(...);

(......)

// Lumen lighting after the base pass.

if (!bOcclusionBeforeBasePass)

{

const bool bAfterBasePass = true;

// Render shadows.

AllocateVirtualShadowMaps(bAfterBasePass);

RenderShadowDepthMaps(GraphBuilder, InstanceCullingManager);

{

LLM_SCOPE_BYTAG(Lumen);

// Render the Lumen scene lighting.

RenderLumenSceneLighting(GraphBuilder, Views[0]);

}

AddServiceLocalQueuePass(GraphBuilder);

}

(......)

// Render the Lumen visualization.

RenderLumenSceneVisualization(GraphBuilder, SceneTextures);

// Render diffuse indirect lighting and AO.

RenderDiffuseIndirectAndAmbientOcclusion(GraphBuilder, SceneTextures, LightingChannelsTexture, true);

(......)

}

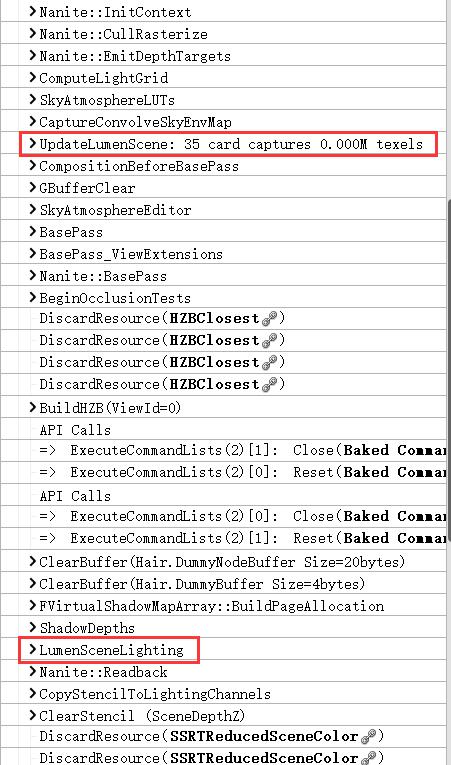

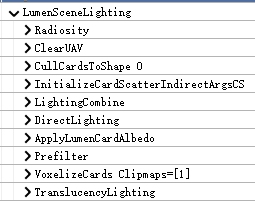

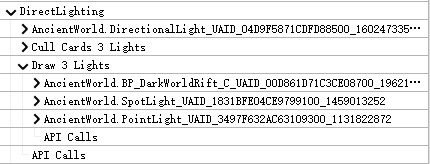

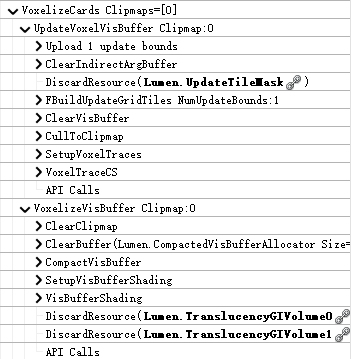

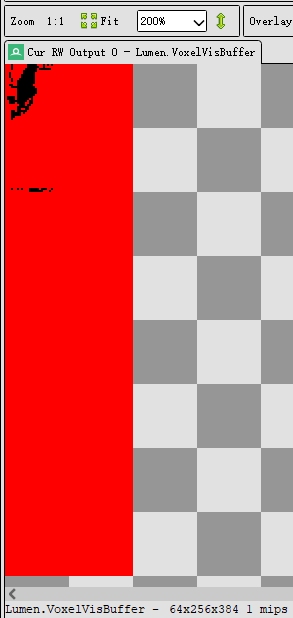

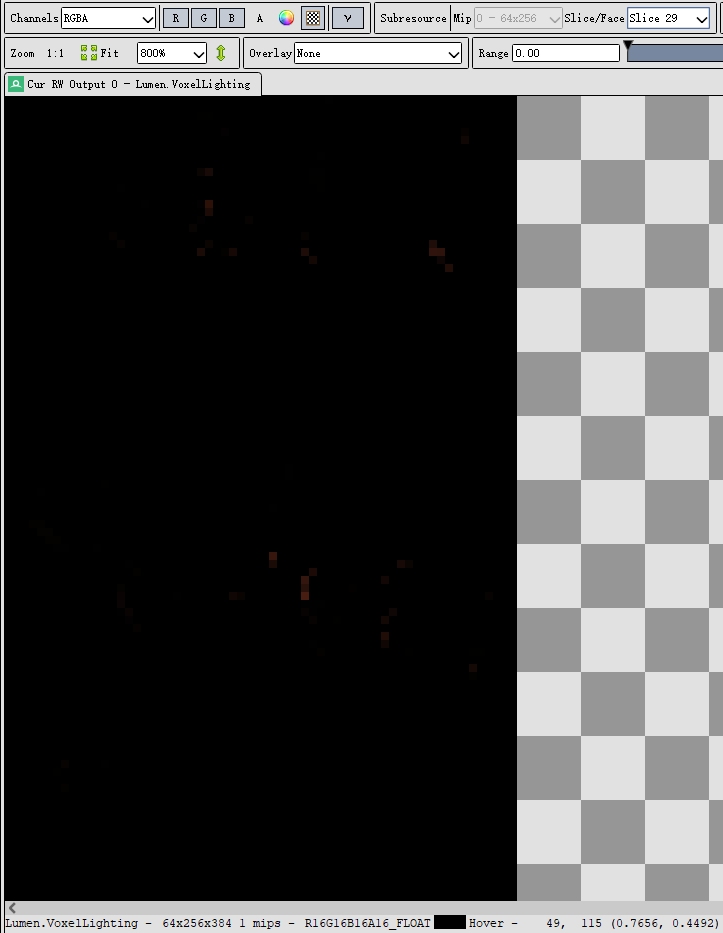

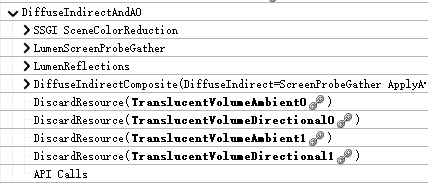

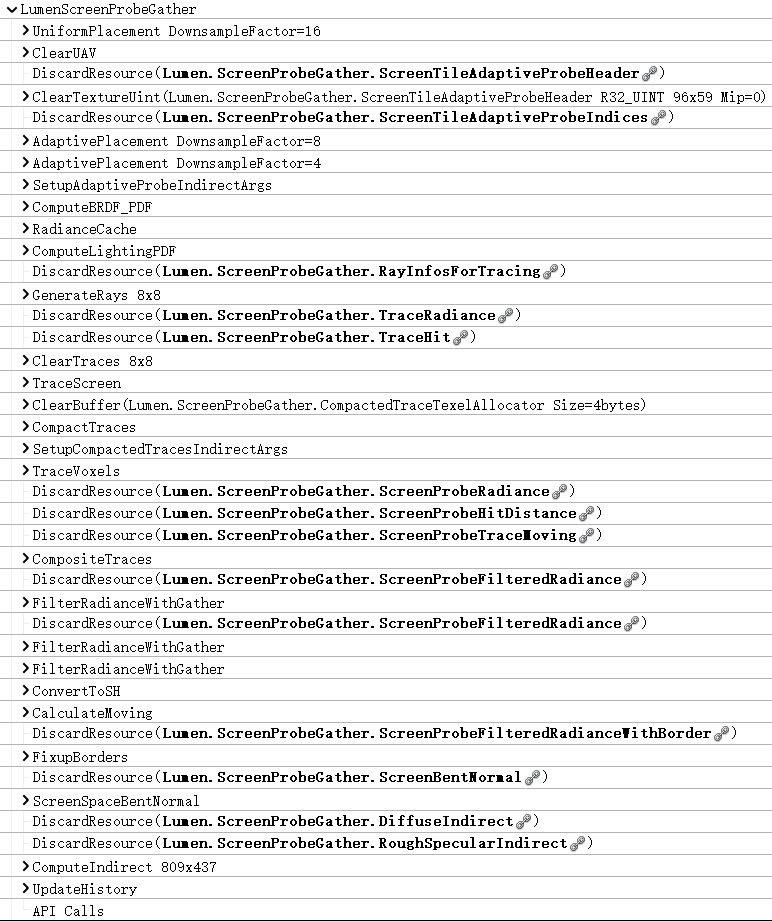

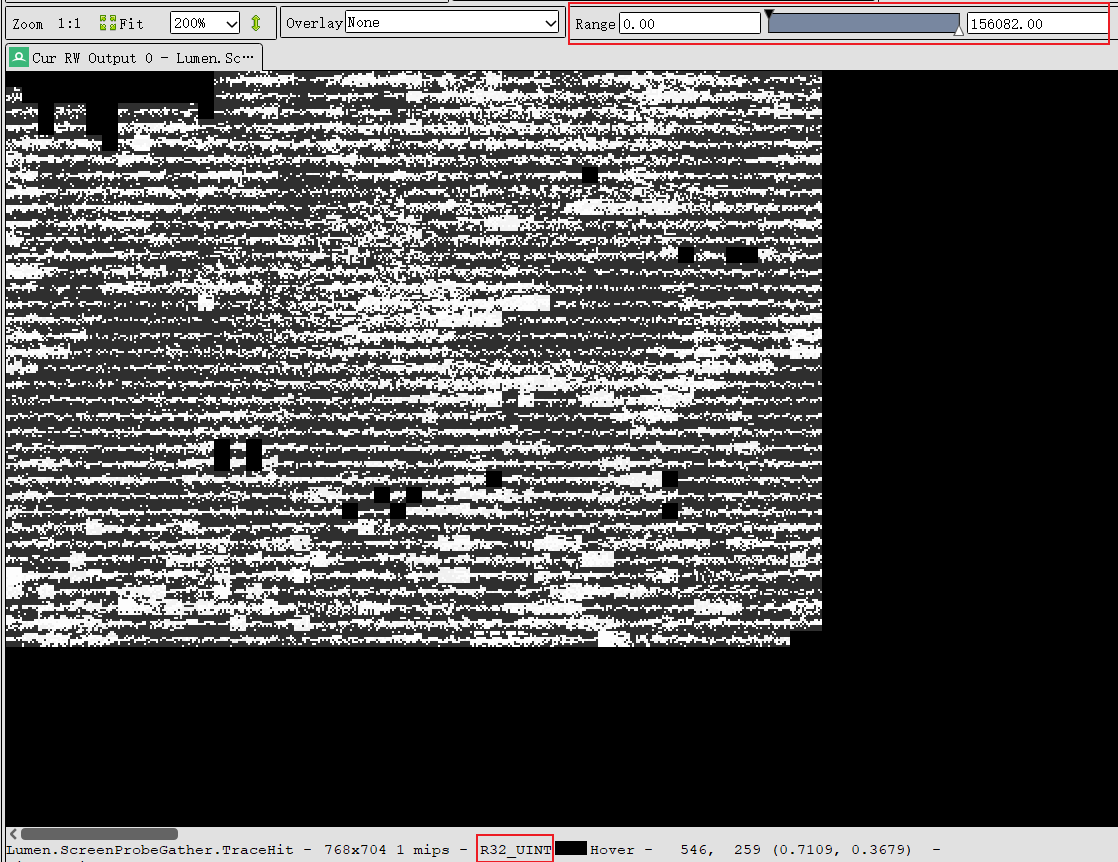

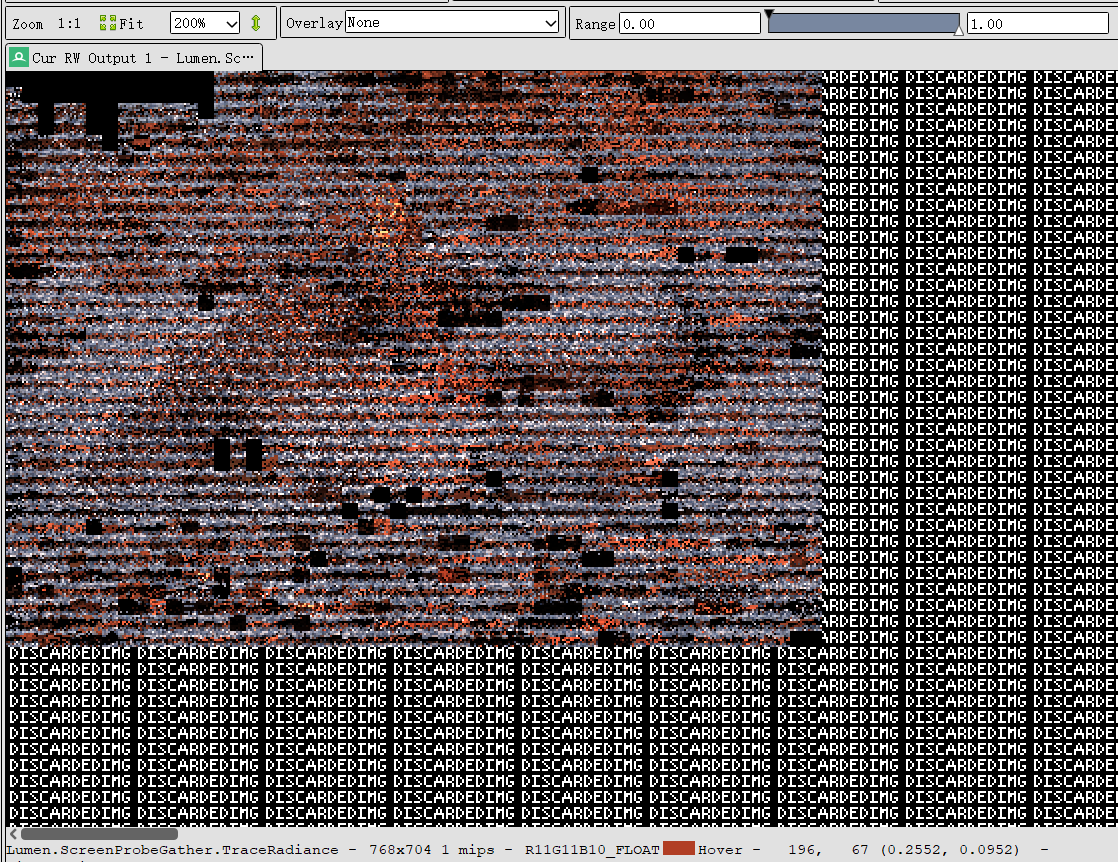

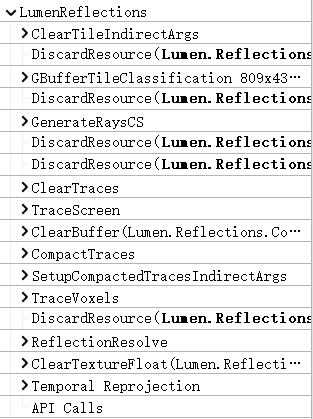

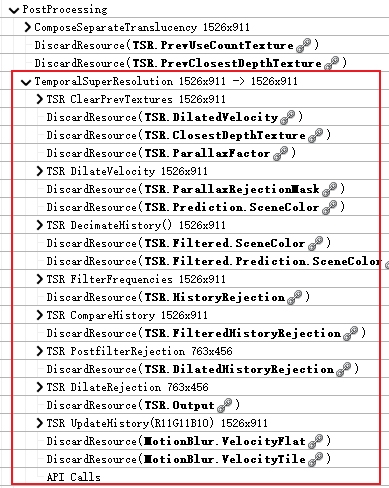

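The red boxes below mark the Lumen steps in a RenderDoc frame capture:

Lumen lighting has two main stages: updating the scene in UpdateLumenScene and computing the scene lighting in RenderLumenSceneLighting.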
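To make the ordering in the Render excerpt easier to follow, here is a minimal sketch that lists the Lumen-related calls in the order they appear there, depending on bOcclusionBeforeBasePass. `LumenStageOrder` is a hypothetical helper written for illustration; it only records names taken from the excerpt and ignores every step elided with (......).

```cpp
#include <string>
#include <vector>

// Returns the order of the Lumen-related calls shown in the Render() excerpt.
std::vector<std::string> LumenStageOrder(bool bOcclusionBeforeBasePass) {
    std::vector<std::string> order;
    order.push_back("RenderPrePass");
    order.push_back("UpdateLumenScene");
    if (bOcclusionBeforeBasePass) {
        // Lighting is computed early, before the base pass.
        order.push_back("RenderLumenSceneLighting");
        order.push_back("ComputeVolumetricFog");
    }
    order.push_back("RenderBasePass");
    if (!bOcclusionBeforeBasePass) {
        // Default path: shadow depth maps first, then Lumen scene lighting.
        order.push_back("RenderShadowDepthMaps");
        order.push_back("RenderLumenSceneLighting");
    }
    order.push_back("RenderLumenSceneVisualization");
    order.push_back("RenderDiffuseIndirectAndAmbientOcclusion");
    return order;
}
```

In both branches UpdateLumenScene runs before RenderLumenSceneLighting, which matches the two-stage description above; only the position of the lighting stage relative to the base pass changes.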

6.5.5 Lumen Scene Update

6.5.5.1 UpdateLumenScene

The Lumen scene update is handled mainly by UpdateLumenScene:

// Engine\Source\Runtime\Renderer\Private\Lumen\LumenSceneRendering.cpp

void FDeferredShadingSceneRenderer::UpdateLumenScene(FRDGBuilder& GraphBuilder)

{

LLM_SCOPE_BYTAG(Lumen);

FViewInfo& View = Views[0];

const FPerViewPipelineState& ViewPipelineState = GetViewPipelineState(View);

const bool bAnyLumenActive = ViewPipelineState.DiffuseIndirectMethod == EDiffuseIndirectMethod::Lumen || ViewPipelineState.ReflectionsMethod == EReflectionsMethod::Lumen;

if (bAnyLumenActive

// Only regular views update the scene; planar reflections, scene captures and reflection captures are skipped.

&& !View.bIsPlanarReflection

&& !View.bIsSceneCapture

&& !View.bIsReflectionCapture

&& View.ViewState)

{

const double StartTime = FPlatformTime::Seconds();

// Get the Lumen scene data and the list of cards to render.

FLumenSceneData& LumenSceneData = *Scene->LumenSceneData;

TArray<FCardRenderData, SceneRenderingAllocator>& CardsToRender = LumenCardRenderer.CardsToRender;

RDG_EVENT_SCOPE(GraphBuilder, "UpdateLumenScene: %u card captures %.3fM texels", CardsToRender.Num(), LumenCardRenderer.NumCardTexelsToCapture / 1e6f);

// Update the card scene buffer.

UpdateCardSceneBuffer(GraphBuilder.RHICmdList, ViewFamily, Scene);

// The Lumen primitive mapping buffer was updated, so the view uniform buffer must be recreated.

Lumen::SetupViewUniformBufferParameters(Scene, *View.CachedViewUniformShaderParameters);

View.ViewUniformBuffer = TUniformBufferRef<FViewUniformShaderParameters>::CreateUniformBufferImmediate(*View.CachedViewUniformShaderParameters, UniformBuffer_SingleFrame);

LumenCardRenderer.CardIdsToRender.Empty(CardsToRender.Num());

// Temporary depth-stencil buffer used while capturing cards.

const FRDGTextureDesc DepthStencilAtlasDesc = FRDGTextureDesc::Create2D(LumenSceneData.MaxAtlasSize, PF_DepthStencil, FClearValueBinding::DepthZero, TexCreate_ShaderResource | TexCreate_DepthStencilTargetable | TexCreate_NoFastClear);

FRDGTextureRef DepthStencilAtlasTexture = GraphBuilder.CreateTexture(DepthStencilAtlasDesc, TEXT("Lumen.DepthStencilAtlas"));

if (CardsToRender.Num() > 0)

{

FRHIBuffer* PrimitiveIdVertexBuffer = nullptr;

FInstanceCullingResult InstanceCullingResult;

// Cull the cards; both GPU and non-GPU culling paths are supported.

#if GPUCULL_TODO

if (Scene->GPUScene.IsEnabled())

{

int32 MaxInstances = 0;

int32 VisibleMeshDrawCommandsNum = 0;

int32 NewPassVisibleMeshDrawCommandsNum = 0;

FInstanceCullingContext InstanceCullingContext(nullptr, TArrayView<const int32>(&View.GPUSceneViewId, 1));

SetupGPUInstancedDraws(InstanceCullingContext, LumenCardRenderer.MeshDrawCommands, false, MaxInstances, VisibleMeshDrawCommandsNum, NewPassVisibleMeshDrawCommandsNum);

// Not supposed to do any compaction here.

ensure(VisibleMeshDrawCommandsNum == LumenCardRenderer.MeshDrawCommands.Num());

InstanceCullingContext.BuildRenderingCommands(GraphBuilder, Scene->GPUScene, View.DynamicPrimitiveCollector.GetPrimitiveIdRange(), InstanceCullingResult);

}

else

#endif // GPUCULL_TODO

{

// Prepare primitive Id VB for rendering mesh draw commands.

if (LumenCardRenderer.MeshDrawPrimitiveIds.Num() > 0)

{

const uint32 PrimitiveIdBufferDataSize = LumenCardRenderer.MeshDrawPrimitiveIds.Num() * sizeof(int32);

FPrimitiveIdVertexBufferPoolEntry Entry = GPrimitiveIdVertexBufferPool.Allocate(PrimitiveIdBufferDataSize);

PrimitiveIdVertexBuffer = Entry.BufferRHI;

void* RESTRICT Data = RHILockBuffer(PrimitiveIdVertexBuffer, 0, PrimitiveIdBufferDataSize, RLM_WriteOnly);

FMemory::Memcpy(Data, LumenCardRenderer.MeshDrawPrimitiveIds.GetData(), PrimitiveIdBufferDataSize);

RHIUnlockBuffer(PrimitiveIdVertexBuffer);

GPrimitiveIdVertexBufferPool.ReturnToFreeList(Entry);

}

}

FRDGTextureRef AlbedoAtlasTexture = GraphBuilder.RegisterExternalTexture(LumenSceneData.AlbedoAtlas);

FRDGTextureRef NormalAtlasTexture = GraphBuilder.RegisterExternalTexture(LumenSceneData.NormalAtlas);

FRDGTextureRef EmissiveAtlasTexture = GraphBuilder.RegisterExternalTexture(LumenSceneData.EmissiveAtlas);

uint32 NumRects = 0;

FRDGBufferRef RectMinMaxBuffer = nullptr;

{

// Upload card ids for batched draws that operate on the cards to render.

TArray<FUintVector4, SceneRenderingAllocator> RectMinMaxToRender;

RectMinMaxToRender.Reserve(CardsToRender.Num());

for (const FCardRenderData& CardRenderData : CardsToRender)

{

FIntRect AtlasRect = CardRenderData.AtlasAllocation;

FUintVector4 Rect;

Rect.X = FMath::Max(AtlasRect.Min.X, 0);

Rect.Y = FMath::Max(AtlasRect.Min.Y, 0);

Rect.Z = FMath::Max(AtlasRect.Max.X, 0);

Rect.W = FMath::Max(AtlasRect.Max.Y, 0);

RectMinMaxToRender.Add(Rect);

}

NumRects = CardsToRender.Num();

RectMinMaxBuffer = GraphBuilder.CreateBuffer(FRDGBufferDesc::CreateUploadDesc(sizeof(FUintVector4), FMath::RoundUpToPowerOfTwo(NumRects)), TEXT("Lumen.RectMinMaxBuffer"));

FPixelShaderUtils::UploadRectMinMaxBuffer(GraphBuilder, RectMinMaxToRender, RectMinMaxBuffer);

FRDGBufferSRVRef RectMinMaxBufferSRV = GraphBuilder.CreateSRV(FRDGBufferSRVDesc(RectMinMaxBuffer, PF_R32G32B32A32_UINT));

ClearLumenCards(GraphBuilder, View, AlbedoAtlasTexture, NormalAtlasTexture, EmissiveAtlasTexture, DepthStencilAtlasTexture, LumenSceneData.MaxAtlasSize, RectMinMaxBufferSRV, NumRects);

}

// Cache the view information in a snapshot.

FViewInfo* SharedView = View.CreateSnapshot();

{

SharedView->DynamicPrimitiveCollector = FGPUScenePrimitiveCollector(&GetGPUSceneDynamicContext());

SharedView->StereoPass = eSSP_FULL;

SharedView->DrawDynamicFlags = EDrawDynamicFlags::ForceLowestLOD;

// Don't do material texture mip biasing in proxy card rendering

SharedView->MaterialTextureMipBias = 0;

TRefCountPtr<IPooledRenderTarget> NullRef;

FPlatformMemory::Memcpy(&SharedView->PrevViewInfo.HZB, &NullRef, sizeof(SharedView->PrevViewInfo.HZB));

SharedView->CachedViewUniformShaderParameters = MakeUnique<FViewUniformShaderParameters>();

SharedView->CachedViewUniformShaderParameters->PrimitiveSceneData = Scene->GPUScene.PrimitiveBuffer.SRV;

SharedView->CachedViewUniformShaderParameters->InstanceSceneData = Scene->GPUScene.InstanceDataBuffer.SRV;

SharedView->CachedViewUniformShaderParameters->LightmapSceneData = Scene->GPUScene.LightmapDataBuffer.SRV;

SharedView->ViewUniformBuffer = TUniformBufferRef<FViewUniformShaderParameters>::CreateUniformBufferImmediate(*SharedView->CachedViewUniformShaderParameters, UniformBuffer_SingleFrame);

}

// Set up the scene texture uniform parameters.

FLumenCardPassUniformParameters* PassUniformParameters = GraphBuilder.AllocParameters<FLumenCardPassUniformParameters>();

SetupSceneTextureUniformParameters(GraphBuilder, Scene->GetFeatureLevel(), /*SceneTextureSetupMode*/ ESceneTextureSetupMode::None, PassUniformParameters->SceneTextures);

// Capture mesh cards.

{

FLumenCardPassParameters* PassParameters = GraphBuilder.AllocParameters<FLumenCardPassParameters>();

PassParameters->View = Scene->UniformBuffers.LumenCardCaptureViewUniformBuffer;

PassParameters->CardPass = GraphBuilder.CreateUniformBuffer(PassUniformParameters);

PassParameters->RenderTargets[0] = FRenderTargetBinding(AlbedoAtlasTexture, ERenderTargetLoadAction::ELoad);

PassParameters->RenderTargets[1] = FRenderTargetBinding(NormalAtlasTexture, ERenderTargetLoadAction::ELoad);

PassParameters->RenderTargets[2] = FRenderTargetBinding(EmissiveAtlasTexture, ERenderTargetLoadAction::ELoad);

PassParameters->RenderTargets.DepthStencil = FDepthStencilBinding(DepthStencilAtlasTexture, ERenderTargetLoadAction::ELoad, FExclusiveDepthStencil::DepthWrite_StencilNop);

InstanceCullingResult.GetDrawParameters(PassParameters->InstanceCullingDrawParams);

// Mesh card capture pass.

GraphBuilder.AddPass(

RDG_EVENT_NAME("MeshCardCapture"),

PassParameters,

ERDGPassFlags::Raster,

[this, Scene = Scene, PrimitiveIdVertexBuffer, SharedView, &CardsToRender, PassParameters](FRHICommandList& RHICmdList)

{

QUICK_SCOPE_CYCLE_COUNTER(MeshPass);

// Prepare the data and submit draw commands for every card to render.

for (FCardRenderData& CardRenderData : CardsToRender)

{

if (CardRenderData.NumMeshDrawCommands > 0)

{

FIntRect AtlasRect = CardRenderData.AtlasAllocation;

RHICmdList.SetViewport(AtlasRect.Min.X, AtlasRect.Min.Y, 0.0f, AtlasRect.Max.X, AtlasRect.Max.Y, 1.0f);

CardRenderData.PatchView(RHICmdList, Scene, SharedView);

Scene->UniformBuffers.LumenCardCaptureViewUniformBuffer.UpdateUniformBufferImmediate(*SharedView->CachedViewUniformShaderParameters);

FGraphicsMinimalPipelineStateSet GraphicsMinimalPipelineStateSet;

#if GPUCULL_TODO

if (Scene->GPUScene.IsEnabled())

{

FRHIBuffer* DrawIndirectArgsBuffer = nullptr;

FRHIBuffer* InstanceIdOffsetBuffer = nullptr;

FInstanceCullingDrawParams& InstanceCullingDrawParams = PassParameters->InstanceCullingDrawParams;

if (InstanceCullingDrawParams.DrawIndirectArgsBuffer != nullptr && InstanceCullingDrawParams.InstanceIdOffsetBuffer != nullptr)

{

DrawIndirectArgsBuffer = InstanceCullingDrawParams.DrawIndirectArgsBuffer->GetRHI();

InstanceIdOffsetBuffer = InstanceCullingDrawParams.InstanceIdOffsetBuffer->GetRHI();

}

// With GPU culling, call the GPU-instanced submit function.

SubmitGPUInstancedMeshDrawCommandsRange(

LumenCardRenderer.MeshDrawCommands,

GraphicsMinimalPipelineStateSet,

CardRenderData.StartMeshDrawCommandIndex,

CardRenderData.NumMeshDrawCommands,

1,

InstanceIdOffsetBuffer,

DrawIndirectArgsBuffer,

RHICmdList);

}

else

#endif // GPUCULL_TODO

{

// Without GPU culling, call the regular submit function.

SubmitMeshDrawCommandsRange(

LumenCardRenderer.MeshDrawCommands,

GraphicsMinimalPipelineStateSet,

PrimitiveIdVertexBuffer,

0,

false,

CardRenderData.StartMeshDrawCommandIndex,

CardRenderData.NumMeshDrawCommands,

1,

RHICmdList);

}

}

}

}

);

}

// Record the ids of the cards to render and detect whether any Nanite meshes need to be rendered.

bool bAnyNaniteMeshes = false;

for (FCardRenderData& CardRenderData : CardsToRender)

{

bAnyNaniteMeshes = bAnyNaniteMeshes || CardRenderData.NaniteInstanceIds.Num() > 0 || CardRenderData.bDistantScene;

LumenCardRenderer.CardIdsToRender.Add(CardRenderData.CardIndex);

}

// Render the Nanite meshes of the Lumen scene.

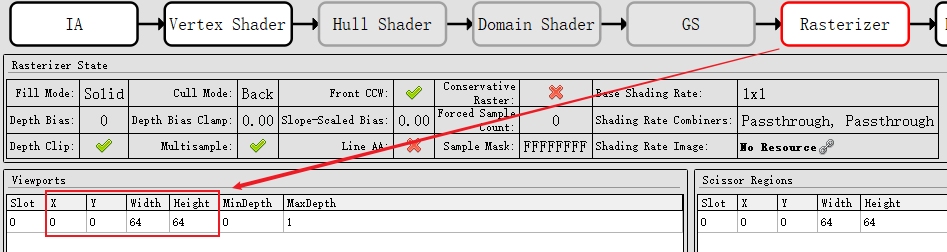

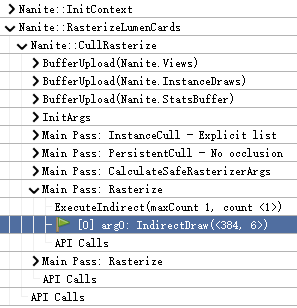

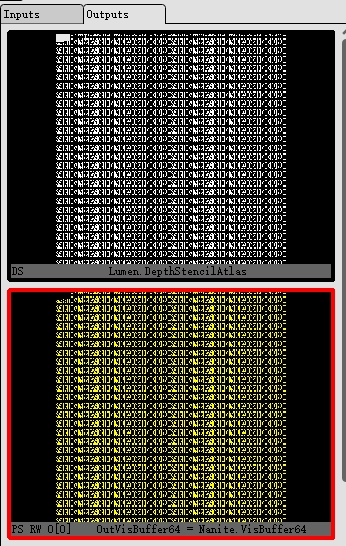

if (UseNanite(ShaderPlatform) && ViewFamily.EngineShowFlags.NaniteMeshes && bAnyNaniteMeshes)

{

TRACE_CPUPROFILER_EVENT_SCOPE(NaniteMeshPass);

QUICK_SCOPE_CYCLE_COUNTER(NaniteMeshPass);

const FIntPoint DepthStencilAtlasSize = DepthStencilAtlasDesc.Extent;

const FIntRect DepthAtlasRect = FIntRect(0, 0, DepthStencilAtlasSize.X, DepthStencilAtlasSize.Y);

FRDGBufferSRVRef RectMinMaxBufferSRV = GraphBuilder.CreateSRV(FRDGBufferSRVDesc(RectMinMaxBuffer, PF_R32G32B32A32_UINT));

// Raster context.

Nanite::FRasterContext RasterContext = Nanite::InitRasterContext(

GraphBuilder,

FeatureLevel,

DepthStencilAtlasSize,

Nanite::EOutputBufferMode::VisBuffer,

true,

RectMinMaxBufferSRV,

NumRects);

const bool bUpdateStreaming = false;

const bool bSupportsMultiplePasses = true;

const bool bForceHWRaster = RasterContext.RasterScheduling == Nanite::ERasterScheduling::HardwareOnly;

// Not the primary context (this distinguishes it from Nanite's main pass).

const bool bPrimaryContext = false;

// Culling context.

Nanite::FCullingContext CullingContext = Nanite::InitCullingContext(

GraphBuilder,

*Scene,

nullptr,

FIntRect(),

false,

bUpdateStreaming,

bSupportsMultiplePasses,

bForceHWRaster,

bPrimaryContext);

// Multi-view rendering.

if (GLumenSceneNaniteMultiViewCapture)

{

const uint32 NumCardsToRender = CardsToRender.Num();

// The outer while loop splits the cards into batches so that no batch exceeds MAX_VIEWS_PER_CULL_RASTERIZE_PASS.

uint32 NextCardIndex = 0;

while(NextCardIndex < NumCardsToRender)

{

TArray<Nanite::FPackedView, SceneRenderingAllocator> NaniteViews;

TArray<Nanite::FInstanceDraw, SceneRenderingAllocator> NaniteInstanceDraws;

// Build one FPackedViewParams per card to render and add the packed view to NaniteViews until the maximum view count is reached.

while(NextCardIndex < NumCardsToRender && NaniteViews.Num() < MAX_VIEWS_PER_CULL_RASTERIZE_PASS)

{

const FCardRenderData& CardRenderData = CardsToRender[NextCardIndex];

if(CardRenderData.NaniteInstanceIds.Num() > 0)

{

for(uint32 InstanceID : CardRenderData.NaniteInstanceIds)

{

NaniteInstanceDraws.Add(Nanite::FInstanceDraw { InstanceID, (uint32)NaniteViews.Num() });

}

Nanite::FPackedViewParams Params;

Params.ViewMatrices = CardRenderData.ViewMatrices;

Params.PrevViewMatrices = CardRenderData.ViewMatrices;

Params.ViewRect = CardRenderData.AtlasAllocation;

Params.RasterContextSize = DepthStencilAtlasSize;

Params.LODScaleFactor = CardRenderData.NaniteLODScaleFactor;

NaniteViews.Add(Nanite::CreatePackedView(Params));

}

NextCardIndex++;

}

// Rasterize the cards.

if (NaniteInstanceDraws.Num() > 0)

{

RDG_EVENT_SCOPE(GraphBuilder, "Nanite::RasterizeLumenCards");

Nanite::FRasterState RasterState;

Nanite::CullRasterize(

GraphBuilder,

*Scene,

NaniteViews,

CullingContext,

RasterContext,

RasterState,

&NaniteInstanceDraws

);

}

}

}

else // Single-view rendering

{

RDG_EVENT_SCOPE(GraphBuilder, "RenderLumenCardsWithNanite");

// Single-view rendering is brute force: iterate linearly over all cards to render, build one view per card, and issue one draw per card.

for(FCardRenderData& CardRenderData : CardsToRender)

{

if(CardRenderData.NaniteInstanceIds.Num() > 0)

{

TArray<Nanite::FInstanceDraw, SceneRenderingAllocator> NaniteInstanceDraws;

for( uint32 InstanceID : CardRenderData.NaniteInstanceIds )

{

NaniteInstanceDraws.Add( Nanite::FInstanceDraw { InstanceID, 0u } );

}

CardRenderData.PatchView(GraphBuilder.RHICmdList, Scene, SharedView);

Nanite::FPackedView PackedView = Nanite::CreatePackedViewFromViewInfo(*SharedView, DepthStencilAtlasSize, 0);

Nanite::CullRasterize(

GraphBuilder,

*Scene,

{ PackedView },

CullingContext,

RasterContext,

Nanite::FRasterState(),

&NaniteInstanceDraws

);

}

}

}

extern float GLumenDistantSceneMinInstanceBoundsRadius;

// Render the whole scene for distant cards.

for (FCardRenderData& CardRenderData : CardsToRender)

{

// bDistantScene marks whether this is a distant card.

if (CardRenderData.bDistantScene)

{

Nanite::FRasterState RasterState;

RasterState.bNearClip = false;

CardRenderData.PatchView(GraphBuilder.RHICmdList, Scene, SharedView);

Nanite::FPackedView PackedView = Nanite::CreatePackedViewFromViewInfo(

*SharedView,

DepthStencilAtlasSize,

/*Flags*/ 0,

/*StreamingPriorityCategory*/ 0,

GLumenDistantSceneMinInstanceBoundsRadius,

Lumen::GetDistanceSceneNaniteLODScaleFactor());

Nanite::CullRasterize(

GraphBuilder,

*Scene,

{ PackedView },

CullingContext,

RasterContext,

RasterState);

}

}

// Lumen mesh capture pass.

Nanite::DrawLumenMeshCapturePass(

GraphBuilder,

*Scene,

SharedView,

CardsToRender,

CullingContext,

RasterContext,

PassUniformParameters,

RectMinMaxBufferSRV,

NumRects,

LumenSceneData.MaxAtlasSize,

AlbedoAtlasTexture,

NormalAtlasTexture,

EmissiveAtlasTexture,

DepthStencilAtlasTexture

);

}

ConvertToExternalTexture(GraphBuilder, AlbedoAtlasTexture, LumenSceneData.AlbedoAtlas);

ConvertToExternalTexture(GraphBuilder, NormalAtlasTexture, LumenSceneData.NormalAtlas);

ConvertToExternalTexture(GraphBuilder, EmissiveAtlasTexture, LumenSceneData.EmissiveAtlas);

}

// Upload card data.

{

QUICK_SCOPE_CYCLE_COUNTER(UploadCardIndexBuffers);

// Upload the index buffer.

{

FRDGBufferRef CardIndexBuffer = GraphBuilder.CreateBuffer(

FRDGBufferDesc::CreateUploadDesc(sizeof(uint32), FMath::Max(LumenCardRenderer.CardIdsToRender.Num(), 1)),

TEXT("Lumen.CardsToRenderIndexBuffer"));

FLumenCardIdUpload* PassParameters = GraphBuilder.AllocParameters<FLumenCardIdUpload>();

PassParameters->CardIds = CardIndexBuffer;

const uint32 CardIdBytes = LumenCardRenderer.CardIdsToRender.GetTypeSize() * LumenCardRenderer.CardIdsToRender.Num();

const void* CardIdPtr = LumenCardRenderer.CardIdsToRender.GetData();

GraphBuilder.AddPass(

RDG_EVENT_NAME("Upload CardsToRenderIndexBuffer NumIndices=%d", LumenCardRenderer.CardIdsToRender.Num()),

PassParameters,

ERDGPassFlags::Copy,

[PassParameters, CardIdBytes, CardIdPtr](FRHICommandListImmediate& RHICmdList)

{

if (CardIdBytes > 0)

{

void* DestCardIdPtr = RHILockBuffer(PassParameters->CardIds->GetRHI(), 0, CardIdBytes, RLM_WriteOnly);

FPlatformMemory::Memcpy(DestCardIdPtr, CardIdPtr, CardIdBytes);

RHIUnlockBuffer(PassParameters->CardIds->GetRHI());

}

});

ConvertToExternalBuffer(GraphBuilder, CardIndexBuffer, LumenCardRenderer.CardsToRenderIndexBuffer);

}

// Upload the hash map buffer.

{

const uint32 NumHashMapUInt32 = FLumenCardRenderer::NumCardsToRenderHashMapBucketUInt32;

const uint32 NumHashMapBytes = 4 * NumHashMapUInt32;

const uint32 NumHashMapBuckets = 32 * NumHashMapUInt32;

FRDGBufferRef CardHashMapBuffer = GraphBuilder.CreateBuffer(

FRDGBufferDesc::CreateUploadDesc(sizeof(uint32), NumHashMapUInt32),

TEXT("Lumen.CardsToRenderHashMapBuffer"));

LumenCardRenderer.CardsToRenderHashMap.Init(0, NumHashMapBuckets);

for (int32 CardIndex : LumenCardRenderer.CardIdsToRender)

{

LumenCardRenderer.CardsToRenderHashMap[CardIndex % NumHashMapBuckets] = 1;

}

FLumenCardIdUpload* PassParameters = GraphBuilder.AllocParameters<FLumenCardIdUpload>();

PassParameters->CardIds = CardHashMapBuffer;

const void* HashMapDataPtr = LumenCardRenderer.CardsToRenderHashMap.GetData();

GraphBuilder.AddPass(

RDG_EVENT_NAME("Upload CardsToRenderHashMapBuffer NumUInt32=%d", NumHashMapUInt32),

PassParameters,

ERDGPassFlags::Copy,

[PassParameters, NumHashMapBytes, HashMapDataPtr](FRHICommandListImmediate& RHICmdList)

{

if (NumHashMapBytes > 0)

{

void* DestCardIdPtr = RHILockBuffer(PassParameters->CardIds->GetRHI(), 0, NumHashMapBytes, RLM_WriteOnly);

FPlatformMemory::Memcpy(DestCardIdPtr, HashMapDataPtr, NumHashMapBytes);

RHIUnlockBuffer(PassParameters->CardIds->GetRHI());

}

});

ConvertToExternalBuffer(GraphBuilder, CardHashMapBuffer, LumenCardRenderer.CardsToRenderHashMapBuffer);

}

// Upload the visible card index buffer.

{

FRDGBufferRef VisibleCardsIndexBuffer = GraphBuilder.CreateBuffer(

FRDGBufferDesc::CreateUploadDesc(sizeof(uint32), FMath::Max(LumenSceneData.VisibleCardsIndices.Num(), 1)),

TEXT("Lumen.VisibleCardsIndexBuffer"));

FLumenCardIdUpload* PassParameters = GraphBuilder.AllocParameters<FLumenCardIdUpload>();

PassParameters->CardIds = VisibleCardsIndexBuffer;

const uint32 CardIdBytes = sizeof(uint32) * LumenSceneData.VisibleCardsIndices.Num();

const void* CardIdPtr = LumenSceneData.VisibleCardsIndices.GetData();

GraphBuilder.AddPass(

RDG_EVENT_NAME("Upload VisibleCardIndices NumIndices=%d", LumenSceneData.VisibleCardsIndices.Num()),

PassParameters,

ERDGPassFlags::Copy,

[PassParameters, CardIdBytes, CardIdPtr](FRHICommandListImmediate& RHICmdList)

{

if (CardIdBytes > 0)

{

void* DestCardIdPtr = RHILockBuffer(PassParameters->CardIds->GetRHI(), 0, CardIdBytes, RLM_WriteOnly);

FPlatformMemory::Memcpy(DestCardIdPtr, CardIdPtr, CardIdBytes);

RHIUnlockBuffer(PassParameters->CardIds->GetRHI());

}

});

ConvertToExternalBuffer(GraphBuilder, VisibleCardsIndexBuffer, LumenSceneData.VisibleCardsIndexBuffer);

}

}

// Prefilter the Lumen scene depth.

if (LumenCardRenderer.CardIdsToRender.Num() > 0)

{

TRDGUniformBufferRef<FLumenCardScene> LumenCardSceneUniformBuffer;

{

FLumenCardScene* LumenCardSceneParameters = GraphBuilder.AllocParameters<FLumenCardScene>();

SetupLumenCardSceneParameters(GraphBuilder, Scene, *LumenCardSceneParameters);

LumenCardSceneUniformBuffer = GraphBuilder.CreateUniformBuffer(LumenCardSceneParameters);

}

PrefilterLumenSceneDepth(GraphBuilder, LumenCardSceneUniformBuffer, DepthStencilAtlasTexture, LumenCardRenderer.CardIdsToRender, View);

}

}

FLumenSceneData& LumenSceneData = *Scene->LumenSceneData;

LumenSceneData.CardIndicesToUpdateInBuffer.Reset();

LumenSceneData.MeshCardsIndicesToUpdateInBuffer.Reset();

LumenSceneData.DFObjectIndicesToUpdateInBuffer.Reset();

}

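Updating the Lumen scene mainly involves these steps: culling cards, uploading card IDs, caching the view and scene textures, capturing mesh cards, rasterizing the Lumen scene with cards treated as views, rendering distant cards, drawing the mesh capture pass, and uploading card data and visibility data.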
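The multi-view branch of the listing groups cards into batches so that one Nanite cull-and-rasterize pass never receives more than MAX_VIEWS_PER_CULL_RASTERIZE_PASS views. The sketch below reproduces only that two-level loop on plain integers. `BatchCardsIntoPasses` is a hypothetical name, the input is assumed to be the number of Nanite instances per card, and the output lists the card indices that would share one pass.

```cpp
#include <vector>

// Groups card indices into passes of at most maxViewsPerPass views.
// Cards without Nanite instances are skipped and do not consume a view slot,
// as in the inner while loop of the listing.
std::vector<std::vector<int>> BatchCardsIntoPasses(
    const std::vector<int>& naniteInstanceCounts, int maxViewsPerPass) {
    std::vector<std::vector<int>> passes;
    if (maxViewsPerPass <= 0) {
        return passes;  // guard added for the sketch; avoids an endless loop
    }
    const int numCards = static_cast<int>(naniteInstanceCounts.size());
    int nextCard = 0;
    while (nextCard < numCards) {
        std::vector<int> views;  // one view per card in this pass
        while (nextCard < numCards &&
               static_cast<int>(views.size()) < maxViewsPerPass) {
            if (naniteInstanceCounts[nextCard] > 0) {
                views.push_back(nextCard);
            }
            ++nextCard;
        }
        // The listing only issues CullRasterize when there is something to draw.
        if (!views.empty()) {
            passes.push_back(views);
        }
    }
    return passes;
}
```

The single-view branch corresponds to the degenerate case of one card per pass, which is why the listing describes it as the brute-force path.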

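Because there are too many steps to cover each one in detail, this section concentrates on the stages involved in capturing and rasterizing mesh cards.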

6.5.5.2 CardsToRender

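To explain the capture and rasterization stages, we first need to understand how LumenCardRenderer.CardsToRender is filled. The following sorts out which cards in the Lumen scene need to be captured and rendered; this is handled by BeginUpdateLumenSceneTasks in the InitView stage: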

// Engine\Source\Runtime\Renderer\Private\Lumen\LumenSceneRendering.cpp

void FDeferredShadingSceneRenderer::BeginUpdateLumenSceneTasks(FRDGBuilder& GraphBuilder)

{

LLM_SCOPE_BYTAG(Lumen);

const FViewInfo& MainView = Views[0];

const bool bAnyLumenActive = ShouldRenderLumenDiffuseGI(Scene, MainView, true)

|| ShouldRenderLumenReflections(MainView, true);

if (bAnyLumenActive

&& !ViewFamily.EngineShowFlags.HitProxies)

{

SCOPED_NAMED_EVENT(FDeferredShadingSceneRenderer_BeginUpdateLumenSceneTasks, FColor::Emerald);

QUICK_SCOPE_CYCLE_COUNTER(BeginUpdateLumenSceneTasks);

const double StartTime = FPlatformTime::Seconds();

FLumenSceneData& LumenSceneData = *Scene->LumenSceneData;

// Get the list of cards to render and reset it.

TArray<FCardRenderData, SceneRenderingAllocator>& CardsToRender = LumenCardRenderer.CardsToRender;

LumenCardRenderer.Reset();

const int32 LocalLumenSceneGeneration = GLumenSceneGeneration;

const bool bRecaptureLumenSceneOnce = LumenSceneData.Generation != LocalLumenSceneGeneration;

LumenSceneData.Generation = LocalLumenSceneGeneration;

const bool bReallocateAtlas = LumenSceneData.MaxAtlasSize != GetDesiredAtlasSize()

|| (LumenSceneData.RadiosityAtlas && LumenSceneData.RadiosityAtlas->GetDesc().Extent != GetRadiosityAtlasSize(LumenSceneData.MaxAtlasSize))

|| GLumenSceneReset;

if (GLumenSceneReset != 2)

{

GLumenSceneReset = 0;

}

LumenSceneData.NumMeshCardsToAddToSurfaceCache = 0;

// Update dirty cards.

UpdateDirtyCards(Scene, bReallocateAtlas, bRecaptureLumenSceneOnce);

// Update the primitive information of the Lumen scene.

UpdateLumenScenePrimitives(Scene);

// Update the distant scene.

UpdateDistantScene(Scene, Views[0]);

const FVector LumenSceneCameraOrigin = GetLumenSceneViewOrigin(MainView, GetNumLumenVoxelClipmaps() - 1);

const float MaxCardUpdateDistanceFromCamera = ComputeMaxCardUpdateDistanceFromCamera();

// Reallocate the card atlas.

if (bReallocateAtlas)

{

LumenSceneData.MaxAtlasSize = GetDesiredAtlasSize();

// Everything should have been freed before the atlas is recreated.

ensure(LumenSceneData.NumCardTexels == 0);

LumenSceneData.AtlasAllocator = FBinnedTextureLayout(LumenSceneData.MaxAtlasSize, GLumenSceneCardAtlasAllocatorBinSize);

}

// Per-frame budget for card captures and card texels; the budget is unlimited when GLumenSceneRecaptureLumenSceneEveryFrame (console variable r.LumenScene.RecaptureEveryFrame) is non-zero.

const int32 CardCapturesPerFrame = GLumenSceneRecaptureLumenSceneEveryFrame != 0 ? INT_MAX : GetMaxLumenSceneCardCapturesPerFrame();

const int32 CardTexelsToCapturePerFrame = GLumenSceneRecaptureLumenSceneEveryFrame != 0 ? INT_MAX : GetLumenSceneCardResToCapturePerFrame() * GetLumenSceneCardResToCapturePerFrame();

if (CardCapturesPerFrame > 0 && CardTexelsToCapturePerFrame > 0)

{

QUICK_SCOPE_CYCLE_COUNTER(FillCardsToRender);

TArray<FLumenSurfaceCacheUpdatePacket, SceneRenderingAllocator> Packets;

TArray<FMeshCardsAdd, SceneRenderingAllocator> MeshCardsAddsSortedByPriority;

// Prepare the surface cache update.

{

TRACE_CPUPROFILER_EVENT_SCOPE(PrepareSurfaceCacheUpdate);

const int32 NumPrimitivesPerPacket = FMath::Max(GLumenScenePrimitivesPerPacket, 1);

const int32 NumPackets = FMath::DivideAndRoundUp(LumenSceneData.LumenPrimitives.Num(), NumPrimitivesPerPacket);

CardsToRender.Reset(GetMaxLumenSceneCardCapturesPerFrame());

Packets.Reserve(NumPackets);

for (int32 PacketIndex = 0; PacketIndex < NumPackets; ++PacketIndex)

{

Packets.Emplace(

LumenSceneData.LumenPrimitives,

LumenSceneData.MeshCards,

LumenSceneData.Cards,

LumenSceneCameraOrigin,

MaxCardUpdateDistanceFromCamera,

PacketIndex * NumPrimitivesPerPacket,

NumPrimitivesPerPacket);

}

}

// Run the surface cache update preparation tasks.

{

TRACE_CPUPROFILER_EVENT_SCOPE(RunPrepareSurfaceCacheUpdate);

const bool bExecuteInParallel = FApp::ShouldUseThreadingForPerformance();

ParallelFor(Packets.Num(),

[&Packets](int32 Index)

{

Packets[Index].AnyThreadTask();

},

!bExecuteInParallel

);

}

// Gather the results of the tasks above.

{

TRACE_CPUPROFILER_EVENT_SCOPE(PacketResults);

const float CARD_DISTANCE_BUCKET_SIZE = 100.0f;

uint32 NumMeshCardsAddsPerBucket[MAX_ADD_PRIMITIVE_PRIORITY + 1];

for (int32 BucketIndex = 0; BucketIndex < UE_ARRAY_COUNT(NumMeshCardsAddsPerBucket); ++BucketIndex)

{

NumMeshCardsAddsPerBucket[BucketIndex] = 0;

}

// Count how many cards fall into each bucket

for (int32 PacketIndex = 0; PacketIndex < Packets.Num(); ++PacketIndex)

{

const FLumenSurfaceCacheUpdatePacket& Packet = Packets[PacketIndex];

LumenSceneData.NumMeshCardsToAddToSurfaceCache += Packet.MeshCardsAdds.Num();

for (int32 CardIndex = 0; CardIndex < Packet.MeshCardsAdds.Num(); ++CardIndex)

{

const FMeshCardsAdd& MeshCardsAdd = Packet.MeshCardsAdds[CardIndex];

++NumMeshCardsAddsPerBucket[MeshCardsAdd.Priority];

}

}

int32 NumMeshCardsInBucketsUpToMaxBucket = 0;

int32 MaxBucketIndexToAdd = 0;

// Select the first N buckets for allocation.

for (int32 BucketIndex = 0; BucketIndex < UE_ARRAY_COUNT(NumMeshCardsAddsPerBucket); ++BucketIndex)

{

NumMeshCardsInBucketsUpToMaxBucket += NumMeshCardsAddsPerBucket[BucketIndex];

MaxBucketIndexToAdd = BucketIndex;

if (NumMeshCardsInBucketsUpToMaxBucket > CardCapturesPerFrame)

{

break;

}

}

MeshCardsAddsSortedByPriority.Reserve(GetMaxLumenSceneCardCapturesPerFrame());

// Copy the first N buckets into MeshCardsAddsSortedByPriority.

for (int32 PacketIndex = 0; PacketIndex < Packets.Num(); ++PacketIndex)

{

const FLumenSurfaceCacheUpdatePacket& Packet = Packets[PacketIndex];

for (int32 CardIndex = 0; CardIndex < Packet.MeshCardsAdds.Num() && MeshCardsAddsSortedByPriority.Num() < CardCapturesPerFrame; ++CardIndex)

{

const FMeshCardsAdd& MeshCardsAdd = Packet.MeshCardsAdds[CardIndex];

if (MeshCardsAdd.Priority <= MaxBucketIndexToAdd)

{

MeshCardsAddsSortedByPriority.Add(MeshCardsAdd);

}

}

}

// Remove all mesh cards that are no longer visible.

for (int32 PacketIndex = 0; PacketIndex < Packets.Num(); ++PacketIndex)

{

const FLumenSurfaceCacheUpdatePacket& Packet = Packets[PacketIndex];

for (int32 MeshCardsToRemoveIndex = 0; MeshCardsToRemoveIndex < Packet.MeshCardsRemoves.Num(); ++MeshCardsToRemoveIndex)

{

const FMeshCardsRemove& MeshCardsRemove = Packet.MeshCardsRemoves[MeshCardsToRemoveIndex];

FLumenPrimitive& LumenPrimitive = LumenSceneData.LumenPrimitives[MeshCardsRemove.LumenPrimitiveIndex];

FLumenPrimitiveInstance& LumenPrimitiveInstance = LumenPrimitive.Instances[MeshCardsRemove.LumenInstanceIndex];

LumenSceneData.RemoveMeshCards(LumenPrimitive, LumenPrimitiveInstance);

}

}

}

// Allocate the distant scene.

extern int32 GLumenUpdateDistantSceneCaptures;

if (GLumenUpdateDistantSceneCaptures)

{

for (int32 DistantCardIndex : LumenSceneData.DistantCardIndices)

{

FLumenCard& DistantCard = LumenSceneData.Cards[DistantCardIndex];

extern int32 GLumenDistantSceneCardResolution;

DistantCard.DesiredResolution = FIntPoint(GLumenDistantSceneCardResolution, GLumenDistantSceneCardResolution);

if (!DistantCard.bVisible)

{

LumenSceneData.AddCardToVisibleCardList(DistantCardIndex);

DistantCard.bVisible = true;

}

DistantCard.RemoveFromAtlas(LumenSceneData);

LumenSceneData.CardIndicesToUpdateInBuffer.Add(DistantCardIndex);

// Add to the CardsToRender list.

CardsToRender.Add(FCardRenderData(

DistantCard,

nullptr,

-1,

FeatureLevel,

DistantCardIndex));

}

}

// Allocate new cards.

for (int32 SortedCardIndex = 0; SortedCardIndex < MeshCardsAddsSortedByPriority.Num(); ++SortedCardIndex)

{

const FMeshCardsAdd& MeshCardsAdd = MeshCardsAddsSortedByPriority[SortedCardIndex];

FLumenPrimitive& LumenPrimitive = LumenSceneData.LumenPrimitives[MeshCardsAdd.LumenPrimitiveIndex];

FLumenPrimitiveInstance& LumenPrimitiveInstance = LumenPrimitive.Instances[MeshCardsAdd.LumenInstanceIndex];

LumenSceneData.AddMeshCards(MeshCardsAdd.LumenPrimitiveIndex, MeshCardsAdd.LumenInstanceIndex);

if (LumenPrimitiveInstance.MeshCardsIndex >= 0)

{

// Fetch the mesh cards of this primitive instance.

const FLumenMeshCards& MeshCards = LumenSceneData.MeshCards[LumenPrimitiveInstance.MeshCardsIndex];

// Walk every card of these mesh cards and add the valid ones to the CardsToRender list.

for (uint32 CardIndex = MeshCards.FirstCardIndex; CardIndex < MeshCards.FirstCardIndex + MeshCards.NumCards; ++CardIndex)

{

FLumenCard& LumenCard = LumenSceneData.Cards[CardIndex];

// Compute the card's allocation.

FCardAllocationOutput CardAllocation;

ComputeCardAllocation(LumenCard, LumenSceneCameraOrigin, MaxCardUpdateDistanceFromCamera, CardAllocation);

LumenCard.DesiredResolution = CardAllocation.TextureAllocationSize;

if (LumenCard.bVisible != CardAllocation.bVisible)

{

LumenCard.bVisible = CardAllocation.bVisible;

if (LumenCard.bVisible)

{

LumenSceneData.AddCardToVisibleCardList(CardIndex);

}

else

{

LumenCard.RemoveFromAtlas(LumenSceneData);

LumenSceneData.RemoveCardFromVisibleCardList(CardIndex);

}

LumenSceneData.CardIndicesToUpdateInBuffer.Add(CardIndex);

}

// Add to CardsToRender only if the card is visible and its allocated resolution differs from the desired one.

if (LumenCard.bVisible && LumenCard.AtlasAllocation.Width() != LumenCard.DesiredResolution.X && LumenCard.AtlasAllocation.Height() != LumenCard.DesiredResolution.Y)

{

LumenCard.RemoveFromAtlas(LumenSceneData);

LumenSceneData.CardIndicesToUpdateInBuffer.Add(CardIndex);

// Add to the CardsToRender list.

CardsToRender.Add(FCardRenderData(

LumenCard,

LumenPrimitive.Primitive,

LumenPrimitive.bMergedInstances ? -1 : MeshCardsAdd.LumenInstanceIndex,

FeatureLevel,

CardIndex));

LumenCardRenderer.NumCardTexelsToCapture += LumenCard.AtlasAllocation.Area();

}

} // for

// Stop the loop once the per-frame card count or card texel budget is reached.

if (CardsToRender.Num() >= CardCapturesPerFrame

|| LumenCardRenderer.NumCardTexelsToCapture >= CardTexelsToCapturePerFrame)

{

break;

}

}

}

}

// Allocate and update the card atlases.

AllocateOptionalCardAtlases(GraphBuilder, LumenSceneData, MainView, bReallocateAtlas);

UpdateLumenCardAtlasAllocation(GraphBuilder, MainView, bReallocateAtlas, bRecaptureLumenSceneOnce);

// Process the cards queued for rendering.

if (CardsToRender.Num() > 0)

{

// Set up the mesh pass.

{

QUICK_SCOPE_CYCLE_COUNTER(MeshPassSetup);

// Make sure all mesh rendering data is ready before rendering.

{

QUICK_SCOPE_CYCLE_COUNTER(PrepareStaticMeshData);

// Set of unique primitives requiring static mesh update

TSet<FPrimitiveSceneInfo*> PrimitivesToUpdateStaticMeshes;

for (FCardRenderData& CardRenderData : CardsToRender)

{

FPrimitiveSceneInfo* PrimitiveSceneInfo = CardRenderData.PrimitiveSceneInfo;

if (PrimitiveSceneInfo && PrimitiveSceneInfo->Proxy->AffectsDynamicIndirectLighting())

{

if (PrimitiveSceneInfo->NeedsUniformBufferUpdate())

{

PrimitiveSceneInfo->UpdateUniformBuffer(GraphBuilder.RHICmdList);

}

if (PrimitiveSceneInfo->NeedsUpdateStaticMeshes())

{

PrimitivesToUpdateStaticMeshes.Add(PrimitiveSceneInfo);

}

}

}

if (PrimitivesToUpdateStaticMeshes.Num() > 0)

{

TArray<FPrimitiveSceneInfo*> UpdatedSceneInfos;

UpdatedSceneInfos.Reserve(PrimitivesToUpdateStaticMeshes.Num());

for (FPrimitiveSceneInfo* PrimitiveSceneInfo : PrimitivesToUpdateStaticMeshes)

{

UpdatedSceneInfos.Add(PrimitiveSceneInfo);

}

FPrimitiveSceneInfo::UpdateStaticMeshes(GraphBuilder.RHICmdList, Scene, UpdatedSceneInfos, true);

}

}

// Add the card capture draws.

for (FCardRenderData& CardRenderData : CardsToRender)

{

CardRenderData.StartMeshDrawCommandIndex = LumenCardRenderer.MeshDrawCommands.Num();

CardRenderData.NumMeshDrawCommands = 0;

int32 NumNanitePrimitives = 0;

const FLumenCard& Card = LumenSceneData.Cards[CardRenderData.CardIndex];

checkSlow(Card.bVisible && Card.bAllocated);

// Create or process the FVisibleMeshDrawCommand entries for this card.

AddCardCaptureDraws(Scene,

GraphBuilder.RHICmdList,

CardRenderData,

LumenCardRenderer.MeshDrawCommands,

LumenCardRenderer.MeshDrawPrimitiveIds);

CardRenderData.NumMeshDrawCommands = LumenCardRenderer.MeshDrawCommands.Num() - CardRenderData.StartMeshDrawCommandIndex;

}

}

(.....)

}

}

}

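As the code above shows, mesh cards are not recaptured every frame. Unless GLumenSceneRecaptureLumenSceneEveryFrame (console variable r.LumenScene.RecaptureEveryFrame) is enabled to force a recapture, a card is added to the render list only when it is visible and its resolution has changed. There is also a per-frame cap on both the number of cards and the number of card texels, which prevents a single frame from having to update and draw so many cards that it becomes a performance bottleneck.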
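The gating described above can be sketched in isolation. The following is a minimal, self-contained illustration and not engine code: `Card`, `SelectCardsToCapture`, `MaxCardsPerFrame` and `MaxTexelsPerFrame` are hypothetical names that stand in for FLumenCard, the loop over MeshCardsAddsSortedByPriority, and CardCapturesPerFrame / CardTexelsToCapturePerFrame. Two simplifications are assumed: the budget is checked before each card rather than after each group of mesh cards, and the texel count uses the desired resolution.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical stand-in for FLumenCard: only the fields the gating logic needs.
struct Card {
    bool bVisible;
    int AllocatedWidth, AllocatedHeight; // current atlas allocation (0 if none)
    int DesiredWidth, DesiredHeight;     // resolution requested this frame
};

// Returns the indices of the cards to capture this frame.
// Cards are assumed to be pre-sorted by priority (most important first).
std::vector<int> SelectCardsToCapture(const std::vector<Card>& Cards,
                                      int MaxCardsPerFrame,
                                      std::int64_t MaxTexelsPerFrame)
{
    std::vector<int> ToRender;
    std::int64_t TexelsQueued = 0;

    for (int Index = 0; Index < static_cast<int>(Cards.size()); ++Index) {
        // Stop as soon as either per-frame budget has been reached.
        if (static_cast<int>(ToRender.size()) >= MaxCardsPerFrame ||
            TexelsQueued >= MaxTexelsPerFrame) {
            break;
        }

        const Card& C = Cards[Index];

        // Mirrors the condition in the listing: both dimensions must differ.
        const bool bResolutionChanged = C.AllocatedWidth != C.DesiredWidth &&
                                        C.AllocatedHeight != C.DesiredHeight;

        if (C.bVisible && bResolutionChanged) {
            ToRender.push_back(Index);
            TexelsQueued += static_cast<std::int64_t>(C.DesiredWidth) * C.DesiredHeight;
        }
    }
    return ToRender;
}
```

Because the texel budget is only tested before a card is considered, the last accepted card may push the total slightly past the limit. The cap is therefore soft: it bounds the work per frame without rejecting a card that is already being processed.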

6.5.5.3 MeshCardCapture

Having seen how mesh cards are added to the render list, we can now look at how the cards are actually captured:

// Capture the mesh cards.

{

FLumenCardPassParameters* PassParameters = GraphBuilder.AllocParameters<FLumenCardPassParameters>();

// Card view information.

PassParameters->View = Scene->UniformBuffers.LumenCardCaptureViewUniformBuffer;

PassParameters->CardPass = GraphBuilder.CreateUniformBuffer(PassUniformParameters);

// The atlas has three render targets: albedo, normal, and emissive.

PassParameters->RenderTargets[0] = FRenderTargetBinding(AlbedoAtlasTexture, ERenderTargetLoadAction::ELoad);

PassParameters->RenderTargets[1] = FRenderTargetBinding(NormalAtlasTexture, ERenderTargetLoadAction::ELoad);

PassParameters->RenderTargets[2] = FRenderTargetBinding(EmissiveAtlasTexture, ERenderTargetLoadAction::ELoad);

// Depth-stencil target.

PassParameters->RenderTargets.DepthStencil = FDepthStencilBinding(DepthStencilAtlasTexture, ERenderTargetLoadAction::ELoad, FExclusiveDepthStencil::DepthWrite_StencilNop);

InstanceCullingResult.GetDrawParameters(PassParameters->InstanceCullingDrawParams);

// The mesh card capture pass.

GraphBuilder.AddPass(

RDG_EVENT_NAME("MeshCardCapture"),

PassParameters,

ERDGPassFlags::Raster,

[this, Scene = Scene, PrimitiveIdVertexBuffer, SharedView, &CardsToRender, PassParameters](FRHICommandList& RHICmdList)

{

QUICK_SCOPE_CYCLE_COUNTER(MeshPass);

// For every card queued for rendering, prepare its data and submit its draw commands.

for (FCardRenderData& CardRenderData : CardsToRender)

{

if (CardRenderData.NumMeshDrawCommands > 0)

{

FIntRect AtlasRect = CardRenderData.AtlasAllocation;

// Set the viewport to the card's rectangle in the atlas.

RHICmdList.SetViewport(AtlasRect.Min.X, AtlasRect.Min.Y, 0.0f, AtlasRect.Max.X, AtlasRect.Max.Y, 1.0f);

// Patch the view data for this card.

CardRenderData.PatchView(RHICmdList, Scene, SharedView);

Scene->UniformBuffers.LumenCardCaptureViewUniformBuffer.UpdateUniformBufferImmediate(*SharedView->CachedViewUniformShaderParameters);

FGraphicsMinimalPipelineStateSet GraphicsMinimalPipelineStateSet;

#if GPUCULL_TODO

if (Scene->GPUScene.IsEnabled())

{

FRHIBuffer* DrawIndirectArgsBuffer = nullptr;

FRHIBuffer* InstanceIdOffsetBuffer = nullptr;

FInstanceCullingDrawParams& InstanceCullingDrawParams = PassParameters->InstanceCullingDrawParams;

if (InstanceCullingDrawParams.DrawIndirectArgsBuffer != nullptr && InstanceCullingDrawParams.InstanceIdOffsetBuffer != nullptr)

{

DrawIndirectArgsBuffer = InstanceCullingDrawParams.DrawIndirectArgsBuffer->GetRHI();

InstanceIdOffsetBuffer = InstanceCullingDrawParams.InstanceIdOffsetBuffer->GetRHI();

}

// With GPU culling, submit through the GPU-instanced interface.

SubmitGPUInstancedMeshDrawCommandsRange(

LumenCardRenderer.MeshDrawCommands,

GraphicsMinimalPipelineStateSet,

CardRenderData.StartMeshDrawCommandIndex,

CardRenderData.NumMeshDrawCommands,

1,

InstanceIdOffsetBuffer,

DrawIndirectArgsBuffer,

RHICmdList);

}

#endif // GPUCULL_TODO

(......)

}

}

}

);

}
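The listing relies on two pieces of bookkeeping: each card owns a contiguous range of a shared draw-command array (StartMeshDrawCommandIndex and NumMeshDrawCommands), and the pass renders each card into its own atlas rectangle by setting the viewport. A minimal sketch of that pattern follows. It is an illustration only: `IntRect`, `CardRange`, `Submission`, `AppendCard` and `BuildSubmissions` are hypothetical names, and plain integers stand in for mesh draw commands.

```cpp
#include <vector>

// Hypothetical stand-ins; none of these are engine types.
struct IntRect { int MinX, MinY, MaxX, MaxY; };

struct CardRange {
    IntRect AtlasRect;    // where this card lives in the atlas
    int StartCommand = 0; // first index into the shared command array
    int NumCommands = 0;  // how many commands belong to this card
};

struct Submission {
    IntRect Viewport;
    int FirstCommand;
    int NumCommands;
};

// Appends one card's commands to the shared array and records its range,
// mirroring the Start/Num bookkeeping done before the pass is added.
CardRange AppendCard(std::vector<int>& SharedCommands,
                     const IntRect& AtlasRect,
                     const std::vector<int>& CardCommands)
{
    CardRange Range;
    Range.AtlasRect = AtlasRect;
    Range.StartCommand = static_cast<int>(SharedCommands.size());
    SharedCommands.insert(SharedCommands.end(), CardCommands.begin(), CardCommands.end());
    Range.NumCommands = static_cast<int>(SharedCommands.size()) - Range.StartCommand;
    return Range;
}

// Walks the cards the way the pass lambda does: cards without commands are
// skipped, the others use their atlas rectangle as the viewport for their range.
std::vector<Submission> BuildSubmissions(const std::vector<CardRange>& Cards)
{
    std::vector<Submission> Out;
    for (const CardRange& Card : Cards) {
        if (Card.NumCommands > 0) {
            Out.push_back({Card.AtlasRect, Card.StartCommand, Card.NumCommands});
        }
    }
    return Out;
}
```

Keeping all commands in one array and handing out index ranges means the pass can submit a card's draws with a single range call, which is the role SubmitGPUInstancedMeshDrawCommandsRange plays in the listing.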