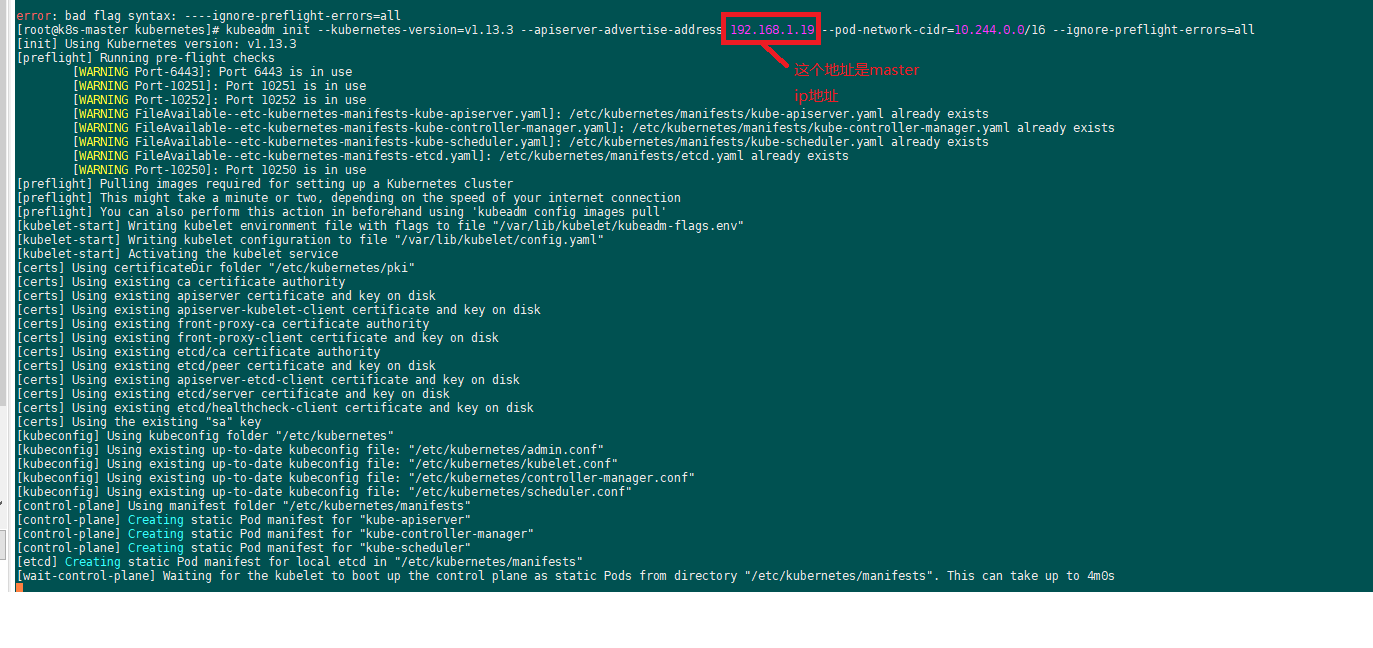

k8s initialization: IP resolution

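Labeling nodes:

1. Add a node label. Note: a key ending in `=` adds the label.

2. Remove a node label. Note: a key ending in `-` removes the label.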

Example:

    [root@k8s-master ~]# kubectl get nodes
    NAME         STATUS   ROLES    AGE     VERSION
    k8s-master   Ready    master   4m39s   v1.13.3
    k8s-node01   Ready    <none>   108s    v1.13.3
    k8s-node02   Ready    <none>   94s     v1.13.3
    [root@k8s-master ~]# kubectl label nodes k8s-node01 node-role.kubernetes.io/node01=
    node/k8s-node01 labeled
    [root@k8s-master ~]# kubectl label nodes k8s-node02 node-role.kubernetes.io/node02=
    node/k8s-node02 labeled
    [root@k8s-master ~]# kubectl get nodes
    NAME         STATUS   ROLES    AGE     VERSION
    k8s-master   Ready    master   7m      v1.13.3
    k8s-node01   Ready    node01   4m9s    v1.13.3
    k8s-node02   Ready    node02   3m55s   v1.13.3
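The transcript only shows adding a role label; removing it is the mirror image, with `-` appended to the key instead of `=`. A minimal sketch as two shell helpers; the function names `add_node_role` and `remove_node_role` are hypothetical, and `--overwrite` (a real `kubectl label` flag) is added so that re-running the add does not fail when the label already exists.

```bash
#!/usr/bin/env bash
# Hypothetical helpers wrapping `kubectl label` for node-role labels.
# The ROLES column of `kubectl get nodes` is derived from labels of the
# form node-role.kubernetes.io/<role>.

# add_node_role NODE ROLE: "key=" sets the label with an empty value.
add_node_role() {
    kubectl label nodes "$1" "node-role.kubernetes.io/$2=" --overwrite
}

# remove_node_role NODE ROLE: "key-" deletes the label.
remove_node_role() {
    kubectl label nodes "$1" "node-role.kubernetes.io/$2-"
}
```

Usage: `add_node_role k8s-node01 node01`, then `remove_node_role k8s-node01 node01` to return the ROLES column to `<none>`.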

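If the node's IP address has changed, run `kubeadm reset` and then initialize again. Here the old API server certificate was valid for 10.96.0.1 and 10.0.220.15 but not for the new address 192.168.1.19, so the new `kubeadm init` passes `--apiserver-advertise-address=192.168.1.19` and `--apiserver-cert-extra-sans=192.168.1.19`: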

    [root@k8s-master ~]# kubeadm reset
    [reset] WARNING: changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.
    [reset] are you sure you want to proceed? [y/N]: y
    [preflight] running pre-flight checks
    [reset] Reading configuration from the cluster...
    [reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
    W0421 00:34:16.309915 10178 reset.go:213] [reset] Unable to fetch the kubeadm-config ConfigMap, using etcd pod spec as fallback: failed to get config map: Get https://192.168.1.19:6443/api/v1/namespaces/kube-system/configmaps/kubeadm-config: x509: certificate is valid for 10.96.0.1, 10.0.220.15, not 192.168.1.19
    [reset] stopping the kubelet service
    [reset] unmounting mounted directories in "/var/lib/kubelet"
    [reset] deleting contents of stateful directories: [/var/lib/etcd /var/lib/kubelet /etc/cni/net.d /var/lib/dockershim /var/run/kubernetes]
    [reset] deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]
    [reset] deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]
    The reset process does not reset or clean up iptables rules or IPVS tables.
    If you wish to reset iptables, you must do so manually.
    For example:
    iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
    If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)
    to reset your system's IPVS tables.
    [root@k8s-master ~]# kubectl -n kube-system get cm kubeadm-config -oyaml
    The connection to the server localhost:8080 was refused - did you specify the right host or port?
    [root@k8s-master ~]# kubeadm init --apiserver-advertise-address=192.168.1.19 --kubernetes-version v1.13.3 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16 --apiserver-cert-extra-sans=192.168.1.19 --ignore-preflight-errors=all
    [init] Using Kubernetes version: v1.13.3
    [preflight] Running pre-flight checks
    [preflight] Pulling images required for setting up a Kubernetes cluster
    [preflight] This might take a minute or two, depending on the speed of your internet connection
    [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Activating the kubelet service
    [certs] Using certificateDir folder "/etc/kubernetes/pki"
    [certs] Generating "etcd/ca" certificate and key
    [certs] Generating "etcd/server" certificate and key
    [certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.1.19 127.0.0.1 ::1]
    [certs] Generating "etcd/healthcheck-client" certificate and key
    [certs] Generating "apiserver-etcd-client" certificate and key
    [certs] Generating "etcd/peer" certificate and key
    [certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.1.19 127.0.0.1 ::1]
    [certs] Generating "front-proxy-ca" certificate and key
    [certs] Generating "front-proxy-client" certificate and key
    [certs] Generating "ca" certificate and key
    [certs] Generating "apiserver-kubelet-client" certificate and key
    [certs] Generating "apiserver" certificate and key
    [certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.1.0.1 192.168.1.19 192.168.1.19]
    [certs] Generating "sa" key and public key
    [kubeconfig] Using kubeconfig folder "/etc/kubernetes"
    [kubeconfig] Writing "admin.conf" kubeconfig file
    [kubeconfig] Writing "kubelet.conf" kubeconfig file
    [kubeconfig] Writing "controller-manager.conf" kubeconfig file
    [kubeconfig] Writing "scheduler.conf" kubeconfig file
    [control-plane] Using manifest folder "/etc/kubernetes/manifests"
    [control-plane] Creating static Pod manifest for "kube-apiserver"
    [control-plane] Creating static Pod manifest for "kube-controller-manager"
    [control-plane] Creating static Pod manifest for "kube-scheduler"
    [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
    [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
    [apiclient] All control plane components are healthy after 18.005146 seconds
    [uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [kubelet] Creating a ConfigMap "kubelet-config-1.13" in namespace kube-system with the configuration for the kubelets in the cluster
    [patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master" as an annotation
    [mark-control-plane] Marking the node k8s-master as control-plane by adding the label "node-role.kubernetes.io/master=''"
    [mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
    [bootstrap-token] Using token: hv6qb2.lknz3y4v17q1izxf
    [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
    [bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    [bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
    [bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace
    [addons] Applied essential addon: CoreDNS
    [addons] Applied essential addon: kube-proxy
    Your Kubernetes master has initialized successfully!
    To start using your cluster, you need to run the following as a regular user:
      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config
    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/
    You can now join any number of machines by running the following on each node
    as root:
      kubeadm join 192.168.1.19:6443 --token hv6qb2.lknz3y4v17q1izxf --discovery-token-ca-cert-hash sha256:c497e2934c5bc59f872df9aef16cb831533898ff4df22a7f6c18cd69cec97bc7
    [root@k8s-master ~]# mkdir -p $HOME/.kube
    [root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    [root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
    [root@k8s-master ~]# kubeadm join 192.168.1.19:6443 --token hv6qb2.lknz3y4v17q1izxf --discovery-token-ca-cert-hash sha256:c497e2934c5bc59f872df9aef16cb831533898ff4df22a7f6c18cd69cec97bc7
    [preflight] Running pre-flight checks
    [preflight] Some fatal errors occurred:
      [ERROR DirAvailable--etc-kubernetes-manifests]: /etc/kubernetes/manifests is not empty
      [ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists
      [ERROR Port-10250]: Port 10250 is in use
      [ERROR FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists
    [preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`
    [root@k8s-master ~]# kubeadm join 192.168.1.19:6443 --token hv6qb2.lknz3y4v17q1izxf --discovery-token-ca-cert-hash sha256:c497e2934c5bc59f872df9aef16cb831533898ff4df22a7f6c18cd69cec97bc7 --ignore-preflight-errors=all
    [preflight] Running pre-flight checks
      [WARNING DirAvailable--etc-kubernetes-manifests]: /etc/kubernetes/manifests is not empty
      [WARNING FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists
      [WARNING Port-10250]: Port 10250 is in use
      [WARNING FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists
    [discovery] Trying to connect to API Server "192.168.1.19:6443"
    [discovery] Created cluster-info discovery client, requesting info from "https://192.168.1.19:6443"
    [discovery] Requesting info from "https://192.168.1.19:6443" again to validate TLS against the pinned public key
    [discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.1.19:6443"
    [discovery] Successfully established connection with API Server "192.168.1.19:6443"
    [join] Reading configuration from the cluster...
    [join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
    [kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.13" ConfigMap in the kube-system namespace
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Activating the kubelet service
    [tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...
    [patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master" as an annotation
    This node has joined the cluster:
    * Certificate signing request was sent to apiserver and a response was received.
    * The Kubelet was informed of the new secure connection details.
    Run 'kubectl get nodes' on the master to see this node join the cluster.

Note: the `kubeadm join` command printed by `kubeadm init` is meant to be run as root on each worker node (k8s-node01, k8s-node02). Running it on the master itself, as above, fails the preflight checks because the master already has these files and its kubelet already listens on port 10250; `--ignore-preflight-errors=all` only suppresses those checks.
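Before or after re-running `kubeadm init`, it helps to check which IPs the API server certificate actually covers, since that is exactly what the x509 error in the reset output complained about. A minimal sketch, assuming the kubeadm default path `/etc/kubernetes/pki/apiserver.crt`; the function name `apiserver_cert_has_ip` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the API server certificate lists IP as a
# Subject Alternative Name (SAN).
# Usage: apiserver_cert_has_ip IP [CERT]
apiserver_cert_has_ip() {
    local ip="$1"
    local cert="${2:-/etc/kubernetes/pki/apiserver.crt}"
    # Print the certificate, split the SAN list on commas, trim whitespace,
    # then look for an exact "IP Address:<ip>" entry (so 192.168.1.1 does
    # not match 192.168.1.19).
    openssl x509 -in "$cert" -noout -text \
        | tr ',' '\n' \
        | sed 's/^[[:space:]]*//; s/[[:space:]]*$//' \
        | grep -Fxq "IP Address:$ip"
}
```

Usage: `apiserver_cert_has_ip 192.168.1.19 && echo "cert OK" || echo "reset and re-init needed"`.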

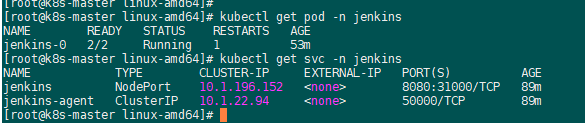

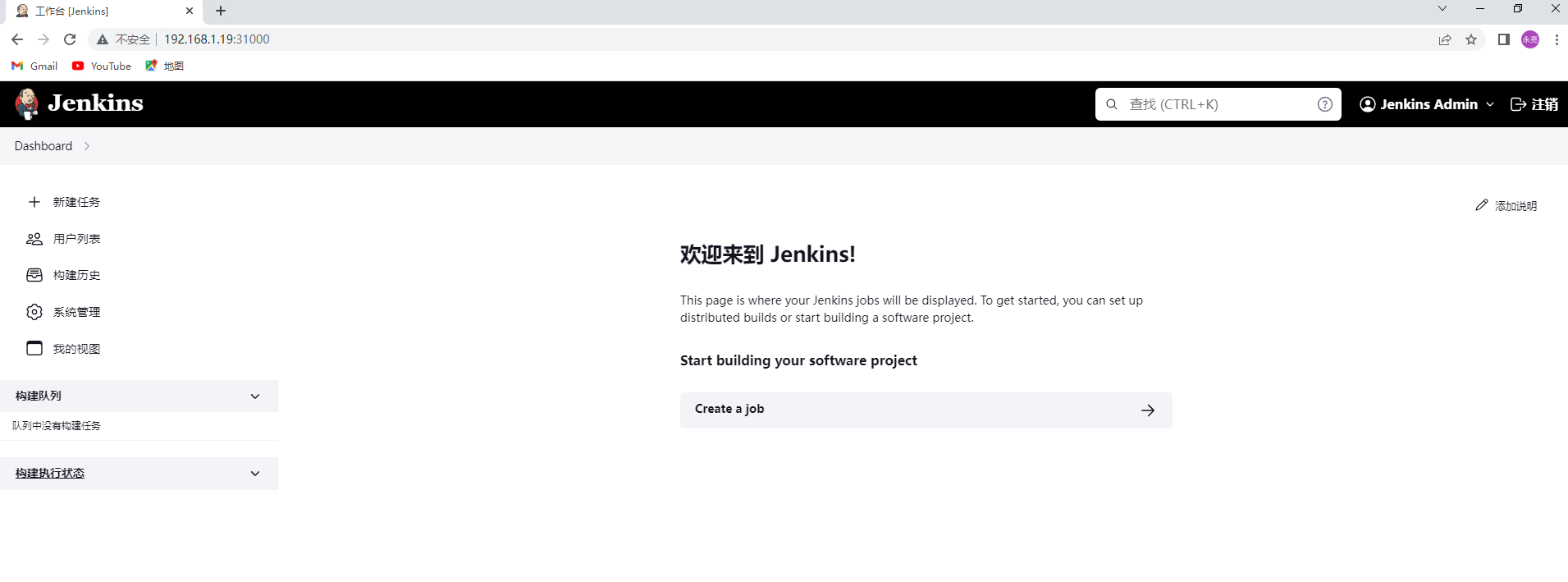

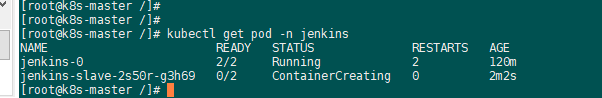

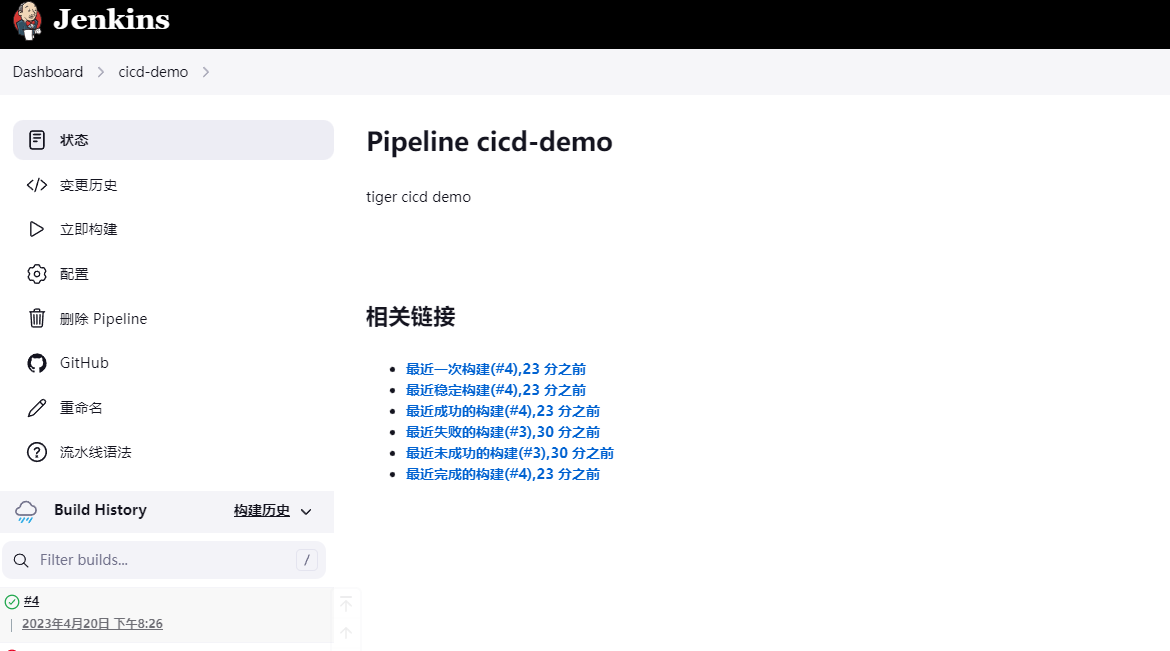

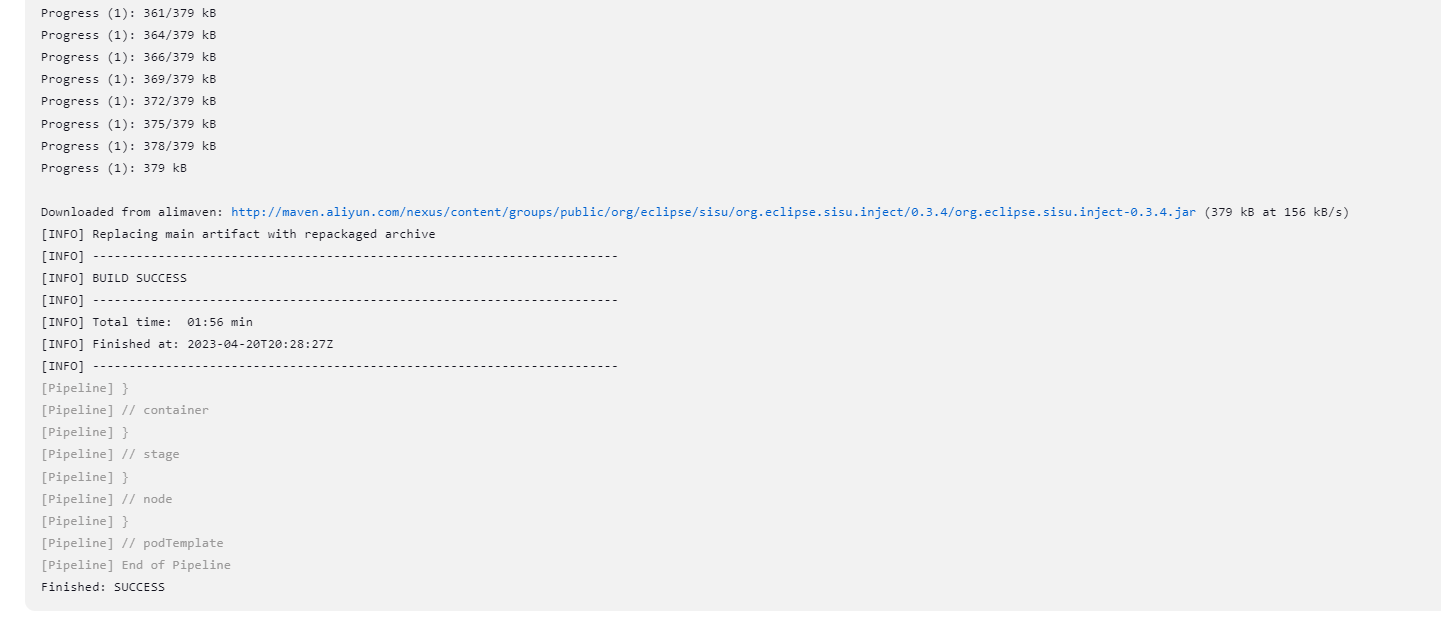

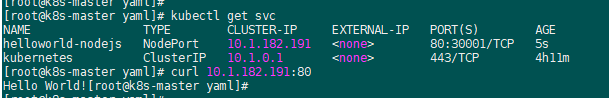

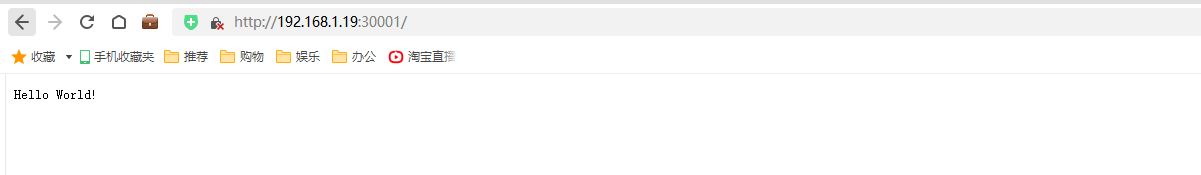

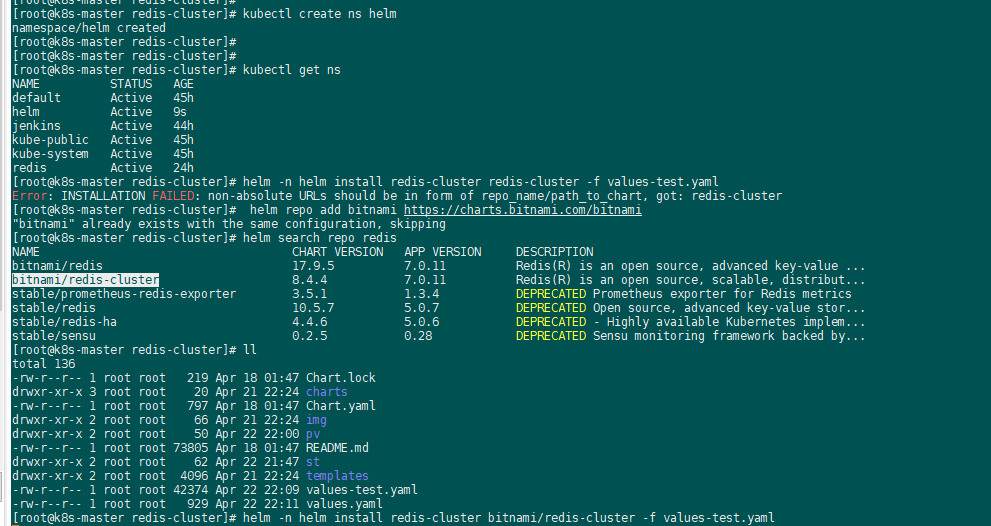

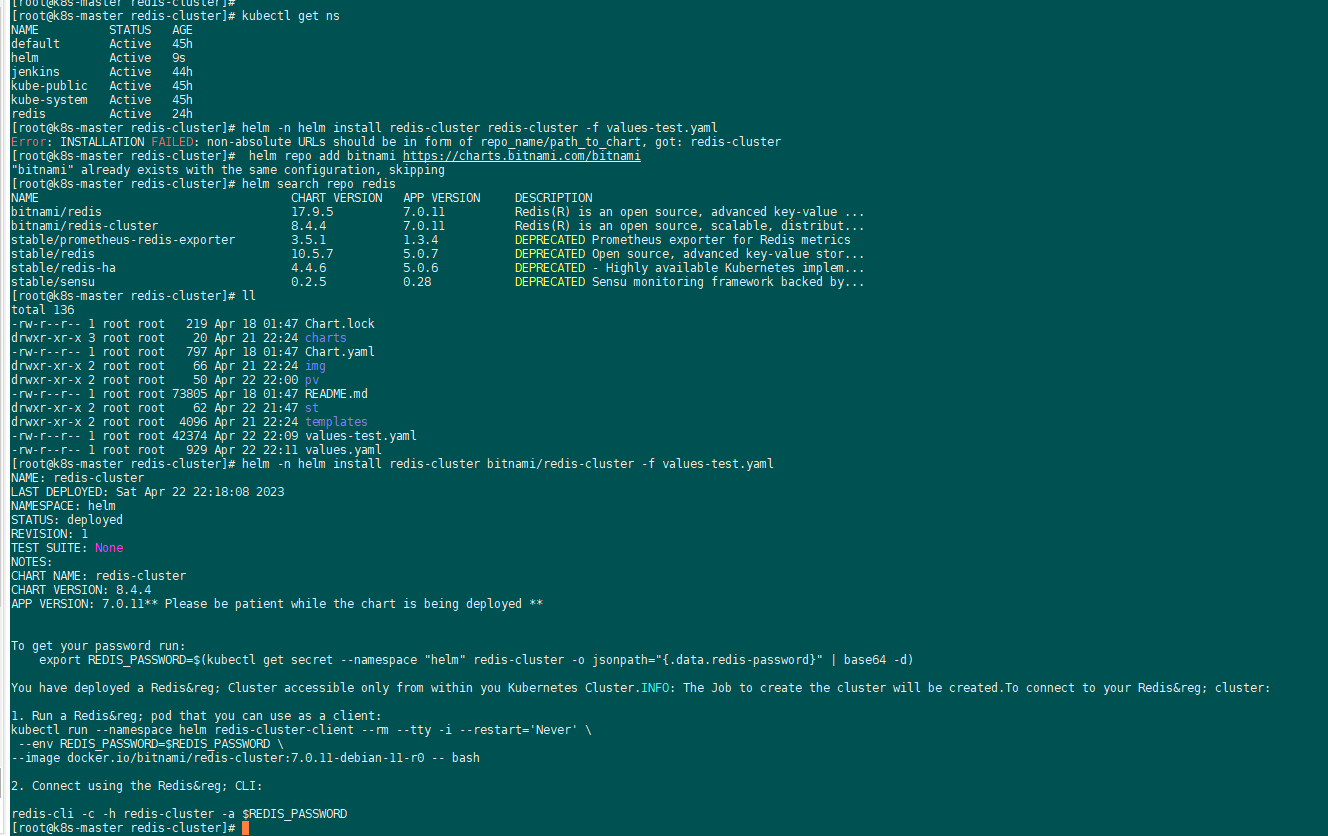

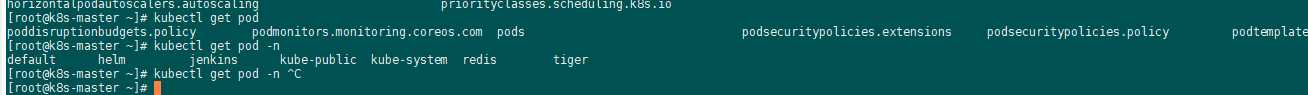

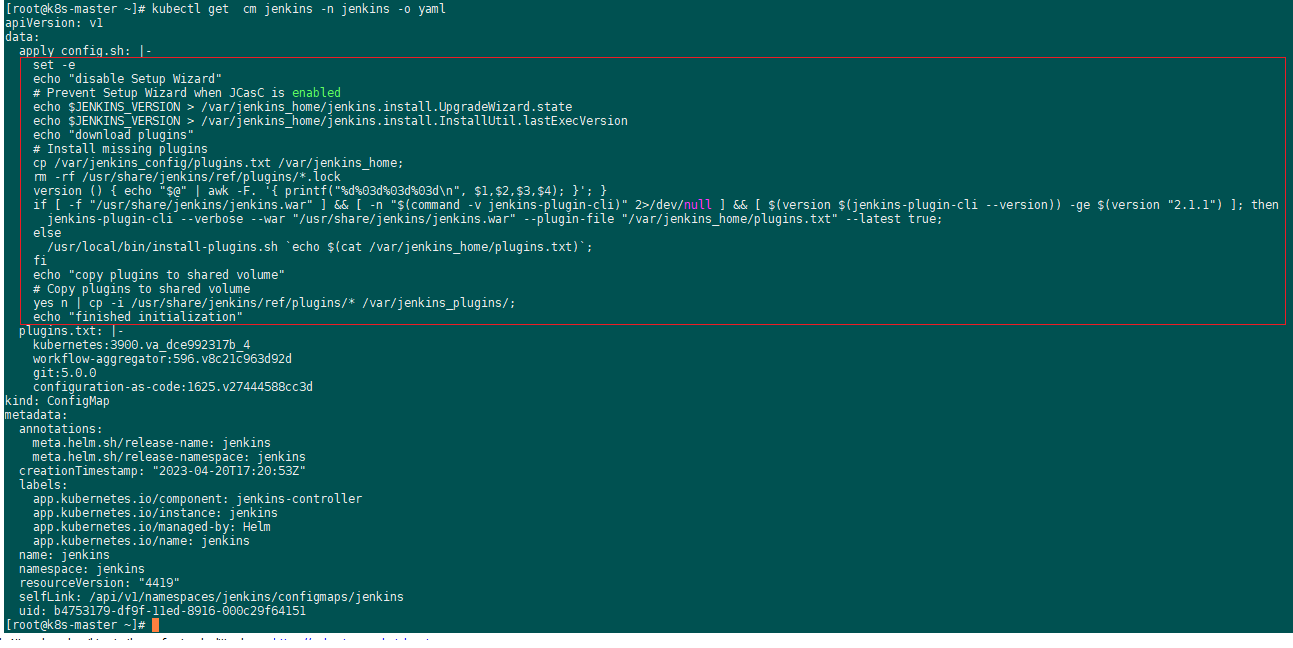

helm install jenkins on k8s:

Jenkins is reached from outside the cluster at node IP + NodePort:

http://192.168.1.19:31000
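The port in this URL (31000) is the Service's NodePort. A minimal sketch for looking it up instead of reading it off a screenshot; the Service name `jenkins` and namespace `default` are assumptions (they depend on the Helm release name and namespace used), as is the function name `jenkins_url`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the external Jenkins URL from a node IP and the
# NodePort of the Jenkins Service.
# Usage: jenkins_url NODE_IP [SERVICE] [NAMESPACE]
jenkins_url() {
    local node_ip="$1"
    local svc="${2:-jenkins}"    # assumed Service name
    local ns="${3:-default}"     # assumed namespace
    local port
    # jsonpath reads the nodePort of the Service's first port entry.
    port=$(kubectl get svc "$svc" -n "$ns" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
    echo "http://${node_ip}:${port}"
}
```

By default kube-proxy opens a NodePort on every node, so any node IP should work, not only the master's.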

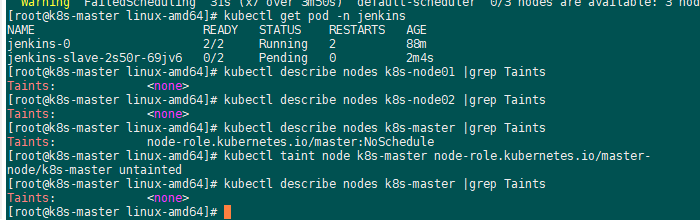

Removing node taints:

    [root@k8s-master linux-amd64]# kubectl describe nodes k8s-node01 |grep Taints
    Taints: <none>
    [root@k8s-master linux-amd64]# kubectl describe nodes k8s-node02 |grep Taints
    Taints: <none>
    [root@k8s-master linux-amd64]# kubectl describe nodes k8s-master |grep Taints
    Taints: node-role.kubernetes.io/master:NoSchedule
    [root@k8s-master linux-amd64]# kubectl taint node k8s-master node-role.kubernetes.io/master-
    node/k8s-master untainted
    [root@k8s-master linux-amd64]# kubectl describe nodes k8s-master |grep Taints
    Taints: <none>

The trailing minus sign `-` removes the taint.
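Removing the master taint lets ordinary pods be scheduled on k8s-master. To undo it, the same taint can be added back using the `key:Effect` form (no value). A minimal sketch; the function name `restore_master_taint` is hypothetical. On newer kubeadm releases the control-plane taint uses the key `node-role.kubernetes.io/control-plane` instead.

```bash
#!/usr/bin/env bash
# Hypothetical helper: put back the taint that kubeadm adds to the master,
# so ordinary pods are no longer scheduled there.
# Usage: restore_master_taint [NODE]
restore_master_taint() {
    local node="${1:-k8s-master}"
    # "key:Effect" adds a taint with an empty value; "key-" removes it.
    kubectl taint node "$node" node-role.kubernetes.io/master:NoSchedule
}
```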

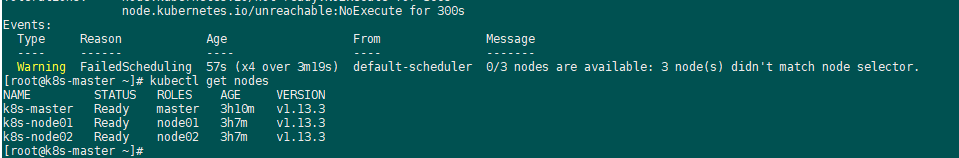

This problem can be fixed simply by adding the `kubernetes.io/os=linux` label to the nodes:

[root@k8s-master ~]# kubectl label nodes k8s-node01 kubernetes.io/os=linux

node/k8s-node01 labeled

[root@k8s-master ~]# kubectl label nodes k8s-node02 kubernetes.io/os=linux

node/k8s-node02 labeled

[root@k8s-master ~]# kubectl label nodes k8s-master kubernetes.io/os=linux
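Instead of labeling each node by hand, the same label can be applied to every node in one loop. A minimal sketch; `--overwrite` keeps the command from failing on nodes that already carry the label, and the function name `label_all_nodes_linux` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: apply kubernetes.io/os=linux to every node.
label_all_nodes_linux() {
    local node
    # `-o name` prints entries such as "node/k8s-master", which
    # `kubectl label` accepts directly.
    for node in $(kubectl get nodes -o name); do
        kubectl label "$node" kubernetes.io/os=linux --overwrite
    done
}
```

Afterwards, `kubectl get nodes -l kubernetes.io/os=linux` should list every node.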

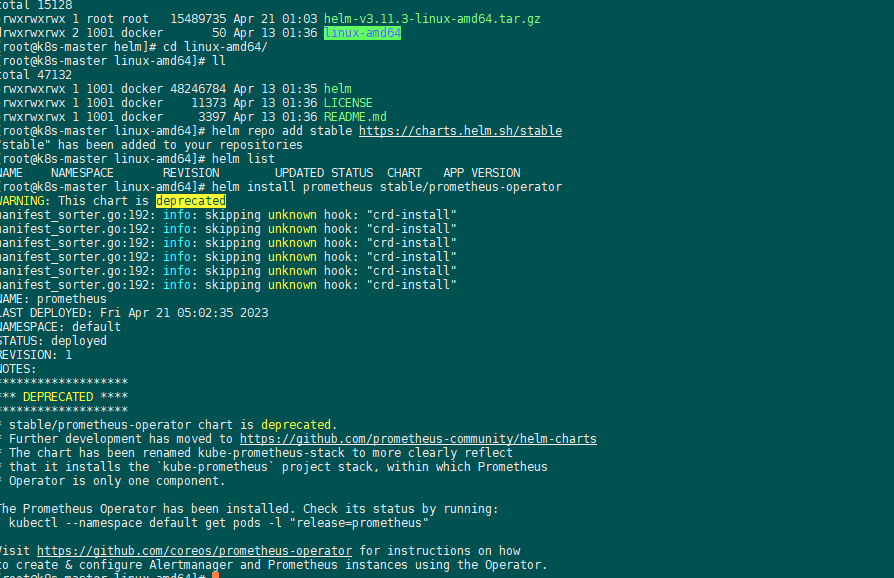

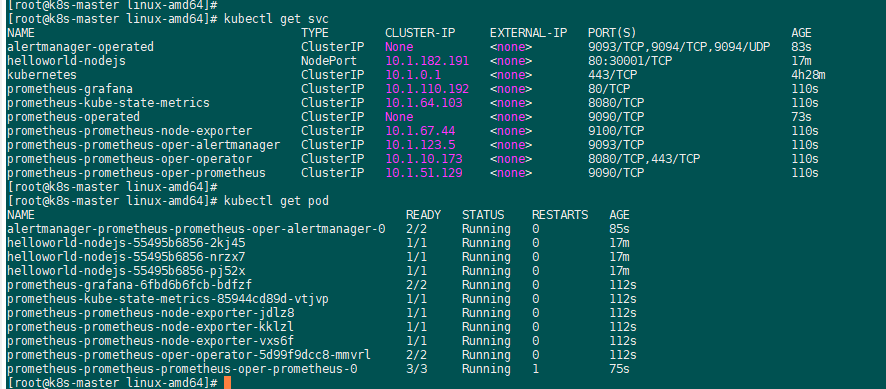

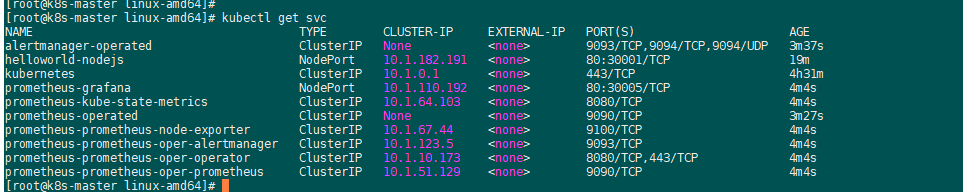

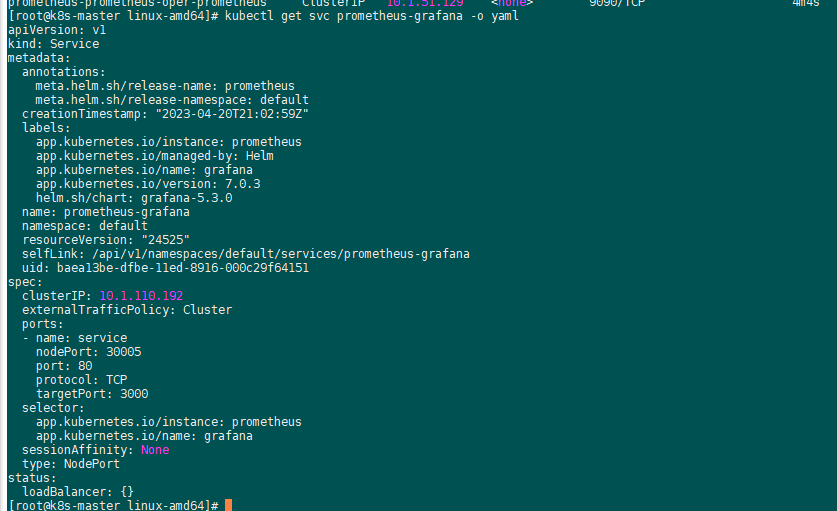

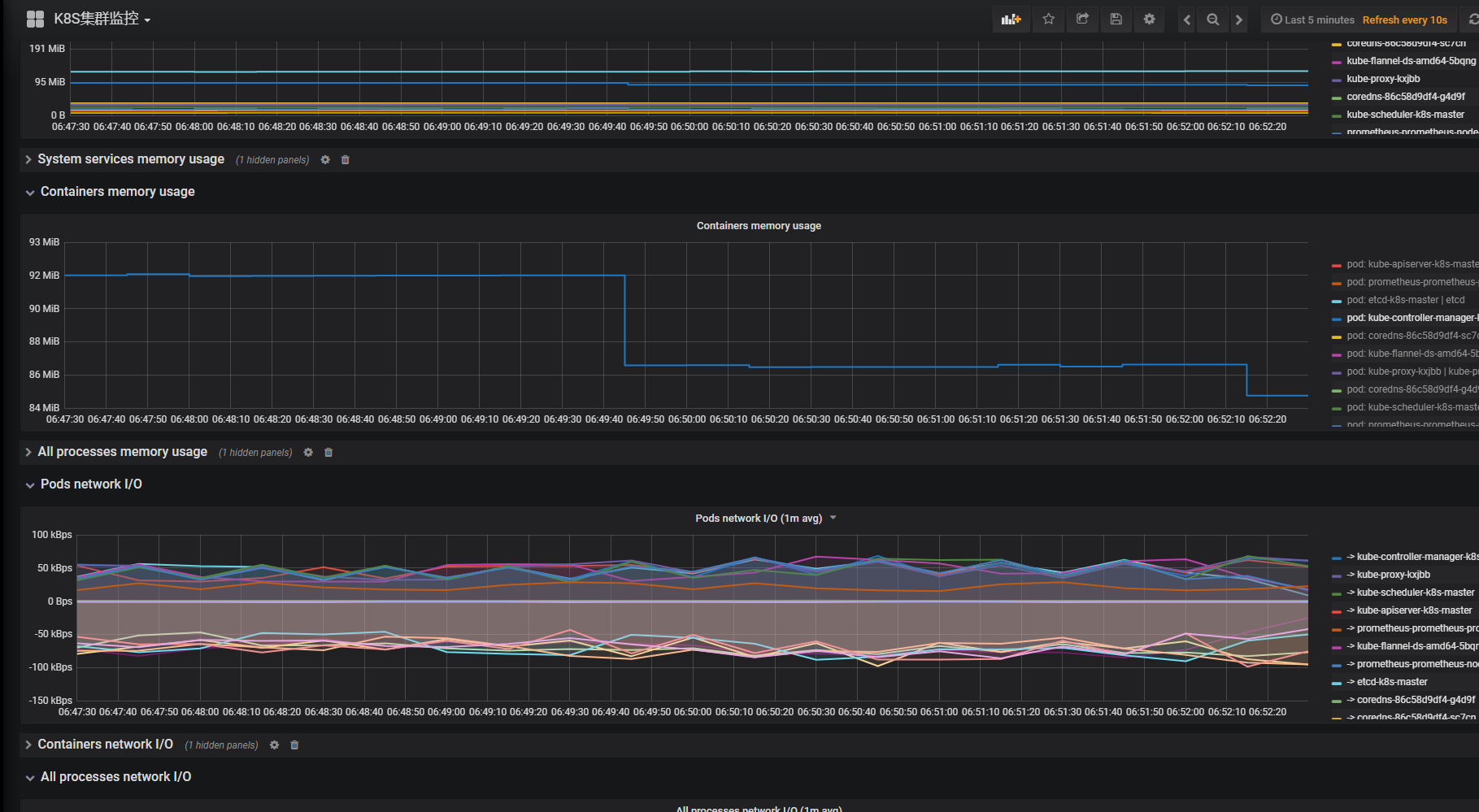

2. Installing Prometheus monitoring and Grafana with Helm 3:

helm repo add stable https://charts.helm.sh/stable

Grafana login (user/password): admin/prom-operator
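admin/prom-operator is, as far as I know, the default Grafana login of the stable/prometheus-operator chart (and of its successor kube-prometheus-stack). If the password was overridden at install time, it can be read back from the Grafana Secret. A minimal sketch, assuming the Grafana subchart's convention of a Secret named `<release>-grafana` with an `admin-password` key; the release name `prometheus`, the namespace `default` and the function name `grafana_admin_password` are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the Grafana admin password stored in the
# Secret created by the Grafana subchart (assumed name: <release>-grafana).
# Usage: grafana_admin_password [RELEASE] [NAMESPACE]
grafana_admin_password() {
    local release="${1:-prometheus}"   # assumed Helm release name
    local ns="${2:-default}"           # assumed namespace
    # Secret data is base64-encoded, so decode it after extracting the key.
    kubectl get secret "${release}-grafana" -n "$ns" \
        -o jsonpath='{.data.admin-password}' | base64 --decode
}
```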

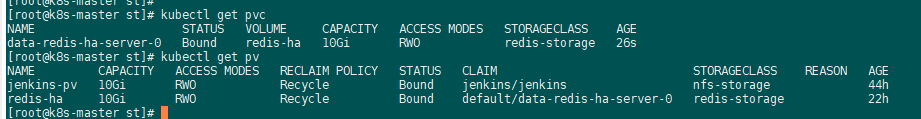

In this setup the PVC must specify `volumeName`, the name of the PV it binds to:

    spec:
      volumeName: redis-ha

The full claim and its StorageClass:

    [root@k8s-master st]# cat data-redis-ha-server-0.yaml
    apiVersion: v1
    items:
    - apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        creationTimestamp: "2023-04-21T13:17:56Z"
        finalizers:
        - kubernetes.io/pvc-protection
        labels:
          app: redis-ha
          release: redis-ha
        name: data-redis-ha-server-0
        namespace: default
        resourceVersion: "41034"
        selfLink: /api/v1/namespaces/default/persistentvolumeclaims/data-redis-ha-server-0
        uid: ee119d01-e046-11ed-8606-000c29f64151
      spec:
        volumeName: redis-ha
        accessModes:
        - ReadWriteOnce
        dataSource: null
        resources:
          requests:
            storage: 10Gi
        storageClassName: redis-storage
        volumeMode: Filesystem
      status:
        phase: Pending
    kind: List
    metadata:
      resourceVersion: ""
      selfLink: ""
    [root@k8s-master st]# cat redis-st.yaml
    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: redis-storage
      annotations:
        storageclass.kubernetes.io/is-default-class: 'false'
    provisioner: kubernetes.io/no-provisioner
    reclaimPolicy: Delete
    volumeBindingMode: WaitForFirstConsumer
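Because `redis-storage` uses `kubernetes.io/no-provisioner`, no volume is created automatically, so the PV named in `volumeName` has to exist beforehand. A minimal sketch that prints such a PV; the `hostPath` directory `/data/redis-ha`, the `Retain` reclaim policy and the function name `redis_ha_pv` are assumptions. A `hostPath` volume only exists on the node where the directory lives, so this suits a test cluster rather than production.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a PersistentVolume that the claim
# data-redis-ha-server-0 can bind to. Name, class, size and access mode
# match the PVC above; the hostPath directory is an assumption.
redis_ha_pv() {
    cat <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-ha
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  # Retain: keep the data on disk when the claim is deleted.
  persistentVolumeReclaimPolicy: Retain
  storageClassName: redis-storage
  volumeMode: Filesystem
  hostPath:
    path: /data/redis-ha
    type: DirectoryOrCreate
EOF
}
```

Usage: `redis_ha_pv | kubectl apply -f -`, then `kubectl get pv redis-ha` to watch it bind.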

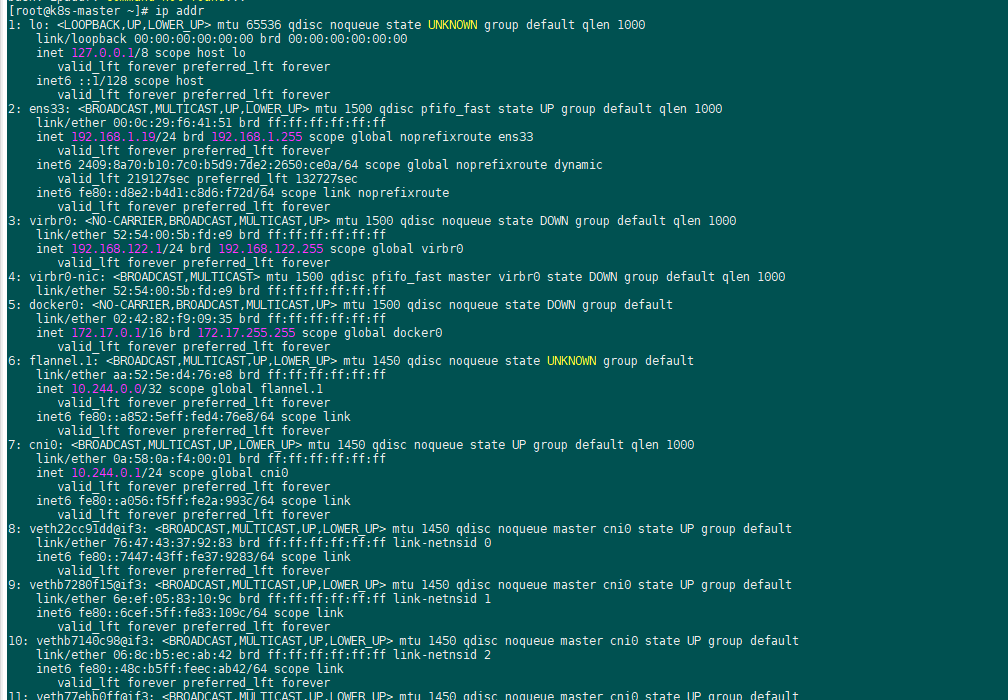

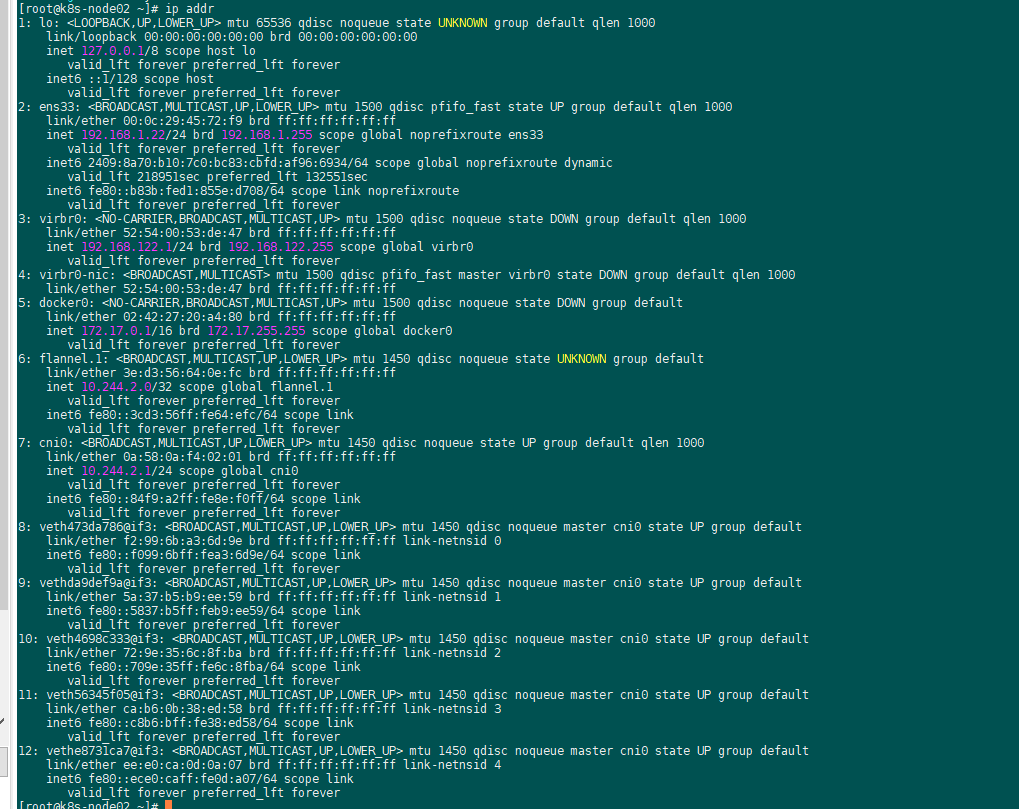

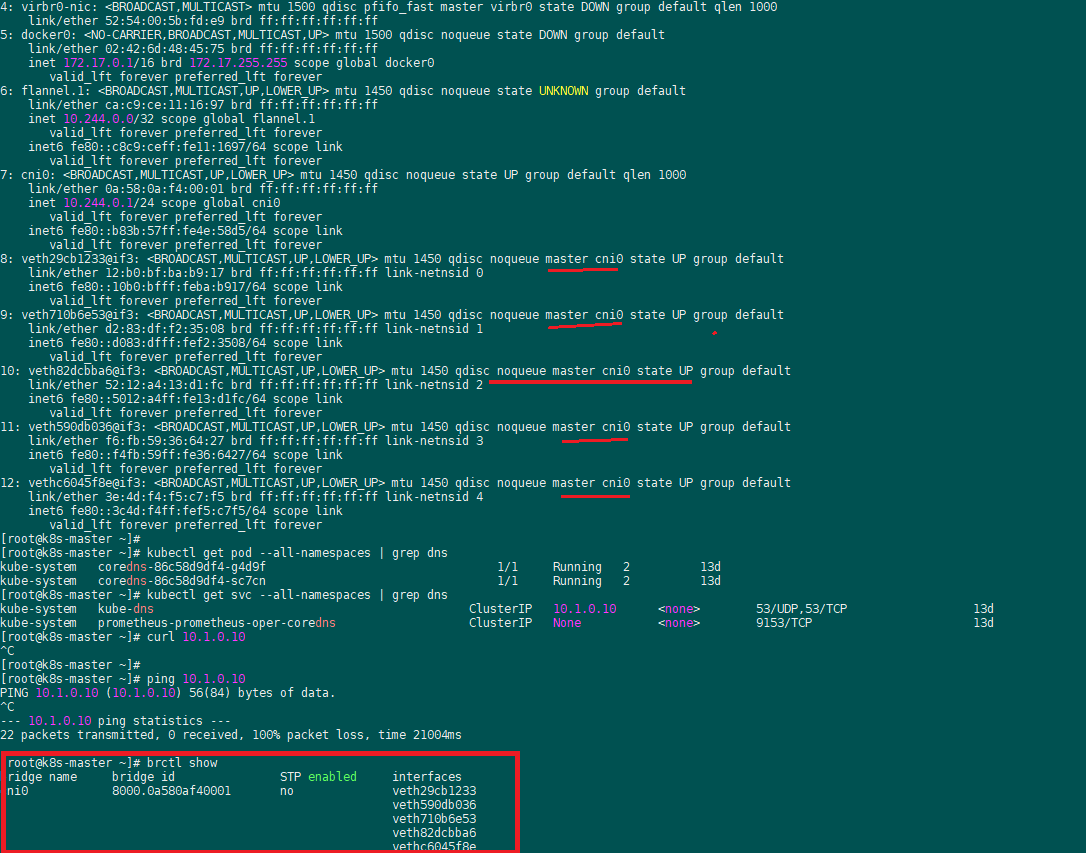

The cni0 bridge has 5 virtual devices attached to it.
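The devices attached to the bridge can be listed with iproute2: `ip link show master cni0` shows only the interfaces whose master is cni0 (on a flannel-style setup, typically one veth per pod on that node); `brctl show cni0` from bridge-utils gives a similar view. A minimal sketch that counts them; the function name `cni0_port_count` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count the interfaces enslaved to a bridge
# (default cni0). `ip -o` prints one line per interface.
cni0_port_count() {
    local bridge="${1:-cni0}"
    # tr strips the padding some wc implementations add.
    ip -o link show master "$bridge" | wc -l | tr -d '[:space:]'
}
```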

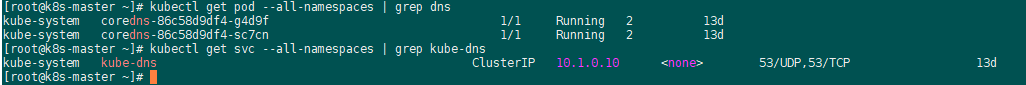

In fact, the endpoint list of the kube-dns Service points to the IPs of the two CoreDNS pods:
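This can be checked by comparing the addresses in the `kube-dns` Endpoints object with the IPs of the CoreDNS pods, which kubeadm labels `k8s-app=kube-dns`. A minimal sketch; the function name `kube_dns_endpoints_match` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the kube-dns Service endpoints are exactly
# the IPs of the CoreDNS pods (label k8s-app=kube-dns) in kube-system.
kube_dns_endpoints_match() {
    local ep pods
    # One IP per line, sorted and de-duplicated, so the two lists compare
    # as sets regardless of order.
    ep=$(kubectl -n kube-system get endpoints kube-dns \
        -o jsonpath='{.subsets[*].addresses[*].ip}' | tr ' ' '\n' | sort -u)
    pods=$(kubectl -n kube-system get pods -l k8s-app=kube-dns \
        -o jsonpath='{.items[*].status.podIP}' | tr ' ' '\n' | sort -u)
    echo "endpoints: $(echo "$ep" | paste -sd ' ' -)"
    echo "pod IPs:   $(echo "$pods" | paste -sd ' ' -)"
    [ -n "$ep" ] && [ "$ep" = "$pods" ]
}
```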

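kubectl bash auto-completion (install bash-completion, enable it for the current shell and for future logins):

    yum -y install bash-completion
    [root@k8s-master ~]# source <(kubectl completion bash)
    [root@k8s-master ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

To enable it for all users, append it to /etc/profile instead:

    [root@k8s-master net.d]# echo "source <(kubectl completion bash)" >> /etc/profile
    [root@k8s-master net.d]# source /etc/profile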
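A common addition, described in the kubectl documentation, is a short alias that keeps completion working. A minimal sketch of lines for `~/.bashrc`; the alias name `k` is only a convention, and the `command -v` guard (an addition here, not part of the original setup) skips the completion setup on machines without kubectl.

```bash
#!/usr/bin/env bash
# Lines for ~/.bashrc: load kubectl completion only if kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
    source <(kubectl completion bash)
fi
# Short alias; register kubectl's completion function for it as well.
alias k=kubectl
complete -o default -F __start_kubectl k
```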

Reposted: troubleshooting k8s problems:

https://blog.csdn.net/qq_23435961/article/details/106659069

Renewing certificates:

Script method (list the validity dates of every certificate):

    #!/bin/bash
    for item in $(find /etc/kubernetes/pki -maxdepth 2 -name "*.crt"); do
        echo "======================$item==================="
        openssl x509 -in "$item" -text -noout | grep Not
    done

Command method:

    [root@qtxian-k8s-master-1 ~]# kubeadm alpha certs check-expiration

Back up the expired certificates:

    mkdir -p /etc/kubernetes.bak
    cp -rp /etc/kubernetes/pki /etc/kubernetes.bak/

Renew the expired certificates:

    [root@qtxian-k8s-master-1 ~]# kubeadm alpha certs renew all
    [renew] Reading configuration from the cluster...
    [renew] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
    certificate embedded in the kubeconfig file for the admin to use and for kubeadm itself renewed
    certificate for serving the Kubernetes API renewed
    certificate for the API server to connect to kubelet renewed
    certificate embedded in the kubeconfig file for the controller manager to use renewed
    certificate for the front proxy client renewed
    certificate embedded in the kubeconfig file for the scheduler manager to use renewed
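The listing loop prints every certificate's dates; `openssl x509 -checkend SECONDS` can instead flag only those that expire within a given window (it exits non-zero when the certificate will have expired by then). A minimal sketch; the function name `certs_expiring_within` and the 30-day default are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the certificates under DIR that expire within
# DAYS days (defaults: 30 days, kubeadm's /etc/kubernetes/pki).
# Usage: certs_expiring_within [DAYS] [DIR]
certs_expiring_within() {
    local days="${1:-30}"
    local dir="${2:-/etc/kubernetes/pki}"
    local cert
    while IFS= read -r cert; do
        # -checkend exits 0 if the cert is still valid DAYS from now.
        if ! openssl x509 -in "$cert" -noout -checkend $((days * 86400)) >/dev/null; then
            echo "$cert $(openssl x509 -in "$cert" -noout -enddate)"
        fi
    done < <(find "$dir" -maxdepth 2 -name '*.crt')
}
```

Usage: `certs_expiring_within 30` prints nothing when no certificate needs renewal yet.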

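The full procedure, for older versions and newer versions (1.15+):

    # Check expiration. Newer versions (1.15+):
    kubeadm alpha certs check-expiration
    # or check one certificate at a time (the others work the same way):
    openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text | grep ' Not '
    # Back up the certificates
    cp -rp /etc/kubernetes /etc/kubernetes.bak
    # Renew. Older versions:
    kubeadm alpha phase certs all
    # Newer versions (1.15+); this command does not require deleting the expired certificates first:
    kubeadm alpha certs renew all
    # Regenerate the kubeconfig files
    mv /etc/kubernetes/*.conf /tmp/
    # Older versions:
    kubeadm alpha phase kubeconfig all
    # Newer versions (1.15+):
    kubeadm init phase kubeconfig all
    # Update the kubectl config
    cp /etc/kubernetes/admin.conf ~/.kube/config
    kubectl get no
    # If the cluster can be read but not written, restart the control-plane components:
    docker ps | grep apiserver
    docker ps | grep scheduler
    docker ps | grep controller-manager
    docker restart <container ID>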
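The `docker ps | grep` and `docker restart` steps can be combined by filtering on the container name. A minimal sketch, assuming the Docker (dockershim) runtime used in these notes, where the kubelet names containers `k8s_<container>_<pod>_...`; the function name `restart_control_plane` is hypothetical. The pause containers are named `k8s_POD_...` and are therefore not matched.

```bash
#!/usr/bin/env bash
# Hypothetical helper: restart the kube-apiserver, kube-scheduler and
# kube-controller-manager containers after certificate renewal.
restart_control_plane() {
    local component ids
    for component in kube-apiserver kube-scheduler kube-controller-manager; do
        # Docker's name filter matches all or part of a container name;
        # "k8s_<component>_" skips the pod's pause container (k8s_POD_...).
        ids=$(docker ps -q --filter "name=k8s_${component}_")
        if [ -n "$ids" ]; then
            docker restart $ids   # unquoted on purpose: one argument per ID
        else
            echo "no running container for $component" >&2
        fi
    done
}
```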
